Abstract

Variational autoencoders (VAEs) play an important role in high-dimensional data generation because they combine a stochastic data representation with the power of recent deep learning techniques. The main advantage of these generators lies in their ability to encode information and to decode it, generalizing to new samples. This capability has been heavily explored for 2D image processing; however, only limited research focuses on VAEs for 3D data processing. In this article, we provide a thorough review of the latest achievements in 3D data processing using VAEs. These 3D data types are mostly point clouds, meshes, and voxel grids, which are the focus of a wide range of applications, especially in robotics. First, we briefly present the basic autoencoder and its extension towards the VAE, with further subcategories relevant to discrete point cloud processing. Then, the 3D-data-specific VAEs are presented according to how they operate on spatial data. Finally, several comprehensive tables summarizing the methods, codes, and datasets, as well as a citation map, are presented for a better understanding of the VAEs applied to 3D data. The analyzed papers are organized according to a taxonomy that differentiates the algorithms by their primary data types and application domains.


1 Introduction

Training deep learning models on higher-dimensional spaces is a challenging task due to the computational complexity. This is especially true for 3D space, with its non-Euclidean constraints and irregular data distribution, for which the direct application of 2D convolution operators is not feasible. With the recent advances in deep generative models, VAEs (Kingma and Welling 2014) have come into focus thanks to their ability to generalize data even in high-dimensional spaces. Variational autoencoders are a class of deep generative methods based on variational methods (Mittal and Behl 2018), and they enable an efficient, compressed representation of high-dimensional spaces. Furthermore, with near real-time processing capabilities, VAEs have also proved efficient in scene completion, recognition, and segmentation tasks.

1.1 Motivation

The recent advances in inexpensive 2.5D depth sensors such as RealSense, Kinect, or Apple Prime have resulted in an increased interest in point cloud processing. As these sensors provide depth information at relatively high frame rates, fast processing of the spatial image stream is crucial. Numerous methods are currently being developed to process 3D information, aiming to reach the level of optimization and performance seen in the 2D domain. Compared to the 2D domain, the main disadvantage of 3D methods, in addition to their later start, is the computational complexity resulting from the higher dimensionality of the input data. Tutorials and surveys about VAEs exist, but they focus on the simpler task of 2D image generation with limited resolution. This paper provides an overview of existing 3D data generation methods using the VAE architecture, which, to the best of our knowledge, is the first systematic overview of VAEs for the 3D domain. Instead of covering the wide range of 2D VAE methods, we point to cornerstone review papers from the 2D VAE domain, highlighting the aspects that the 2D and 3D domains have in common.

In this article, we provide a systematic overview of the recent VAE-based spatial data processing methods. To ensure a proper focus of our investigation, we narrowed down the analysis to the VAEs operating on 3D discrete data such as point clouds or meshes. With this selection, we hope to provide a good starting point for readers interested in spatial data processing using Variational Autoencoders.

1.2 Structure of the article

The article gives an overview of the fundamental principles of the VAE in Sect. 2, assuming basic knowledge of probability distributions and neural networks. Besides the basic variants, extensions towards specific VAEs, such as the \(\beta \)-VAE, are discussed as well, focusing on the problem of hyperparameter tuning for these methods. In Sect. 3, a thorough analysis of existing VAE methods for spatial data processing is presented, grouping the methods into sub-classes according to the types of data on which they operate; the taxonomy of this grouping is also presented there. The presented articles are summarized in tables according to the input data types, code availability, and the references on which the methods are based. Furthermore, a reference graph highlights the chronological connections among the articles on VAEs for 3D data processing. Finally, the paper concludes with an overview of the current state of the art and future possibilities within this domain.

2 Background

Machine learning models are either discriminative or generative (Ng and Jordan 2001). A discriminative model extracts information about the analyzed data, such as a class label. Generative models, on the other hand, learn the data distribution, for example, that of a specific class, and generate new samples from it. In this sense, generative models are often seen as the opposite of discriminative models. Among generative models, we distinguish two major architectures: Generative Adversarial Networks (GANs) (Goodfellow et al. 2014; Creswell et al. 2018) and Variational Autoencoders (VAEs) (Kingma and Welling 2014).

GANs have two competing modules: a generator and an analyzer (the latter is a discriminative model). The generator is trained to create meaningful data similar to the observed data, while the analyzer is trained to differentiate between generated and real data. Training them as competitors results in a generator capable of creating high-quality synthetic data. An example is the work of Antal and Bodó (2021), where the authors created a method that generates realistic faces while also learning facial features. This method is useful for drawing composite sketches or for data augmentation. Learning facial features has multiple significant applications, such as identity recognition; surveys comparing face recognition methods are also available (Li et al. 2022a).

Typically, GANs produce more photorealistic images than VAEs; however, GANs require more data and tuning (Mi et al. 2018). The increased training effort is caused by two main problems: unstable convergence and mode collapse. The latter means that the generator produces only a limited variety of samples and fails to capture every aspect of the input data distribution. Training becomes particularly difficult when one of the modules (usually the discriminator) is too powerful, because the gradient reaching the other module vanishes. In other words, even if the generator creates higher-quality images than in the previous iteration, from the point of view of the discriminator, the real data remain as clearly superior to the generated images as before.

VAEs also have two modules, an encoder and a decoder; however, in this case, they are not competitors. The encoder searches for a few meaningful variables that describe the characteristics of the input data, while the decoder is trained to reconstruct the original data from these variables. Some VAE variants, for example, the Vector Quantized Variational Autoencoder (VQ-VAE-2) (Razavi et al. 2019), produce results comparable to GANs. Furthermore, combinations of VAEs and GANs have been proposed by Larsen et al. (2016), Makhzani et al. (2015), and Zamorski et al. (2020).

The customizability of VAEs makes them suitable for higher-level generation tasks. Since the VAE architecture is the center of this work, we start by providing its theoretical background (Doersch 2016; Kingma and Welling 2019).

2.1 Theoretical foundations of Variational Autoencoders

Variational Autoencoders, as the name suggests, evolved from autoencoders; therefore, our starting point is the autoencoder by Kramer (1991). It has two components, the encoder and the decoder, as shown in Fig. 1. The encoder compresses the data into a latent space using latent variables that are highly representative of the original data but harder for a human observer to interpret. The decoder, in turn, maps these variables back to the input space, in other words, reconstructs the original data. Hence, one of the main applications of autoencoders is data compression.

The second use case is data denoising. Mafi et al. (2019) provide a survey of different denoising applications, in which autoencoder-based methods are recommended due to their performance.

Third, autoencoder-based video generation methods are used in video forensic forgery detection applications (Javed et al. 2021). Similarly, Dhiman and Vishwakarma (2019) review abnormal human activity detection methods in both the 2D and 3D domains. The authors claim that autoencoder-based human activity detectors are sensitive to viewpoint variations.

The goal of the autoencoder is to recreate the original data; therefore, the metric used compares the input data with the output data by calculating the difference between them. This metric is called the reconstruction loss (1), and in most cases the mean squared error is used:

\[ {\mathcal {L}}_{rec} = \Vert {\textbf{x}} - \mathbf {x'}\Vert ^2 = \Vert {\textbf{x}} - d({\textbf{z}})\Vert ^2 = \Vert {\textbf{x}} - d(e({\textbf{x}}))\Vert ^2 \tag{1} \]

where \({\textbf{x}}\) is the input data, \(\mathbf {x'}\) is the reconstructed data, \({\textbf{z}}\) is the latent space, \(e(\cdot )\) is the encoder and \(d(\cdot )\) is the decoder.
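As a minimal illustration of (1), the following NumPy sketch computes the reconstruction loss (averaged over the vector components, i.e., the mean squared error) for a hypothetical linear autoencoder whose encoder and decoder are given by a matrix with orthonormal columns. The toy model and its names are assumptions for illustration only and are not part of any surveyed method.

```python
import numpy as np

def reconstruction_loss(x, x_rec):
    """Mean squared error between the input x and its reconstruction x'."""
    x = np.asarray(x, dtype=float)
    x_rec = np.asarray(x_rec, dtype=float)
    return float(np.mean((x - x_rec) ** 2))

# Hypothetical toy linear autoencoder with a 4-D input and a 2-D latent space.
rng = np.random.default_rng(0)
W, _ = np.linalg.qr(rng.normal(size=(4, 2)))  # 4x2 matrix with orthonormal columns

def encode(x):
    return W.T @ x  # e(x): 4-D input -> 2-D latent vector z

def decode(z):
    return W @ z    # d(z): 2-D latent vector -> 4-D reconstruction x'

x_in = W @ np.array([1.0, -2.0])        # lies in the subspace the decoder can represent
x_out = np.array([1.0, 0.0, 0.0, 0.0])  # generally does not
print(reconstruction_loss(x_in, decode(encode(x_in))))    # close to 0
print(reconstruction_loss(x_out, decode(encode(x_out))))  # typically > 0: part of x_out is lost in z
```

The second input shows why the latent space alone does not guarantee perfect reconstruction: whatever cannot be expressed by the few latent variables is lost.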

For simple data reconstruction, this architecture is adequate; however, it is not sufficient for generating new data. The main reason is that the obtained latent space is not regularly distributed but consists of isolated data patches (Kingma and Welling 2014). Interpolating between these patches in the latent space results in meaningless, incomprehensible output from the decoder. Therefore, to generate previously unseen data, the latent space must be organized (Kingma and Welling 2014).

The solution to the problem of the irregularly distributed latent space is to approximate latent distributions rather than distinct values. However, computing the true posterior distribution requires integrating over all latent configurations, which is intractable in most cases. To solve this problem, Kingma and Welling (2014), in their pioneering work, created the basis of the VAE. They proposed to find a simpler, tractable probability distribution that approximates the true posterior, which amounts to optimizing a lower bound on the marginal likelihood (4). This causes the latent space to be filled in a predictable manner, according to the chosen simplified distribution; thus, interpolation between latent patches becomes possible and yields comprehensible data at generation time. This approach is a form of variational inference: the approximate posterior is commonly a Gaussian with diagonal covariance (a mean-field assumption), while the prior over the latent variables is the standard normal distribution \({\mathcal {N}}(0,{\textbf{I}})\).

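As shown in (4), the log marginal likelihood is composed of two terms: the Kullback-Leibler divergence \((D_{KL})\) (2) between the approximate and the true posterior, and the Evidence Lower Bound (ELBO) (3), also called the variational lower bound (Kullback and Leibler 1951; Bulinski and Dimitrov 2021). Since the log marginal likelihood does not depend on the encoder parameters, the two terms sum to a constant with respect to \(\varvec{\phi }\); because \(D_{KL} \ge 0\), maximizing the ELBO minimizes the \(D_{KL}\).

\[ D_{KL}\left(q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}}^{(i)})\,\Vert \,p_{\varvec{\theta }}({\textbf{z}}|{\textbf{x}}^{(i)})\right) = {\mathbb {E}}_{q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}}^{(i)})}\left[\log q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}}^{(i)}) - \log p_{\varvec{\theta }}({\textbf{z}}|{\textbf{x}}^{(i)})\right] \tag{2} \]

\[ {\mathcal {L}}(\varvec{\theta },\varvec{\phi };\,{\textbf{x}}^{(i)}) = -D_{KL}\left(q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}}^{(i)})\,\Vert \,p_{\varvec{\theta }}({\textbf{z}})\right) + {\mathbb {E}}_{q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}}^{(i)})}\left[\log p_{\varvec{\theta }}({\textbf{x}}^{(i)}|{\textbf{z}})\right] \tag{3} \]

\[ \log p_{\varvec{\theta }}({\textbf{x}}^{(i)}) = D_{KL}\left(q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}}^{(i)})\,\Vert \,p_{\varvec{\theta }}({\textbf{z}}|{\textbf{x}}^{(i)})\right) + {\mathcal {L}}(\varvec{\theta },\varvec{\phi };\,{\textbf{x}}^{(i)}) \tag{4} \]

where \({\mathcal {L}}(\varvec{\theta },\varvec{\phi };\,{\textbf{x}}^{(i)})\) is the ELBO, \({\mathbb {E}}_{q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}})}\) is the expected value operator with respect to the distribution q, \(\varvec{\phi }, \varvec{\theta }\) are the parameters (weights) of the encoder and decoder, respectively, and \(q_{\varvec{\phi }}(\cdot ), p_{\varvec{\theta }}(\cdot )\) are the probability distributions parameterized by \(\varvec{\phi }, \varvec{\theta }\).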
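When the approximate posterior is a diagonal Gaussian \({\mathcal {N}}(\varvec{\mu }, \mathrm{diag}(\varvec{\sigma }^2))\) and the prior is \({\mathcal {N}}(0,{\textbf{I}})\), the \(D_{KL}\) term of the ELBO (3) has the closed form \(\frac{1}{2}\sum _k (\mu _k^2 + \sigma _k^2 - 1 - \log \sigma _k^2)\) (Kingma and Welling 2014). The sketch below evaluates it and combines it with an externally supplied estimate of the expected log-likelihood; parameterizing the encoder output as a log-variance is a common convention that we assume here, and the function names are illustrative.

```python
import numpy as np

def kl_diag_gaussian_to_std_normal(mu, log_var):
    """D_KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    mu = np.asarray(mu, dtype=float)
    log_var = np.asarray(log_var, dtype=float)
    return float(0.5 * np.sum(mu**2 + np.exp(log_var) - 1.0 - log_var))

def elbo(expected_log_likelihood, mu, log_var):
    """ELBO = E_q[log p(x|z)] - D_KL(q(z|x) || p(z)).

    expected_log_likelihood is assumed to be estimated elsewhere,
    e.g. by decoding samples of z and evaluating log p(x|z).
    """
    return expected_log_likelihood - kl_diag_gaussian_to_std_normal(mu, log_var)

# The KL term vanishes when the posterior equals the prior ...
print(kl_diag_gaussian_to_std_normal([0.0, 0.0], [0.0, 0.0]))  # 0.0
# ... and grows as the posterior mean moves away from 0.
print(kl_diag_gaussian_to_std_normal([1.0], [0.0]))            # 0.5
```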

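During training, the encoder produces two vectors describing the distribution: the mean \(\varvec{\mu }\) and the standard deviation \(\varvec{\sigma }\). A latent vector \({\textbf{z}}\) is then sampled from this distribution, and the decoder reconstructs the input from it. However, this sampling creates a stochastic node \({\textbf{z}}\) on the data path, which makes backpropagation through that node impossible. Kingma and Welling (2014) propose the reparameterization trick (5) to solve this problem. They move the sampling to a separate node \(\varvec{\epsilon }\) drawn from a standard normal distribution \(p(\varvec{\epsilon })\), so that \({\textbf{z}}\) becomes a deterministic, differentiable function of \(\varvec{\mu }\) and \(\varvec{\sigma }\), and backpropagation becomes possible:

\[ {\textbf{z}} = \varvec{\mu } + \varvec{\sigma } \odot \varvec{\epsilon }, \quad \varvec{\epsilon } \sim {\mathcal {N}}(0,{\textbf{I}}) \tag{5} \]

where \(\odot \) denotes element-wise multiplication.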
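The reparameterization trick can be sketched in NumPy as follows. NumPy has no automatic differentiation, so the sketch only shows the sampling itself; in a deep learning framework, gradients would flow through \(\varvec{\mu }\) and \(\varvec{\sigma }\) because \(\varvec{\epsilon }\) is the only random quantity. The log-variance parameterization of the encoder output is an assumption.

```python
import numpy as np

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, I).

    The randomness lives only in eps, so z is a deterministic,
    differentiable function of mu and log_var.
    """
    mu = np.asarray(mu, dtype=float)
    sigma = np.exp(0.5 * np.asarray(log_var, dtype=float))  # sigma = sqrt(variance)
    eps = rng.standard_normal(mu.shape)
    return mu + sigma * eps

rng = np.random.default_rng(42)
mu, log_var = [2.0, -1.0], [0.0, np.log(4.0)]  # variances 1 and 4
samples = np.stack([reparameterize(mu, log_var, rng) for _ in range(20000)])
print(samples.mean(axis=0), samples.std(axis=0))  # approx. [2, -1] and [1, 2]
```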

The architecture of a VAE is shown in Fig. 2.

2.2 Variational autoencoders extensions

Although the basic VAE is considered a powerful architecture compared to simple autoencoders, there is room for improvement by extending the architecture. The first variant is the \(\beta \)-VAE, which balances the capacity of the latent channels and the independence constraints that describe the relations of the features encoded in the latent space. Further architectural modifications, such as the Adversarial Autoencoder (AAE), two-stage VAEs, and hierarchical VAEs, also exist.

2.2.1 \(\beta \)-VAE with hyperparameters

The \(\beta \)-VAE, proposed by Higgins et al. (2017), focuses on generating new 2D images similar to existing ones. The \(\beta \) is a hyperparameter that balances the latent channel capacity and the independence constraints while discovering more latent features. The drawbacks of this method are its dependence on the sampling density and on the correct choice of \(\beta \). The authors relate the generative capability of VAEs to learning a disentangled representation of the latent space, in which each latent unit is sensitive to a single generative factor but insensitive to the other factors; a VAE with this property is referred to as a disentangled VAE (Burgess et al. 2018). Figure 3 shows handwritten digits generated using the basic VAE and the \(\beta \)-VAE. Some digits look like a mixture of several other digits, indicating that a VAE interpolates the latent space. Furthermore, the basic VAE also generates unrealistic-looking digits, which reflects its entangled nature, whereas the \(\beta \)-VAE generates more realistic digits by disentangling the latent space.

Digits generated using the basic VAE and the \(\beta \)-VAE by Spanopoulos and Konstantinidis (2021)

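The hyperparameter \(\beta \) weights the \(D_{KL}\) term of the objective and thereby encourages a disentangled latent space representation. Another hyperparameter, C, is used to control the information capacity of the latent distribution \(q({\textbf{z}}|{\textbf{x}})\). The parameter \(\beta \) was proposed by Higgins et al. (2017), and the capacity C was added by Burgess et al. (2018), resulting in the objective (6):

\[ {\mathcal {L}}(\varvec{\theta },\varvec{\phi };\,{\textbf{x}},{\textbf{z}},C) = {\mathbb {E}}_{q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}})}\left[\log p_{\varvec{\theta }}({\textbf{x}}|{\textbf{z}})\right] - \beta \left| D_{KL}\left(q_{\varvec{\phi }}({\textbf{z}}|{\textbf{x}})\,\Vert \,p({\textbf{z}})\right) - C\right| \tag{6} \]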
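Assuming the expected log-likelihood and the \(D_{KL}\) term have already been computed for a batch, the objective with \(\beta \) and the capacity C can be written as below. The form \(\beta \,|D_{KL} - C|\) follows Burgess et al. (2018); the function name and its arguments are illustrative.

```python
def beta_vae_objective(expected_log_likelihood, kl, beta, capacity=0.0):
    """Objective to maximize: E_q[log p(x|z)] - beta * |D_KL - C|.

    With capacity C = 0 this reduces to the original beta-VAE objective
    (since D_KL >= 0), and with beta = 1, C = 0 to the standard ELBO.
    """
    return expected_log_likelihood - beta * abs(kl - capacity)

print(beta_vae_objective(-100.0, 5.0, beta=1.0))                # -105.0 (plain ELBO)
print(beta_vae_objective(-100.0, 5.0, beta=4.0))                # -120.0 (stronger KL pressure)
print(beta_vae_objective(-100.0, 5.0, beta=4.0, capacity=5.0))  # -100.0 (KL matches the capacity)
```

Because of the absolute value, the penalty pushes \(D_{KL}\) towards C from both sides, so increasing C during training gradually lets more information through the latent channel.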

2.2.2 Adversarial autoencoders

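Architectural modifications are also used to expand the capabilities of VAEs. Mi et al. (2018) compare GANs, VAEs, and their derivatives, such as the Wasserstein GAN (Arjovsky et al. 2017) and VAE-GAN (Larsen et al. 2016), the latter giving the best quality in their comparison. Another mixture of a VAE and a GAN is the AAE architecture (Makhzani et al. 2015). The difference lies in the introduction of a discriminator module (D), which, in the regularization phase, decides whether a given latent code is real, that is, sampled from the prior \(p({\textbf{z}})\), or fake, that is, produced by the encoder. In this phase, the discriminator is updated first, followed by the encoder, which acts as the generator (G) of the adversarial network and is trained to fool the discriminator. The encoder and decoder are updated together in the reconstruction phase. Therefore, the reconstruction loss is supplemented by the adversarial training criterion of the GAN architectures (7):

\[ \min _{G}\max _{D}\; {\mathbb {E}}_{{\textbf{z}}\sim p({\textbf{z}})}\left[\log D({\textbf{z}})\right] + {\mathbb {E}}_{{\textbf{x}}\sim p_{data}}\left[\log \left(1 - D(G({\textbf{x}}))\right)\right] \tag{7} \]

where \(G({\textbf{x}})\) is the latent code produced by the encoder for the input \({\textbf{x}}\).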
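In the AAE of Makhzani et al. (2015), the discriminator operates on latent codes: samples drawn from the prior \(p({\textbf{z}})\) are treated as real, and codes produced by the encoder as fake. Given the discriminator's output probabilities for both groups, the two regularization-phase losses can be sketched as below. The non-saturating form of the encoder loss is a common practical choice that we assume here, and the function names are hypothetical.

```python
import numpy as np

def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Negative GAN value, averaged over a batch (the discriminator minimizes this).

    d_real: D's probabilities for samples drawn from the prior p(z)
    d_fake: D's probabilities for codes produced by the encoder q(z|x)
    """
    d_real = np.clip(np.asarray(d_real, dtype=float), eps, 1 - eps)
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1 - eps)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake)))

def encoder_adversarial_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: the encoder tries to push D(q(z|x)) towards 1."""
    d_fake = np.clip(np.asarray(d_fake, dtype=float), eps, 1 - eps)
    return float(-np.mean(np.log(d_fake)))

# A discriminator that cannot tell codes apart (outputs 0.5) has loss 2*log(2).
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))
# A confident, correct discriminator has a lower loss.
print(discriminator_loss([0.9], [0.1]))
```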

2.2.3 Two-stage VAE

The basic intuition behind the two-stage VAE (Dai and Wipf 2019) is that the Gaussian encoder and decoder assumptions reduce the effectiveness of the model. Hence, the authors propose a two-stage VAE architecture, where the first stage learns the manifold on which the data lie, thus providing a mapping to a lower-dimensional representation. The second, smaller stage then learns the distribution of these low-dimensional representations and maps them to latent values. The authors show that the two-stage VAE produces high-quality images without the blurred edges typical of VAEs, although GANs are still superior to it in this context.

2.2.4 Hierarchical VAE

A hierarchical VAE, proposed under the name Nouveau VAE (NVAE) (Vahdat and Kautz 2020), is similar to a two-stage VAE. In this architecture, multiple latent spaces exist on different levels, each with the usual elements, such as the reparameterization trick. Residual blocks are placed between the latent layers and act as very simple encoders or decoders between them. Inside the model, two paths are defined: bottom-up and top-down. The advantage of multiple latent spaces is that each of them learns a specific feature; thus, the generative process can be controlled to a much higher degree, resulting in more realistic and sharper data.

2.2.5 Other VAE variants

Recent advances in generative modeling have also influenced VAEs. Beyond the variants described so far, further alterations of this architecture exist (Wei et al. 2020; Wei and Mahmood 2021a), which we enumerate in a non-exhaustive manner:

1. f-VAEGAN-D2 (Xian et al. 2019)
2. InfoVAEGAN (Ye and Bors 2021)
3. Adversarial Symmetric VAE (Pu et al. 2017)
4. Lifelong VAE-GAN (Ye and Bors 2020)

2.3 Overview of existing VAE surveys

Multiple applications benefit from the capabilities of VAEs in the field of 2D and 3D data processing. For the 3D domain, the main applications include model generation, object deformation, classification, and segmentation. To our knowledge, no 3D specific VAE survey exists, although considerable research output on 2D domain specific VAEs is available. The existing 2D-specific VAE surveys are presented in this section as a preliminary step to the 3D domain-specific part.

2.3.1 Wei and Mahmood: recent advances in variational autoencoders with representation learning for biomedical informatics: a survey

A survey by Wei and Mahmood (2021b) focuses on different biomedical measurements. The use of VAEs in biomedical informatics ranges from data generation to representation learning. The authors differentiate three major categories, namely molecular design, sequence dataset analysis, and medical imaging and image analysis, along with several subcategories, including string representation design, graph representation design, sequence engineering, dimensionality reduction, integrated multi-omics data analysis, mutation effect prediction, gene expression analysis, DNA methylation analysis, image augmentation for downstream tasks, and representation learning for decision making. An important observation is that a molecule can be viewed as a graph and processed as such by a VAE.

2.3.2 Asperti et al.: a survey on variational autoencoders from a green AI perspective

The next survey, by Asperti et al. (2021), compares the different methods mainly from an energy efficiency standpoint, describing the basic concepts of VAEs and possible improvements. After testing the different methods, the authors drew several notable conclusions about VAEs. Firstly, in their experience, the decoder is more important than the encoder, and increasing the number of latent variables improves reconstruction but does not necessarily improve generation.

The idea of comparing architectures from the efficiency standpoint comes from Green AI (Schwartz et al. 2020), which stresses the importance of the trade-off between the performance and efficiency of a model, and how this trade-off is affected by design, model description, and mathematical formulation. The metric proposed for measuring efficiency from a Green AI perspective is the number of floating point operations (FLOPs), which does not depend on the hardware and correlates with runtime, although runtime is also strongly affected by memory access time. The goal is therefore to achieve the required performance with as few FLOPs as possible.
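To make the FLOPs metric concrete, the sketch below counts the floating point operations of a fully connected encoder, under the common convention that one multiply-accumulate counts as two FLOPs and that biases and activation functions are ignored. The layer sizes are hypothetical and are not taken from any surveyed model.

```python
def dense_layer_flops(n_in, n_out):
    """FLOPs of y = W x for a dense layer: one multiply and one add per weight."""
    return 2 * n_in * n_out

def mlp_flops(layer_sizes):
    """Total FLOPs of a stack of dense layers, e.g. [784, 512, 32]."""
    return sum(dense_layer_flops(a, b) for a, b in zip(layer_sizes[:-1], layer_sizes[1:]))

# Hypothetical MLP encoder: 784 inputs -> 512 hidden units -> 2 * 16 outputs
# (mean and log-variance of a 16-dimensional latent space).
print(mlp_flops([784, 512, 32]))  # 835584
```

Since this count depends only on the architecture, it allows comparisons across hardware, which is the property Green AI relies on.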

Furthermore, Asperti et al. mention that VAE training is expected to improve when the von Mises-Fisher distribution, as used in the Hyperspherical VAE (Davidson et al. 2018), replaces the Gaussian distribution. Besides this, Hou et al. (2017) state that using deep hidden features extracted from other pretrained image processing networks overcomes blurriness. The authors of the survey also emphasize the importance of the running average method, which maintains a balance between the reconstruction loss and the \(D_{KL}\): if the \(D_{KL}\) does not decrease while the reconstruction loss does, further improvement of the model is prevented. This phenomenon is known as variable collapse.

2.3.3 Kovenko and Bogach: a comprehensive study of autoencoders’ applications related to images

The third survey on autoencoder-based 2D image processing methods was written by Kovenko and Bogach (2020). The authors note that the Bernoulli distribution, which is less commonly considered in VAEs, works better for grayscale images, such as the MNIST dataset, whereas the Gaussian distribution is suggested for RGB data. They also mention a so-called warm-up phase, in which the weight \(\beta \) of the \(D_{KL}\) term is gradually increased (Sønderby et al. 2016), unlike in the original article (Higgins et al. 2017), where \(\beta \) is kept constant. This prevents latent units from becoming inactive during training, which would otherwise lead to a poorly trained model. The authors show that the warm-up phase improves the training and validation losses by approximately 7%.
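A warm-up schedule in the spirit of Sønderby et al. (2016) can be sketched as a linear increase of \(\beta \) from 0 to its final value. The linear shape, the parameter names, and the default final value of 1 are assumptions for illustration.

```python
def kl_warmup_beta(step, warmup_steps, beta_max=1.0):
    """Linearly increase the KL weight beta from 0 to beta_max over warmup_steps.

    Early in training the model can focus on reconstruction, which helps
    keep latent units from becoming inactive.
    """
    if warmup_steps <= 0:
        return beta_max  # no warm-up requested
    return beta_max * min(1.0, step / warmup_steps)

# Weight of the KL term at a few training steps (warm-up over 100 steps).
print([kl_warmup_beta(s, 100) for s in (0, 25, 50, 100, 200)])  # [0.0, 0.25, 0.5, 1.0, 1.0]
```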

3 Relevant 3D methods using variational autoencoders

The most common data types that generative models, like VAEs, learn are 2D images, 1D audio signals, and text semantics.

However, as 3D image processing techniques are advancing rapidly, our main focus is on generative models that work with 3D objects represented as depth images, meshes, or point clouds. An overview of the different 3D representations is provided by Friedrich et al. (2018). Each of them has advantages for specific tasks, depending on the available resources or the given architecture. Conversion between representations is possible if needed: it is simple in some cases, for instance, from a depth image to a point cloud, and more tedious in others, for instance, from a point cloud to a polygon mesh.
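The simple depth-image-to-point-cloud conversion mentioned above can be sketched with a pinhole camera model. The intrinsic parameters \(f_x, f_y, c_x, c_y\) are assumptions that would come from the sensor calibration, lens distortion is ignored, and pixels with zero depth are treated as invalid.

```python
import numpy as np

def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth image (in meters) into an N x 3 point cloud.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, with u the
    column and v the row index of a pixel. Pixels with depth 0 are dropped.
    """
    depth = np.asarray(depth, dtype=float)
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # both of shape (h, w)
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    points = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    return points[points[:, 2] > 0]

pts = depth_to_point_cloud([[1.0, 0.0], [2.0, 2.0]], fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(pts)  # three valid points; the pixel with depth 0 is discarded
```

The reverse direction, from a point cloud to a polygon mesh, has no such closed form and requires a surface reconstruction step, which is why it is considered more tedious.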

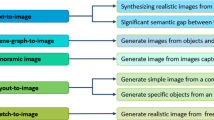

In the following part, we provide an overview of different techniques that use VAE architectures for processing 3D data. To obtain a consistent taxonomy, we organized them into categories according to the type of data and the scope. The first dimension characterizes the primary data types used in the analyzed papers, while the second dimension focuses on different types of applications and data processing domains. In 3D, three data formats are popular: polygon mesh, voxel grid, and point cloud. These three formats form three distinct categories in the first taxonomical dimension, while the other data types form a fourth category. This division is presented in Fig. 4. Later, an overview of the analyzed methods is provided, where multiple tables correspond to the first dimension of the taxonomy.

3.1 Polygon mesh transformations

In computer graphics and CAD applications, 3D objects are often stored using polygon meshes. This representation technique is slightly more complex than other types, such as depth images or point clouds, but the relationships of the edges, faces, and vertices are well-defined, and therefore the complex objects are stored with high accuracy. This well-defined nature of a mesh makes the objects easily deformable by applying a transformation on a given vertex or edge.

3.1.1 Mesh deformation

The first method that we analyzed was created by Tan et al. (2018); it deforms 3D mesh models into other shapes, more specifically, human poses. The method, called mesh VAE, explores the probabilistic latent space of 3D mesh surfaces. The reasoning behind the choice of data type is that deformation is a straightforward operation on meshes, while the voxel representation creates edges that are too rough. The method is based on an advanced surface representation technique, the Rotation Invariant Mesh Difference (RIMD) (Gao et al. 2016), which, as the name suggests, is rotation invariant, and it is also translation invariant. This representation recovers shapes accurately and efficiently by solving an as-rigid-as-possible nonlinear optimization (Sorkine and Alexa 2007) and applying the rotation transformation (Murray et al. 1994). The combination of the mean squared error as the loss function (8) and the hyperbolic tangent (tanh) as the activation function results in a method that generates new poses from existing meshes of human bodies. As an extension, the method is complemented by an adjustable parameter that enforces specific features in the latent space, making mesh VAE more controllable. A possible further improvement is the ability to process non-homogeneous meshes, since, at the time of writing, the architecture only works with homogeneous meshes.

where \({\textbf{h}}\) is the preprocessed RIMD feature, \({\textbf{g}}\) is the output of the VAE framework, M is the number of models, K is the number of feature dimensions and \(\alpha \) is the tuning parameter.

Furthermore, RIMD measures the deformation of a model relative to the reference model by the energy needed for the transformation (9). The deformation is then decomposed into a rotation part and a scaling/shearing part.

\[ E({\textbf{T}}_i) = \sum _{j \in N_i} c_{ij} \Vert \mathbf {e'}_{ij} - {\textbf{T}}_i {\textbf{e}}_{ij}\Vert ^2, \qquad {\textbf{T}}_i = {\textbf{R}}_i {\textbf{S}}_i \tag{9} \]

where \({\textbf{T}}_i\) is the deformation gradient in the one-ring neighborhood, \(N_i\) is the set of one-ring neighbors of vertex \(v_i\), \(\mathbf {e'}_{ij}=\mathbf {p'}_i - \mathbf {p'}_j\) is the edge of the deformed model, \({\textbf{e}}_{ij}={\textbf{p}}_i - {\textbf{p}}_j\) is the edge of the reference model, \(c_{ij} = \cot {\alpha _{ij}} + \cot {\beta _{ij}}\) are the cotangent weights (\(\alpha _{ij}\) and \(\beta _{ij}\) are the angles opposite the edge connecting \(v_i\) and \(v_j\)), \({\textbf{R}}_i\) is the rotation, \(dR_{ti}\) is the rotation difference, \({\textbf{S}}_i\) is the scaling or shearing, and \(c_i'=\frac{1}{|N_i|}\).
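For a single vertex \(v_i\), the cotangent weights and the deformation energy can be evaluated as in the following sketch. It assumes that the one-ring edges \({\textbf{e}}_{ij}\), \(\mathbf {e'}_{ij}\) and a candidate deformation gradient \({\textbf{T}}_i\) are already available; it does not reproduce the full RIMD pipeline (the decomposition into \({\textbf{R}}_i\) and \({\textbf{S}}_i\) and the feature extraction are omitted), and the function names are hypothetical.

```python
import numpy as np

def cot_angle(a, b, c):
    """Cotangent of the angle at vertex a in triangle (a, b, c)."""
    u, v = b - a, c - a
    return float(np.dot(u, v) / np.linalg.norm(np.cross(u, v)))

def cotangent_weight(p_i, p_j, p_k, p_l):
    """c_ij = cot(alpha_ij) + cot(beta_ij), where p_k and p_l are the vertices
    opposite edge (i, j) in its two adjacent triangles."""
    return cot_angle(p_k, p_i, p_j) + cot_angle(p_l, p_i, p_j)

def deformation_energy(T_i, edges_ref, edges_def, weights):
    """E(T_i) = sum_j c_ij * ||e'_ij - T_i e_ij||^2 over the one-ring of v_i."""
    return float(sum(c * np.sum((e_def - T_i @ e_ref) ** 2)
                     for e_ref, e_def, c in zip(edges_ref, edges_def, weights)))

# Edge (i, j) shared by two right isosceles triangles: both opposite angles are 45 degrees.
p_i, p_j = np.array([0.0, 0.0, 0.0]), np.array([1.0, 0.0, 0.0])
p_k, p_l = np.array([0.0, 1.0, 0.0]), np.array([1.0, -1.0, 0.0])
c_ij = cotangent_weight(p_i, p_j, p_k, p_l)  # cot 45 + cot 45 = 2
e = p_i - p_j
print(c_ij, deformation_energy(np.eye(3), [e], [e], [c_ij]))  # identity explains an undeformed edge: energy 0
```

Minimizing this energy over \({\textbf{T}}_i\) gives the deformation gradient that best explains how the one-ring edges changed.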

By minimizing the energy, one gets the feature representation as (10):

3.1.2 Body and cloth moulding with CAPE

Ma et al. (2020b) describe a method called Clothed Auto Person Encoding (CAPE) that generates different human poses with different styles of clothing, modeling even the wrinkles (Fig. 5). The basis of this method is the Skinned Multi-Person Linear Model (SMPL) (Loper et al. 2015), a vertex-based body representation format. Unlike the usual regression-based methods, CAPE treats the problem as a probabilistic generation task, which results in a model that generates multiple clothing deformations for a single pose and body type, instead of the previously achieved one-to-one correspondence. By extending the SMPLify method (Bogo et al. 2016), the authors achieve better estimates when generating human bodies and clothes. Ma et al. (2020b) describe clothing with a clothing displacement graph, which is processed by an architecture combining the VAE and GAN architectures; thus, the authors gave this method a second name, Mesh-VAE-GAN. This means that after the encoder-decoder part of the VAE, they introduce a discriminator to improve the deformation quality. However, the method has a few limitations. Firstly, CAPE represents clothes as offsets from the body, hence a multi-layer model needs to be developed to overcome this drawback. Secondly, the mesh resolution of SMPL limits the quality of CAPE; depending on the scenario, a higher mesh resolution is required. Thirdly, the method is based on static poses, while dynamic motions are currently out of reach.

Dressing a human in clothing (Ma et al. 2020b)

3.1.3 Mesh generation and edge contraction pooling

For better 3D shape generation and a better understanding of probabilistic latent spaces, a reliable pooling operation is needed. Yuan et al. (2020) proposed such an operation for meshes using VAEs, based on the mesh simplification method of Garland and Heckbert (1997). The method uses mesh simplification to create a hierarchy while avoiding irregularly sized triangles in the mesh. It is effective for denser meshes because it requires fewer parameters than usual. The hierarchy of layers allows the model to analyze different levels of detail while keeping track of the mapping between coarser and finer meshes. Garland and Heckbert (1997) use repeated edge contraction, which Yuan et al. (2020) modify to produce evenly sized triangles by introducing the edge length into the metric. The de-pooling operation is defined as the inverse of the pooling operation. The structure of the method is completed with spectral graph convolutions (Defferrard et al. 2016) instead of fully connected layers. The method is extended with the ability to generate shapes by implementing a Conditional Variational Autoencoder (CVAE) architecture, where the user defines the parameters that must be taken into account when generating a new shape. At the input, the method uses a vertex-based deformation feature representation (Gao et al. 2019). While Yuan et al. (2020) produce better quality meshes than Garland and Heckbert (1997), only homogeneous meshes can be processed due to the mesh simplification-based pooling.
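Once the simplification step has recorded which fine vertices were collapsed into each coarse vertex, pooling and de-pooling reduce to index operations. The sketch below assumes average pooling and a precomputed vertex-to-cluster mapping in which every coarse vertex receives at least one fine vertex; the edge-collapse procedure itself (the modified Garland and Heckbert metric) is not shown, and the names are hypothetical.

```python
import numpy as np

def pool_features(features, cluster_of_vertex, n_coarse):
    """Average the features of the fine vertices merged into each coarse vertex.

    cluster_of_vertex[v] is the index of the coarse vertex that fine vertex v
    was collapsed into (recorded during mesh simplification).
    """
    features = np.asarray(features, dtype=float)
    idx = np.asarray(cluster_of_vertex)
    sums = np.zeros((n_coarse, features.shape[1]))
    np.add.at(sums, idx, features)                       # unbuffered per-cluster sums
    counts = np.bincount(idx, minlength=n_coarse)[:, None]
    return sums / counts

def unpool_features(coarse_features, cluster_of_vertex):
    """De-pooling: copy each coarse feature back to all fine vertices it covers."""
    return np.asarray(coarse_features)[np.asarray(cluster_of_vertex)]

mapping = [0, 0, 1]  # fine vertices 0 and 1 were merged into coarse vertex 0
coarse = pool_features([[1.0], [3.0], [5.0]], mapping, n_coarse=2)
print(coarse.ravel(), unpool_features(coarse, mapping).ravel())  # [2. 5.] [2. 2. 5.]
```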

The industry lacks research and data on 3D faces or surfaces. For this reason, Park and Kim (2021) created the Face-based Variational Autoencoder (FaceVAE) model, which generates new 3D face data for industrial sites. The method uses polygon meshes, as the authors consider this representation to be rare for faces, unlike 2D image-based, voxel-based, or point-cloud-based models. The main building blocks of the method are adjacency matrices and feature matrices of the graph data structure. The data are converted into a binary format; still, good results were obtained by focusing on the structuring aspect of VAEs instead of their pure generation aspect. The binary conversion is similar to a voxelization step, although in a specific matrix form. The model works optimally with 300 or fewer vertices; for higher resolutions, the octree concept might be a suitable candidate.
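As an illustration of the graph inputs mentioned above, the sketch below builds a symmetric 0/1 adjacency matrix from the triangles of a mesh; the vertex coordinates would form the corresponding \(N \times 3\) feature matrix. This is a generic construction under our own assumptions, not the authors' preprocessing code.

```python
import numpy as np

def adjacency_from_faces(faces, n_vertices):
    """Symmetric 0/1 adjacency matrix of a triangle mesh.

    faces is a sequence of vertex-index triples (a, b, c); every triangle
    contributes its three edges (a, b), (b, c), and (c, a).
    """
    A = np.zeros((n_vertices, n_vertices), dtype=np.uint8)
    for a, b, c in faces:
        for i, j in ((a, b), (b, c), (c, a)):
            A[i, j] = A[j, i] = 1
    return A

# Two triangles sharing the edge (0, 2) form a quad with 5 distinct edges.
A = adjacency_from_faces([(0, 1, 2), (0, 2, 3)], n_vertices=4)
print(A)
```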

3.1.4 3D CAD model retrieval

CAD models are commonly based on polygon meshes and are often used in engineering, for example, for designing parts and assemblies in production. For these parts, a schematic is required, together with the loops of the process used to create the given part. For cases where the schematic or the process tree is missing, Qin et al. (2022) propose a method that retrieves information about a given 3D CAD model, including loop attributes, loop structures, and loop relation trees. The method also describes the structural semantic information of a part by training a VAE-based recursive neural network (RvNN) (Socher et al. 2011) with backpropagation through structure (BPTS) (Goller and Küchler 1996). As a result, the model outputs a sketch/view of the input CAD model. The paper also makes extensive use of a parameter tuning technique, namely the cyclical learning rate (CLR) (Smith 2017). The approach still requires model optimizations and the implementation of more than one retrieval mode, such as coarse-grained and fine-grained retrieval.

3.2 Voxel grid processing

Another well-known 3D data representation format is the voxel grid. The 3D space is partitioned into a grid, where each cell records whether it contains a point or not. This type of organization is similar to a 2D image, where each pixel has a defined place. Although voxel grids are well organized, the stored information is discrete, and its resolution is limited by the cell size. Still, because of their regular structure, voxel grids are widely used, especially in discriminative tasks such as segmentation.
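Occupancy voxelization of a point cloud can be sketched as follows, assuming an axis-aligned grid with cubic cells of a given size and a chosen origin; points that fall outside the grid are dropped. The function and parameter names are illustrative.

```python
import numpy as np

def voxelize(points, voxel_size, grid_shape, origin=(0.0, 0.0, 0.0)):
    """Boolean occupancy grid: a cell is True if at least one point falls in it."""
    points = np.asarray(points, dtype=float)
    idx = np.floor((points - np.asarray(origin)) / voxel_size).astype(int)
    inside = np.all((idx >= 0) & (idx < np.asarray(grid_shape)), axis=1)
    grid = np.zeros(grid_shape, dtype=bool)
    grid[tuple(idx[inside].T)] = True  # one index array per axis
    return grid

pts = [[0.1, 0.1, 0.1], [0.15, 0.12, 0.19], [0.9, 0.1, 0.1], [5.0, 5.0, 5.0]]
grid = voxelize(pts, voxel_size=0.5, grid_shape=(2, 2, 2))
print(grid.sum())  # 2 occupied cells: the first two points share a cell, the last is outside
```

The example also shows the loss of information noted above: two distinct points end up in the same cell and become indistinguishable.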

3.2.1 Voxel segmentation

Voxel segmentation is simpler than data generation, yet an optimized segmentation method significantly helps other methods by supplying fast and accurate labels. One such method is the work of Meng et al. (2019). As the basis of the architecture, they used a VAE and Radial Basis Functions (RBF) (Buhmann 2000), given in (11) and (12). The authors point out the weaknesses of point clouds, namely their unordered nature and lack of symmetry; thus, a voxel grid is proposed. Typically, a voxel only contains a Boolean occupancy status, which is not descriptive enough, but with RBFs the points inside a voxel are interpolated, which leads to a higher-resolution representation.

where N is the number of data points, \(w_j\) is the scalar value, \(\eta (\cdot )\) is the symmetry function, \(\mathbf {c_p}\) is the center point of a voxel, and \({\textbf{v}}\) are the data points and:

where V is the set of points and \(\delta \) is the predefined parameter (usually a multiple of the size of the subvoxel).
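To make the interpolation idea concrete, the sketch below splits one voxel into subvoxels and evaluates a radial basis function sum at their centers, turning one occupancy bit into several continuous values. The Gaussian kernel, the unit weights, and the helper names are assumptions for illustration; Meng et al. (2019) define their own kernel and weights in (11) and (12).

```python
import numpy as np

def subvoxel_centers(voxel_min, voxel_size, k):
    """Centers of the k**3 subvoxels of a cubic voxel whose lowest corner is voxel_min."""
    offsets = (np.arange(k) + 0.5) * voxel_size / k
    gx, gy, gz = np.meshgrid(offsets, offsets, offsets, indexing="ij")
    return np.asarray(voxel_min, dtype=float) + np.stack([gx, gy, gz], axis=-1).reshape(-1, 3)

def rbf_field(centers, points, delta, weights=None):
    """f(c) = sum_j w_j * exp(-||c - v_j||^2 / (2 * delta^2)) for every center c.

    The Gaussian kernel and unit default weights are illustrative assumptions."""
    if weights is None:
        weights = np.ones(len(points))
    d2 = ((centers[:, None, :] - points[None, :, :]) ** 2).sum(axis=-1)   # (M, N) squared distances
    return (weights[None, :] * np.exp(-d2 / (2.0 * delta ** 2))).sum(axis=1)

# One voxel of size 1 split into 2x2x2 subvoxels, with two points inside it.
centers = subvoxel_centers([0.0, 0.0, 0.0], 1.0, 2)
points = np.array([[0.25, 0.25, 0.25], [0.7, 0.8, 0.6]])
features = rbf_field(centers, points, delta=0.25)   # 8 continuous values instead of 1 bit
```

Here δ plays the role of the kernel width; choosing it as a multiple of the subvoxel size, as the text suggests, keeps the influence of each point local.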

Furthermore, this method uses a Group Equivariant Convolutional Neural Network (G-CNN) (Cohen and Welling 2016), which improves invariance against rotation, translation, and scaling by capturing the intrinsic symmetry of point clouds. In general, G-CNNs increase the expressive capacity of a network without increasing the number of parameters. In this particular case, their role is to detect co-occurrences in the latent space. By doing so, the method reduces the number of parameters without reducing the information density.

In addition, Multilayer Perceptron (MLP) functions (Ruck et al. 1990) are used to combine the per-point features. The RBF-VAE is the first module and the segmentation network (group convolution) is the second one; the two are trained separately. As the loss function for segmentation, the authors use the cross-entropy loss:

\[ \mathcal{L}_{CE} = -\sum_{l=1}^{L} {\textbf{p}_{\textbf{gt}}}_l \log {\textbf{p}}_l \qquad (13) \]

where L is the number of labels, \({\textbf{p}_{\textbf{gt}}}_l\) is the probability of the ground truth label and \({\textbf{p}}_l\) is the probability of each label.
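A minimal NumPy version of this segmentation loss, averaged over points, is shown below. The function name is hypothetical, and the clipping constant `eps` is an added safeguard against log(0), not part of the original formulation.

```python
import numpy as np

def segmentation_cross_entropy(p_gt, p, eps=1e-12):
    """Mean over points of -sum_l p_gt[l] * log(p[l]).

    p_gt, p: arrays of shape (num_points, L) with per-label probabilities
    (p_gt is usually one-hot)."""
    p = np.clip(p, eps, 1.0)                      # avoid log(0)
    return float(-(p_gt * np.log(p)).sum(axis=-1).mean())

p_gt = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
p = np.array([[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]])
loss = segmentation_cross_entropy(p_gt, p)        # (-log 0.7 - log 0.8) / 2
```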

While the work of Meng et al. (2019) presents a robust segmentation method, its performance drops on certain shapes, most probably due to the encoded \(90^{\circ }\) symmetry. Further experiments are planned to verify whether this method is suitable for surface normal estimation.

3.2.2 Generative and discriminative voxel modeling

Brock et al. (2016) recognized the importance of choosing the correct representation for 3D data. Hence, they experiment with the viability of using voxel grids in a VAE architecture. They analyze not only the quality of the latent space created by the encoder but also the interpolation capabilities of the decoder in shape generation and classification tasks.

The created model is well suited for generating dense objects but struggles with thin structures, such as table legs, and with sharp edges. The likely reason is that these features are not encoded correctly in the latent space, so a loss of local features is expected. The smoothness of the interpolation between two shapes suggests that the grouping and coverage of the latent space meet expectations. In classification, the model performs slightly worse than the Orientation-boosted Voxel Net (ORION) (Sedaghat et al. 2017) when little training data is available, but outperforms it once more training data are introduced. Proposed future work includes increasing the resolution, using spatial occupancy data, adding data augmentation, and experimenting with different Voxception architectures.

3.2.3 Volumetric shape generation

The next method, proposed by Guan et al. (2020), is derived from the work of Wang et al. (2014) by lifting it from conventional 2D images to 3D voxel grids. This architecture is superior to a simple autoencoder in that it further analyzes the connections within the data: instead of mapping each data sample to a discrete point in the latent space, it maps a set of instances, so the architecture lies between the traditional AE and VAE architectures. The result is a model that interpolates between shapes but preserves edge sharpness better than a simple VAE, as shown in Fig. 6. The model is also capable of extrapolating to a certain degree, creating new, unseen data. As expected, the latent space shows a good grouping of similar characteristics. The drawbacks of this method compared to a VAE are the longer training time and the larger memory consumption; thus, adapting it to large 3D models leads to complex training. The use of voxel grids helps to improve performance, but it leads to a fragmented look. As future work, the authors propose to optimize the model to reduce the memory and time required for training, or to use higher-resolution voxels. Another idea is to experiment with semantic relations besides the Chamfer distance.

Generating objects as voxel grids using 3D-GAE (Guan et al. 2020)

3.2.4 Data compression

One of the main applications of autoencoders is compression. Depending on the data type, different architectures are used for compressing images, such as CNNs, Recurrent Neural Networks (RNNs), GANs, or VAEs. Mishra et al. (2022) provide a comprehensive study of these methods; unfortunately, 3D applications are rarely mentioned. Wang et al. (2021b) extended autoencoder-based data compression to 3D using VAEs, obtaining better performance on point clouds. Although this method is officially designed for point clouds, pre-processing and post-processing phases are necessary to voxelize the point clouds and, optionally, to scale or partition them. The basic 3D convolutional unit of this method is the Voxception-ResNet (VRN) (Brock et al. 2016). Nine of these units are used for the analysis and synthesis transforms, which are then used in the encoder and decoder. For quantization, instead of rounding the latent representation during training, uniform noise is added, which maintains the ability to backpropagate through the model. The Weighted Binary Cross-Entropy (WBCE) loss is used as the training objective. Furthermore, an adaptive module takes care of distortions and removes unnecessary voxels according to a threshold. The method outperforms standardized geometry-based compression algorithms, such as those of the Moving Picture Experts Group (MPEG), by at least 60% in terms of the Bjøntegaard Delta Rate (BDR). While traditional codecs perform better in evaluations based on the human visual system, deep neural network-based approaches are better at reconstructing spatial, textural, and structural features. On the other hand, this model struggles with joint rate-distortion optimization, which is a feature of traditional codecs.
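The two training tricks described above, noise-based quantization and a weighted binary cross-entropy over voxel occupancy, can be sketched in NumPy as follows. The WBCE form used here (separate averages over occupied and empty voxels, combined with a balancing weight `alpha`) is one common formulation and an assumption; the exact weighting used by Wang et al. (2021b) may differ, and both function names are hypothetical.

```python
import numpy as np

def quantize(y, training, rng=None):
    """Training: add U(-0.5, 0.5) noise as a differentiable proxy; inference: round."""
    if training:
        if rng is None:
            rng = np.random.default_rng()
        return y + rng.uniform(-0.5, 0.5, size=y.shape)
    return np.round(y)

def weighted_bce(occupancy, prob, alpha=1.0, eps=1e-12):
    """Mean BCE over occupied voxels plus alpha times mean BCE over empty voxels.

    Averaging each class separately keeps the many empty voxels from
    dominating the few occupied ones (assumed formulation)."""
    prob = np.clip(prob, eps, 1.0 - eps)
    occ = np.asarray(occupancy).astype(bool)
    loss_occ = -np.log(prob[occ]).mean() if occ.any() else 0.0
    loss_empty = -np.log(1.0 - prob[~occ]).mean() if (~occ).any() else 0.0
    return float(loss_occ + alpha * loss_empty)
```

During inference the noise is replaced by true rounding, so the values that are actually entropy-coded are integers.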

Working with voxels leads to high computational requirements, which are infeasible in lightweight scenarios such as robotic navigation. Liu et al. (2020a) tackle this problem by introducing a VAE-based voxel compressor. The authors studied different 3D data capture systems, calibrations, and data formats, and concluded that stereo or depth cameras are optimal for capturing 3D data and that, despite the larger amount of data caused by the additional dimension, voxel grids are relatively easy to work with. Using OctoMap in the Robot Operating System (ROS), they convert the voxels into an octree representation (Wurm et al. 2010) of homogeneous octants. This lossless compression is then followed by compression with the VAE. One minor drawback is that the reconstructed voxel grid is usually sparser than the original grid, most probably due to overfitting.
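Merging homogeneous octants is what makes the octree step lossless yet compact. The recursive sketch below is not OctoMap's API; it is a hypothetical stand-alone illustration on a cubic Boolean grid whose side length is a power of two.

```python
import numpy as np

def build_octree(grid):
    """Encode a cubic Boolean grid (side = power of two) as a nested octree.

    A homogeneous block collapses into one leaf (True = full, False = empty);
    a mixed block becomes a list of its 8 child octants."""
    if grid.all():
        return True
    if not grid.any():
        return False
    h = grid.shape[0] // 2
    return [build_octree(grid[x:x + h, y:y + h, z:z + h])
            for x in (0, h) for y in (0, h) for z in (0, h)]

def count_nodes(node):
    """Total number of nodes (leaves and inner nodes) in the octree."""
    return 1 if isinstance(node, bool) else 1 + sum(count_nodes(c) for c in node)

grid = np.zeros((8, 8, 8), dtype=bool)
grid[0, 0, 0] = True               # a single occupied voxel out of 512
tree = build_octree(grid)          # only the branch leading to that voxel is refined
```

In this example the 512 cells are represented by 25 nodes, because every empty octant collapses into a single leaf.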

Real-time data compression is a desirable capability, especially if a robot needs to pass information to another one. For remotely controlled robots, the data need to reach the operator in near real time, but with information captured by a Light Detection and Ranging (LIDAR) sensor, a depth camera, or even a conventional 2D camera, this transfer is expected to be slow. Yu and Oh (2022) created a data compression method that takes advantage of the capabilities of VAEs by combining this architecture with an anytime estimation approach (Larsson et al. 2017), thus achieving over-compression of the data if necessary. The authors studied category-specific multimodal prior VAEs (Yu and Lee 2018; Yu et al. 2019b; Yu and Lee 2019) to recover missing elements that occur in harsh environments. In the training phase, missing data points are simulated by applying dropout. For better scene understanding, it is important to approximate the correct modes so that they are separated in the latent space, which makes distinguishing categories easier. This method slightly outperforms simple AE and VAE models in most tests, but if the missing rate is below 10% or above 90%, its accuracy could drop below that of competing models.
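Simulating missing sensor readings with dropout-style masking can be sketched as below. The function name and the use of zero as the fill value are assumptions for illustration; unlike standard dropout, the kept values are not rescaled, since the goal here is to mimic lost data rather than to regularize.

```python
import numpy as np

def simulate_missing(x, missing_rate, rng=None):
    """Zero out a random fraction `missing_rate` of the input elements.

    Returns the corrupted copy and the Boolean mask of kept elements."""
    if rng is None:
        rng = np.random.default_rng()
    keep = rng.random(x.shape) >= missing_rate   # True with probability 1 - missing_rate
    return x * keep, keep

x = np.ones(10000)
y, keep = simulate_missing(x, 0.3, rng=np.random.default_rng(0))   # about 30% of entries dropped
```

Sweeping `missing_rate` over a range such as 0.1 to 0.9 during evaluation corresponds to the missing rates discussed above.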

3.3 Point cloud processing

Perhaps the most important task a VAE performs is generating new data. By analyzing existing shapes, the model learns basic features and principles of the objects it is trained on. In many cases, the operator can set multiple variables to force a desired characteristic on the generated object. Most of these methods work with point clouds, since their unordered nature allows the models to generate the coordinates of each point independently while the relationship between neighboring points is maintained. Below, we present several methods that generate new point clouds using VAE architectures.

3.3.1 Point cloud generation

CompoNet by Schor et al. (2019) is a part-based 2D or 3D object generator, in which the final object is composed of smaller part priors that are generated separately and are therefore considered deformable. The part-based nature improves the variability and quality of the final object. The model has two parts: first, a distinct generative module at the part level, and second, a conditional part composition network based on a spatial transformer network (Jaderberg et al. 2015), which creates new shapes. The main advantage of this method is that its training is suited to small datasets, on which other methods struggle to learn a proper representation due to the lack of data. As directions for future work, the authors mention limitations regarding part diversity, part structure modification, and feature transfer between classes.

The next method, by Saha et al. (2020) and later extended in Saha et al. (2022), is called Point Cloud Variational Autoencoder (PC-VAE) and uses VAEs to help 3D car designers by generating new, unseen models from existing ones. Naturally, the method is not limited to car body shapes, but the car body deserves particular attention due to its complexity, since it has to be aerodynamically efficient, safe, and pleasing to the eye. The VAE is used to represent local features in the latent space, not just global ones. By combining optimization with an MLP, the operator instructs the model to generate a specific car type that is novel yet realistic. This method works with point clouds, since they are flexible and require less memory than a voxel grid or a polygon mesh. The authors consider Schor et al. (2019) the closest work in terms of model generation. The work is further based on the point cloud autoencoder proposed by Achlioptas et al. (2018), which was further advanced in Rios et al. (2019a, b). The PC-VAE encoder consists of five 1D convolutional layers (as described in Qi et al. (2017a)), each followed by a ReLU (Nair and Hinton 2010), with a batch normalization layer at the end (Saha et al. 2019). The decoder consists of three fully connected layers. Although this approach has great potential, further optimization is needed to create a complete cooperative framework.
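The encoder side of such a point cloud VAE can be sketched in NumPy as below. A 1D convolution with kernel size 1 is simply a linear layer shared by all points. The layer widths, the latent size, the PointNet-style max pooling, and the random initialization are assumptions for illustration, and batch normalization is omitted; this is not the authors' implementation.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

class PointVAEEncoderSketch:
    """Five shared per-point layers (kernel-size-1 1D convolutions) with ReLU,
    max pooling, and a Gaussian latent head using the reparameterization trick."""

    def __init__(self, widths=(3, 64, 128, 128, 256, 128), latent_dim=32, seed=0):
        rng = np.random.default_rng(seed)
        # Random He-style weights; a trained model would learn these.
        self.layers = [(rng.normal(0.0, np.sqrt(2.0 / a), (a, b)), np.zeros(b))
                       for a, b in zip(widths[:-1], widths[1:])]
        d = widths[-1]
        self.w_mu = rng.normal(0.0, np.sqrt(1.0 / d), (d, latent_dim))
        self.w_logvar = rng.normal(0.0, 0.01, (d, latent_dim))

    def __call__(self, points, rng):
        h = points                                       # (N, 3)
        for w, b in self.layers:
            h = relu(h @ w + b)                          # same weights for every point
        g = h.max(axis=0)                                # order-invariant global feature
        mu, logvar = g @ self.w_mu, g @ self.w_logvar
        z = mu + np.exp(0.5 * logvar) * rng.standard_normal(mu.shape)   # reparameterization
        kl = -0.5 * np.sum(1.0 + logvar - mu ** 2 - np.exp(logvar))     # KL(q(z|x) || N(0, I))
        return z, mu, logvar, kl
```

The max pooling makes `mu` independent of the order of the input points, which is why an unordered point cloud can be fed in directly.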

Yang et al. (2019a) tackle the problem of generating realistic 3D point clouds by modeling them as a distribution of shapes, each of which is itself a distribution of points. Thus, the method can sample new shapes as well as an arbitrary number of points from a shape. The method is called PointFlow, and it exploits the advantages of normalizing flows (Rezende and Mohamed 2015) within the VAE architecture. This method might not exceed the accuracy of state-of-the-art approaches, but it has great potential for image-based point cloud reconstruction.
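Normalizing flows compute exact likelihoods through the change-of-variables formula, log p(x) = log p_z(f⁻¹(x)) − log|det ∂f/∂z|. PointFlow itself uses continuous normalizing flows; the sketch below swaps in the simplest possible invertible map, an element-wise affine transform of a standard normal, purely to show how per-point log-likelihoods are obtained. The parameters are hypothetical.

```python
import numpy as np

def standard_normal_logpdf(z):
    """Log density of N(0, I), summed over the last axis."""
    return -0.5 * (z ** 2 + np.log(2.0 * np.pi)).sum(axis=-1)

def affine_flow_logpdf(x, scale, shift):
    """log p(x) for x = scale * z + shift with z ~ N(0, I)."""
    z = (x - shift) / scale                       # inverse transform f^{-1}(x)
    log_det = np.log(np.abs(scale)).sum()         # log |det df/dz| of a diagonal Jacobian
    return standard_normal_logpdf(z) - log_det

# Log-likelihood of three 3D points under a flow with hypothetical parameters.
points = np.array([[0.0, 0.0, 0.0], [0.5, -0.2, 1.0], [1.0, 1.0, 1.0]])
scale, shift = np.array([2.0, 1.0, 0.5]), np.array([0.0, 0.0, 1.0])
log_p = affine_flow_logpdf(points, scale, shift)
```

Because the density can be evaluated for any number of points, a flow-based model can score or sample as many points per shape as needed.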

The problem of generating complex shapes is also addressed by Li et al. (2022b), who propose EditVAE, which introduces part awareness into the model. In other words, the object generation task is divided into part generation tasks: the parts are assembled following a schematic, and each part has its own disentangled latent representation, which also learns the dependencies between parts. With standardized transformations, the authors achieve part-aware point cloud generation and shape editing by interchanging parts between objects. Gal et al. (2021) previously attempted this, but their method generated semantically insufficient parts. The authors use a primitive-based point cloud segmentation derived from the shapes created by Paschalidou et al. (2019). Objects are built from generic primitives, such as cubes and spheres, for which a superquadric parameterization and other deformation parameters (Barr 1984) are computed. An inductive bias (Locatello et al. 2019) is used to disentangle the latent representation, where semantically meaningful parts are classified by shape or pose. An approximate posterior is obtained via a simple deterministic mapping (Nielsen et al. 2020), with the PointNet architecture (Qi et al. 2017a) as the posterior and TreeGAN (Shu et al. 2019) as the decoder-generator module. EditVAE outperforms state-of-the-art methods in most cases, but further optimization is required to achieve the best accuracy across the board.
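The superquadric primitives mentioned above are commonly described by Barr's inside-outside function. The sketch below evaluates it for points already expressed in the primitive's local frame (rotation and translation are omitted); values below 1 lie inside the primitive, 1 on its surface, and above 1 outside. The function name and argument layout are illustrative.

```python
import numpy as np

def superquadric_inside_outside(points, size, eps1, eps2):
    """Barr's inside-outside function F for a superquadric centred at the origin.

    points: (N, 3) in the primitive's local frame; size: semi-axes (a1, a2, a3);
    eps1, eps2: shape exponents (1, 1 gives an ellipsoid, values near 0 a box)."""
    x, y, z = np.abs(np.asarray(points, dtype=float)).T   # abs() keeps fractional powers real
    a1, a2, a3 = size
    xy = (x / a1) ** (2.0 / eps2) + (y / a2) ** (2.0 / eps2)
    return xy ** (eps2 / eps1) + (z / a3) ** (2.0 / eps1)
```

With eps1 = eps2 = 1 and unit semi-axes, F reduces to x² + y² + z², the unit sphere; shrinking both exponents toward 0 turns the same primitive into a rounded cube.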

3.3.2 Point cloud classification and generation

The next method, by Gadelha et al. (2018), has an encoder capable of classifying point clouds, as well as a decoder that generates new point clouds from conventional RGB images. The latter task is also called image-to-shape inference (Simonyan and Zisserman 2015). Both branches are based on kd-trees (Klokov and Lempitsky 2017), which divide the data such that each split is along one of the axes; in other words, the split alternates between the three axes. Instead of kd-trees, rp-trees (Dasgupta and Freund 2008) are also possible; the difference is that their splits are not strictly axis-aligned but follow more arbitrary directions. The tree structure yields a locality-preserving, 1D ordered list of points, which can be processed efficiently in a feed-forward manner using 1D convolutions. The splitting results in probabilistic kd-trees, which perform multiplicative transformations while maintaining good scaling quality.
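The locality-preserving 1D ordering produced by a kd-tree can be sketched as a recursive median split that cycles through the x, y, and z axes; reading the leaves from left to right gives the ordered list that 1D convolutions can consume. This deterministic version is a simplification: the probabilistic kd-trees of Gadelha et al. (2018) introduce randomness into the splits, which is not reproduced here.

```python
import numpy as np

def kdtree_order(points):
    """Indices of `points` in the leaf order of a median-split kd-tree (x, y, z, x, ...)."""
    def split(idx, depth):
        if len(idx) <= 1:
            return idx
        axis = depth % 3                                        # alternate the split axis
        idx = idx[np.argsort(points[idx, axis], kind="stable")]
        half = len(idx) // 2                                    # median split
        return np.concatenate([split(idx[:half], depth + 1),
                               split(idx[half:], depth + 1)])
    return split(np.arange(len(points)), 0)

points = np.random.default_rng(0).random((16, 3))
order = kdtree_order(points)
ordered = points[order]   # neighbouring rows tend to be spatially close
```

Since every split halves the set, a cloud of 2^k points yields a balanced tree of depth k, which keeps the 1D layout regular.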

The main reason why this method is based on point clouds rather than voxels is that voxel grids scale poorly and, without additional extensions such as RBFs, do not model surface details. Also, because of self-occlusions, image-based representations are insufficient for modeling anything other than the surfaces seen from the sensor. Although the accuracies are decent, further optimization is possible.

The architecture of this method combines various implementations and ideas: multigrid networks (Ke et al. 2017), multiscale networks (Lin et al. 2017; He et al. 2015), and dilated (Yu and Koltun 2016) and atrous (Chen et al. 2018) filters. In multigrid networks, the data are converted into multiple resolutions, and the model architecture connects pyramids of different resolutions. As a result, during feed-forward processing, a change at a certain resolution also influences the other, adjacent resolution layers. In other words, scaling and the ratio of global to local features are learned. Dilated filters in convolutions are specifically meant for dense predictions, as in the case of point clouds, where multiscale information is relatively hard to gather without loss of resolution. Atrous filters, or upsampled convolutions, rely on dilated filters, which make it easier to choose the correct resolution without drastically increasing the number of parameters. Neural networks often require specific layer sizes or scales, but multiscale networks cope with arbitrary layer sizes.
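The effect of dilation can be seen in a plain 1D example: the kernel taps are spaced `dilation` samples apart, so a 3-tap kernel with dilation 2 covers 5 input samples without any extra parameters. This is a generic illustration of dilated (atrous) convolution, not the layer configuration used by Gadelha et al. (2018).

```python
import numpy as np

def dilated_conv1d(x, kernel, dilation=1):
    """'Valid' 1D cross-correlation with taps spaced `dilation` samples apart."""
    k = len(kernel)
    span = (k - 1) * dilation + 1              # receptive field of one output sample
    out_len = len(x) - span + 1
    return np.array([sum(kernel[j] * x[i + j * dilation] for j in range(k))
                     for i in range(out_len)])

x = np.arange(10.0)
y = dilated_conv1d(x, np.array([1.0, 1.0, 1.0]), dilation=2)   # x[i] + x[i+2] + x[i+4]
```

Stacking layers with dilations 1, 2, 4, and so on grows the receptive field exponentially while the parameter count grows only linearly.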

For shape classification, approximately \(10^3\) points are sampled from each point cloud using Poisson disk sampling (Bowers et al. 2010). Next, the points are split into probabilistic kd-trees. During training, the model minimizes the cross-entropy loss, and anisotropic scaling factors are applied as scale augmentation. The model builds the kd-trees of a given point cloud 16 times, and the final result is the average over these trees. The ModelNet (Wu et al. 2015) dataset is used for this task.

For image-to-shape inference, the encoder is borrowed from the Visual Geometry Group (VGG) network (Simonyan and Zisserman 2015), while the decoder is the authors' own design. The generated shapes have \(4\cdot 10^3\) points. For this task, the ShapeNet dataset is used, with the Chamfer Distance (CD) (14) as the loss function. The combination of these two tasks is used for unsupervised learning applications.

3.3.3 Point cloud completion

Han et al. (2019) proposed a PC-VAE that trains its VAE architecture by creating multiple viewpoints for each 3D object and then dividing each object into a front half and a back half based on the geodesic distance. The Euclidean distance is avoided because it causes non-semantic splitting, as suggested by Crane et al. (2017). By learning to reconstruct parts of the objects from different angles, the method simultaneously learns global and local features, along with the relationships between them, such as geometric and structural information. The training is unsupervised, and the model is also capable of generating new objects.
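A geodesic split of this kind can be approximated by running Dijkstra's algorithm on a k-nearest-neighbor graph of the points. In the sketch below, choosing the point closest to the viewpoint as the seed and taking the geodesically nearer half as the front half are assumptions for illustration, not necessarily the exact procedure of Han et al. (2019); all function names are hypothetical.

```python
import heapq
import numpy as np

def knn_graph(points, k):
    """Symmetric k-nearest-neighbour graph as a list of {neighbour: distance} dicts."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=-1)
    adj = [{} for _ in range(len(points))]
    for i, row in enumerate(np.argsort(d, axis=1)[:, 1:k + 1]):   # column 0 is the point itself
        for j in row:
            adj[i][int(j)] = adj[int(j)][i] = d[i, j]
    return adj

def geodesic_distances(adj, source):
    """Dijkstra shortest-path lengths on the graph, approximating geodesic distance."""
    dist = np.full(len(adj), np.inf)
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        du, u = heapq.heappop(heap)
        if du > dist[u]:
            continue                                  # stale heap entry
        for v, w in adj[u].items():
            if du + w < dist[v]:
                dist[v] = du + w
                heapq.heappush(heap, (dist[v], v))
    return dist

def split_front_back(points, viewpoint, k=8):
    """Front half = points geodesically closest to the point nearest the viewpoint."""
    seed = int(np.argmin(np.linalg.norm(points - viewpoint, axis=1)))
    order = np.argsort(geodesic_distances(knn_graph(points, k), seed), kind="stable")
    half = len(points) // 2
    return order[:half], order[half:]
```

Unlike a Euclidean split, the graph distance follows the surface, so two points that are close in space but lie on opposite sides of a thin part end up far apart.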

The authors chose point clouds in this case because they are relatively easy to create, collect, and process. The encoding of the aforementioned front and back halves is based on PointNet++ (Qi et al. 2017b), an extension of PointNet (Qi et al. 2017a). Then, given the encoding of the front half, the decoder is trained to reconstruct the back half. The authors consider the Earth Mover's distance (EMD) (Rubner et al. 2000) (15) more suitable than the CD (14), since it results in better visual quality.

This half-to-half prediction and self-reconstruction are achieved using three branches: aggregation, reconstruction, and prediction. The aggregation branch contains two encoders, one for local features and one for global features, both extracted from the front half of each sample point cloud; this branch is completed by an aggregation RNN (Liu et al. 2019b). The reconstruction branch recreates the full object from the front half, while the prediction branch generates only the other half for the given front half. The reconstruction branch is a decoder, while the prediction branch is a prediction RNN.

The RNN structure originates from Liu et al. (2019b), who created a sequence learning model for point clouds in an encoder-decoder structure. With an RNN architecture, the method can capture fine-grained information about smaller sequences of data and then transfer it to another sequence, discovering new features and relationships along the way.

The contribution of Kim et al. (2021) to 3D VAE models is the SetVAE architecture, which is specifically designed for unordered sets, such as point clouds. It is a hierarchical model capable of learning coarse-to-fine surface features at different levels. Its main focus is on two principles of working with set-structured data, exchangeability and variable cardinality; in other words, the model should be invariant to the ordering of the elements and to their number. The authors maintain the interactions between the elements of a set using attention-based set transformers (Lee et al. 2019) and multi-head attention (Vaswani et al. 2017) to achieve a hierarchy of latent variables (Sønderby et al. 2016; Vahdat and Kautz 2020). Their generator is composed of special attentive bottleneck layers, which ensure stochastic interactions between latent variables. The input is processed first by the multi-head attention block and then by the induced set attention block. The authors found that their model learns disentangled properties for generative purposes while using fewer parameters, and still outperforms PointFlow (Yang et al. 2019a) and several GAN-based methods. Further attention-based methods, including VAE-based methods in the 2D domain, are presented in the work of de Santana Correia and Colombini (2022). The method of Kim et al. (2021) still needs further optimization to outperform all state-of-the-art methods.
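The induced set attention block of Lee et al. (2019) can be reduced to a few lines: a small set of m inducing points first attends to the n input elements, and the elements then attend back to this summary. The cost is therefore O(nm) instead of O(n²), and the output is permutation-equivariant. The sketch below keeps multi-head attention and residual connections but omits layer normalization and the feed-forward sublayers; the random weights and sizes are hypothetical, and it is not the SetVAE code.

```python
import numpy as np

def softmax(a, axis=-1):
    a = a - a.max(axis=axis, keepdims=True)          # numerical stability
    e = np.exp(a)
    return e / e.sum(axis=axis, keepdims=True)

def multihead_attention(queries, keys_values, wq, wk, wv, heads):
    """Scaled dot-product attention split into `heads` heads; returns (len(queries), d)."""
    q, k, v = queries @ wq, keys_values @ wk, keys_values @ wv
    dh = q.shape[-1] // heads
    out = []
    for h in range(heads):
        s = slice(h * dh, (h + 1) * dh)
        attn = softmax(q[:, s] @ k[:, s].T / np.sqrt(dh))   # (n_q, n_kv) attention weights
        out.append(attn @ v[:, s])
    return np.concatenate(out, axis=-1)

def isab(x, inducing, params, heads=4):
    """Induced set attention block: inducing points summarise x, then x attends to the summary."""
    summary = inducing + multihead_attention(inducing, x, *params[0], heads)   # (m, d)
    return x + multihead_attention(x, summary, *params[1], heads)             # (n, d)

rng = np.random.default_rng(0)
d, n, m = 8, 20, 4
params = [tuple(rng.normal(0.0, d ** -0.5, (d, d)) for _ in range(3)) for _ in range(2)]
x, inducing = rng.normal(size=(n, d)), rng.normal(size=(m, d))
out = isab(x, inducing, params)
```

Permuting the rows of `x` permutes the rows of `out` in the same way, which is the exchangeability property discussed above.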

3.3.4 Point cloud segmentation

Part segmentation is a fundamental task in graphics and computer vision applications. For this reason, Nash and Williams (2017) created the Shape Variational Autoencoder (ShapeVAE), which models the joint distribution over parts. Its ability to synthesize shapes makes it suitable for surface reconstruction and for imputing missing parts during completion. The authors build on the work of Huang et al. (2015) by applying dense point correspondences in segmented parts. More specifically, this method consists of 3D keypoint modeling using a beta shape machine, which is a variant of a multi-layered Boltzmann machine. This results in a powerful latent space representation of both global and local features, which reconstructs good-quality surfaces. 3D objects often contain a large amount of data, such as surface points and surface normals, so it is necessary to capture their semantic characteristics. The authors introduce a hierarchical model in which each layer is responsible for a certain type of feature: the higher layers capture global features, and the lower layers capture local features. This method is strict with respect to the input data. Each object type has a predefined number of part categories, of which at most one may be missing. Additionally, the input meshes must be consistently scaled and aligned with each other. The drawback of this approach is its dependency on consistent mesh segmentations and dense correspondences. The proposed remedy is to experiment with unordered point sets. Also, the use of GANs might increase the quality of fine edges.

Neural Radiance Fields (NeRF) (Mildenhall et al. 2020) incorporate geometric structure through differentiable volume rendering and are mainly used for scene understanding. On its own, NeRF is a 6D continuous vector function whose inputs are ray coordinates. These ray coordinates are similar to the points of a point cloud converted from a depth image. The problem with the basic NeRF is that each scene requires extensive optimization, and the model hardly generalizes to new scenes. To solve this problem, building on Eslami et al. (2018), Kosiorek et al. (2021) combined NeRF with a VAE to create the Neural Radiance Fields VAE (NeRF-VAE), whose novelty is the ability to generate new scenes from a previously unseen environment. NeRF-VAE learns a distribution over radiance fields by conditioning them on latent variables. Since the training data contain the camera orientation of each scene, the model learns different viewpoints as well, which gives better control over the generative phase. The model is further improved by an attention-based NeRF-VAE variant. The approach of Kosiorek et al. (2021) limits the expressivity of each scene to increase the overall accuracy over multiple scenes, which is the opposite of the basic NeRF. Low-dimensional latent variables are also a promising direction for future work.
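The differentiable volume rendering at the core of NeRF, and therefore of NeRF-VAE, composites the densities σᵢ and colors cᵢ sampled along a ray with the quadrature C = Σᵢ Tᵢ (1 − exp(−σᵢ δᵢ)) cᵢ, where δᵢ is the distance between adjacent samples and Tᵢ = exp(−Σ_{j<i} σⱼ δⱼ) is the transmittance. A minimal NumPy version for a single ray follows; the function name and sample values are hypothetical.

```python
import numpy as np

def render_ray(sigmas, colors, deltas):
    """Composite densities (K,), RGB colors (K, 3) and step sizes (K,) into one pixel color."""
    alpha = 1.0 - np.exp(-sigmas * deltas)                   # opacity of each ray segment
    # Transmittance: fraction of light surviving all previous segments.
    trans = np.exp(-np.concatenate([[0.0], np.cumsum(sigmas * deltas)[:-1]]))
    weights = trans * alpha
    return (weights[:, None] * colors).sum(axis=0), weights

colors = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
rgb, w = render_ray(np.array([50.0, 50.0]), colors, np.array([1.0, 1.0]))   # dense first sample hides the second
```

Every operation here is differentiable, which is what allows the densities and colors (and, in NeRF-VAE, the latent variables they are conditioned on) to be learned from images alone.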

Following the trend of presenting discriminative methods based on VAEs, Anvekar et al. (2022) created a method that captures hierarchical local and global geometric signatures in an unsupervised manner. The authors propose a Geometric Proximity Correlator (GPC) and variational sampling to extract and analyze the morphology of point clouds. Their classification tests revealed that the combination of this method with PointNet (Qi et al. 2017a) outperforms several other methods in accuracy, losing only to the Point Transformer Network (Zhao et al. 2021). At the class level, this approach outperforms competing models in some classes while falling behind in others.

Yu et al. (2022) generalize the transformer idea of BERT (Devlin et al. 2019) to point clouds. The resulting method, Point-BERT, is pre-trained with a Masked Point Modeling (MPM) task. The point cloud is divided into local patches, and a discrete VAE (Rolfe 2017) is used to generate discrete tokens that store the required information. Patches are then randomly masked out, and the transformer learns to estimate the information hidden behind the masked patches, which improves the quality of the model. For the discrete VAE, a DGCNN-based tokenizer (Wang et al. 2019) is used together with FoldingNet (Yang et al. 2018). Runtime optimization is needed for possible future implementations.
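The patch division and random masking steps can be sketched with farthest point sampling (FPS) for the patch centers and k-nearest-neighbor grouping around them, a common way to form such patches. The numbers of patches and neighbors and the mask ratio below are hypothetical, and the discrete VAE tokenizer itself is not shown.

```python
import numpy as np

def farthest_point_sampling(points, m, rng):
    """Greedily pick m indices, each as far as possible from those already chosen."""
    chosen = [int(rng.integers(len(points)))]
    dist = np.linalg.norm(points - points[chosen[0]], axis=1)
    for _ in range(m - 1):
        nxt = int(np.argmax(dist))
        chosen.append(nxt)
        dist = np.minimum(dist, np.linalg.norm(points - points[nxt], axis=1))
    return np.array(chosen)

def make_patches(points, num_patches, k, rng):
    """Group the k nearest points around each FPS center, relative to that center."""
    centers = points[farthest_point_sampling(points, num_patches, rng)]        # (P, 3)
    d = np.linalg.norm(centers[:, None, :] - points[None, :, :], axis=-1)     # (P, N)
    groups = np.argsort(d, axis=1)[:, :k]                                      # (P, k)
    return points[groups] - centers[:, None, :], centers

def random_patch_mask(num_patches, ratio, rng):
    """Boolean mask with round(ratio * num_patches) randomly chosen patches masked."""
    mask = np.zeros(num_patches, dtype=bool)
    mask[rng.choice(num_patches, int(round(ratio * num_patches)), replace=False)] = True
    return mask

rng = np.random.default_rng(0)
cloud = rng.random((256, 3))
patches, centers = make_patches(cloud, num_patches=16, k=8, rng=rng)
mask = random_patch_mask(16, ratio=0.4, rng=rng)
```

During pre-training, the masked patches are hidden from the transformer, which must then predict their discrete tokens from the visible ones.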

3.3.5 Anomaly detection

Detecting outlier data, or anomalies, is an important step for point cloud generation. Masuda et al. (2021) created a VAE-based method that detects anomalies in point clouds without the need for supervision. The model learns to extract the distribution of the characteristics of the given shapes using a graph-based encoder (Shen et al. 2018) and then tries to reconstruct them using a FoldingNet-based decoder (Yang et al. 2018), which folds a spherical grid rather than a plane. Two distance metrics were considered, the EMD (Rubner et al. 2000) (15) and the CD (14); the CD was preferred because of its faster convergence. The detection performance is expressed as the area under the receiver operating characteristic curve, which measures whether sample point clouds are correctly classified as normal or abnormal. The approach has so far shown only theoretical advantages; real-life experiments are planned for the future.
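The ROC AUC used for evaluation can be computed directly from anomaly scores as the probability that a randomly chosen abnormal sample receives a higher score than a randomly chosen normal one (the Mann-Whitney formulation). Using a reconstruction error, for example the CD between input and reconstruction, as the score is an assumption in this sketch, and the example values are hypothetical.

```python
import numpy as np

def roc_auc(scores, labels):
    """ROC AUC via pairwise comparisons; ties count as one half.

    scores: anomaly scores (higher = more anomalous); labels: 1 = anomaly, 0 = normal."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))

# Hypothetical reconstruction errors for four abnormal and four normal shapes.
errors = [0.9, 0.7, 0.4, 0.8, 0.1, 0.3, 0.5, 0.2]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
auc = roc_auc(errors, labels)   # 15 of 16 pairs are ranked correctly
```

An AUC of 1.0 means every anomaly scores above every normal sample, and 0.5 corresponds to random ranking; this threshold-free property is why the metric suits unsupervised detectors.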

Czerniawski et al. (2021) propose a change detection method for 3D computer models of buildings, similar to the anomaly detection method above. They implement point cloud completion with a hierarchical VAE, which improves change detection by about 0.2 in terms of the area under the curve. The hierarchical structure is ensured by skip connections between the encoder and decoder layers. Buildings are often stored using Building Information Modeling (BIM) (Eastman et al. 2021), which is a digital automated platform. Scanning buildings is a complex task, since current data acquisition devices, such as laser scanners and depth cameras, have many shortcomings; for example, occluded parts or nonoptimal reflective surfaces often cause surfaces to be missing or deformed. In addition, capturing every detail of a building leads to impractically large datasets. Thus, the perceptual completion of the proposed method helps compare two computer models or point clouds. The basis of this method is the Variational Shape Learner (VSL) network (Liu et al. 2018). The authors create their own dataset, using a LIDAR or a time-of-flight (ToF) camera to scan buildings and create point clouds (Ma et al. 2020a). Furthermore, they modified the scans (Liu et al. 2018) to be incomplete. The VSL network, however, is pretrained on the synthetic ModelNet (Wu et al. 2015) dataset. The approach also has limitations: change detection is not automated, the resolution is limited, and the lack of diversity calls for more real-life data.

For autonomous robots, erroneous sensor data, called anomalies, are unavoidable. To detect these anomalies and resolve potential failures, Ji et al. (2020) proposed the Supervised Variational Autoencoder (SVAE) architecture. The core of this model is global feature extraction from high-dimensional inputs. The method is specifically tested on agbots, low-cost agricultural robots that roam under crop canopies to help manage the ecosystem (Higuti et al. 2019; Kayacan and Chowdhary 2019). For these robots, identifying anomalies and their causes without high computational demands is important. The input is multi-modal, consisting of a high-dimensional sensor, such as a LIDAR or a ToF camera, and a low-dimensional sensor, such as a wheel encoder. The output of the model specifies whether an anomaly exists, together with the necessary control sequence. The two parts of the model are the feature generator and the classifier, and the balance between them is maintained by the VAE. By compressing the high-dimensional signals, the encoder filters out noise and finds the global features. The model is trained in a single stage, and the extracted features outperform baseline methods in anomaly detection, such as the VAE Fixed Features + MLP approach proposed by Kingma et al. (2014).

3.3.6 Representation learning

All currently available 3D data representation methods have limitations that negatively affect the results (Friedrich et al. 2018). One of the most popular 3D representation formats is the point cloud; however, a point cloud is unstructured, which is a major bottleneck in many applications, since finding the connections between the points is a difficult and time-consuming procedure. These applications are usually limited to a certain number of points per point cloud, typically 2048, to retain an acceptable runtime. On the other hand, depth images offer the same performance and efficiency as conventional 2D image processing techniques (Molnár et al. 2021). However, depth images suffer from self-occlusion: they contain only a projection of an object or a scene, without storing information about hidden objects. Capturing the same object from different viewpoints and treating the captures as a collection of images, or as an omniview projection, is a possible solution; however, this results in duplicated data points, while the problem of hidden surfaces remains.

To address the problem of processing complex data formats such as point clouds, we next present methods that compress point clouds into a latent representation. A latent representation is less intuitive for a human observer, but it is more convenient for a neural network to work with, yielding a solution that is runtime-efficient and preserves the data acceptably at the same time. Such a method was proposed by Achlioptas et al. (2018), who suggest that a GAN performs better if it operates in the latent space. First, an autoencoder computes a latent space, and then a GAN is trained inside that latent space. This reduces mode collapse and other difficulties of GAN training, and results in newly generated point clouds. One slight drawback is that a separate model must be trained for every category. We conducted a small test by introducing a latent vector that encoded a chair with missing legs. The output was a noisy object, but all four legs were attached (Fig. 7). This suggests that the method may not decode certain details or partial shapes faithfully.

Experiment with the method of Achlioptas et al. (2018). The model was trained on conventional chairs, and then we reconstructed a chair with missing legs. The legs are present in the reconstruction, but the point cloud is noisy.

In this work, the CD (14) and the EMD (Rubner et al. 2000) (15) are considered as metrics. The EMD is differentiable almost everywhere, while the CD is differentiable everywhere and computationally less demanding. Despite this, Zamorski et al. (2020) state that the EMD leads to better generative capabilities because it compares distributions of points rather than distances between points.
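Both metrics can be computed in a few lines. The sketch below uses one common form of each: the CD as the sum of the mean squared nearest-neighbor distances in both directions, and the EMD as the minimum total Euclidean distance over bijections between two equally sized sets, solved exactly with SciPy's `linear_sum_assignment`. Normalization conventions vary between papers, so these functions are illustrative and need not match (14) and (15) term by term.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

def pairwise_sq_dists(s1, s2):
    """(N, M) matrix of squared Euclidean distances."""
    return ((s1[:, None, :] - s2[None, :, :]) ** 2).sum(axis=-1)

def chamfer_distance(s1, s2):
    """Mean squared nearest-neighbour distance from S1 to S2 plus from S2 to S1."""
    d = pairwise_sq_dists(s1, s2)
    return float(d.min(axis=1).mean() + d.min(axis=0).mean())

def earth_movers_distance(s1, s2):
    """Minimum total Euclidean cost over bijections phi: S1 -> S2 (equal sizes only)."""
    if len(s1) != len(s2):
        raise ValueError("EMD in this form needs two equally sized point sets")
    cost = np.sqrt(pairwise_sq_dists(s1, s2))
    rows, cols = linear_sum_assignment(cost)      # optimal one-to-one matching
    return float(cost[rows, cols].sum())
```

The exact assignment scales roughly cubically with the number of points, which is why approximate EMD solvers are common in practice and why the CD is the cheaper option.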

Following the idea of combining GANs and VAEs, Zamorski et al. (2020) created a method that allows the model to learn a latent space representation of objects and to generate point clouds. The latent space can be either continuous or binary. The architecture is a mixture of VAEs and GANs known as the AAE architecture (Makhzani et al. 2015). In this case, the decoder is called a generator module, and an additional discriminator module decides whether the latent variables are fake or real. The encoder and decoder are updated in an alternating way, using (7), with the results shown in Fig. 8. The advantage over a basic autoencoder is that new data are often created by sampling the latent space, and a simple autoencoder struggles to decode such previously unseen latent values. This work shows that more advanced architectures, such as combinations of VAEs and GANs, improve the reconstruction compared to the simple AEs presented in Achlioptas et al. (2018).

Reconstruction of 3D objects from latent space using 3dAAE (Zamorski et al. 2020)

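The EMD between two point sets is given by

\[ d_{EMD}(\mathbf {S_1},\mathbf {S_2}) = \min _{\phi :\mathbf {S_1}\rightarrow \mathbf {S_2}} \sum _{x\in \mathbf {S_1}} \Vert x-\phi (x)\Vert _2, \]

where \(\mathbf {S_1}\) and \(\mathbf {S_2}\) are two equally sized subsets of points and \(\phi \) is a bijection between them.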
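The adversarial regularization of the latent space in an AAE can be summarized by two loss terms. The sketch below assumes the standard binary cross-entropy GAN formulation, with the non-saturating loss for the encoder, and a hypothetical linear discriminator; it does not reproduce (7) exactly, and the reconstruction term that is optimized alongside the encoder loss is omitted.

```python
import numpy as np


def bce_with_logits(logits: np.ndarray, target: float) -> float:
    """Mean binary cross-entropy computed stably from raw discriminator logits."""
    return float(np.mean(np.maximum(logits, 0.0) - logits * target
                         + np.log1p(np.exp(-np.abs(logits)))))


def aae_latent_losses(d_prior_logits: np.ndarray, d_encoded_logits: np.ndarray):
    """Adversarial terms of an AAE objective on the latent space.

    d_prior_logits:   discriminator logits for samples z ~ p(z), labelled "real"
    d_encoded_logits: discriminator logits for codes z = E(x), labelled "fake"
    Returns (discriminator_loss, encoder_loss).
    """
    d_loss = bce_with_logits(d_prior_logits, 1.0) + bce_with_logits(d_encoded_logits, 0.0)
    # Non-saturating encoder loss: make encoded codes look like prior samples.
    e_loss = bce_with_logits(d_encoded_logits, 1.0)
    return d_loss, e_loss


# Toy usage with a hypothetical linear discriminator D(z) = z @ w + b.
rng = np.random.default_rng(0)
w, b = rng.normal(size=8), 0.0
z_prior = rng.normal(size=(32, 8))             # samples from the prior N(0, I)
z_encoded = rng.normal(loc=2.0, size=(32, 8))  # stand-in for encoder outputs E(x)
d_loss, e_loss = aae_latent_losses(z_prior @ w + b, z_encoded @ w + b)
```

In the alternating scheme, one step minimizes `d_loss` with respect to the discriminator parameters, and the next minimizes the reconstruction loss with respect to the encoder and decoder, plus `e_loss` with respect to the encoder.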

Sharing the idea of creating a manageable latent representation format for point clouds, Wang et al. (2020) proposed the use of geometry images (Gu et al. 2002). In this way, the converted point clouds can be processed as basic 2D images with minimal information loss. An example of the conversion is shown in Fig. 9. A geometry image is a reshaped point cloud in which the x, y, and z coordinates are stored as RGB color values. To increase the training stability of the GAN, Wang et al. (2020) used an AAE architecture.
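Once the points are arranged on a regular grid, storing them as an image is only a reshape plus a normalization. The sketch below assumes that the point cloud already has exactly H × W points ordered by a surface parameterization (obtaining that ordering is the hard part and is not shown); the per-axis min-max normalization to [0, 1] is our choice, and the function names are hypothetical.

```python
import numpy as np


def point_cloud_to_geometry_image(points: np.ndarray, height: int, width: int) -> np.ndarray:
    """Store an ordered point cloud as a (height, width, 3) 'RGB' image.

    Assumes the (height * width, 3) points are already ordered by a surface
    parameterization, so neighbouring pixels are neighbouring surface points.
    Each axis is min-max normalized to [0, 1] so that xyz can act as RGB.
    """
    if points.shape != (height * width, 3):
        raise ValueError("expected exactly height * width points")
    lo, hi = points.min(axis=0), points.max(axis=0)
    scale = np.where(hi > lo, hi - lo, 1.0)  # guard against flat axes
    return ((points - lo) / scale).reshape(height, width, 3)


def geometry_image_to_point_cloud(image: np.ndarray, lo: np.ndarray, hi: np.ndarray) -> np.ndarray:
    """Undo the normalization to recover the (height * width, 3) xyz coordinates."""
    scale = np.where(hi > lo, hi - lo, 1.0)
    return image.reshape(-1, 3) * scale + lo


# Toy, unordered data: the round trip works, but the image is only
# geometrically meaningful for points ordered by a parameterization.
pts = np.random.default_rng(0).normal(size=(16 * 16, 3))
img = point_cloud_to_geometry_image(pts, 16, 16)
restored = geometry_image_to_point_cloud(img, pts.min(axis=0), pts.max(axis=0))
```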

The conversion from a polygon mesh to a geometry image can be performed without neural networks. Its advantage is that the results are reliably high resolution; its drawback is the longer processing time. The original method by Gu et al. (2002) divides the input model into disks and then folds them into an atlas, which means that the information about the connections between different surfaces is lost. To solve this issue, Sinha et al. (2016) proposed a planar parameterization that first deforms the mesh into a genus-0 model (Fan et al. 2020), that is, a model without holes. The object is then mapped onto a spherical domain, from which it is sampled onto an octahedron. The method traces paths on the surface of the object along the edges of the octahedron and cuts the surface into pieces, from which flat 2D geometry images are obtained. The resulting image has no discontinuities along its boundaries. It may be possible to replace this complicated procedure with VAE-based networks; unfortunately, at the time of writing, no such method is available.
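The sphere-to-octahedron step can be sketched with the widely used octahedral unfolding of the sphere into a square. The code assumes that the mesh vertices have already been mapped to unit vectors on the sphere (the spherical parameterization itself is not shown) and is not necessarily identical to the implementation of Sinha et al. (2016): the upper half of the octahedron becomes the central diamond of the square, and the four faces of the lower half are folded outward into the corners.

```python
import numpy as np


def _sign_not_zero(v: np.ndarray) -> np.ndarray:
    """Sign function that maps 0 to +1, so points on the axes fold consistently."""
    return np.where(v >= 0.0, 1.0, -1.0)


def sphere_to_octahedral_square(dirs: np.ndarray) -> np.ndarray:
    """Map unit vectors (K, 3) on the sphere to 2D points in [-1, 1]^2."""
    # 1. Project onto the octahedron |x| + |y| + |z| = 1.
    p = dirs / np.abs(dirs).sum(axis=1, keepdims=True)
    x, y, z = p[:, 0], p[:, 1], p[:, 2]
    # 2. Upper half (z >= 0) keeps (x, y): the central diamond |u| + |v| <= 1.
    # 3. Lower half (z < 0) is unfolded outward into the four corner triangles.
    fx = (1.0 - np.abs(y)) * _sign_not_zero(x)
    fy = (1.0 - np.abs(x)) * _sign_not_zero(y)
    u = np.where(z < 0.0, fx, x)
    v = np.where(z < 0.0, fy, y)
    return np.stack([u, v], axis=1)


# North pole, south pole, and a point on the equator.
dirs = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]])
uv = sphere_to_octahedral_square(dirs)  # rows: (0, 0), (1, 1), (1, 0)
```

Sampling the square on a regular pixel grid and inverting this map would give, for every pixel, a position on the sphere and hence a surface point to store in the geometry image.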

Following the idea of geometry images, Molnár and Tamás (2022) proposed a VAE-based representation learning method that generates a point cloud by first generating geometry images. Their contributions are the hyperparameter tuning of the \(\beta \)-VAE and the analysis of the impact of different geometry image sizes. Additionally, they introduced an autoencoder-based point cloud to geometry image converter to mitigate the unstable conversion typical of this task. The main drawbacks of geometry images are the creation of the geometry image itself and the loss of fine edge details.
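The \(\beta \)-VAE objective tuned in such work weights the KL term of the standard VAE loss by a factor \(\beta \). The sketch below assumes a squared-error reconstruction term on geometry images and a diagonal Gaussian posterior; the default `beta=4.0` is purely illustrative and is not the value reported by Molnár and Tamás (2022).

```python
import numpy as np


def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    """beta-VAE objective averaged over the batch: reconstruction + beta * KL.

    x, x_recon:  (B, ...) inputs and reconstructions, e.g. geometry images
    mu, log_var: (B, D) parameters of the diagonal Gaussian posterior q(z|x)
    The KL term is the closed form of KL(q(z|x) || N(0, I)).
    """
    batch = x.shape[0]
    recon = np.sum((x - x_recon) ** 2) / batch
    kl = -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var)) / batch
    return recon + beta * kl, recon, kl


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps; in an autodiff framework this lets
    gradients flow through mu and sigma."""
    return mu + np.exp(0.5 * log_var) * rng.standard_normal(mu.shape)


rng = np.random.default_rng(0)
x = rng.uniform(size=(2, 32, 32, 3))  # two hypothetical 32x32 geometry images
mu, log_var = np.zeros((2, 8)), np.zeros((2, 8))
z = reparameterize(mu, log_var, rng)
# Perfect reconstruction and a posterior equal to the prior give a zero loss.
total, recon, kl = beta_vae_loss(x, x, mu, log_var)
```

Values of \(\beta \) above 1 push the posterior more strongly towards the prior, which tends to give a more regular latent space at the cost of reconstruction detail.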

Finding the optimal representation for working with point clouds is a critical problem. Using other data formats, such as depth images or geometry images, together with point clouds enables transfer learning (Molnár et al. 2021) or depth data error detection (Masuda et al. 2021). Therefore, the representation must be chosen carefully depending on the task. This problem is often referred to as representation learning.

Another representation is the single-view image, which Zhang et al. (2021) convert into a 3D shape. Their View-aware Geometry-structure Network (VGSNet) learns a multimodal feature representation from 2D images, 3D geometry, and structure, and is capable of reconstructing the geometry and structure of a shape from a single-view image. VGSNet is based on StructureNet (Mo et al. 2019a). The main goal is to create 3D point clouds from multimodal input. The model is trained on images and their 3D point clouds simultaneously, achieving a one-to-one mapping while learning a multimodal feature representation (Guo et al. 2019). By learning the shapes of parts, the method achieves consistent 3D reconstruction. Similarly to the work of Wang and Yoon (2021), the main branch consists of a VAE, while an auxiliary branch is introduced for separate image encoding. VGSNet is evaluated on synthetic images, while real-life evaluation is reserved for future work. The authors also plan further optimizations through experiments with different losses and data structures.

Wu et al. (2016) first proposed the 3D-GAN model and then an extension of it, called 3dvaegan, which is a VAE and GAN-based 3D shape generator. The novelty is an encoder that takes 2D images and learns their latent representation, from which the generative and discriminative modules produce a corresponding 3D shape. The method is very similar to Larsen et al. (2016), but it operates on 3D voxel grids. It is a promising unsupervised method; however, further optimization is needed to compete with supervised approaches such as Sedaghat et al. (2017).

3.4 Other 3D-related applications

We focus on methods that do not primarily rely on any of the three previous data representation techniques (polygon mesh, voxel grid, point cloud) and are specialized in a certain domain. The described methods come from the domains of medicine, motion sequence generation, auditory signal processing, hand pose estimation, and evaluation tasks.

3.4.1 Evaluation of the latent space for different architectures

When compressing point clouds into a latent space, or applying other representation learning procedures, the question arises of how well the latent space stores relevant information. Ali and van Kaick (2021) address this question by comparing different models and the quality of their latent spaces. The analyzed architectures are 3D-AE (Guan et al. 2020), 3D-VAE (Brock et al. 2016), and modified GANs such as 3D-GAE (Guan et al. 2020) or 3D-PGAE, an in-house modification of 3D-GAE. The latent spaces of these methods are compared in terms of their interpolation capabilities and how meaningfully the information inside the latent space is organized. These properties are intuitive, but they are rarely optimized.
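Interpolation capability is usually inspected by decoding codes along a path between two latent vectors. The sketch below shows linear interpolation and spherical linear interpolation (slerp); which path Ali and van Kaick (2021) use is not stated here, and the helper names are hypothetical. Slerp keeps the norm constant when both endpoints have the same norm, whereas the linear path passes closer to the origin, a low-density region for high-dimensional Gaussian priors.

```python
import numpy as np


def lerp(z0: np.ndarray, z1: np.ndarray, t: float) -> np.ndarray:
    """Linear interpolation between two latent codes."""
    return (1.0 - t) * z0 + t * z1


def slerp(z0: np.ndarray, z1: np.ndarray, t: float, eps: float = 1e-7) -> np.ndarray:
    """Spherical linear interpolation between two latent codes."""
    cos_omega = np.dot(z0, z1) / (np.linalg.norm(z0) * np.linalg.norm(z1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    sin_omega = np.sin(omega)
    if sin_omega < eps:  # (anti)parallel codes: slerp is ill-defined, use lerp
        return lerp(z0, z1, t)
    return (np.sin((1.0 - t) * omega) * z0 + np.sin(t * omega) * z1) / sin_omega


def interpolation_path(z0, z1, steps: int = 8, mode: str = "slerp") -> np.ndarray:
    """Stack of latent codes from z0 to z1; decode each one to inspect the shapes."""
    step = slerp if mode == "slerp" else lerp
    return np.stack([step(z0, z1, t) for t in np.linspace(0.0, 1.0, steps)])


z0, z1 = np.array([1.0, 0.0]), np.array([0.0, 1.0])
print(np.linalg.norm(slerp(z0, z1, 0.5)))  # ~1.0
print(np.linalg.norm(lerp(z0, z1, 0.5)))   # ~0.707
```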

The authors create a synthetic dataset in which the prior structure and semantic attributes of the shapes are known. The dataset creation is based on the split grammar of Wonka et al. (2003). As metrics, they measure the correlation between the shapes and the latent space, and between the shape attributes and the organization of the latent space, since similar features should be grouped near each other. The idea comes from a similar comparison for 2D embeddings (Hinton and Salakhutdinov 2006), in which the learned latent variables are colored according to the class label. As an evaluation metric, Theis et al. (2016) discuss Parzen window estimates. Ali and van Kaick (2021) conclude that VAE-based methods provide lower-quality latent space organization than other autoencoder-based methods, while GAN-based methods produce better qualitative results than VAEs. This experiment was limited by the number of shapes and their level of detail. Future work includes increasing the number of shapes as well as experimenting with different data structures.
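A Parzen window estimate fits a kernel density to generated samples and reports the average log-likelihood of held-out data under it. The sketch below assumes an isotropic Gaussian kernel with a hand-picked bandwidth `sigma`; the function name is hypothetical. Theis et al. (2016) caution that such estimates can be misleading in high-dimensional spaces.

```python
import numpy as np
from scipy.special import logsumexp


def parzen_log_likelihood(test: np.ndarray, samples: np.ndarray, sigma: float) -> float:
    """Mean log-likelihood of test points under a Gaussian Parzen window
    (kernel density) estimate built from generated samples.

    test: (T, D) held-out points, samples: (S, D) generated points,
    sigma: kernel bandwidth, normally selected on a validation set.
    """
    n_samples, dim = samples.shape
    # Squared distance between every test point and every sample: (T, S).
    sq = ((test[:, None, :] - samples[None, :, :]) ** 2).sum(axis=-1)
    log_kernel = -sq / (2.0 * sigma ** 2)
    log_norm = np.log(n_samples) + 0.5 * dim * np.log(2.0 * np.pi * sigma ** 2)
    # log p(x) = logsumexp_i(-||x - s_i||^2 / (2 sigma^2)) - log S - (D/2) log(2 pi sigma^2)
    return float(np.mean(logsumexp(log_kernel, axis=1) - log_norm))


# One sample and one test point at the origin, D = 1, sigma = 1:
# the result is log N(0; 0, 1) = -0.5 * log(2 * pi).
print(parzen_log_likelihood(np.zeros((1, 1)), np.zeros((1, 1)), sigma=1.0))  # about -0.9189
```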

3.4.2 Hand pose estimation in 6DoF

Spurr et al. (2018), building on the work of Wan et al. (2017), estimate the 3D hand pose from 2D RGB images or depth images. Cross-modal training is rarely used, although VAEs naturally allow several data types to be encoded into the same latent space, from which the desired data type can be recovered. This method significantly improves on Zimmermann and Brox (2017) on RHD but falls behind on STB. The architecture can be seen in Fig. 10.

The architecture from Spurr et al. (2018), which is an example of multimodal data processing. The model has a branch for RGB images and another for 3D hand pose, but the latent space is shared

Hand pose estimation using the method of Spurr et al. (2018)

This method exploits this capability extensively by estimating hand poses from depth images or even from conventional RGB images, as in Fig. 11. The model is very specific: the space of hand poses is limited (Santello et al. 1998), and its dimensionality was further reduced by Tagliasacchi et al. (2015). Nevertheless, the results of this hand pose estimator are notable. The VAE architecture is used effectively to project 2D key points into a low-dimensional latent space and then estimate the 3D joint posterior, outperforming the similar method of Zimmermann and Brox (2017).
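The shared latent space that makes such cross-modal estimation possible can be sketched structurally. In the toy class below, random linear maps stand in for the networks, each modality has its own encoder and decoder, and translation means encoding with one modality and decoding with another. The modality names, the 21-joint sizes, and the class itself are hypothetical; the training loop, which would alternate over (source, target) pairs with reconstruction and KL losses, is omitted.

```python
import numpy as np


class CrossModalVAESketch:
    """Toy cross-modal VAE: one encoder and one decoder per modality, all
    sharing a single latent space. Random linear maps stand in for networks."""

    def __init__(self, dims: dict, latent_dim: int, seed: int = 0):
        self.rng = np.random.default_rng(seed)
        self.latent_dim = latent_dim
        # Each encoder outputs the mean and log-variance of q(z | x).
        self.enc = {m: self.rng.normal(scale=0.1, size=(d, 2 * latent_dim))
                    for m, d in dims.items()}
        self.dec = {m: self.rng.normal(scale=0.1, size=(latent_dim, d))
                    for m, d in dims.items()}

    def encode(self, x: np.ndarray, modality: str):
        h = x @ self.enc[modality]
        return h[:, :self.latent_dim], h[:, self.latent_dim:]  # mu, log_var

    def translate(self, x: np.ndarray, source: str, target: str) -> np.ndarray:
        """Encode with the source encoder, sample z, decode with the target decoder."""
        mu, log_var = self.encode(x, source)
        z = mu + np.exp(0.5 * log_var) * self.rng.standard_normal(mu.shape)
        return z @ self.dec[target]


# Hypothetical sizes: 21 hand joints as 2D key points (42) and as 3D pose (63).
model = CrossModalVAESketch({"keypoints_2d": 42, "pose_3d": 63}, latent_dim=16)
pose = model.translate(np.zeros((4, 42)), source="keypoints_2d", target="pose_3d")
print(pose.shape)  # (4, 63)
```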

Yang et al. (2019b) argue that the method of Spurr et al. (2018) converges too slowly and compromises the accuracy of pose reconstruction. In addition to RGB data, Yang et al. (2019b) explore other input data types, such as point clouds and heat maps. As for the architecture, they extend the cross-modal VAE architecture of Spurr et al. (2018) and use two latent space alignment strategies that enable the model to learn the hand poses.
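Two common ways to tie the latent posteriors of different modalities together are a KL divergence penalty between them and a product of Gaussian experts that fuses them into one posterior. The sketch below implements both for diagonal Gaussians; we do not claim that these are exactly the alignment operators of Yang et al. (2019b), and the values in the usage example are hypothetical.

```python
import numpy as np


def kl_diag_gaussians(mu_q, log_var_q, mu_p, log_var_p):
    """KL(q || p) between diagonal Gaussians, summed over latent dimensions.

    As an alignment penalty it is zero exactly when the posterior inferred
    from one modality matches the posterior inferred from the other.
    """
    var_q, var_p = np.exp(log_var_q), np.exp(log_var_p)
    return 0.5 * np.sum(
        log_var_p - log_var_q + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0, axis=-1
    )


def product_of_gaussians(mus, log_vars):
    """Fuse per-modality diagonal Gaussians into one (product of experts).

    Precisions add up, and the fused mean is the precision-weighted mean.
    """
    precisions = np.exp(-np.asarray(log_vars))
    var = 1.0 / precisions.sum(axis=0)
    mu = var * (np.asarray(mus) * precisions).sum(axis=0)
    return mu, np.log(var)


# Hypothetical 1D posteriors inferred from RGB and from a point cloud.
mu_rgb, lv_rgb = np.array([0.0]), np.array([0.0])
mu_pc, lv_pc = np.array([2.0]), np.array([0.0])
print(kl_diag_gaussians(mu_rgb, lv_rgb, mu_pc, lv_pc))  # 2.0
mu_f, lv_f = product_of_gaussians([mu_rgb, mu_pc], [lv_rgb, lv_pc])  # mean 1.0, variance 0.5
```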

Gu et al. (2020) highlight the problem of balancing modality-specific and shared representations. Additionally, unnecessary information, such as the camera intrinsics or the background, is stored in the shared latent space. Gu et al. (2020) therefore propose disentangling these features from the latent space. By joining two VAEs through their latent spaces to process both the 2D and the 3D modalities, they achieve a lower mean joint error than previous methods. The architecture can be seen in Fig. 12.

The architecture from Gu et al. (2020), which is an example of multimodal data processing. The model has a branch for RGB images and another for 3D hand pose. Between the branches, there is a translator (T) layer and two discriminator modules (\(D_1\), \(D_2\))

3.4.3 Auditory 3D perception

Most of the information we perceive is visual; through hearing, we estimate the position of a sound source. To exploit this phenomenon, audio device manufacturers need to analyze auditory information. Since the shapes of the ears and ear canals differ from person to person, companies need to develop a Head-Related Transfer Function (HRTF) that tailors the sound to each individual to achieve the best possible surround sound. Usually, this requires a tedious procedure in which a professional measures the hearing of the person with specific tools. To address this issue, Yamamoto and Igarashi (2017) developed a VAE-based 3D spatial sound individualization technique, where the calibration procedure consists only of sound tests and the responses of the user. Similarly, better spatial audio profiles can be created by analyzing audio sources (Karamatlı et al. 2019). Although the work of Yamamoto and Igarashi (2017) is promising, further data variability tests, quality assessments, and calibration optimization are needed.
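In the time domain, applying an HRTF for a given source direction amounts to convolving the signal with a pair of head-related impulse responses (HRIRs), one per ear. The sketch below shows only this rendering step, not the VAE-based individualization of Yamamoto and Igarashi (2017); the HRIRs are hypothetical toy filters that encode a simple interaural time and level difference, and both are assumed to have the same length.

```python
import numpy as np


def render_binaural(mono: np.ndarray, hrir_left: np.ndarray, hrir_right: np.ndarray) -> np.ndarray:
    """Apply a time-domain HRTF (a pair of HRIRs) to a mono signal.

    Returns a (2, len(mono) + len(hrir) - 1) array with the left and right
    ear signals; both HRIRs are assumed to have the same length.
    """
    left = np.convolve(mono, hrir_left)  # full convolution
    right = np.convolve(mono, hrir_right)
    return np.stack([left, right])


# Hypothetical toy HRIRs for a source on the listener's left: the right ear
# hears the sound 3 samples later and at half the amplitude.
hrir_l = np.array([1.0, 0.0, 0.0, 0.0])
hrir_r = np.array([0.0, 0.0, 0.0, 0.5])
out = render_binaural(np.array([1.0, 2.0, 3.0]), hrir_l, hrir_r)
# out[0] -> [1, 2, 3, 0, 0, 0], out[1] -> [0, 0, 0, 0.5, 1, 1.5]
```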

3.4.4 Variational Autoencoders for medical domain using 3D data

Besides computer vision and object reconstruction, VAEs are also widely adopted in the medical domain. Heart dysfunction is one of the major health problems, and its diagnosis requires medical specialists to understand the internal structure of the patient's heart. High-resolution 3D MR sequences provide a detailed model of the heart, but the data acquisition time is very long. Multiplanar breath-hold 2D cine sequences are considered standard, but they do not necessarily hold the required amount of information about the condition of the heart. Biffi et al. (2019) proposed a VAE-based method capable of generating a high-resolution 3D segmentation of the left ventricular myocardium from only three segmentations of standard 2D cardiac views. The standard VAE is slightly modified into a CVAE with two encoders, one for the 2D data and another for the 3D training data. This makes it easier to learn a latent space of the required 3D features when both data types are encoded into the same latent space. Further real-life tests of this work are needed.

The work of Lyu and Shu (2021) also belongs to the medical domain, more specifically to 3D brain tumor detection and segmentation. Inspired by the winning method of the Multimodal Brain Tumor Segmentation Challenge (BraTS) 2018 (Myronenko 2019), they created a two-stage cascade network, which provides quantitative and qualitative improvements for model ensembling and stabilizes the predictions. The first stage calculates a rough segmentation, while the second stage concatenates the preliminary maps from the first stage with the MRI input and refines the results. Attention gates (AGs) (Oktay et al. 2018) are used to suppress irrelevant background data. The first stage is a mixture of U-Net (Ronneberger et al. 2015) and VAE architectures: a large encoder extracts the features, a smaller decoder predicts the segmentation maps, and a VAE branch reconstructs the input images. The second stage is an attention-gated mixture of U-Net (Ronneberger et al. 2015) and VAE architectures. Its encoder is similar to that of the first stage; the only change is that the input consists of the segmentation map and the multimodal MRI. The decoder, in contrast, is equipped with AGs, so that each level has a gate that helps determine the attention coefficients. The work of Lyu and Shu (2021) requires further optimization to reduce its memory and runtime demands. Future work also includes experimenting with combining two attention mechanisms.
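The additive attention gate of Oktay et al. (2018) can be sketched on 3D feature maps with plain NumPy. The sketch assumes that the gating signal has already been resampled to the spatial size of the skip connection, omits the bias terms, and implements the 1 × 1 × 1 convolutions as channel-mixing matrices via `np.einsum`; the weights in the usage example are random placeholders, not trained parameters.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def attention_gate(x, g, w_x, w_g, psi):
    """Additive attention gate in the style of Oktay et al. (2018), without biases.

    x:   skip-connection features, shape (C_x, D, H, W)
    g:   gating features from the coarser level, shape (C_g, D, H, W),
         assumed already resampled to the spatial size of x
    w_x: (F, C_x), w_g: (F, C_g), psi: (F,) act as 1x1x1 convolutions
    Returns the gated features alpha * x and the attention map alpha (D, H, W).
    """
    q = np.einsum("fc,cdhw->fdhw", w_x, x) + np.einsum("fc,cdhw->fdhw", w_g, g)
    alpha = sigmoid(np.einsum("f,fdhw->dhw", psi, np.maximum(q, 0.0)))  # ReLU, then psi
    return x * alpha[None], alpha


# Toy usage with random placeholder weights (not trained parameters).
rng = np.random.default_rng(0)
x = rng.normal(size=(8, 4, 4, 4))
g = rng.normal(size=(16, 4, 4, 4))
w_x, w_g = rng.normal(scale=0.1, size=(4, 8)), rng.normal(scale=0.1, size=(4, 16))
gated, alpha = attention_gate(x, g, w_x, w_g, rng.normal(scale=0.1, size=4))
```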

3.4.5 Motion sequence generation