Abstract

Person and suspicious activity detection is a major challenge for image-based surveillance systems. The accuracy of person detection is affected by several factors, such as the presence of the person, their trajectory and posture, complex backgrounds, and object distortion. In this work, we developed a person-focused dataset that covers various behaviors of students in an educational institution, such as cheating, theft of lab equipment, fights, and threatening situations. The dataset provides consistent and standardized identification annotations for individuals, making it suitable for detection, tracking, and behavioral analysis. In addition, we increased detection accuracy through an enhanced YOLOv5 architecture and introduced an efficient method for detecting global and local anomalous behaviors. This method extracts motion features that describe a person's movement, speed, and direction. To evaluate the effectiveness of the proposed approach, we validated it on our proposed database and on publicly available benchmark datasets. Our method achieves state-of-the-art detection accuracy, namely 96.12%, with an error rate of 6.68% compared to existing methods. The empirical results show a significant improvement in anomalous activity detection. The paper concludes with a summary and a discussion of possible future research directions.

1 Introduction

The word surveillance is composed of the prefix sur, meaning "over," and veiller, meaning "to watch." Steve Mann, in Minsky and Kurzweil (2013), introduces sousveillance as a substitute for surveillance; in contrast to sur, sous means "under," i.e., a camera is attached to the object (e.g., a head-mounted camera). Figure 1 illustrates the difference between surveillance and sousveillance. The illustration was created by Stephanie Mann, the daughter of Steve Mann. In both surveillance and sousveillance, the goal is to obtain information through continuous observation of a criminal, a prisoner, a person, a group, or an ongoing operation.

Government agencies and businesses are increasingly turning to surveillance technologies to enhance traditional security measures. Surveillance systems are being widely researched and deployed in many application areas, including (a) transportation systems (train stations, airports, city and state road networks), (b) government facilities (military bases, detention centers, commercial technical infrastructure, research laboratories, radar centers, and hospitals), (c) industrial environments, ATMs, banks, shopping malls, and government buildings, and so on. To detect suspicious travelers on video footage, human observers are often used in government and private surveillance technologies (Li et al. 2022; Cao et al. 2022). In some areas, people using wheelchairs or walking or running down the aisle are considered as pedestrians. The main function of the video management system is to detect and monitor suspicious walking behavior. The learning method is not suitable for real-time situation assessment in dynamic situations because it is difficult to know each object in advance. Nevertheless, artificial intelligence-based approaches continue to be used because of their reliability and accuracy.

Several researchers have successfully developed Deep Learning (DL) based models for categorization in video surveillance. Examples of learning-based algorithms include the Probabilistic Neural Network (PNN) (Wu et al. 2019), Radial Basis Neural Network (RBN) (Alireza et al. 2011), Artificial Neural Network (ANN) (Emil et al. 2009), Support Vector Machine (SVM) (Kang et al. 2002), and AdaBoost (Geronimo et al. 2006). To classify the type of object, these methods use features such as the histogram of oriented gradients (HOG) (Dalal and Triggs 2005), Local Binary Pattern (LBP) (Wang et al. 2009), Speeded-Up Robust Features (SURF) (He et al. 2016), and Scale-Invariant Feature Transform (SIFT) (Zhao et al. 2019). Deep learning algorithms that learn such feature representations directly include Deep Belief Networks (DBN) (Kangming 2016), Recurrent Neural Networks (RNN) (Szegedy et al. 2016), Generative Adversarial Networks (GANs) (Radford et al. 2015), Convolutional Neural Networks (CNN) (Ross et al. 2008), Restricted Boltzmann Machines (RBM) (Jiao 2022), AlphaGo (Pan and Hu 2007), AlphaZero (Porikli et al. 2006), capsule networks, and Bidirectional Encoder Representations from Transformers (BERT) (Jepson et al. 2003).

Next, the term abnormal activity refers to any strange or suspicious behavior rarely exhibited by a person in public. Examples include leaving explosives in luggage, stealing, avoiding crowds, fighting and attacking people, vandalism, and border crossing (Pouyan et al. 2023). Normal activities are the typical human behaviors that take place in public spaces, such as hand waving, applauding, jogging, boxing, and walking (Pogadadanda et al. 2023). Nowadays, more and more people are using video surveillance to keep an eye on human activities and stop questionable behavior.

Deep learning techniques have recently become increasingly popular for detecting suspicious activity by extracting and learning features from video footage (Wang et al. 2023). These techniques have proven successful in overcoming many of the difficulties faced by previous systems, including lighting variations, shadows, noise, occlusions, and low resolution. The following are some of the major issues in detecting suspicious activity (Wang et al. 2023):

1. Scalability: creating deep learning models that, once trained, can be used for large-scale surveillance systems.
2. Interpretability: developing techniques to explain the judgments made by deep learning models, so that it is clear why they classify certain behaviors as suspicious.
3. Robustness to adversarial attacks: developing techniques to make deep learning models more resilient to attacks that aim to fool the model into misclassifying data.
4. Dynamic variations in lighting conditions: changes caused by the day-night cycle and weather can make it difficult to accurately detect static and moving objects.
5. Object shadows: shadows can obscure the appearance of an object, making it difficult to locate and identify.
6. Obstruction by objects: partial or complete occlusion of objects can make them difficult to identify correctly.
7. Out-of-focus objects: it can be difficult to classify and distinguish out-of-focus objects.
8. Poor resolution: it can be challenging to distinguish between different objects and their boundaries in poor-quality video images.
9. Real-time processing: it is difficult to create an intelligent real-time monitoring system that can effectively process complicated video.

To create intelligent video surveillance systems that can successfully and reliably detect suspicious human activity, these difficulties must be overcome. Deep learning algorithms, which learn features directly from video data, have recently become a key component of suspicious behavior detection strategies. Key modern techniques include:

1. Multi-modal deep learning (Wang et al. 2023): this approach incorporates data from interaction, motion, and appearance features to increase recognition accuracy.
2. Spatio-temporal neural networks (Zhang et al. 2023): this technique detects suspicious activity under occlusion and in cluttered scenes by learning the connections between objects in video sequences.
3. Self-supervised learning (Yuan et al. 2023): this technique learns features that detect suspicious activity even with a small amount of labeled data.
4. Attentive recurrent neural networks (Kumar et al. 2023): in this technique, a recurrent neural network is used to capture the temporal dynamics of suspicious activities. The network has been shown to be capable of detecting suspicious activity in recordings that contain long stretches of inconspicuous activity.

Although these techniques are still being refined, they could greatly increase the precision and efficiency of suspicious activity detection systems.

The remainder of the research paper is organized as follows. In Sect. 1, an introduction to the video surveillance system is given. Section 2 describes the recent architectural innovations in person and suspicious activity detection technology and Deep Learning. Section 3 describes the proposed person dataset in an academic institute. Section 4 describes the Enhanced YOLOv5 architecture. Section 5 describes the proposed method for suspicious activity detection based on motion features. Experimental results and a comparative analysis of the proposed and relevant state-of-the-art methods along with ablation studies are presented in Sect. 6 for Enhanced YOLOv5 and the suspicious activity detection algorithm. Section 7 describes the conclusion and further research direction.

2 Related work

This section discusses modern video surveillance systems and the latest research on deep learning architectures, person recognition, and suspicious activity detection. The conventional and deep learning architectures available in the literature are described in the following subsections.

2.1 Conventional person detection methods

Many conventional person detection methods are described in the literature. However, the accuracy of person detectors can be affected by complex backgrounds, changes in human size, object occlusion, and changes in illumination (Ross et al. 2008; Porikli et al. 2006; Kwon and Lee 2008). These problems have only been partially solved. According to Xu and Puig (2006), the histogram of oriented gradients (HOG) is the most popular technique for human analysis (Dalal and Triggs 2005). This technique uses oriented gradients to identify objects in an image. However, the HOG method cannot detect occluded objects. In addition, obtaining HOG features is time consuming, so the method is not suitable for real-time use.

To resolve the partial occlusion of a person, Yilmaz et al. (2004) proposed a combined HOG and LBP (Hou et al. 2015) method. This technique uses LBP to extract texture features from an image. However, it is not accurate or fast enough for real-world applications. Region-based methods, on the other hand, benefit from human domain schemes and provide more accurate and precise results. Zhao et al. (2019) propose a scale-based method for object segmentation. This method uses a combination of scale-invariant feature transform (SIFT) (Zhou et al. 2012) and CNN features (Pan and Hu 2007) to find objects. These methods have limitations; for example, if objects cannot be found due to the hierarchical structure of the image, non-adjacent parts cannot be merged into one part. The most commonly used hand-crafted features include HOG (Jepson et al. 2003), LBP (Yilmaz et al. 2004), Integral Channel Features (Xu and Puig 2006), Gray Level Co-occurrence Matrix (Xu and Puig 2006), CNN features (Ross et al. 2008), Haar wavelets (Porikli et al. 2006), and oriented gradients (Jepson et al. 2003). As a result, researchers have turned to region-based deep learning methods to solve the problems of current systems based on hand-crafted features (Pan and Hu 2007), as shown in Table 1.

2.2 Deep-learning based person detection methods

The first CNN architecture presented was LeNet (Li et al. 2018) in 1998. This architecture has a pooling layer, a backpropagation network for training, and only two convolutional layers. LeNet was trained on the MNIST database (Szegedy et al. 2016), which contains 50,000 images divided into 10 categories. The error rate was 26.2%. Then, in 2012, AlexNet (Alex et al. 2012) was introduced. The two architectures are similar in design, but AlexNet uses the cross-entropy loss function and ReLU activation and has more convolutional layers. The huge ImageNet training database (Ross et al. 2008) includes more than 1 million images from 1000 categories. AlexNet is used for object recognition tasks and has higher object recognition accuracy than LeNet; the error rate decreased to 15.4%. A modified version of AlexNet, ZFNet (Fergus 2013), was released in 2013. Instead of AlexNet's 11 × 11 filter in the first convolutional layer, ZFNet uses 7 × 7 filters. It achieves an error rate of 11.2%. The author of (Jiao 2022) designed an unsupervised deep neural network. DBN (Kangming 2016) consists of different layers and numerous feature detectors or hidden units.

In VGGNet, the number of layers was increased to 19. The size of the filter is limited to 3 × 3 and has been reduced by a factor of 16. Residual Neural Network (ResNet) (He et al. 2015) and GoogLeNet (Szegedy et al. 2016) both use similar architectural patterns. The authors of Simonyan and Zisserman (2015) designed the Google Inception network using VGGNet (Fergus 2013), which was published in 2014. It performs slightly better than VGGNet (Alex et al. 2012) in terms of error rate (6.7%), but at the cost of complex architectural patterns. ResNet (He et al. 2016) was first introduced in 2015 and achieves an error rate of 3.57%. ResNet consists of 152 convolutional layers. A semantic segmentation algorithm classifies objects at the pixel level. A more recent architecture, Mask R-CNN (He et al. 2020), performs instance segmentation. It is based on its earlier variants Faster R-CNN (Ren et al. 2017) and Fast R-CNN (Girshick 2015), as shown in Table 2. Identifying each instance of an image object and finding each pixel of the instance is called instance segmentation. However, segmentation is difficult because it requires accurate location and identification of all moving and non-moving objects in the image. Therefore, object analysis and semantic segmentation techniques are combined. The latest methods for detecting suspicious activity are described in the next section.

2.3 Suspicious activity detection

The detection of unexpected activities has recently attracted the attention of researchers in the field of video surveillance systems. In Gaddigoudar et al. (2017), the author describes the problem of decoding and simulating behavior in surveillance videos. Violations are detected using likelihood ratio analysis and categories of person actions in unsupervised learning. In Mehmood (2021), the author describes a technique to search for anomalies in a spatiotemporal environment. Given a scene containing the position, motion, direction, and velocity of an object, they show an atomic event for the object. They combine three distributed atomic events to describe legitimate events. It is difficult to understand the motion of a person in crowded situations. Therefore, these methods are not suitable.

In areas of high population density, classification of individuals is done using CNNs. Scaling and illumination are among the many problems addressed by these techniques. Some recent work focuses on person direction and speed information. Kanade–Lucas–Tomasi (KLT) tracking (Jin et al. 2021) uses vertices to represent moving people and groups motion-informed features in a controlled environment (Zhang et al. 2020). To find anomalies in the scene, the author uses different types of historical autodescriptors as well as the history of nearby objects.

In Chebli and Khalifa (2018), the author describes a technique for counting the number of people using motion patterns for both optical flow and foreground relations. They calculated exponential crowd distribution patterns as well as the dynamic performance of using optical flow to distinguish between walking and running activities (Vasishta et al. 2018). In Wang and Hou (2016), the authors used the social force model to describe the behavior of people. The optical flow pattern of a moving object is calculated to identify people in crowded scenes (Zhang et al. 2020; Fekry et al. 2019; Kim et al. 2018; Zhu et al. 2019).

The associated digestion map practically allows them to detect local abnormal behavior. In Zaki and Sayed (2018), the author uses motion intensity to create a heat map of motion and compares it to local motion variations. Direkoglu (2020) proposed identifying abnormal activity in heavily populated areas using texture features. The analysis of human behavior is completed by using moving objects, optical flow, and directional information. In Papathanasopoulou et al. (2021), the author proposed a method for extracting moving objects from stack images under highly congested conditions. Although research has shown these methods to be useful, they are generally limited to detecting unusual activity at either a local or a global level.

According to our reasoning, the combined consideration of motion flow patterns, object size changes, and interactions between nearby objects within an image can describe the activity of people in a high-density scene, leading to improved performance in anomalous activity detection. We present a practical method to address the problems and difficulties associated with grouping motion patterns in successive images. In the proposed approach, we first capture moving parts of different sizes and directions from a sequence of images. Moreover, we use motion analysis to detect unusual actions in the scene. In the following section, we describe the proposed person dataset.

3 Proposed person dataset in academic institution

Modern human analysis research has been conducted using publicly available benchmark datasets, including Caltech (Cao et al. 2022), INRIA (I.P. dataset 2021), MS COCO (MS COCO 2018), ETH (E. P. dataset 2021), KITTI (Cao et al. 2022). However, each dataset has its own limitations, such as: (1) the number of people on the street is smaller because the dataset was developed in a controlled environment specifically in urban areas; (2) the size of the dataset is small and not suitable for deep learning techniques, (3) annotations and semantic information are not available for humans in the video, only specific behaviors are described in the reference dataset. The proposed dataset consists of video recordings of student behaviors in academic institutions, including exam cheating, theft of lab materials, student disputes, and threats in institutional scenarios. It is suitable for identifying and tracking individuals and detecting behaviors because it provides consistent and reliable ID annotations of individuals.

3.1 Video analysis

The proposed dataset contains 600 sample videos. Each video depicts student behavior on campus. Various forms of student behavior observed include instances of cheating on exams, theft of lab materials, fights between students and threats to the institution, and discussions between students in the classroom and lab. The data set was divided into two categories: normal and deviant student behavior, which was recorded in different places, especially in the laboratory, classroom, examination room, etc. in Yeshwantrao Chavan College of Engineering, Nagpur, Maharashtra, India. The researcher can use this dataset to perform various experiments related to computer vision. The framework for video retrieval followed by annotation process is described in the next section.

3.2 Image/video acquisition framework

The students' actions on the academic premises were recorded from different angles using a high-quality DSLR camera. We captured videos at 30 frames per second in 4K (3840 × 2160 resolution) with H.264 compression in .mp4 format. Each sample video lasts 20 to 30 min. The tilt range of the camera is 45° to 90°. The students are predominantly of Indian origin and range in age from 22 to 27 years. Table 3 shows a summary of the main characteristics of the data collection settings. The next section describes the process of annotating individuals.

3.3 Person annotation

The proposed dataset contains human expert annotations for each video frame. We created two different types of annotation files. (1) CSV annotation files for each video frame and (2) XML Pascal Visual Object Classes (VOC) annotations (Gorska et al. 2022). The annotation process consists of three steps: (1) Each video frame is carefully annotated by a human expert. A rectangular bounding box representing the region of interest (ROI) of the input image is used to label each person. (2) A YOLOv5 model (Xu et al. 2022) is used for human object detection in each image.

YOLOv5 is a deep learning object recognition model that can be used to create bounding boxes around objects in images and videos. These bounding boxes can be used to annotate objects in video surveillance footage. The accuracy of the annotation process can be improved by using YOLOv5 to create initial bounding boxes for objects. This can help reduce the time and effort required by human experts to annotate footage. In the final step of the annotation process, the bounding boxes generated by YOLOv5 and the bounding boxes annotated by the expert are combined to form the final annotated coordinate. This ensures that the final annotations are accurate and complete. Here is an example of how YOLOv5 can be used to improve the precision of the annotation process:

1. A human expert annotates the video footage with bounding boxes around the objects of interest.
2. YOLOv5 is used to create initial bounding boxes for the objects in the footage.
3. The expert reviews the bounding boxes generated by YOLOv5 and makes the necessary adjustments.
4. The final annotated coordinates are formed by combining the initial bounding boxes generated by YOLOv5 and the bounding boxes adjusted by the expert.

Because YOLOv5 generates the initial bounding boxes, the human expert can focus on correcting errors and annotating objects that are difficult for YOLOv5 to detect. This can lead to more accurate and complete annotation of the footage. Overall, YOLOv5 can be a useful tool to improve the precision of the annotation process for detecting suspicious activity. (3) Non-maximum suppression (NMS) (Bodla et al. 2017) is used at the end to select a single bounding box from overlapping detections, as shown in Fig. 3.

The annotation method is shown in Fig. 2. The human-annotated bounding box is used to validate the accuracy of the proposed method. The NMS algorithm operates on a list of proposal boxes B, their associated confidence values S, and an overlap threshold N, and it produces a final list of boxes D. The confidence value indicates the accuracy of the bounding box and the probability that an object is in that box.
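The NMS step can be illustrated with a minimal sketch. It uses the names B, S, D, and N from the text; the box format `[x1, y1, x2, y2]`, the default threshold of 0.5, and the helper name `iou` are assumptions made for illustration and are not taken from the authors' implementation.

```python
import numpy as np

def iou(box, boxes):
    """Intersection over union of one box with an array of boxes ([x1, y1, x2, y2])."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(B, S, N=0.5):
    """Greedy NMS: return D, the indices of the boxes that are kept.

    B: proposal boxes, S: confidence values, N: overlap threshold (assumed 0.5).
    """
    B = np.asarray(B, dtype=float)
    S = np.asarray(S, dtype=float)
    order = np.argsort(-S)          # highest confidence first
    D = []
    while order.size > 0:
        best = order[0]
        D.append(int(best))
        rest = order[1:]
        if rest.size == 0:
            break
        # Drop every remaining box that overlaps the kept box by more than N.
        order = rest[iou(B[best], B[rest]) <= N]
    return D

boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [50, 50, 60, 60]]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

In this toy input the first two boxes overlap heavily, so only the higher-scoring one survives, while the distant third box is kept.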

Each frame is represented by the information ID, a bounding box pointing to the ROI, and image information as well as a labeled summary for each person within the frame. Each sequence of video frames is annotated by human experts with two different types of person behavior information.

1. Person positioning with bounding box: we use this information for person recognition, as the position of each person in the video image is represented as a bounding box.
2. Annotated class label and ID: this is a text-based component. Each person has a distinctive identifier that is constant across all frames of the video.
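As an illustration of how these two kinds of information could be stored, the sketch below writes one frame's annotations in a Pascal VOC style XML layout. The `annotation`, `size`, `object`, `name`, and `bndbox` elements follow the usual VOC layout; the `track_id` element, the function name, and the example values are hypothetical and only show one way a persistent person ID might be attached.

```python
import xml.etree.ElementTree as ET

def person_annotation_xml(filename, width, height, persons):
    """Build a VOC-style XML string for one frame.

    persons: list of dicts with keys 'id', 'label', and 'box' = (xmin, ymin, xmax, ymax).
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", 3)):
        ET.SubElement(size, tag).text = str(value)
    for p in persons:
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = p["label"]
        # 'track_id' is not part of standard Pascal VOC; it is a hypothetical
        # field for the per-person identifier that stays constant across frames.
        ET.SubElement(obj, "track_id").text = str(p["id"])
        box = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), p["box"]):
            ET.SubElement(box, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")

xml_text = person_annotation_xml(
    "frame_000001.jpg", 3840, 2160,
    [{"id": 7, "label": "person", "box": (100, 200, 300, 600)}],
)
```

A CSV variant would carry the same fields as one row per person per frame.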

In the next section, the student activity information of the proposed dataset is described.

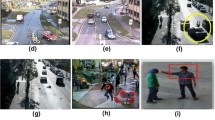

3.4 Student activity details

We design a person database composed of different actions performed by students in different academic contexts, e.g., classrooms, exam rooms, scenarios, a student copying an exam, a student taking a recording device outside the exam room, a student stealing a phone or other electronic devices such as a mouse or keyboard, a student stealing lab equipment, a student dispute on college campus, and a dispute between students, as shown in Fig. 4.

An example of the proposed database. The scenario in the first row, annotated with a green box, depicts the theft of a mobile phone in the lab. An example of threatening behavior by a student is seen in the first row, annotated with a red box. The third scenario, annotated with an orange box, depicts a conflict between students in a lab. The scenario of exam-hall cheating is annotated with a blue box.

The number of samples for each student activity is described in Table 4. In total, the collection contains 600 video samples. The seven different student actions are shown in Fig. 4. Table 5 and Fig. 5 give more information about the relationship between student ID and suspicious behavior. The sample videos in the dataset contain individuals of different sizes and scales.

In the proposed improved YOLOv5 architecture, the Scale Invariant Cross-Stage Partial Network (SCSP) is a neural network component designed to improve the detection of small objects. SCSP works by combining features from different stages of the improved YOLOv5 network. This allows the network to learn more robust features that are invariant to scale changes. SCSP also uses a partial convolution operation, which allows the network to focus on specific parts of the image. This is particularly useful for detecting small objects, which may only be partially visible in the image. The steps used by SCSP in the improved YOLOv5 are as follows:

1. The improved YOLOv5 network is divided into a number of stages.
2. Features from each stage of the network are extracted.
3. The features from different stages are combined using a weighted sum.
4. The combined features are then passed through a partial convolution operation.
5. The output of the partial convolution operation is used to predict the bounding boxes for the objects in the image.
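Steps 3 and 4 above can be sketched in NumPy under several assumptions: all stages share the same channel count, coarser maps are brought to the finest resolution by nearest-neighbour resizing, the fusion weights are normalized to sum to one, and the "partial" operation is read in the cross-stage partial sense, where only part of the channels is transformed and the rest bypass the transform. None of these details is specified in the text, and the function names are hypothetical.

```python
import numpy as np

def upsample_nearest(feat, size):
    """Resize a (C, H, W) feature map to (C, size, size) by nearest-neighbour indexing."""
    _, h, w = feat.shape
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return feat[:, rows[:, None], cols[None, :]]

def fuse_stages(features, weights):
    """Weighted sum of stage features after resizing them to the finest resolution."""
    size = max(f.shape[1] for f in features)
    w = np.asarray(weights, dtype=float)
    w = w / w.sum()                      # assumed normalization of the fusion weights
    return sum(wi * upsample_nearest(f, size) for wi, f in zip(w, features))

def partial_transform(feat, transform):
    """CSP-style split: the first half of the channels bypasses `transform`."""
    half = feat.shape[0] // 2
    return np.concatenate([feat[:half], transform(feat[half:])], axis=0)

# Toy stage outputs with constant values so the arithmetic is easy to follow.
stages = [np.ones((4, 8, 8)), 3 * np.ones((4, 4, 4)), 5 * np.ones((4, 2, 2))]
fused = fuse_stages(stages, weights=[1, 1, 2])
out = partial_transform(fused, lambda x: 2 * x)  # stand-in for a convolution block
```

In a trained network the weights and the transform would be learned; here a simple scaling stands in for the convolution so that the data flow is visible.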

SCSP improves the detection of small objects in the improved YOLOv5. For example, in our experiments on the COCO dataset, SCSP improved the detection of small objects by up to 10%. The improved YOLOv5 is therefore suited to detecting small individuals in the scene regardless of scale.

Direction and motion components for a moving person are used to predict the motion of the person in subsequent frames for as long as the person remains in view. The proposed method is used to detect one or more persons in a scene. The improved YOLOv5 distinguishes between large and small objects using feature maps of size 19 × 19 × 255 and 76 × 76 × 255. These maps are used in the improved YOLOv5 for object detection and classification. The proposed contribution is effective in densely populated areas because it accurately detects multiple people. It is also useful in the context of COVID-19, as police officers can monitor densely populated areas with real-time person detection. The following section describes the proposed improved YOLOv5 system.

4 Proposed enhanced YOLOv5 for person detection

The proposed architecture builds on the YOLOv5 detection technique. A new feature fusion layer (shown with a gray background) is added to better capture the shallow features of small pedestrians. The purpose of the fusion layer is to obtain detailed information about persons of small size. To reduce the loss of feature information for small individuals, features from the backbone network are added to the feature fusion layer, and a scale invariant cross-stage partial network (SCSP) is also implemented. It is used to improve the final recognition result, which is determined by the recommended size, the use of these object maps, and the measurement of fixed object maps. The final detections are obtained from the proposed feature maps and the two-level backbone network, which is suited to handling different scales simultaneously. With different input scales, the enhanced YOLOv5 can achieve high recognition performance. The enhanced YOLOv5 is more efficient in terms of training and testing time because it shares convolutional features of the whole image across multiple target representations. The proposed enhanced YOLOv5 technique can detect small individuals effectively. The proposed contribution is effective in densely populated areas because it can accurately identify many individuals. It is also useful in the context of COVID-19 because administrators can easily track individuals in many locations. In the next section, we describe the enhanced YOLOv5 deep learning architecture.

Improved YOLOv5 detects individuals at multiple levels. The improved YOLOv5 detection method consists of three components: (1) a new gray background feature fusion layer is added to extract shallower feature information from small individuals; (2) backbone network features are added to the black highlighted functional fusion layers to reduce the loss of functional information from small individuals; and (3) a scale invariant cross-stage Partial Network (SCSP) is added to the conventional method. The improved YOLOv5 architecture is shown in Fig. 6.

The design of the enhanced YOLOv5 differs from the original YOLOv5 in three ways, and Table 6 shows the model settings. First, a new fusion layer is added that creates a large feature map of size 152 × 152 × 255. Second, several links (drawn in red) are created to transfer feature data from the backbone to the feature fusion layers. Third, SCSP modules containing fusion layers are added. The new fusion layer is intended to improve the performance of YOLOv5 in detecting small objects.

This layer creates features with dimensions of 152 × 152 × 255. Compared to the original YOLOv5 model, the improved YOLOv5 model has four levels of fusion. Starting from the original network, the 152 × 152 feature map of the backbone network is used to create another layer of fused features. CSP and CBL modules are also used in this method to prevent exponential growth of the computation time.

Second, feature data are transferred along the links between the CSP modules and the backbone network at the following sizes: 152 × 152, 76 × 76, and 38 × 38. The red lines are for illustrative purposes. They provide details of large person objects in the input image as well as different scales of people in the images.
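The grid sizes quoted in this section are consistent with a square input divided by power-of-two strides. The sketch below works through the arithmetic under the assumption of a 608 × 608 input and strides of 4, 8, 16, and 32; neither value is stated in the text. The channel depth of 255 would be consistent with the common YOLO head layout of 3 anchors per cell and 80 classes, which is likewise an assumption here.

```python
def grid_sizes(input_size, strides=(4, 8, 16, 32)):
    """Side length of the feature map at each detection scale (assumed strides)."""
    return [input_size // s for s in strides]

def head_channels(num_classes, anchors_per_cell=3):
    """Channels per grid cell: each anchor predicts 4 box terms, 1 objectness, and class scores."""
    return anchors_per_cell * (num_classes + 5)

sizes = grid_sizes(608)       # assumed 608 x 608 input
depth = head_channels(80)     # assumed 80 classes, 3 anchors per cell
```

Under these assumptions the extra stride-4 scale yields the 152 × 152 map, which is the one intended for small persons.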

Pyramid network function for person detection. The main concept is to create an image pyramid from a large input image and then detect people at each level with different spatial resolutions. Figure 7 shows the proposed pyramid-based technique. It is a four-stage process that involves building hierarchical pyramid structures, observing people, and moving objects. In the first stage, an image pyramid is created by down-sampling the input image into multiple layers at different resolutions. For YOLOv5, the input images are divided into a collection of small images with a size of 300 × 300 pixels.
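A minimal sketch of the pyramid and tiling idea follows. It assumes each level halves the resolution by 2 × 2 mean pooling, that tiles do not overlap except for a final tile shifted to stay inside the image, and that detections are later mapped back to the original image by undoing the tile offset and the level scale. These choices and all function names are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def build_pyramid(image, tile=300):
    """Halve the image repeatedly while the next level still covers one tile."""
    levels = [np.asarray(image, dtype=float)]
    while min(levels[-1].shape[:2]) // 2 >= tile:
        img = levels[-1]
        h, w = (img.shape[0] // 2) * 2, (img.shape[1] // 2) * 2
        img = img[:h, :w]                      # crop to even size before pooling
        pooled = (img[0::2, 0::2] + img[1::2, 0::2]
                  + img[0::2, 1::2] + img[1::2, 1::2]) / 4.0
        levels.append(pooled)
    return levels

def tile_offsets(height, width, tile=300):
    """Top-left (y, x) corners of tiles covering the image."""
    def starts(length):
        s = list(range(0, max(length - tile, 0) + 1, tile))
        if s[-1] + tile < length:              # shift a last tile to reach the border
            s.append(length - tile)
        return s
    return [(y, x) for y in starts(height) for x in starts(width)]

def to_original(box, offset, level):
    """Map a tile-local [x1, y1, x2, y2] box at pyramid `level` to level-0 pixels."""
    y0, x0 = offset
    scale = 2 ** level
    x1, y1, x2, y2 = box
    return [(x1 + x0) * scale, (y1 + y0) * scale,
            (x2 + x0) * scale, (y2 + y0) * scale]

levels = build_pyramid(np.zeros((1200, 1300)))
offsets = tile_offsets(*levels[-1].shape[:2])
mapped = to_original([10, 20, 30, 40], offsets[-1], level=2)
```

Each tile would be passed to the detector separately, and the mapped boxes from all levels could then be merged with NMS.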

The purpose of creating an image pyramid is to avoid scaling problems and other unseen scenarios for person object detection. In the upper layers of the image pyramid, the number of people in the input pyramid decreases in the lower layer. Higher layer images can be used for large person detection. The non-max suppression (NMS) technique improves the visible results of the lower layers. It avoids redundant false objects in the final output image and transfers the recognition results of each layer to the original input image. The size of the input image and the number of extracted layers are determined by the YOLOv5 method. The top layer should be less than four times the size of the input image.

If the size of the generated layer is four times the size of the YOLOv5 input, a new, longer layer is created instead. Each part of the input image is fed into YOLOv5, which predicts where people appear in the image. Finally, the duplicates are removed and the recognition results are mapped back to the original input image. The migration process consists of two phases. One is to transfer the recognition results of each component to the appropriate layer. Using CSP, the redundant gradient problems of other, larger ConvNet backbones are solved, resulting in fewer parameters with the same importance. For the YOLOv5 model, fast inference and a compact model are required for real-time deployment. The input image is filtered with convolutional filters, as shown in Table 7. The use of Conv2D layers provides the best accuracy. The following section describes the analysis of suspicious activity.

5 Suspicious activity detection-motivation

Previous studies have shown the value of the motion flow technique, and we believe that current methods have room for improvement. Information about objects of different sizes, their movements, velocities, and interactions between images must be collected. By taking this motion information into account, we can improve the performance of our method. The proposed method captures the motion of a moving person regardless of the size and direction of the object.

The different activity zones of the video images are divided into two categories: local and global. Figure 8 shows the abnormal activity area and the normal activity area. A local region is a clearly defined active area within an abnormal region. The global region, on the other hand, focuses on the area where strange behavior is observed. We evaluate the proposed method using our own dataset of student behavior as well as benchmark datasets from another institution, including the University of Minnesota anomaly detection dataset (Zhang et al. 2020).

5.1 Proposed motion based suspicious activity detection

The proposed method uses motion data from individuals to accurately describe moving objects in areas of high motion density. We detect fast and slow movements of unusual person activity in both local and global regions of the image. Figure 9 illustrates the main blocks of the proposed method. Each image is divided into blocks, from which motion data is then extracted to create pixel-level and block-level motion features. The motion feature extraction process includes the following steps:

1. After a series of images, the activities of the moving object are separated at the pixel and block levels.

2. First, the optical flow is used to retrieve the motion data at the pixel level. The motion feature, denoted by (u, v), encodes the displacement in the x and y directions between pixels in an eight-neighborhood (left, right, up, down, up-left, up-right, down-left, and down-right). The optical flow is calculated for the pixel I(x, y, t), which moves by (dx, dy) over the time interval dt. This is represented by Eq. 1.

$$\begin{aligned} I(x,y,t)=I(x+dx,y+dy,t+dt) \end{aligned}$$(1)

3. Next, the Taylor series approximation of the right-hand side is taken, common terms are cancelled, and the result is divided by dt to obtain Eq. 2:

$$\begin{aligned} f_{x}u + f_{y}v + f_{t} = 0 \end{aligned}$$(2)

where \(f_{x}\), \(f_{y}\), and \(f_{t}\) are the first-order partial derivatives of a function f with respect to x, y, and t, respectively, and

$$\begin{aligned} f_{x}= & {} \frac{\partial f}{\partial x}\hbox { and }f_{y} = \frac{\partial f}{\partial y} \\ u= & {} \frac{dx}{dt}\hbox { and }v = \frac{dy}{dt} \end{aligned}$$

4. The Taylor series approximation of a function f is computed by evaluating f and its derivatives at the point where the series is centered. This point is typically chosen to be a point where f is known or easy to evaluate. For example, consider the Taylor series approximation of the function \(f(x) = \sin (x)\) centered at the point x = 0, given by Eq. 3:

$$\begin{aligned} f(x) = f(0) + f'(0)(x - 0) + f''(0)(x - 0)^2 / 2! \\ + f'''(0)(x - 0)^3 / 3! + \cdots \end{aligned}$$(3)

To evaluate this approximation, we need the function and its first three derivatives at 0: \(f(0) = 0\), \(f'(0) = 1\), \(f''(0) = 0\), and \(f'''(0) = -1\). Substituting these values gives Eq. 4, which reduces to \(f(x) \approx x\) when truncated to first order in the difference between x and 0:

$$\begin{aligned} f(x) = 0 + 1(x - 0) + 0(x - 0)^2 / 2! \\ - 1(x - 0)^3 / 3! + \cdots \end{aligned}$$(4)

The more terms of the series we retain, the more accurate the approximation is near the point where the series is centered.

5. Here, \(f_{x}\) and \(f_{y}\) are the spatial image gradients and \(f_{t}\) is the gradient with respect to time. The Lucas-Kanade method is used to calculate the unknown term (u, v) by least squares over the pixels i of a local window:

$$\begin{aligned} \left[ {\begin{array}{c} u \\ v \\ \end{array} } \right] = \left[ {\begin{array}{cc} \sum _{i} f_{x_{i}}^2 &{} \sum _{i} f_{x_{i}}f_{y_{i}} \\ \sum _{i} f_{x_{i}}f_{y_{i}} &{} \sum _{i} f_{y_{i}}^2 \\ \end{array} } \right] ^{-1} \left[ {\begin{array}{c} - \sum _{i} f_{x_{i}}f_{t_{i}} \\ - \sum _{i} f_{y_{i}}f_{t_{i}} \\ \end{array} } \right] \end{aligned}$$

6. Different orientations are used to extract the motion features. After integrating the motion data, a single feature matrix is created to represent both the spatial and temporal features.

7. K-Means clustering was used to categorize the activity and determine the global and local regions for each zone.

8. The motion of an object in each frame is one of the extracted features. Clusters are created at the frame level, and the individual clustered motion pattern data is represented as a feature.

9. We use pixel-level localization to determine the exact location of the unexpected behavior after an image is classified as unusual. The procedure continues until the entire video sequence is processed.

10. The proposed method isolates each pixel of the optical flow in Eq. 5 as the first step of a method to detect anomalous human activity by analyzing each frame of the video sequence. Here, \(Block_i\) denotes the optical flow of the \(i\)th block, the pixel size is represented by j, and the optical flow of the \(j\)th pixel in the \(i\)th block is represented by \(f(x_{i},y_{i})^j\).

Next, the threshold \(Th_d\) for the block is calculated from the motion vector \(Block_i\) and the width Wd in Eq. 6. Here, \(Block_i\) represents the optical flow of the \(i\)th block and j represents the pixel size.

The angle formed by the calculated feature vector is denoted by \(\theta _{ij}\), and \(EucliD_{(i,j)}\) is the Euclidean distance between objects i and j. \(EucliD_{(i,j)}\) is represented by Eq. 7.

The motion feature extraction method is represented by Eq. 8.

Here, MotF is the map of motion features at angle \(\theta\), and \(Block_i\) denotes the \(i\)th block of optical flow. Algorithm 1 describes the motion feature extraction procedure used to detect unexpected human motion in the video. Furthermore, the motion region in the image is represented by image plane clusters, where the optical pixel flow of each cluster in a different direction is considered as a feature vector. The probability of unexpected behavior of an associated block decreases as the distance between the blocks decreases. We can classify anomalies characteristic of successive images when a higher distance value is found. The current scene is identified as an unusual activity image when the distance is greater than a given constant threshold. Experimental results for the proposed contribution are described below.
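The optical flow constraint of Eq. 2 and the Lucas-Kanade least-squares solution of step 5 can be illustrated with the short sketch below. It is only a sketch under stated assumptions: a single (u, v) is estimated for a whole window, spatial gradients are taken with central differences, and the temporal gradient is a plain frame difference. The function name `lucas_kanade_window` is hypothetical and is not taken from the paper.

```python
import numpy as np


def lucas_kanade_window(prev_frame, curr_frame):
    """Estimate one flow vector (u, v) for a window of two grayscale frames.

    Solves the 2x2 normal equations built from f_x u + f_y v + f_t = 0
    summed over all pixels of the window.
    """
    prev_frame = np.asarray(prev_frame, dtype=np.float64)
    curr_frame = np.asarray(curr_frame, dtype=np.float64)

    # np.gradient returns derivatives along axis 0 (rows, y) then axis 1 (cols, x).
    f_y, f_x = np.gradient(prev_frame)
    f_t = curr_frame - prev_frame  # temporal derivative for dt = 1 frame

    A = np.array([[np.sum(f_x * f_x), np.sum(f_x * f_y)],
                  [np.sum(f_x * f_y), np.sum(f_y * f_y)]])
    b = -np.array([np.sum(f_x * f_t), np.sum(f_y * f_t)])

    # Least squares also copes with a singular A (flat or edge-only windows).
    uv, *_ = np.linalg.lstsq(A, b, rcond=None)
    return float(uv[0]), float(uv[1])
```

Applied block by block, such an estimate yields one motion vector per block, from which magnitude and direction can be derived. The method is only reliable for small displacements, since it relies on the first-order Taylor approximation.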

6 Experiment result and analysis

We evaluated the performance of the proposed Enhanced YOLOv5 and suspicious activity detection on benchmark person databases such as Caltech (Cao et al. 2022), INRIA (I.P. dataset 2021), MS COCO (MS COCO 2018), ETH (E. P. dataset 2021), KITTI (Cao et al. 2022), and the University of Minnesota’s databases (Qianyin et al. 2015), as well as on our person database. The experiments and the proposed deep learning framework were run on an Intel Core i5 3.4 GHz CPU with 16 GB RAM and a single NVIDIA GPU with 16 GB of memory. Table 8 shows the experimental platform information in detail.

6.1 Enhanced YOLOv5

Modern person analysis research was conducted using publicly available benchmark datasets, including Caltech (Cao et al. 2022), INRIA (I.P. dataset 2021), MS COCO (MS COCO 2018), ETH (E. P. dataset 2021), and KITTI (Cao et al. 2022). A key factor was that these datasets include the behavior of individuals in many contexts and activities. For classification purposes, an extended YOLOv5 model with ReLU as the activation function is proposed. In the proposed method, the extended YOLOv5 models are compiled using a sparse categorical cross-entropy function instead of categorical cross-entropy. The main advantage of sparse categorical cross-entropy is that it saves time and computational effort: instead of a full one-hot vector, only a single integer value is used as the class label. The proposed method takes advantage of concurrent callbacks. A callback can perform operations at different stages of the training phase. The experimental results and their analysis are described in the following subsection.
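The difference between the two loss formulations can be made concrete with a small NumPy sketch. It is an illustration of the general definitions, not the authors' training code; the function names are hypothetical. Both functions return the same loss value, but the sparse variant indexes the predicted probability directly from an integer label instead of multiplying by a one-hot vector.

```python
import numpy as np


def categorical_cross_entropy(probs, one_hot_labels):
    """Mean cross-entropy with one-hot labels of shape (N, C)."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), 1e-12, 1.0)
    one_hot_labels = np.asarray(one_hot_labels, dtype=np.float64)
    return float(-np.mean(np.sum(one_hot_labels * np.log(probs), axis=1)))


def sparse_categorical_cross_entropy(probs, int_labels):
    """Mean cross-entropy with integer class labels of shape (N,)."""
    probs = np.clip(np.asarray(probs, dtype=np.float64), 1e-12, 1.0)
    int_labels = np.asarray(int_labels, dtype=np.int64)
    # Pick the predicted probability of the true class for every sample.
    picked = probs[np.arange(len(int_labels)), int_labels]
    return float(-np.mean(np.log(picked)))
```

With C classes, the sparse form stores one integer per sample rather than C values, which is where the memory and time savings come from.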

6.2 Evaluation metrics-enhanced YOLOv5

The accuracy of the proposed improved YOLOv5 object detectors was evaluated using the average precision (AP), precision, recall, and mean average precision (mAP) metrics. AP is the precision averaged over recall values in the range of 0 to 1. The following criteria are considered in the experiments.

1. A detection is classified as a true positive (TP) if IoU \(\ge\) 0.5. The AP calculated at IoU thresholds of 0.50 and 0.75 is denoted by \({{\rm{AP}}_{50}}\) and \({\text{AP}}_{75}\). In addition, the AP for small, medium, and large scales is denoted by \({\text{AP}}_{S}\), \({\text{AP}}_{M}\), and \({\text{AP}}_{L}\), respectively, as shown in Table 10.

2. If the IoU is less than 0.5, the detection is incorrect and is referred to as a false positive (FP).

3. If the model cannot find an object, this is classified as a false negative (FN).

4. If no object is present and the classifier detects none, the entire image is classified as a true negative (TN). TN is ignored, as this measure is not useful for object detection.

Equations 9, 10, and 11 are used to calculate the accuracy measure.
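The criteria above can be sketched in code as follows. Since Eqs. 9 to 11 are not reproduced in this section, the sketch assumes the standard definitions precision = TP / (TP + FP) and recall = TP / (TP + FN), together with greedy one-to-one matching of predictions to ground-truth boxes; the function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def count_detections(pred_boxes, gt_boxes, iou_threshold=0.5):
    """Count TP, FP, FN for one image.

    pred_boxes is assumed to be sorted by descending confidence. Each
    ground-truth box can be matched by at most one prediction.
    """
    matched = set()
    tp = fp = 0
    for pred in pred_boxes:
        best_iou, best_j = 0.0, None
        for j, gt in enumerate(gt_boxes):
            if j in matched:
                continue
            overlap = iou(pred, gt)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j is not None and best_iou >= iou_threshold:
            tp += 1
            matched.add(best_j)
        else:
            fp += 1
    fn = len(gt_boxes) - len(matched)
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    """Standard precision and recall; 0.0 when the denominator is zero."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

Raising `iou_threshold` from 0.5 to 0.75 in this sketch corresponds to the stricter matching behind \({\text{AP}}_{75}\).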

Table 9 compares the improved YOLOv5 with state-of-the-art methods for person detection. The proposed model performs better than the baseline versions of previous state-of-the-art YOLOv5 models. The comparison includes the winners of the COCO 2015 and 2016 Challenges, MNC (Wang and Li 2019) and FCIS (Wang and Li 2019), respectively. FCIS is outperformed by the improved YOLOv5 with ResNet-101 FPN backbone, according to Wang and Li (2019). The improved YOLOv5 achieved a benchmark AP of 79%, \({{\rm {AP}}_{50}}\) of 68%, and \({{\rm AP}_{75}}\) of 64%. The proposed scheme was also validated at different scales and achieved \({{\rm AP}_{S}}\) of 16.5%, \({{\rm AP}_{M}}\) of 39.5%, and \({{\rm AP}_{L}}\) of 54.5%, respectively. The qualitative results of the enhanced YOLOv5 under challenging conditions, such as the successful detection of small unchanged individuals, are shown in Fig. 10.

Extended YOLOv5 result. First row: the proposed technique. Annotated benchmark in the second row. MS COCO Images of the dataset in the third row, with the proposed method MS COCO (2018). Benchmark annotation for the Caltech dataset in the fourth row. Fifth row: proposed method using the Caltech dataset Cao et al. (2022). The benchmark annotation of the Caltech dataset is in the sixth row

6.3 Comparison with state-of-the-art person detection methods-YOLOv5

6.3.1 Caltech

The proposed framework was trained using images from the Caltech database, which contains 2,300 individuals and 350,000 annotated bounding boxes. The proposed method is compared with other techniques such as SAF R-CNN (Li et al. 2017), Side Fusion FPN (SF-FPN) (Li et al. 2017), Small-scale sense network (SSN) (Ren et al. 2017), and Attention-Enhanced Multi-Scale Region Proposal Network (AEMS-RPN) (Wang and Li 2019) in Fig. 11a. It can be observed that the improved YOLOv5 performed significantly better than the other approaches. It achieved the lowest error rate of 9.11% and a mAP of 77%. As shown in Table 11, this is the best performance with the enhanced YOLOv5.

6.3.2 INRIA and ETH

The enhanced YOLOv5 was additionally trained and evaluated with images from the INRIA and ETH databases. The comparison results are shown in Fig. 11b and c, respectively. The 498 images in the INRIA collection contain manual labels. The proposed method yields an error rate of 7.31% and a mAP of 71% for the images in the INRIA dataset. Compared to the current methods YOLOv4 (Liu et al. 2023), SAF R-CNN (Li et al. 2017), MNPrioriBoxes-Yolo (Ren et al. 2017), and AEMS-RPN (Wang and Li 2019), the proposed methodology performs better. Second, the error rate of the proposed model for the ETH dataset is 32.63%, with a mAP of 78.3%, compared with 33.87% for Ren et al. (2017) and 36.46% for Wang and Li (2019). In the ETH dataset, 1776 individuals were labeled. In general, the proposed technique achieves both a lower miss rate and a higher recognition rate on this dataset. The improved YOLOv5 was also validated on the KITTI dataset.

6.3.3 KITTI

There are 6744 images of individuals with annotations. Figure 11d compares the person recognition performance of the improved YOLOv5 with the following methods: YOLOv4 (Liu et al. 2023), SAF R-CNN (Li et al. 2017), MN-Priori-Boxes-Yolo (Ren et al. 2017), and AEMS-RPN (Wang and Li 2019). On the KITTI dataset, the proposed method gives encouraging results with 79%, 68%, and 64% and a mAP of 78.34%.

6.3.4 MS COCO and proposed person dataset

Figure 11e and f show the results. The annotations include instances of various objects belonging to 80 object classes and human segmentation for 91 different categories. The proposed method was compared with the current approaches YOLOv4 (Liu et al. 2023), SAF R-CNN (Li et al. 2017), MNPrioriBoxes-Yolo (Ren et al. 2017), and AEMS-RPN (Wang and Li 2019). On MS COCO, it yields a miss rate of 8.56%, while on the proposed person dataset it yields a miss rate of 8.56% and a mAP of 79.31%.

Finally, we compared the results across the following datasets: Caltech (Cao et al. 2022), INRIA (I.P. dataset 2021), MS COCO (MS COCO 2018), ETH (E. P. dataset 2021), KITTI (Cao et al. 2022), and the proposed dataset, as shown in Fig. 11g. We found that the proposed improved YOLOv5 successfully identified individuals in each dataset. As shown in Fig. 11g for multiple person datasets, the improved YOLOv5 outperforms the existing algorithms in terms of accuracy, speed, and time required for person detection.

The comparison between the proposed improved YOLOv5 and state-of-the-art deep learning models such as Faster RCNN (Ren et al. 2017), YOLO (He et al. 2021), Fast R-CNN (Girshick 2015), and SAF R-CNN (Li et al. 2017) is shown in Table 11 and Fig. 11h. The proposed improved YOLOv5 efficiently detects the person when artifacts are present. For benchmark person databases, the person recognition accuracy is 96%, which is a remarkable accuracy.

The enhanced YOLOv5 performance: a Caltech (Cao et al. 2022) dataset has the lowest log-average miss rate of 9.10%. b INRIA (I.P. dataset 2021) dataset has the lowest log-average miss rate of 7.31%. c ETH (E. P. dataset 2021) dataset miss rate is 32.63%. d KITTI (Cao et al. 2022) dataset: enhanced YOLOv5 yields encouraging results of 79%, 68%, and 64%. e MS COCO (MS COCO 2018) dataset has a miss rate of 8.56%. f Miss rate for the proposed person dataset with enhanced YOLOv5 is 8.68%. g Comparative analysis of Caltech (Cao et al. 2022), INRIA (I.P. dataset 2021), MS COCO (MS COCO 2018), ETH (E. P. dataset 2021), KITTI (Cao et al. 2022), and the proposed dataset. h Comparison of the enhanced YOLOv5 with current state-of-the-art techniques for precision vs. recall

6.4 Enhanced YOLOv5-comparative analysis

The computational results of the Enhanced YOLOv5 were examined on Caltech (Cao et al. 2022), INRIA (I.P. dataset 2021), MS COCO (MS COCO 2018), ETH (E. P. dataset 2021), and KITTI (Cao et al. 2022), as shown in Fig. 12. The results showed that, for the Enhanced YOLOv5, the number of parameters in the model increased by 2.5M. Still, this increase was negligible compared with a disk capacity of hundreds of GB. The speed was not significantly improved, but the \({{\rm {AP}}_{50}}\) of the model increased by 2.1%, the mAP increased by 2.2%, the accuracy increased by 1.5%, and the recall rate increased by 1.19%.

The model achieved a notable accuracy of 96% on the testing set and 95% on the validation set. Next, we computed the training loss and accuracy and the validation loss and accuracy. Figure 13a plots the training and validation accuracy against epochs, and Fig. 13b plots the training and validation loss against epochs. In these plots, loss denotes the training loss, accuracy denotes the training accuracy, valLoss denotes the validation loss, and valAcc denotes the validation accuracy.

6.5 Ablation studies

Ablation studies are used to develop a robust person recognition algorithm with increased speed and accuracy. An ablation study simply removes some parts of the detection algorithm and observes the impact on performance. The benchmark detector and the proposed YOLOv5 architecture, trained and tested with the proposed and benchmark datasets, are shown in Table 12. For example, the long-standing DarkNet53 backbone, the CSP-DarkNet53 backbone, and the Focus-CSPNet backbone can be validated with a different activation function. YOLOv4 (Liu et al. 2023) and YOLOv5 (Xu et al. 2022) derive their backbones from CSPNet, whereas the proposed model uses a scale-invariant cross-stage partial network (SCSP) with ResNet to improve the learning capability of the CNN. This also addresses the accuracy saturation and degradation of deeper neural networks and reduces the number of parameters without limiting network performance. With the reduction of parameters, the recognition speed of the ResNet architecture used decreases. In the proposed YOLOv5 recognition algorithm, a feature fusion layer with SCSP as the neck is used to obtain a pyramid of features that can form a good general person scaling model.

6.5.1 Ablation studies-enhanced YOLOv5

Ablation studies on different adaptations of the improved YOLOv5 are needed to investigate the features responsible for the improvement in recognition performance. The average ablation results for the improved YOLOv5 are shown in Table 13. The results show a difference in the average F1 score between the algorithms. However, the mAP in Table 13 is considered more accurate than the F1 score because the global precision and recall are higher. The AP of enhanced YOLOv5-ResNet43-Leaky, at 85.9%, is higher than that of enhanced YOLOv5-ResNet70-Leaky, at 84.9%. The AP of YOLOv5-ResNet43-Mish is 85.8%, which is 0.1% lower than that of YOLOv5-ResNet43-Leaky. This indicates that the Leaky activation function is more compatible with the ResNet architecture. Adding PANet in enhanced YOLOv5-ResNet43-PANet increases the AP to 86.5%, a gain of only 0.6%. Meanwhile, the AP of enhanced YOLOv5-ResNet43-ReLU is 0.6% lower at 85.3%, while the AP of enhanced YOLOv5-ResNet43-Swish is 85.9%. The combined activation function in enhanced YOLOv5-ResNet43-Combined improved the AP to 86.2%, which is 0.3% more than enhanced YOLOv5-ResNet43-Leaky and 0.3% less than enhanced YOLOv5-ResNet43-PANet. In terms of AP, the ranking is therefore as follows: enhanced YOLOv5-ResNet43-PANet is greater than enhanced YOLOv5-ResNet43-Combined, which is greater than enhanced YOLOv5-ResNet43-Leaky; the latter is equal to enhanced YOLOv5-ResNet43-Swish and greater than enhanced YOLOv5-ResNet43-Mish and enhanced YOLOv5-ResNet43-ReLU.

The larger the weight, the longer the detection time, as shown in Table 13. The person detection time of enhanced YOLOv5-ResNet43-Leaky, enhanced YOLOv5-ResNet43-ReLU, and enhanced YOLOv5-ResNet43-Combined is 11.9 ms, while that of enhanced YOLOv5-ResNet70-Leaky is 15.0 ms and that of enhanced YOLOv5-ResNet43-Mish is 12.4 ms. The best performance was obtained with enhanced YOLOv5-ResNet-ReLU, at 10.2 ms. The algorithm with the lowest detection time is considered the fastest, and the detection time can also be converted into a frame rate.
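The conversion from per-image detection time to frame rate is a simple reciprocal. The sketch below assumes that the reported time covers the full processing of one frame and that frames are processed one at a time; the function name is hypothetical.

```python
def frames_per_second(detection_time_ms):
    """Convert a per-frame detection time in milliseconds to frames per second."""
    if detection_time_ms <= 0:
        raise ValueError("detection time must be positive")
    return 1000.0 / detection_time_ms


# Detection times quoted in the text above, in milliseconds.
for time_ms in (10.2, 11.9, 12.4, 15.0):
    print(f"{time_ms} ms -> {frames_per_second(time_ms):.1f} FPS")
```

Under these assumptions, every detection time quoted above corresponds to more than 60 frames per second.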

6.5.2 Ablation studies-YOLOv5

The weight of YOLOv5 (Xu et al. 2022) is lower than that of the other architectures. The ablation findings for the different YOLOv5 (Xu et al. 2022) variants show that YOLOv5-sR-FPN (Xu et al. 2022) is faster than the other algorithms, with a detection time of 2.7 ms. The YOLOv5 (Xu et al. 2022) variants differ only in the backbone and neck. Among these algorithms, YOLOv5s (Xu et al. 2022) has the highest average detection accuracy, at 82.7%. In contrast to the changes in the improved YOLOv5 (Xu et al. 2022) and YOLOv4 (Liu et al. 2023), the average AP decreases from 82.7% for YOLOv5s (Xu et al. 2022) to 80.9% for YOLOv5-sR (Xu et al. 2022) and further to 80.6% for YOLOv5-s-FPN (Xu et al. 2022). Interestingly, the AP rises to 81.9% for YOLOv5-sR-FPN (Xu et al. 2022), which should be investigated in the future. However, YOLOv5-sR-FPN (Xu et al. 2022), observed at 82.6%, is 12.9% faster and 0.8% less accurate than YOLOv5. This shows that the performance of YOLOv5-sR-FPN (Xu et al. 2022) is better than that of YOLOv5s (Xu et al. 2022), and it is therefore taken to represent the YOLOv5 (Xu et al. 2022) model, as shown in Table 13.

6.5.3 Ablation studies-YOLOv4

The ablation studies of the different modifications of the YOLOv4 (Liu et al. 2023) algorithm are based on the neck (PANet or FPN), Mish, and the combined activation function. Table 13 shows that the average AP of YOLOv4-RPAnet (Liu et al. 2023), at 86.7%, is 2% higher than that of YOLOv4-PANet (Liu et al. 2023), at 84.7%, with the same Mish activation and incorporation of PANet. Under the influence of Mish and FPN, the AP of YOLOv4-RFPN (Liu et al. 2023), at 85.2%, is 1.1% higher than that of YOLOv4-FPN (Liu et al. 2023), at 84.1%. This is further evidence that it is more accurate. However, the AP of YOLOv4-Combined (Liu et al. 2023) is the worst of the algorithms, at 83.5%. This means that the proposed combined activation function is not compatible with the CSPDarkNet backbone. YOLOv4-RFPN (Liu et al. 2023) and YOLOv4-RCP (Liu et al. 2023), the smallest models, have detection times of 16.6 ms and 16.5 ms, respectively. For the larger models, the detection time is 22.3 ms for YOLOv4-PANet (Liu et al. 2023), 18.4 ms for YOLOv4-FPN (Liu et al. 2023), and 20.6 ms for YOLOv4-RPAnet (Liu et al. 2023). Comparing all the algorithms for the best performance, the YOLOv4-RFPN (Liu et al. 2023) algorithm stands out with a detection accuracy of 85.2% and a detection time of 16.6 ms. The YOLOv4-RFPN (Liu et al. 2023) algorithm is therefore presented as the YOLOv4 (Liu et al. 2023) recognition algorithm in this section. The next section describes the experimental results and analysis of suspicious activity detection.

6.6 Suspicious activity recognition

The experiment used datasets from the public domain, namely the University of Minnesota’s databases (Qianyin et al. 2015) for anomaly detection, and the proposed dataset for student behaviour. The proposed technique was compared with the social force model of Qianyin et al. (2015), the sparse representation of Jhapate et al. (2020), and the mixture of dynamic textures method of Jin et al. (2021), Li et al. (2022), and Minguez et al. (2019). The performance is assessed using standard performance evaluation metrics. First, we ran tests using the dataset for anomaly detection from the University of Minnesota. The receiver operating characteristic (ROC) curve for the proposed technique and the current technique described in Jhapate et al. (2020) is shown in Fig. 14a.

In a similar manner, we calculated the receiver operating characteristic (ROC) curve for the proposed student behavior dataset, shown in Fig. 14b. Examination of the ROC curves shows that the proposed framework is effective and outperforms the method described in the literature. For a quantitative comparison, we estimated ERR and AUC for the proposed and existing methods, as displayed in Table 14, computed using Eqs. 12 and 13. The proposed technique yields error rates of 16.1% and 18.1% on the two datasets, respectively, which are lower than those of the existing methods. The AUC for the proposed technique is 73.2% and 72.1%. This demonstrates that the proposed framework is more reliable and accurate than existing methods for detecting suspicious activity across both datasets.
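For reference, an ROC curve and its AUC can be computed from per-frame anomaly scores as in the sketch below. Eqs. 12 and 13 are not reproduced in this section, so the sketch uses the standard definitions of true positive rate, false positive rate, and trapezoidal area; it assumes binary labels (1 for abnormal, 0 for normal) with no tied scores, and the function names are hypothetical.

```python
import numpy as np


def roc_curve(scores, labels):
    """Return (fpr, tpr) arrays obtained by sweeping a threshold over scores.

    Assumes higher scores mean "more anomalous" and that scores are not tied.
    """
    scores = np.asarray(scores, dtype=np.float64)
    labels = np.asarray(labels, dtype=np.int64)
    order = np.argsort(-scores, kind="stable")  # descending score
    sorted_labels = labels[order]
    tps = np.cumsum(sorted_labels == 1)  # true positives at each cut-off
    fps = np.cumsum(sorted_labels == 0)  # false positives at each cut-off
    tpr = np.concatenate(([0.0], tps / max(int(tps[-1]), 1)))
    fpr = np.concatenate(([0.0], fps / max(int(fps[-1]), 1)))
    return fpr, tpr


def auc(fpr, tpr):
    """Area under the ROC curve using the trapezoidal rule."""
    fpr = np.asarray(fpr, dtype=np.float64)
    tpr = np.asarray(tpr, dtype=np.float64)
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
```

An AUC of 1.0 corresponds to a perfect ranking of abnormal over normal frames, and 0.5 to a random ranking.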

7 Conclusion and future scope

In this work, an improved YOLOv5 together with an approach based on motion and direction information is proposed for accurate person recognition. It provides a recognition accuracy of 96.12% and an error rate of 6.68% compared to the existing methods. The feature extraction capabilities of the network were improved by removing the repetitive convolutional layer and adding a scale-invariant cross-stage partial network (SCSP) layer to detect individuals of different sizes. In the proposed approach, a new feature fusion layer was implemented to collect more information about small individuals. In addition, the proposed approach uses backbone networks with flat compact features for small individuals. A pyramid-based approach has been developed to detect individuals of multiple sizes. Experimental results show significant performance improvements on benchmark datasets. Next, we proposed a novel method for detecting unusual human activity in an academic setting. Based on the spatial and temporal characteristics of motion features, we can classify frames as normal or abnormal activity of the subject and also localize regions of abnormal activity within the frame as a local or global region.

We conducted experiments using the University of Minnesota anomaly detection datasets and the proposed dataset. It was confirmed that the proposed method is effective and outperforms other competing methods in the literature. However, the purpose of this research is to detect abnormal actions in an academic setting where cameras generally cover a large area. In the future, the proposed framework can be improved in the following aspects. Motion blur and partial or complete occlusion could be handled by extracting features from person objects that are invariant to occlusion and motion blur, which would improve the feature extraction process. An unsupervised approach to person detection could be useful to reduce the time required. A path prediction method could also be used to predict the path of a person. We have highlighted the performance of our technique on numerous benchmarks and shown how accurate it is compared to previous real-time algorithms. Our technique has some drawbacks. Numerous other features of human behavior, such as physical and psychological characteristics, age, gender, and contextual conditions, are not modeled by the underlying approach. In our future work, we aim to address these limitations.

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request. All data generated or analysed during this study are included in this published article (and its supplementary information files).

References

Krizhevsky A, Sutskever I, Hinton G (2012) ImageNet classification with deep convolutional neural networks, NIPS, pp 1–9, Nevada

Asvadi A, Karami-Mollaie M, Baleghi Y, Seyyedi-Andi H (2011) Improved object tracking using RBFNN. In: MVIP, 16th–17th Nov 2011. pp 1–5

Athanesious JJ, Suresh PV (2012) Systematic survey on object tracking methods in video. Int J Adv Res Comput Eng Technol 1(8):242–247

Bodla N, Singh B, Chellappa R, Davis LS (2017) 2nd International Conference on Data, Engineering and Applications (IDEA)

Cao J, Pang Y, Xie J, Khan FS, Shao L (2022) From handcrafted to deep features for pedestrian detection: a survey. IEEE TPAMI 44(9):4913–4934

Chebli K, Khalifa AB (2018) Pedestrian detection based on background compensation with block-matching algorithm, In: 2018 15th International Multi-Conference on Systems, Signals and Devices (SSD), pp 497–501

Dalal N, Triggs B (2005) HOG for human detection. CVPR, CA. pp 886–893

Direkoglu C (2020) Abnormal crowd behavior detection using motion information images and CNN. IEEE Access 8:408–480

E. P. dataset (2021) ETH, http://www.vision.ee.ethz.ch/~aess/dataset/

Emil N, Neghina M (2009) A NN approach to pedestrian detection, ICCOMP. pp 374–379

Fekry A, Dafoulas G, Ismail M (2019) The relation between individual student behaviours in video presentation and their modalities using vark and paei results. In: 2019 2nd International Conference on new Trends in Computing Sciences (ICTCS), pp 1–7, 10 2019

Gaddigoudar PK, Balihalli TR, Ijantkar SS, Iyer NC, Ped SM (2017) Dete. and track. using particle filtering. In: International Conference on Computing, Communication Automation (ICCCA), pp 110–115

Geronimo D, Sappa A, Lopez A, Ponsa D (2006) Pedestrian detection using AdaBoost learning of features and vehicle pitch estimation. In: International conference on visualization, imaging and image processing, 28th–30th Aug 2006. pp 1–8

Girshick R (2015) Fast r-cnn. IEEE, 2015, pp 1440–1448

Gorska A, Guzal P, Namiotko I, Wedol A, Wloszcz M, Ruminski J (2022) Aitp-ai thermal ped. dataset. IEEE, pp 1–4

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. IEEE 6:770–778

He K, Gkioxari G, Dollar P, Girshick R (2020) Mask R-CNN. TPAMI 42(2):386–397

He C, Zhang X, Miao Z, Sun T (2021) Intelligent vehicle pedestrian tracking based on yolov3 and dasiamrpn, 2021 40th Chinese Control Conference (CCC), pp 4181–4186

He K, Zhang X, Ren S, Sun J (2015) Deep residual learning, CVPR, pp 1–10, Boston

Hou L, Wan W, Lee K-H, Hwang J-N, Okopal G, Pitton JW (2015) Deformable multiple-kernel based human tracking using a moving camera, In: 2015 IEEE International Conference on Acoustics, Speech and Sign. Process (ICASSP), pp 2249–2253

I.P. dataset (2021) INRIA, http://pascal.inrialpes.fr/

Jepson A, Fleet D, El-Maraghi T (2003) Robust online appear models for visual track. IEEE TPAMI 25(10):1296–1311

Jhapate AK, Malviya S (2020) Unusual crowd activity detection using opencv and motion influence map. 2nd International Conference on Data, Engineering and Applications. pp 1–6

Jiao L et al (2022) New generation deep learning for video object detection: a survey. IEEE Trans Neural Netw Learn Syst 33(8):3195–3215

Jin D, Bai X, Wang Y (2021) Integrating structural symmetry and local homoplasy information in intuitionistic fuzzy clustering for infrared ped. segm., In: IEEE Transactions on Systems, Man, and Cybernetics, vol 51, pp 4365–4378

Kang S, Byun H, Lee S (2002) Real-time pedestrian detection using support vector machines. First international workshop on SVM: pattern recognition with SVM. pp 268–277

Kangming L (2016) Research on an improved pedestrian detection method based on DBN classification algorithm. RISTI 17(3):77–87

Kim SJ, Nam JY, Ko BC (2018) Online tracker optimi: for multi ped. tracking using a moving vehicle camera. IEEE Access 6:48675–48687

Kumar M, Patel AK, Biswas M et al (2023) Attention-based bidirectional-long short-term memory for abnormal human activity detection. Springer, Scientific Report

Kwon J, Lee KM (2008) Tracking of abrupt motion using Wang-landau Monte Carlo estimation. pp 387–400

Li L, Xie L, Li W, Liu Z, Wang Z (2018) Improved deep belief networks (idbn) dynamic model-based detection and mitigation for targeted attacks on heavy duty robots. Appl Sci 8:676

Li Z, Liu F, Yang W, Peng S, Zhou J (2022) A survey of convolutional neural networks: analysis, applications, and prospects. IEEE Trans Neural Netw Learn Syst 33(12):6999–7019

Li F, Li X, Liu Q, Li Z (2022) Occlusion handling and multi-scale pedestrian detection based on deep learning: a review. IEEE Access 10:19937–19957

Li J, Liang X, Shen S, Xu T, Feng J, Yan S (2017) Scale-aware fast r-cnn for pedestrian detection, IEEE TIM, pp 1–1

Liu M, Wan L, Wang B et al (2023) SE-YOLOv4: shuffle expansion YOLOv4 for pedestrian detection based on PixelShuffle. Appl Intell 53:18171–18188

Mehmood A (2021) Efficient anomaly detection in crowd videos using pre-trained 2d CNN, In: IEEE Access, vol 9, pp 138 283–138

Minguez RQ, Alonso IP, Fernández-Llorca D, Sotelo MA (2019) Pedestrian path, pose, and intention prediction through gaussian process dynamical models and pedestrian activity recognition. IEEE Trans Intell Transp Syst 20(5):1803–1814

Minsky M, Kurzweil R (2013) The society of intelligent veillance. In: IEEE international symposium on technology and society, Toronto, pp 13–17

MS COCO (2018) dataset, Microsoft, https://cocodataset.org/

Pan J, Hu B (2007) Robust occlusion handling in object tracking, In: 2007 IEEE Conference on CVPR, pp 1–8

Papathanasopoulou V, Spyropoulou I, Perakis H, Gikas V, Andrikopoulou E (2021) Classification of pedestrian behavior using real trajectory data. International Conference on Models and Technologies for Intelligent Transportation Systems (MT-ITS), pp 1–6

Pogadadanda V, Shaik S, Neeraj GVS, Siralam HV, Joseph IT, Rao KBVB (2023) Abnormal activity recognition on surveillance: a review, 2023 Third International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, pp 1072–1077

Porikli F, Tuzel O, Meer P (2006) CVPR 1:728–735

Pouyan S, Charmi M, Azarpeyvand A, Hassanpoor H (2023) Propounding first artificial intelligence approach for predicting robbery behavior potential in an indoor security camera. IEEE Access 11:60471–60489

Qianyin J, Guoming L, Jinwei Y, Xiying L (2015) A model based method of pedestrian abnormal behavior detection in traffic scene, 2015 IEEE First International Smart Cities Conference (ISC2), pp 1–6

Radford A, Metz L, Chintala S (2015) Unsupervised representation learning with deep convolutional generative adversarial networks, CVPR, pp 1–10, Boston

Redmon J, Divvala S, Girshick R, Farhadi A (2016) You only look once: Unified, real-time object detection. IEEE Conference CVPR 6 2016. pp 779–788

Ren S, He K, Girshick R, Sun J (2017) Faster r-cnn: towards real-time object detection with region proposal networks. IEEE TPAMI 39:1137–1149

Ross DA, Lim J, Lin R-S, Yang M-H (2008) Incremental learning for robust visual tracking. IJCV 77:125–141

Simonyan K, Zisserman A (2015) Very deep convolutional networks for large-scale image recognition, CVPR, pp 1–10

Szegedy C, Ioffe S, Vanhoucke V, Alemi A (2016) Inception-v4, Inception-ResNet and the impact of residual connections on learning, CVPR, pp 1–10

Szegedy C, Ioffe S, Vanhoucke V, Alemi A (2016) Inception-v4, inception-resnet and the impact of residual connections on learning, International Conference on Artificial Intelligence

Vasishta P, Vasista D, Spalanzani A (2018) Building prior knowledge: a Markov based pedestrian prediction model using urban environmental data, In: 2018 15th International Conference on Control, Automation, Robotics and Vision (ICARCV), pp 247–253

Wang J-X, Hou Y-R (2016) Pedestrian fall action detection and alarm in video surveillance, In: 2016 3rd International Conference on Information Science and Control Engineering (ICISCE), pp 502–505

Wang J, Li G (2019) Accelerate proposal generation in r-cnn methods for fast pedestrian extraction. Electron Lib 37:435–453

Wang Y, Zhao R, Liu Y, Zhang L (2023) Multi-modal deep learning for suspicious activity recognition in video surveillance. IEEE Trans Inform Forensics Secur 18(10):2473–2486

Wang X, Han TX, Yan S (2009) An HOG-LBP human detector with partial occlusion handling. ICCV, pp 32–39

Wu C, Yue J, Wang L, Lyu F (2019) Detection and classification of recessive weakness in superbuck converter based on WPD-PCA and probabilistic neural network. Electronics 8(290):1–17

Xu L-Q, Puig P (2006) Multi-level attention model for tracking and segmentation of objects under complex occlusion. BT Technol J 24:180–185

Xu S, Guo Z, Liu Y, Fan J, Liu X (2022) An improved lightweight yolov5 model based on attention mechanism for face mask detection, IEEE Signal Processing Communicating Applied Conference

Yang B, Cao J, Wang N, Liu X (2019) Anomalous behaviors detection in moving crowds based on a weighted convolutional autoencoder-long short-term memory network. IEEE Trans Cognit Dev Syst 11(12):473–482

Yilmaz A, Li X, Shah M (2004) Contour-based object tracking with occlusion handling in video acquired using mobile cameras. IEEE TPAMI 26(11):1531–1536

Yuan H, Chan S, Creagh AP, Tong C, Clifton DA, Doherty A (2023) Self-supervised learning for human activity recognition using 700,000 person-days of wearable data. Proc ACM Interact Mob Wearable Ubiquitous Technol 7(2):1–23

Zaki MH, Sayed T (2018) Automated analysis of pedestrian group behavior in urban settings. IEEE Trans Intell Transp Syst 19(6):1880–1889

Zeiler M, Fergus R (2013) Visualizing and understanding convolutional networks, CVPR, pp 1–11

Zhang W, Dong X, Li H, Xu J, Wang D (2020) Unsupervised detection of abnormal electricity consumption behavior based on feature engineering. In: IEEE Access, vol 8, pp 55483–55500

Zhang Q, Huang C, Xia L, Wang Z, Yiu SM, Han R (2023) Spatial-temporal graph learning with adversarial contrastive adaptation, Fortieth annual conference is held Sun. pp 1–11, Hawaii

Zhao Z-Q, Zheng P, Xu S-T, Wu X (2019) Object detection with deep learning: a review. IEEE Trans Neural Netw Learn Syst 30(11):3212–3232

Zhou X, Lu Y, Lu J, Zhou J (2012) Abrupt motion tracking via intensively adaptive Markov–chain Monte Carlo sampling. IEEE TIP 21:789–801

Zhu R, Xiao Z, Li Y, Yang M, Tan Y, Zhou L, Lin S, Wen H (2019) Efficient human activity recognition solving the confusing activities via deep ensemble learning. IEEE Access 7:490–499

Author information

Contributions

UG, KH, and YG contributed to the study conception and design. Material preparation, data collection, and analysis were performed by UG, KH, and YG. The first draft of the manuscript was written by UG, and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The corresponding author confirms on behalf of all authors that there have been no involvements that might raise the question of bias in the work reported or in the conclusions, implications, or opinions stated. The authors declare that they have no conflicts of interest.

Ethical approval

All applicable international, national, and/or institutional guidelines for the care and use of animals were followed. This article does not contain any studies involving animals performed by any of the authors.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gawande, U., Hajari, K. & Golhar, Y. Novel person detection and suspicious activity recognition using enhanced YOLOv5 and motion feature map. Artif Intell Rev 57, 16 (2024). https://doi.org/10.1007/s10462-023-10630-0

DOI: https://doi.org/10.1007/s10462-023-10630-0