Abstract

The learning environment (LE) includes social interactions, organizational culture, structures, and physical and virtual spaces that influence the learning experiences of students. Despite numerous studies exploring the perception of healthcare professional students (HCPS) of their LE, the validity evidence of the utilized questionnaires remains unclear. This scoping review aimed to identify questionnaires used to examine the perception of undergraduate HCPS of their LE and to assess their validity evidence. Five key concepts were used: (1) higher education; (2) questionnaire; (3) LE; (4) perception; and (5) health professions (HP). PubMed, ERIC, ProQuest, and Cochrane databases were searched for studies developing or adapting questionnaires to examine LE. This review employed the APERA standards of validity evidence and Beckman et al. (J Gen Intern Med 20:1159–1164, 2005) interpretation of these standards according to 5 categories: content, internal structure, response process, relation to other variables, and consequences. Out of 41 questionnaires included in this review, the analysis revealed a predominant emphasis on content and internal structure categories. However, less than 10% of the included questionnaires provided information in relation to other variables, consequences, and response process categories. Most of the identified questionnaires received extensive coverage in the fields of medicine and nursing, followed by dentistry. This review identified diverse questionnaires utilized for examining the perception of students of their LE across different HPs. Given the limited validity evidence for existing questionnaires, future research should prioritize the development and validation of psychometric measures. This will ultimately ensure sound and evidence-based quality improvement measures of the LE in HP education programs.

Introduction

Improving the learning experience of students in healthcare profession education programs (HPEPs) has been a demanding process in the healthcare professions education (HPE) (Carr et al., 2015). Indeed, HPEPs (e.g., pharmacy, medicine, nursing, and health sciences) are expected to prepare graduates with fundamental competencies, skills, and professional attributes and qualifications (Carr et al., 2015). Healthcare professional educators believe that the theoretical and clinical experiences that the students gain in their learning environment (LE) can significantly impact their attitudes, knowledge acquisition, skills development, and behaviors (Genn, 2001; Lizzio et al., 2002; Pimparyon, 2000). This is particularly important because the competencies of healthcare professionals influence patients' safety and ultimately health outcomes (Dunne et al., 2006).

The learning environment (LE) refers to the interactive combination of physical settings, educational resources, instructional approaches, and interpersonal dynamics that impact the learning journeys and experiences of students (Closs et al., 2022). According to Maudsley, a LE exists wherever and whenever students congregate, and it contains a variety of elements that support good instruction and serve as the curriculum's context (Maudsley, 2001). Hoidn (2016) argues that the LE demonstrates how various curricular components have an impact on students (Hoidn, 2016). Several studies have pointed out the important role that the LE has on the satisfaction and self-confidence of students (Al Ayed & Sheik, 2008; Lizzio et al., 2002; Wach et al., 2016; White, 2010). Therefore, accrediting bodies have increased their focus on the quality of the LE, highlighting that HPEPs are responsible for facilitating a positive LE, which supports the learning and professional development of students (Council, 1998; Education, 2009; Rusticus et al., 2020). Moreover, improving the LE has been recognized as a key standard in the World Federation for Medical Education (WFME) standards, which aim to ensure continuous quality improvement of medical education programs (Council, 1998).

According to Genn (2001), the crucial aspect lies in how students perceive their LE. The perception of students of their LE involves how learners perceive and make sense of the various elements, conditions, and factors that make up their educational surroundings (Genn, 2001). The perception can be enhanced by improving the motivation of students towards their learning and their interpersonal relationships, developing effective teaching strategies, and increasing the availability of infrastructure facilities (Genn, 2001). Additionally, enhancing the compliance of the higher education providers with cultural and international administrative standards within the physical environment is crucial for shaping a positive perception of the learning environment (Brown et al., 2011; Rawas & Yasmeen, 2019). Medical educators argue that the perception of students of their LE is one of the determinants of their academic and professional outcomes; therefore, assessing it is essential (Genn, 2001; Roff & McAleer, 2001).

Many educational institutes have investigated the perception of students of their LE regionally and internationally (Al Ayed & Sheik, 2008; Al-Hazimi et al., 2004a, 2004b; Lizzio et al., 2002; Rothman & Ayoade, 1970). In that regard, several studies indicated that the perception of students of their LE is affected by several factors, such as the gender of students and their academic achievement, as well as the curriculum content and the teaching styles (Cerón et al., 2016; Lokuhetty et al., 2010; Pimparyon, 2000). In a study conducted by a medical school that utilizes problem-based learning as a teaching strategy, first-year students exhibited a neutral perception toward their LE, possibly due to their excitement upon entering the medical college; however, as they progressed in their studies, they became more critical of the educational environment, indicating a shift in their perceptions over time (Nosair et al., 2015). Ahmed et al. (2018) argued that the perception of students of their LE and the factors that affect this perception should be assessed using reliable and comprehensive approaches (Ahmed et al., 2018). The approaches that have been used in the literature were quantitative (Rusticus et al., 2020) or qualitative (Britt et al., 2022; Fego et al., 2022) assessments. Quantitative assessment involves using validated and reliable questionnaires (Roff et al., 1997; Rusticus et al., 2014, 2020), which should ideally be selected based on their comprehensiveness, quality, and validity evidence (Kishore et al., 2021). Furthermore, a key aspect to consider while assessing the comprehensiveness and robustness of a questionnaire is its theoretical foundation (Schönrock-Adema et al., 2012; Klein, 2016), because it reveals the key determinants of the measured outcome (Schönrock-Adema et al., 2012).
Therefore, a review of the literature is required to identify the questionnaires used to assess the perception of students of their LE and to compare the quality of those questionnaires. The American Psychological and Educational Research Associations (APERA) have established standards for validity evidence, encompassing five key dimensions: (1) Content, (2) Response Process, (3) Internal Structure, (4) Relation to Other Variables, and (5) Consequences (Eignor, 2013). Content validity focuses on the development process and theoretical foundation of questionnaires. The response process centers on the analysis, accuracy, and thought processes related to respondents. Internal structure primarily addresses the reliability and factor analysis used to confirm the data structure of questionnaires. Relation to other variables examines the potential correlation between assessment scores and theoretically predicted outcomes or measures of the same construct. Consequences primarily describes the impact of assessment consequences on the validity of the score interpretation (Eignor, 2013).

The theoretical foundation/framework underpinning the questionnaire is a critical factor influencing its content validity, and hence its robustness (Beckman et al., 2005). Multiple theories and frameworks have been employed in the literature to ascertain the primary factors influencing the perceptions of students of their learning environment. The predominant theory is experiential learning theory (Kolb, 1984), which emphasizes the central role that experience plays in the learning process. Another common theory is social learning theory (Bandura & Walters, 1977), which posits that individuals learn not only through direct experience but also by observing and imitating the behaviors of others. It also highlights the dynamic interaction between cognitive processes, environmental influences, and behavioral outcomes, and offers insights into how individuals acquire new behaviors through social interactions. Moos's framework (Moos, 1973, 1991) is the most commonly applied framework in the literature. It stands out for its emphasis on the interplay of environmental and interpersonal factors shaping individual experiences, and it provides a nuanced perspective on the multifaceted influences that contribute to an individual's development and experiences, offering valuable insights into the realms of personal growth, social dynamics, and systemic adaptability (Moos, 1973, 1991).

Several systematic reviews have been conducted to identify and compare the questionnaires that are used to examine the perception of healthcare professional students of their LE (Colbert-Getz et al., 2014; Hooven, 2014; Irby et al., 2021; Mansutti et al., 2017). These systematic reviews, however, were specific to one profession (Colbert-Getz et al., 2014; Hooven, 2014; Irby et al., 2021; Mansutti et al., 2017) or one setting (i.e., clinical versus preclinical). Only one systematic review, published in 2010, assessed the perception of students at a multidisciplinary level, including medicine, nursing, and dentistry (Soemantri et al., 2010b). However, several newly developed questionnaires have been published after 2010 (Leighton, 2015; Rusticus et al., 2020; Shochet et al., 2015), including those that emerged as a result of changes in the LE in recent years with the integration of artificial intelligence and virtual learning, and the development of educational and information technologies (Isba et al., 2020; Leighton, 2015; Rusticus et al., 2020; Shochet et al., 2015; Thibault, 2020). In that regard, no previous reviews included those newly developed questionnaires or examined the theoretical foundations of the developed questionnaires (Colbert-Getz et al., 2014; Hooven, 2014; Irby et al., 2021; Mansutti et al., 2017; Soemantri et al., 2010). Therefore, to address these gaps in the literature, this study aims to provide an up-to-date identification of questionnaires used to examine the perception of undergraduate healthcare professional students of their LE and to assess the quality of those identified questionnaires.
The main objectives of this scoping review are to 1) categorize questionnaires used to assess the LE as perceived by undergraduate healthcare professional students based on development strategy, profession, and the setting; 2) identify the most commonly used questionnaires; 3) assess the validity evidence of the identified questionnaires; and 4) assess the theoretical foundation of the included questionnaires.

Methods

Protocol and Registration

This scoping review is compliant with the 2018 PRISMA statement for scoping reviews (PRISMA-ScR) (Tricco et al., 2018). The protocol for this scoping review was registered at RESEARCH REGISTRY and is available online at: [https://www.researchregistry.com/browse-the-registry#registryofsystematicreviewsmetaanalyses/registryofsystematicreviewsmetaanalysesdetails/60070249970590001bd06f38/] with the number [reviewregistry1069].

Eligibility criteria

This review aimed to identify articles that assess the perception of undergraduate healthcare professional students of their LE. While there is no universally established definition for healthcare professional educational programs or a standardized list of included educational professional programs, the researchers categorized these programs as educational programs associated with specific professions, namely, medicine, pharmacy, dentistry, nursing, and allied health. The term "allied health personnel" in PubMed's MeSH was used to define allied health, together with the relevant professions listed under this MeSH term. Studies were included if the following criteria were met: (1) used a questionnaire that was originally developed to assess LE in HPE; (2) focused on undergraduate students only, or both undergraduate and postgraduate students; (3) aimed to describe a questionnaire development, or to analyze the psychometric measures of a questionnaire, or to describe the utilization of a questionnaire; (4) published as research articles; and (5) published in peer-reviewed journals.

Studies were excluded if they (1) used a questionnaire that was not developed to assess LE in HPE; (2) focused on postgraduate students only; (3) did not describe the development, validity evidence, or the utilization of a questionnaire (i.e., studies that used only qualitative methods); (4) were not research articles (e.g., theses and dissertations, conference papers, and abstracts); or (5) were not published in peer-reviewed journals.

Information sources

An electronic search was conducted in PubMed, ERIC, ProQuest, and Cochrane Library databases. The search was conducted between 1st July 2022 and 31st July 2022. Additional articles were identified from the reference lists of the identified articles and from other relevant reviews.

Search strategy

The search strategy was developed by the research team (BM, OY, and SE), who are academics with expertise in pharmacy education and HPE research. The search strategy was revised by the Head of the Research and Instruction Section of the library at Qatar University, who has extensive expertise in health science, education, pharmacy, and medical databases.

Five main concepts were used: “learning environment”, “healthcare professions”, “higher education”, “questionnaire”, and “perception”. Several keywords were identified for each concept (Appendix 1) and were matched to database-specific indexing terms. The keywords within each concept were combined using the Boolean connector (OR), and the concepts were then combined using the Boolean connector (AND). The search results were then imported into EndNote version 9 and duplicates were identified and removed. The search was restricted to the English language, but no restriction was applied to the year of publication. A filter for peer-reviewed articles was used only when available. The detailed search strategy is presented in Appendix 1.
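The combination of keywords and concepts described above can be illustrated with a minimal Python sketch. The five concept names come from the text; the keyword lists, the `concepts` dictionary, and the `build_query` function are hypothetical and serve only to show the OR-within-concept, AND-between-concepts structure. The actual terms used in the review are those listed in Appendix 1, and database-specific syntax (e.g., field tags or indexing terms) is not reproduced here.

```python
# Hypothetical keyword lists for illustration only; the real terms are in Appendix 1.
concepts = {
    "learning environment": ["learning environment", "educational environment"],
    "healthcare professions": ["medicine", "nursing", "pharmacy"],
    "higher education": ["higher education", "undergraduate"],
    "questionnaire": ["questionnaire", "survey"],
    "perception": ["perception", "attitude"],
}


def build_query(concept_keywords):
    """Join keywords within a concept with OR, then join the concepts with AND."""
    blocks = []
    for keywords in concept_keywords.values():
        quoted = [f'"{keyword}"' for keyword in keywords]
        blocks.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(blocks)


query = build_query(concepts)
print(query)
```

Under these assumptions, the resulting string contains one parenthesized OR block per concept, joined by four AND connectors; a record must therefore match at least one keyword from every concept to be retrieved.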

Selection of evidence sources

Two researchers (BM and OY) conducted the title/abstract screening for the identified articles and excluded articles that were irrelevant to the research question based on the article title and abstract. Differences were resolved by a discussion with the third researcher (SE). The full-text screening was done by two investigators (OY and SE) who assessed the eligibility of the studies independently. Any disagreements were resolved by consensus via meetings and discussions. After the completion of the full-text screening, one researcher (OY) categorized the included questionnaires based on their utilization in the study into the following categories: originally developed questionnaires, adopted questionnaires, or adapted questionnaires. Studies that adopted a previously developed and validated questionnaire were not included in the data extraction of this scoping review, because they did not provide additional data about the development of the questionnaire or about the validity evidence of the questionnaire. However, the number of adoptions per questionnaire was recorded to address objective two of this review, which is to identify the most commonly used questionnaires. In addition to the original development studies, adaptation studies that conducted psychometric testing beyond that reported in the original development studies were included in the data extraction.

Data charting process and data items

Two researchers performed the data extraction independently using a data collection Excel sheet to tabulate data extracted from the included articles. The extracted data included the title of the manuscript, name of authors, year of publication, country where studies were conducted, aim and objectives of the research, study design, and study setting (i.e., clinical, preclinical, or both). Moreover, data related to the identified questionnaires were extracted, including the type of the questionnaire (i.e., new, adapted, or adopted), description of the domains and content, healthcare profession in which the research was conducted, and validity evidence of the questionnaire (including the use of theory or a theoretical framework in questionnaire development). Before the data extraction sheet was fully implemented, two investigators (SE and OY) piloted it using a sample of the articles from the review to determine its applicability, identify potential issues, and make the required changes. Piloting the data extraction sheet helped to improve the consistency and dependability of data extraction. Following successful piloting, the full data extraction was carried out using the data extraction sheet by the two investigators independently.

Assessment of the psychometric properties of the included questionnaires

Studies that describe the development or assess the psychometric properties of questionnaires should ideally be based on high standards of methodological quality for the questionnaire to be regarded as a legitimate and trustworthy instrument (Beckman et al., 2005). Data about the psychometric properties of the included questionnaires were collected, summarized, and assessed using the American Psychological and Educational Research Associations (APERA) standards of validity evidence: (1) Content, (2) Response Process, (3) Internal Structure, (4) Relation to Other Variables, and (5) Consequences (Eignor, 2013), and using Beckman et al.'s (2005) interpretation of these standard categories (Beckman et al., 2005). This interpretation has been previously applied in various systematic reviews (Colbert-Getz et al., 2014; Fluit et al., 2010), including one systematic review that assessed the validity evidence of questionnaires that assess the perception of healthcare professional students of their LE (Colbert-Getz et al., 2014), aligning with the focus of this study. According to the assessment framework proposed by Beckman et al. (2005), each standard category was assigned a rating of N, 0, 1, or 2. The overall rating for each assessment tool was determined by summing the ratings across the standard categories. However, it is important to note an overlap between the "N" and "0" ratings, where both contribute zero to the total score despite their distinct interpretations. In response to this, the authors adopted a modified scoring system for the total sum score: "N" was treated as zero, "0" as one, "1" as two, and "2" as three.
The evaluation of the theoretical basis of questionnaires was included in the total validity score, as part of the APERA standards of validity evidence, under the ‘content’ category: reporting whether the questionnaire development was based on a theoretical basis, and/or defining how this theoretical basis was applied, would significantly change the score for ‘content validity’. Table 1 summarizes the definitions of psychometric measures assessed by the Beckman et al. (2005) criteria and the interpretation of scores.
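The modified scoring system described above can be expressed as a short Python sketch. The mapping ("N" as zero, "0" as one, "1" as two, "2" as three) and the five category names are taken from the text; the function name `total_validity_score` and the example ratings are hypothetical and do not correspond to any questionnaire in this review.

```python
# The five APERA standard categories named in the text.
CATEGORIES = (
    "content",
    "response process",
    "internal structure",
    "relation to other variables",
    "consequences",
)

# Modified weights: each Beckman et al. (2005) rating is shifted up by one,
# so that "N" and "0" no longer both contribute zero to the total.
MODIFIED_WEIGHTS = {"N": 0, "0": 1, "1": 2, "2": 3}


def total_validity_score(ratings):
    """Sum the modified weights over the five categories."""
    missing = [category for category in CATEGORIES if category not in ratings]
    if missing:
        raise ValueError(f"missing ratings for: {missing}")
    return sum(MODIFIED_WEIGHTS[str(ratings[category])] for category in CATEGORIES)


# Hypothetical ratings for illustration only (not data from the review).
example_ratings = {
    "content": "2",
    "response process": "N",
    "internal structure": "1",
    "relation to other variables": "0",
    "consequences": "N",
}

score = total_validity_score(example_ratings)
print(score)  # 3 + 0 + 2 + 1 + 0 = 6
```

Under this mapping, a questionnaire rated "2" in all five categories would reach a total of 15, whereas one rated "N" throughout would score 0 and one rated "0" throughout would score 5, which is the distinction the modification is intended to preserve.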

Results

Out of 5723 articles retrieved from databases, 1517 articles were duplicates and were removed. After the title/abstract screening of 4206 articles, 3723 articles were irrelevant studies and excluded. This resulted in 483 articles eligible for full-text screening. After the full-text screening, 359 articles adopted previously developed questionnaires and were excluded because they did not provide any data about the psychometric properties of the adopted questionnaire. In addition, 72 articles were excluded for other reasons (i.e., were not conducted in HPE, did not include undergraduate students, assessed a specific aspect of the LE only, such as assessed LE of a specific course in the curriculum, did not provide data about the questionnaire development/ validation). Moreover, reviewing the reference lists of the eligible articles identified an additional six articles. This resulted in 52 articles eligible for data extraction; 41 articles were the original articles for the development of the questionnaires, and 11 articles were adaptation studies that tested one or more of the psychometric measures of the questionnaire. Figure 1 illustrates the PRISMA flowchart of the article selection process.

Summary of the identified questionnaires

After the full-text screening, 41 questionnaires in the included articles were identified for data extraction. Table 2 provides a summary of the included questionnaires. The identified questionnaires in the included articles were divided into 3 categories, according to their development strategy. The first category included questionnaires developed based on a theoretical framework/theory, such as the Health Education Learning Environment Survey (HELES) (Rusticus et al., 2020), and the Manchester Clinical Placement Index (MCPI) (Dornan et al., 2012). The second category included adapted questionnaires, such as the Medical School Learning Environment Survey (MSLES) (Marshall, 1978) and the Dental Student Learning Environment Survey (DSLES) (Henzi et al., 2005). The third category included questionnaires developed through Delphi processes/ expert opinions, such as the Dundee Ready Educational Environment Measure (DREEM) questionnaire (Roff et al., 1997).

The majority of the identified questionnaires in the included articles were originally developed for one profession and, hence, were suitable to examine aspects specific to the context of that profession. For example, a total of 22 questionnaires out of the 41 identified questionnaires were specific to the medical profession. DREEM, which was originally developed for the medical profession, was the most adopted questionnaire across the medical profession and other HPs (Fig. 2), and it was translated into more than 5 languages (Al-Hazimi et al., 2004a, 2004b; Andalib et al., 2015; Demiroren et al., 2008; Dimoliatis et al., 2010; Miles et al., 2012). Fourteen questionnaires were developed specifically for the nursing profession, with CLES + T being the most widely adopted and translated into multiple languages (Johansson et al., 2010; Tomietto et al., 2012; Vizcaya-Moreno et al., 2015). For the dentistry profession, only two questionnaires were identified, DECLEI and DSLES, of which DSLES was adopted more often than DECLEI in subsequent dentistry studies. Only a few questionnaires were originally developed to evaluate the perception of multidisciplinary students of their LE. However, some questionnaires that were originally developed for a specific profession were utilized to evaluate the perception of students of their LE in other professions. For example, although DREEM was initially administered among medical students, it was also pilot-tested in the nursing profession in the original development study (Roff et al., 1997) and was then adopted in the dental (Ali et al., 2012), health-sciences (Sunkad et al., 2015), and nursing professions (Abusaad et al., 2015).
Regarding the setting for which the identified questionnaire in the included articles was developed, some questionnaires were developed to evaluate the perception of students of their LE in the clinical setting (e.g., the Clinical Learning Environment Inventory (CLEI) and MCPI), while others were used to evaluate the perception of students of their LE in both non-clinical and clinical settings (e.g., DREEM). Table 4 summarizes the identified questionnaires based on the settings of the LE and the profession.

The psychometric properties of the identified questionnaires

Evaluation of the psychometric properties of the questionnaires in the included articles was conducted using the five standard categories of APERA standards of validity evidence: content validity, response process, internal structure, relation to other variables, and consequences (Eignor, 2013), and using Beckman et al.'s (2005) interpretation of these standard categories (Beckman et al., 2005). Content validity and internal structure categories were reported and assessed in most of the questionnaires, while the response process and relation to other variables measures were reported and assessed in a smaller number of studies. Only one questionnaire, the Preclinical Learning Climate Scale (PLCS), provided data on the five psychometric measures (Yılmaz et al., 2016). Furthermore, the PLCS had the highest Beckman et al. (2005) total validity score among all questionnaires, followed by the Johns Hopkins Learning Environment Scale (JHLES). Evaluating the validity evidence of the questionnaires in the included articles based on the profession for which they were originally developed demonstrated that the Clinical Learning Environment Quick Survey (CLEQS) (Simpson et al., 2021), followed by DREEM (Roff et al., 1997), scored the highest among the questionnaires developed for the medical profession. In contrast, the Clinical Learning Environment, Supervision and Nurse Teacher (CLES + T) Scale (Saarikoski et al., 2008), the Clinical Learning Environment and Supervision (CLES) instrument (Saarikoski & Leino-Kilpi, 2002), and the Clinical Learning Environment Diagnostic Inventory (CLEDI) (Hosoda, 2006) scored the highest among questionnaires developed for the nursing profession, followed by the Clinical Learning Environment Comparison Survey (CLECS) (Leighton, 2015). Evaluating the validity evidence of the questionnaires developed for the dentistry profession indicated that DECLEI had a higher validity evidence score than DSLES.
Finally, the MSLES (Marshall, 1978), followed by the Healthcare Education Micro-Learning Environment Measure (HEMLEM) (Isba et al., 2020), scored the highest among questionnaires developed for multidisciplinary use. Table 3 provides a summary of the validity evidence of the identified questionnaires in the included articles.

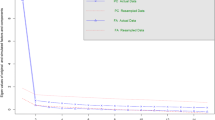

Figure 2 demonstrates the relationship between the validity evidence score of each questionnaire and the frequency of subsequent use (adoption) of the questionnaire. The adoption of the majority of the identified questionnaires in subsequent studies was limited, with DREEM, CLES + T, CLEI, CLES, JHLES, and MSLES being the most frequently adopted questionnaires. Notably, although PLCS had the highest validity evidence score among all identified questionnaires, it was not adopted in subsequent studies. While DREEM was the most frequently adopted questionnaire, it ranked ninth in the validity evidence score.

Framework use

Fewer than half of the identified questionnaires in the included articles (n = 15/41) were developed based on a theory or a theoretical framework, such as the JHLES, HELES, and CLEI questionnaires. The most commonly used theory in the development of the questionnaires was the experiential learning theory, which emphasizes learning through experience and reflection (Kolb, 1984). Additionally, Moos's framework, known for its focus on environmental and interpersonal factors influencing individuals, was the most commonly used theoretical framework (Moos, 1973). The theoretical frameworks and theories utilized are summarized in Table 2.

Discussion

This scoping review aims to identify questionnaires used to examine the perception of undergraduate healthcare professional students of their LE and to assess the validity evidence of those identified questionnaires. This review resulted in identifying original or adapted questionnaires used to assess the perception of undergraduate healthcare professional students of their LE and in providing an assessment of their validity evidence. This review shed light on the most frequently reported psychometric properties for developing and validating questionnaires that were used to assess the perception of healthcare professional students of their LE in HPEPs, as well as on the trends of adopting LE questionnaires across different HPEPs (Table 4).

The findings of this review suggested that DREEM was the most commonly used questionnaire for examining LE in the medical profession and across different professions, and was widely adopted in various countries and cultures worldwide (Dimoliatis et al., 2010; Soemantri et al., 2010). This finding aligns with the results of Colbert-Getz et al.’s (2014) systematic review (Colbert-Getz et al., 2014), which argued that DREEM, initially developed by international students in Dundee University Medical School, achieved widespread usage as these students implemented it in their respective institutions (Colbert-Getz et al., 2014). Moreover, Colbert-Getz et al. (2014) claimed that researchers usually choose DREEM because it is one of the oldest and most widely adopted questionnaires (Colbert-Getz et al., 2014). This prompts researchers to adopt DREEM to facilitate comparisons of their findings on students' perceptions of their learning environment with other institutions that have employed the same questionnaire before (Miles et al., 2012). It is worth noting that despite the length of the DREEM questionnaire (50 items), the questions are generally easy to comprehend, which may have facilitated its popularity and spread.

This review demonstrated that the majority of the questionnaires have limited validity evidence, where ‘content validity’ and ‘internal structure’ were the most reported validity evidence categories of APERA standards. Furthermore, the majority of the questionnaires did not have a thorough assessment of the ‘response process’, ‘relation to other variables’, and the ‘consequences’ categories. This finding is consistent with Colbert-Getz et al.’s (2014) systematic review of studies in medical education, which utilized APERA standards and Beckman et al.'s (2005) interpretation (Colbert-Getz et al., 2014). Moreover, this finding is in line with Mansutti et al.’s (2017) systematic review of studies in nursing education, which utilized the consensus-based standards for the selection of health measurement instruments (COSMIN) tool (Mansutti et al., 2017) to evaluate the methodological quality of the psychometric properties of instruments developed to assess the clinical LE in nursing education (Mansutti et al., 2017). The COSMIN tool facilitates a more comprehensive assessment of both psychometric properties and research methods, organized into distinct dimensions labeled in alignment with the property being evaluated. This includes internal consistency, reliability, measurement error, content validity (including face validity), structural validity, hypotheses testing (including convergent validity), criterion validity, cross-cultural validity, responsiveness, interpretability, and generalisability of the findings. Mansutti et al.’s (2017) systematic review revealed that concept and construct validity were inadequately addressed and infrequently evaluated by the nursing student population, whereas some properties, such as reliability, measurement error, and criterion validity, were rarely considered (Mansutti et al., 2017). Limited validity evidence of the developed questionnaires continues to be a challenge in the health literature (Bai et al., 2008; Hirani et al., 2013).
This challenge was explained by Boateng et al. (2018) who argued that the process of instrument development and validation is complex and requires knowledge and skills in sophisticated statistical analysis methods (Boateng et al., 2018). However, several graduate programs in behavioral and health sciences do not adequately account for those statistical analysis methods in training and educating their students (Boateng et al., 2018).

In this review, PLCS demonstrated the highest validity evidence on the five APERA standards categories among all questionnaires (Yılmaz et al., 2016). PLCS was developed in 2016, and hence it was not identified in the systematic reviews of Soemantri et al. (2010) and Colbert-Getz et al. (2014). Soemantri et al.’s (2010) systematic review utilized three types of validity assessment (i.e., content, criterion-related, and construct) and argued that DREEM is the best questionnaire for examining the perception of undergraduate medical students of their LE. However, Soemantri et al.’s approach to evaluating content, criterion-related, and construct validity did not include essential psychometric properties, such as response process, internal structure, and consequences. Consequently, DREEM received a higher score in Soemantri et al.’s review than in the current review, where a relatively lower validity evidence score was assigned in adherence to the APERA standards and Beckman et al.’s (2005) interpretation. In Colbert-Getz et al.’s (2014) systematic review, Pololi and Price's (2000) questionnaire received the highest validity evidence score using the APERA standards; however, Colbert-Getz et al. did not take the ‘consequences’ category into consideration. Thus, Pololi and Price's (2000) questionnaire obtained a lower validity evidence score in the current review, which applied the APERA standards and Beckman et al.’s (2005) interpretation.
CLES + T received the highest validity evidence among questionnaires developed for the nursing profession in the current review as well as in Mansutti et al.’s (2017) systematic review, which utilized the COSMIN tool to evaluate the research methods and psychometric properties of instruments designed to assess the clinical LE in nursing education. The consistency between Mansutti et al.’s (2017) systematic review and the current review suggests that the APERA standards and Beckman et al.’s (2005) interpretation may be applicable to the psychometric assessment of questionnaires in HPE.

The utilization of theory or theoretical framework in questionnaire development ensures that the research findings are theory-driven, which enhances their robustness and rigor (Schönrock-Adema et al., 2012; Stewart & Susan Klein, 2016). This review revealed that less than fifty percent of the included articles (17/41) utilized a theory or a theoretical framework in the questionnaire development process. This finding was supported by other studies that indicated that the development of questionnaires for examining the perception of healthcare professional students of their LE usually lacks solid grounding in theoretical frameworks. Schönrock-Adema et al. (2012) attributed this to the lack of consensus about the most suitable framework to assess the LE. Remarkably, neither DREEM, which is the most commonly used questionnaire, nor PLCS, which had the highest validity evidence, was developed on a theoretical basis. The findings of this scoping review suggest that the two most frequently utilized theories and theoretical frameworks in the development of the questionnaires in the included articles were Kolb’s (1984) experiential learning theory and Moos’s (1973, 1991) learning environment framework, respectively. According to Kolb’s (1984) experiential learning theory, learning and knowledge development take place through engagement with the real-world environment (Abdulwahed, 2010), which further highlights that the LE plays an indispensable role in the learning process and significantly influences the learning experience, performance, and learning outcome of students (Kolb, 1984). Moos’s (1973, 1991) learning environment framework provided an integrated system approach, which holistically analyzes the LE and its effect on learning experiences and outcomes.
Moos’s framework is composed of three elements: ‘personal development’, ‘relationships’, and ‘system maintenance and change’ (Insel & Moos, 1974; Moos, 1973, 1991). The ‘personal development’ element comprises the opportunities within an environment and the capacity for personal growth and self-esteem improvement. The ‘relationships’ element involves the extent to which individuals deal with and support each other in an environment. The ‘system maintenance and change’ element represents the environmental physical dimension, in terms of clarity and transparency to change within an institutional structural setting (Insel & Moos, 1974; Moos, 1973, 1991). In the current review, Kolb’s (1984) experiential learning theory and Moos’s (1973, 1991) learning environment framework were utilized for developing questionnaires that were intended to be used in clinical, experiential learning settings, such as HEMLEM (Isba et al., 2020) and CLEI (Chan, 2001; Chan, 2003), as well as questionnaires that were intended to be used in both clinical and academic settings, such as JHLES (Shochet et al., 2015) and HELES (Rusticus et al., 2020).

Limitations and strengths

This is the first review that provides a comprehensive and critical assessment of questionnaires that are used to assess the perception of undergraduate students of their LE in HPEPs, with no restriction on profession or setting. Moreover, this review is unique in indicating whether a theory or a theoretical framework was utilized in the development of each questionnaire.

Nevertheless, a few limitations should be recognized when interpreting the findings of this review. Although the use of the APERA standards of validity evidence provided a valuable assessment of the quality of the included questionnaires, other reviews have used more detailed and comprehensive criteria (Mansutti et al., 2017), such as the COSMIN tool. Using the COSMIN tool in this review was not practical because of its cognitively demanding nature. Another point that should be taken into consideration when interpreting the findings of this review is the total validity score. Adopting the APERA lens for validity assessment and Beckman et al. (2005) interpretation assumes equal weight for all five evidence sources and disregards potential differences in their significance, which depends on the specific use context of the assessment. A more flexible and nuanced approach to validity arguments is provided by Kane's framework, which enables prioritization according to the assessment's purpose and inferences as well as a personalized focus on pertinent data (Cook et al., 2015; Kane, 2006, 2013). Kane's framework would be an invaluable resource for educators seeking a more thorough and context-sensitive knowledge of assessment validity. Again, the cognitively demanding nature of Kane's framework rendered its application impractical for this review. An additional limitation is that this review did not report the interrater reliability for scoring the sources of validity evidence for each questionnaire. This could have been beneficial in providing valuable insights into the consistency of judgments among reviewers and understanding the potential limitations of using the adopted methodology. Nevertheless, an attempt was made during the data extraction stage to enhance the interrater reliability of the validity evidence assessment of the questionnaires by piloting the data extraction sheet on a sample of the included articles. 
It is worth mentioning, however, that challenges in consistently measuring and evaluating evidence for specific APERA categories of validity evidence may result from the scarcity of reported evidence related to those categories (Beckman et al., 2005). Furthermore, the search in this review was limited to four databases (PubMed, ERIC, ProQuest, and Cochrane), possibly leading to the exclusion of significant articles exclusive to other databases. Nonetheless, a comprehensive review of the reference lists in the included articles was conducted to identify relevant studies. Finally, restricting the search to English-language publications may have excluded valuable research articles published in other languages, which could affect the generalizability and comprehensiveness of the findings of this scoping review.
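The equal-weighting assumption behind the total validity score noted above can be illustrated with a minimal sketch. The category names follow the five sources of validity evidence used in this review; the function name, the 0–2 rating scale, the example ratings, and the purpose-driven weights are all hypothetical and are not taken from any included questionnaire or from the scoring procedure of this review.

```python
# Illustrative only: category names follow the five sources of validity
# evidence discussed in this review; all scores and weights are hypothetical.
CATEGORIES = (
    "content",
    "internal_structure",
    "response_process",
    "relation_to_other_variables",
    "consequences",
)


def total_validity_score(scores, weights=None):
    """Return the weighted sum of category scores.

    With weights=None every category counts equally (weight 1.0),
    which mirrors an equal-weight total score.
    """
    if weights is None:
        weights = {category: 1.0 for category in CATEGORIES}
    return sum(weights[category] * scores[category] for category in CATEGORIES)


# Hypothetical ratings for a single questionnaire (assumed 0-2 per category).
example_scores = {
    "content": 2,
    "internal_structure": 2,
    "response_process": 0,
    "relation_to_other_variables": 1,
    "consequences": 0,
}

# Hypothetical weights that emphasise the less frequently reported categories,
# as a purpose-driven validity argument might.
purpose_weights = {
    "content": 1.0,
    "internal_structure": 1.0,
    "response_process": 2.0,
    "relation_to_other_variables": 2.0,
    "consequences": 2.0,
}

equal_total = total_validity_score(example_scores)
weighted_total = total_validity_score(example_scores, purpose_weights)
print(equal_total, weighted_total)
```

Under these assumed values, the same ratings yield different totals depending on the weights, which is why a ranking based on an equal-weight total may not hold for every intended use of a questionnaire.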

Conclusions

This scoping review provided an overview of the available questionnaires in the HPE literature to assess the perception of undergraduate students of their LE. The review also provided a summary of the validity evidence and theoretical basis of the identified questionnaires. A total of 41 questionnaires were identified in the included articles for different HPEPs. The results suggested that DREEM, CLES + T, and CLEI were the most commonly used questionnaires, while PLCS followed by JHLES had the highest total validity evidence score, using the APERA standards of validity evidence and Beckman et al.’s (2005) interpretation of these standards' categories. Moreover, this review demonstrated that only a few questionnaires in the included articles were designed using a theoretical foundation. Furthermore, the findings of this research suggested that the newly developed questionnaires that are theoretically driven had well-established validity evidence. Therefore, a culture of developing and validating questionnaires according to high standards and best practices needs to be adopted and reinforced by healthcare professional educators to ensure the rigor of studies conducted to improve the quality of the LE. Furthermore, the investigators of the current review strongly advocate for a shift from adopting questionnaires based on how widely they are used to adopting them based on validity and reliability evidence, and for contributions to establishing the psychometric properties of newly developed questionnaires. Finally, this review did not reveal any questionnaire that was specifically developed to assess the perception of students of their LE in some of the major HPEPs such as pharmacy or biomedical sciences.
Consequently, healthcare professional educators and scholars are encouraged to examine the common aspects of the LE within their respective health professions, and ultimately plan to investigate those common aspects across various HPEPs in order to understand how they influence the perception of students of their learning experiences and outcome.

References

Abdulwahed, M. (2010). Towards enhancing laboratory education by the development and evaluation of the "TriLab": a triple access mode (virtual, hands-on and remote) laboratory.

Abusaad, F. E. S., Mohamed, H. E. S., & El-Gilany, A. H. (2015). Nursing students’ perceptions of the educational learning environment in pediatric and maternity courses using DREEM questionnaire. Journal of Education and Practice, 6(29), 26–32.

Ahmad, M. S., Bhayat, A., Fadel, H. T., & Mahrous, M. S. (2015). Comparing dental students’ perceptions of their educational environment in Northwestern Saudi Arabia. Saudi Medical Journal, 36(4), 477–483. https://doi.org/10.15537/smj.2015.4.10754

Ahmed, Y., Taha, M. H., Al-Neel, S., & Gaffar, A. M. (2018). Students’ perception of the learning environment and its relation to their study year and performance in Sudan. International Journal of Medical Education, 9, 145.

Al Ayed, I., & Sheik, S. (2008). Assessment of the educational environment at the College of Medicine of King Saud University, Riyadh. EMHJ-Eastern Mediterranean Health Journal, 14(4), 953–959.

AlHaqwi, A. I., Kuntze, J., & van der Molen, H. T. (2014). Development of the clinical learning evaluation questionnaire for undergraduate clinical education: factor structure, validity, and reliability study. BMC Medical Education, 14(1), 1–8.

Al-Hazimi, A., Al-Hyiani, A., & Roff, S. (2004a). Perceptions of the educational environment of the medical school in King Abdul Aziz University, Saudi Arabia. Medical Teacher, 26(6), 570–573. https://doi.org/10.1080/01421590410001711625

Al-Hazimi, A., Zaini, R., Al-Hyiani, A., Hassan, N., Gunaid, A., Ponnamperuma, G., Karunathilake, I., Roff, S., McAleer, S., & Davis, M. (2004b). Educational environment in traditional and innovative medical schools: A study in four undergraduate medical schools. Education for Health, 17(2), 192–203.

Ali, K., McHarg, J., Kay, E., Moles, D., Tredwin, C., Coombes, L., & Heffernan, E. (2012). Academic environment in a newly established dental school with an enquiry-based curriculum: perceptions of students from the inaugural cohorts. European Journal of Dental Education, 16(2), 102–109.

Andalib, M., Malekzadeh, M., Zahra, A., Daryabeigi, M., Yaghmaei, B., Ashrafi, M., Rabbani, A., & Rezaei, N. (2015). Evaluation of educational environment for medical students of a tertiary pediatric hospital in Tehran, using DREEM questionnaire. Iranian Journal of Pediatrics, 25, e2362. https://doi.org/10.5812/ijp.2362

Bai, Y., Peng, C.-Y.J., & Fly, A. D. (2008). Validation of a short questionnaire to assess mothers’ perception of workplace breastfeeding support. Journal of the American Dietetic Association, 108(7), 1221–1225.

Ballouk, R., Mansour, V., Dalziel, B., & Hegazi, I. (2022). The development and validation of a questionnaire to explore medical students’ learning in a blended learning environment. BMC Medical Education, 22(1), 1–9.

Bandura, A., & Walters, R. H. (1977). Social learning theory. Englewood Cliffs: Prentice Hall.

Beckman, T. J., Cook, D. A., & Mandrekar, J. N. (2005). What is the validity evidence for assessments of clinical teaching? Journal of General Internal Medicine, 20(12), 1159–1164.

Billett, S. (2002). Workplace pedagogic practices: Co–participation and learning. British Journal of Educational Studies, 50(4), 457–481.

Bloom, B. S. (1966). Stability and change in human characteristics: Implications for school reorganization. Educational Administration Quarterly, 2(1), 35–49.

Boateng, G. O., Neilands, T. B., Frongillo, E. A., Melgar-Quiñonez, H. R., & Young, S. L. (2018). Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health, 6, 149.

Britt, L. L., Ball, T. C., Whitfield, T. S., & Woo, C. W. (2022). Students’ perception of the classroom environment: a comparison between innovative and traditional classrooms. Journal of the Scholarship of Teaching and Learning, 22(1), 31–17.

Brown, T., Williams, B., & Lynch, M. (2011). The Australian DREEM: Evaluating student perceptions of academic learning environments within eight health science courses. International Journal of Medical Education, 2, 94.

Carr, S. E., Miller, S. J., Siddiqui, Z. S., & Jonas-Dwyer, D. R. (2015). Enhancing Capabilities in health professions education. International Journal of Medical Education, 6, 161–165. https://doi.org/10.5116/ijme.5641.060c

Cerón, M. C., Garbarini, A. I., & Parro, J. F. (2016). Comparison of the perception of the educational atmosphere by nursing students in a Chilean university. Nurse Education Today, 36, 452–456.

Chan, D. S. (2001). Combining qualitative and quantitative methods in assessing hospital learning environments. International Journal of Nursing Studies, 38(4), 447–459.

Chan, D. S. (2003). Validation of the clinical learning environment inventory. Western Journal of Nursing Research, 25(5), 519–532.

Chuan, O. L., & Barnett, T. (2012). Student, tutor and staff nurse perceptions of the clinical learning environment. Nurse Education in Practice, 12(4), 192–197. https://doi.org/10.1016/j.nepr.2012.01.003

Closs, L., Mahat, M., & Imms, W. (2022). Learning environments’ influence on students’ learning experience in an Australian faculty of business and economics. Learning Environments Research, 25(1), 271–285. https://doi.org/10.1007/s10984-021-09361-2

Colbert-Getz, J. M., Kim, S., Goode, V. H., Shochet, R. B., & Wright, S. M. (2014). Assessing medical students’ and residents’ perceptions of the learning environment: Exploring validity evidence for the interpretation of scores from existing tools. Academic Medicine, 89(12), 1687–1693.

Collins, A., & Kapur, M. (2006). Cognitive apprenticeship (Vol. 291). na.

Cook, D. A., Brydges, R., Ginsburg, S., & Hatala, R. (2015). A contemporary approach to validity arguments: A practical guide to Kane’s framework. Medical Education, 49(6), 560–575.

Council, E. (1998). International standards in medical education: Assessment and accreditation of medical schools’–educational programmes. A WFME Position Paper. Medical Education, 32(5), 549–558.

Damiano, R. F., Furtado, A. O., da Silva, B. N., Ezequiel, O. D. S., Lucchetti, A. L., DiLalla, L. F., & Lucchetti, G. (2020). Measuring students’ perceptions of the medical school learning environment: translation, transcultural adaptation, and validation of 2 instruments to the Brazilian Portuguese language. Journal of Medical Education and Curricular Development, 7, 2382120520902186.

Demiroren, M., Palaoglu, O., Kemahli, S., Ozyurda, F., & Ayhan, I. H. (2008). Perceptions of students in different phases of medical education of educational environment: Ankara university faculty of medicine. Medical Education Online, 13(1), 4477. https://doi.org/10.3402/meo.v13i.4477

Dimoliatis, I. D., Vasilaki, E., Anastassopoulos, P., Ioannidis, J. P., & Roff, S. (2010). Validation of the Greek translation of the Dundee Ready Education Environment Measure (DREEM). Education for Health (Abingdon, England), 23(1), 348.

Dornan, T., Muijtjens, A., Graham, J., Scherpbier, A., & Boshuizen, H. (2012). Manchester clinical placement index (MCPI) conditions for medical students’ learning in hospital and community placements. Advances in Health Sciences Education, 17, 703–716.

D’Souza, M. S., Karkada, S. N., Parahoo, K., & Venkatesaperumal, R. (2015). Perception of and satisfaction with the clinical learning environment among nursing students. Nurse Education Today, 35(6), 833–840. https://doi.org/10.1016/j.nedt.2015.02.005

Dunn, S. V. (1995). The development of a clinical learning environment scale. Journal of Advanced Nursing, 22(6), 1166–1173.

Dunne, F., McAleer, S., & Roff, S. (2006). Assessment of the undergraduate medical education environment in a large UK medical school. Health Education Journal, 65(2), 149–158.

Dweck, C. S. (2006). Mindset: The new psychology of success. Random house.

Education, L. (2009). Liaison committee on medical education (LCME) standards on diversity. In: American Association of Medical Colleges, Washington, DC.

Eignor, D. R. (2013). The standards for educational and psychological testing. In K. F. Geisinger, B. A. Bracken, J. F. Carlson, J.-I.C. Hansen, N. R. Kuncel, S. P. Reise, & M. C. Rodriguez (Eds.), APA handbook of testing and assessment in psychology (pp. 245–250). Test theory and testing and assessment in industrial and organizational psychology: American Psychological Association.

Farrell, G. A., & Coombes, L. (1994). Student nurse appraisal of placement (SNAP): an attempt to provide objective measures of the learning environment based on qualitative and quantitative evaluations. Nurse Education Today, 14(4), 331–336. https://doi.org/10.1016/0260-6917(94)90146-5

Fego, M. W., Olani, A., & Tesfaye, T. (2022). Nursing students’ perception towards educational environment in governmental Universities of Southwest Ethiopia: A qualitative study. PLoS ONE, 17(3), e0263169.

Fluit, C. R., Bolhuis, S., Grol, R., Laan, R., & Wensing, M. (2010). Assessing the quality of clinical teachers: A systematic review of content and quality of questionnaires for assessing clinical teachers. Journal of General Internal Medicine, 25(12), 1337–1345. https://doi.org/10.1007/s11606-010-1458-y

Fouad, S., El Araby, S., Abed, R. A. R. O., Hefny, M., & Fouad, M. (2020). Using item response theory (IRT) to assess psychometric properties of undergraduate clinical education environment measure (UCEEM) among medical students at the faculty of medicine, Suez Canal University. Education in Medicine Journal, 12(1), 15–27. https://doi.org/10.21315/eimj2020.12.1.3

Genn, J. M. (2001). AMEE medical education guide no. 23 (Part 2): Curriculum, environment, climate, quality and change in medical education: a unifying perspective. Medical Teacher, 23(5), 445–454. https://doi.org/10.1080/01421590120075661

Gruppen, L. D., Irby, D. M., Durning, S. J., & Maggio, L. A. (2019). Conceptualizing learning environments in the health professions. Academic Medicine, 94(7), 969–974.

Gu, Y. H., Xiong, L., Bai, J. B., Hu, J., & Tan, X. D. (2018). Chinese version of the clinical learning environment comparison survey: Assessment of reliability and validity. Nurse Education Today, 71, 121–128. https://doi.org/10.1016/j.nedt.2018.09.026

Gustafsson, M., Blomberg, K., & Holmefur, M. (2015). Test-retest reliability of the clinical learning environment, supervision and nurse teacher (CLES+T) scale. Nurse Education in Practice, 15(4), 253–257.

Henzi, D., Davis, E., Jasinevicius, R., Hendricson, W., Cintron, L., & Isaacs, M. (2005). Appraisal of the dental school learning environment: The students’ view. Journal of Dental Education, 69(10), 1137–1147.

Hirani, S. A. A., Karmaliani, R., Christie, T., Parpio, Y., & Rafique, G. (2013). Perceived breastfeeding support assessment tool (PBSAT): Development and testing of psychometric properties with Pakistani urban working mothers. Midwifery, 29(6), 599–607.

Hoidn, S. (2016). Student-centered learning environments in higher education classrooms. Springer.

Hooven, K. (2014). Evaluation of instruments developed to measure the clinical learning environment: An integrative review. Nurse Educator, 39(6), 316–320. https://doi.org/10.1097/nne.0000000000000076

Hosoda, Y. J. (2006). Development and testing of a clinical learning environment diagnostic inventory for baccalaureate nursing students. Journal of Advanced Nursing, 56(5), 480–490.

Hutchins, E. B. (1961). The 1960 medical school graduate: His perception of his faculty, peers, and environment. Journal of Medical Education, 36, 322–329.

Insel, P. M., & Moos, R. H. (1974). Psychological environments: Expanding the scope of human ecology. American Psychologist, 29(3), 179.

Irby, D. M., O’Brien, B. C., Stenfors, T., & Palmgren, P. J. (2021). Selecting instruments for measuring the clinical learning environment of medical education: A 4-domain framework. Academic Medicine, 96(2), 218–225. https://doi.org/10.1097/acm.0000000000003551

Isba, R., Rousseva, C., Woolf, K., & Byrne-Davis, L. (2020). Development of a brief learning environment measure for use in healthcare professions education: the Healthcare Education Micro Learning Environment Measure (HEMLEM). BMC Medical Education, 20(1), 1–9.

Jaffery, A. H., & Kishwar, M. (2019). Student’s perception of educational environment and their academic performance; are they related? Pakistan Armed Forces Medical Journal, 6, 1267.

Jeffries, P. (2020). Simulation in nursing education: From conceptualization to evaluation. Lippincott Williams & Wilkins.

Jessee, M. A., Russell, R. G., Kennedy, B. B., Dietrich, M. S., & Schorn, M. N. (2020). Development and Pilot Testing of a Multidimensional Learning Environment Survey. Nurse Educator, 45(5), E50–E54.

Jeyashree, K., Shewade, H. D., & Kathirvel, S. (2018). Development and psychometric testing of an abridged version of Dundee ready educational environment measure (DREEM). Environmental Health and Preventive Medicine, 23(1), 13. https://doi.org/10.1186/s12199-018-0702-7

Jiboyewa, D., & Umar, M. A. (2015). Academic achievement at the university of Maiduguri: A survey of teaching-learning environment. Journal of Education and Practice, 6(36), 146–157.

Johansson, U. B., Kaila, P., Ahlner-Elmqvist, M., Leksell, J., Isoaho, H., & Saarikoski, M. (2010). Clinical learning environment, supervision and nurse teacher evaluation scale: psychometric evaluation of the Swedish version. Journal of Advanced Nursing, 66(9), 2085–2093.

Johnson, H. C. (1978). Minority and nonminority medical students’ perceptions of the medical school environment. Academic Medicine, 53(2), 146–157.

Junaid Sarfraz, K., Tabasum, S., Yousafzai, U. K., & Fatima, M. (2011). DREEM on: validation of the Dundee ready education environment measure in Pakistan. JPMA-Journal of the Pakistan Medical Association, 61(9), 885.

Kane, M. T. (2006). Validation. Educational Measurement, 4(2), 17–64.

Kane, M. T. (2013). Validating the interpretations and uses of test scores. Journal of Educational Measurement, 50(1), 1–73.

Kishore, K., Jaswal, V., Kulkarni, V., & De, D. (2021). Practical guidelines to develop and evaluate a questionnaire. Indian Dermatology Online Journal, 12(2), 266–275. https://doi.org/10.4103/idoj.IDOJ_674_20

Kolb, D. A. (1984). Experience as the source of learning and development. Prentice Hall.

Kossioni, A., Lyrakos, G., Ntinalexi, I., Varela, R., & Economu, I. (2014). The development and validation of a questionnaire to measure the clinical learning environment for undergraduate dental students (DECLEI). European Journal of Dental Education, 18(2), 71–79.

Krupat, E., Borges, N. J., Brower, R. D., Haidet, P. M., Schroth, W. S., Fleenor, T. J., & Uijtdehaage, S. (2017). The Educational Climate Inventory: measuring students’ perceptions of the preclerkship and clerkship settings. Academic Medicine, 92(12), 1757–1764.

Leighton, K. (2015). Development of the Clinical learning environment comparison survey. Clinical Simulation in Nursing, 11(1), 44–51. https://doi.org/10.1016/j.ecns.2014.11.002

Leighton, K., Kardong-Edgren, S., Schneidereith, T., Foisy-Doll, C., & Wuestney, K. A. (2021). Meeting undergraduate nursing students’ clinical needs: A comparison of traditional clinical, face-to-face simulation, and screen-based simulation learning environments. Nurse Educator, 46(6), 349–354. https://doi.org/10.1097/nne.0000000000001064

Lizzio, A., Wilson, K., & Simons, R. (2002). University students’ perceptions of the learning environment and academic outcomes: Implications for theory and practice. Studies in Higher Education, 27(1), 27–52.

Lokuhetty, M. D., Warnakulasuriya, S. P., Perera, R. I., De Silva, H. T., & Wijesinghe, H. D. (2010). Students’ perception of the educational environment in a Medical Faculty with an innovative curriculum in Sri Lanka.

Mansutti, I., Saiani, L., Grassetti, L., & Palese, A. (2017). Instruments evaluating the quality of the clinical learning environment in nursing education: A systematic review of psychometric properties. International Journal of Nursing Studies, 68, 60–72. https://doi.org/10.1016/j.ijnurstu.2017.01.001

Marshall, R. E. (1978). Measuring the medical school learning environment. Academic Medicine, 53(2), 98–104.

Maudsley, R. F. (2001). Role models and the learning environment: Essential elements in effective medical education. Academic Medicine, 76(5), 432–434. https://doi.org/10.1097/00001888-200105000-00011

Mikkonen, K., Elo, S., Miettunen, J., Saarikoski, M., & Kääriäinen, M. (2017). Development and testing of the CALDs and CLES+T scales for international nursing students’ clinical learning environments. Journal of Advanced Nursing, 73(8), 1997–2011.

Miles, S., Swift, L., & Leinster, S. J. (2012). The Dundee ready education environment measure (DREEM): A review of its adoption and use. Medical Teacher, 34(9), e620–e634. https://doi.org/10.3109/0142159X.2012.668625

Moore-West, M., Harrington, D., Mennin, S., & Kaufman, A. (1986). Distress and attitudes toward the learning environment: Effects of a curriculum innovation. Research in medical education : Proceedings of the … annual Conference. Conference on Research in Medical Education, 25, 293–300.

Moos, R. H. (1973). Conceptualizations of human environments. American Psychologist, 28(8), 652.

Moos, R. H. (1991). Connections between school, work, and family settings. In B. J. Fraser & H. J. Walberg (Eds.), Educational environments: Evaluation, antecedents and consequences (pp. 29–53). Pergamon Press.

Newton, J. M., Jolly, B. C., Ockerby, C. M., & Cross, W. M. (2010). Clinical learning environment inventory: factor analysis. Journal of Advanced Nursing, 66(6), 1371–1381.

Nosair, E., Mirghani, Z., & Mostafa, R. M. (2015). Measuring students’ perceptions of educational environment in the PBL program of Sharjah Medical College. Journal of Medical Education and Curricular Development, 2, S29926.

Orton, H. D. (1979). Ward learning climate and student nurse response. Sheffield Hallam University (United Kingdom).

Oyira, E. J., Obot, G., & Inifieyanakuma, O. (2016). Academic dimension of classroom learning environment and students’ nurses attitude toward schooling. Global Journal of Pure and Applied Sciences, 22(2), 255–261.

Parry, J., Mathers, J., Al-Fares, A., Mohammad, M., Nandakumar, M., & Tsivos, D. (2002). Hostile teaching hospitals and friendly district general hospitals: Final year students’ views on clinical attachment locations. Medical Education, 36(12), 1131–1141. https://doi.org/10.1046/j.1365-2923.2002.01374.x

Pimparyon, S. M. C., Pemba, S., Roff, S., & P. (2000). Educational environment, student approaches to learning and academic achievement in a Thai nursing school. Medical Teacher, 22(4), 359–364.

Pololi, L. H., Evans, A. T., Nickell, L., Reboli, A. C., Coplit, L. D., Stuber, M. L., Vasiliou, V., Civian, J. T., & Brennan, R. T. (2017). Assessing the learning environment for medical students: an evaluation of a novel survey instrument in four medical schools. Academic Psychiatry, 41(3), 354–359.

Pololi, L., & Price, J. (2000). Validation and use of an instrument to measure the learning environment as perceived by medical students. Teaching & Learning in Medicine, 12(4), 201–207.

Rawas, H., & Yasmeen, N. (2019). Perception of nursing students about their educational environment in College of Nursing at King Saud Bin Abdulaziz University for Health Sciences, Saudi Arabia. Medical Teacher, 41(11), 1307–1314.

Roff, S., & McAleer, S. (2001). What is educational climate? Medical Teacher, 23(4), 333–334.

Roff, S., McAleer, S., Harden, R. M., Al-Qahtani, M., Ahmed, A. U., Deza, H., Groenen, G., & Primparyon, P. (1997). Development and validation of the Dundee ready education environment measure (DREEM). Medical Teacher, 19(4), 295–299. https://doi.org/10.3109/01421599709034208

Rosenbaum, M. E., Schwabbauer, M., Kreiter, C., & Ferguson, K. J. (2007). Medical students’ perceptions of emerging learning communities at one medical school. Academic Medicine, 82(5), 508–515. https://doi.org/10.1097/ACM.0b013e31803eae29

Rothman, A. I., & Ayoade, F. (1970). The development of a learning environment: A questionnaire for use in curriculum evaluation. Academic Medicine, 45(10), 754–759.

Rusticus, S. A., Wilson, D., Casiro, O., & Lovato, C. (2020). Evaluating the quality of health professions learning environments: Development and validation of the health education learning environment survey (HELES). Evaluation & the Health Professions, 43(3), 162–168.

Rusticus, S., Worthington, A., Wilson, D., & Joughin, K. (2014). The Medical School Learning Environment Survey: An examination of its factor structure and relationship to student performance and satisfaction. Learning Environments Research, 17(3), 423–435.

Saarikoski, M., Isoaho, H., Leino-Kilpi, H., & Warne, T. (2005). Validation of the clinical learning environment and supervision scale. International Journal of Nursing Education Scholarship. https://doi.org/10.2202/1548-923X.1081

Saarikoski, M., Isoaho, H., Warne, T., & Leino-Kilpi, H. (2008). The nurse teacher in clinical practice: Developing the new sub-dimension to the clinical learning environment and supervision (CLES) scale. International Journal of Nursing Studies, 45(8), 1233–1237.

Saarikoski, M., & Leino-Kilpi, H. (2002). The clinical learning environment and supervision by staff nurses: Developing the instrument. International Journal of Nursing Studies, 39(3), 259–267.

Salamonson, Y., Bourgeois, S., Everett, B., Weaver, R., Peters, K., & Jackson, D. (2011). Psychometric testing of the abbreviated Clinical Learning Environment Inventory (CLEI-19). Journal of Advanced Nursing, 67(12), 2668–2676. https://doi.org/10.1111/j.1365-2648.2011.05704.x

Sand-Jecklin, K. (2009). Assessing nursing student perceptions of the clinical learning environment: Refinement and testing of the SECEE inventory. Journal of Nursing Measurement, 17(3), 232–246. https://doi.org/10.1891/1061-3749.17.3.232

Schön, D. (1983). The reflective practitioner. 116(6), 1546–1552.

Schönrock-Adema, J., Bouwkamp-Timmer, T., van Hell, E. A., & Cohen-Schotanus, J. (2012). Key elements in assessing the educational environment: Where is the theory? Advances in Health Sciences Education: Theory and Practice, 17(5), 727–742. https://doi.org/10.1007/s10459-011-9346-8

Shochet, R. B., Colbert-Getz, J. M., Levine, R. B., & Wright, S. M. (2013). Gauging events that influence students’ perceptions of the medical school learning environment: Findings from one institution. Academic Medicine, 88(2), 246–252. https://doi.org/10.1097/ACM.0b013e31827bfa14

Shochet, R., Colbert-Getz, J., & Wright, S. (2015). The Johns Hopkins learning environment scale: Measuring medical students’ perceptions of the processes supporting professional formation. Academic Medicine. https://doi.org/10.1097/ACM.0000000000000706

Simpson, D., McDiarmid, M., La Fratta, T., Salvo, N., Bidwell, J. L., Moore, L., & Irby, D. M. (2021). Preliminary evidence supporting a novel 10-item clinical learning environment quick survey (CLEQS). Journal of Graduate Medical Education, 13(4), 553–560.

Soemantri, D., Herrera, C., & Riquelme, A. (2010). Measuring the educational environment in health professions studies: A systematic review. Medical Teacher, 32(12), 947–952.

Stewart, D., & Klein, S. (2016). The use of theory in research. International Journal of Clinical Pharmacy, 38(3), 615–619. https://doi.org/10.1007/s11096-015-0216-y

Strand, P., Sjöborg, K., Stalmeijer, R., Wichmann-Hansen, G., Jakobsson, U., & Edgren, G. (2013). Development and psychometric evaluation of the undergraduate clinical education environment measure (UCEEM). Medical Teacher, 35(12), 1014–1026. https://doi.org/10.3109/0142159x.2013.835389

Sunkad, M. A., Javali, S., Shivapur, Y., & Wantamutte, A. (2015). Health sciences students’ perception of the educational environment of KLE University, India as measured with the Dundee Ready Educational Environment Measure (DREEM). Journal of Educational Evaluation for Health Professions, 12(12), 37.

Tackett, S., Abu Bakar, H., Shilkofski, N., Coady, N., Rampal, K., & Wright, S. (2015). Profiling medical school learning environments in Malaysia: a validation study of the Johns Hopkins Learning Environment Scale. Journal of Educational Evaluation for Health Professions. https://doi.org/10.3352/jeehp.2015.12.39

Thibault, G. E. (2020). The future of health professions education: Emerging trends in the United States. FASEB BioAdvances, 2(12), 685–694. https://doi.org/10.1096/fba.2020-00061

Tomietto, M., Saiani, L., Cunico, L., Cicolini, G., Palese, A., Watson, P., & Saarikoski, M. (2012). Clinical learning environment and supervision plus nurse teacher (CLES+ T) scale: testing the psychometric characteristics of the Italian version. Giornale Italiano Di Medicina Del Lavoro Ed Ergonomia, 34(2), 72–80.

Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D. J., Horsley, T., Weeks, L., Hempel, S., Akl, E. A., Chang, C., McGowan, J., Stewart, L., Hartling, L., Aldcroft, A., Wilson, M. G., Garritty, C., & Straus, S. E. (2018). PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Annals of Internal Medicine, 169(7), 467–473. https://doi.org/10.7326/M18-0850

Vizcaya-Moreno, M. F., Pérez-Cañaveras, R. M., De Juan, J., & Saarikoski, M. (2015). Development and psychometric testing of the clinical learning environment, supervision and nurse teacher evaluation scale (CLES+ T): The Spanish version. International Journal of Nursing Studies, 52(1), 361–367.

Wach, F.-S., Karbach, J., Ruffing, S., Brünken, R., & Spinath, F. M. (2016). University students’ satisfaction with their academic studies: Personality and motivation matter. Frontiers in Psychology, 7, 55.

Wakeford, R. E. (1981). Students’ perception of the medical school learning environment: A pilot study into some differences and similarities between clinical schools in the UK. Assessment in Higher Education, 6(3), 206–217. https://doi.org/10.1080/0260293810060303

Wenger, E. (1999). Communities of practice: Learning, meaning, and identity. Cambridge University Press.

White, C. (2010). A socio-cultural approach to learning in the practice setting. Nurse Education Today, 30(8), 794–797. https://doi.org/10.1016/j.nedt.2010.02.002

Yılmaz, N., Velipasaoglu, S., Sahin, H., Başusta, N., Midik, O., Budakoglu, I., Mamakli, S., Tengiz, F., Durak, H., Ozan, S., & Coskun, O. (2016). A De Novo Tool to Measure the Preclinical Learning Climate of Medical Faculties in Turkey. Educational Sciences: Theory & Practice, 16, 1–14. https://doi.org/10.12738/estp.2016.1.0064

Yusoff, M. S. B. (2012). Stability of DREEM in a sample of medical students: A prospective study. Education Research International. https://doi.org/10.1155/2012/509638

Funding

Open Access funding provided by the Qatar National Library. This research work was supported by Qatar University Internal Grant [QUCG-CPH-22/23–565].

Author information

Contributions

Conceptualization: [Banan Mukhalalati]; Methodology: [Banan Mukhalalati, Ola Yakti, Sara Elshami]; Formal analysis and investigation: [Banan Mukhalalati, Ola Yakti, Sara Elshami]; Writing—original draft preparation: [Ola Yakti]; Writing—review and editing: [Banan Mukhalalati, Ola Yakti, Sara Elshami]; Funding acquisition: [Banan Mukhalalati]; Resources: [Banan Mukhalalati]; Supervision: [Banan Mukhalalati].

Ethics declarations

Competing interests

The authors declare no competing interests.

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Ethical approval

Not required for this review paper.

Consent to participate

Not required for this review paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mukhalalati, B., Yakti, O. & Elshami, S. A scoping review of the questionnaires used for the assessment of the perception of undergraduate students of the learning environment in healthcare professions education programs. Adv in Health Sci Educ (2024). https://doi.org/10.1007/s10459-024-10319-1

DOI: https://doi.org/10.1007/s10459-024-10319-1