Abstract

Empirical or semi-empirical design methodologies at the macroscopic (structural) scale can be supported and justified only by a fundamental understanding, through physical models, at the lower (microscopic) size scale. Today structural integrity (SI) is thought of as the optimisation of microstructure by controlling processing, coupled with intelligent manufacturing of the material, in order to maximise mechanical performance, to ensure reliability of the large-scale structure, and to avoid calamity and misfortune. SI analysis provides quantitative input to the formulation of an appropriately balanced response to the problem. This article demonstrates that at the heart of the matter are the mechanisms of crack nucleation and growth that affect the structural integrity of the material: microscopic cracking events that are usually too small to see with the naked eye and can be observed only by microscopy.

1 Background

In critical engineering conditions of variable stress state, fluctuating temperature and hostile environment, where the objective is to design large composite structures for longevity, durability and reliability from materials having structural integrity, the balance between empirical engineering design based on continuum and mathematical modelling (sometimes called “distilled empiricism”) and physical modelling (sometimes called “mechanism modelling” or simply “micro-mechanics”) shifts in favour of physical modelling. Whilst many ancient temples and cathedrals have stood the test of time, others collapsed prematurely and without warning, leaving the stonemason with head in hands. History is littered with structural disasters where the crucial failure event eluded the experimentalist. Yet despite vast collections of experimental data, information and compelling evidence, and an engineer’s intuition based on “feel” (experience coupled with intelligent observation, a phenomenology), the longstanding problem of structural failure is still not fully understood. Empirical “rules” simply do not have the power of prediction.

1.1 The Traditional Route of Engineering Design

Traditional empirical design formulations have not dealt well with these challenges. Successful prediction of the mechanical behaviour of a material and the design life of a structure requires detailed information on all possible failure mechanisms across the widest spectrum of size scale under all sorts of operational conditions. A test program covering all eventualities would be immense and unaffordable. Not only would the findings be complicated to sort out, because of the many different test and material variables and the complicated mechanisms of fracture and fatigue involved, but the question of their interaction would also have to be resolved. Bear in mind, too, that material characterization over one range of temperature cannot safely be extrapolated into another: a new material characterization would be needed for every composite lay-up, choice of fiber and matrix system, set of operating conditions, and environment.

Following the traditional route of engineering design is merely an attempt to ensure that a highly stressed critical component cannot possibly fail within its design lifetime. The exercise involves deriving the spectrum of loads experienced in service over the entire life of the structure and comparing it with materials test data (strength, fatigue and creep data, stress corrosion cracking rates, etc.), the phenomenology mentioned above. The next step is to determine the component’s dimensions so that it sustains the design loads and “guarantees” the design life within a margin of safety, allowing for some acceptable risk of disaster. In this way, we build up to testing larger and larger items until the complete structure undergoes that multi-million dollar test to destruction. Determining the ultimate strength or impact resistance of the structure experimentally does, however, have its benefits: it helps verify the analytical methods used to calculate the loads and deformations the structure will have to carry in service. In reality there are still many tests to complete, from elements to components to sub-structures, where internal stress fields are too intricate to evaluate by analysis [1].

Traditionally, then, practical design methods called for time-consuming, expensive test programs to establish damage tolerance certification of the large structure (Fig. 1). The consequences of changing the laminate design (fiber orientation, stacking sequence or ply thickness) or the material system “along the road” would be disastrous. A heavy price is paid for making mistakes at this stage in the design process: quite simply, the entire test matrix for material qualification has to be repeated. There are further complications: first, laminates are heterogeneous elastic bodies containing sites of stress singularity; and secondly, the mechanisms controlling damage initiation and propagation are non-linear. Those mechanisms are unlikely to be known in advance, and direct identification is the only sure way to establish them.

The Experimental Challenge [2] (Courtesy of Davies and Ankersen)

In contrast, for a metal undergoing fatigue, we know that cracks grow, and we can control life by monitoring the growth of the longest (that is, dominant) crack. The combined effect of mean and alternating stress can be quantified using Goodman diagrams. However, such diagrams are not feasible for a composite structure, and although composite structures can be said to be more resistant to fatigue than metal ones, this is strictly the case only under tension-tension loading. In most cases, in contrast to metals, when polymer matrix composites (PMC) are fatigued, damage takes the form of numerous micro-cracks, predominantly in the matrix or at the fiber-matrix interface and, most importantly, towards the end of life, a cascade of snapping fibers. Early in the life cycle the damage can be sustained, but over time it spreads through significant parts of the bulk material and structure. There is another complication: structure evolves with time, and ageing weakens it. Furthermore, when cracks form they in turn increase the rate of damage progression. There is positive feedback.
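For the metallic case, the Goodman relation behind such diagrams can be written as σ_a/σ_e + σ_m/σ_u = 1, where σ_a is the allowable alternating stress amplitude, σ_m the mean stress, σ_e the fully reversed endurance limit and σ_u the ultimate tensile strength. The minimal sketch below uses a hypothetical function name and assumed, illustrative property values to show how a tensile mean stress eats into the permissible amplitude; as the text stresses, no equivalent single line exists for a composite.

```python
def goodman_allowable_amplitude(mean_stress, endurance_limit, ultimate_strength):
    """Allowable stress amplitude from the modified Goodman line
    sigma_a / sigma_e + sigma_m / sigma_u = 1, for 0 <= sigma_m < sigma_u."""
    if not 0.0 <= mean_stress < ultimate_strength:
        raise ValueError("mean stress must lie in [0, ultimate_strength)")
    return endurance_limit * (1.0 - mean_stress / ultimate_strength)


# Assumed, illustrative values for a steel (MPa); not taken from the source
SIGMA_E, SIGMA_U = 300.0, 900.0
for sigma_m in (0.0, 300.0, 600.0):
    sigma_a = goodman_allowable_amplitude(sigma_m, SIGMA_E, SIGMA_U)
    print(f"mean {sigma_m:5.0f} MPa -> allowable amplitude {sigma_a:5.1f} MPa")
```

The line is linear in the mean stress, so each 300 MPa of tensile mean stress here removes one third of the fully reversed endurance limit.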

Since a single dominant crack is unlikely to form in fibrous composites, it would not be straightforward, even with prior knowledge, to assess the nature of the damage simply by microscopic examination. Detection by acoustic emission may be possible, but sorting out the different cracking mechanisms is not straightforward either [3, 4]. It has been suggested that measuring the Poisson’s ratio of the fatigued structure could serve as a monitor of damage [Tony Kelly, private communication]. To overcome this uncertainty, the designer reduces the allowable stress on the material, but the component then becomes overweight and probably over-cost.
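The Poisson’s-ratio idea is simple to state: under uniaxial load, ν = -ε_transverse/ε_axial, and a drift of ν away from its pristine value would flag a change in the laminate. The sketch below assumes two-gauge strain readings; the readings and function names are hypothetical, and how large a drift signifies significant damage is not established in the text.

```python
def poisson_ratio(axial_strain, transverse_strain):
    """nu = -transverse strain / axial strain under uniaxial loading."""
    if axial_strain == 0.0:
        raise ValueError("axial strain must be non-zero")
    return -transverse_strain / axial_strain


def relative_drift(nu_now, nu_ref):
    """Fractional change of Poisson's ratio relative to the pristine value."""
    return (nu_now - nu_ref) / nu_ref


# Hypothetical strain-gauge readings (dimensionless) at the same axial strain
nu_pristine = poisson_ratio(2.0e-3, -0.60e-3)
nu_cycled = poisson_ratio(2.0e-3, -0.51e-3)
drift = relative_drift(nu_cycled, nu_pristine)
print(f"nu: {nu_pristine:.3f} -> {nu_cycled:.3f}, drift {drift:+.1%}")
```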

1.2 In Search of Structural Integrity

Structures are like human beings. They have to endure the stresses and strains of everyday life, suffer a few unexpected blows and generally experience wear and tear as they grow older. Until relatively recently, the processes by which a structure fails were not understood, and no one really cared. Predicting precisely where a crack will develop in a material under stress, and when disaster will strike an engineering structure, is one of the oldest unsolved mysteries in designing against engineering catastrophe. Basic human instincts, thinking, perceptions and judgements have been misdirected, and creative endeavours in building large structures rested on representations of a distorted reality. Quite simply, we did not understand what is meant by structural integrity (SI). Our knowledge of material behaviour and of structural failure was based entirely on a store of practical knowledge that was empirical in nature. The problem is that once a crack has formed, ageing accelerates its growth. In composites under stress, cracking and the rate of damage progression increase with time: there is positive feedback.

A good place to begin is to ask what an engineering construction is supposed to do: what is its function? An aircraft transports people and goods from place to place and must maintain rigidity with respect to payload and external forces. An example of a specialised function is a “death-defying” fairground ride, whose function is to thrill with perceived danger but without intentional spills and distress. Failure is defined as the loss of that function. Death can be the ultimate result.

Cost is often a major factor in any design, particularly where the function demands high performance. Defence spends more than most, but the “acceptable lifetime” of a fighter plane is much shorter than that of its civil counterpart. Carbon fiber composite is not a “cheap and cheerful” material, nor is its manufacturing process, yet some of us are prepared to pay a premium for a carbon fiber tennis racquet. Passengers may want the cheapest air fares, but they also want to be safe.

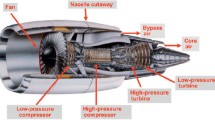

Cheaper fares require more efficient use of aviation fuel; hence higher thrust/weight engines, higher stresses and higher engine operating temperatures. This entails sophisticated aero-engine design and the use of expensive high-performance materials. While passengers insist on high safety, the airlines want longer maintenance cycles.

So how far from potential disaster are we prepared to go (Fig. 2)? Well, quite clearly, there are conflicting aims of designing simultaneously for high efficiency and high safety assurance throughout an economically viable lifetime. What is an acceptable level of danger? It comes down to structural integrity vs loss of function (disaster).

An engine blew up on a new Airbus A380 during a Qantas flight on 4 November 2010. A turbine disc in the Rolls-Royce Trent 900 engine disintegrated, damaging the nacelle, wing, fuel system, landing gear and flight controls, and causing an undetected fire in an inner wing fuel tank. The failure was caused by fracture of a stub oil pipe.

On 10th January 1954, BOAC Flight 781, a de Havilland Comet, suffered an explosive decompression at altitude and crashed into the Mediterranean Sea. A similar aircraft went down months later. The burden of testing to prove absolute safety of a structure, however, is impossible to manage in practice. Where human life depends upon structural integrity, it takes 10⁴ material test coupons per composite laminate configuration to evaluate an airframe for a given set of operating conditions (Fig. 3).

Coming to terms with this life-long dilemma of when catastrophe might strike requires knowledge of phenomena such as impact, fatigue, creep and stress corrosion cracking. All affect the reliability, life expectancy and durability of a structure. Structural integrity analysis is one way forward. It treats design and the materials used simultaneously, considers how best components and parts are joined, takes service duty into account, and asks what is an acceptable level of danger. We, the public, must decide what price we are prepared to pay for the ultimate in safety, or decide upon an acceptable level of danger and “white-knuckle ride”.

Today we think of SI as the optimisation of microstructure by controlling processing, coupled with intelligent manufacturing of the material, in order to maximise mechanical performance, to ensure reliability of the large-scale structure, and to avoid calamity and misfortune. An important feature of SI analysis is that it provides quantitative input to the formulation of an appropriately balanced response to the problem. At the heart of the matter are the mechanisms of crack nucleation and growth that affect the structural integrity of the material: microscopic cracking events that are usually too small to see with the naked eye and can be observed only by microscopy (sections 3 and 8).

But how much detail do we need to know about cracks? Should we start from the time the smallest undetectable defect forms, or from when a visible crack is eventually found? The way a crack initiates is entirely determined by atomic-scale phenomena, while microstructure at the micron scale affects the modes of crack propagation and the path the crack takes. Following the fracture process from beginning to end requires in-depth knowledge and understanding of the critical deformation and cracking processes, monitored directly, tracing the damaging mechanisms and the progression of structural change over time.

Since the discovery of high-strength, high-stiffness carbon fiber more than half a century ago, there has been no thorough, quantitative scientific formulation of the relationship between the design of the composite microstructure and the performance of the large-scale engineering composite structure. A gap opened up between the dimensional domains of the materials engineer and the structural engineer, who work at different levels of the structural size scale, and that gap needs bridging. What is required is an integrated, multidisciplinary approach: the fundamentals of materials science and the principles of micro-mechanics coupled with structural engineering mechanics to understand why materials crack and structures fracture.

Various design methodologies exist, all dealing with critical issues of structure and all common to the overall design process of production, maintenance and repair. Superimposed on these are three items: assessment by non-destructive inspection (NDI), structural health monitoring (SHM), and safety. Is safety compromised where the fatal flaw(s) in the structure is (are) smaller than the NDI detection limit? What initial flaw (damage) size (content) is acceptable in the final structure as a result of the manufacturing process followed by the service conditions? And what is an appropriate inspection period? Fitness considerations for large structures require through-life structural health monitoring of damage growth. We require tools (physical models, micro-mechanical models and structural mechanics, for example) for predicting the structural integrity of the material on the one hand and the design life of the large-scale structure on the other.
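For metals, where a single dominant crack can be tracked, one conventional way to put a number on the inspection period is to integrate a Paris-type growth law, da/dN = C(ΔK)^m with ΔK = YΔσ√(πa), from the NDI-detectable size a_0 to the critical size a_c. This is a swapped-in illustration rather than a method given in the text, and because fibrous composites rarely develop a single dominant crack, it does not carry over to them directly. All constants below are assumed; C is taken in m/cycle with ΔK in MPa·m^1/2, and the safety factor of 2 is hypothetical.

```python
import math


def cycles_closed_form(a0, ac, delta_sigma, C, m, Y=1.0):
    """Integrate da/dN = C * (Y * delta_sigma * sqrt(pi * a))**m from a0 to ac.
    Closed form valid for constant Y and m != 2."""
    if m == 2:
        raise ValueError("m == 2 needs the logarithmic form")
    k = C * (Y * delta_sigma * math.sqrt(math.pi)) ** m
    p = 1.0 - m / 2.0
    return (ac ** p - a0 ** p) / (k * p)


def cycles_numeric(a0, ac, delta_sigma, C, m, Y=1.0, steps=200_000):
    """Midpoint-rule check of N = integral of da / (C * dK**m)."""
    h = (ac - a0) / steps
    total = 0.0
    for i in range(steps):
        a = a0 + (i + 0.5) * h
        dK = Y * delta_sigma * math.sqrt(math.pi * a)
        total += h / (C * dK ** m)
    return total


# Assumed values: detectable crack 1 mm, critical crack 10 mm, stress range 100 MPa
N = cycles_closed_form(1e-3, 1e-2, 100.0, C=1e-11, m=3.0)
inspection_interval = N / 2.0  # hypothetical safety factor of 2 on growth life
print(f"growth life ~ {N:,.0f} cycles, inspect every ~ {inspection_interval:,.0f} cycles")
```

Because the integrand scales as a^(-m/2), most of the growth life is spent while the crack is small, which is why the NDI detection limit a_0 dominates the answer.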

The avoidance of failure in composite structural assemblies, given their complexity of design, represents a major challenge. Anticipated material and structural problems are just too complicated and too interactive to resolve by practical testing, and in any case the structures are just too large. It follows that judicious testing coupled with well-substantiated computer modelling is the only way forward at present. Bird strike, the fatal nemesis of the brave RB211 fan project, can now be modelled well enough that EASA (the European Aviation Safety Agency) will accept bird strike simulation, and some other forms of virtual testing are being accepted too. Virtual testing procedures for drop-weight impact tests have been developed using finite element methods. Recent advances allow the inclusion of complex constitutive equations and their manipulation on fast computers. But the gap between the design and testing of composite structures and that of metal structures is still vast.

A feature of contemporary composite materials for large or new structures is the way university groups and research institutes, external to the main contractor, are involved both in design, particularly in the later stages, and in testing. As an illustration, the production project for the end section of the A350 involves the following specific requests for work on the production method:

- Getting it right first time, and quality control of the manufacturing process.
- Effects of impact damage: with composites, impact by falling or struck objects (at up to 900 km/h) does not immediately give visual evidence of the event on the exterior surface, although delamination and/or peeling may be produced at the inner surface. Curved sections appear to be more susceptible to damage than planar ones.
- Damage tolerance: this is a most important concept, and much consideration must be given to arriving at a reliable quantitative statement of how much damage may be allowed with safety.
- For both of the last two items, NDI methods for detecting adhesive bond failure are important.

2 Damaging Mechanisms: Early Days at Cambridge

On 15th June 1960, a young professor at Cambridge, Alan Cottrell, gave an invited lecture at The Royal Society in London [5]. He presented a cogent and novel treatment of a long-standing problem: that of a crack with a force acting between its faces. His direct approach was to obtain general expressions for the force and displacement between the crack surfaces. Professor Cottrell stated: “... if there is a transverse notch cutting across a parallel array of fibers in a rod of some material like adhesive, the forces from the cut fibers can be transmitted to the intact fibres close to the notch tip only by passing as shearing forces through layers of the adhesive.” He continued: “If this adhesive has a fairly low resistance to shear... it will then be incapable of focussing the transmitted forces sharply…There is a tremendous opportunity for developing this principle further using fibers of very strong atomic forces like oxides and carbides.”

In the audience at The Royal Society was a young Dr Tony Kelly. On returning to Cambridge he instructed his graduate students to carry out two different experiments. The first was a tensile test to ultimate failure of aligned strong tungsten wires embedded in soft, ductile copper. They observed that the tensile fracture surface displayed an array of protruding fibers, revealing a mechanism of dissipating energy that became known as fiber pull-out; this crack-bridging mechanism increases the resistance to cracking. In similar experiments on silica fiber embedded in resin, loaded in tension at room temperature and at very low temperature (77 K), the brittle component (the fiber at room temperature, the matrix at 77 K) was observed to break in a series of parallel cracks while the other component remained intact and bore the load. Kelly called this “ductility” in a totally brittle system multiple cracking. Here lies the paradox: ductility in a non-ductile material system. Producing ductility in a metal is commonplace, but producing it in a totally brittle system is something special; it happens when a crack does not extend because it faces an interface that yields easily in shear.
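Cottrell’s shear-transfer argument and the pull-out observation are often quantified through the critical fiber length associated with Kelly and Tyson, l_c = σ_f d/(2τ), where σ_f is the fiber strength, d its diameter and τ the interfacial shear stress, assumed constant along the fiber. A fiber broken within l_c/2 of the crack plane pulls out rather than breaking again, so a weaker interface (smaller τ) gives longer pull-out lengths and more dissipated energy. The sketch below uses assumed, illustrative values, not data from the experiments described above.

```python
def critical_fiber_length(fiber_strength, diameter, interfacial_shear):
    """Kelly-Tyson estimate l_c = sigma_f * d / (2 * tau), assuming a constant
    (perfectly plastic) interfacial shear stress tau. SI units in, metres out."""
    if interfacial_shear <= 0.0:
        raise ValueError("interfacial shear stress must be positive")
    return fiber_strength * diameter / (2.0 * interfacial_shear)


# Assumed, illustrative values: 3.5 GPa fiber strength, 7 micron diameter
for tau in (80e6, 40e6, 20e6):  # progressively weaker interface, Pa
    l_c = critical_fiber_length(3.5e9, 7e-6, tau)
    print(f"tau = {tau / 1e6:4.0f} MPa: l_c = {l_c * 1e3:.3f} mm, "
          f"max pull-out length = {l_c / 2 * 1e3:.3f} mm")
```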

Quite simply, a damage-tolerant composite material requires a microscopically weak structure built into a macroscopically strong solid, which ensures that any crack present becomes benign. Cottrell had proposed this, and Kelly and his students had discovered by experiment two quite separate new methods of dissipating energy: fiber pull-out and multiple cracking. This is what mechanicians call “resistance to cracking” and what George Irwin in the USA called “toughness”. The fragility of a brittle material could now be overcome by incorporating brittle fibers of strongly bonded oxides or carbides. By these means are ceramics and brittle resins toughened, whether by laminating or by planar matting, and the same principle applies to oxide layers on metal surfaces and to protective coatings.

At Cambridge we saw two generations of scientists working in unison; there is synergy in acting together (just as fiber and matrix interact in a composite material). In a fibrous composite under tensile loading, carbon fibers in epoxy for example, the fibers snap randomly at weak points along their length. Some will fracture in the plane of the matrix crack; the majority will not, and act as bridges connecting the two crack surfaces. The fibers snap sequentially because of the variability in flaw size and strength, the flaw distribution along their length, and their distance from the matrix crack plane. By careful manipulation of fiber-matrix bonding, the fiber-matrix interface is “allowed” to fail by de-cohesion, creating a damage zone around the matrix crack tip. In this way the damage zone is “softened” (the local stiffness falls), with a corresponding decrease in the crack tip stress intensity factor, whilst the fibers bridging the crack remain intact and carry the traction (Fig. 4).
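The random, sequential snapping of fibers at weak points is commonly described with weakest-link (Weibull) statistics, although the text does not itself specify a distribution. The probability that a fiber of length L fails at or below stress σ is P_f = 1 - exp[-(L/L_0)(σ/σ_0)^m], with σ_0 a scale strength at reference length L_0 and m the Weibull modulus (a low m means wide scatter). The sketch, with assumed parameter values, shows the size effect that makes longer fibers weaker.

```python
import math


def failure_probability(stress, length, sigma0, m, L0):
    """Two-parameter Weibull weakest-link model:
    P_f = 1 - exp(-(L / L0) * (stress / sigma0) ** m)."""
    return 1.0 - math.exp(-(length / L0) * (stress / sigma0) ** m)


def median_strength(length, sigma0, m, L0):
    """Stress at which P_f = 0.5 for a fiber of the given length."""
    return sigma0 * (math.log(2.0) * L0 / length) ** (1.0 / m)


# Assumed parameters: sigma0 = 4000 MPa at L0 = 10 mm, Weibull modulus m = 5
SIGMA0, M, L0 = 4000.0, 5.0, 10.0
for L in (1.0, 10.0, 100.0):  # gauge length in mm
    print(f"L = {L:5.1f} mm: median strength ~ {median_strength(L, SIGMA0, M, L0):.0f} MPa")
```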

3 Fitness Considerations for Long-Life Implementation

So why do we still encounter materials that crack and structures that fail? It is because our knowledge is based (almost) entirely on this store of information that is empirical in nature. For decades, an “invisible” college of continuum mechanicians studied mechanical behaviour based on an idealization, without any reference to microstructure; they had no interest in studying microstructure. The problem is that extensive (and time-consuming, expensive) test programs would have to reveal all the dominant failure mechanisms likely to operate in service, and that is simply unrealistic. For composite materials, moreover, the components of damage are complex and, ideally, must be observed directly rather than inferred indirectly. The path less travelled, but becoming more familiar today, towards solving this dilemma has led to the development of the principles of damage mechanics: constitutive equations of material behaviour based on physical models of the deformation and cracking processes, sometimes called micro-mechanics.

For half a century or longer, those factors that influence the endurance boundary of the composite material on the one hand and performance limit of the composite structure on the other have been the subject of a great number of analytical investigations, validated by precise measurement of critical property data. But predicting precisely where a crack will develop in a material under stress and exactly when in time catastrophic failure of the structure will occur remains an unsolved mystery. Our comprehension of the sustainable damage of a composite material and the mechanical stability of a composite structure over a wide range of operating conditions remains restricted.

Selecting the right material system at the very beginning of the design process is a sensitive issue and requires careful material property profiling. Dimensions must be consistent with the overall function, including minimum weight, and there are databases of material properties to which designers can refer, for example The Cambridge Materials Selector [8]. When it comes to material property profiling, Young’s modulus E and density ρ are frequently the performance drivers, in which case the materials engineer consults a chart with axes E and ρ on which lines of constant design (merit) index are superimposed. For a stiffness-limited, minimum-weight design these indices are: a) E/ρ for a strut; b) E^(1/2)/ρ for a beam; and c) E^(1/3)/ρ for a plate, where the index is maximised for optimum design.
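The merit-index comparison can be sketched in a few lines. The property values below are approximate, assumed figures for illustration only (a quasi-isotropic CFRP laminate is represented by a single in-plane modulus) and are not taken from the Cambridge selector; the index definitions follow the text.

```python
# Approximate, assumed values: Young's modulus E (GPa), density rho (Mg/m^3)
MATERIALS = {
    "steel": (210.0, 7.8),
    "aluminium alloy": (70.0, 2.7),
    "quasi-isotropic CFRP": (60.0, 1.6),
}


def merit_indices(E, rho):
    """Stiffness-per-weight indices to be maximised, as listed in the text."""
    return {
        "strut": E / rho,
        "beam": E ** 0.5 / rho,
        "plate": E ** (1.0 / 3.0) / rho,
    }


for shape in ("strut", "beam", "plate"):
    ranking = sorted(MATERIALS,
                     key=lambda name: merit_indices(*MATERIALS[name])[shape],
                     reverse=True)
    print(f"{shape:5s}: {' > '.join(ranking)}")
```

The lower the exponent on E, the more the ranking is driven by density, which is why the light materials pull further ahead for beams and plates than for struts.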

Additional criteria in selecting the correct combination of fiber and matrix might include thermal conductivity and thermal expansion coefficient. For thermal property profiling, a mismatch in expansion coefficient between the ceramic reinforcement and the metal matrix leads to thermal fatigue cracking or ratchetting in metal-matrix composites (MMC). This requirement constrains the selection of fiber reinforcement and protective coatings for high-temperature applications: Ti requires Al2O3 fibers, not SiC fibers. For ceramic-matrix composites (CMC), the preference is for SiC/SiC or mullite (an alumino-silicate ceramic)/Al2O3 over SiC/Al2O3.
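The expansion-matching argument for Ti can be made concrete with the free mismatch strain Δα·ΔT developed on cooling from the processing temperature. The expansion coefficients below are approximate room-temperature values assumed for illustration, the cool-down ΔT and matrix modulus are hypothetical, and the stress estimate E_m·Δα·ΔT treats the matrix as fully constrained, so it is only an order-of-magnitude indicator.

```python
# Approximate linear thermal expansion coefficients (1/K), assumed for illustration
ALPHA = {"Ti": 8.6e-6, "Al2O3": 8.1e-6, "SiC": 4.0e-6}


def mismatch_strain(matrix, fiber, delta_T):
    """Free thermal mismatch strain |alpha_m - alpha_f| * |delta_T|."""
    return abs(ALPHA[matrix] - ALPHA[fiber]) * abs(delta_T)


def constrained_stress_estimate(matrix, fiber, delta_T, E_matrix):
    """Crude estimate E_m * mismatch strain for a fully constrained matrix."""
    return E_matrix * mismatch_strain(matrix, fiber, delta_T)


DELTA_T = 800.0   # hypothetical cool-down from processing temperature, K
E_TI = 110e3      # assumed Young's modulus of the Ti matrix, MPa
for fiber in ("Al2O3", "SiC"):
    eps = mismatch_strain("Ti", fiber, DELTA_T)
    sigma = constrained_stress_estimate("Ti", fiber, DELTA_T, E_TI)
    print(f"Ti/{fiber}: mismatch strain {eps:.1e}, stress ~ {sigma:.0f} MPa")
```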

Where toughness is a critical requirement, experience indicates a practical minimum fracture toughness of 10-15 MPa·m^1/2. Toughness, however, is not a unique material property for composites, which complicates things. For example, “blunting” mechanisms stabilize damage: multiple matrix cracking, fiber bridging of delamination cracks, fiber buckling zones around notches or holes in compression, etc. Furthermore, the stress concentration factor around holes diminishes under increasing (and repeated) load because inelastic (damage) zones develop, with an elevation in the local tensile strength. Multiple fiber fracture and matrix-dominated cracking below ultimate strength allow other inelastic mechanisms to activate in the matrix and stabilize the effect of damage, and the failure probability distribution is dramatically modified. Quite simply, there is a fall in the crack tip stress intensity factor. Since notch strength scales with fracture toughness, notch sensitivity is a more robust and useful measure of material performance.
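To connect a toughness requirement with the NDI question of which flaw size is acceptable, linear elastic fracture mechanics gives the critical crack size in a homogeneous solid as a_c = (1/π)[K_IC/(Yσ)]². The sketch applies this to the 10-15 MPa·m^1/2 range quoted above, with an assumed operating stress and geometry factor Y = 1 (a central through-crack of half-length a in a wide plate). Since toughness is not a unique property of composites, treat the result as a rough guide only.

```python
import math


def critical_crack_size(K_IC, stress, Y=1.0):
    """Solve K_IC = Y * stress * sqrt(pi * a_c) for a_c.
    K_IC in MPa*m^0.5, stress in MPa, result in metres."""
    if stress <= 0.0:
        raise ValueError("stress must be positive")
    return (K_IC / (Y * stress)) ** 2 / math.pi


OPERATING_STRESS = 300.0  # assumed, MPa
for K_IC in (10.0, 15.0):
    a_c = critical_crack_size(K_IC, OPERATING_STRESS)
    print(f"K_IC = {K_IC:4.1f} MPa m^0.5 -> a_c ~ {a_c * 1e3:.2f} mm")
```

Because a_c scales with the square of K_IC/σ, raising toughness from 10 to 15 MPa·m^1/2 more than doubles the tolerable flaw size at a given stress.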

In service, composites can undergo combined attack from stress and environment. The result is the activation of a complex array of atomistic defects and microscopic flaws, whose accumulation over time is felt at the component scale. Corrosion fatigue degradation of glass fibers in epoxy, for example, is governed by one of two rate-limiting phenomena. Hostile species penetrate the composite through matrix cracks; reaction with the fibers reduces their strength, and they fail at the matrix crack front. This is a reaction-controlled stress corrosion cracking process.

On the other hand, for a narrow matrix crack opening, concentration gradients develop along the crack and the stress corrosion cracking process becomes diffusion-controlled. The chemically activated kinetics of these processes are thermally sensitive, so models based on statistical mechanics lead to a rate that depends upon temperature. The difficulty in solving this particular problem is that purely atomistic models break down on their own, because certain structural variables (diffusion rates, jump frequencies, chemical activation energies, etc.) are neither known nor easily measured [9].
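A common form for such a thermally activated rate is the Arrhenius expression k = A exp(-Q/RT); whether the models cited take exactly this form is an assumption here. The sketch shows why the temperature sensitivity matters: with an assumed activation energy Q of 80 kJ/mol, the pre-factor A cancels and the ratio of rates between two temperatures follows directly.

```python
import math

R = 8.314  # molar gas constant, J/(mol K)


def arrhenius_rate(A, Q, T):
    """Thermally activated rate k = A * exp(-Q / (R * T)); Q in J/mol, T in K."""
    return A * math.exp(-Q / (R * T))


def rate_ratio(Q, T1, T2):
    """k(T2) / k(T1); the pre-exponential factor A cancels."""
    return math.exp(-(Q / R) * (1.0 / T2 - 1.0 / T1))


Q_ASSUMED = 80e3  # J/mol, illustrative only
print(f"20 C -> 60 C speeds the process up ~{rate_ratio(Q_ASSUMED, 293.15, 333.15):.0f}x")
```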

The main damage mechanisms in composites known from experiment involve intra-laminar and inter-laminar cracking, and they must be taken into account explicitly. The behaviour of the interface elements may be controlled using a simple cohesive crack model, with which the maximum load at failure and the absorbed energy can be predicted accurately. But once again, life is just not that simple. Damage by cracking involves complex non-linear processes, which at the micro scale include micro-cracking of the matrix and fiber breakage. There are just too many possible interacting, competing components of damage to understand, let alone to build into a single realistic predictive model of catastrophic structural failure. Prediction really is a problem.
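The text does not specify which cohesive law is meant; a bilinear traction-separation law is one common choice and is assumed here. Traction rises linearly to a peak σ_max at opening δ_0, then softens linearly to zero at δ_f, and the area under the curve, ½σ_max·δ_f, is the energy absorbed per unit crack area. The parameter values are illustrative, and the numerical integration simply checks that closed form.

```python
def bilinear_traction(delta, sigma_max, delta_0, delta_f):
    """Bilinear cohesive law for monotonic opening (no unloading branch)."""
    if delta <= 0.0:
        return 0.0
    if delta <= delta_0:
        return sigma_max * delta / delta_0                          # elastic rise
    if delta <= delta_f:
        return sigma_max * (delta_f - delta) / (delta_f - delta_0)  # softening
    return 0.0                                                      # fully separated


def absorbed_energy(sigma_max, delta_0, delta_f, steps=100_000):
    """Trapezoidal integral of traction over opening, from 0 to delta_f."""
    h = delta_f / steps
    total = 0.0
    for i in range(steps):
        t0 = bilinear_traction(i * h, sigma_max, delta_0, delta_f)
        t1 = bilinear_traction((i + 1) * h, sigma_max, delta_0, delta_f)
        total += 0.5 * (t0 + t1) * h
    return total


# Assumed interface values: 50 MPa peak traction, 1 um elastic opening, 20 um final opening
SIGMA_MAX, DELTA_0, DELTA_F = 50e6, 1e-6, 20e-6
G_c = absorbed_energy(SIGMA_MAX, DELTA_0, DELTA_F)
print(f"G_c ~ {G_c:.1f} J/m^2 (closed form {0.5 * SIGMA_MAX * DELTA_F:.1f})")
```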

To formulate a life-prediction methodology, three principal phenomena have to be addressed: reduced strength of the reinforcement; fiber stress at the tip of a crack; and the concentration of hostile species within the crack, all issues related to the chemistry and kinetics of reactions [9]. But there is another complication: recall that structure evolves with time and ageing weakens it. Furthermore, when cracks form they in turn increase the rate of damage progression; there is positive feedback. The difficulty is that we simply do not know what happens once damage begins. How, therefore, can we deal with the nucleation of damage and the multiplicity of interacting cracks? Understanding this life-long dilemma requires knowledge of phenomena such as impact, fatigue, creep and stress corrosion cracking. All affect the reliability, life expectancy and durability of a structure: its structural integrity. An important feature of SI analysis is that it provides quantitative input to the formulation of an appropriately balanced response to that question.

4 Structural Integrity and Length-Scale

In the twentieth century, modern mechanical design evolved with the development of the continuum theories of mechanics (mathematical and continuum models of elasticity and plasticity), diffusion and reaction rates. With the advent of computer power, this resulted in finite element (FE) modelling, numerical analysis and high-fidelity simulation, which in turn allowed designs to be optimised to minimise cost or to maximise performance or safety. Thus, the modern designer has to show dexterity with two toolboxes: mathematical and continuum modelling, from which the continuum theories and constitutive models have evolved (a sort of “distilled empiricism”), and micro-mechanics (or physical) modelling.

Using these tools to determine constitutive equations relies on knowledge of the rules of material behaviour. The idea is that the response at one size level is described by one (or more) parameters that are passed to the next level up (or down). There is a hierarchy of structural scales and discrete methods of analysis in design, ranging from micro-mechanics to the higher structural levels of modelling, continuum mechanics, etc. (Fig. 5). Structural integrity embraces contributions from materials science and engineering, fabrication and processing technology, NDI, fracture mechanics and probabilistic assessment of failure, across a broad spectrum of size scale.
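A minimal sketch of passing parameters up one level of the hierarchy: rule-of-mixtures estimates turn fiber and matrix moduli (micro level) into ply moduli (the next level up), which a laminate analysis would then consume. The Voigt and Reuss forms used here are textbook approximations rather than the author’s method; treating the carbon fiber as isotropic is a stated simplification, and the input values are assumed.

```python
def ply_moduli(E_f, E_m, V_f):
    """Rule-of-mixtures ply moduli from constituent moduli.

    E1 (along the fibers): Voigt average V_f*E_f + V_m*E_m.
    E2 (across the fibers): Reuss average 1 / (V_f/E_f + V_m/E_m).
    Both phases are treated as isotropic, which real carbon fibers are not,
    so E2 is only a rough estimate.
    """
    if not 0.0 <= V_f <= 1.0:
        raise ValueError("fiber volume fraction must be between 0 and 1")
    V_m = 1.0 - V_f
    E1 = V_f * E_f + V_m * E_m
    E2 = 1.0 / (V_f / E_f + V_m / E_m)
    return E1, E2


# Assumed carbon/epoxy inputs (GPa) at 60 % fiber volume fraction
E1, E2 = ply_moduli(E_f=230.0, E_m=3.5, V_f=0.6)
print(f"E1 ~ {E1:.1f} GPa, E2 ~ {E2:.2f} GPa")
```

The two numbers E1 and E2 are exactly the kind of compact description that the text says is handed from one size level to the next.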

Combining this information leads to the development of “damage mechanics”: constitutive equations based on physical models. The problem is that the constitutive equations of continuum design are based on experiment. This is where micro-mechanics helps, by identifying the mechanisms responsible for cracking and by modelling them with an understanding derived from, for example, the theory of reaction rates. But micro-mechanical models have something else to offer: they point to rules that the constitutive equations must obey. Although micro-mechanical models cannot by themselves lead to precise constitutive laws, combining them with experimental data yields a constitutive equation that carries the predictive power of physical modelling together with the precision of ordinary curve fitting (so-called “model-informed empiricism”). Certain physical length scales in the damaging processes play a key role in failure prediction, from empirical methods to high-fidelity simulations of damage evolution, and they provide a rationale for making modelling decisions.
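“Model-informed empiricism” can be illustrated with a thermally activated rate law: physical reasoning fixes the functional form ln k = ln A - (Q/R)(1/T), and ordinary least-squares curve fitting supplies the constants A and Q. The “measurements” below are synthetic, generated from assumed values with a small imposed scatter purely to exercise the fit; they are not experimental data, and the function name is hypothetical.

```python
import math

R = 8.314  # molar gas constant, J/(mol K)


def fit_arrhenius(temperatures, rates):
    """Least-squares fit of ln k = ln A - (Q/R)(1/T). The physical model fixes
    the functional form; the data fix the two constants A and Q."""
    xs = [1.0 / T for T in temperatures]
    ys = [math.log(k) for k in rates]
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return math.exp(intercept), -slope * R  # (A, Q in J/mol)


# Synthetic "measurements" from assumed A = 1e6 and Q = 80 kJ/mol,
# with a small multiplicative perturbation standing in for scatter.
temps = [293.15, 313.15, 333.15, 353.15]
scatter = [1.03, 0.98, 1.01, 0.99]
rates = [1e6 * math.exp(-80e3 / (R * T)) * s for T, s in zip(temps, scatter)]
A_fit, Q_fit = fit_arrhenius(temps, rates)
print(f"A ~ {A_fit:.3g}, Q ~ {Q_fit / 1e3:.1f} kJ/mol")
```

The activation energy is recovered to within about a percent, while the pre-factor A is much more sensitive to scatter, a reminder that the fitted constants are only as good as the data spanning the temperature range.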

A length scale arises from the complex nature of cracks for each specific damaging mechanism: delamination and splitting (shear) cracks (and the associated interfacial friction), fiber rupture, fiber micro-buckling or kink formation, and diffuse micro-cracking or shear damage [10].

Corresponding physical models and mathematical theories describe these mechanisms at the micro scale, and crack growth in the large engineering structure. Boundaries on this length scale are delineated by a breakdown in the model and in the assumptions implicit at a particular size. Thus, we can define points on the scale that separate phenomena treated discretely from phenomena treated collectively. The two exceptions are the end points of the length scale: everything at the electronic level is treated discretely, whereas everything at the macro size is treated collectively. Our confusion over how damage is interpreted along this length scale causes difficulty as progress is made from one design stage to the next: from the size of an architectural feature of the laminate to that of a structural element, and from a component to the fully assembled large-scale structure.

A lack of mastery in combining the architectural design of the material at the micron (or smaller) scale with the design of elements of the engineering structure metres in length has opened a gap in our knowledge of composite failure. This weakness can be traced to the changing nature of cracking and fracture as structural size increases. In coming to terms with all sorts of behavioural complexities of the material at the (sub-)microscopic end of the scale, we might say that we have characterized the properties of the composite by reference to the fiber only. There has been no real consideration of the “make-up” of the material, the macroscopic geometry of the laminate, or the shape of the part or component; any notch, hole or cut-out is but a geometrical aberration. Conversely, at the size level of component design, we have tended to look at the overall geometric shape and thought of the material properties as being set (in a geometric sense) at the global level.

Coming to terms with these differences of scale appears to be a key source of design difficulty, because this gap in understanding of composite failure has opened up precisely at the size where the material problem becomes a structural one. The gap has been partially filled by fracture (and damage) mechanics, in which quantitative relationships between microscopic and macroscopic parameters have been developed. Even so, damage tolerance certification of a material and structure still requires time-consuming, expensive testing. However, growth in computer power and the development of appropriate software have reduced the number of tests, by substituting high-fidelity damage simulations that serve as virtual tests of structural integrity.

Understanding damage by experimentation and modelling across orders of magnitude of structural dimension, and linking the analyses systematically into a total predictive design strategy, is still lacking with respect to absolute reliability and guaranteed safety. This is particularly critical for two design issues: (1) a structure's capability to sustain a potentially damaging event (damage resistance) and (2) its ability to perform satisfactorily and safely with damage present (damage tolerance). Even with the exponential growth of computational power, which has resulted in an abundance of numerical analytical models, fundamental barriers remain as progress is made by connecting one damage analysis to the next, from the time the smallest undetectable defect forms in the solid to the point where a visible crack is found in a full-scale engineering structure “down the road”.

5 Structural Integrity and Multi-Scale Modelling

Materials have to be processed, components shaped, and structures assembled. Lack of attention to detail leads to premature failure after a shorter service duty, because fatal flaws (voids, de-laminations, fiber waviness, contamination at joints, etc.) are introduced at some stage, and all of them impact structural performance. Predicting damage initiation followed by damage evolution, and specifying the safe operating limits accurately, is a major challenge, especially when the damage is severe. Difficulties in prediction arise, first, because composites modelled as heterogeneous elastic bodies contain sites of stress singularity and, secondly, because the mechanisms controlling damage initiation and growth are non-linear. Multi-scale problems of structural failure that occur at the micro, meso and macro scales must be targeted by appropriate multi-scale modelling methods. Testing and analysis across a size spectrum, reflecting responses at all structural levels, is what we call “multi-scale modelling” (Fig. 6).

Structural Integrity is affected from the Micro to Meso to Macro size levels. (Courtesy of Josef Jančář [11]). Testing and analysis across a size spectrum reflect responses at all structural levels. This is illustrated with Boeing’s 787 multi-scale composite structure. Macroscopic response of material and component reflects responses at all levels beneath

The macroscopic response of a composite material system and component reflects responses at all levels beneath. The idea is that the response at one level is passed to the next level up (or down), almost always as a simple mathematical function. Hierarchical and multi-scale modelling links top-down (TD) and bottom-up (BU) approaches. Of particular interest is how damage transfers from a lower scale to a higher scale. This requires the entire range of length scale to be probed in order to connect failure of the material with fracture of the engineering structure. Regrettably, distinct communities have pursued “top-down” and “bottom-up” methods of design, only occasionally transferring information from one to the other.

The TD method begins with a macroscopic engineering model, a procedure that depends only on knowledge of straightforward, macroscopically measurable properties that an engineer can handle, such as hardness, yield stress or ultimate tensile strength. BU methods, on the other hand, seek to model (or simulate) failure by building upon events that initially take place at the atomistic (or microscopic) level, which in engineering terms are difficult to determine, let alone quantify.

In the bottom-up strategy, component testing can be carried out virtually, through structural analysis using micro-mechanical models for the homogenized laminate behavior (3D continuum shell and cohesive elements). These virtual experiments open revolutionary opportunities to reduce the number of costly tests needed to certify safety, to develop new material configurations and to improve the accuracy of failure criteria. However, it remains difficult to model first damage (matrix cracking) and its progression into a de-lamination crack.

Physical models, described by one (or more) parameters in a constitutive model, can help rationalise the microscopic processes responsible for yield stress, toughness (notch sensitivity), fatigue, stress corrosion cracking, etc. Generally, however, these models are too imprecise for an exact engineering solution. In fatigue, for example, a life prediction based on a physical model alone might be accurate only to within a factor of 10. That is because the material parameters of the physical model are not known with any certainty: they are too difficult to determine or measure with confidence and can be estimated only by empirical means. Knowledge of matrix crack density, for instance, would characterise the state of damage in a composite under fatigue, but there is no straightforward way of quantifying it in a large structure.
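To see why uncertain parameters limit the precision of physical-model life predictions, the sketch below integrates the Paris crack-growth law, da/dN = C (ΔK)^m with ΔK = Y Δσ √(πa), in closed form between an initial and a final crack length. The Paris law is not introduced in the text; it is used here only as a familiar illustration, and every value (C, m, Y, crack lengths, stress range) is a hypothetical assumption. It does not reproduce the factor-of-10 figure quoted above; it only shows how strongly life depends on a parameter such as the exponent m.

```python
import math

def paris_life(C, m, delta_sigma, a0, af, Y=1.0):
    """Cycles to grow a crack from a0 to af under the (assumed) Paris law
    da/dN = C * (Y * delta_sigma * sqrt(pi * a))**m, integrated in closed form.
    Units are assumed consistent, e.g. MPa and metres."""
    k = C * (Y * delta_sigma * math.sqrt(math.pi)) ** m
    if math.isclose(m, 2.0):
        # Special case m = 2: the integral of da / a is a logarithm
        return math.log(af / a0) / k
    e = 1.0 - m / 2.0
    return (af ** e - a0 ** e) / (k * e)

# Hypothetical baseline: C = 1e-11, m = 3, stress range 100 MPa, crack 1 mm -> 10 mm
base = paris_life(C=1e-11, m=3.0, delta_sigma=100.0, a0=1e-3, af=1e-2)
# Life is inversely proportional to C: halving C doubles the life
print(paris_life(C=0.5e-11, m=3.0, delta_sigma=100.0, a0=1e-3, af=1e-2) / base)
# A modest change in the exponent m shifts the life by a much larger factor
print(base / paris_life(C=1e-11, m=3.5, delta_sigma=100.0, a0=1e-3, af=1e-2))
```

Because both C and m must usually be fitted empirically, small scatter in the fit translates into large scatter in predicted life.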

Such interplay of materials science and engineering is of crucial importance where composite material properties vary continuously with some internal parameter related to composite architecture. Optimum material micro-structure (and nano-structure) can then be forecast and designed rather than found by trial and error (with the possibility of calamity), while maximising structural performance and sustainable safe life. Then, when a set of properties is specified, it should be possible to select a particular lay-up or weave of an appropriate composite material system, and a set of processing conditions, to meet that specification and provide structural integrity. We require mathematical formulations that link TD and BU approaches in single design codes, representing fine-scale phenomena embedded in calculations of larger-scale phenomena and tracing damage mechanisms through all size scales.

A word of warning: beware of premature claims of success. There are Top-Downers who fit engineering data with a large number of material and experimental parameters and infer that their model is unique, and Bottom-Uppers who project their mechanism as the dominant one, amongst all others, controlling engineering behaviour. In reality, is there a mechanism that has not been observed? Is there a significant crack that has gone undetected?

6 At the Heart of Structural Integrity

Component failure is normally due to instability of one kind or another, and it is irrevocable. When a crack extends in a solid, energy is irreversibly lost; load on the structural part is not indefinitely sustainable, and it eventually fails. As a reminder, at the heart of the matter is the matrix or interface crack between layers of the laminated composite, bridged by fibers, which requires de-bonding to occur in preference to fiber fracture. In the absence of de-bonding, or when the sliding (shear) resistance along the de-bonded interface is high, crack-tip stresses are concentrated in the fiber and decay rapidly with distance from the matrix crack plane. Consequently, fibers are more likely to snap at or near the crack plane than to pull out, diminishing their vital role in bridging matrix cracks and de-lamination cracks. Under these circumstances, the composite would exhibit notch sensitivity (brittleness). Recall the fate of the original carbon fiber compressor fan blades of the RB211 engine.

Thus, the critical issue concerning the structural integrity of the composite centres on the extent of this de-bonding mechanism, its dependence on interface properties, and its effect on crack opening and fiber fracture. These contributions to the stable dissipation of energy can be derived in terms of the constituent properties of the fiber and the shear resistance or shear toughness of the interface. Furthermore, the question of structural integrity concerns the definition of the optimum surface treatment of the fiber and the optimum properties of any coating or inter-phase between fiber and matrix.
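The dependence of pull-out on interface shear resistance can be illustrated with the classical Kelly-Tyson estimate, a standard textbook relation rather than the model of this article. Assuming a uniform frictional shear stress τ on a de-bonded fibre of diameter d and strength σ_fu, the fibre stress builds up over the critical length l_c = σ_fu d / (2τ); the longest fibre that can pull out rather than break is embedded l_c/2, so the maximum pull-out work per fibre scales as 1/τ. The fibre and interface values below are hypothetical.

```python
import math

def critical_length(sigma_fu, d, tau):
    """Kelly-Tyson critical transfer length l_c = sigma_fu * d / (2 * tau),
    assuming a constant interfacial shear stress tau."""
    return sigma_fu * d / (2.0 * tau)

def max_pullout_work(sigma_fu, d, tau):
    """Work to pull out one fibre from the longest possible embedded length l_c / 2.
    With constant friction tau, the force is pi*d*tau*x, so W = pi * d * tau * l**2 / 2."""
    l = critical_length(sigma_fu, d, tau) / 2.0
    return math.pi * d * tau * l ** 2 / 2.0

# Hypothetical carbon fibre: strength 3500 MPa, diameter 0.007 mm (W in N*mm)
for tau in (10.0, 50.0, 100.0):
    print(tau, critical_length(3500.0, 0.007, tau), max_pullout_work(3500.0, 0.007, tau))
```

Raising τ shortens l_c and reduces the pull-out work, which is the trend described above: a strong, non-sliding interface pushes fibre fracture towards the crack plane.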

Vital, then, are the nature of the bond and the integrity of the interface, and possible thermal stresses and shrinkage effects of the matrix during processing and ageing in wet and dry environments. Thus, questions surrounding the mechanisms of mixed-mode splitting and de-lamination cracking require resolution. Another consideration is how to include the probabilistic nature of the failure behaviour of composite materials in a physical model. So, while our understanding of the deformation and fracture behaviour of materials based on defect theory and crack mechanics has advanced considerably, failure prediction of composite structures on a macro-scale remains problematic. At the risk of repetition, at the heart of the problem lie those failure mechanism(s) best identified by direct observation (Figs. 7 and 8). But that is by no means straightforward to undertake. It is dangerous to assume a mechanism without direct evidence, or to assume that it is dominant.

While our understanding of the deformation and fracture behaviour of materials based on defect theory and crack mechanics has advanced considerably, failure prediction of composite structures on a macro-scale becomes problematic.

Mark Kortshot (top right), Costas Soutis (bottom center).

The next best thing to direct observation of cracking is indirect observation: mechanisms can be inferred from C-scans or from changes in modulus or Poisson’s ratio. Poisson’s ratio is a more sensitive indicator of the presence of cracks than a direct measure of the other elastic constants. It has been objected that the principal Poisson’s ratio of an aligned carbon fibre composite is very small, so that changes in its value are difficult to detect. One answer to this objection is that a composite has a number of Poisson’s ratios: while a very small one may occur between one particular pair of directions, it is accompanied by a much larger value when another pair of directions is used for the measurements, and the latter pair should be chosen. An angle-ply laminate has a very large value of the principal Poisson’s ratio.
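The point about multiple Poisson's ratios can be checked with classical lamination theory. The sketch below assumes plane stress, a balanced symmetric [+θ/−θ]s angle-ply laminate (so the shear-coupling terms A16 and A26 cancel) and hypothetical carbon/epoxy ply properties; it compares the very small minor ratio ν21 = ν12 E2/E1 of a unidirectional ply with the in-plane ratio ν_xy of the angle-ply laminate.

```python
import math

def reduced_stiffness(E1, E2, G12, nu12):
    """Plane-stress reduced stiffnesses (Q11, Q22, Q12, Q66) of a unidirectional ply."""
    nu21 = nu12 * E2 / E1
    denom = 1.0 - nu12 * nu21
    return E1 / denom, E2 / denom, nu12 * E2 / denom, G12

def angle_ply_nu_xy(theta_deg, E1, E2, G12, nu12):
    """In-plane Poisson's ratio nu_xy of a balanced symmetric [+theta/-theta]s laminate.
    A16 and A26 cancel for the +/- pair, so nu_xy = A12 / A22 = Qbar12 / Qbar22."""
    Q11, Q22, Q12, Q66 = reduced_stiffness(E1, E2, G12, nu12)
    m, n = math.cos(math.radians(theta_deg)), math.sin(math.radians(theta_deg))
    m2n2 = (m * n) ** 2
    qbar12 = (Q11 + Q22 - 4.0 * Q66) * m2n2 + Q12 * (m ** 4 + n ** 4)
    qbar22 = Q11 * n ** 4 + 2.0 * (Q12 + 2.0 * Q66) * m2n2 + Q22 * m ** 4
    return qbar12 / qbar22

# Hypothetical carbon/epoxy ply properties (GPa)
E1, E2, G12, nu12 = 181.0, 10.3, 7.17, 0.28
print("minor nu21 of a unidirectional ply:", nu12 * E2 / E1)
for theta in (0, 15, 25, 35, 45, 60):
    print(theta, round(angle_ply_nu_xy(theta, E1, E2, G12, nu12), 3))
```

With these assumed properties the minor ratio is below 0.02, whereas a laminate at around ±25° gives ν_xy above 1, so a crack-induced change in ν_xy is far easier to measure.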

It is dangerous to assume a mechanism without direct evidence or that it is dominant.

7 A Guide to Thinking and Planning a Physical Model

Consider a physical model: a gross simplification that captures the essentials of the problem. All successful models capture the features that really matter by illuminating the principles that underlie key observations of material behaviour. (An example is a topographical 3-D relief map of a mountain displaying contour lines of elevation. Unfortunately, it cannot show us the easiest way down in winter on skis, but with the snow-covered pistes colour coded it does indicate the way for novices, more or less.) Keep the model simple, but not too simple.

First, identify the problem. Understand the nature of the problem (the mechanism(s)) such as matrix cracking, de-lamination, etc. Next, model each mechanism separately (coupling is the real challenge); then compare with data. Remember what the model is for: to gain physical insight; to capture material response in an equation or code; to predict material response under conditions not easily reproduced; and to allow extrapolation in time or temperature.

What do you want from the model? Identify its desired inputs and outputs. A physical model is a transfer function: it transforms those inputs into outputs. When models are coupled, the outputs of one become the inputs to the next. What are the macroscopic variables and boundary conditions (temperature, time, loads, etc.)? And at the heart of the model lie the physical mechanisms of structural change.
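The transfer-function view can be made concrete with two deliberately simple models chained together. In this sketch, assumed for illustration only, a micro-scale model maps fibre and matrix moduli and fibre volume fraction to ply moduli using the rule of mixtures (E1) and the inverse rule of mixtures (E2), and a macro-scale model maps the ply modulus to the axial stiffness of a unidirectional bar. The function names and numbers are hypothetical.

```python
def ply_moduli(Ef, Em, Vf):
    """Hypothetical micro-scale transfer function: rule of mixtures for E1 and
    inverse rule of mixtures for E2, from fibre/matrix moduli and volume fraction."""
    E1 = Vf * Ef + (1.0 - Vf) * Em
    E2 = 1.0 / (Vf / Ef + (1.0 - Vf) / Em)
    return E1, E2

def bar_axial_stiffness(E1, area, length):
    """Hypothetical macro-scale transfer function: axial stiffness E*A/L of a
    unidirectional bar loaded along the fibres."""
    return E1 * area / length

# Coupling: the output E1 of the micro model becomes an input of the macro model.
E1, E2 = ply_moduli(Ef=230.0e3, Em=3.5e3, Vf=0.6)     # MPa
k = bar_axial_stiffness(E1, area=100.0, length=500.0)  # mm^2 and mm, so k is in N/mm
print(E1, E2, k)
```

Replacing either function with a better model leaves the chain intact, which is the practical benefit of thinking in terms of inputs and outputs.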

To construct the model, use the standard modelling tools of engineering and materials science: the equations of fracture mechanics, kinetics, dynamics, etc. that we learnt at college. Exploit previously validated models of the problem built with those tools. If parts of the process cannot be modelled, introduce an empirical fit to the data (e.g., a power law) that can be replaced later by a better model when one becomes available.
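A power-law placeholder of the kind mentioned above is usually fitted on log-log axes. The sketch below, which assumes NumPy is available, fits y = A x^n by linear least squares on log y versus log x. The data are synthetic, generated from known constants without noise, so the fit simply recovers them; in practice x and y might be, say, a load parameter and a measured damage or crack-growth rate.

```python
import numpy as np

def fit_power_law(x, y):
    """Fit y = A * x**n by least squares on log y = log A + n * log x.
    Returns (A, n)."""
    n, logA = np.polyfit(np.log(x), np.log(y), 1)  # slope first, then intercept
    return np.exp(logA), n

# Synthetic, noise-free data generated from A = 2e-9, n = 3 (hypothetical values)
x = np.array([5.0, 8.0, 12.0, 20.0, 30.0])
y = 2e-9 * x ** 3
A, n = fit_power_law(x, y)
print(A, n)
```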

The point is this: physical models suggest forms for constitutive equations (laws), and the significant groupings of the variables that enter them. Empirical methods can then be used to establish the precise functional relations between these groups. Finally, we finish up with a constitutive equation that combines the predictive power of micro-mechanical modelling with the precision of ordinary curve fitting of experimental data. In other words, input variables such as maximum stress, stress range or stress amplitude, frequency, temperature, concentration of chemical species and damage state are all embedded in the physical model. The example below, of modelling a structure that evolves with time, may help to illustrate this.

7.1 Constitutive Models: The Internal Material State Variable Method

Composite materials behaviour involves three principal levels of complexity:

1) structural change, where structure (component behaviour) changes as damage evolves;
2) multiple mechanisms;
3) linked processes.

We need constitutive equations for design that encapsulate all the intrinsic and extrinsic variables. Constitutive models have two aspects: response equations and structural evolution equations. The response equation describes, for example, the relationship of the current (damaged) modulus E (or strength) to stress range, temperature, etc., and to the current value of an internal state variable D. The structural change (evolution) equation is based upon the internal (“damage”) state variable D, which uniquely defines the current level of damage in the composite for a given set of variables and evolves progressively.

For composites under attack from stress and environment, the activation of a complexity of atomistic defects and microscopic flaws over time can be felt at the component level. Corrosion fatigue of glass fibers in epoxy is an example; it occurs by two rate-limiting phenomena. First, reaction of the hostile species with the fibers reduces their strength, and they fail at the matrix crack. This process repeats itself: new crack segments form, bridged by pristine fibers, and the fiber pull-out mechanism operates with diminished crack blunting. This is a reaction-controlled process. On the other hand, for a narrow matrix-fiber shear crack opening, concentration gradients develop along the crack and the fiber pull-out mechanism is absent [9]. However, pure atomistic models on their own break down because certain structural variables (diffusion rates, jump frequencies, etc.) are not known, nor can they easily be measured. The chemically activated kinetics of the process are thermally sensitive: models based on statistical mechanics lead to a crack-growth rate that depends upon temperature.

The point is this: the physical model suggests the form of a constitutive equation (law) and how the variables that enter it are grouped, while empirical methods establish the precise functional relations between these groups. Finally, we have a constitutive equation combining the predictive power of a micro-mechanical model with the precision of curve fitting of experimental data. In other words, input variables (maximum stress, etc., temperature, concentration of chemical species, damage state) are all embedded in the physical model.

A structure under stress suffers damage with time: cracks form, which in turn increase the rate of damage formation. There is positive feedback. Ageing weakens the material; its structure changes, and this sort of behaviour is described by constitutive models (equations), best derived using the internal state variable method. (Recall: a constitutive model is a set of mathematical equations that describe the behaviour of a material element subjected to external influences: stress, temperature, etc.) Constitutive equations take many forms (algebraic, differential or integral equations), which may be embedded in a computation (e.g., finite element analysis).

The response equation describes the relationship of (say) current modulus, Ec, of the laminate, (a measure of the effect of damage), to the applied stress, σ, or stress range, Δσ, load cycles, N, and to the current value of the internal state variable, D.

We call the internal state variable damage because it describes a change in the state of a material brought about by an applied stress or by load cycling. D uniquely defines the current level of damage in the material for a given set of test variables.

In this example, we observe changes in the composite modulus (stiffness) with the accumulation of fatigue damage. The response equation describes this change of (damaged) modulus, Ec, to the stress magnitude, temperature, time (number of load cycles), and to the current value of the internal state variable D:
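Written only in terms of the variables just listed (stress σ, temperature T, number of load cycles N and the internal state variable D), the response equation has the general form below; the symbol f is a placeholder whose functional form is not specified here and would have to be established empirically.

```latex
E_c = f\left(\sigma,\, T,\, N,\, D\right)
```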

Now consider matrix cracking as the damaging process: D is usually defined as D = 1/s, where s is matrix crack spacing. Damage due to de-lamination, on the other hand, can be defined as total (meaning actual or measured) de-lamination crack area normalised with respect to the total area available for de-lamination, i.e., D = A/Ao [12, 13].

Or it might be useful to normalise matrix crack spacing, s, by de-lamination crack length, ld (i.e., s/ld), because of coupling effects between these two mechanisms [14]. But problems arise from a lack of detail about these microscopic parameters, including the number (or density) of fibre breaks, which can be determined only microscopically; this is not practical in real structures.

Since the internal state variable, D, evolves over time with the progressive nature of the damaging processes, its rate of change can be described by:
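In its simplest general form, assuming a single damaging mechanism and writing the rate per load cycle N (time t could be used instead), the evolution equation reads as follows; g is an as-yet-undetermined function, and the choice of its arguments is an assumption consistent with the variables introduced above.

```latex
D' = \frac{\mathrm{d}D}{\mathrm{d}N} = g\left(\sigma,\, T,\, D\right)
```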

Where several mechanisms contribute simultaneously to the response (e.g., where modulus degradation results from the coupling of de-lamination and matrix cracking), there are two internal state variables, one for each mechanism. Consequently, the model suggests a constitutive equation of a completely different form from before. Instead of trying to characterize the modulus, Ec, as a function of the complete set of independent variables (although we could), we now seek to fit data to a coupled set of differential equations: one for the modulus, Ec', and two (or more, depending on the number of damaging mechanisms) for damage propagation, namely D1' and D2':
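In general form, with the independent variables σ, T and stress state λ and with rates taken per load cycle N, the coupled set can be sketched as below. The exact arguments of each function are an assumption; f, g1 and g2 remain to be determined empirically.

```latex
\begin{aligned}
E_c' &= \frac{\mathrm{d}E_c}{\mathrm{d}N} = f\left(\sigma,\, T,\, \lambda,\, D_1,\, D_2\right)\\
D_1' &= \frac{\mathrm{d}D_1}{\mathrm{d}N} = g_1\left(\sigma,\, T,\, \lambda,\, D_1,\, D_2\right)\\
D_2' &= \frac{\mathrm{d}D_2}{\mathrm{d}N} = g_2\left(\sigma,\, T,\, \lambda,\, D_1,\, D_2\right)
\end{aligned}
```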

D1 describes the damage due to one mechanism and D2 describes a different damaging mechanism that, combined with the first, eventually leads to composite failure. Ec', D1' and D2' are their rates of change with time (or number of load cycles); f, g1 and g2 are simple functions yet to be determined.

There are now three independent variables (σ, T and stress state λ), whereas before there were eight. These equations can be integrated to trace the change of modulus with the accumulation of damage, and ultimately used to predict fracture of a component or its design life in fatigue.

Thus, the modulus-time (cycles) response is found by integrating the equations as a coupled set, starting with E = Eo (the undamaged modulus) and D = 0 (no damage). Step through time (cycles), calculating the increments and current values of Ec and D, and use these to calculate their change in the next step. Eq. [3a] can now be adopted as the constitutive equation for fatigue, and empirical methods can be used to determine the functions f, g1 and g2. The State Variable Approach leads to the idea of a Mechanism Map or Damage Diagram, a versatile method of presenting both the results of the model and experimental data. The conceptual map displays the response (loss of stiffness or strength), identifies the mechanisms, and shows the extent of the damage for a given loading history (Fig. 9).
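The stepping procedure just described can be sketched as a forward-Euler integration. The forms of f, g1 and g2 below are hypothetical placeholders chosen only to make the sketch runnable: matrix cracking (D1) grows as a power of stress with an Arrhenius temperature factor and saturates; de-lamination (D2) is driven by the matrix crack density, which is the coupling; and the modulus falls linearly with each damage increment, so here f is written in terms of the damage rates. All constants are assumptions, not fitted values.

```python
import math

R = 8.314  # gas constant, J/(mol K)

def g1(sigma, T, D1, k1=1e-4, sigma_ref=500.0, p=4.0, D1_sat=1.0, Q=40e3, T_ref=293.0):
    """Hypothetical dD1/dN for matrix cracking: power law in stress, Arrhenius
    in temperature, saturating as D1 approaches D1_sat."""
    arrhenius = math.exp(-Q / R * (1.0 / T - 1.0 / T_ref))
    return k1 * (sigma / sigma_ref) ** p * arrhenius * (1.0 - D1 / D1_sat)

def g2(D1, D2, k2=2e-5, D1_sat=1.0):
    """Hypothetical dD2/dN for de-lamination, driven by matrix cracking (the coupling)."""
    return k2 * (D1 / D1_sat) * (1.0 - D2)

def f(E0, dD1, dD2, c1=0.2, c2=0.5):
    """Hypothetical dE/dN: each mechanism knocks the modulus down linearly."""
    return -E0 * (c1 * dD1 + c2 * dD2)

def integrate(sigma, T, E0=70.0, n_cycles=100_000, dN=100):
    """Forward-Euler integration of the coupled set, starting undamaged (E = E0, D = 0)."""
    E, D1, D2 = E0, 0.0, 0.0
    history = [(0, E, D1, D2)]
    for step in range(1, n_cycles // dN + 1):
        dD1, dD2 = g1(sigma, T, D1), g2(D1, D2)  # rates from the current state
        dE = f(E0, dD1, dD2)
        E, D1, D2 = E + dE * dN, D1 + dD1 * dN, D2 + dD2 * dN
        history.append((step * dN, E, D1, D2))
    return history

for N, E, D1, D2 in integrate(sigma=500.0, T=293.0)[::200]:
    print(f"N={N:>6d}  E={E:6.2f} GPa  D1={D1:.3f}  D2={D2:.3f}")
```

Fitting the constants of g1, g2 and f to measured stiffness-loss curves is the empirical step; the structure of the equations comes from the physical model.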

The State Variable Approach leads to the idea of a Mechanism Map or Damage Diagram, a versatile method of presenting both the results of the model and experimental data [12, 13]. The map displays the response (loss of stiffness), identifies the mechanisms, and shows the extent of the damage for a given loading history. Various failure modes spread, reducing the section until the fracture load exceeds the residual strength, culminating in a cascade of breaking fibers.

However, the model points to something else of the greatest value: it suggests the proper form that the constitutive equation should take. This physical-model-informed empiricism has led to the development of a new branch of mechanics called damage mechanics. There can be added complexity: spatial variation appears when stress, temperature or other field variables are non-uniform. While simple geometries can be treated analytically using, for example, the modelling tools of fracture (damage) mechanics, more complex geometries require discrete methods.

The finite element method is an example. Here the material is divided into cells, which respond to temperature, body forces and stress via constitutive equations, with the constraints of equilibrium, compatibility and continuity imposed at their boundaries. Internal material state variable formulations for constitutive laws are embedded in the finite element computations to give an accurate description of spatially varying behaviour. Ultimately, the aim is to develop a design tool that incorporates initial material variability and the operating environment to provide a “knock-down” factor f corresponding to a specified probability of failure Pf.

Critical aspects include:

1) understanding of the expected load and environment for a particular structure, based on a statistical description via a Monte Carlo simulation; and
2) development of a database of initial strength based on a Weibull distribution, and of residual (fatigue) strength evolution curves (the input) based on a stress analysis and structure evolution, to support the “informed empiricism” of a residual strength model.
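These two ingredients can be combined in a simple Monte Carlo load-strength interference calculation. The sketch below assumes a two-parameter Weibull distribution for initial strength (scale σ0, shape m) and a normal distribution for the service load; it estimates the probability of failure and a knock-down factor, defined here (as an assumption) as the strength quantile at the target Pf divided by σ0, and checks that against the closed-form Weibull quantile. Residual-strength evolution is not modelled, and all numbers are hypothetical.

```python
import math
import random

def weibull_knockdown(m, pf):
    """Closed-form knock-down: strength quantile at probability pf divided by the
    Weibull scale sigma0, from Pf = 1 - exp(-(s / sigma0)**m)."""
    return (-math.log(1.0 - pf)) ** (1.0 / m)

def monte_carlo(sigma0, m, load_mean, load_cov, pf_target=0.01, n=100_000, seed=1):
    """Sample assumed Weibull strengths and normal loads.
    Returns (empirical knock-down at pf_target, estimated probability of failure)."""
    rng = random.Random(seed)
    strengths = [rng.weibullvariate(sigma0, m) for _ in range(n)]  # scale, shape
    loads = [rng.gauss(load_mean, load_cov * load_mean) for _ in range(n)]
    knockdown = sorted(strengths)[int(pf_target * n)] / sigma0
    # Load-strength interference: failure when a sampled load exceeds a sampled strength
    pf = sum(load > s for load, s in zip(loads, strengths)) / n
    return knockdown, pf

print(weibull_knockdown(m=20, pf=0.01))
print(monte_carlo(sigma0=1000.0, m=20, load_mean=700.0, load_cov=0.1))
```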

In summary, a physical model serves to gain physical insight, to capture the material’s response in an equation or code, to predict material response under conditions not easily reproduced, and to allow extrapolation in time or temperature; constructing one requires identifying its desired inputs and outputs. The physical model is a transfer function: it transforms those inputs into outputs. When models are coupled, the outputs of one become the inputs to the next.

We need to know what the macroscopic variables and boundary conditions are: temperature, time, loads, etc. At the heart of the model lie the physical mechanisms of structural change. The important design issues should all be embedded in the same model of material and component behaviour, which must also include the dominant mechanisms of structural change over orders of magnitude of size and time. It should be self-evident that, to predict a result, the mechanism regime in which the component is operating must be known.

8 Multi-Scale Modelling and Computer Simulation

Modelling and simulation methods are becoming increasingly ambitious. On the one hand, there are calculations that aim at an increasingly precise and detailed description of material behaviour: the number of assumptions is minimised and empirical elements are replaced wherever possible. Improvements in detail yield improvements in accuracy and potentially provide the tools to resolve previously intractable problems. On the other hand, increasingly large and complex systems are being investigated, and these pose different challenges. The methods that are best for studies at the atomistic level are unlikely to be efficient for larger structures. This is because atomistic models for service performance are too firmly rooted in the underlying atomistic processes which, although understood, can be characterized only by microscopic measurements that are impractical for an engineer.

Furthermore, interpretation itself is difficult without other aids. There is a need to link experience at the macroscopic size with understanding at the micro-structural scale of the material. One way forward is to identify the broad rules governing material behaviour, and those governing the magnitudes of material properties, that are contained in the microscopic models, and to use them as the basis of a “model-informed” empiricism.

8.1 Analysis of a Stringer-Stiffened Panel

Fig. 10 presents a typical multi-scale challenge based on a hierarchical strategy: a stringer-stiffened panel fabricated from a textile composite. The details of the microstructure at either the fiber-matrix or the tow architectural scale, however, are too complex to analyse directly. Instead, micro-mechanical models are used at the micron scale to obtain effective fibre tow properties. Then a representative volume element model is used to obtain the effective properties of the textile architecture at that size scale. Inserting these values into a structural model identifies the “hot spots”, which are interrogated by reversing the process to determine whether the structure has to be re-designed or an alternative material system selected. Challenges include dealing with large macroscopic gradients, non-periodic loads (including transient loads), non-periodic microstructure, mesh generation and data management.

In Fig. 11, all of the pictures are synthesized from the same basic textile microstructure. The difference is the degree of repetition of the basic unit and how closely the material has to be modelled (for example, in the presence of large macroscopic strain gradients, a higher magnification is required). For coarse and fine microstructures the analyses involve discrete and homogenized modelling, respectively. What is less clear is how to handle the “transitional microstructure”, for which there is too much detail for discrete modelling but too little repetition of representative behaviour to use homogenisation. Options include homogenisation, selective homogenisation, macro elements (finite elements that allow complex variation of properties within an element), and global-to-local interfacing techniques, including one technique based on modal analysis. An aspect of multi-scale analysis often overlooked is the integration of different models.

A common characteristic of many analytical studies is the need to construct and manipulate related models, related in the sense that they share some characteristics. In other words, there is a clear hierarchy in which many properties are “passed on” via inheritance. The hierarchical analysis environment can be used in parametric studies of structures or situations in which the constitutive behaviour is defined in a hierarchical sense.
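The inheritance idea can be sketched with Python's `collections.ChainMap`, which looks a key up in a sequence of mappings and returns the first match. In this hypothetical example, model variants for a parametric study override only the properties they change and inherit the rest from a shared base; the property names, values and the hot/wet override set are illustrative assumptions.

```python
from collections import ChainMap

# Hypothetical shared base properties (GPa, dimensionless, degrees C)
base_material = {"E1": 140.0, "E2": 9.0, "G12": 4.6, "nu12": 0.3, "cure_temp": 180.0}
hot_wet = {"E2": 7.5, "G12": 3.8}  # hypothetical environmental knock-downs

# Lookups search the maps left to right, so earlier maps override later ones.
variant_a = ChainMap({}, base_material)                    # inherits everything
variant_b = ChainMap({"E1": 155.0}, hot_wet, base_material)

print(variant_a["E2"], variant_b["E2"], variant_b["E1"], variant_b["nu12"])
# Changing the shared base propagates to every model that inherits from it
base_material["nu12"] = 0.32
print(variant_a["nu12"], variant_b["nu12"])
```

Because the chain stores references rather than copies, a correction to the base data reaches every derived model automatically, which is what makes the hierarchy useful in parametric studies.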

Recent developments in multi-scale modelling strategies have led to the virtual mechanical testing of composite structures right up to the point of failure. In the virtual testing of realistic aerospace composite structures, however, finite element (FE) predictions have mostly been validated only for simple coupon and benchmark test specimens (Fig. 12).

The need for finite elements smaller than the process zone at a crack tip means elements less than 1 mm in size for typical resins. A mesh this fine is impractical for a realistic structure. Most codes contain a local/global strategy whereby an existing coarse (global) finite element model has an embedded refined (local) region. Courtesy of G A O Davies

The difficulty is simulating the correct failure mechanisms of realistic aerospace structures: for example, post-buckled compression panels, impact-damaged shells, major joints or any component with geometrical discontinuities. Commercial FE codes are powerful enough to capture the correct physics of the failure process. Some codes model the initiation of material failure followed by propagation to full structural failure.

Having an FE code is just the beginning: the modeller has to create an FE model capable of capturing the internal stress fields with the required accuracy. Assessing damage in sandwich structures under hard and soft impacts, for example, requires validated FE design tools, together with structural impact test data to validate the FE codes and support airworthiness certification.

Simulation is the study of the dynamic response of a modelled system subjected to inputs that simulate real events; it reveals ways in which complex structures evolve without the need to perform expensive, time-consuming experiments. This route leads to a quantitative prediction that represents the actual performance of the material, component or full-size structure across a broad spectrum of size (multi-scale), without the cost, time, accident risk, effort and repeatability problems normally associated with real testing. But how much detail of the failure mechanisms do we need to know for a successful physical model to be incorporated into a simulated virtual test that reproduces the outcome of a real structural situation? Creating physically sound damage simulations is difficult because damage initiation must first be predicted for parts containing no cracks.

Advances in computer power have made it possible to simulate materials by describing the motion of each atom, rather than making the approximation that matter is continuous. Most simulations of cracks, however, ignore the quantum-mechanical nature of the bonds between the atoms. This limitation can be overcome by a new technique called “Learn-on-the-fly” (LOTF), which uses a quantum-mechanical description of bonding near the crack tip, coupled almost seamlessly to a large (on the atomic scale) region described with an inter-atomic potential.

Developments in non-linear elements in computational mechanics have led to damage simulations of sufficient fidelity for engineering design. A key feature is the incorporation into a finite element formulation of elements that can explicitly represent the displacement discontinuities associated with cracks, so-called “cohesive elements”. These elements relate the displacement discontinuity across a crack to the tractions that act across it. Examples of such tractions include those exerted by fibrils in polymer craze zones, by fibres or ductile particles bridging a matrix crack, and by fiber-matrix slippage (friction) at interfaces following de-bonding. Cohesive elements can be formulated to admit cracks crossing any surface within the finite element, so that the developing crack path in a damage simulation need not be specified a priori; it can simply follow whatever locus the mechanics of the evolving failure process determines.
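The traction-separation relation of a cohesive element can be illustrated with the widely used bilinear law. In the sketch below, assumed for illustration and not necessarily the law used in the simulations cited, traction rises linearly to a peak σmax at opening δ0 and then softens linearly to zero at δf. The area under the curve is the fracture energy Gc = σmax δf / 2, which the numerical integration reproduces. Parameter values are hypothetical (MPa and mm, so Gc is in N/mm); unloading and crack-face contact are not modelled.

```python
def bilinear_traction(delta, sigma_max, delta_0, delta_f):
    """Traction for opening delta under monotonic loading: linear up to the peak
    sigma_max at delta_0, linear softening to zero at delta_f, separated beyond."""
    if delta <= 0.0:
        return 0.0  # closure/contact not modelled in this sketch
    if delta <= delta_0:
        return sigma_max * delta / delta_0
    if delta <= delta_f:
        return sigma_max * (delta_f - delta) / (delta_f - delta_0)
    return 0.0

def fracture_energy(sigma_max, delta_0, delta_f, n=100_000):
    """Area under the traction-separation curve by the trapezoidal rule."""
    h = delta_f / n
    t = [bilinear_traction(i * h, sigma_max, delta_0, delta_f) for i in range(n + 1)]
    return h * (sum(t) - 0.5 * (t[0] + t[-1]))

# Hypothetical interface: peak traction 30 MPa, delta_0 = 0.001 mm, delta_f = 0.02 mm
print(fracture_energy(30.0, 0.001, 0.02), 0.5 * 30.0 * 0.02)
```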

8.2 Simulation of a De-Lamination Crack Using a Cohesive Interface Model

A composite can be modelled by layered elements (homogeneous and orthotropic) whose properties (for example, stiffness) degrade by micro-cracking according to a continuum damage model. Micro-cracking is represented by a non-linear constitutive law, applied to each individual ply, that causes material degradation (“softening”). The co-existence (coupling) of discrete de-lamination cracking and continuum damage (matrix cracking) can then be captured via a cohesive interface model [15] (Fig. 13).

A realistic simulation of a de-lamination crack modelled by a Cohesive Zone idealization based on the Finite Element Method which computes stress distributions for generic geometry and loads. Courtesy of Brian Cox [15]

The ply degradation parameters in the model are internal state variables governed by damage evolution equations. Using the stress distributions computed by the cohesive zone finite element idealization for a generic geometry and applied load, a realistic model or simulation then predicts the effect of load, fiber orientation and stress concentrators upon damage and strength.
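A minimal sketch of a ply stiffness that degrades through an internal state variable, assuming a generic scalar (isotropic) damage law with exponential softening rather than the specific ply model of [15]. The class name `ScalarDamagePly` and the parameters `eps0` (damage onset strain) and `eps_s` (softening scale) are hypothetical; the history variable `kappa` makes the damage irreversible, so unloading follows the degraded secant stiffness.

```python
import math


class ScalarDamagePly:
    """Hypothetical 1D continuum damage model for a single ply direction.

    The internal state variable kappa (largest strain magnitude reached so far)
    drives the damage variable d, which degrades the modulus as E = (1 - d) * E0.
    """

    def __init__(self, e0, eps0, eps_s):
        self.e0 = e0        # undamaged modulus (Pa)
        self.eps0 = eps0    # strain at damage onset
        self.eps_s = eps_s  # softening strain scale: smaller values give faster damage growth
        self.kappa = eps0   # history variable, initialised at the onset threshold

    def damage(self):
        """Exponential softening law; zero until the onset strain is exceeded."""
        if self.kappa <= self.eps0:
            return 0.0
        return 1.0 - (self.eps0 / self.kappa) * math.exp(-(self.kappa - self.eps0) / self.eps_s)

    def stress(self, eps):
        """Update the history variable, then return the stress for strain eps."""
        self.kappa = max(self.kappa, abs(eps))  # damage never heals on unloading
        return (1.0 - self.damage()) * self.e0 * eps


ply = ScalarDamagePly(e0=9e9, eps0=0.005, eps_s=0.01)   # illustrative values
print(ply.stress(0.004))   # below onset: undamaged response
ply.stress(0.02)           # load well past onset
print(ply.damage())        # about 0.94
print(ply.stress(0.004))   # unloading: same strain, much lower stress
```

Once the onset strain is passed, the stress at the current maximum strain falls as E0 * eps0 * exp(-(kappa - eps0) / eps_s), which is the "softening" the text refers to.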

Strain energy concentrations correctly identify ply junctions as sites where de-lamination cracks initiate. Predicting crack propagation needs an accurate representation of the stress field ahead of the crack front, in the so-called “process zone”, which may be small. Because finite elements must be smaller than the crack-tip process zone, element sizes below 1 mm are needed for typical resins, and a mesh this fine is unrealistic for a true structure. Most codes therefore adopt a local/global strategy, in which a refined (local) region is embedded within an existing coarse (global) finite element model.
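The scale of the meshing problem can be estimated with a commonly quoted expression for the cohesive zone length, l_cz = M E G_c / t0^2, where M is a model-dependent factor of order unity (taken as 1 here). The material values below (transverse modulus 9 GPa, fracture energy 280 J/m^2, peak interlaminar traction 30 MPa) and the rule of thumb of at least three elements inside the cohesive zone are illustrative assumptions, not data from this article.

```python
import math


def cohesive_zone_length(e, gc, t0, m=1.0):
    """Estimated cohesive (process) zone length l_cz = M * E * Gc / t0**2, in metres."""
    return m * e * gc / t0**2


def max_element_size(e, gc, t0, n_elements=3, m=1.0):
    """Largest element size that still places n_elements inside the cohesive zone."""
    return cohesive_zone_length(e, gc, t0, m) / n_elements


# Illustrative (assumed) properties for a carbon/epoxy interface
E_TRANSVERSE = 9e9   # Pa
G_C = 280.0          # J/m^2
T0 = 30e6            # Pa

l_cz = cohesive_zone_length(E_TRANSVERSE, G_C, T0)
h_max = max_element_size(E_TRANSVERSE, G_C, T0)
# Interface elements needed to mesh one 1 m x 1 m ply interface at that size
n_interface = math.ceil(1.0 / h_max) ** 2
print(f"cohesive zone {l_cz * 1e3:.2f} mm, element <= {h_max * 1e3:.2f} mm, "
      f"{n_interface:,} elements per square metre of interface")
```

With these values the cohesive zone is about 2.8 mm long and elements must be no larger than about 0.93 mm, so a single 1 m by 1 m interface already needs over a million cohesive elements; this is why a local/global strategy is attractive.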

However, several difficulties remain before damage simulations of sufficient fidelity can replace qualification tests: (1) the implications, for correct mesh refinement, of the length scales associated with non-linear cohesive processes; (2) the calibration of cohesive traction-displacement laws to confirm a physically sound model of the particular crack-wake mechanism; and (3) instability in the numerical iteration of non-linear damage problems, which may have physical (not algorithmic) origins.

Damage growth mechanisms observed in laminates include: de-lamination and splitting (shear) cracks, which grow in various orientations and change shape with time; fiber rupture; fiber micro-buckling or kink formation; global buckling of de-laminated plies; and diffuse micro-cracking or shear damage within individual plies. With the exception of global buckling, these mechanisms can be represented by cohesive elements by collapsing the non-linear processes onto surfaces, with the physics embedded in a traction-displacement (rather than stress-strain) constitutive law.

The problem of suitable mesh refinement might be solved by reference to the length scales associated with cohesive laws. Calibration of traction-displacement laws requires a successful physical model and an appropriate set of experiments. This is not straightforward because different cohesive mechanisms often act simultaneously in a single crack, with relative magnitudes that depend on interaction effects with other cracks.
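To see why calibration is ambiguous, the sketch below assumes two mechanisms, a short-range matrix cohesive law and a long-range fibre-bridging law, acting in parallel and independently so that their tractions simply add. That is a simplification which ignores the interaction effects just mentioned. Both laws are triangular (linear softening) with invented parameters, and the elastic branch is omitted.

```python
def linear_softening(delta, t0, delta_f):
    """Triangular traction law: t0 at zero opening, falling linearly to zero at delta_f.

    The elastic (penalty) branch is omitted for brevity.
    """
    if delta < 0.0 or delta >= delta_f:
        return 0.0
    return t0 * (1.0 - delta / delta_f)


def combined_traction(delta, laws):
    """Total traction, assuming the mechanisms act in parallel and independently."""
    return sum(linear_softening(delta, t0, delta_f) for t0, delta_f in laws)


def total_energy(laws, delta_max, n=20_000):
    """Trapezoidal integral of the combined traction from 0 to delta_max (J/m^2)."""
    h = delta_max / n
    return sum(
        0.5 * (combined_traction(i * h, laws) + combined_traction((i + 1) * h, laws)) * h
        for i in range(n)
    )


# (peak traction in Pa, final opening in m) for a matrix law and a bridging law
split_a = [(30e6, 20e-6), (2e6, 500e-6)]   # 300 + 500 J/m^2
split_b = [(40e6, 20e-6), (1e6, 800e-6)]   # 400 + 400 J/m^2

for name, laws in (("A", split_a), ("B", split_b)):
    print(name, round(total_energy(laws, 1e-3)), combined_traction(100e-6, laws))
```

Both splits dissipate the same total of 800 J/m^2, yet at an opening of 0.1 mm the bridging traction differs by almost a factor of two. A measured fracture energy alone therefore cannot separate the contributions of the individual mechanisms.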

Designing such experiments is further complicated because traction laws must be deduced from measurements by inverse-problem methods, which are vulnerable to numerical noise. Instability can be dealt with in one of two ways: (1) run the complete simulation as a dynamic model; or (2) develop algorithms that stabilize the model by controlling local displacements.
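The noise sensitivity of the inverse problem can be seen in one widely used route, assumed here for illustration: for a pure mode I bridged crack, the traction law is the derivative of the measured J-integral with respect to the end opening, σ(δ) = dJ/dδ. The sketch generates synthetic J data from a known linear-softening law (2 MPa peak, 0.5 mm final opening, both invented), adds noise of 0.5 % of the plateau value, and differentiates with central differences over ±k samples.

```python
import numpy as np


def traction_from_j(delta, j, k=1):
    """Estimate sigma(delta) = dJ/d(delta) by central differences over +/- k samples.

    Points closer than k samples to either end are returned as NaN.
    """
    sigma = np.full(delta.shape, np.nan)
    sigma[k:-k] = (j[2 * k:] - j[:-2 * k]) / (delta[2 * k:] - delta[:-2 * k])
    return sigma


# Synthetic "experiment": known linear-softening bridging law (illustrative values)
t0, delta_f = 2e6, 500e-6                      # peak traction (Pa), final opening (m)
delta = np.linspace(0.0, 600e-6, 601)          # end openings sampled every 1 micrometre
sigma_true = np.where(delta < delta_f, t0 * (1.0 - delta / delta_f), 0.0)
j_true = np.where(delta < delta_f, t0 * (delta - delta**2 / (2.0 * delta_f)), 0.5 * t0 * delta_f)

rng = np.random.default_rng(0)
j_meas = j_true + rng.normal(0.0, 2.5, delta.shape)   # 0.5 % of the 500 J/m^2 plateau

# Compare away from the ends and from the kink at delta_f
interior = (delta > 50e-6) & (delta < 450e-6)


def relative_rms_error(k):
    est = traction_from_j(delta, j_meas, k)[interior]
    return float(np.sqrt(np.mean((est - sigma_true[interior]) ** 2)) / t0)


for k in (1, 25):
    print(f"k = {k:2d}: relative RMS traction error {relative_rms_error(k):.3f}")
```

With k = 1 the error in the recovered traction is of the same order as the peak traction itself, even though the noise on J is only 0.5 %. Widening the stencil to k = 25 suppresses the noise but would smear sharp features such as the kink at the final opening, which is why the comparison window excludes it.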

9 Assessment of the Health of a Structure

There is general acceptance that current practice in designing a damage tolerant structure is to take advantage of the heterogeneity of composite materials and to configure the material so that it withstands certain types of damage and naturally arrests their propagation; 3D fibre architectures [16,17,18] and resins modified with nano-particles [19,20,21,22] have been used in that effort. Yet this is a passive approach and is therefore subject to its own limitations. By contrast, structural health monitoring (SHM) of composite materials and structures [23,24,25] is an emerging technology that promises to enhance reliability and safety by ensuring early detection and monitoring of damage. Predictive capabilities enabling estimation of the residual stiffness and strength of a composite structure with a known state of damage are also emerging. It is envisioned that new strategies for designing damage resistant and damage tolerant composite materials and structures may become available if we first develop, and then synergistically combine, capabilities for in-service damage detection and characterization, health monitoring and structural prognosis. The rapid development of numerical codes and experimental techniques makes possible not only robust modelling for the design of advanced composites with improved behaviour in critical operational conditions, but also the establishment of sound, reliable SHM methods and strategies. The challenge will be to integrate the modelling of composite structural design, together with process modelling, with SHM and repair strategies. Moreover, SHM may also trigger a “self-healing” response to particular damage, preserving structural integrity at least for a given period of time.

Assuring the structural reliability of aircraft systems will greatly enhance confidence in their safety, reduce the probability of premature failures, and lower operation and maintenance costs. An important element in achieving reliable, low-maintenance-cost structural systems is the availability of scheduled and automated SHM inspections and assessments of the actual physical condition of critical structural components; these could modify or eliminate current preventive maintenance practices, with the envisioned goal of on-line, predictive, condition-based maintenance. To this end, significantly improved techniques for inspection, analysis and interpretation are urgently needed to assess the health of a structure and to promote the design, fabrication and reliable operation of current and future aircraft systems.

Enhancing damage tolerance in composites through structural monitoring [26,27,28] is a multi-disciplinary effort involving composite mechanics, fracture mechanics, computational mechanics, structural dynamics, the development of new actuators and sensors, signal processing and interpretation, system integration, and so forth. Successful cross-fertilization of these tasks will lead to: (1) revolutionary design and manufacturing concepts and analyses for the advanced, ultra-reliable, damage tolerant composite structures of the new century; (2) theoretical and computational models for predicting actuator/sensor performance, structural integrity and damage detection capabilities, enabling composite structural prognosis; and (3) self-healing strategies.

10 Difficult Challenges Ahead

There are difficult challenges ahead. In present design, “no-growth” damage criteria are applied, and post-buckling design has not yet been explored in composite wings. The problem is how to develop a predictive damage initiation model that also describes damage evolution while guaranteeing structural integrity. In all design issues, the sites and mechanisms of crack initiation and the processes of damage growth must be embedded in the same model of material and component behaviour, together with recognition of the dominant (that is, most critical) mechanism(s) over orders of magnitude of size.

Other issues that require solving include: modelling progressive fibre fracture and integrating it within the various models; formulating a single design equation that combines continuum and discrete damage representations; increasing automation to ease product manufacture and reduce costs; and accelerating the development of potentially high-volume markets.

There are many questions yet unanswered. For example, how much detail of failure mechanisms do we need to know for a successful model to be incorporated into a simulated virtual test that reproduces the outcome of a real test? How frequently do we need to use non-destructive evaluation (NDE) to inspect critical components for cracks? What decision do we make if we find a crack that may or may not turn out to be innocuous or sub-critical? How do we deal with the possibility of a large crack being missed during inspection?

To answer these questions, monitoring and managing the structural health of the composite is essential. Benefits include improved reliability and safety, enhanced performance and operation, the potential for automated unsupervised monitoring, and reduced life-cycle cost. Structural health monitoring (SHM) is the “process of implementing a damage identification strategy”: observing a structure over time using periodically spaced measurements, extracting damage-sensitive features from these measurements, and analysing these features to determine the current state of system health.
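The feature-extraction and analysis steps of this definition can be sketched with a simple outlier-detection scheme, one of several possible choices. The features are assumed to be the first three natural frequencies (hypothetical values), a baseline set is recorded in the healthy state, and the damage index is the Mahalanobis distance of a new measurement from that baseline. The 99th-percentile alarm threshold and the 1 % frequency drop used to mimic damage are illustrative assumptions.

```python
import numpy as np


def mahalanobis_index(baseline, features):
    """Damage index: Mahalanobis distance of feature vector(s) from the baseline set."""
    mean = baseline.mean(axis=0)
    cov_inv = np.linalg.inv(np.cov(baseline, rowvar=False))
    diff = np.atleast_2d(features) - mean
    return np.sqrt(np.einsum("ij,jk,ik->i", diff, cov_inv, diff))


rng = np.random.default_rng(1)
nominal = np.array([45.0, 120.0, 210.0])                 # hypothetical modal frequencies (Hz)
baseline = nominal + rng.normal(0.0, 0.1, (200, 3))      # healthy state with measurement scatter

# Alarm threshold: 99th percentile of the baseline's own damage indices
threshold = np.quantile(mahalanobis_index(baseline, baseline), 0.99)

healthy_now = nominal + rng.normal(0.0, 0.1, 3)
damaged_now = nominal * np.array([1.0, 0.99, 1.0]) + rng.normal(0.0, 0.1, 3)  # 1 % drop in mode 2

for label, x in (("healthy", healthy_now), ("damaged", damaged_now)):
    index = mahalanobis_index(baseline, x)[0]
    print(f"{label}: index {index:.1f}, alarm {index > threshold}")
```

Because the Mahalanobis distance accounts for the scatter and correlation of the baseline, a shift that is small in absolute terms (about 1.2 Hz here) stands out clearly against healthy scatter of about 0.1 Hz. In practice the baseline must also span environmental conditions, such as temperature, that shift the same features.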

Implementing SHM technology requires distributed, permanently installed sensors at critical structural points, together with diagnostic algorithms that extract health information from the sensing data. The objective is structural condition assessment and prediction of remaining service life. Compared with conventional inspection techniques for detecting structural failure, the maintenance benefits of SHM systems include the ability to identify the onset of damage, to define immediate remedial strategies, and to predict the remaining life of the structure.
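Prediction of remaining service life can be illustrated, under strong simplifying assumptions, by fitting a linear trend to a monitored damage index and extrapolating it to a critical value. The helper `remaining_life`, the use of flight hours, the critical index and the linear trend are all hypothetical; in practice a physics-based damage growth law and an uncertainty estimate would replace the straight-line fit.

```python
import numpy as np


def remaining_life(hours, index, critical_index):
    """Hours left until a straight line fitted to the damage index reaches critical_index.

    Hypothetical helper: a linear trend is the simplest possible prognostic model.
    """
    slope, intercept = np.polyfit(hours, index, 1)
    if slope <= 0.0:
        return float("inf")                     # no growth trend detected
    hours_at_critical = (critical_index - intercept) / slope
    return max(hours_at_critical - hours[-1], 0.0)


hours = np.arange(0.0, 1000.0, 50.0)            # inspection record, 0 to 950 h
index = 1.0 + 0.002 * hours                     # idealised, noise-free damage index
print(remaining_life(hours, index, critical_index=4.0))   # about 550 h
```

For an index rising from 1.0 at 0.002 per hour and sampled up to 950 h, the critical value of 4.0 is reached at 1500 h, leaving about 550 h. With noisy data the same fit would need a confidence band before it could support a maintenance decision.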

Implementing SHM can lead to less conservative designs that minimize structural weight, shorten design cycle time, optimize structural component testing and, overall, improve the performance of the system. Passive SHM systems operate by monitoring environmental changes (e.g. loads and impacts), interrogating local failure, and identifying the location and magnitude of damage. Active SHM systems use transducers that act both as actuators (transmitters) and as sensors (receivers) to monitor environmental changes (e.g. loads and impacts), interrogate local failure, and identify the location and magnitude of damage.
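For a passive system, an impact can be located from the times at which the resulting stress wave reaches several sensors. The sketch below assumes four sensors at the corners of a 0.5 m square panel, a single direction-independent wave speed (real laminates are anisotropic and guided waves are dispersive), and arrival times already picked from the signals. It searches a grid for the point whose predicted arrival-time differences best match the measured ones; using differences relative to one sensor removes the unknown impact time.

```python
import numpy as np


def locate_impact(sensors, arrivals, speed, size, n=201):
    """Grid search for the impact point on a size x size panel (metres).

    Assumes a single, direction-independent wave speed. Differences relative to
    sensor 0 are used, so the unknown impact time cancels out.
    """
    xs = np.linspace(0.0, size, n)
    gx, gy = np.meshgrid(xs, xs, indexing="ij")
    # Distance from every grid point to every sensor, shape (n, n, n_sensors)
    dist = np.hypot(gx[..., None] - sensors[:, 0], gy[..., None] - sensors[:, 1])
    predicted = dist / speed
    pred_dt = predicted[..., 1:] - predicted[..., :1]
    meas_dt = arrivals[1:] - arrivals[0]
    cost = np.sum((pred_dt - meas_dt) ** 2, axis=-1)
    i, j = np.unravel_index(np.argmin(cost), cost.shape)
    return xs[i], xs[j]


sensors = np.array([[0.0, 0.0], [0.5, 0.0], [0.0, 0.5], [0.5, 0.5]])   # panel corners (m)
speed = 1500.0                                   # assumed wave speed (m/s)
impact = np.array([0.2, 0.35])                   # synthetic "true" impact point (m)
arrivals = 1e-3 + np.hypot(*(sensors - impact).T) / speed   # arrival times (s)

print(locate_impact(sensors, arrivals, speed, size=0.5))
```

Estimating the magnitude of the damage would need additional features, such as signal energy, which this sketch does not attempt.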

11 Concluding Remarks

Composite materials are increasingly used in aerospace applications, and design and analysis methods are still based on well-established design practices. “Black metal design” still seems to be the common term. Analyses that account for material and geometric non-linearities are not yet accepted as a common method for composite structures. No-growth damage criteria are still applied, while post-buckling design has not yet been explored in composite wings. Bolted patch repair has become accepted as common practice for military and civil platforms, and there remains a limitation on operational strains. The dilemma is that conventional analysis methods developed for metallic structures might be too conservative for composite structures.

A major difficulty in designing composite structures is how to predict damage initiation, damage evolution and the safe operating limits that ensure structural integrity. Our comprehension of structural changes in composite materials, which take place continuously and cumulatively, simply lacks detail. To predict a result by a numerical method, say a lifetime or a stress response, it is self-evident that the mechanism regime in which the component operates must first have been identified. In other words, the important design issues must all be embedded in the same model of material and component behaviour, one that also includes the dominant mechanism(s) of structural change over orders of magnitude of size and time. This remains a difficulty.

Critical design issues that relate to anisotropy effects should take into account toughness and its manifestation in notch sensitivity. Weaknesses caused by anisotropy, such as de-lamination, transverse ply cracking and de-bonding at interfaces, should be identified. Mechanisms that govern inelastic strains, redistribute stresses and diminish peak stress magnitudes should be understood.

Damage tolerance certification requires time-consuming, expensive testing. The consequences of changing composite lay-up and textile architecture are unpredictable, so the complete test matrix for materials qualification would have to be repeated at the coupon level. In the design process, a key role has to be played by certain physical length scales of the failure process, which arise from de-lamination and splitting (shear) cracks, fibre rupture, fiber micro-buckling or kink formation, and diffuse micro-cracking or shear damage. Together, these length scales provide a rationale for making modelling decisions.

With the advent of powerful computers and software that can be purchased at reasonable cost, many of the physical models and computer simulations that would be cumbersome for design engineers to use could be implemented as user-friendly computer applications or integrated within commercial finite element design systems. In this respect, mathematical challenges include hierarchical meshing strategies, which must be coarse enough at the largest scale (the entire structure) while cascading down through finer and finer meshes to the atomic scale (if necessary). The real challenge is to formulate design equations that combine continuum (spatially averaged) and discrete damage representations through physical (mechanism) models in a single calculation. At the heart of the model lie those mechanism(s) best identified by direct observation.

Successful implementation of physical models or simulations requires knowledge of the relevant phenomena, such as impact. Multi-scale modelling is needed because impact damage is localised and requires fine-scale modelling of failure processes at the micro level, whereas the structural length scales are much larger. One solution is to use meso-scale models based on continuum damage mechanics (CDM) in explicit FE codes, which provide a framework within which failure processes may be modelled. Key issues currently under investigation include: the development of constitutive laws for modelling failure mechanisms; models for folding and collapse in composite sandwich cores; and the implementation of material models in FE codes.