Abstract

We consider the convex quadratic optimization problem in \(\mathbb {R}^{n}\) with indicator variables and arbitrary constraints on the indicators. We show that a convex hull description of the associated mixed-integer set in an extended space with a quadratic number of additional variables consists of an \((n+1) \times (n+1)\) positive semidefinite constraint (explicitly stated) and linear constraints. In particular, convexification of this class of problems reduces to describing a polyhedral set in an extended formulation. While the vertex representation of this polyhedral set is exponential and an explicit linear inequality description may not be readily available in general, we derive a compact mixed-integer linear formulation whose solutions coincide with the vertices of the polyhedral set. We also give descriptions in the original space of variables: we provide a description based on an infinite number of conic-quadratic inequalities, which are “finitely generated.” In particular, it is possible to characterize whether a given inequality is necessary to describe the convex hull. The new theory presented here unifies several previously established results, and paves the way toward utilizing polyhedral methods to analyze the convex hull of mixed-integer nonlinear sets.

Similar content being viewed by others

1 Introduction

Given a symmetric positive semidefinite matrix \(Q\in \mathbb {R}^{n\times n}\), vectors \(a,b\in \mathbb {R}^n\) and set \(Z\subseteq \{0,1\}^n\), consider the mixed-integer quadratic optimization (MIQO) problem with indicator variables

and the associated mixed-integer nonlinear set

where \(\varvec{e}\) denotes a vector of ones, and \(x\circ (\varvec{e}-z)\) is the Hadamard product of vectors x and \(\varvec{e}-z\). There has recently been an increasing interest in problem (1) due to its statistical applications: the nonlinear term (1b) is used to model a quadratic loss function, as in regression, while Z represents logical conditions on the support of the variables x. For example, given model matrix \(F \in \mathbb {R}^{m\times n}\) and responses \(\beta \in \mathbb {R}^m\), setting \(a=-\beta ^\top F\), \(Q=F^\top F\), \(b=0\) and \(Z=\left\{ z\in \{0,1\}^n:\sum _{i=1}^nz_i\le r\right\} \) in (1) is equivalent to the best subset selection problem with a given cardinality r [10, 16]:

Other constraints defining Z that have been considered in statistical learning applications include multicollinearity [10], cycle prevention [28, 30], and hierarchy [12]. Set X arises as a substructure in many other applications, including portfolio optimization [13], optimal control [21], image segmentation [26], signal denoising [9].

A critical step toward solving MIQO effectively is to convexify the set X. Indeed, the mixed-integer optimization problem (1) is equivalent to the convex optimization problem

where \({\text {conv}}(X)\) denotes the convex hull of X and \(\text {cl conv}(X)\) is the closure of \({\text {conv}}(X)\). However, problem MIQO is \(\mathcal{N}\mathcal{P}\)-hard even if \(Z=\{0,1\}^n\) [15]. Thus, a simple description of \(\text {cl conv}(X)\) is, in general, not possible unless \(\mathcal{N}\mathcal{P}\)= Co-\(\mathcal{N}\mathcal{P}\).

In practice, one aims to obtain a good convex relaxation of X, which can then be used either as a standalone method (as is pervasively done in the machine learning literature), to obtain high-quality solutions via rounding, or in a branch-and-bound framework. Nonetheless, it is unclear how to determine whether a given relaxation is good or not. In mixed-integer linear optimization, it is well-understood that facet-defining inequalities give strong relaxations. However, in MIQO (and, more generally, in mixed-integer nonlinear optimization problems), \(\text {cl conv}(X)\) is not a polyhedron and there is no consensus on how to design good convex relaxations, or even what a good relaxation should be.

An important class of convex relaxations of X that has received attention in the literature is obtained by decomposing matrix \(Q=\sum _{i=1}^\ell \Gamma _i+R\), where \(\Gamma _i\succeq 0\), \(i=1,\dots ,\ell \), are assumed to be “simple" and \(R\succeq 0\). Then

and each constraint \(\tau _i\ge x^\top \Gamma _i x\) is replaced with a system of inequalities describing the convex hull of the associated “simple" mixed-integer set. This idea was originally used in [19], where \(\ell =n\), \((\Gamma _i)_{ii}=d_i>0\) and \((\Gamma _i)_{jk}=0\) otherwise, and constraints \(\tau _i\ge d_i x_i^2\) are strengthened using the perspective relaxation [1, 18, 22], i.e., reformulated as \(z_i\tau _i\ge d_i x_i^2\). Similar relaxations based on separable quadratic terms were considered in [17, 35]. A generalization of the above approach is rank-one decomposition, which lets \(\Gamma _i=h_ih_i^\top \) be a rank-one matrix [5, 6, 33, 34]; in this case, letting \(S_i=\left\{ i\in [n]:h_i\ne 0\right\} \), constraints \(\left( \sum _{j\in S_i}z_j\right) \tau _i\ge (h_i^\top x)^2\) can be added to the formulation. Alternative generalizations of perspective relaxation that have been considered in the literature include exploiting substructures based on \(\Gamma _i\) where non-zeros are \(2\times 2\) matrices [4, 7, 8, 20, 24, 27] or tridiagonal [29].

Convexifications based on decomposition (3) have proven to be strong computationally, and are attractive from a theoretical perspective. The fact that a given formulation is ideal for the substructure \(\tau _i \ge x^\top \Gamma _ix\) lends some theoretical weight to the strength of the convexification. However, approaches based on decomposition (3) have fundamental limitations as well. First, they require computing the convex hull description of a nonlinear mixed-integer set to establish (theoretically) the strength of the relaxation, a highly non-trivial task that restricts the classes of matrices \(\Gamma _i\) that can be used. Second, even if the ideal formulation for the substructure \(\tau _i \ge x^\top \Gamma _i x\) is available, the convexification based on such decomposition can still be a poor relaxation of X—and there is currently no approach to establish the strength of the relaxation without numerical computations. Third, it is unclear whether the structure of the relaxations induced by (3) matches the structure of \(\text {cl conv}(X)\), or if they are overly simple or complex.

1.1 Contributions and outline

In this paper, we close the aforementioned gaps in the literature by characterizing the structure of \(\text {cl conv}(X)\). First, in Sect. 2, we review relevant background for the paper. In Sect. 3, we show that \(\text {cl conv}(X)\) can be described in a compact extended formulation with \({\mathcal {O}}(n^2)\) additional variables with linear constraints and an \((n+1) \times (n+1)\) positive semidefiniteness constraint. In particular, convexification of X in this extended formulation reduces to describing a base polytope. We use the vertex description of this base polytope, which is exponential in general. However, we show that the set of vertices can be represented as the feasible points of a compact mixed-integer linear formulation (Sect. 5). In Sect. 4, we characterize \(\text {cl conv}(X)\) in the original space of variables. While the resulting description has an infinite number of conic quadratic constraints, we show that \(\text {cl conv}(X)\) is finitely generated, and thus we establish which inequalities are necessary to describe \(\text {cl conv}(X)\)—in precisely the same manner that facet-defining inequalities are required to describe a polyhedron. We also establish a relationship between \(\text {cl conv}(X)\) and relaxations obtained from decompositions (3). In Sect. 5, we present a mixed-integer linear formulation of the MIQO problem using the theoretical results in Sect. 3. Finally, in Sect. 6 we conclude the paper with a few remarks.

We point out that, using standard disjunctive programming techniques [14, 20], it is possible to obtain a conic quadratic extended formulation of (1), although such representation typically requires adding \({\mathcal {O}}(|Z|n)\) number of variables and \({\mathcal {O}}(|Z|)\) nonlinear constraints. Since |Z| is often exponential in n, these formulations are in general impractical, and therefore their use has been restricted to small instances with \(n\le 2\) [4, 7, 20, 22, 24] or problems with special structures that admit a compact representation [23]. We argue that the convexifications in this paper are significantly more tractable: regardless of Z, we require only \({\mathcal {O}}(n^2)\) variables instead of \({\mathcal {O}}(|Z|n)\), and only one nonlinear conic constraint instead of \({\mathcal {O}}(|Z|)\). The major complexity of the proposed formulations in this paper is the exponential number of linear inequalities, which can be generated, as needed, using mature mixed-integer linear optimization techniques.

2 Notation and preliminaries

In this section, we first review the relevant background and introduce the notation used in the paper.

Definition 1

([31]) Given a matrix \(W\in \mathbb {R}^{p\times q}\), its pseudoinverse \(W^\dagger \in \mathbb {R}^{q\times p}\) is the unique matrix satisfying the four properties:

Clearly, if W is invertible, then \(W^{-1}=W^\dagger \). It also readily follows from the definition that \((W^\dagger )^\dagger =W\).

We recall the generalized Schur complement, relating pseudoinverses and positive semidefinite matrices.

Lemma 1

([3]) Let \(W= \begin{pmatrix}W_{11}&{}W_{12}\\ W_{12}^\top &{} W_{22}\end{pmatrix}\), with symmetric \(W_{11}\in \mathbb {R}^{p\times p}\), symmetric \(W_{22}\in \mathbb {R}^{q\times q}\), and \(W_{12}\in \mathbb {R}^{p\times q}\). Then \(W\succeq 0\) if and only if \(W_{11}\succeq 0\), \(W_{11}W_{11}^\dagger W_{12}=W_{12}\) and \(W_{22}-W_{12}^\top W_{11}^\dagger W_{12}\succeq 0\).

Note that if \(W_{11}\succ 0\), then the second condition of Lemma 1 is automatically satisfied. Otherwise, this condition is equivalent to the system of equalities \(W_{11}U=W_{12}\) having a solution \(U\in \mathbb {R}^{p\times q}\).

Let \([n]=\{1,\dots ,n\}\). Throughout, we use the convention that \(x_i^2/z_i=0\) if \(x_i=z_i=0\) and \(x_i^2/z_i=+\infty \) if \(z_i=0\) and \(x_i\ne 0, i\in [n]\). For a vector \(a\in \mathbb {R}^{n}\), \(\Vert a\Vert _2\) and \(\Vert a\Vert _{\infty }\) denote the vector \(\ell _2\)-norm and the maximum absolute value among \(a_i\)’s, respectively. Given two matrices V, W of matching dimensions, let \(\langle V,W\rangle =\sum _{i}\sum _jV_{ij}W_{ij}\) denote the usual inner product. Given a matrix \(W\in \mathbb {R}^{n \times n}\), let \(\text {Tr}(W)=\sum _{i=1}^nW_{ii}\) denote its trace, and let \(W^{-1}\) denote its inverse, if it exists. Let \(\Vert W\Vert _{F}\) and \(\Vert W\Vert _{\max }\)denote the Frobenius norm and the maximum absolute value of entries of W respectively, and \(\lambda _{\max }(W)\) means the maximum eigenvalue of W. We let \(\textrm{col}(W)\) denote the column space of matrix W. Given a matrix \(W\in \mathbb {R}^{n\times n}\) and \(S\subseteq [n]\), let \(W_S\in \mathbb {R}^{S\times S}\) be the submatrix of W induced by S, and let \({\hat{W}}_S\in \mathbb {R}^{n\times n}\) be the \(n\times n\) matrix obtained from \(W_S\) by filling the missing entries with zeros, i.e., matrices subscripted by S without “hat" refer to the lower-dimensional submatrices. For any two sets \(S, T \subset [n]\), let \(W_{S,T}\) denote the submatrix of W with rows in S and columns in T. Note that if matrix \(W\succ 0\), then it can be easily be verified from Definition 1 that the submatrix of \({\hat{W}}_S^{\dagger }\) indexed by S coincides with \(W_S^{-1}\), and \({\hat{W}}_S^{\dagger }\) is zero elsewhere; in this case, we abuse notation and write \({\hat{W}}_S^{-1}\) instead of \({\hat{W}}_S^{\dagger }\). Given \(S\subseteq [n]\), let \({\hat{\varvec{e}}}_S\in \{0,1\}^n\) be the indicator vector of S. We define \(\pi _S\) as the projection onto the subspace indexed by S and \(\pi _{S}^{-1}(x)\) as the preimage of x under \(\pi _S\).

Example 1

Let \(Q=\begin{pmatrix} d_1&{} b\\ b &{}d_2 \end{pmatrix}\) with \(d_1,d_2>0\) and \(d_1d_2>b^2\). Then

3 Convexification in an extended space

In this section, we describe \(\text {cl conv}(X)\) in an extended space. In Sect. 3.1, we provide a “canonical" representation of \(\text {cl conv}(X)\) under the assumption that \(Q\succ 0\). In Sect. 3.2, we provide alternative representations of \(\text {cl conv}(X)\), which can handle non-invertible matrices Q and may also lead to sparser formulations.

3.1 Canonical representation

Given \(Q\succ 0\), define the polytope \(P\subseteq \mathbb {R}^{n+n^2}\) as

Proposition 1 below shows how to construct mixed-integer conic formulations of MIQO using polytope P.

Proposition 1

If \(Q\succ 0\), then the mixed-integer optimization model

is a valid formulation of problem (1).

Proof

Consider a point (x, z, t, W) satisfying constraints (4b), (4c) with \(z={\hat{\varvec{e}}}_S\) for some \(\hat{\varvec{e}}_S\). Constraint (4c) is satisfied if and only if \(W={\hat{Q}}_S^{-1}\). Therefore, constraint (4b) reduces to

Since the pseudoinverse of matrix \(W=\begin{pmatrix} Q_S^{-1} &{} \textbf{0}\\ \textbf{0} &{} \textbf{0}\end{pmatrix}\) is \(W^\dagger =\begin{pmatrix} Q_S &{} \textbf{0}\\ \textbf{0} &{} \textbf{0}\end{pmatrix}\), we find from Lemma 1 that constraint (4b) is satisfied if and only if:

-

\(W\succeq 0\), which is automatically satisfied.

-

\( WW^\dagger x=x\Leftrightarrow \begin{pmatrix} I &{} \textbf{0}\\ \textbf{0} &{} \textbf{0}\end{pmatrix}\begin{pmatrix}x_S\\ x_{[n]{\setminus } S}\end{pmatrix}=\begin{pmatrix}x_S\\ x_{[n]{\setminus } S}\end{pmatrix}\Leftrightarrow x_{[n]{\setminus } S}=0.\) Thus, condition \(WW^\dagger x=x\) simply enforces the complementarity constraints \(x\circ (\varvec{e}-z)=0\).

-

\(t\ge x^\top W^\dagger x\Leftrightarrow t\ge x_S^\top Q_Sx_S\), which is precisely the nonlinear constraint defining set X.

Now, it is clear that for any (x, z, t, W) satisfying constraints (4b), (4c), (4d), it holds \((x, z, t) \in X\). On the other hand, for any \((x, z, t) \in X\) with \(z = {\hat{\varvec{e}}}_S\) for some \(S \subset [n]\), we can always let \(W = {\hat{Q}}_{S}^{-1}\) and similarly, (x, z, W, t) satisfies constraints (4b), (4c), (4d). \(\square \)

Note that condition \(WW^\dagger x=x\) is used to enforce the complementarity constraints. We point out that a similar idea was recently used in the context of low-rank optimization [11].

Now consider the convex relaxation of (4), obtained by dropping the integrality constraints \(z\in \{0,1\}^n\):

Theorem 1

Let Q be a positive definite matrix. Then

Consequently, that problem (5) has an optimal solution integral in z.

Proof

First observe that constraints (4b),(4c) define a closed convex set. Projecting out variable t, we find that problem (5) reduces to

Note that this formulation uses the pseudoinverse of a matrix of variables. Observe that we omit the constraint \(W\succeq 0\). Since every extreme point \(({\bar{z}}, {\bar{W}})\) of P satisfies \({\bar{W}}\succeq 0\), it follows \((z,W)\in P\) already implies \(W\succeq 0\).

We argue that for any fixed \((z, W)\in P\), setting \(x=-Wa\) is optimal for (6). Using equality (6b), we replace the term \(a^\top x\) in the objective with \(a^\top WW^\dagger x\). Since the problem is convex in x, from KKT conditions we find that any point x satisfying

is optimal. In particular, setting \(x=-Wa\), we find that (7b) is satisfied with \(\lambda =0\), and (7a) is satisfied since \(W W^\dagger x=- W W^\dagger Wa=- Wa=x\).

Substituting \(x=-Wa\) in the relaxed problem, we obtain

Since the objective \(-\frac{1}{2} \langle aa^\top ,W\rangle +b^\top z\) is linear in (z, W) and P is a polytope, there exists an optimal solution \((z^*,W^*)\) that is an extreme point of P, and in particular there exists \({\hat{\varvec{e}}}_{S} \in Z\) such that \(z^*={\hat{\varvec{e}}}_S\) and \(W^*={\hat{Q}}_S^{-1}\). \(\square \)

Remark 1

The convexification for the case where Q is tridiagonal [29] is precisely in the form given in Theorem 1, where the polyhedron P is described with a compact extended formulation. \(\square \)

3.1.1 Bivariate quadratic functions

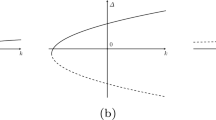

Consider set

where \(d_1d_2>1, d_1,d_2 > 0\). Set \(X_{2\times 2}\) corresponds (after scaling) to a generic strictly convex quadratic function of two variables. We now illustrate Theorem 1 by computing an extended formulation of \(\text {cl conv}(X_{2\times 2})\), that is, for \(Q= \begin{pmatrix}d_1&{}-1\\ -1&{}d_2\end{pmatrix}\). Let \(\Delta :=d_1d_2-1 >0\) be the determinant of Q.

Proposition 2

The closure of the convex hull of \(X_{2\times 2}\) is

Proof

Polyhedron P is the convex hull of the four points given in Table 1.

Note that equalities \(W_{11}=\frac{1}{d_1}(z_1+W_{12})\) and \(W_{22}=\frac{1}{d_2}(z_2+W_{12})\) are valid. Letting \(w=W_{12}\) and projecting out variables \(W_{11}\) and \(W_{22}\), we find that

Also note that \(w = \frac{1}{\Delta } \min \{z_1, z_2\}\), and the convex hull of \(\big \{(z_1, z_2, w) \in \{0,1\}^2 \times \mathbb {R}\; | \; w = \frac{1}{\Delta } \min \{z_1, z_2\} \big \}\) is described by the following inequalities:

Then, (9) and (10) describe the polyhedron P. \(\square \)

Conic quadratic disjunctive programming representations of \(\text {cl conv}(X_{2\times 2})\) have been used in the literature [4]; explicit representations of \(\text {cl conv}\left( X_{2\times 2}\cap \{(x,z,t):x\ge 0\}\right) \) in the original space of variables have been given [8, 24], and descriptions of the rank-one case \(d_1d_2=1\) were given in [5]. A description of \(\text {cl conv}\left( X_{2 \times 2} \cap \{(x,z,t):\ell \le x \le u\}\right) \) in a conic quadratic extended formulation is given in [20] via disjunctive programming. This formulation can be easily adapted to the case with no bounds (considered here), and requires three additional variables and three conic quadratic constraints. In Proposition 2, we give an alternative description of \(\text {cl conv}(X_{2 \times 2})\) using three additional variables, a compact \(3 \times 3\) positive semidefinite constraint, and linear inequalities.

Remark 2

Since P is not full-dimensional, we require only one additional variable w (instead of three) for conic representation of \(\text {cl conv}(X_{2\times 2})\) via the constraints \(0\le z\le 1\), (10), and

\(\square \)

Remark 3

The matrix representation (9) suggests an interesting connection between \(\text {cl conv}(X_{2\times 2})\) and McCormick envelopes. Indeed, from Table 1, we see that

Moreover, the usual McCormick envelopes of the bilinear term \(z_1z_2\), given by \(\max \{0,-1+z_1+z_2\}\le z_1z_2\le \min \{z_1,z_2\}\), are sufficient to characterize the convex hull. \(\square \)

3.1.2 Quadratic functions with “choose-one" constraints

Given \(Q\succ 0\), consider set

Set \(X_{C1}\) arises, for example, in regression problems with multicollinearity constraints [10]: given a set J of features that are collinear, constraints \(\sum _{i\in J}z_i\le 1\) are used to ensure that at most one such feature is chosen.

The closure of the convex hull of \(X_{C1}\) is [see, e.g., 20, 33]

We now give an alternative derivation of this result using our technique. Polyhedron P is the convex hull of \(n+1\) points: point (0, 0) and points \(\{({\hat{\varvec{e}}}_{\{i\}},{\hat{Q}}_{\{i\}}^{-1})\}_{i=1}^n\). It can easily be seen that P is described by constraints \(W_{ij}=0\) whenever \(i\ne j\), \(W_{ii}=z_i/Q_{ii}\) for \(i\in [n]\), and constraints \(z\ge 0\), \(\sum _{i=1}^n z_i\le 1\). In particular, constraint (4b) reduces to

which by Lemma 1 is equivalent to

and \(x_i = 0\) if \(z_i/Q_{ii}=0, \; \forall i \in [n]\). Note that the second condition is the complementarity constraint, which is already included in the constraint \(t\ge \sum _{i=1}^n Q_{ii}x_i^2/z_i\) (since \(z_i = 0\) and \(x_i > 0\) implies \(\frac{x_i^2}{z_i} = + \infty \)).

3.2 Factorable representation

A (possibly low-rank) matrix \(Q\in \mathbb {R}^{n\times n}\) is positive semidefinite if and only if there exists some \(F\in \mathbb {R}^{n\times k}\) such that \(Q=FF^\top \). Then, letting \(u=F^\top x\), one can rewrite \(x^\top Qx\) as \(x^\top FF^\top x=u^\top u\). Matrix F may be immediately available when formulating the problem, or may be obtained through a Cholesky decomposition or eigendecomposition of Q. Such a factorization is often employed by solvers, since it results in simpler (separable) nonlinear terms, and in many situations matrix F is sparse as well. In this section, we discuss representations of \(\text {cl conv}(X)\) amenable to such factorizations of Q. While the proofs of the propositions of this section are similar to those in Sect. 3.1, additional care is required to handle unbounded problems (1) arising from a rank-deficient Q.

Given \(F\in \mathbb {R}^{n\times k}\), define \(F_S\in \mathbb {R}^{S\times k}\) as the submatrix of F corresponding to the rows indexed by S, and let \({\hat{F}}_S\in \mathbb {R}^{n\times k}\) be the matrix obtained by filling the missing entries with zeros. Define the polytope \(P_F\subseteq \mathbb {R}^{n+k^2}\) as

Remark 4

For any \(S\subseteq [n]\), matrix \({\hat{F}}_S^\dagger {\hat{F}}_S\) is an orthogonal projection matrix (symmetric and idempotent), and in particular \(({\hat{F}}_S^\dagger {\hat{F}}_S)^\dagger ={\hat{F}}_S^\dagger {\hat{F}}_S\). These properties can be easily verified from Definition 1. Since all eigenvalues of an orthogonal projection matrix are either 0 or 1, it also follows that \({\hat{F}}_S^\dagger {\hat{F}}_S\succeq 0\). \(\square \)

Proposition 3

If \(Q=FF^\top \), then the mixed-integer optimization model

is a valid formulation of problem (1).

Proof

Consider a point \((x,z,t)\in X\) with \(z={\hat{\varvec{e}}}_S\) for some \({\hat{\varvec{e}}}_{S} \in Z\). Constraint (11d) is trivially satisfied. Constraint (11c) is satisfied if and only if \(W={\hat{F}}_S^{\dagger }{\hat{F}}_S\). Note that in any feasible solution, \(x_i=0\) whenever \(i\not \in S\), and in particular \(F^\top x={\hat{F}}_{S}^\top x\). From Lemma 1, we find that constraint (11b) is satisfied if and only if (recall properties in Remark 4):

-

\({\hat{F}}_S^{\dagger }{\hat{F}}_S \succeq 0\), which is automatically satisfied.

-

\( {\hat{F}}_S^{\dagger }{\hat{F}}_S({\hat{F}}_S^{\dagger }{\hat{F}}_S)^\dagger F^\top x=F^\top x.\) We find that

$$\begin{aligned} {\hat{F}}_S^{\dagger }{\hat{F}}_S({\hat{F}}_S^{\dagger }{\hat{F}}_S)^\dagger {\hat{F}}_S^\top x={\hat{F}}_S^{\dagger }{\hat{F}}_S{\hat{F}}_S^{\dagger }{\hat{F}}_S {\hat{F}}_S^\top x={\hat{F}}_S^{\dagger }{\hat{F}}_S {\hat{F}}_S^\top x={\hat{F}}_S^{^\top }({\hat{F}}_S^\dagger )^\top {\hat{F}}_S^\top x={\hat{F}}_S^\top x, \end{aligned}$$and, therefore, this condition is satisfied as well.

-

\(t\ge x^\top F W^\dagger F^\top x\Leftrightarrow t\ge x_S^\top {\hat{F}}_S ({\hat{F}}_S^\dagger {\hat{F}}_S)^\dagger {\hat{F}}_S^\top x_S=x_S^\top {\hat{F}}_S {\hat{F}}_S^\dagger {\hat{F}}_S{\hat{F}}_S^\top x_S=x_S^\top {\hat{F}}_S{\hat{F}}_S^\top x_S\), which is precisely the nonlinear constraint defining set X and is thus satisfied.

\(\square \)

While the proofs of Propositions 1 and 3 are similar in spirit, we highlight a critical difference. In the proof of Proposition 1, with the assumption \(Q\succ 0\), constraints \(WW^\dagger x=x\) enforce the complementarity constraints \(x\circ (\varvec{e}-z)=0\), and therefore, such constraints are excluded in (4). In contrast, in the proof of Proposition 3, with Q potentially of low-rank, constraints \(WW^\dagger F^\top x=F^\top x\) alone are not sufficient to enforce \(x\circ (\varvec{e}-z)=0\), and therefore, they are included in (11) and are used to prove the validity of the mixed-integer formulation. Indeed, if there exist \({\hat{\varvec{e}}}_{S} \in Z\) and \({\bar{x}}\in \mathbb {R}^{n}\) such that \({\bar{x}}_S\ne 0\), \({\bar{x}}_{[n]\setminus S}=0\) and \(F^\top {\bar{x}}=0\), then for any \((x,z,t)\in X\) we find that

In particular, the point \((x+{\bar{x}},z,t)\), which may not satisfy the complementarity constraints, cannot be separated from \(\text {cl conv}(X)\), or any closed relaxation. On the other hand, if matrix Q is full-rank, then \(F^\top {\bar{x}}=0\implies {\bar{x}}=0\) (as shown in the proof of Proposition 1); therefore, the complementarity constraints are enforced by the conic constraint.

Recall that \(\pi _S: \mathbb {R}^{n} \rightarrow \mathbb {R}^{S}\) is the projection onto the subspace indexed by S. Now we consider the natural convex relaxation of (11) by dropping constraint (11d), and show that it is ideal under certain technical conditions over F and the set Z, as stated in Theorem 2 below.

Theorem 2

Let \(Q = F F^{\top }\), where \(F \in \mathbb {R}^{n \times k}\) is a full-column rank matrix satisfying \(\textrm{col}(F) = \bigcap _{{\hat{\varvec{e}}}_{S} \in Z} \pi _S^{-1}(\textrm{col}(F_S))\). Then

Proof

Clearly, constraints (11b),(11c) define a closed convex set. Consider the two optimization problems:

and

It suffices to show that problem (12) and (13) always attain the same optimal value. Consider the following two cases:

-

\(F F^{\dagger } a \ne a\): In other words, a is not in the column space of F, i.e., \(a \notin \textrm{col}(F)\). In this case, by the condition \(\textrm{col}(F) = \bigcap _{{\hat{\varvec{e}}}_{S} \in Z} \pi _{S}^{-1}(\textrm{col}(F_S))\), there exists one \({\hat{\varvec{e}}}_{S} \in Z\) such that \(a_{S} \notin \textrm{col}(F_S)\). Then, let z be such that \(z_i = 1, \; \forall i \in S\). Since \(a_{S} \notin \textrm{col}(F_{S})\), there exists x such that \(x_i = 0\) for all \(i \in [n]\backslash S\), \(x_{S}\) is in the orthogonal complement of \(F_S\) and \(a_S^\top x_S < 0\). Clearly, z and x satisfy the constraint \(x_i (1 - z_i) = 0\) for all \(i = 1, \dots ,n\). Complementarity holds for \(\lambda x\) for \(\lambda > 0\) as well. Since, by construction, \(x^{\top } F F^{\top } x = 0\), the objective \(b^\top z + \lambda \langle a, x \rangle + \lambda ^2 (x^{\top } F F^{\top } x)\) tends to \(-\infty \) for \((\lambda x, z)\) as \(\lambda \rightarrow \infty \). Thus problem (12) is unbounded and since problem (13) is a convex relaxation of (12), problem (13) is unbounded as well.

-

\(F F^{\dagger } a = a\): For problem (13), we can project out t using the relation

$$\begin{aligned} \begin{pmatrix} W &{} F^\top x \\ x^{\top } F &{} t \end{pmatrix} \succcurlyeq 0 \quad \text {iff} \quad W W^{\dagger } F^{\top } x = F^{\top } x \; \; \text {and} \; \; t \ge x^{\top } F W^{\dagger } F^{\top } x. \end{aligned}$$Therefore, problem (13) is equivalent to

$$\begin{aligned} \min \quad&a^{\top } x + b^{\top } z + \tfrac{1}{2} x^{\top } F W^{\dagger } F^{\top } x \end{aligned}$$(14a)$$\begin{aligned} \text {s.t.} \; \;&W W^{\dagger } F^{\top } x = F^{\top } x \end{aligned}$$(14b)$$\begin{aligned}&(z,W)\in P_F,\; x\in \mathbb {R}^n. \end{aligned}$$(14c)

Since \(F F^{\dagger } a = a\), we can write \(a^{\top } x = (F^{\dagger } a)^{\top } F^{\top } x\). Define \({\tilde{a}} = F^{\dagger } a\), then \(a^{\top } x = {\tilde{a}}^{\top } F^{\top } x\). Substituting \(F^{\top } x\) with a new variable \(u \in \mathbb {R}^{k}\) and since F has full column rank, problem (14) is equivalent to

Using identical arguments as in the proof of Theorem 1, we find that there exists \({\hat{\varvec{e}}}_{S}\in Z\) such that \((u^*,z^*,W^*)=(-{\hat{F}}_S^\dagger {\hat{F}}_S {\tilde{a}}, {\hat{\varvec{e}}}_S, {\hat{F}}_S^\dagger {\hat{F}}_S)\) is optimal for (15). We now construct an optimal solution for (14). Let \(x^*\) be defined as \(x_S^*=-(F_{S}^{\dagger })^{\top } F_{S}^{\dagger } a_{S}\) and \(x_{[n]\setminus S}^*=0\), and observe that \((x^*,z^*)\) is feasible for (12), with objective \(\sum _{i \in S} b_i - \frac{1}{2} \Vert F_{S}^{\dagger } a_{S} \Vert _2^2\). Substituting \(W^{*} = {\hat{F}}_{S}^{\dagger } {\hat{F}}_{S}\), the optimal value of problem (13) equals \(\sum _{i \in S} b_i - \frac{1}{2} \Vert F_{S}^{\dagger } F_{S} F^{\dagger } a \Vert _2^2\). Note that both \(\alpha _1 = F^{\dagger } a\) and \(\alpha _2 = F_{S}^{\dagger } a_{S}\) satisfy the equation \(F_{S} \alpha = a_{S}\) and thus \(\alpha _1 - \alpha _2\) is orthogonal to the row space of \(F_{S}\) which means \(F_{S}^{\dagger } F_{S} \alpha _1 = F_{S}^{\dagger } F_{S} \alpha _2 = \alpha _2\). Hence, we conclude that the optimal values of problem (12) and problem (13) coincide. \(\square \)

Remark 5

From the first case analysis of the proof of Theorem 2, one sees that the technical condition \(\textrm{col}(F) = \bigcap _{{\hat{\varvec{e}}}_{S} \in Z} \pi _S^{-1}(\textrm{col}(F_S))\) is equivalent to stating that the mixed-integer optimization problem and the proposed convex relaxation are unbounded at the same time. The condition is automatically satisfied if \(\varvec{e}\in Z\). Moreover, if matrix Q is rank-one, then this condition is equivalent to the nondecomposability condition on Z given in [34]. If it fails to hold, the convexification presented is still valid but may be weak: the convex relaxation may be unbounded even if the mixed-integer optimization problem is bounded. We provide an example illustrating this phenomenon in Sect. 3.2.3. \(\square \)

Remark 6

An immediate consequence of Theorem 2 is that if matrix Q is rank-deficient, i.e., \(k<n\), then the extended formulation describing \(\text {cl conv}(X)\) is simpler than the full rank case, i.e., it has fewer additional variables and lower-dimensional conic constraints. \(\square \)

We now illustrate Theorem 2 by providing an alternative proof of the main result of [5] using our unifying framework.

3.2.1 Rank-one quadratic functions

Consider the rank-one set

where we assume \(h_i\ne 0\) for all \(i\in [n]\).

Proposition 4

([5]) The closure of the convex hull of \(X_{R1}\) is

Proof

In the case of a rank-one function, we have \(F=h\) and \(W \in \mathbb {R}^1\). Note that the pseudoinverse of vector \({\hat{h}}_S\) is given by

and, in particular, we find that \({\hat{h}}_S^\dagger {\hat{h}}_S=1\) if \(S\ne \emptyset \), and \({\hat{h}}_S^\dagger {\hat{h}}_S=0\) otherwise. Thus, \({\hat{h}}_S^\dagger {\hat{h}}_S=\max \{z_1,\dots ,z_n\}\), and \(P_F\) is described by the linearization \(0\le W\le \min \{1,\varvec{e}^\top z\}\). Projecting out variable W, we arrive at the result. \(\square \)

We discuss generalizations of \(X_{R1}\) with arbitrary constraints on the indicator variables in Sect. 4.

3.2.2 An example with a rank-two quadratic function

In order to illustrate how convexification methods for polyhedra can be directly utilized to convexify the mixed-integer nonlinear set X, we consider a special rank-two quadratic function with three variables and the associated set

In this case, \(Q = F F^\top \) with \(F^\top = \begin{pmatrix}1 &{} 1 &{}1 \\ 0&{}0 &{} 1 \end{pmatrix}\). The extreme points of \(P_F\) are given in Table 2. Using PORTA [32] to switch from the extreme point representation of \(P_F\) to its facial description, we obtain the closure of the convex hull of \(X_{3}\):

3.2.3 An example where the technical condition fails

Consider the set

with \(h_i\ne 0\) for \(i\in [n]\). In this case, \(F=h\) and \(\textrm{col}(F_{\{i\}})=\mathbb {R}\) and \(\pi _S^{-1}(\textrm{col}(F_{\{i\}}))=\mathbb {R}^n\). Thus, \( \bigcap _{{\hat{\varvec{e}}}_{S}\in Z} \pi _S^{-1}(\textrm{col}(F_S))=\mathbb {R}^n\), while \(\textrm{col}(F) = \{x\in \mathbb {R}^n: x=\lambda h \text { for some }\lambda \in \mathbb {R}\}\), and the technical assumption is not satisfied.

The relaxation induced by (11b), (11c), (11e), which is constructed as outlined in Proposition 4, results in the set induced by bound constraints \(0\le z\le 1\), \(\varvec{e}^\top z\le 1\) and \(t\ge (h^\top x)^2/(\varvec{e}^\top z)\). Moreover, the corresponding optimization problem

is unbounded unless \(a\in \textrm{col}(F)\).

In contrast, \(\text {cl conv}(X_{R1}^{C1})\) is described via constraint \(t\ge \sum _{i=1}^n h_i^2x_i^2/z_i\) [33, 34] (similar to the result described in Sect. 3.1.2), and the corresponding optimization problem is always bounded.

4 Convexification in the original space

We now turn our attention to describing \(\text {cl conv}(X)\) in the original space of variables. The discussion of this section is based on projecting out the matrix variable W in the canonical description of \(\text {cl conv}(X)\) given in Theorem 1 for \(Q\succ 0\). Identical arguments hold for the representation in Theorem 2 for low-rank matrices.

Suppose that a minimal description of polyhedron P is given by the facet-defining inequalities

and equalities

where \(\Gamma _i\in \mathbb {R}^{n \times n},\) \(\beta _i\in \mathbb {R}\) and \(\gamma _i\in \mathbb {R}^n\). Theorem 3 describes \(\text {cl conv}(X)\) in the original space of variables. Note that, in practice, a complete description of P may not be explicitly available, in which case one can use a partial description to derive valid inequalities.

Before we give the description in the original space, we define a set of feasible coefficients used to derive the inequalities. Let

Theorem 3

If \(Q\succ 0\), point \((x,z,t)\in \text {cl conv}(X)\) if and only if \(z\in {\text {conv}}(Z)\), \(t\ge 0\) and

or equivalently,

Proof

A point \((x,z,t)\in \text {cl conv}(X)\) if and only if

Strong duality holds since there exists \((z,W)\in P\) that satisfies the facet-defining inequalities strictly, and we can always increase \(\lambda \) to find a strictly feasible solution to the above minimization problem. Substituting \(V=W-xx^\top /t+\lambda I\), the optimization problem simplifies to

Letting \(y\in \mathbb {R}_+^{m_1}\times \mathbb {R}^{m-m_1}\) denote the dual variables, we find the equivalent representation

In particular, inequality (20a) is valid for any fixed feasible y. Multiplying both sides of the inequality by t, we find the equivalent conic quadratic representation

Note that validity of inequalities (21) implies that \(y^\top \beta +\left( \sum _{i=1}^my_i\gamma _i\right) ^\top z\ge 0\) for any primal feasible z and dual feasible y; dividing both sides of the inequality by \(y^\top \beta +\left( \sum _{i=1}^my_i\gamma _i\right) ^\top z\), the theorem is proven. \(\square \)

Note that even if inequalities (16) are not facet-defining or are insufficient to describe P, the corresponding inequalities (23) are still valid for \(\text {cl conv}(X)\).

We also state the analogous result for low-rank matrices, without proof, where \((\Gamma _i,\gamma _i,\beta _i), i\in [m]\) defines \(P_F\).

Theorem 4

Let \(Q = F F^{\top }\), where \(F \in \mathbb {R}^{n \times k}\) is a full-column rank matrix satisfying \(\textrm{col}(F) = \bigcap _{{\hat{\varvec{e}}}_{S} \in Z} \pi _S^{-1}(\textrm{col}(F_S))\). Then point \((x,z,t)\in \text {cl conv}(X)\) if and only if \(z\in {\text {conv}}(Z)\), \(t\ge 0\) and

or equivalently,

We now illustrate Theorem 3 for the set \(X_{2\times 2}\) discussed in Sect. 3.1.1.

Example 2

(Description of \(\text {cl conv}(X_{2\times 2})\) in the original space) From Proposition 2, we find that for \(X_{2\times 2}\), a minimal description of polyhedron P is given by the bound constraints \(0\le z\le 1\) and

Then, an application of Theorem 3 yields the inequality

Note that variables \(y_1,y_2\) are originally free as dual variables for equality constraints, however, the nonnegativity constraints are imposed due to the positive definiteness constraint in \({\mathcal {Y}}\). In Appendix A we provide an independent verification that inequality (24) is indeed valid, and reduces to the quadratic inequality \(t\ge d_1x_1^2+d_2x_2^2-2x_1x_2\) at integral z. \(\square \)

From Theorem 3, we see that \(\text {cl conv}(X)\) can be described by an infinite number of fractional quadratic/affine inequalities (23). More importantly, the convex hull is finitely generated: the infinite number of quadratic and affine functions are obtained from conic combinations of a finite number of base matrices \(\Gamma _i\) and vectors \((\gamma _i,\beta _i)\), which correspond precisely to the minimal description of P. To solve the resulting semi-infinite problem in practice, one can employ a delayed cut generation scheme, where at each iteration, the problem with a subset of inequalities (22) is solved to obtain \(({\bar{x}},{\bar{z}})\). Then, the separation problem to find a maximum violated inequality (i.e., y) at \(({\bar{t}}, {\bar{x}},{\bar{z}})\), if it exists, is a convex optimization problem given by the inner maximization problem in (23).

Example 3

(Rank-one function with constraints) Given \(Z\subseteq \{0,1\}^{n}\), consider the set

that is, a rank-one function with arbitrary constraints on the indicator variables z defined by Z. As discussed in the proof of Proposition 4, \(P_F\subseteq \mathbb {R}^{n+1}\) with one additional variable \(W \in \mathbb {R}^1\) which, at integer points, is given by \(W=\max \{z_1,\dots ,z_n\}\). For simplicity, assume that \(0\in Z\), and that both \({\text {conv}}(Z)\) and \({\text {conv}}(Z{\setminus } \{0\})\) are full-dimensional. Finally, consider all facet-defining inequalities of \({\text {conv}}(Z\setminus \{0\})\) of the form \(\gamma _i^\top z\ge 1\) (that is, inequalities that cut off point 0), for \(i=1,\dots ,m\). Now consider the inequalities

First, observe that inequalities (25) are valid for \(P_F\): given \(z\in Z\), if \(z=0\), then \(W=0\); otherwise, \(z\in Z{\setminus }\{0\}\implies \gamma _i^\top z\ge 1=W\). Second, note that inequalities (25) are facet-defining for \(P_F\). Indeed, given \(i\in [m]\), consider the face \(Z_i=\{z\in {\text {conv}}(Z{\setminus } \{0\}): \gamma _i^\top z=1\}\) of \({\text {conv}}(Z\setminus \{0\})\): since \({\text {conv}}(Z\setminus \{0\})\) is full-dimensional and \(\gamma _i^\top z\ge 1\) is facet-defining, there are n affinely independent points \(\{z^j\}_{j=1}^n\) such that \(z^j\in Z_i\). Thus, we find that points \((z^j,1)_{j=1}^n\) and (0, 0) are (\(n+1\))-affinely independent points satisfying (25) at equality. Moreover, one can easily verify that inequality \(W\le 1\) is facet-defining as well. Thus, from (23) (adapted to the factorable representation discussed in Sect. 3.2), we conclude that the inequality

is valid for \(\text {cl conv}(X_{R1}^Z)\). Moreover, an optimal solution to optimization problem (26) corresponds to setting \(y_i=1\) for \(i\in \arg \min _{i\in [m]}\{\gamma _i^\top z\}\), and we conclude that inequalities \(t\ge (h^\top x)^2\) and \(t\ge (h^\top x)^2/(\gamma _i^\top z), i\in [m]\) are valid for \(\text {cl conv}(X_{R1}^Z)\). Indeed, as shown in [34], these inequalities along with \(z\in {\text {conv}}(Z)\) fully describe \(\text {cl conv}(X_{R1}^Z)\) (when a nondecomposability condition holds). \(\square \)

4.1 Connection with decomposition methods

From Theorem 3, we see that the convex hull, X, is obtained by adding conic quadratic inequalities \( t \ge \frac{x^\top \left( \sum _{i=1}^m \Gamma _iy_i\right) x}{y^\top \beta +\left( \sum _{i=1}^my_i\gamma _i\right) ^\top z}\) with simpler quadratic structure \(x^{\top } \Gamma _i x\) (corresponding to inequalities describing P). In particular, the intuition is similar to convexifications obtained from decompositions (3). We now show how the theory presented in this paper sheds light on the strength of the aforementioned decompositions.

Suppose inequalities (16), which we repeat for convenience:

are valid for P and, additionally, \(\Gamma _i\succeq 0\) for all \(i\in [m]\). Since P is not full-dimensional in general, positive semidefiniteness conditions may not be as restrictive as they initially seem.

Example 4

(Description of \(\text {cl conv}(X_{2\times 2})\), continued) None of the matrices in the facets of P for \(\text {cl conv}(X_{2\times 2})\) given in Example 2 are positive semidefinite. Nonetheless, the inequalities below also describe P (we abuse notation and encode using variables y how each inequality is obtained):

In particular, the last two inequalities satisfy positive semidefiniteness. Moreover, the relaxation of the first two equalities obtained by replacing them with inequalities also satisfies positive semidefiniteness. Finally, if Q is sufficiently diagonally dominant and \(d_1d_2\ge 4\), then the third and fourth inequalities satisfy positive semidefiniteness as well. \(\square \)

Now suppose that in (23), we fix \(y_i=\lambda /(\beta _i+\gamma _i^\top z)\), where \(\lambda \) is small enough to ensure that constraint \(\sum _{i=1}^m\text {Tr}(\Gamma _i)y_i\le 1\) is satisfied. Then inequality (23) reduces to

which is precisely the relaxations obtained from (3). We make the following two important observations.

Observation 1 Relaxations obtained by fixing a given decomposition (3) [19, 20] are, in general, insufficient to describe \(\text {cl conv}(X)\). Indeed, from Theorem 3, describing \(\text {cl conv}(X)\) requires one inequality per extreme point of the region \({\mathcal {Y}}\), whereas a given decomposition corresponds to a single point in this region.

Observation 2 On the other hand, the strong “optimal” or “dynamic” relaxations [5, 17, 35], where the decomposition is not fixed but instead is chosen dynamically, are excessive to describe \(\text {cl conv}(X)\). Indeed, they are of the form (23) for every possible (rank-one, \(2\times 2\), remainder) matrix, and are not finitely generated; whereas, our results imply that the necessary inequalities are finitely generated.

We conclude this section with an analysis of rank-one decompositions, where we assume for simplicity that \(Q\succ 0\): given a subset \({\mathcal {T}}\subseteq 2^{[n]}\), rank-one relaxations are given by

where \(R=Q-\sum _{T\in {\mathcal {T}}}{\hat{h}}_T{\hat{h}}_T^\top \succeq 0\), and \({\hat{h}}_T\in \mathbb {R}^n\) are given vectors that are zero in entries not indexed by T. Relaxation (28) can be interpreted as a decomposition obtained from valid inequalities for P of the form

where \(\gamma \ge 0\). Note that inequality (29) is valid for P if

Proposition 5

If \(\gamma = \max _{{\hat{\varvec{e}}}_{S}\in Z} \frac{1}{|S \bigcap T|} \langle {\hat{h}}_T{\hat{h}}_T^\top , {\hat{Q}}_S^{-1} \rangle \), then inequality (29) defines a face of P of dimension at least \(\textrm{dim}(P_0)+1\), where

Proof

There are \(\text {dim}(P_0)+1\) affinely independent points in \(P_0\), and all satisfy (29) at equality. Letting \(S^*\in \mathop {\mathrm {arg\,max}}\limits _{{\hat{\varvec{e}}}_{S}\in Z} \frac{1}{|S \bigcap T|} \langle {\hat{h}}_T{\hat{h}}_T^\top , {\hat{Q}}_S^{-1} \rangle \), we find that \(({\hat{\varvec{e}}}_{S^*}, {\hat{Q}}_{S^*}^{-1})\) is an additional affinely independent point satisfying (29) at equality. \(\square \)

Note that if optimization problem (30) has multiple optimal solutions, then one can find additional affinely independent points. In particular, (29) is guaranteed to define a high dimensional face of P if |T| is small. Indeed, inequalities (29) were found to be particularly effective computationally if \({\mathcal {T}}=\left\{ T\subseteq [n]: |T|\le \kappa \right\} \) for some small \(\kappa \) [5], although a theoretical justification of this observation has been missing until now.

Remark 7

(Description of \(\text {cl conv}(X_{2\times 2})\), continued) Consider again the facet-defining inequalities given in Example 4. The last two inequalities correspond to a rank-one strengthening with \(|T|=1\), which leads to relaxations of \(X_{2\times 2}\) similar to the perspective relaxation. Thus, we may argue that the perspective relaxation is required to describe \(\text {cl conv}(X_{2\times 2})\). \(\square \)

5 A mixed-integer linear formulation for P

The polyhedron P can (in theory) be studied using standard methods from mixed-integer linear optimization. However, the vertex representation of P is often not convenient, as most techniques require that the polyhedron be described explicitly via linear inequalities. Thus, in this section, we present such a mixed-integer linear formulation for the vertices of polytope P when the Hessian matrix Q is positive definite.

First, we describe the linear equalities necessary for P. Throughout this section, for ease of exposition, for a given \(S\subseteq [n]\), we permute the rows and columns of Q such that indices in S appear first.

Proposition 6

For any \((z, W) \in P\),

Proof

For any \(S \subseteq [n]\), \(({\hat{\varvec{e}}}_{S}, {\hat{Q}}_{S}^{-1}) \in P\), we have

Observe that the \(i^{th}\) diagonal entry of \({\hat{Q}}_{S}^{-1} Q\) is one if \(i \in S\) and zero otherwise. Since at all extreme points of P we have \(z={\hat{\varvec{e}}}_S\) and \(W={\hat{Q}}_S^{-1}\) for some \(S\subseteq [n]\), it follows that \((WQ)_{ii}=({\hat{Q}}_{S}^{-1} Q)_{ii}=z_i\). \(\square \)

Since P satisfies n linearly independent equalities, we immediately get insights into the dimension of P.

Corollary 1

The dimension of P is at most \(n(n+1)/2\). If \(Q_{ij}\ne 0\) for all \(i,j\in [n]\) and \(Z=\{0,1\}^n\), then this bound is tight.

Proof

Polyhedron P has \(n+n^2\) variables, but symmetry constraints \(W_{ij}=W_{ji}\) and equalities (31) imply the upper bound on the dimension. If \(Q_{ij}\ne 0\) for all \(i,j\in [n]\), the set of points \(({\hat{\varvec{e}}}_{\{i,j\}},Q_{\{i,j\}}^{-1})_{i\ne j}\) and \(({\hat{\varvec{e}}}_{i}, Q_{\{i\}}^{-1})_{i \in [n]}\) are \(n(n+1)/2\) affinely independent points of P, because each point is the unique one satisfying \(W_{ij}\ne 0\). Together with point \((0,\textbf{0})\), where 0 represents the null matrix, we find the required \(n(n+1)/2+1\) affinely independent points in P. \(\square \)

From Corollary 1, we see that (under mild conditions) there are no other equalities in the description of P. In order to construct a mixed-integer linear formulation for the vertices of P, we will use big-M constraints. Lemmas 2 and 3 are necessary to identify valid bounds for coefficients M.

Lemma 2

For any \(S \subseteq [n]\), \(Q^{-1} \succeq {\hat{Q}}_{S}^{-1}\) and \(\Vert {\hat{Q}}_{S}^{-1}\Vert _{\max } \le \lambda _{\max }(Q^{-1})\).

Proof

To prove \(Q^{-1} \succeq {\hat{Q}}_{S}^{-1}\) for \(S \subseteq [n]\), it suffices to show \(I \succeq Q^{1/2} {\hat{Q}}_{S}^{-1} Q^{1/2}\). Since switching the order of matrix multiplication does not change the set of nonzero eigenvalues, the nonzero eigenvalues of \(Q^{1/2} {\hat{Q}}_{S}^{-1}Q^{1/2}\) coincide with those of \({\hat{Q}}_{S}^{-1}Q \). From (32) one sees that \({\hat{Q}}_{S}^{-1}Q = \begin{pmatrix} I_{|S|} &{} Q_{S}^{-1} Q_{S, [n] \backslash S} \\ 0 &{} 0 \end{pmatrix}\) is an upper triangular matrix, which has a maximum eigenvalue of one. Then we conclude that \(I \succeq Q^{1/2} {\hat{Q}}_{S}^{-1} Q^{1/2}\) and thus \(Q^{-1} \succeq {\hat{Q}}_{S}^{-1}\).

For the second part, it follows that for \(i \in [n]\), \(({\hat{Q}}_{S}^{-1})_{ii}\le Q_{ii}^{-1} \le \lambda _{\max } (Q^{-1})\). Since \({\hat{Q}}_{S}^{-1} \succeq 0\), for any \(i,j \in [n]\), \(({\hat{Q}}_{S}^{-1})_{ij}^2\le ({\hat{Q}}_{S}^{-1})_{ii}({\hat{Q}}_{S}^{-1})_{jj}\). As \(\lambda _{\max }(Q^{-1})\) gives a uniform bound on the diagonal elements of \({\hat{Q}}_{S}^{-1}\), \(\lambda _{\max }(Q^{-1})\) also bounds the absolute value of the off-diagonal elements of \({\hat{Q}}_{S}^{-1}\). \(\square \)

Next, we define

and prove that M provides a bound for the off-diagonal elements of \({\hat{Q}}_{S}^{-1} Q\) for any \(S \subseteq [n]\) in the following lemma.

Lemma 3

For any \(S \subseteq [n]\), the off-diagonals of \({\hat{Q}}_{S}^{-1} Q\) are bounded by M.

Proof

Note that \({\hat{Q}}_{S}^{-1} Q = \begin{pmatrix} I_{|S|} &{} Q_{S}^{-1} Q_{S, [n]\backslash S} \\ 0 &{} 0 \end{pmatrix}\). For any \(j \notin S\),

where the last inequality follows from Lemma 2. \(\square \)

One can make a few observations about \(P = \{({\hat{\varvec{e}}}_{S}, {\hat{Q}}_{S}^{-1})\}_{{\hat{\varvec{e}}}_{S} \in Z}\). Note that at extreme points of P, \(W={\hat{Q}}_S^{-1}\) for some S. Thus, for any extreme point \((z, W) \in P\), \(W_{ij}\) is nonzero only if \(z_i = z_j = 1\). Moreover, for any \(S \subseteq [n]\), \(({\hat{\varvec{e}}}_{S}, {\hat{Q}}_{S}^{-1}) \in P\), \(Q {\hat{Q}}_{S}^{-1} = QW=\begin{pmatrix} I_{|S|} &{} 0 \\ Q_{S, [n] \backslash S}^{\top } Q_{S}^{-1} &{} 0 \end{pmatrix}\), and the off-diagonal entries in the \(i^{th}\) row of QW are all zeros if \(i \in S\). These two observations lead to the formulation in the following proposition.

Proposition 7

The extreme points of P are described as

Proof

For any \(z = {\hat{e}}_{S} \in Z\), the constraint

implies that \(W_{ij} = 0\) if either i or j is not in S. For \(i \in S\), we have

Inequalities (34) and (35) imply that \(\begin{pmatrix} Q_{S}&Q_{S, [n] \backslash S}\end{pmatrix} \begin{pmatrix} W_{S} \\ W_{S, [n] \backslash S}^{\top }\end{pmatrix} = I\). Since \(W_{S, [n] \backslash S} = 0\), we have \(Q_S W_{S} = I\) and \(W = {\hat{Q}}_{S}^{-1}\). Therefore, \(Q {\hat{Q}}_{S}^{-1} = \begin{pmatrix} I &{} 0 \\ Q_{S, [n]\backslash S}^{\top } Q_S^{-1} &{} 0 \end{pmatrix}\). It is clear that the off-diagonal elements in the \(i^{th}\) row are all zero if \(i \in S\), otherwise (if \(i\not \in S\)) they are bounded by M according to Lemma 3. In other words, constraints

hold. Moreover, thanks to Lemma 2, the constraints

hold at \(W = {\hat{Q}}_{S}^{-1}\) and \(z = {\hat{\varvec{e}}}_{S}\) as well. \(\square \)

Proposition 7 allows us to give a mixed-integer linear formulation for the MIQO problem (1). Substituting the mixed-integer linear representation of P in Proposition 7 in the equivalent MIQO formulation (8), we arrive at:

where M is defined in (33). MILO is the first polynomial-size explicit mixed-integer linear formulation given for (1).

We point out that the mixed-integer representation of P in Proposition 7 relies on big-M constraints and, therefore, it is not a strong formulation. Nonetheless, advanced mixed-integer linear optimization solvers have a plethora of built-in techniques to improve such formulations. Preliminary computations using Gurobi indicate the following findings:

-

(1)

The natural relaxation of (37) is very weak and, therefore, (37) results in worse performance than alternative (nonlinear) formulations for problem (1) in most cases.

-

(2)

In some cases, however, and notably when the matrix Q is sparse, Gurobi improves the relaxation in presolve to the point where the problems are solved at the root node, faster than existing formulations for (1). This situation illustrates that (in some cases) existing methods can improve even weak relaxations, whereas similar improvements are not currently available for nonlinear formulations.

Detailed computational results are presented in Appendix B. Overall, the results illustrate the potential benefits of reducing convexification to describing a polyhedral set, but also indicate that much work remains to be done for deriving better relaxations of P.

6 Conclusion

In this paper, we first describe the convex hull of the epigraph of a convex quadratic function with indicators in an extended space, which is given by one semi-definite constraint, and an exponential system of linear inequalities defining the convex hull of a polytope, P (or \(P_F\)). We then derive the convex hull description in the original space as a semi-infinite conic quadratic program. Furthermore, we give a compact mixed-integer linear representation of the vertices of the polytope P that results in the first compact mixed-integer linear formulation of MIQO problems. While this is a weak formulation, our preliminary computational experience indicates that for a class of sparse problems, off-the-shelf solvers are able to take advantage of the developments in MILO to improve the formulation substantially and it is competitive if not better than state-of-the-art approaches. To translate our theoretical developments into effective practical methods, it is crucial to exploit the structure of P. In our ongoing work, we explore the case when Q is a Stieltjes matrix for which P has a nice structure that allows us to use our results directly without resorting to the MILO formulation. Our results provide a unifying framework for several convex relaxations of MIQO problems in the literature and can also be used to evaluate their strength.

References

Aktürk, M.S., Atamtürk, A., Gürel, S.: A strong conic quadratic reformulation for machine-job assignment with controllable processing times. Oper. Res. Lett. 37, 187–191 (2009)

Aktürk, M.S., Atamtürk, A., Gürel, S.: Parallel machine match-up scheduling with manufacturing cost considerations. J. Sched. 13, 95–110 (2010)

Albert, A.: Conditions for positive and nonnegative definiteness in terms of pseudoinverses. SIAM J. Appl. Math. 17(2), 434–440 (1969)

Anstreicher, K.M., Burer, S.: Quadratic optimization with switching variables: the convex hull for \(n= 2\). Math. Program. 188, 421–441 (2021)

Atamtürk, A., Gómez, A.: Rank-one convexification for sparse regression. arXiv preprint arXiv:1901.10334 (2019)

Atamtürk, A., Gómez, A.: Supermodularity and valid inequalities for quadratic optimization with indicators. Math. Program. (2022). https://doi.org/10.1007/s10107-022-01908-2

Atamtürk, A., Gómez, A.: Strong formulations for quadratic optimization with M-matrices and indicator variables. Math. Program. 170, 141–176 (2018)

Atamtürk, A., Gómez, A., Han, S.: Sparse and smooth signal estimation: convexification of \(\ell _0\)-formulations. J. Mach. Learn. Res. 22(52), 1–43 (2021)

Bach, F.: Submodular functions: from discrete to continuous domains. Math. Program. 175, 419–459 (2019)

Bertsimas, D., King, A.: OR forum–an algorithmic approach to linear regression. Oper. Res. 64, 2–16 (2015)

Bertsimas, D., Cory-Wright, R., Pauphilet, J.: Mixed-projection conic optimization: a new paradigm for modeling rank constraints. Oper. Res. 70(6), 3321–3344 (2022)

Bien, J., Taylor, J., Tibshirani, R.: A lasso for hierarchical interactions. Ann. Stat. 41(3), 1111 (2013)

Bienstock, D.: Computational study of a family of mixed-integer quadratic programming problems. Math. Program. 74(2), 121–140 (1996)

Ceria, S., Soares, J.: Convex programming for disjunctive convex optimization. Math. Program. 86, 595–614 (1999)

Chen, X., Ge, D., Wang, Z., Ye, Y.: Complexity of unconstrained \(l_2-l_p\) minimization. Math. Program. 143(1), 371–383 (2014)

Cozad, A., Sahinidis, N.V., Miller, D.C.: Learning surrogate models for simulation-based optimization. AIChE J. 60(6), 2211–2227 (2014)

Dong, H., Chen, K., Linderoth, J.: Regularization vs. relaxation: a conic optimization perspective of statistical variable selection. arXiv preprint arXiv:1510.06083 (2015)

Frangioni, A., Gentile, C.: Perspective cuts for a class of convex 0–1 mixed integer programs. Math. Program. 106, 225–236 (2006)

Frangioni, A., Gentile, C.: SDP diagonalizations and perspective cuts for a class of nonseparable MIQP. Oper. Res. Lett. 35, 181–185 (2007)

Frangioni, A., Gentile, C., Hungerford, J.: Decompositions of semidefinite matrices and the perspective reformulation of nonseparable quadratic programs. Math. Oper. Res. 45(1), 15–33 (2020)

Gao, J., Li, D.: Cardinality constrained linear-quadratic optimal control. IEEE Trans. Autom. Control 56, 1936–1941 (2011)

Günlük, O., Linderoth, J.: Perspective reformulations of mixed integer nonlinear programs with indicator variables. Math. Program. 124, 183–205 (2010)

Han, S., Gómez, A.: Compact extended formulations for low-rank functions with indicator variables. arXiv preprint arXiv:2110.14884 (2021)

Han, S., Gómez, A., Atamtürk, A.: 2x2 convexifications for convex quadratic optimization with indicator variables. Math. Program. (2023). https://doi.org/10.1007/s10107-023-01924-w. (Online First Article)

He, Z., Han, S., Gómez, A., Cui, Y., Pang, J.-S.: Comparing solution paths of sparse quadratic minimization with a stieltjes matrix. Math. Program. (2023). https://doi.org/10.1007/s10107-023-01966-0

Hochbaum, D.S.: An efficient algorithm for image segmentation, Markov random fields and related problems. J. ACM (JACM) 48(4), 686–701 (2001)

Jeon, H., Linderoth, J., Miller, A.: Quadratic cone cutting surfaces for quadratic programs with on-off constraints. Discret. Optim. 24, 32–50 (2017)

Küçükyavuz, S., Shojaie, A., Manzour, H., Wei, L. , Wu, H.-H.: Consistent second-order conic integer programming for learning Bayesian networks. arXiv preprint arXiv:2005.14346 (2020)

Liu, P., Fattahi, S., Gómez, A., Küçükyavuz, S.: A graph-based decomposition method for convex quadratic optimization with indicators. Math. Program. (2022). https://doi.org/10.1007/s10107-022-01845-0. (Article in Advance)

Manzour, H., Küçükyavuz, S., Wu, H.-H., Shojaie, A.: Integer programming for learning directed acyclic graphs from continuous data. INFORMS J. Optim. 3(1), 46–73 (2021)

Penrose, R.: A generalized inverse for matrices. In: Mathematical Proceedings of the Cambridge Philosophical Society, vol 51, pp. 406–413. Cambridge University Press (1955)

POlyhedron Representation Transformation Algorithm. https://porta.zib.de/#download. Accessed: 2021-11-20

Wei, L., Gómez, A., Küçükyavuz, S.: On the convexification of constrained quadratic optimization problems with indicator variables. In: International conference on integer programming and combinatorial optimization, pp. 433–447. Springer (2020)

Wei, L., Gómez, A., Küçükyavuz, S.: Ideal formulations for constrained convex optimization problems with indicator variables. Math. Program. 192(1–2), 57–88 (2022)

Zheng, X., Sun, X., Li, D.: Improving the performance of MIQP solvers for quadratic programs with cardinality and minimum threshold constraints: A semidefinite program approach. INFORMS J. Comput. 26, 690–703 (2014)

Acknowledgements

We thank the AE and three reviewers for their suggestions that improved the presentation. Alper Atamtürk is supported, in part, by NSF AI Institute for Advances in Optimization Award 2112533, and DOD ONR Grant 12951270. Andrés Gómez is supported, in part, by NSF Grant 2006762 and AFOSR grant FA9550-22-1-0369. Simge Küçükyavuz and Linchuan Wei are supported, in part, by NSF Grant 2007814, and DOD ONR Grants N00014-19-1-2321 and N00014-22-1-2602.

Funding

Open access funding provided by SCELC, Statewide California Electronic Library Consortium

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A. Validity of inequalities (24)

Here we directly check the validity of the inequalities in Example 2, which are repeated for convenience.

If \(z_1=z_2=x_1=x_2=0\), then the inequality reduces to \(t\ge 0\). If \(z_1=1\) and \(z_2=x_2=0\), the inequality reduces to

The inequality can be maximized by setting \(y_6=y_1/d_1\) and \(y_2=y_3=y_4=y_5=0\), and reduces to \(t\ge d_1x_1^2\). The case \(z_2=1\), \(z_1=x_1=0\) is identical.

Finally, if \(z_1=z_2=1\), then the inequality reduces to

Note that we can assume, without loss of generality, that \(y_3=0\) (otherwise, if \(y_3>0\), one can increase \(y_4\) and reduce \(y_3\) to obtain a feasible solution with better objective value). Let \({\bar{y}}=y_4-y_5-y_6\). With these simplifications, (38) reduces to

By taking the derivative of the objective with respect to \({\bar{y}}\), we conclude that (for fixed values of \(y_1\) and \(y_2\)) the objective is monotone, and thus \({\bar{y}}\) may be assumed to be set at a bound. In particular, the rotated cone constraint holds at equality, and \({\bar{y}}=-y_1/d_1-y_2/d_2\pm 2\sqrt{y_1y_2}\). Thus, problem (39) further reduces to

Substitute \({\bar{y}}_1=\pm \sqrt{y_1}\) and \({\bar{y}}_2=\pm \sqrt{y_2}\). By multiplying by \(({\bar{y}}_1^2d_2+{\bar{y}}_2^2d_1+ 2{\bar{y}}_1{\bar{y}}_2)/(t\Delta )\ge 0\) on both sides of the inequality, we find that (40) is satisfied if and only if \(\forall {\bar{y}}_1,{\bar{y}}_2 \in \mathbb {R}\) satisfying \({\bar{y}}_1+{\bar{y}}_2\le 1\), it holds

which in turn holds if and only if

Appendix B. Numerical Experiments

Formulation MILO provides one way of utilizing Theorem 1 for general problems for which an explicit linear description of P is not available. In this section, we discuss the practical effectiveness of MILO to solve problem (1). First, in Sect. B.1, we test MILO on best subset selection problems (2). As MILO is a weak formulation due to big-M constraints, it is often outperformed by alternative formulations to solve MIQO problems in the literature. Then, in Sect. B.2, we test the formulations on a class of graphical models which result in MIQO problems where matrix Q is sparse. It turns out advanced optimization solvers are able to substantially improve the relaxation, and MILO is competitive against the usual alternatives for this class of problems.

We compare MILO with the following alternative formulations:

Natural:

The natural reformulation, where we replace the nonconvex constraint \(x_i (1 - z_i) = 0\) in (1) with \( |x_i| \le 5 \Vert x^{*}\Vert _{\infty } z_i\), where \(x^{*}\) denotes the optimal solution of the problem without binary variables or cardinality constraints. Observe that \(5 \Vert x^{*}\Vert _{\infty }\) is not guaranteed to be a valid bound on \(|x_i|\), thus this formulation may produce suboptimal solutions for (1).

PerspS: The perspective reformulation [2, 14, 18, 22] where we extract a diagonal term diag\((\delta )\) from Q with \(\delta \in \mathbb {R}_+^n\) and add the perspective constraints \(s_i z_i \ge x_i^2, \; \forall i \in [n]\). For numerical stability, it is common to add bounds on the variables with the perspective reformulation [28, 30, 35]. Overall, the perspective reformulation we compare with is as follows:

The validity of the bound \( \lambda _{\max } (Q^{-1}) \Vert a\Vert _{2}\) comes from the fact that for any subset \(S \subset [n]\), the unconstrained optimal solution in the reduced space equals \(Q_{S}^{-1} a_{S}\) whose infinity norm is bounded by \(\lambda _{\max } (Q^{-1}) \Vert a\Vert _{2}\) by a similar argument as Lemma 3. In [10], a similar bound on the maximum absolute value of the continuous variables is proposed, and the bound we use may be seen as a relaxation that is easy to compute and works for an arbitrary Z. The vector \(\delta \) is chosen as the one which gives the highest lower bound of the perspective relaxation (41) by solving the SDP model in [35] with MOSEK 10. We also tested other methods for diagonal decomposition [18, 19, 35]; but we only present the results with PerspS.

In all experiments, Z is defined by a cardinality constraint, i.e., \(Z= \{z \in \{0,1\}^{n} \; | \; \sum _{i = 1}^{n} z_i \le r \}\), where \(r=kn\) for a given sparsity parameter \(0<k\le 1\), and \(b=0\). The mixed-integer optimization problems are solved by Gurobi 9.0 on a laptop with Intel(R) Core(TM) i7-8750 H 2.20 GHz and 32 GB RAM. We set the time limit to 30 min, and we use the default values of the Gurobi parameters. When tackling mixed-integer second-order conic problems (PerspS), Gurobi automatically decides between solving the continuous relaxation of (41) or using an outer linear approximation for the rotated cone constraints (41b).

1.1 B.1. Best subset selection

In this section, we solve the best subset selection problem (2) with varying k on the benchmark datasets in Table 3, available from the UCI machine learning repository.Footnote 1 The performance measures considered are solution time in seconds (for PerspS, we include the time for solving the SDP problem in the total solution time), the number of nodes explored (denoted as #node), and the initial percentage optimality gap of the continuous relaxation (denoted as %gap). We also record the optimality gaps attained at the root node after presolve for MILO, under the %gap column (in parentheses). Denoting the optimal objective value of a continuous relaxation by \({\textbf {LB}}\) and the exact optimal value (or the best upper bound) by \({\textbf {OPT}}\), the initial optimality gap is calculated as % gap = 100 \(\times \frac{{\textbf {OPT}} - {\textbf {LB}}}{{\textbf {OPT}}}\). For instances that hit the time limit, we report the average end gap in parentheses.

Table 4 shows the performance of the different formulations on these benchmark datasets. We observe that the relaxation quality of MILO is poor, with optimality gaps well above 100% (in the range of \(10^3-10^7\)%). Indeed, even though the objective of (2) has a trivial lower bound of 0, the objective values produced by the continuous relaxation of MILO are in all cases negative. The bad relaxation quality leads to large numbers of branch-and-bound nodes and solution times. However, for the special case of \(k=0.1\) on the first three datasets, Gurobi is able to close almost all the gap at the root node and solve the problems with little or no branching. Thus, while the results clearly indicate that at the moment—in the context of a general MIQO—standard methods are better than the MILO formulation, in some cases, solvers might be able to exploit the polyhedrality of MILO. In the next section, we present experiments showcasing this phenomenon.

1.2 B.2. Inference with graphical models

Given a graph \(G = (V, E)\), we consider the following MIQO problem

Problem (42) arises in the sparse inference problem of a two-dimensional Gaussian Markov random field (GMRF), see [29] for an in-depth discussion.

The graph G we consider in our experiment is a two-dimensional \(10 \times 10\) grid.

The corresponding Hessian matrix Q in problem (42) is sparse: each row has at most five nonzero entries (including the diagonal element). We use the random instances from [25], available at https://sites.google.com/usc.edu/gomez/data, where \(y_i = x_i + {\mathcal {N}}(0, \sigma )\) is a noisy observation of x, and there are three randomly sampled \(3 \times 3\) blocks of x to be nonzero. Note that \(\sigma \) affects both the noise level of y and the diagonal dominance of Q in (42), with small noise values \(\sigma \) resulting in problems with larger diagonal dominance. We test on \(\sigma = 0.1, 0.2, 0.3, 0.4, 0.5\) and sparsity levels \(k = 0.1, 0.2, 0.3, 0.4, 0.5\). For each \(\sigma \), we use five randomly generated instances and report the average statistics.

Table 5 summarizes the results. Similar to the experiments reported in Sect. B.1, the continuous relaxation of MILO is the worst among the three formulations, with gaps well over 100%. However, in this case, Gurobi closes virtually all optimality gap in all the instances, and the problems are solved very fast with at most one branch-and-bound node. The overall performance is significantly better than using the natural MIQO formulation, and very close to and in some cases (e.g., \(\sigma =0.5, k>0.1\)) faster than perspective reformulation for these instances.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wei, L., Atamtürk, A., Gómez, A. et al. On the convex hull of convex quadratic optimization problems with indicators. Math. Program. 204, 703–737 (2024). https://doi.org/10.1007/s10107-023-01982-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-023-01982-0