Abstract

With the growing significance of environmental awareness, the role of renewable materials and their reuse and recycling possibilities have become increasingly important. Wood is one of the best examples of this, as it is a material that has a variety of primary uses, while also being a prime candidate for reuse and recycling. An important phase in most waste wood value chains is the processing of bulk waste from various sources, usually by means of shredding. This paper presents methods for scheduling the machines in such a waste wood processing facility, where incoming deliveries of different types of wood are processed by a series of treatment and transformation steps to produce shredded wood. Two mathematical models are developed for the problem, both of which allow overlaps between consecutive steps to optimize resource flow through the system. One of these is a more traditional discrete-time model, while the other is precedence-based and uses continuous-time variables for the timing of the various tasks. Both modeling techniques have their advantages and shortcomings with regard to the ease of integration of further problem-specific parameters and requirements. Besides providing a sound approach for the identified problem class, another aim is to evaluate which technique is better suited to this problem class and should be used as a basis for extended and integrated cases in the future. Thus, the performance of these models is compared on instances that were randomly generated based on real-world distributions from the literature.

1 Introduction

With the increasing focus on sustainability and environmental awareness, the various transformation processes of renewable materials and their reuse and recycling possibilities are experiencing a growing interest. Wood is one of the prime examples of such a renewable material, as it has a huge variety of primary uses (e.g. construction, furniture, energy, paper) while also being a prime candidate for reuse and recycling. Two overlapping fields both contribute to this concept; namely, cascading use of wood and circular economy. Cascading tries to enhance the utilization of wood through the sequential steps of reuse, recycle, and finally, transformation to energy (Jarre et al. 2020). This can result in the decrease of fresh wood used as a resource and provides an opportunity for discarded wooden products to be used instead. The movement of resources in such a network of various woodworking sectors was modeled by Taskhiri et al. (2019) for a use-case in Lower Saxony, Germany. Circular economy is a concept where the traditional forward supply chain of products is augmented by a reverse supply chain as well, where the focus is on the optimization of resource usage by repair, reuse, refurbishing, remanufacturing, and recycling, keeping resources in this closed loop as long as possible (Balanay et al. 2022).

Optimization of the traditional forward product flows of wood is a well-researched area, with problems ranging from harvesting (Santos et al. 2019; Mesquita et al. 2021) through facility-level decision-making (e.g. sawmilling Maturana et al. 2010), cutting pattern optimization (Koch et al. 2009) to network-level modeling (Pekka et al. 2019). However, the utilization of waste wood and the reverse flows of this material are not widely studied. Waste wood can originate from a variety of sources, the two main groups being residual industrial wood (from the woodworking industry) and used wooden products (ranging from demolition waste to household items). However, similarly to other biomass residues, waste wood is mostly considered a resource for energy production (Tripathi et al. 2019), and scientific studies usually concentrate on this aspect, while there might be more sustainable recycle possibilities (Garcia and Hora 2017).

As mentioned above, the literature studying the optimization problems of waste wood is scarce. The two main research areas are the resource flow of waste wood for energy (Greinert et al. 2019; Marchenko et al. 2020) and the optimization problems of network design (Devjak et al. 1994; Burnard et al. 2015; Trochu et al. 2018; Egri et al. 2021). While these studies concentrate mostly on network-level decisions, optimization problems in the nodes of waste wood logistics networks should also be studied. One important step is the processing of the collected waste, which is usually done through shredding, as most end-uses (e.g. energy or chipboard) require wood to be shredded to a certain size.

This paper presents the scheduling of a waste wood processing plant, where the incoming wood deliveries are processed by a series of transformation steps to produce shredded wood. Two mathematical models are designed for the problem to handle the overlapping of the automated processing steps in order to provide as continuous operation as possible. One of these is a discrete-time model, which is closer to the classical way of scheduling tasks in such a facility (Floudas and Lin 2004), while the other is a precedence-based model that utilizes continuous-time variables for the timing of activities. While precedence-based models tend to achieve better performance if all tasks to be executed are known in advance, they are not trivial to extend with capacity constraints on shared resources or to allow preemption. Time discretization models, on the other hand, can model such features easily, but usually require a larger number of integer variables. Moreover, the investigated problem class features a special processing step, whose position in the production sequence depends on resource assignment. The performance effects of modeling such a unique requirement for either technique were unknown a priori and also contributed to the goals of our investigation. The efficiency of the proposed models is shown on instances that were randomly generated based on real-world distributions from the literature, and their results will be compared on these same datasets.

This work is an extension of the conference proceedings publication of Dávid et al. (2021b), which acts as a preliminary version of this paper. While the above publication only presented the major parts of the precedence-based model, this paper presents both a discrete- and continuous-time model for the representation of the waste wood shredding problem. The presented topic is a variation of our previously published work on scheduling waste wood processing facilities in Dávid et al. (2021a). This previous study considered the uncertainty of the type and origin of the incoming deliveries (which is not tackled in the current paper), but it did not allow any overlapping between the various processes of the facility and only tackled the continuous case.

2 Problem definition

The proposed models in Sects. 3 and 4 minimize the total weighted lateness of a plant that processes waste wood deliveries from collection centers and households. Each delivery has a given arrival time, a due date for completing its processing, and a priority weight.

There are 5 main processing steps each delivery has to go through:

- Inspection and sorting (\({{\texttt{IS}}}\))
- Metal separation (\({{\texttt{MS}}}\))
- Coating removal (\({{\texttt{CR}}}\))
- Shredding (\({{\texttt{SH}}}\)) and reshredding (\({{\texttt{RS}}}\))
- Screening (\({{\texttt{SC}}}\))

The main step is shredding, for which dedicated, high-throughput machines are available. Subsequently, shredded wood goes through screening, where large pieces are selected for reshredding. Reshredding is executed by the same shredding machines, while screening also has its dedicated units.

Metal separation can be done either by a magnetic separator in an automated fashion or manually by a dedicated crew. In the former case, it has to be done after the main shredding operation; otherwise, it follows inspection and precedes coating removal. Inspection and coating removal are each carried out by a dedicated crew, and, depending on the quality of the delivery, coating removal is needed only for a portion of it.

The overall process of the plant is illustrated in Fig. 1.

Intermediate materials produced by machines may be transported to the subsequent step while the rest of the batch is still being processed, i.e., these steps behave in a continuous fashion. Manual steps, however, can be considered batch subprocesses. Neither the manual nor the automated steps may be interrupted except at the end of a shift, in which case production must resume from the same state when the next shift starts. Automated steps may have an input storage/buffer that can hold shredded wood for as long as needed. The capacities of these storages are sufficiently large to enable fully batch operation if equipment availability requires it.

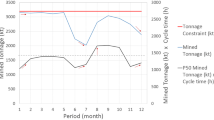

Screening is always done in parallel with shredding, and the selected wood parts are redirected back to the same machine. Thus, shredding cannot end while screening (and metal separation, if done automatically) is still in progress. The possible timing of these steps is illustrated in Fig. 2 with both options for metal separation.

There may be several available machines for automated steps, which can be assigned to either different or the same delivery at a given time. The dedicated crews, however, may not be split up between different jobs.

The wood type of a delivery may be solid (\({{\texttt{S}}}\)) or derived (\({{\texttt{D}}}\)), which is the main factor determining the percentage of wood that requires coating removal and the percentage of shredded wood that requires reshredding. These values are estimated based on statistical data from the literature.

Formally, the problem data may be given by the following sets and the parameters listed in Table 1:

- J: finite set of jobs/deliveries
- S \(=\{{{\texttt{IS}}},{{\texttt{MS}}},{{\texttt{CR}}},{{\texttt{SH}}},{{\texttt{SC}}}\}\): set of steps
- M: finite set of machines and dedicated crews

Note that, from the modeling point of view, dedicated crews do not differ from machines, as they cannot be split. Therefore, M is considered to include \(\{crew\_{{\texttt{IS}}}, crew\_{{\texttt{CR}}}, crew\_{{\texttt{MS}}}\}\), which refer to the manual execution of the corresponding steps.

Each machine/crew is dedicated to the execution of a single subprocess. \(s_m\) will refer to the step which \(m\in M\) can process.

3 Discrete-time model

A discrete-time model was the first to be developed for the problem as this is closer to the classical way of scheduling the timing of real-world events inside a facility. Tasks can only start at predefined time points, which may be suboptimal but easier to apply in practice with work shifts.

Time is discretized through a global time grid, whose time points are \(\Delta \) hours from each other. The last time point is set to 3 days later than the latest deadline, to account for the delay:

The index set of the time points is denoted by P:

The value of \(\Delta \) should be equal to the shift length, h, or \(\frac{h}{2}, \frac{h}{3}, \frac{h}{4}, \dots \), so there is a time point at the start of each shift.

Material flows are modeled with a State-Task Network (STN) representation, which was introduced by Kondili et al. (1993). The recipe has two production paths, as the task sequence is different depending on whether manual or automated metal separation is used. To model this, 2 different states are defined for intermediate states after inspection and sorting in the set of resource states:

The tasks are also duplicated to account for the two production paths, so the input and output states of the two task variants can be different. For each step \(s \in S\), its input is given by \(in^m_s\) for the manual variant, and \(in^a_s\) for the automated variant. Similarly, the output is given by \(out^m_s\) and \(out^a_s\). The inputs and outputs can be seen in Fig. 3, where rectangles represent tasks, and circles denote states.

As due dates and arrival times are given in days, some relations between days and time points must be defined. The set of days is denoted by D:

The time point at the start of day d is defined as \(st_d = \frac{d\cdot h}{\Delta }\).
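The relation between the shift length, the grid step, and the day start points can be illustrated with a short sketch. It assumes one shift of h hours per working day (as the formula for \(st_d\) suggests) and integer inputs; the function names `grid_step` and `day_start_point` are hypothetical and are not part of the model.

```python
from fractions import Fraction


def grid_step(shift_length_h, divisor):
    """Return Delta = h / divisor, so every shift start falls on a time point."""
    if divisor < 1:
        raise ValueError("divisor must be a positive integer")
    return Fraction(shift_length_h) / divisor


def day_start_point(day, shift_length_h, delta):
    """Index of the time point at the start of day d: st_d = d * h / Delta."""
    index = Fraction(day) * Fraction(shift_length_h) / Fraction(delta)
    if index.denominator != 1:
        raise ValueError("Delta does not divide the shift length evenly")
    return int(index)


# Illustrative values only: an 8-hour shift with a 2-hour grid.
h = 8
delta = grid_step(h, 4)
starts = [day_start_point(d, h, delta) for d in range(4)]
```

Exact fractions are used instead of floating-point numbers so that the divisibility check is not affected by rounding.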

To account for the percentages requiring coating removal and reshredding, virtual throughputs are defined for the machine-job pairs. If 20% needs coating removal from job j, the virtual throughput of this step will be 5 times the normal throughput. Conversely, if 20% needs reshredding, the virtual throughput of the shredding and screening steps will be the normal throughput divided by 1.2. In general, the virtual throughput \(c^v_{m,j}\) is calculated as:
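The two numerical examples above can be reproduced with a short sketch. It assumes that the virtual throughput of coating removal is the nominal throughput divided by the share of material that needs coating removal, and that the virtual throughput of shredding and screening is the nominal throughput divided by one plus the reshredded share; all other steps are assumed to keep their nominal throughput. The function name and argument names are hypothetical.

```python
def virtual_throughput(throughput, step, coating_share, reshred_share):
    """Scale a machine's nominal throughput for one job (illustrative sketch).

    coating_share: fraction of the delivery that needs coating removal (0 < x <= 1)
    reshred_share: fraction of shredded wood that returns for reshredding (x >= 0)
    """
    if step == "CR":
        if not 0 < coating_share <= 1:
            raise ValueError("coating_share must be in (0, 1]")
        # Only a portion of the job passes through, so the job appears to move faster.
        return throughput / coating_share
    if step in ("SH", "SC"):
        if reshred_share < 0:
            raise ValueError("reshred_share must be non-negative")
        # Reshredded material passes through again, so the job appears to move slower.
        return throughput / (1 + reshred_share)
    return throughput
```

For example, with a nominal throughput of 10 t/h and a 20% coating share, `virtual_throughput(10, "CR", 0.2, 0.2)` yields approximately 50 t/h, i.e. five times the nominal value.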

The arriving material quantities are calculated for each time point:

A big-M parameter is required for setting quantities. This is defined as:

3.1 Decision variables

To avoid increasing the number of variables, tasks are not duplicated in the model to represent the variants on the two production paths. Instead, a separate binary variable, \(a_j\) decides whether automated (\(a_j=1\)) or manual (\(a_j=0\)) metal separation is used for job j.

The variables of the discrete-time model are listed in Table 2.

3.2 Constraints

As tasks are nonpreemptive, each must start exactly once. This is set by Eq. (1).

A machine can only be processing a job at a time point if its corresponding step is started at the same time point, or if it was already processing the job in the previous time point. Equation (2) ensures this processing continuity, while Eq. (3) sets the bound for the first time point.

Equation (4) prohibits a machine from processing multiple jobs at the same time.
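The logic described for Eqs. (1) to (4) can be illustrated by a small checker that tests a given 0/1 schedule of a single machine against the stated rules. This is only a sketch of the verbal description, not the constraints themselves; the names `start` and `run` are hypothetical, and every listed job is assumed to be processed on this one machine.

```python
def check_machine_schedule(start, run):
    """Check one machine's schedule against the rules described for Eqs. (1)-(4).

    start[j][p] is 1 if job j starts at time point p, run[j][p] is 1 if the
    machine is processing job j at time point p. Returns a list of violations.
    """
    violations = []
    jobs = list(start)
    n_points = len(next(iter(start.values())))
    for j in jobs:
        # Nonpreemptive tasks start exactly once.
        if sum(start[j]) != 1:
            violations.append(f"job {j}: must start exactly once")
        for p in range(n_points):
            # At the first time point there is no previous processing to continue.
            previous = run[j][p - 1] if p > 0 else 0
            if run[j][p] > start[j][p] + previous:
                violations.append(f"job {j}: processing at point {p} without start")
    for p in range(n_points):
        # A machine handles at most one job at a time.
        if sum(run[j][p] for j in jobs) > 1:
            violations.append(f"point {p}: several jobs on the machine")
    return violations
```

An empty result means that the schedule is consistent with all four rules as described in the text.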

The quantity processed at a time point by a machine is bounded by its throughput capacity in Eq. (5).

The total mass consumed and produced from each resource state is calculated from the machine quantities. As a machine can have different input and output states based on the production path selected by \(a_j\), separate constraints are needed for the 2 scenarios. Consumption is lower bounded in Eqs. (6) and (7) and production is upper bounded in Eqs. (8) and (9). Produced output always appears in the subsequent time point from the one where its input was consumed. Therefore, no production is allowed at the start, as stated by Eq. (10).

Material balance is set by Eq. (11) by calculating the change of stored quantity from a resource by adding the produced and arrived quantities and subtracting the consumed quantity from the storage level of the previous time point. At the first time point, production is not possible, so the difference between the initial supply and the consumption will be the stored quantity, as set by Eq. (12).
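The balance described above can be traced for a single resource state with a few lines of code. This is a sketch of the verbal description only; it treats the arrival at the first time point as the initial supply, and the function and argument names are hypothetical.

```python
def storage_levels(arrived, produced, consumed):
    """Stored quantity of one resource state at each time point (sketch).

    Level at the first point: initial supply minus consumption.
    Level at later points: previous level + produced + arrived - consumed.
    """
    if produced[0] != 0:
        raise ValueError("no production is possible at the first time point")
    levels = [arrived[0] - consumed[0]]
    for p in range(1, len(consumed)):
        levels.append(levels[-1] + produced[p] + arrived[p] - consumed[p])
    if min(levels) < 0:
        raise ValueError("consumption exceeds the available material")
    return levels


# Illustrative quantities in tonnes, not taken from the test instances.
levels = storage_levels(
    arrived=[10, 0, 5, 0],
    produced=[0, 2, 0, 0],
    consumed=[4, 6, 0, 5],
)
```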

Equation (13) ensures the production of the final products in the required quantities.

The above formulation would allow the overlapping of manual steps. However, as stated in the problem definition, these are fully batch tasks, and no overlap is allowed. To model this, Eqs. (15) to (17) ensure that the precedence requirements are satisfied between applicable task pairs.

A percentage of the material must return for reshredding, so shredding cannot finish before the end of screening. Eq. (18) ensures that shredding machines with sufficient throughput capacity remain active during the screening process. If metal separation is automated, the reshredded quantity enters this step again too. In this case, Eq. (19) ensures that metal separation machines with sufficient capacity stay active too.

3.3 Objective function

To calculate the lateness, the unfinished variables are bounded by Eq. (20). If a job is being processed at a time point, it is unfinished on the day corresponding to that time point, and all previous days.

In the objective function, the weighted count of the unfinished days after the due date of a job is calculated:
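How such a weighted count behaves can be sketched as follows. The sketch assumes that days are indexed by integers, that a job is unfinished on every day up to and including the last day on which it is processed, and that only days strictly after the due day are counted; the function names and the tuple layout are hypothetical.

```python
def weighted_late_days(last_processing_day, due_day, weight):
    """Weight times the number of unfinished days after the due day (sketch)."""
    late_days = max(0, last_processing_day - due_day)
    return weight * late_days


def total_weighted_lateness(jobs):
    """jobs: iterable of (last_processing_day, due_day, weight) tuples."""
    return sum(weighted_late_days(last, due, w) for last, due, w in jobs)


# Illustrative jobs: the first one is two days late, the second one is on time.
objective = total_weighted_lateness([(5, 3, 2), (2, 4, 1)])
```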

4 Precedence-based model

The second proposed model is based on precedence, i.e., the timeline is not discretized, continuous variables are assigned to the timing of events, and binary precedence variables are responsible for sequencing.

Before introducing the decision variables and constraints, several helper sets are defined to ease later formalism. A specific step of a specific job will be referred to as a task, which consists of a (j, s) pair. The set of all tasks is denoted by T:

The amount of material from a delivery that undergoes a certain step differs for steps and different material types. To ease formulation, the quantity of a task is denoted by \(q_{js}\), which can be defined as:

As each machine is dedicated to a step, assignments are made between jobs and machines, and the step can be deduced unambiguously. The set of all possible assignments is denoted by A:

Any pair of jobs may be assigned to the same machine/crew, thus, there is a chance of a collision that may require sequencing. The set of these triplets is contained in set C:

Finally, a big-M parameter is needed in several constraints. A suitable value can be given as:

4.1 Decision variables

The model uses the decision variables presented in Table 3.

Since shutdown and setup times of machines are negligible, they are omitted from the model: the time representation simply joins all of the shifts together and treats them as a continuous time horizon. The model also assumes that if a step of a job is split up among several machines, those machines start and finish working simultaneously.

4.2 Constraints

The first set of constraints enforces logical and mass balance constraints between the aforementioned variables.

Equation (21) enforces the common relation between precedence variables. If the execution of both tasks starts at the same time, one of the precedence variables can arbitrarily be chosen to be 1. In practice, this constraint reduces the number of these binary variables by half.

If the manual crew is assigned to metal separation, the other machines cannot be assigned to it. This is expressed by Eq. (22).

If a machine/crew is not assigned to a job, it cannot process any of it, otherwise, the maximum quantity is the total mass for that step, as expressed in Eq. (23).

Equations (24) and (25) express that the total amount of processed quantity for a task should equal the mass of the job for that step.

The completion time of a task must follow its starting time by at least the time that the assigned crew/machine needs to process the assigned quantity. Equation (26) expresses this relation. Note that the constraint binds for the non-assigned machines/crews as well; it is not relaxed by assignment variables. However, in that case, the assigned quantity is zero, thus the constraint simply implies that the completion time is not earlier than the starting time, which must be true for all tasks.

The correct sequencing of the tasks of the same job is expressed by Eqs. (27) to (35). Some of these precedences are constant, others depend on the manual/automated assignment for metal separation.

Sequencing of the tasks assigned to the same machine or crew is expressed by Eq. (36). This constraint binds only when both (j, s) and \((j',s)\) are assigned to m and j is decided to precede \(j'\) in that stage.
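The effect of the duration and machine sequencing requirements can be illustrated by checking a candidate continuous-time schedule for one machine. The sketch below is not the MILP formulation; it only tests the two conditions described in the text (enough time for the assigned quantity, and no overlap between different jobs on the same machine). The function name, the tuple layout, and the tolerance are hypothetical.

```python
def check_machine_sequence(tasks, throughput, tol=1e-9):
    """tasks: list of (job, start, completion, quantity) on one machine/crew.

    Returns a list of human-readable problems; an empty list means the
    timing is consistent with the duration and sequencing requirements.
    """
    problems = []
    for job, start, completion, quantity in tasks:
        # Completion must follow the start by at least quantity / throughput.
        if completion + tol < start + quantity / throughput:
            problems.append(f"{job}: too short for its quantity")
    # Jobs sharing the machine must not overlap: compare neighbours by start time.
    ordered = sorted(tasks, key=lambda t: t[1])
    for (j1, _, c1, _), (j2, s2, _, _) in zip(ordered, ordered[1:]):
        if s2 + tol < c1:
            problems.append(f"{j1} and {j2}: overlap on the machine")
    return problems
```

In the model itself, the order of two jobs on a machine is a decision expressed by the binary precedence variables, whereas this checker simply reads the order from the given start times.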

4.3 Objective function

In order to express the objective function, the lateness variables must be bound to the \(t^c_{j,{{\texttt{SH}}}}\) variables, as those mark the end of processing for each job. This relation is expressed in Eq. (37).

Then, the objective function can be expressed as:

5 Case study

The models introduced in the previous sections were both tested on the same sets of instances. As acquiring real-life datasets with varied sizes and structures is not a trivial task, the models were tested with the methodology presented in Dávid et al. (2021a): input instances were randomly generated based on available distribution data. Arrival times and deadlines of the deliveries were also chosen randomly, either in a 1-week or 2-week interval, depending on the instance set. Deadlines were due 3–5 days after arrival, which resulted in instances having either a 12-day (referred to as 1-week instances) or a 19-day (referred to as 2-week instances) planning horizon (with the latest arrival plus the latest deadline). The number of deliveries was a multiple of 5 between 5 and 25 for the 1-week instances, and between 5 and 40 for the 2-week instances. The features of the waste wood deliveries were determined based on statistics in Kharazipour and Kües (2007), their sizes were determined using the capacities of biomass transport trucks (Laitila et al. 2016), and publicly available data on real machines (Komptech 2022) were used to determine throughput. Two different delivery types were used: small deliveries had a mass corresponding to the payload of a small truck (6–15 t), and large deliveries had a mass corresponding to the payload of an average-sized biomass transport truck (31–49 t). Masses of both delivery types were chosen uniformly at random from these intervals. This resulted in 13 instance sets (one for each pair of delivery size and delivery number, e.g. ‘25 large deliveries’), with 10 different random instances in each set. Both models were solved for all instances using the Gurobi 9.1 solver on a PC with an Intel Core i7-5820K 3.30 GHz CPU and 32 GB RAM. A running time limit of 3600 s was applied to all test runs.
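A generator in the spirit of this description can be sketched as follows. Only the mass intervals and the 3–5 day deadline offset are taken from the text; the integer day indexing, the uniform choice of arrival days and offsets, the field names, and the omission of wood type and priority weight are assumptions made for illustration.

```python
import random

# Mass intervals in tonnes, as stated for the two delivery types.
MASS_RANGE_T = {"small": (6, 15), "large": (31, 49)}


def generate_instance(n_deliveries, arrival_days, size, seed=None):
    """Generate one random instance (illustrative sketch, not the authors' generator)."""
    rng = random.Random(seed)
    low, high = MASS_RANGE_T[size]
    deliveries = []
    for _ in range(n_deliveries):
        arrival = rng.randrange(arrival_days)  # day index within the arrival window
        due = arrival + rng.randint(3, 5)      # deadline 3-5 days after arrival
        mass = rng.uniform(low, high)          # uniformly random mass in tonnes
        deliveries.append({"arrival_day": arrival, "due_day": due, "mass_t": mass})
    return deliveries


instance = generate_instance(25, 7, "large", seed=1)
```

With a 7-day arrival window, the latest possible due day is day index 11, which is consistent with the 12-day planning horizon mentioned above.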

First, test results for instances with small deliveries will be presented for both the discrete- and continuous-time models (DT and CT respectively), followed by the results for instances with large deliveries. The rows of every result table present aggregated information for the 10 inputs of a specific instance set. The planning horizon and the applied model are given in the table description, while the header of the row (Jobs) denotes the number of jobs in each input of the given instance set. This is followed by three sets of information about the solution of these inputs; the number of instances that were solved to optimality in the given time limit (Opt) and their average running time (Avg. time (s)), the number of instances that only yielded suboptimal solutions in 3600 s (Subopt.) and their average optimality gap (Avg. gap), and finally the number of instances where no feasible solution was found (No sol.).
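The aggregation behind one row of such a table can be sketched as below. The record layout (`status`, `time_s`, `gap`) and the status labels are hypothetical, and the sample records are made-up values that only demonstrate the computation; they are not results from the paper.

```python
def summarize(results):
    """Aggregate the runs of one instance set into the columns of a result row."""
    optimal = [r for r in results if r["status"] == "optimal"]
    suboptimal = [r for r in results if r["status"] == "suboptimal"]
    no_solution = [r for r in results if r["status"] == "none"]

    def mean(values):
        values = list(values)
        return sum(values) / len(values) if values else None

    return {
        "Opt": len(optimal),
        "Avg. time (s)": mean(r["time_s"] for r in optimal),
        "Subopt.": len(suboptimal),
        "Avg. gap": mean(r["gap"] for r in suboptimal),
        "No sol.": len(no_solution),
    }


# Made-up sample records for demonstration only.
row = summarize([
    {"status": "optimal", "time_s": 1.0, "gap": 0.0},
    {"status": "optimal", "time_s": 3.0, "gap": 0.0},
    {"status": "suboptimal", "time_s": 3600.0, "gap": 0.1},
    {"status": "none", "time_s": 3600.0, "gap": None},
])
```

Averages are reported as `None` when a category is empty, which corresponds to an empty table cell.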

5.1 Small deliveries

Solutions for the instances with small deliveries over a 1- and 2-week arrival horizon are presented in Tables 4 and 6 for the discrete-time model and in Tables 5 and 7 for the continuous-time model.

Results of the DT model show that the optimal solution of the inputs within the time limit is only possible in the smallest instance sets (5 and 10 jobs) for both 1- and 2-week horizons. Moreover, this model failed to provide even feasible solutions for inputs from larger instance sets (20 and 25 in the 1-week cases and 30, 35, and 40 in the 2-week cases).

On the other hand, the performance of the CT model was good in all of the above instances. This model found optimal solutions for every input, with an average running time of under 1 s in every case (except for the 2-week set with 40 deliveries, where the average running time is slightly above 1 s).

5.2 Large deliveries

Solutions for the instances with large deliveries over a 1- and 2-week arrival horizon are presented in Tables 8 and 10 for the discrete-time model and in Tables 9 and 11 for the continuous-time model.

Observations here are similar to the instance sets with small deliveries. The DT model again only provides optimal solutions within the time limit for the smallest instance sets (5 and 10 jobs) for both 1- and 2-week horizons. Feasible solutions are once again not achieved at all for inputs of larger instance sets (20 and 25 in the 1-week cases and 30, 35, and 40 in the 2-week cases).

The CT model performed well in all of the above instances. However, optimal solutions were not found for every instance set within the set time limit. Except for the largest instances (25 jobs in the 1-week case and 35 and 40 jobs in the 2-week case), every input is either solved to optimality in under a minute or a suboptimal solution is found within the given time limit.

It can be seen from all the above results that the CT model outperformed the DT model for every instance set in any combination of 1- or 2-week planning horizons and small or large deliveries in the input. While the DT model had problems finding even feasible solutions for mid- and large-sized inputs, the CT model provided optimal solutions to all instances with small deliveries, and found feasible or optimal solutions to the majority of instance sets, except for the largest inputs in each group.

6 Conclusions

This paper presented the problem of machine scheduling in a waste wood processing plant where the incoming deliveries of wood are shredded. Two mixed-integer linear programming models were developed for the problem that allow overlapping of the machine processing steps, so the flow of material can be as continuous throughout the facility as possible. Moreover, the presented models address a unique scheduling feature of the investigated problem class, where resource assignment affects task dependencies within the recipe. The two models were compared to each other on instances that were randomly generated based on real-life statistical data.

While both models can tackle the considered problem class, the results of computational tests showed that the continuous-time model performs significantly better in all cases. It is capable of scheduling a large number of jobs over the short-term period of one week and a mid-term period of two weeks within a short solution time. This performance warrants further investigation in the application of such models for more complex, extended, or integrated scheduling problems with similar time frames. On the other hand, while such an extension may be simpler with discrete-time models, the tests showed that significant improvements have to be made before this type of model can be considered for more complex problems. Moreover, the development of metaheuristic algorithms, together with exhaustive testing of larger planning periods of several weeks and a mix of different deliveries, can be conducted in the scope of future work. Ideally, real-world datasets should also be collected and used for the testing of these models.

References

Balanay RM, Varela RP, Halog AB (2022) Chapter 25 - circular economy for the sustainability of the wood-based industry: the case of Caraga region, Philippines. In: Stefanakis A, Nikolaou I (eds) Circular economy and sustainability. Elsevier, Amsterdam, pp 447–462. https://doi.org/10.1016/B978-0-12-821664-4.00016-9

Burnard M, Tavzes Č, Tošić A, et al (2015) The role of reverse logistics in recycling of wood products. In: Environmental Implications of Recycling and Recycled Products. Springer Singapore, pp 1–30 https://doi.org/10.1007/978-981-287-643-0_1

Devjak S, Tratnik M, Merzelj F (1994) Model of optimization of wood waste processing in Slovenia. In: Bachem A, Derigs U, Jünger M et al (eds) Operations research ’93. Physica-Verlag HD, London, pp 103–107

Dávid B, Ősz O, Hegyháti M (2021a) Robust scheduling of waste wood processing plants with uncertain delivery sources and quality. Sustainability 13(9):5007. https://doi.org/10.3390/su13095007

Dávid B, Ősz O, Hegyháti M (2021b) Scheduling of waste wood processing facilities with overlapping jobs. pp 321–326, https://www.scopus.com/inward/record.uri?eid=2-s2.0-85125182873&partnerID=40&md5=60b1d2de6db7357dee3b14d8edc683c7

Egri P, Dávid B, Kis T et al (2021) Robust facility location in reverse logistics. Ann Oper Res 1–26. https://doi.org/10.1007/s10479-021-04405-5

Floudas CA, Lin X (2004) Continuous-time versus discrete-time approaches for scheduling of chemical processes: a review. Comput Chem Eng 28:2109–2129. https://doi.org/10.1016/j.compchemeng.2004.05.002

Garcia CA, Hora G (2017) State-of-the-art of waste wood supply chain in Germany and selected European countries. Waste Manag 70:189–197. https://doi.org/10.1016/j.wasman.2017.09.025

Greinert A, Mrówczyńska M, Szefner W (2019) The use of waste biomass from the wood industry and municipal sources for energy production. Sustainability 11(11):3083. https://doi.org/10.3390/su11113083

Jarre M, Petit-Boix A, Priefer C et al (2020) Transforming the bio-based sector towards a circular economy-What can we learn from wood cascading? Forest Policy Econ 110(101):872. https://doi.org/10.1016/j.forpol.2019.01.017

Kharazipour A, Kües U (2007) Recycling of wood composites and solid wood products. Universitätsverlag Göttingen, Germany, pp 509–533

Koch S, König S, Wäscher G (2009) Integer linear programming for a cutting problem in the wood-processing industry: a case study. Int Trans Oper Res 16(6):715–726. https://doi.org/10.1111/j.1475-3995.2009.00704.x

Komptech (2022) Stationary machines. https://komptechamericas.com/wp-content/uploads/2019/03/1-StationaryMachines-KTA.pdf

Kondili E, Pantelides C, Sargent R (1993) A general algorithm for short-term scheduling of batch operations-I. MILP formulation. Comput Chem Eng 17(2):211–227. https://doi.org/10.1016/0098-1354(93)80015-F

Laitila J, Asikainen A, Ranta T (2016) Cost analysis of transporting forest chips and forest industry by-products with large truck-trailers in Finland. Biomass Bioenerg 90:252–261. https://doi.org/10.1016/j.biombioe.2016.04.011

Marchenko O, Solomin S, Kozlov A et al (2020) Economic efficiency assessment of using wood waste in cogeneration plants with multi-stage gasification. Appl Sci 10(21):7600. https://doi.org/10.3390/app10217600

Maturana S, Pizani E, Vera J (2010) Scheduling production for a sawmill: a comparison of a mathematical model versus a heuristic. Comput Ind Eng 59(4):667–674. https://doi.org/10.1016/j.cie.2010.07.016

Mesquita M, Marques S, Marques M, et al (2021) An optimization approach to design forest road networks and plan timber transportation. Op Res 1–29

Pekka H, Reetta L, Juha L et al (2019) Joining up optimisation of wood supply chains with forest management: a case study of North Karelia in Finland. For Int J For Res 93(1):163–177. https://doi.org/10.1093/forestry/cpz058

Santos PAVHd, Silva ACLd, Arce JE et al (2019) A mathematical model for the integrated optimization of harvest and transport scheduling of forest products. Forests 10(12):1110. https://doi.org/10.3390/f10121110

Taskhiri MS, Jeswani H, Geldermann J et al (2019) Optimising cascaded utilisation of wood resources considering economic and environmental aspects. Comput Chem Eng 124:302–316. https://doi.org/10.1016/j.compchemeng.2019.01.004

Tripathi N, Hills C, Singh R et al (2019) Biomass waste utilisation in low-carbon products: harnessing a major potential resource. npj Clim Atmos Sci 2:1–10. https://doi.org/10.1038/s41612-019-0093-5

Trochu J, Chaabane A, Ouhimmou M (2018) Reverse logistics network redesign under uncertainty for wood waste in the crd industry. Resour Conserv Recycl 128:32–47. https://doi.org/10.1016/j.resconrec.2017.09.011

Funding

Open access funding provided by University of Sopron. This research was supported by the National Research, Development and Innovation Office, NKFIH Grant no. 129178. Balázs Dávid gratefully acknowledges the European Commission for funding the InnoRenew CoE project (Grant Agreement #739574) under the Horizon2020 Widespread-Teaming program, and the Republic of Slovenia (Investment funding of the Republic of Slovenia and the European Union of the European Regional Development Fund). He is also grateful for the support of the Slovenian National Research Agency (ARRS) through grants N1-0093, N1-0223 and BIAT/20-21-014, and gratefully acknowledges the Slovenian National Research Agency (ARRS) and the Ministry of Economic Development and Technology (MEDT) for the grant V4-2124. He is supported in part by the University of Primorska postdoc grant No. 2991-5/2021.


Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ősz, O., Dávid, B. & Hegyháti, M. Comparison of discrete- and continuous-time models for scheduling waste wood processing facilities. Cent Eur J Oper Res 31, 853–871 (2023). https://doi.org/10.1007/s10100-023-00852-6


DOI: https://doi.org/10.1007/s10100-023-00852-6