Abstract

Interaction design/HCI seems to be at a crossroads. On the one hand, it is still about designing for engaging user experiences (UX). On the other hand, it seems to be increasingly about reducing interaction and automating human–machine interaction through the use of AI and other new technologies. In this paper, we explore this seemingly unavoidable gap. First, we discuss the fundamental design rationality underpinning interaction and automation of interaction from classic theoretical standpoints. We then illustrate how these two come together in interaction design practice. Here we examine four examples from already published research on automation of interaction, covering how different levels of automation of interaction affect or enable new practices: coffee making, self-tracking, automated driving, and conversations with AI-based chatbots. Through an interaction analysis of these four examples, we show (1) how interaction and automation are combined in the design, (2) how interaction is dependent on a certain level of automation, and vice versa, and (3) how each example illustrates a different balance between, and integration of, interaction and automation. Based on this analysis, we propose a two-dimensional design space as a conceptual construct that takes these aspects into account to understand and analyze ways of combining interaction and automation in interaction design. We illustrate the use of the proposed two-dimensional design space, discuss its theoretical implications, and suggest it as a useful tool when designing for engaging user experiences (UX), with interaction and automation as two design materials.

1 Introduction

Interaction design/HCI seems to be at a crossroads. Artificial intelligence (AI) is sweeping across our field, changing the nature of computing [30]. AI is making it possible to automate functions and activities that, until now, have been deemed to require people. At the same time, there is also a growing interest in user experience (UX), not least in the tech industry, where the experience of active and engaged interaction with digital systems is emphasized. As suggested by [14], these two directions are also fundamentally interdependent and interrelated, and accordingly, “given the expansion of the field, there is a continued need for HCI contributions” [14, p. 99]. At the same time, these two developments, automated functionality and engaged interaction, seem to pull in different directions. On the one hand, there is an ambition to off-load, delegate, and minimize the need for human–computer interactions by using modern (AI) technology. This automation of interaction represents a shift from human–machine interaction as the primary activity to scenarios where intelligent machines can do these things for us without the need for (obvious) interaction. On the other hand, the interest in UX suggests a need to understand human–machine interactions as a foundation for the design of engaging interactions. While we could see these two trends as distinct developments, we suggest that there is a more complex relationship where these two are tightly interlinked.

There is a growing body of research on automation of everyday life (see, e.g., [6]) and how to work with automation in design for engaging interaction with interactive systems (i.e., with “scripted [automated] parts of the interaction” that “will affect the overall experience of interaction” [31, p. 93]). In this paper, we contribute to this growing strand of research by conceptually exploring how these two trends, automation and UX, are linked and how it is possible to describe and understand the relationships between automation and interaction. Much research is now being carried out with a focus on either UX (e.g., [9]) and user engagement (see, e.g., [4, 27]) or interaction with AI systems (see, e.g., [11, 17, 24]). We suggest that a focus on interaction, as a unifying and fundamental object of analysis, is needed to examine how these two are interlinked, not only to understand the overarching trends of automation and UX, but, even more importantly, from a design perspective, as these two strands are increasingly coming together in modern interaction design. Even though the use of automation might not lead to the disappearance of interaction, or implicit interactions [5, 15], it is often designed to support or complement interaction to make digital services easier to use, to a point where control and precision in interaction are substituted for probabilities and estimations. We are, of course, aware of the large existing bodies of related research on automation of interaction, AI, UX, implicit interaction, etc., in HCI, and we contribute to these strands with our focus on interaction as a unifying perspective and through our conceptual analysis. With this as our point of departure, we explore two directions before conceptually exploring how these two strands come together.

1.1 Direction I—“control and precision”—HCI and design for engaging user experiences (UX)

HCI, as a research and development program, has been dedicated to understanding and designing comfortable, engaging, and useful interactions with computers. Guiding principles have been ease of use (e.g., usability) and control (e.g., WYSIWYG, Fitts's law, direct manipulation), all with the purpose of designing machines that carry out precisely what the user wants, with high precision. The notions of control and precision have been the guiding goals behind most of these efforts. Accordingly, HCI has been about understanding user needs, requirements, and human capabilities and about designing interactive systems adjusted to these human needs and activities.

To reach this goal of control and precision in interaction design, the field of HCI has developed several design paradigms and principles, including the use of metaphors, direct manipulation, tangible interaction, and embodied interaction, just to mention a few. The field has borrowed and worked with gestalt laws from psychology to make the user interfaces easy to see, understand, and use, and with physiological knowledge from ergonomics. In short, the field has taken several approaches to arrive at a high level of usability to ensure that intended actions are made visible by the interface. The field has worked with notions such as understandability, comfort, ease of use, and learnability to ensure that the user feels that they are “in control” and that they can carry out intended interactions with or through these interactive systems with a high degree of precision.

But control and precision were not the only goals driving this development. With a focus on the active user, who interacts with these systems through interaction modalities that allow for direct manipulation, navigation, explorations, and even tangible or embodied forms of interaction, there have been complementary goals for making these sessions successful. A complementary goal has been to design for engaging user experiences (UX). We see this in the development of VR caves (with related notions of “immersion” and feeling present), or for website and computer game design, where the user should not just use the website or the game but should be entertained, engaged, and committed. In short, by applying a user-centered approach, the field has been occupied with the design of interactive systems along with user requirements, wants, and needs to ensure precision and control while also providing aspects of what it should feel like or be about when using these interactive technologies—that is, a great user experience (UX).

1.2 Direction II—“probabilities and estimations”—automation of interaction

We now turn to the second movement, the automation of interaction. As suggested by Shekhar [23], “Automation has introduced a system of computer and machines and replaced a system that was built by combining man and machine” [23, p. 14], where “Automation basically means making a software or hardware which is capable of automatically doing things and that too without any form of human intervention”. In contrast, “Artificial intelligence on the other hand is a science as well as engineering which is involved in making machines which are intelligent. AI is about attempting to make machines mimic or even try to supersede human intelligence and behavior” [23, p. 14]. Increasingly, AI is now used for automation. For instance, generative AI applications such as ChatGPT can generate text on behalf of their users (prompt-based interaction), serving as automation of the writing process. This trend towards the use of AI for automation has also led to a situation where “both these terms are used interchangeably in daily life” although “there are also big differences between these two. These differences correspond to the complexity level of both systems” [23, p. 14].

Further, the use of AI and other new technologies offers new functionality and performance. As suggested by Yarlagadda [33] “Artificial Intelligence and RPA automation are most likely to outperform labor in ten years hence replacing human labor” [33, p. 365]. On the other hand, Acemoglu and Restrepo [1] suggest that “we are far from a satisfactory understanding of how automation in general, and AI and robotics in particular, impact the labor market and productivity. On the one side are the alarmist arguments that the oncoming advances in AI and robotics will spell the end of work by humans, while many economists on the other side claim that because technological breakthroughs in the past have eventually increased the demand for labor and wages, there is no reason to be concerned that this time will be any different.” [1, p. 197].

This development towards automation of interaction offers an alternative to the classic HCI concepts of precision, ease of use, usability, control, and engagement. In fact, along with this movement, ease of use in many cases means “no interaction” or minimal interaction since the work is delegated to the machine. Interaction is substituted with automation. We refer to this as the automation of interaction. At the same time, the second notion, control, is also changed: from control as a matter of the user being in control and precisely carrying out what they want to do with a computer, to a matter of “controlling” that the system is doing what it is expected to do and that it is not doing something unwanted.

Here, this shift in the meaning of the notion of “control” has led to calls for explainable and graspable AI [6] and responsible AI [3], and issues of ethics and trust in AI systems have been foregrounded (see, e.g., [12, 18, 29]) to ensure that automatic/intelligent systems are doing what is intended and expected. While the movement towards “control and precision” foregrounded direct manipulation and active and engaged users, this movement towards automation of interaction leads to emerging worries related to the role of interactive systems and how they should act with users and on their behalf. In short, this trend seems to be about reducing interaction or substituting it with automation of interaction.

2 Related work—balancing interaction and automation

The field of HCI research and development has always been occupied with the question of how the interaction between humans and machines should be shaped and which activities should be assigned to the human and which ones to the machine. Since this is a broad and wide-ranging topic, it would be possible to read almost all HCI research as related work to our investigation. Instead of including all potentially related work, we will only mention a few that more specifically address this balance between interaction and automation and how these two can be integrated into interaction design.

It has been argued that successful automation is about establishing a good balance between automation and human control [16]. The need to automate some aspects of an interaction, while enabling the user to interact with, control, or feel engaged, has most recently been highlighted by Shneiderman [25, p. 60], who argues that designing human-centered AI requires some form of balance (to ensure that the user understands the system as reliable, safe, and trustworthy). This notion of “balance” between “automation of interaction” and manual interaction has also been approached by other researchers. For instance, Lew and Schumacher [17] argue that AI cannot be without UX. We see literature like this as a sign that HCI is at this crossroads between UX and AI from the viewpoint of designing for engaging interactions versus designing for automation. Further, we suggest that this sparks questions about how automation can be integrated into interaction design, and how the relations between interaction and automation can be explored to understand and explore the design space of interaction and automation.

In relation to this, it has been proposed that one can think about this design challenge from the viewpoint of an “automation space” [2]. The notion of an automation space suggests that it would be possible to explore what aspects of an interaction design should or could be automated and what should be or must be manual. However, it is not apparent how this can be done since the automatization of an act may reveal unseen aspects of the activity that would change how it can be automated. Don Norman [21], in his book “The design of future things,” describes how automation foregrounds issues of user experience. He uses the classic example of “driving” an autonomous car and how this affects the user experience. Interestingly, there is a connection here to the automation of interaction: the efforts taken to make the ride smoother, by automating the activity of steering the car through the use of embedded computers and sensors, hide the technology while foregrounding aspects essential for comfortable user experiences, such as trust and feeling of control. Hancock et al. [10] add to this discussion of how automation and manual control are interrelated and highlight that “a long-held conventional wisdom is that a greater degree of automation in human-in-the-loop systems produces both costs and benefits to performance. The major benefit of automation is performance in routine circumstances. Costs result when automation (or the systems or sensors controlled by automation) “fail” and the human must intervene” [10, p. 10]. In addition to this discussion on the balance between automation and interaction, Sheridan and Parasuraman [24] pinpoint that “automation does not mean humans are replaced; quite the opposite. Increasingly, humans are asked to interact with automation in complex and typically large-scale systems, including aircraft and air traffic control, nuclear power, manufacturing plants, military systems, homes, and hospitals.” [24, p. 89]. In relation to this, Parasuraman et al. [26] define automation in terms of how “Machines, especially computers, are now capable of carrying out many functions that at one time could only be performed by humans. Machine execution of such functions—or automation—has also been extended to functions that humans do not wish to perform, or cannot perform as accurately or reliably as machines” [26, p. 286] and in doing so they propose a model for types and levels of human interaction with automation.

As computing is increasingly designed along an aesthetics of disappearance [31], rendered invisible [20], and fundamentally entangled with our everyday lives [7, 32], it becomes less clear what is suitable for automation and what requires true user control. In fact, this seems to be almost an interaction design paradox, and it has accordingly received some attention from HCI and interaction design researchers who have tried to resolve this tension between automation and user experience. For instance, Xu [30] has addressed disappearing computing from the viewpoint of “implicit interaction.” The basic idea is that human activities can trigger actions taken by a computer, although it happens in the background of the user’s attention. For instance, an activity bracelet counts the steps its user takes in the background of their attention, and there is no need for explicit interaction with the bracelet to “add” each step. As formulated by Mary Douglas [5], this can be seen as a process of “backgrounding,” where information (and computing) is “pushed out of sight” [5, p. 3]. When information and computing go through this process of “backgrounding,” rendered invisible to the user, and when aspects of the human–machine interaction are automated, there is a need to foreground the human in these processes, that is, to understand the interplay between such automation of interaction and the user experience. Again, some attempts have been made to understand the details of this interplay. For instance, Saffer [22] has proposed to do detailed studies of “micro-interactions” to see the details of the interplay between humans and computers. We believe this to be a helpful approach, and we have, in our analysis, paid similar attention to how the human–computer interaction unfolds through a close-up examination of four cases where part of the interaction is automated.

From this review, we notice that most of this research focuses on the “balance” between interaction and automation. The field does not lack proposals on how that can be addressed—ranging from the acknowledgment of this balance via the development of models of an “automation space” to the identification of related issues, such as trust and feeling of control, at this crossroads between interaction and automation of interaction. However, we argue that for such an approach to be successful, we need a deeper understanding of what interaction is. We argue that we need a framework that allows us to focus on and examine the details of how the interaction unfolds (what is happening in the foreground) and that allows us to see aspects of automation (what is happening in the background), while at the same time relate to the user experience.

3 What is “interaction” and “automation of interaction”?

To be able to more closely analyze examples of interaction and automation, we need a vocabulary suitable for our purpose. We have chosen a model presented in Janlert and Stolterman [13]. This model of interaction provides us with terms suitable for our analysis. According to Janlert and Stolterman [13], an interaction can be defined as a user’s action understood as an operation by a user, and the responding “move” from the artifact. See Fig. 1.

Fig. 1 A basic model of interaction. From [13]

A brief explanation of the model and its core concepts might be needed. First, the “states,” as explained in [13], fall into three classes: internal states, or i-states for short, are the functionally critical interior states of the artifact or system. External states, or e-states for short, are the operationally or functionally relevant, user-observable states of the interface, the exterior of the artifact or system. Further, world states, or w-states for short, are states in the world outside the artifact or system causally connected with its functioning [13, p. 66].

The model also details the activity on both the artifact and user sides. For instance, states change as a result of an operation triggered by a user action or by the move (action) by the artifact. These moves appear as a cue for the user. These cues come to the user either as e-state changes or w-state changes.
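To make this vocabulary concrete, the cycle of action, operation, move, and cue can be sketched in a few lines of code. This is only an illustrative sketch and not part of the model in [13]: the lamp artifact, the `press_switch` action, and the names `Artifact`, `World`, and `interact` are our own assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class Artifact:
    """Hypothetical artifact holding internal (i) and external (e) states."""
    i_state: dict = field(default_factory=dict)  # interior, not user-observable
    e_state: dict = field(default_factory=dict)  # user-observable interface states


@dataclass
class World:
    """States outside the artifact that are causally connected to it (w-states)."""
    w_state: dict = field(default_factory=dict)


def interact(artifact: Artifact, world: World, action: str) -> dict:
    """Run one interaction cycle and return the cues the user can perceive.

    The user action is treated as an operation on the artifact. The artifact
    answers with a move, which reaches the user as e-state or w-state changes.
    """
    e_before = dict(artifact.e_state)
    w_before = dict(world.w_state)

    # Toy lamp (an assumption for illustration): the switch toggles the light.
    if action == "press_switch":
        is_on = not artifact.i_state.get("on", False)
        artifact.i_state["on"] = is_on                              # i-state change
        artifact.e_state["indicator"] = "lit" if is_on else "dark"  # e-state change
        world.w_state["room"] = "bright" if is_on else "dim"        # w-state change

    # Cues are the state changes that become visible to the user.
    return {
        "e": {k: v for k, v in artifact.e_state.items() if e_before.get(k) != v},
        "w": {k: v for k, v in world.w_state.items() if w_before.get(k) != v},
    }
```

In this sketch, an action that the artifact does not recognize produces no move and therefore no cues, while pressing the switch produces one e-state cue and one w-state cue.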

The model is meant to be a tool for analyzing any form of human-artifact interaction. It serves our purpose well to investigate the relationship between automation and interaction.

Based on the model, we can now define any form of “automation of interaction” as removing a pair of actions and moves from an interaction while leading to the same or similar outcome. We first focus on the extreme forms of the relationship between interaction and automation, that is, when there is no automation (full interaction) and when there is no interaction (full automation).

The extreme form of no automation of interaction means that the artifact does not perform any operations and moves other than those triggered explicitly by an action of the user. This means that the user has complete control of all activities and outcomes, which requires intimate knowledge and skill. It also means that the user needs to understand the artifact and the relationship between user actions and artifact moves.

The extreme form of full automation of interaction means that the artifact performs all its operations and moves without being triggered by any actions from the user. Instead, the artifact moves are based on its i-states or changes in the w-states. This means that the user has no control over activities and outcomes. It also means that the user does not need any particular knowledge or skills since the artifact performs all actions.
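The two extremes can be illustrated with a small sketch that labels each artifact move by what triggered it. Note that the numeric "share" below is our own simplifying assumption for illustration; the model in [13] does not define such a measure, and the step names for the two coffee processes are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Move:
    """An artifact move together with what triggered it."""
    name: str
    trigger: str  # "action" (explicit user action), "i-state", or "w-state"


def automation_share(moves: list) -> float:
    """Fraction of moves NOT triggered by an explicit user action.

    0.0 corresponds to no automation (full interaction) and
    1.0 corresponds to full automation (no interaction).
    """
    if not moves:
        raise ValueError("at least one move is required")
    automated = sum(1 for move in moves if move.trigger != "action")
    return automated / len(moves)


# Hypothetical breakdowns of two brewing processes (assumed for illustration).
manual_brewing = [
    Move("grind beans", "action"),
    Move("heat water", "action"),
    Move("pour water", "action"),
]
pad_machine = [
    Move("start brewing", "action"),
    Move("heat water", "i-state"),
    Move("pump water", "i-state"),
    Move("stop brewing", "i-state"),
]
```

Under these assumptions, `automation_share(manual_brewing)` is 0.0 and `automation_share(pad_machine)` is 0.75; removing the remaining user-triggered move from the pad machine would bring it to the fully automated extreme.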

We can now see that “automation of interaction” through AI means that we substitute man–machine interaction with AI support that can automate complex relationships between actions, operations, moves, and/or cues as the basic model of interaction shows (Fig. 1). Typically, this can be implemented by designing a system that monitors user behaviors and expressions to determine what is expected from the system. This also means that instead of reacting to precise user actions, the system moves based on interpretations of previous interaction(s) and estimations of the most effective moves to take (given the probability model that governs the system).
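As a minimal sketch of what it can mean for a system to move on estimations rather than on precise user actions, consider a system that estimates the most probable next move from the relative frequency of previous interactions. The frequency-based estimate, the 0.6 threshold, and the fallback `ask_user` move are assumptions made purely for illustration; real AI-based systems use far richer probability models.

```python
from collections import Counter


def estimate_move(history: list, threshold: float = 0.6) -> tuple:
    """Pick a move from previous interactions instead of a precise user action.

    Returns (move, estimated_probability). If no past action is frequent
    enough, the system falls back to explicit interaction ("ask_user").
    """
    if not history:
        return ("ask_user", 0.0)
    most_common_action, count = Counter(history).most_common(1)[0]
    probability = count / len(history)
    if probability >= threshold:
        return (most_common_action, probability)  # automated move
    return ("ask_user", probability)              # hand control back to the user


# A hypothetical history of previous coffee orders.
print(estimate_move(["espresso", "espresso", "latte", "espresso", "espresso"]))
```

The sketch also shows the trade-off discussed in this section: when the estimate is confident, the user is spared an interaction but gives up precise control over the outcome.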

In many cases, the reduction of interaction will, for the user, lead to a loss of control and precision, but maybe with a gain in functionality, performance, and of course, a lesser need to focus on interaction. This model is descriptive and has no intention of being prescriptive or hinting at what might be good or bad interactions. We are using it as a tool in our investigations of some examples. More details and nuances of the model are found in [13].

4 Four examples—interaction and automation in practice

In the following four examples, we will use the model of interaction presented above (Fig. 1) to examine how interaction and “automation of interaction” come together in practice. The four examples are coffee-making, self-tracking, automated driving, and interaction with ChatGPT. We have selected these four as they both serve as well-known examples of automation of interaction and have been used in related published research on automation of interaction. In fact, the first three examples can be found in a special issue on everyday automation experience [5], where Klapperich et al. [16] introduced the example of coffee making, Hancı et al. [8] focused on self-tracking, and Lindgren et al. [19] focused on everyday autonomous driving. For the fourth example, we included interaction with AI (conversations with ChatGPT), as it is right now one of the fastest-growing AI-based chatbots, both in terms of the data set it uses and the number of users worldwide (about 100 million active users in January 2023).

For each example, we first present it as a case, followed by a description of how each case includes aspects of interaction and automation. We then describe the case in relation to the basic model of interaction before concluding each case with a set of takeaways related to the basic model of interaction. Finally, we summarize what these four cases suggest before moving forward.

4.1 Example 1—coffee making

Klapperich et al. [16] describe how automation is finding its way into many parts of everyday life. They suggest that the reason is the increasing opportunities for end users to offload decisions to their home appliances, hand over control to their cars, step into automated trains, or go shopping at self-checkout stores. In their study, they argue that while automation has many advantages, for example, in terms of time saved, better and more predictable results, etc., it also has apparent negative side effects, including alienation, deskilling, and overreliance (also highlighted by, e.g., [25]).

To explore automation in relation to everyday manual processes, Klapperich et al. [16] conducted an empirical study of coffee making. In this study, they demonstrated that a manual process has experiential benefits over more automated processes. They investigated the differences between the experiences emerging when using an “automated” coffee brewing process (a Senseo pad machine) and more manual coffee brewing (with a manual grinder in combination with an Italian coffee maker), which required more user input and provided more direct contact with coffee beans, water, and heat. The results showed that brewing coffee manually was rated as more positive and fulfilling than brewing in an automated way. Positive moments in the manual brewing process were mainly related to the process itself (e.g., grinding), while in the automated brewing, the outcome, that is, drinking the coffee itself, was the most positive. Further, their study shows that in the automated condition, 7 out of 20 participants complained about the waiting time. According to [16], this was somewhat surprising because the preparation time in the more automated process was only about a third of the time used in the more manual condition.

An important observation they made during this study was something they called “the dilemma of everyday automation” which is about how automation, on the one hand, is efficient and convenient, but on the other hand, meaningful moments derived from coffee-making are lost. Klapperich et al. [16] describe how this happens since automation renders activities experientially “flat” in terms of need fulfillment and affect (positive as well as negative). Further, they suggest that with more manual brewing, participants enjoyed the smell and feel and the sensory stimulation, especially while grinding.

Based on this study, they suggest that blended forms of interaction may be better than either full automation or completely manual interaction if the purpose is to design meaningful experiences. Given this preferred blended mode, they conclude their paper by suggesting that a possible solution (in-between full automation and completely manual interaction) is to reconcile automation with experience, that is, to identify ways to preserve certain advantages of automation while providing a richer experience. They suggest that this can be done through what they call “automation from below.” In contrast to conventional automation, where an entire activity is automated in a top-down manner, this kind of interaction design starts from a manual interaction (to preserve meaning), which is then supported by automation step by step.

Let us consider this example through the theoretical lens of the basic model of interaction in Fig. 1. We can see how coffee-making from the viewpoint of interaction is about user interaction with the artifact (the pad machine, the coffee beans, and the water). In this process, the user takes several actions which serve as operations to the artifact/materials at hand (grinding, adding coffee beans and water, heating water, monitoring the process, etc.). In return, the artifact makes several moves through the process of brewing. The user monitors these moves as cues and uses these as a guide to know what to do next in this process. In terms of w-states, there are external factors influencing this interactive process. For instance, if it is early in the morning, and the user is in a hurry, it might be quite an instrumental process where it is just about getting the coffee brewing process done. In contrast, if it is in the evening, it might be a slower and more sensory-guided process (smelling the beans, etc.). As this analysis illustrates, depending on a particular w-state, the process can be more or less instrumental and efficient—ranging from a preferred mode of full automation (where the coffee making happens in the background and in parallel to other activities the user might be occupied with while getting ready in the morning) to a very deliberate manual process where the user devotes their attention and actions taken completely to the interaction with the coffee machine. In this case, there is no given or correct “balance” point between automation and interaction.

4.2 Example 2—self-tracking

Hancı et al. [8] focus on another classic example of automation, namely self-tracking. As described by the authors, self-tracking technologies have become an integral part of everyday automation experiences (for instance, activity bracelets). Further, they suggest that similar to other smart technologies, from voice-activated speakers to smart light bulbs, it is a form of automation that affects our everyday lives and enhances our capabilities.

While the first example with coffee-making seemed to be about finding a good balance between automation and manual interaction, this example is more about how automation directly affects us and how we see ourselves. As formulated by Hancı et al. [8] “the use of self-tracking, by virtue of the automation experience it offers, transforms how we make sense of ourselves.”

Their study focused on how a user perceives and is affected by a technology that, to a large extent, works autonomously through the monitoring of the user’s activities. The authors introduce the concept of “commitment to self-tracking” to capture how this is also a matter of subscribing to the idea of a technology designed for self-tracking. An important conclusion is how their study “facilitates the further investigation of the relationship between commitment to self-tracking and everyday automation experiences both theoretically and empirically.”

If we return to Fig. 1, this example shows how increasing automation of self-tracking leads to new expectations of experiences. When the user is given reports on their own physical status, new expectations evolve. More tracking might be seen as desirable and needed. In short, this cycle of interaction sparks additional cycles of interactions (i.e., an e-state might trigger additional interactions). It can also be the case that the user conducts other activities that change a w-state (for instance, the user starts to walk and thus takes steps and changes their location). These changes in w-state are recognized by the user’s activity bracelet (artifact), which again triggers another round of interaction.

In terms of balance between full automation and full interaction, we can see how artifacts for self-tracking, such as activity bracelets, work along a particular schema: the artifact is automated to the extent that it automatically monitors the user’s actions and whereabouts. At the same time, it actively seeks to trigger user actions by alerting the user about new e-states (e.g., sending out notifications of step goals, daily steps taken, medals reached, etc.). The interaction design is deliberately configured to trigger the user to trigger the artifact to produce new e-states. This “commitment to self-tracking” is a cycle where the interaction design is optimized in relation to how interaction can trigger more interaction.
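The cycle described here, where w-state changes are sensed without any explicit user action and new e-states are then pushed to the user, can be sketched as follows. The `Bracelet` class, its step goal, and the two notification texts are hypothetical and only serve to illustrate the mechanism; they do not describe any particular product or the system studied in [8].

```python
from dataclasses import dataclass, field


@dataclass
class Bracelet:
    """Hypothetical activity bracelet used to illustrate the self-tracking cycle."""
    step_goal: int = 10_000                            # assumed daily goal
    steps: int = 0                                     # i-state: running step count
    notifications: list = field(default_factory=list)  # e-state changes pushed to the user

    def sense(self, new_steps: int) -> None:
        """React to a w-state change (the user walked) without any explicit action."""
        before = self.steps
        self.steps += new_steps
        # Crossing a milestone produces a new e-state meant to trigger the user.
        for share, label in ((0.5, "halfway to goal"), (1.0, "goal reached")):
            mark = share * self.step_goal
            if before < mark <= self.steps:
                self.notifications.append(label)
```

In this sketch the counting itself is fully automated, while each notification is an e-state change designed to prompt further user activity, which in turn changes the w-state and starts the next cycle.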

4.3 Example 3—automated driving

The third example we rely on here, automated driving (AD), is already, despite being fairly new, a classic example in the automation of interaction literature.

Lindgren et al. [19] explored the anticipatory aspect of the automation experience in a study where five families used research cars with evolving automated driving functions in their everyday lives. Here, the focus was on the development of automated driving technologies, referred to as advanced driver assistance systems (ADAS). Their study was motivated by a growing body of human–computer interaction (HCI) research that has turned its attention to user experience (UX) to identify the factors which enable people to accept and adopt such technologies.

In their empirical study, they used an ethnographic research approach to achieve an in-depth understanding of how participants’ everyday lives with ADAS unfolded in natural settings, focusing on anticipatory UX. They also focused on predefined AD-prepared routes around a city (including partly hands-off driving, eyes on the road with driver monitoring reminder, speed/curve limit adaptation, lane change, and a countdown to handover between car and driver), and they studied people’s gradual adoption of these automated cars.

As a result of this 1.5-year-long ethnographic study, they could show that anticipatory UX occurs within specific socio-technical and environmental circumstances, and they concluded that this would not be easy to derive in experimental settings. This form of studying anticipation offers new insights into how people adopt automated driving in their everyday commuting routines.

This example illustrates how an automated car allows for activities other than steering the car while driving. If we return to Fig. 1, the autonomous vehicle (the “artifact” in Fig. 1) controls the driving of the vehicle by reading different w-states (e.g., lane position via the lane assistant, and the location of other cars and pedestrians). The reading of these changing w-states affects the i-state of the autonomous vehicle (e.g., it continuously updates its whereabouts based on GPS and sensor data), and it makes its next move based on this sensor data (e.g., turning left/right, accelerating, hitting the brakes). The passengers (users) in this car can sit back, relax, and monitor the moves taken but do not need to take any actions. The user can feel relaxed while the car is autonomously and safely moving forward. The notion of “driving” changes as the vehicle increases its ability to move without instructions from a user. At some point, the user is no longer a driver. Instead, all persons in the vehicle are passengers. This also means that the user’s expectations of the experience, the anticipatory experiences, change from being related to the activity of driving to the activity of being transported.

4.4 Example 4—interaction with AI—conversations with ChatGPT

Our fourth example has to do with interaction with the ChatGPT chatbot. In short, ChatGPT by openai.com is an AI-based chatbot that relies on a language model created by OpenAI. ChatGPT uses deep learning techniques to generate human-like responses to text-based conversations. ChatGPT is trained on a large dataset of text from the Internet, which enables it to write responses in a conversation style on a wide range of topics. It can answer questions and build on previous prompts and answers to engage in conversations with its user, and it can provide information through prompt-based conversations with its users. ChatGPT is also designed to continuously learn and improve over time, based on new data, as well as based on the interactions it has with users, which means that the automated responses generated by ChatGPT will become more accurate the more users interact with it.

In relation to Fig. 1, the basic model of interaction, we can say that the more a user interacts with ChatGPT (that is, the higher the number of moves made by the user in the form of written prompts), the more input ChatGPT, as the artifact in focus here, receives in the form of operations. Each operation triggers the language model to generate new replies (changes in i-state, and output from the artifact, i.e., new moves), as part of the conversation. Accordingly, this fourth example illustrates both a very active user, who actively provides ChatGPT with new written prompts as part of the conversation, and a highly automated system, where each new prompt triggers ChatGPT to generate a new reply as part of the conversation.
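
The turn-taking structure described here can be sketched in a few lines. The sketch below does not call ChatGPT or any OpenAI API; `echo_model` is a hypothetical stand-in for a language model, and `converse` is an illustrative name. It only shows how each user move (a prompt) becomes an operation that changes the i-state (the conversation history) and yields one artifact move (a reply).

```python
from typing import Callable, List, Tuple


def echo_model(history: List[str], prompt: str) -> str:
    """Stand-in for a language model (hypothetical; not the ChatGPT API)."""
    turn = len(history) // 2 + 1
    return f"Reply {turn} to: {prompt}"


def converse(
    prompts: List[str],
    model: Callable[[List[str], str], str] = echo_model,
) -> Tuple[List[str], int, int]:
    """Run a turn-taking conversation and count moves on both sides."""
    history: List[str] = []  # i-state: the conversation so far
    user_moves = 0
    artifact_moves = 0
    for prompt in prompts:
        user_moves += 1                   # user move: a written prompt
        reply = model(history, prompt)    # operation: triggers automated generation
        history.extend([prompt, reply])   # i-state changes with every turn
        artifact_moves += 1               # artifact move: the generated reply
    return history, user_moves, artifact_moves
```

Under these assumptions the number of artifact moves always equals the number of user moves, which is one way of reading the claim that a high level of interaction and a high level of automation coexist in this example.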

To summarize, in the first example, there is a high degree of manual interaction. In the second example, the balance between UX and AI leans towards a high degree of automation. In the third example, automated driving, very little interaction is needed (at least in the near future of autonomous vehicles), whereas the fourth example, interaction with ChatGPT, is built around a turn-taking model in which a human interacts with a fully automated system.

These four examples illustrate how interaction and automation of interaction are combined in different designs in ways that are obvious but also rich and complex. A similar observation was made by Parasuraman et al. [26]: “This implies that automation is not all or none, but can vary across a continuum of levels, from the lowest level of fully manual performance to the highest level of full automation.”

The examples show that different balances of automation and interaction affect the user experience differently. The user experience is not a function of a particular combination of automation and interaction. No given combination always leads to engaging interactions. The examples also illustrate that while user experience is affected by the combination of automation and interaction, it is also a consequence of the context’s full complexity.

This leads us to conclude that these four examples operate along a scale in terms of how aspects of user interaction and automation of interaction are integrated into the interaction design. Based on this analysis of these four examples, we will, in the next section, propose a two-dimensional design space as a conceptual construct and tool for analyzing and guiding interaction design that seeks to arrive at engaging interaction by integrating aspects of user interaction and automation.

5 The relationship between interaction and automation

In the previous section, we could see how the basic model of interaction allowed for an analysis of the four examples regarding how aspects of interaction and automation are combined in quite different ways in each design and how these examples illustrate different balances between interaction and automation. Each example is “leaning” either towards design for less interaction or design for more interaction, except for the fourth example, where a high level of human interaction is combined with a highly automated system.

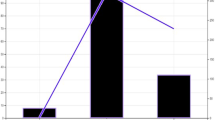

As our point of departure, we can now use two scales to examine the possible relationships between interaction and automation and accordingly use these two scales to form a two-dimensional design space to examine different combinations of interaction and automation. The two scales go from a low degree of automation to full automation and from a low degree of interaction to a high degree of interaction (Fig. 2).

Based on our four examples and these two scales, it is possible to make some observations about the relationship between automation and interaction. First of all, this illustrates a wide design space where different designs can rely on a low or high degree of interaction, or rely on a high or low degree of automation. In Fig. 2, this is indicated by mapping the four examples onto the design space. Further on, this two-dimensional framework brings automation and interaction together into a single space onto which different examples of interaction and automation can be mapped. Any design relies on a particular level of interaction, in relation to a particular level of automation (see examples 1–4 as illustrations of this in Fig. 2). This means that automation and interaction should be understood as complementary instead of conflicting.

As our four examples show, the notion of a “right balance” is not about the midpoint on these two scales or at any other particular point. What would constitute an appropriate balance between automation and interaction can be anywhere on these two scales. It all depends on the purpose and outcome of a particular interaction design. Whether a certain combination is “good” or not can only be determined in relation to the purpose of the interaction and how users experience it, and the value and quality of its outcome. Full automation can lead to engaged and positive user experiences, while, in other cases, full automation can lead to negative experiences. Even in the same case, different users may have positive or negative experiences, not based on the level of automation or interaction, but based on a myriad of contextual variables (personality, culture, skill, values, etc.).

For instance, the first example (Ex. 1, Fig. 2), coffee making, illustrates a quite high degree of manual interaction and a quite low degree of automation. For baristas, or people very interested in coffee, this configuration of the balance between interaction and automation allows for engaged interaction where very little is done automatically. On the other hand, Ex. 2, self-tracking, illustrates how users can focus on their primary activity (e.g., going for a run with an activity bracelet) and let the bracelet automatically collect and process data about the run. This illustrates a minor degree of direct interaction with the activity bracelet and a higher degree of automation, where the activity bracelet automatically counts every step taken and records the pulse and the duration of the activity. Further on, Ex. 3, self-driving, illustrates a move across this design space. While driving a traditional car was a very hands-on activity that demanded a high level of interaction, attention, and commitment from the driver (Ex. 3, transparent circle, Fig. 2), the change towards self-driving cars illustrates a shift towards a high level of automation and a low level of interaction required of the passenger. Finally, Ex. 4, conversations with ChatGPT as an instance of interaction with AI, illustrates a high level of interaction with a highly automated chatbot.
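
As a rough aid for analysis, the mapping of the examples onto the design space can be written down as a small data structure. This is a sketch under strong assumptions: the design space in Fig. 2 is continuous, whereas the code below reduces each scale to the two coarse labels "low" and "high", following the verbal descriptions above. The names `DesignPoint`, `EXAMPLES`, and `group_by_quadrant` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass

LEVELS = ("low", "high")  # coarse labels; an assumption, Fig. 2 is continuous


@dataclass(frozen=True)
class DesignPoint:
    """One design placed in the interaction/automation design space."""
    name: str
    interaction: str
    automation: str

    def __post_init__(self):
        # Reject labels outside the coarse scale used in this sketch.
        for level in (self.interaction, self.automation):
            if level not in LEVELS:
                raise ValueError(f"unknown level: {level!r}")

    def quadrant(self) -> str:
        return f"{self.interaction} interaction / {self.automation} automation"


# Positions follow the verbal descriptions of the four examples.
EXAMPLES = [
    DesignPoint("Ex. 1 coffee making", interaction="high", automation="low"),
    DesignPoint("Ex. 2 self-tracking", interaction="low", automation="high"),
    DesignPoint("Ex. 3 traditional driving", interaction="high", automation="low"),
    DesignPoint("Ex. 3 automated driving", interaction="low", automation="high"),
    DesignPoint("Ex. 4 ChatGPT", interaction="high", automation="high"),
]


def group_by_quadrant(points):
    """Group design names by the quadrant of the design space they occupy."""
    groups = {}
    for point in points:
        groups.setdefault(point.quadrant(), []).append(point.name)
    return groups
```

Grouping the examples this way shows that none of them falls in the low interaction, low automation quadrant, and that Ex. 3 occupies two positions because it describes a move across the space rather than a single point.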

6 Discussion

Our analysis of the relationships between interaction and automation has convinced us of the need for more detailed examinations of how interactivity is configured in different interactive systems. Such analysis would help the field become better at “reading” or analyzing different designs and seeing how different interactive systems operate in different ways with their users. There is a strong need for more theoretical models of interaction and automation and a deeper understanding of interactivity. Our proposed two-dimensional design space construct is simple and only addresses the two dimensions of interaction and automation, but it shows that there are opportunities to expand it and to make it richer and more detailed. There is a need for models for detailed analysis of increasingly complex interactive artifacts with evolving intelligence and automation. The interactive landscape emerging today with new automated tools and artifacts (coffee makers, vehicles, homes, etc.) invading our lives is creating a highly complex reality, and this new reality needs to be examined along different scales of automation and forms of implicit interaction. We suggest that our two-dimensional construct can be further developed, for instance, by considering different taxonomies that have been developed over the years (see, e.g., [28]).

Based on our analysis, we argue that HCI researchers need tools suitable for investigating this new reality. We recognize that there is a large amount of research aiming at understanding the consequences of this evolution from ethical, cultural, societal, and other perspectives, as well as frameworks developed that speak to how automation of interaction foregrounds aspects of user experience. But as a complement to capturing these consequences, there is a need to investigate the foundational changes in the technology and the designed artifacts that cause these consequences; in this case, how we can describe and examine the relations between interaction and automation. To understand these foundational changes, they need to be analyzed at a detailed level, and for them to be compared and contrasted, there need to be theoretical frameworks and models that can support such work.

Our work also illustrates how AI is not coming along as a “disruptive technology” that might challenge the whole field of interaction design to move away from interaction in favor of automation. On the contrary, our analysis shows that AI is not bringing anything fundamentally new to the field. Automation of interaction has been ongoing since the very first days of computers. Interaction has moved from the initial highly manually demanding procedures to control a computer to where we are today, in most cases without any influence of AI. The addition of AI technology today is, however, changing the landscape since new “moves” of an artifact (Fig. 1) are now possible, and more complicated interactions can be automated.

This also tells us that the field of HCI and interaction design is not facing a new problem or situation. Instead, it is a continuation of a development that has been going on for a long time. What we are seeing is an ongoing shift from the left to the right in Fig. 2. But also, as the coffee machine example shows, it is not the case that all interactions improve by moving from left to right or from right to left. It is all about expectations of experiences and how users value experiences.

We suggest that an intimate understanding of the relationship between interaction and automation is a valuable tool for anyone who needs to examine existing interaction designs in terms of how the interactive system’s basic model of interaction is geared towards no automation or full automation. The model can also help when imagining future designs, on the drawing board, before any form of implementation. Here, questions can be asked about how active the user should be and how intrusive the system should be (e.g., monitoring the user, collecting data, sending notifications).

7 Conclusions

In this article, we have examined how interaction design/HCI seems to be at a crossroads. On the one hand, it is still about designing for “ease of use,” control and precision, and engaging user experiences (UX). But, on the other hand, it seems to be increasingly about reducing interaction and automating human–machine interaction through new advanced technologies. We have described these two strands and discussed these two rationalities for interaction design in relation to a basic interaction model (Fig. 1). With this model as our unifying conceptual construct, we have examined four examples of how elements of interaction and automation are combined in practice. We have developed and proposed a two-dimensional design space construct that allows further analysis of the relations between interaction and automation and that demonstrates how automation and interaction operate, not at a crossroads and not in conflict but as complementary dimensions of interactive systems design. We conclude the article by discussing how a deeper understanding of this relationship can be used and its theoretical implications. We suggest an integrative understanding of automation and interaction opens up a new chapter of interaction design and HCI research. It is already changing interaction design practice. We suggest that more studies are essential to analyze further how these two strands might scaffold each other in interaction design and support meaningful interaction.

Data availability

Our manuscript has no associated data.

References

Acemoglu D, Restrepo P (2018) Artificial intelligence, automation, and work. In: The economics of artificial intelligence: An agenda. University of Chicago Press, pp 197–236

Bongard J, Baldauf M, Fröhlich P (2020, November) Grasping everyday automation–a design space for ubiquitous automated systems. In: Proceedings of the 19th International Conference on Mobile and Ubiquitous Multimedia (pp 332–334)

Dignum V (2017) Responsible artificial intelligence: designing AI for human values. ITU J ICT Discov 1:1–8

Doherty K, Doherty G (2018) Engagement in HCI: conception, theory and measurement. ACM Comput Surv (CSUR) 51(5):1–39. https://doi.org/10.1145/3234149

Douglas M (1975) Implicit meanings. Routledge, New York, USA

Fröhlich P, Baldauf M, Meneweger T et al (2020) Everyday automation experience: a research agenda. Pers Ubiquit Comput 24:725–734. https://doi.org/10.1007/s00779-020-01450-y

Ghajargar M, Bardzell J, Renner AS, Krogh PG, Höök K, Cuartielles D, ... Wiberg M (2021, February) From “explainable AI” to “graspable AI”. In: Proceedings of the Fifteenth International Conference on Tangible, Embedded, and Embodied Interaction (pp 1–4). https://doi.org/10.1145/3430524.3442704

Hancı E, Lacroix J, Ruijten PAM et al (2020) Measuring commitment to self-tracking: development of the C2ST scale. Pers Ubiquit Comput 24:735–746. https://doi.org/10.1007/s00779-020-01453-9

Hancock PA, Jagacinski RJ, Parasuraman R, Wickens CD, Wilson GF, Kaber DB (2013) Human-automation interaction research: past, present, and future. Ergon Des 21(2):9–14

Hassenzahl M (2008, September) User experience (UX) towards an experiential perspective on product quality. In: Proceedings of the 20th Conference on l’Interaction Homme-Machine (pp 11–15). https://doi.org/10.1145/1512714.1512717

Hassenzahl M, Borchers J, Boll S, Pütten ARVD, Wulf V (2020) Otherware: How to best interact with autonomous systems. Interactions 28(1):54–57. https://doi.org/10.1145/3436942

Holstein T, Dodig-Crnkovic G, Pelliccione P (2021) Steps toward real-world ethics for self-driving cars: Beyond the trolley problem. In: Machine Law, Ethics, and Morality in the Age of Artificial Intelligence. IGI Global, pp 85–107

Janlert L-E, Stolterman E (2017) Things that keep us busy-the elements of interaction. The MIT Press, Cambridge, MA

Janssen CP, Donker SF, Brumby DP, Kun AL (2019) History and future of human-automation interaction. Int J Hum Comput Stud 131:99–107

Ju W (2015) The design of implicit interactions. Synthesis lectures on human-centered informatics. Morgan & Claypool Publishers, USA

Klapperich H, Uhde A, Hassenzahl M (2020) Designing everyday automation with well-being in mind. Pers Ubiquit Comput 24:763–779. https://doi.org/10.1007/s00779-020-01452-w

Lew G, Schumacher RM (2020) AI and UX – why artificial intelligence needs user experience. Apress, USA

Floridi L (2019) Establishing the rules for building trustworthy AI. Nat Mach Intell 1(6):261–262

Lindgren T, Fors V, Pink S et al (2020) Anticipatory experience in everyday autonomous driving. Pers Ubiquit Comput 24:747–762. https://doi.org/10.1007/s00779-020-01410-6

Norman D (1998) The invisible computer. MIT Press

Norman D (2007) The design of future things. Basic Books, USA

Saffer D (2014) Microinteractions – designing with details. O’Reilly Media, USA

Shekhar SS (2019) Artificial intelligence in automation. Artif Intell 3085(06):14–17

Sheridan TB, Parasuraman R (2005) Human-automation interaction. Rev Human Factors Ergon 1(1):89–129

Shneiderman B (2022) Human-centered AI. Oxford University Press

Parasuraman R, Sheridan TB, Wickens CD (2000) A model for types and levels of human interaction with automation. IEEE Trans Syst Man Cybern-Part A: Syst Hum 30(3):286–297

Turner P (2010, August) The anatomy of engagement. In: Proceedings of the 28th Annual European Conference on Cognitive Ergonomics (pp 59–66). https://doi.org/10.1145/1962300.1962315

Vagia M, Transeth AA, Fjerdingen SA (2016) A literature review on the levels of automation during the years. What are the different taxonomies that have been proposed? Appl Ergon 53(Part A):190–202. https://doi.org/10.1016/j.apergo.2015.09.013

Wing JM (2021) Trustworthy AI. Commun ACM 64(10):64–71

Xu W (2019) Toward human-centered AI: a perspective from human-computer interaction. Interactions 26(4):42–46

Wiberg M (2018) The materiality of interaction – notes on the materials of interaction design. MIT Press, Cambridge, MA

Wiberg M, Ishii H, Rosner D, Vallgårda A, Dourish P, Sundström P, Kerridge T, Rolston M (2012) Material interactions: from atoms and bits to entangled practices. In: CHI EA '12: CHI '12 Extended Abstracts on Human Factors in Computing Systems (pp 1147–1150)

Yarlagadda RT (2018) The RPA and AI automation. Int J Creat Res Thoughts (IJCRT), ISSN, pp 2320–2882

Funding

Open access funding provided by Umea University.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wiberg, M., Stolterman Bergqvist, E. Automation of interaction—interaction design at the crossroads of user experience (UX) and artificial intelligence (AI). Pers Ubiquit Comput 27, 2281–2290 (2023). https://doi.org/10.1007/s00779-023-01779-0

DOI: https://doi.org/10.1007/s00779-023-01779-0