Abstract

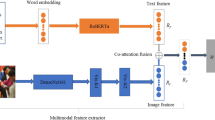

The growing popularity of social media platforms has made news articles easy to create, and fake news spread on these platforms has a disruptive impact on our lives. Given the prevalence of multimodal data in social networks, automatic detection of multimodal fake news plays an important role in preventing its negative effects. To the best of our knowledge, existing multimodal fake news detection methods still face two challenging roadblocks: the lack of reasoning about ambiguous clues across modalities, and insufficient fusion of multimodal information. To alleviate these problems, this paper presents an Enhanced Clue-Ambiguity Reasoning Network (ECARnet) for multimodal fake news detection. The proposed model first extracts fine-grained semantic features from salient image regions and textual words. It then uses a cross-modal weighted residual network to learn the similar clue features between the two modalities. Next, an efficient bidirectional clue-ambiguity reasoning module is constructed to explicitly mine the ambiguous clue features. Specifically, the forward reasoning branch employs a two-branch network with clue correlation analysis to maximally distinguish the cross-modal ambiguous clues, while the backward reasoning branch uses uncertainty learning to eliminate uncertain clue features. By jointly exploiting these components, ECARnet can adaptively learn cross-modal ambiguous clues that benefit various multimodal fake news detection tasks. Extensive experiments verify the superiority of the proposed framework and show that it performs competitively with state-of-the-art methods.
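A small sketch may help picture the cross-modal weighted residual step. The abstract does not give this layer's equations, so the following is an assumption and not ECARnet's actual formulation. In this sketch, each word feature attends over the image-region features using scaled dot-product attention. The attended image context is then added back to the word feature through a residual connection scaled by a weight `alpha`. The function name `cross_modal_weighted_residual`, the parameter `alpha`, and the toy vectors are all hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cross_modal_weighted_residual(
    words: List[Vector], regions: List[Vector], alpha: float = 0.5
) -> List[Vector]:
    """Hypothetical cross-modal block (not the paper's exact layer).

    For each word feature w_i:
        a_ij  = softmax_j(w_i . r_j / sqrt(d))
        c_i   = sum_j a_ij * r_j          # attended image context
        out_i = w_i + alpha * c_i         # weighted residual connection
    `alpha` is an assumed scalar weight; a real model would likely learn it.
    """
    if not words or not regions:
        raise ValueError("words and regions must be non-empty")
    d = len(words[0])
    scale = math.sqrt(d)
    fused = []
    for w in words:
        # Attention weights of this word over all image regions.
        weights = softmax([dot(w, r) / scale for r in regions])
        # Attention-weighted sum of region features.
        context = [sum(a * r[k] for a, r in zip(weights, regions)) for k in range(d)]
        # Keep the word feature and add the scaled image context.
        fused.append([wk + alpha * ck for wk, ck in zip(w, context)])
    return fused


if __name__ == "__main__":
    words = [[1.0, 0.0], [0.0, 1.0]]
    regions = [[1.0, 0.0], [0.0, 1.0]]
    print(cross_modal_weighted_residual(words, regions, alpha=0.5))
```

Setting `alpha = 0` turns the block into the identity on the text features, and larger values mix in more image context. A practical implementation would add learned query, key, and value projections and use batched tensor operations. Plain Python lists are used here only to keep the sketch dependency-free.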

Acknowledgements

This work was supported in part by the Open Project of Zhejiang Lab under Grant 2021KH0AB01, and in part by the Natural Science Foundation of Fujian Province under Grants 2020J01083 and 2020J01084.

Author information

Contributions

SZ and SP wrote the main manuscript text. XL designed the model. LZ prepared Figures 1–8, XX prepared Tables 1–3, and TL conducted the experiments. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Communicated by B. Bao.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhong, S., Peng, S., Liu, X. et al. ECARnet: enhanced clue-ambiguity reasoning network for multimodal fake news detection. Multimedia Systems 30, 55 (2024). https://doi.org/10.1007/s00530-023-01256-x

DOI: https://doi.org/10.1007/s00530-023-01256-x