Abstract

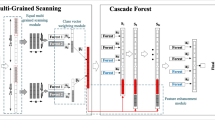

Key process parameters, such as product quality and environmental pollution indices, are difficult to measure online in complex industrial processes. Because of high time and economic costs, only limited small-sample data can be obtained to build process models, whereas deep neural network models require massive numbers of training samples. Although the deep forest algorithm is based on a non-neural-network structure, it has mainly been used to address classification problems. To address these problems, a new deep forest regression algorithm based on cross-layer full connection is proposed. First, the sub-forest prediction values of the input-layer forest module are processed to obtain the layer regression vector, which is combined with the raw feature vector to form the input of the middle-layer forest model. Then, a cross-layer full connection that links the regression vectors of the former layers produces an augmented layer regression vector. Meanwhile, the number of deep layers is adjusted adaptively according to the validation error. Finally, the output-layer forest model is trained on the augmented layer regression vector from the middle-layer forest model together with the raw feature vector. In this way, information sharing across layers ensures maximal information flow. Moreover, the proposed method has a simple criterion for setting its hyperparameters. Simulation results on benchmark and industrial data show that the proposed method performs as well as or better than several state-of-the-art methods.
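The pipeline described in the abstract can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation. It uses scikit-learn's `RandomForestRegressor` and, as a stand-in for a completely random forest, `ExtraTreesRegressor` with `max_features=1`. It assumes that the layer regression vector simply stacks each forest's prediction (computed out-of-fold with 3-fold cross-validation during training), that the validation error is the MSE of the layer's averaged prediction on a 25% hold-out split, and that layers stop being added once that error stops improving. The class name `CrossLayerDeepForestRegressor`, the helpers `make_forests` and `predict_layer`, and all hyperparameter values are hypothetical.

```python
import numpy as np
from sklearn.base import clone
from sklearn.ensemble import ExtraTreesRegressor, RandomForestRegressor
from sklearn.metrics import mean_squared_error
from sklearn.model_selection import cross_val_predict, train_test_split


def make_forests(seed):
    """Hypothetical forest module: one random forest plus an approximate
    completely random forest (ExtraTrees with one candidate feature per split)."""
    return [
        RandomForestRegressor(n_estimators=50, random_state=seed),
        ExtraTreesRegressor(n_estimators=50, max_features=1, random_state=seed),
    ]


def predict_layer(forests, X):
    # Layer regression vector at inference time: one column per fitted forest.
    return np.column_stack([f.predict(X) for f in forests])


class CrossLayerDeepForestRegressor:
    """Illustrative cascade with cross-layer full connection (assumed design)."""

    def __init__(self, max_layers=5, tol=1e-4, seed=0):
        self.max_layers = max_layers
        self.tol = tol
        self.seed = seed

    def _fit_layer(self, X, y):
        forests = make_forests(self.seed)
        # Out-of-fold predictions form the training-time layer regression vector,
        # so the next layer is not trained on overfit in-sample predictions.
        vec = np.column_stack(
            [cross_val_predict(clone(f), X, y, cv=3) for f in forests]
        )
        for f in forests:
            f.fit(X, y)
        return forests, vec

    def fit(self, X, y):
        X_tr, X_va, y_tr, y_va = train_test_split(
            X, y, test_size=0.25, random_state=self.seed
        )
        self.layers_ = []
        # Augmented layer regression vectors; empty for the input layer.
        aug_tr = np.empty((len(X_tr), 0))
        aug_va = np.empty((len(X_va), 0))
        best_err = np.inf
        for _ in range(self.max_layers):
            # Each layer sees the raw features plus ALL earlier layer vectors.
            forests, vec_tr = self._fit_layer(np.hstack([X_tr, aug_tr]), y_tr)
            vec_va = predict_layer(forests, np.hstack([X_va, aug_va]))
            err = mean_squared_error(y_va, vec_va.mean(axis=1))
            if err > best_err - self.tol:
                break  # adaptive depth: stop when validation error stops improving
            best_err = err
            self.layers_.append(forests)
            # Cross-layer full connection: append this layer's vector, keep the old ones.
            aug_tr = np.hstack([aug_tr, vec_tr])
            aug_va = np.hstack([aug_va, vec_va])
        # Output layer: raw features plus the final augmented regression vector.
        self.output_ = make_forests(self.seed + 1)
        for f in self.output_:
            f.fit(np.hstack([X_tr, aug_tr]), y_tr)
        return self

    def predict(self, X):
        aug = np.empty((len(X), 0))
        for forests in self.layers_:
            aug = np.hstack([aug, predict_layer(forests, np.hstack([X, aug]))])
        return predict_layer(self.output_, np.hstack([X, aug])).mean(axis=1)


# Toy usage on synthetic data (illustrative only, not the paper's datasets).
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, size=(200, 4))
y = np.sin(3 * X[:, 0]) + X[:, 1] ** 2 + 0.1 * rng.standard_normal(200)
model = CrossLayerDeepForestRegressor(max_layers=3).fit(X[:150], y[:150])
pred = model.predict(X[150:])
rmse = np.sqrt(mean_squared_error(y[150:], pred))
print("layers kept:", len(model.layers_), "test RMSE:", round(float(rmse), 3))
```

Because every accepted layer appends its vector to the augmented vector instead of replacing it, layer k receives the raw features together with the vectors of layers 1 to k-1. A plain cascade would pass on only the immediately preceding layer's vector.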

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62073006, 62021003), the Beijing Natural Science Foundation (4212032, 4192009), the National Key R&D Program of the Ministry of Science and Technology (2018YFC1900800-5), and the National & Beijing Key Laboratory of Process Automation in Mining & Metallurgy (BGRIMM-KZSKL-2020-02).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest. The article is considered for publication on the understanding that it has neither been published, nor will be published, anywhere else before its publication in Neural Computing and Applications.

About this article

Cite this article

Tang, J., Xia, H., Zhang, J. et al. Deep forest regression based on cross-layer full connection. Neural Comput & Applic 33, 9307–9328 (2021). https://doi.org/10.1007/s00521-021-05691-7

DOI: https://doi.org/10.1007/s00521-021-05691-7