Abstract

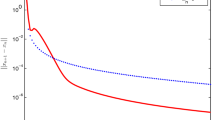

The main aim of this paper is to speed up the convergence of the inertial Mann iterative method, and to accelerate it further through the normal S-iterative method, for a certain class of nonexpansive-type operators linked with variational inequality problems. Our new convergence theory allows us to unify Korpelevich’s extragradient method, Tseng’s extragradient method, and the subgradient extragradient method for solving variational inequality problems through an auxiliary algorithmic operator associated with the seed operator. The paper establishes that the relaxed inertial normal S-iterative extragradient methods have a markedly stronger influence on convergence behaviour. Finally, numerical experiments illustrate that the relaxed inertial iterative methods, in particular the relaxed inertial normal S-iterative extragradient methods, may have a number of advantages over other methods in computing solutions to variational inequality problems in many cases.

Data Availability

Enquiries about data availability should be directed to the authors.

Funding

The authors have not disclosed any funding.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest regarding the publication of this paper.

Ethical approval

This article does not contain any studies with human participants or animals performed by the author.

Appendices

Appendix-I: Proof of Lemma 2.5

Proof

(a) Set \(K:=\varepsilon -\theta (1+\varepsilon +\max \{1,\varepsilon \})\). Define \(K_{n}=\theta _{n}(1+\theta _{n}+\varepsilon (1-\theta _{n}))\), \( n=1,2,\cdots \) and \(\phi _{n}=\Vert x_{n}-v\Vert ^{2}\), \(n=0,1,2,\cdots .\) From (11), we have

and hence

Since \(\theta _{n}\le \theta _{n+1}\) for all \(n\in \mathbb {N}\), it follows that

Note \(\theta _{n}\le \theta _{n+1}\le \theta \) for all \(n\in \mathbb {N}\) and \(K_{n}=\theta _{n}\left( 1+\theta _{n}+\varepsilon (1-\theta _{n})\right) \le \theta _{n}\left( 1+\max \{1,\varepsilon \}\right) \text { for all } n\in \mathbb {N}.\) Hence

Note \(K>0\) by the inequality (12). Hence

which implies that \(\varphi _{n+1}\le \varphi _{n} \) for all \(n\in \mathbb {N }.\) Thus, the sequence \(\{\varphi _{n}\}\) is non-increasing.

(b) For \(n\in \mathbb {N},\) we have

and

Since \(\varphi _{1}>0,\) from (52), we have

Combining (53) and (54), we have

From (51), we get

Taking the limit as \(n\rightarrow \infty ,\) we have \( K\sum _{i=1}^{\infty }\Vert x_{i+1}-x_{i}\Vert ^{2}\le \frac{ \varphi _{1}}{1-\theta }<\infty . \)

(c) From (50), we have

From Lemma 2.4, we obtain that \(\lim _{n\rightarrow \infty }\Vert x_{n}-v\Vert \) exists. \(\square \)
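
The constant \(K\) set in part (a) must be strictly positive for the argument to go through, which caps how large the inertial bound \(\theta\) may be for a given \(\varepsilon\). The following is a minimal sketch that evaluates \(K\) and the resulting cap; the function names `inertial_gap` and `theta_upper_bound` are hypothetical, and the sketch assumes only the formula for \(K\) stated above (it does not reproduce inequality (12) itself).

```python
def inertial_gap(eps: float, theta: float) -> float:
    """K = eps - theta * (1 + eps + max(1, eps)), as set in part (a)."""
    return eps - theta * (1.0 + eps + max(1.0, eps))


def theta_upper_bound(eps: float) -> float:
    """Value of theta at which K vanishes; K > 0 exactly for theta below it."""
    return eps / (1.0 + eps + max(1.0, eps))


if __name__ == "__main__":
    for eps in (0.5, 1.0, 2.0):
        bound = theta_upper_bound(eps)
        # Any theta strictly below the bound keeps K positive.
        print(eps, bound, inertial_gap(eps, 0.9 * bound) > 0.0)
```

For instance, with \(\varepsilon = 1\) the sketch gives the cap \(\theta < 1/3\), so a larger relaxation margin \(\varepsilon\) does not by itself permit arbitrarily strong inertia.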

Appendix-II: Proof of Proposition 3.1

Proof

(a)–(b). Let \(v\in \textrm{Fix}(S)\). Note that \(\displaystyle Tw_{n}-w_{n}=\frac{ 1}{\alpha _{n}}(x_{n+1}-w_{n})\) and \(\displaystyle \varepsilon \le \frac{ 1+\kappa -\alpha _{n}}{\alpha _{n}}\) for all \(n\in \mathbb {N}.\) Note also that T is a \( \kappa \)-strongly quasi-nonexpansive operator. From (13) and Lemma 2.6, we have

Again, from (13) and Lemma 2.2(ii), we have

Combining (56) and (57), we get

From (10), we have

From (58), we obtain

Note that \(\{\theta _{n}\}\) is an increasing sequence in [0, 1) by assumption (C2) and that condition (12) holds by assumption (C3). Applying Lemma 2.5 to (59), we conclude that \(\sum _{n=1}^{\infty }\Vert x_{n+1}-x_{n}\Vert ^{2}<\infty \text { and }\lim _{n\rightarrow \infty }\Vert x_{n}-v\Vert ~\text { exists.} \)

Clearly, \(\Vert x_{n+1}-x_{n}\Vert \rightarrow 0\) as \(n\rightarrow \infty \). Hence, from (13), we have

It follows that \(\lim _{n\rightarrow \infty }\Vert w_{n}-x_{n}\Vert =0\). We may assume that \(\lim _{n\rightarrow \infty }\Vert x_{n}-v\Vert =\ell >0\). It follows, from \(\lim _{n\rightarrow \infty }\Vert x_{n}-w_{n}\Vert =0\), that \(\lim _{n\rightarrow \infty }\Vert w_{n}-v\Vert =\ell \). In view of condition (C1), we obtain, from (55), that \( \lim _{n\rightarrow \infty }\Vert w_{n}-Tw_{n}\Vert =0.\)

(c) Let \(v\in \textrm{Fix}(S)\). Note \(R_{T}(n):=R_{T,\{w_{n}\}}(n)\le \Vert w_{n}-Tw_{n}\Vert \) for all \(n\in \mathbb {N}\). From (55) and (57), we have

Hence, from the condition (C1), we have

for all \(n\in \mathbb {N}\) and for some \(M>0.\) Thus, \(\sum \limits _{n=1}^{ \infty }(R_{T}(n))^{2}<\infty .\) Note \(\{R_{T}(n)\}\) is decreasing. Hence, from Lemma 2.3, we see that \( R_{T,\{w_{n}\}}(n)=o\left( \frac{1}{\sqrt{n}}\right) . \) \(\square \)
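
The proof above uses the relation \(x_{n+1}=w_{n}+\alpha _{n}(Tw_{n}-w_{n})\) and the conclusion \(\Vert w_{n}-Tw_{n}\Vert \rightarrow 0\). The sketch below illustrates this numerically on a toy problem. It assumes the inertial step has the usual form \(w_{n}=x_{n}+\theta _{n}(x_{n}-x_{n-1})\), takes \(\theta _{n}\) and \(\alpha _{n}\) constant, and replaces the paper's operator by a hypothetical stand-in `averaged_rotation` on \(\mathbb {R}^{2}\); the parameter values are illustrative and have not been checked against (C1)–(C3).

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def norm(x: Sequence[float]) -> float:
    """Euclidean norm of a vector stored as a sequence of floats."""
    return math.sqrt(sum(c * c for c in x))


def averaged_rotation(x: Sequence[float]) -> Vector:
    """Toy operator T = (I + R) / 2 on R^2, with R the rotation by 90 degrees.

    T averages the identity with an isometry, so it is nonexpansive,
    and its only fixed point is the origin.
    """
    rotated = [-x[1], x[0]]
    return [0.5 * (x[0] + rotated[0]), 0.5 * (x[1] + rotated[1])]


def relaxed_inertial_mann(
    T: Callable[[Sequence[float]], Vector],
    x0: Sequence[float],
    theta: float,
    alpha: float,
    iterations: int,
) -> Tuple[Vector, List[float]]:
    """Iterate w_n = x_n + theta (x_n - x_{n-1}), x_{n+1} = (1 - alpha) w_n + alpha T w_n.

    Returns the last iterate and the list of residuals ||w_n - T w_n||.
    """
    x_prev, x = list(x0), list(x0)  # x_{-1} = x_0, so the first inertial term is zero
    residuals: List[float] = []
    for _ in range(iterations):
        w = [xi + theta * (xi - pi) for xi, pi in zip(x, x_prev)]
        Tw = T(w)
        residuals.append(norm([wi - ti for wi, ti in zip(w, Tw)]))
        x_prev, x = x, [(1.0 - alpha) * wi + alpha * ti for wi, ti in zip(w, Tw)]
    return x, residuals


if __name__ == "__main__":
    x_last, res = relaxed_inertial_mann(averaged_rotation, [1.0, 1.0], 0.2, 0.5, 200)
    print("last iterate:", x_last)
    print("first and last residual:", res[0], res[-1])
```

On this toy problem the residuals shrink geometrically, which is faster than the \(o(1/\sqrt{n})\) guarantee of part (c); the guarantee itself covers the general class of operators, not just contractions of this kind.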

Appendix-III: Proof of Proposition 3.2

Proof

(a)–(b). Let \(v\in \textrm{Fix}(S)\). Set \(y_{n}:=(1-\alpha _{n})w_{n}+\alpha _{n}Tw_{n}\). Hence \(\displaystyle Tw_{n}-w_{n}=\frac{1}{ \alpha _{n}}(y_{n}-w_{n})\). From (19), we have \(\displaystyle \mathcal {E}\le \frac{\alpha _{n}(1+\kappa -\alpha _{n})}{2(1+\alpha _{n}^{2}\beta ^{2})}\) for all \(n\in \mathbb {N}.\) For \(\rho =\frac{1}{2}\), from (9), we obtain

Since T is \(\beta \)-Lipschitz continuous, we have

Thus,

From Lemma 2.6, we get

Using (61) and then (60), we obtain

From (17) and Lemma 2.2(ii), we have

From (10), we have

From (63), we obtain

Note that \(\{\theta _{n}\}\) is an increasing sequence in [0, 1) by assumption (C2) and that condition (12) holds by assumption (C5) with \(\varepsilon =\mathcal {E}\). Thus, Lemma 2.5 implies that \(\sum _{n=1}^{\infty }\Vert x_{n+1}-x_{n}\Vert ^{2}<\infty \) and \(\lim _{n\rightarrow \infty }\Vert x_{n}-v\Vert \) exists. It immediately follows, from (17), that \( \lim _{n\rightarrow \infty }\Vert w_{n}-x_{n}\Vert =0\).

Since \(\ell :=\lim _{n\rightarrow \infty }\Vert x_{n}-v\Vert \) exists, it follows, from \(\lim _{n\rightarrow \infty }\Vert x_{n}-w_{n}\Vert =0\), that \( \lim _{n\rightarrow \infty }\Vert w_{n}-v\Vert =\ell \). Hence, from (62), we obtain that \(\lim _{n\rightarrow \infty }\Vert w_{n}-Tw_{n}\Vert =0=\lim _{n\rightarrow \infty }\Vert y_{n}-Ty_{n}\Vert . \)

(c) Let \(v\in \textrm{Fix}(S)\). Note \(\{\theta _{n}\}\) is an increasing sequence in [0, 1). From (62) and (64), we get

Hence, from the condition (C1), we have

Since T is \(\beta \) -Lipschitz continuous, we have

Since \(\sum \limits _{n=1}^{\infty }\Vert y_{n}-Ty_{n}\Vert ^{2}<\infty ,\) it follows that \(\sum \limits _{n=1}^{\infty }\Vert x_{n}-Tx_{n}\Vert ^{2}<\infty \). Note that \(R_{T}(n):=R_{T,\{x_{n}\}}(n)\le \Vert x_{n}-Tx_{n}\Vert \) for all \(n\in \mathbb {N}\) and that \(\{R_{T}(n)\}\) is decreasing. Hence \( \sum \limits _{n=1}^{\infty }(R_{T}(n))^{2}<\infty .\) Therefore, from Lemma 2.3, we conclude that (20) holds. \(\square \)
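
The proof above works with the intermediate point \(y_{n}=(1-\alpha _{n})w_{n}+\alpha _{n}Tw_{n}\). A minimal sketch of the resulting iteration follows, under the assumption that the scheme is completed by the normal S-iteration step \(x_{n+1}=Ty_{n}\) with inertial point \(w_{n}=x_{n}+\theta _{n}(x_{n}-x_{n-1})\); the exact form of (17) is not reproduced here. The operator `averaged_rotation` is a hypothetical stand-in on \(\mathbb {R}^{2}\), and the constant parameters are illustrative only.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def norm(x: Sequence[float]) -> float:
    """Euclidean norm of a vector stored as a sequence of floats."""
    return math.sqrt(sum(c * c for c in x))


def averaged_rotation(x: Sequence[float]) -> Vector:
    """Toy nonexpansive operator T = (I + R) / 2, R the rotation by 90 degrees.

    Its only fixed point is the origin, and it is 1-Lipschitz.
    """
    rotated = [-x[1], x[0]]
    return [0.5 * (x[0] + rotated[0]), 0.5 * (x[1] + rotated[1])]


def relaxed_inertial_normal_s(
    T: Callable[[Sequence[float]], Vector],
    x0: Sequence[float],
    theta: float,
    alpha: float,
    iterations: int,
) -> Tuple[Vector, List[float]]:
    """Iterate w_n (inertial point), y_n (relaxed Mann point), x_{n+1} = T y_n.

    Returns the last iterate and the list of residuals ||w_n - T w_n||.
    """
    x_prev, x = list(x0), list(x0)  # x_{-1} = x_0
    residuals: List[float] = []
    for _ in range(iterations):
        w = [xi + theta * (xi - pi) for xi, pi in zip(x, x_prev)]
        Tw = T(w)
        residuals.append(norm([wi - ti for wi, ti in zip(w, Tw)]))
        y = [(1.0 - alpha) * wi + alpha * ti for wi, ti in zip(w, Tw)]
        x_prev, x = x, T(y)  # the extra application of T is the S-iteration step
    return x, residuals


if __name__ == "__main__":
    x_last, res = relaxed_inertial_normal_s(averaged_rotation, [1.0, 1.0], 0.2, 0.5, 150)
    print("last iterate:", x_last)
    print("first and last residual:", res[0], res[-1])
```

Each pass costs two evaluations of `T` instead of one, which is the trade-off the S-iteration makes for its faster decrease of the residual.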

Appendix-IV: Proof of Proposition 4.2

Proof

Let \(\{x_{n}\}\) be a sequence in X such that \(x_{n}\rightharpoonup z\) and \((I-S_{\lambda })x_{n}\rightarrow 0\) as \(n\rightarrow \infty \). We now show that \((I-S_{\lambda })z=0.\) If \(Fz=0,\) then \(z\in \varOmega [ \textrm{VI}(C,F)],\) i.e., \((I-S_{\lambda })z=0.\) Assume that \(Fz\ne 0.\) Let \( x\in C.\) Set \(y_{n}=S_{\lambda }x_{n}.\) From Lemma 2.1(a), we have

Hence \( \left\langle x_{n}-y_{n},x-y_{n}\right\rangle \le \lambda \left\langle Fx_{n},x-y_{n}\right\rangle , \) which implies that

Since \(x_{n}\rightharpoonup z,\Vert x_{n}-y_{n}\Vert \rightarrow 0\) and F is sequentially weak-to-weak continuous, we have \(y_{n}\rightharpoonup z\) and \(Fx_{n}\rightarrow Fz.\) Hence, from (66), we have

Since the norm is sequentially weakly lower semicontinuous, we get

It follows that there exists \(n_{0}\in \mathbb {N}\) such that \(Fy_{n}\ne 0\) for all \(n\ge n_{0}.\) Now we choose a strictly decreasing sequence \(\{\varepsilon _{k}\}_{k\ge 0}\) in \( (0,\infty )\) such that \(\varepsilon _{k}\rightarrow 0.\) For \(\{\varepsilon _{k}\}_{k\ge 0}\), from (67) and (68), there exists a strictly increasing sequence \(\{n_{k}\}_{k\ge 0}\) of positive integers such that

Set \(p_{k}=\frac{1}{\Vert Fy_{n_{k}}\Vert ^{2}}Fy_{n_{k}}.\) Then \( \left\langle Fy_{n_{k}},p_{k}\right\rangle =1.\) From (69), we obtain

By the pseudo-monotonicity of F on X, we have

Note that F satisfies the condition \((\mathscr {B})\). Then, by the boundedness of \(\{Fy_{n_{k}}\},\) there exist constants \(m,M>0\) such that \(m\le \Vert Fy_{n_{k}}\Vert \le M\) for all \(k\ge 0.\) Hence \(\Vert \varepsilon _{k}p_{k}\Vert =\varepsilon _{k}\Vert p_{k}\Vert =\frac{\varepsilon _{k}}{ \Vert Fy_{n_{k}}\Vert }\rightarrow 0\) as \(k\rightarrow \infty .\) Thus, from (70), we get \( \left\langle Fx,x-z\right\rangle \ge 0. \) Since x is an arbitrary element in C, we infer that \(z\in \varOmega [\textrm{DVI}(C,F)]\). Therefore, from Lemma 4.2, we conclude that \( z\in \varOmega [\textrm{VI}(C,F)],\) i.e., \((I-S_{\lambda })z=0.\) \(\square \)

Appendix-V: Proof of Proposition 4.3

Proof

(a) Let \(u,v\in X.\) Then, from Remark 4.1, we have

(b) Let \(\lambda \in (0,1/L)\), \(x\in X\) and \(v\in \varOmega [\textrm{VI}(C,F)]\). Set \( y=P_{C}(x-\lambda Fx)\) and \(z=P_{C}(x-\lambda Fy)\). Then \(\left\langle Fv,y-v\right\rangle \ge 0.\) By the pseudo-monotonicity of F, we have \( \left\langle Fy,y-v\right\rangle \ge 0.\) From Lemma 2.1(d), we have

Substituting x by \(x-\lambda Fx\) and y by z in Lemma 2.1(a), we get

which gives us that

Thus, from (72), we have

Hence, from (71) and (73), we get

For \(\rho =1/2\), from (9), we have

Hence, from (74), we have

(c) Let \(\lambda \in (0,1/L)\). Lemma 4.1 shows that \(\textrm{Fix}(S_{\lambda })=\varOmega [\textrm{VI}(C,F)].\) Note that \(\emptyset \ne \textrm{Fix} (S_{\lambda })\subseteq \textrm{Fix}(E_{\lambda }).\) We now show that \( \textrm{Fix}(E_{\lambda })\subseteq \textrm{Fix}(S_{\lambda }).\) Let \(u\in \textrm{Fix}(E_{\lambda })\) and \(v\in \textrm{Fix}(S_{\lambda }).\) From Part (b)(i), we get

which implies that \(u\in \textrm{Fix}(S_{\lambda }).\)

(d) Let \(\lambda \in (0,1/L)\). Let \(\{x_{n}\}\) be a bounded sequence in X such that \( \lim _{n\rightarrow \infty }\Vert x_{n}-E_{\lambda }(x_{n})\Vert =0.\) Let \(v\in \varOmega [\textrm{VI}(C,F)].\) From Part (b)(i), we have

it follows that

By the boundedness of \(\{x_{n}\}\), we have \(\lim _{n\rightarrow \infty }\Vert x_{n}-S_{\lambda }(x_{n})\Vert =0.\) \(\square \)
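
Part (b) builds the two points \(y=P_{C}(x-\lambda Fx)\) and \(z=P_{C}(x-\lambda Fy)\), and part (c) shows that the resulting operator shares its fixed points with \(S_{\lambda }\). A minimal sketch of both operators on a toy problem follows, reading \(S_{\lambda }x=y\) and \(E_{\lambda }x=z\). The feasible set \(C=[-1,1]^{2}\), the skew operator `F`, and the step \(\lambda =0.5\) are assumptions chosen for illustration (here \(L=1\) and the unique solution is the origin); they are not taken from the paper's experiments.

```python
import math
from typing import List, Sequence

Vector = List[float]
LOWER, UPPER = -1.0, 1.0  # illustrative feasible set C = [-1, 1]^2


def norm(x: Sequence[float]) -> float:
    """Euclidean norm."""
    return math.sqrt(sum(c * c for c in x))


def project_box(x: Sequence[float]) -> Vector:
    """Metric projection P_C onto the box C, computed coordinate-wise."""
    return [min(max(c, LOWER), UPPER) for c in x]


def F(x: Sequence[float]) -> Vector:
    """Toy operator F(x1, x2) = (x2, -x1): monotone and 1-Lipschitz."""
    return [x[1], -x[0]]


def S(x: Sequence[float], lam: float) -> Vector:
    """Seed operator S_lambda x = P_C(x - lambda F x)."""
    return project_box([xi - lam * fi for xi, fi in zip(x, F(x))])


def E(x: Sequence[float], lam: float) -> Vector:
    """Extragradient operator E_lambda x = P_C(x - lambda F(S_lambda x))."""
    y = S(x, lam)
    return project_box([xi - lam * fi for xi, fi in zip(x, F(y))])


def iterate_extragradient(x0: Sequence[float], lam: float, iterations: int) -> Vector:
    """Plain fixed-point iteration x_{n+1} = E_lambda x_n, without inertia."""
    x = list(x0)
    for _ in range(iterations):
        x = E(x, lam)
    return x


if __name__ == "__main__":
    x_last = iterate_extragradient([1.0, 1.0], 0.5, 300)
    print("distance to the solution:", norm(x_last))
```

The skew operator is a standard test case because the single projected step \(S_{\lambda }\) alone need not converge for it, while the two-step operator \(E_{\lambda }\) does.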

Appendix-VI: Proof of Proposition 4.4

Proof

(a) Let \(u,v\in X.\) Then

(b) Let \(\lambda \in (0,1/L),\) \(x\in X\) and \(z\in \varOmega [\textrm{VI} (C,F)].\) Set \(y:=P_{C}(x-\lambda Fx).~\)Hence

and

which implies that \((1-\lambda L)\Vert x-S_{\lambda }(x)\Vert \le \Vert x-T_{\lambda }x\Vert .\) From Lemma 2.1(a), we have

Thus,

Since \(z\in \varOmega [\text {VI}(C,F)],\) we have \(\langle Fz,y-z\rangle \ge 0.\) By pseudo-monotonicity of F, we have \(\langle Fy,y-z\rangle \ge 0.\) From (75), we have

(c) Let \(\lambda \in (0,1/L)\). Note that \(\textrm{Fix}(S_{\lambda })=\varOmega [\textrm{VI}(C,F)].\) Observe that \(\textrm{Fix}(S_{\lambda })\subseteq \textrm{Fix}(T_{\lambda }).\) We now show that \(\textrm{Fix} (T_{\lambda })\subseteq \textrm{Fix}(S_{\lambda }).\) Let \(u\in \textrm{Fix} (T_{\lambda })\) and \(v\in \textrm{Fix}(S_{\lambda }).\) From Part (b)(iii), we get

which implies that \(u\in \textrm{Fix}(S_{\lambda }).\)

(d) Let \(\lambda \in (0,1/L)\). Let \(\{x_{n}\}\) be a bounded sequence in X such that \(\lim _{n\rightarrow \infty }\Vert x_{n}-T_{\lambda }(x_{n})\Vert =0.\) Using Part (b)(ii), we obtain \( (1-\lambda L)\Vert x_{n}-S_{\lambda }(x_{n})\Vert \le \Vert x_{n}-T_{\lambda }(x_{n})\Vert \text { for all }n\in \mathbb {N}. \) Thus, we conclude that the operator \(S_{\lambda }\) has property \((\mathscr {A} )\) with respect to the operator \(T_{\lambda }\). \(\square \)
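
The inequality \((1-\lambda L)\Vert x-S_{\lambda }(x)\Vert \le \Vert x-T_{\lambda }x\Vert \) from part (b) can be checked numerically. The sketch below assumes that \(T_{\lambda }\) is the Tseng-type operator \(T_{\lambda }x=y-\lambda (Fy-Fx)\) with \(y=P_{C}(x-\lambda Fx)\); this form is inferred from the method's name and is not restated in the appendix. The set \(C=[-1,1]^{2}\), the operator `F` (with \(L=1\)), and the sampling range are hypothetical choices for illustration.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]
LIPSCHITZ = 1.0  # Lipschitz constant L of the toy operator F below


def norm(x: Sequence[float]) -> float:
    """Euclidean norm."""
    return math.sqrt(sum(c * c for c in x))


def project_box(x: Sequence[float], lower: float = -1.0, upper: float = 1.0) -> Vector:
    """Metric projection onto the box [lower, upper]^n."""
    return [min(max(c, lower), upper) for c in x]


def F(x: Sequence[float]) -> Vector:
    """Toy operator F(x1, x2) = (x2, -x1): monotone and 1-Lipschitz."""
    return [x[1], -x[0]]


def S(x: Sequence[float], lam: float) -> Vector:
    """Seed operator S_lambda x = P_C(x - lambda F x)."""
    return project_box([xi - lam * fi for xi, fi in zip(x, F(x))])


def tseng(x: Sequence[float], lam: float) -> Vector:
    """Assumed Tseng-type operator: y - lambda (F y - F x), y = S_lambda x."""
    y = S(x, lam)
    return [yi - lam * (fy - fx) for yi, fy, fx in zip(y, F(y), F(x))]


def property_holds(num_samples: int, lam: float, seed: int = 0, tol: float = 1e-12) -> bool:
    """Check (1 - lam L) ||x - S x|| <= ||x - T x|| on random points of [-3, 3]^2."""
    rng = random.Random(seed)
    for _ in range(num_samples):
        x = [rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0)]
        lhs = (1.0 - lam * LIPSCHITZ) * norm([a - b for a, b in zip(x, S(x, lam))])
        rhs = norm([a - b for a, b in zip(x, tseng(x, lam))])
        if lhs > rhs + tol:
            return False
    return True


if __name__ == "__main__":
    print(property_holds(1000, 0.5))
```

A sampled check of this kind only fails to find a counterexample; the inequality itself rests on the triangle inequality and the Lipschitz continuity of F, as in the proof.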

Appendix-VII: Proof of Example 5.2

To prove the pseudo-monotonicity and Lipschitz continuity of operator F defined by (46), we define \(g:X\rightarrow \mathbb {R}\) such that \( g(x)=e^{-\langle x,Ux\rangle }+\alpha \ \text {for all}\ x\in X. \) Let \(x,y\in X\) such that \(\langle Fx,y-x\rangle \ge 0.\) Since \(g(x)>0,\) it follows that \(\langle Vx+q,y-x\rangle \ge 0.\) Hence

Thus, F is pseudo-monotone.
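
The implication \(\langle Fx,y-x\rangle \ge 0\Rightarrow \langle Fy,y-x\rangle \ge 0\) can be probed on random pairs. The sketch below assumes that (46) has the product form \(F(x)=g(x)(Vx+q)\) with \(g\) as defined above, which is inferred from the argument and not quoted from (46). The matrices `U` and `V`, the vector `Q`, and the value of `ALPHA` are hypothetical; `V` is chosen positive definite so that \(x\mapsto Vx+q\) is monotone.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]

ALPHA = 0.1                              # hypothetical positive constant alpha
U: Matrix = [[1.0, 0.0], [0.0, 1.0]]     # hypothetical symmetric positive definite U
V: Matrix = [[2.0, 1.0], [1.0, 2.0]]     # hypothetical symmetric positive definite V
Q: Vector = [1.0, -1.0]                  # hypothetical vector q


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean inner product."""
    return sum(ai * bi for ai, bi in zip(a, b))


def matvec(M: Matrix, x: Sequence[float]) -> Vector:
    """Matrix-vector product."""
    return [dot(row, x) for row in M]


def F(x: Sequence[float]) -> Vector:
    """Assumed form F(x) = (exp(-<x, Ux>) + alpha) (Vx + q)."""
    g = math.exp(-dot(x, matvec(U, x))) + ALPHA
    return [g * (vi + qi) for vi, qi in zip(matvec(V, x), Q)]


def count_violations(num_pairs: int, seed: int = 0, tol: float = 1e-12) -> int:
    """Count sampled pairs with <Fx, y - x> >= 0 but <Fy, y - x> < -tol."""
    rng = random.Random(seed)
    violations = 0
    for _ in range(num_pairs):
        x = [rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0)]
        y = [rng.uniform(-2.0, 2.0), rng.uniform(-2.0, 2.0)]
        d = [yi - xi for xi, yi in zip(x, y)]
        if dot(F(x), d) >= 0.0 and dot(F(y), d) < -tol:
            violations += 1
    return violations


if __name__ == "__main__":
    print("violations found:", count_violations(2000))
```

Because the scalar factor \(g\) is positive, the sign of \(\langle Fx,y-x\rangle \) is that of \(\langle Vx+q,y-x\rangle \), so the sampled test reduces to the monotonicity of the affine part, exactly as in the argument above.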

Now, let \(x,h\in X.\) Then \( \nabla F(x)(h) =-2e^{-\langle x,Ux\rangle }\langle Ux,h\rangle (Vx+q)+\left( e^{-\langle x,Ux\rangle }+\alpha \right) Vh \) and

Hence

About this article

Cite this article

Sahu, D.R. A unified framework for three accelerated extragradient methods and further acceleration for variational inequality problems. Soft Comput 27, 15649–15674 (2023). https://doi.org/10.1007/s00500-023-08806-5

DOI: https://doi.org/10.1007/s00500-023-08806-5