Abstract

In this paper, we present an algorithm for visually guided unmanned aerial vehicle (UAV) control using visual information that is processed on a mobile graphics processing unit (GPU). Most real-time machine vision applications for UAVs use low-resolution images, because size, weight, and power constraints limit the available computational resources. As a result, the data are often insufficient to provide the UAV with intelligent behavior. However, GPUs have emerged as inexpensive parallel processors that are capable of providing high computational power in mobile environments. We present an approach for detecting and tracking lines that uses a mobile GPU. The Hough transform and clustering techniques were used for robust and fast tracking. We achieved accurate line detection and, for large search windows, faster tracking on the mobile GPU than on an x86 i5 CPU. Moreover, on average the GPU provided approximately a five-times speedup compared with an ARM quad-core Cortex-A15. We conducted a detailed analysis of the performance of the proposed tracking and detection algorithm and obtained meaningful results that could be utilized in real flight.


1 Introduction

Over the past few years, a number of approaches for using unmanned aerial vehicles (UAVs) in diverse military missions and civil applications, such as security monitoring, have been proposed. Historically, UAVs were remotely piloted by an operator; recently, however, autonomous control has been increasingly employed. In some commercial applications, UAVs are operated mainly using positioning information provided by the global positioning system (GPS) and an inertial measurement unit (IMU). More recently, vision-guided autonomous approaches have been presented. Visual information is a salient factor in the development of intelligent UAVs.

Real-time image processing is a common machine vision technique for exploiting visual information, and thus enables autonomy and machine learning. However, many resource-constrained embedded hardware systems suffer from performance limitations when applications are computationally heavy. In intelligent UAVs, particularly rotorcraft, the computing environment is less adequate than in fixed-wing aircraft and ground vehicles because of power, weight, and volume constraints, and the maximum flight time is typically less than 30 min. The development of systems that provide UAVs with autonomous flight has advanced somewhat slowly compared with the development of their avionics. It is more difficult to provide UAVs with autonomy than other unmanned vehicles because of their size, weight, and power (SWaP) constraints, and because the integration of sensors such as the IMU and the GPS for accurate position determination has to be considered. Another significant barrier to implementing such systems is generating control signals from vision processing in real time.

In general, high-level vision-based applications, such as reconnaissance and recognition, require powerful computational resources that are typically installed in desktop computers equipped with graphics accelerators. However, these large and heavy devices cannot be carried by small UAVs. Therefore, vision processing must be performed either by an inadequate onboard unit or by a remote high-performance computing unit that receives a series of images. Over the past few years, graphics processing units (GPUs) have quickly emerged as inexpensive parallel processors with high computational power, and recent GPUs can be deployed in mobile devices.

This study extends ideas previously presented at IMIS 2014 (Tyan and Jeon 2014). Here, we extend the previous work by implementing a tracking algorithm on a mobile GPU board and testing it on four video clips, including real flight video captured by a UAV. Mobile GPU systems such as the Tegra K1 (TK1) are advanced embedded system-on-chip (SOC) platforms that deliver GPU-accelerated performance comparable to desktop systems at low power. The TK1 integrates a CPU, a GPU, and an image signal processor (ISP) on a single chip. With a mobile GPU, this approach achieves considerable image processing capability and greater efficiency without sacrificing speed or accuracy.

This paper describes a GPU computing solution for visual navigation of small-scale UAVs. In summary, the main contributions of this study are as follows:

- Implementation of an embedded real-time image processing system for UAVs that provides relatively high-performance line detection and tracking using mobile GPU acceleration, including an image processing system developed on the compute unified device architecture (CUDA) to demonstrate the algorithm.
- Modification of a computationally heavy image processing algorithm so that it runs on a mobile GPU and achieves a higher frame rate than a CPU-only implementation.
- Our approach constitutes a novel attempt to advance research on intelligent UAVs using mobile GPUs.

2 Related works

The control of UAVs using visual information has been addressed in several studies in different fields, such as high-level avionic control, electronic engineering, computer vision, and artificial intelligence. The techniques studied in these fields are equally important, because the absence of any one element of the system makes it essentially impossible to build an intelligent UAV that operates correctly.

Several studies on UAV control for autonomous flight have been conducted in the fields of computer vision and image processing. In the context of visually guided control, various approaches were described in Feng et al. (2012), Campoy et al. (2009), and Kaiser et al. (2010). Landing and obstacle avoidance using visual information have also been proposed in Lange et al. (2009), Mejias et al. (2010), and Zhihai et al. (2006). In these works, onboard processing is important for instant response. In numerous instances, small aerial vehicles that are controlled by visual information cannot perform onboard processing because of SWaP issues, as described in Engel et al. (2012). Off-board processing can use a powerful remote system; however, it suffers from response latency when used for visual control and requires additional effort to transfer images over a network. In the context of soft computing, Olivares-Mendez (2010) proposed a landing task using a fuzzy controller guided by visual information.

Line tracking is a low-level method for extracting useful features from images and can be used in practical tasks such as environment recognition and position estimation, for example, recognizing landing strips or detecting lanes on the road. Most studies of line tracking have used the Hough transform as the core of the algorithm; for example, Mills et al. (2003) reported accurate results. That work concentrated on predicting line movement with a Kalman filter (Kalman 1960); Kalman filters are used to speed up processing in real-time applications. Prior research has also addressed the computational cost of line detection and tracking, because the Hough transform is widely regarded as a bottleneck. A modification of the Hough transform called the progressive probabilistic Hough transform minimizes the computation required for detecting lines by exploiting differences in the fraction of votes needed to reliably detect lines with different numbers of supporting points. This algorithm is ideally suited for real-time applications with a fixed amount of available processing time, because voting and line detection are interleaved. The present paper focuses mainly on improving processing speed in order to develop a system for fast line tracking using mobile GPUs.

Currently, GPU programming is used extensively to solve a broad range of computationally demanding and complex problems. CUDA is NVIDIA's general-purpose GPU computing platform; unlike the traditional approach that relies on a graphics programming interface, it provides direct access to the GPU hardware through a C-like language. CUDA extends C by allowing the programmer to define C functions, called CUDA kernels, that, when called, are executed \(n\) times in parallel by \(n\) different CUDA threads, as opposed to only once as in regular C functions. CUDA applications on UAVs can therefore use onboard graphics hardware to achieve high computing performance. Furthermore, the Hough transform bottleneck can be parallelized easily, resulting in a large speed boost. Gómez-Luna et al. (2011), Chen and Jiang (2011), and van den Braak et al. (2011) used CUDA-based implementations to analyze the improvement in Hough transform performance on GPUs. The average results showed that GPUs were about five times faster than CPUs. These studies provided a solid baseline for building faster line detection and tracking algorithms. However, all of them processed still photographic images and implemented only the Hough transform on the GPU. In our previous paper (Tyan and Jeon 2014), we demonstrated line prediction and optimized accumulator calculation using the probabilistic Hough transform. In this paper, we present a fast and robust line detection and tracking algorithm that is practical for use in mobile GPU environments on UAVs.

3 Tracking multiple lines using a Mobile GPU

3.1 Detecting and tracking lines

In this section, the line detection and tracking algorithms are presented. The whole tracking system consists of two main elements: detection and tracking. After a new frame is retrieved, the algorithm checks whether the frame should be sent to the detection element; if not, it is sent to the tracking element. Every 30th frame is marked for detection. When a frame is sent to the detection element, the list of lines is refreshed with the lines detected in the current frame, and the predicted line positions are set to the same values, because the line displacement in the next frame cannot be predicted from the current frame alone.
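The dispatch rule above can be sketched as a small state machine. This is a minimal illustration, not the authors' code: the class name `FrameRouter` and the `detect`/`track` callbacks are hypothetical, lines are assumed to be stored as \((\rho, \theta)\) pairs, and we assume frame 0 goes to detection so that tracking always starts from a detected line list.

```python
from typing import Callable, List, Tuple

Line = Tuple[float, float]  # (rho, theta) of one line in Hough space


class FrameRouter:
    """Hypothetical dispatcher: every `period`-th frame goes to detection."""

    def __init__(self,
                 detect: Callable[[object], List[Line]],
                 track: Callable[[object, List[Line]], Tuple[List[Line], List[Line]]],
                 period: int = 30) -> None:
        self.detect = detect
        self.track = track
        self.period = period
        self.index = 0
        self.lines: List[Line] = []
        self.predicted: List[Line] = []

    def process(self, frame: object) -> str:
        if self.index % self.period == 0:
            # Detection frame: refresh the line list. One frame carries no
            # motion information, so predictions are set equal to detections.
            self.lines = self.detect(frame)
            self.predicted = list(self.lines)
            mode = "detect"
        else:
            # Tracking frame: search near the predictions, then update both lists.
            self.lines, self.predicted = self.track(frame, self.predicted)
            mode = "track"
        self.index += 1
        return mode
```

With `period=30`, frames 0, 30, 60, ... run detection and all other frames run tracking.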

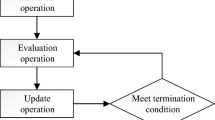

The tracking algorithm in turn uses the predicted line positions and tracks the most probable lines in the vicinity of the predicted line positions. Next, the tracking algorithm updates the current line list and predicted line positions using the divergence between the predicted values and the actual tracked lines. The block scheme, shown in Fig. 1, represents the tracking system algorithm.

The proposed tracking algorithm is based on two key techniques. First, the Hough transform (Duda and Hart 1972) is used to transfer the image to the Hough space, in which some primitive line shapes can be identified. Second, a set of Kalman filters (Kalman 1960; Mills et al. 2003) is used to predict the line positions in the next frame, which reduces the search area and consequently improves the overall performance of the algorithm. The predicted line positions are obtained from the previous frame, and the Kalman filter reduces the required number of computations by shrinking the search window while maintaining tracking accuracy. Figure 2 shows the search window predicted by the Kalman filter in Hough space. The small red rectangle is the reduced search window predicted by the Kalman filter, and the large white rectangle is the original search window without the Kalman filter. Experiments revealed that these techniques were effective when used in combination. The basic steps of tracking are as follows:
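To make the prediction step concrete, the following sketch runs one linear Kalman filter per line parameter with a constant-velocity state \([\text{value}, \text{velocity}]\). This is an assumption for illustration: the paper cites an extended Kalman filter formulation (Mills et al. 2003) and does not give its state model or noise values, so the class name `ConstantVelocityKF` and the parameters `q` and `r` are hypothetical.

```python
class ConstantVelocityKF:
    """Hypothetical linear Kalman filter for one Hough parameter (rho or theta).

    State x = [value, velocity]; transition F = [[1, 1], [0, 1]] (one frame);
    measurement H = [1, 0]; process noise Q = q * I; measurement noise r.
    """

    def __init__(self, value: float, q: float = 1e-2, r: float = 1.0) -> None:
        self.x = [value, 0.0]
        self.P = [[1.0, 0.0], [0.0, 1.0]]
        self.q = q
        self.r = r

    def predict(self) -> float:
        # x <- F x
        x0, x1 = self.x
        self.x = [x0 + x1, x1]
        # P <- F P F^T + Q
        (p00, p01), (p10, p11) = self.P
        self.P = [[p00 + p01 + p10 + p11 + self.q, p01 + p11],
                  [p10 + p11, p11 + self.q]]
        return self.x[0]  # predicted centre of the next search window

    def update(self, z: float) -> None:
        (p00, p01), (p10, p11) = self.P
        s = p00 + self.r               # innovation covariance H P H^T + R
        k0, k1 = p00 / s, p10 / s      # Kalman gain K = P H^T / s
        y = z - self.x[0]              # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * p00, (1 - k0) * p01],
                  [p10 - k1 * p00, p11 - k1 * p01]]
```

Each tracked line would use two such filters, one for \(\rho\) and one for \(\theta\); the value returned by `predict()` centres the reduced search window for the next frame.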

- Step 1: Receive a new frame;
- Step 2: Apply preprocessing procedures;
- Step 3: Detect edges;
- Step 4: Convert image to Hough space (partial conversion in the vicinity of predicted positions);
- Step 5: Search most probable lines in the vicinity of predicted positions;
- Step 6: Update line positions and predict next position using the set of Kalman filters.

The detection algorithm is based on an unsupervised clustering method called \(K\)-means. In essence, the detection algorithm is the same as the tracking algorithm, except for some minor modifications. Line position detection can account for a significant proportion of the computational effort. First, a frame specified for detection is preprocessed and enhanced. Then, the algorithm searches the preprocessed image for edges and selects the probable positions of line segments. The binary image obtained in the previous step is then used to calculate the full Hough space, so that lines in any position can be detected. The resulting Hough space typically contains a few peaks that correspond to the strong lines in the image. To expose these lines, the detection algorithm thresholds the Hough space using a predefined value (the minimum line length) and then clusters the remaining points. In the last step, the algorithm searches for a local maximum in the vicinity of each cluster center to ensure that the line is aligned with the most suitable position in the image.
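The threshold, cluster, and refine sequence above can be sketched in a few lines. This is an illustrative sketch, not the authors' implementation: the function names are hypothetical, the clusters are plain Lloyd's \(K\)-means on \((\rho, \theta)\) cell indices, and the deterministic farthest-point seeding is our own choice because the paper does not state how the clusters are initialized.

```python
from typing import List, Tuple

Cell = Tuple[int, int]      # (rho_idx, theta_idx) in the accumulator
Point = Tuple[float, float]


def _d2(p: Point, q: Point) -> float:
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def hough_peaks(acc: List[List[int]], threshold: int) -> List[Cell]:
    """Cells whose vote count reaches `threshold` (e.g. a minimum line length)."""
    return [(r, t) for r, row in enumerate(acc) for t, v in enumerate(row) if v >= threshold]


def kmeans(points: List[Cell], k: int, iters: int = 20) -> List[Point]:
    """Lloyd's K-means with deterministic farthest-point seeding."""
    centers: List[Point] = [(float(points[0][0]), float(points[0][1]))]
    while len(centers) < k:
        far = max(points, key=lambda p: min(_d2(p, c) for c in centers))
        centers.append((float(far[0]), float(far[1])))
    for _ in range(iters):
        groups: List[List[Cell]] = [[] for _ in range(k)]
        for p in points:
            groups[min(range(k), key=lambda i: _d2(p, centers[i]))].append(p)
        # New center = mean of its group; an empty group keeps its old center.
        centers = [
            (sum(p[0] for p in g) / len(g), sum(p[1] for p in g) / len(g)) if g else centers[i]
            for i, g in enumerate(groups)
        ]
    return centers


def refine(acc: List[List[int]], center: Point, radius: int) -> Cell:
    """Strongest accumulator cell within `radius` cells of a cluster center."""
    cr, ct = round(center[0]), round(center[1])
    best = (cr, ct)
    for r in range(max(0, cr - radius), min(len(acc), cr + radius + 1)):
        for t in range(max(0, ct - radius), min(len(acc[0]), ct + radius + 1)):
            if acc[r][t] > acc[best[0]][best[1]]:
                best = (r, t)
    return best
```

A cluster center is an average of cells and need not be a peak itself, which is why the final local-maximum search snaps each line back to the strongest nearby cell.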

An image retrieved from a video source almost always contains some noise and image artifacts caused by camera vibration and by the properties of the camera lens. For this reason, basic preprocessing should be performed to enhance image quality and reduce noise. Image preprocessing is a complex procedure that involves a number of low-level image transformations and noise filtering techniques: color space conversion (Oppenheim et al. 1989), gamma correction, and sharpening. However, an additional problem is that edge detection algorithms respond to all gradient changes, not only to those of the target lines. To build a robust binarization algorithm, we used color to track the lines, as this helped to resolve the edge detection problem. The red, green, and blue (RGB) color channels are strongly correlated; therefore, color segmentation requires another color space in which color is represented more distinctly. For this reason, the image is converted from RGB to HSV (Sural et al. 2002), and the hue and saturation channels are then thresholded to the required range of the color spectrum. The saturation channel is passed because the saturation changes according to the luminosity of the image scene.
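A per-pixel version of the HSV binarization might look like the sketch below, using Python's standard `colorsys.rgb_to_hsv`, which takes and returns values in \([0, 1]\). The function name `color_mask` and the example ranges are hypothetical; the paper does not list its threshold values. In this sketch only hue and saturation are tested, and the value (brightness) channel is not thresholded.

```python
import colorsys
from typing import List, Tuple

RGB = Tuple[int, int, int]  # 8-bit channels


def color_mask(image: List[List[RGB]],
               hue_range: Tuple[float, float],
               sat_range: Tuple[float, float]) -> List[List[int]]:
    """Binary mask: 1 where hue and saturation both fall inside the given ranges."""
    mask = []
    for row in image:
        out = []
        for r, g, b in row:
            h, s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
            inside = (hue_range[0] <= h <= hue_range[1]
                      and sat_range[0] <= s <= sat_range[1])
            out.append(1 if inside else 0)
        mask.append(out)
    return mask
```

For example, a hue range of `(0.0, 0.05)` with saturation `(0.5, 1.0)` keeps strongly red pixels and rejects green and grey ones; on a GPU the same test would run as one thread per pixel.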

3.2 Implementation on mobile GPU

In our implementation, the entire voting process in Hough space is implemented on the GPU, which provides significant acceleration, and the speedup grows as the input resolution increases. The function that calculates the Hough accumulator is executed entirely on the GPU and returns a filled accumulator of line probabilities. All subsequent operations are processed on the CPU as usual. The kernel structure is shown in Fig. 3. The kernel is executed on a one-dimensional array of blocks, each of which consists of a two-dimensional array of threads. Every thread in a block corresponds to a point in the Hough space accumulator. The array dimensions are defined by two parameters:

\(L\) is the maximum of the binary image dimensions (width or height) and ensures that no pixels are missed in the voting. \(N\) is the tracking window size, which is always odd because it is calculated as twice the maximum line displacement plus one (for detection, the window constitutes the entire Hough space).
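The launch geometry implied by \(L\) and \(N\) can be computed directly. The sketch below is illustrative and its name `launch_geometry` is hypothetical. It also checks a constraint that the text does not discuss: CUDA limits a block to 1024 threads (compute capability 2.0 and later, which includes the TK1), so an \(N \times N\) block fits only for \(N \le 31\). How the original implementation handles larger windows such as 55\(\times \)55 (for example, by tiling the window over several blocks) is not stated.

```python
from typing import Tuple

MAX_THREADS_PER_BLOCK = 1024  # CUDA per-block limit, compute capability >= 2.0


def launch_geometry(width: int, height: int,
                    max_disp: int) -> Tuple[int, Tuple[int, int], int, bool]:
    """Return (blocks, (threads_x, threads_y), total threads, fits in one block)."""
    L = max(width, height)       # one block per position along the longer image side
    N = 2 * max_disp + 1         # odd window size centred on the predicted line
    total = L * N * N            # (position, cell) tests for one tracked line
    return L, (N, N), total, N * N <= MAX_THREADS_PER_BLOCK
```

For a 640\(\times \)480 frame and the largest 55\(\times \)55 window (maximum displacement 27), this gives 640 blocks and 1,936,000 candidate tests per tracked line, but 3025 threads per block would exceed the limit.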

In Hough space, a line can be parameterized by \(\rho \) and \(\theta \). The parameter \(\rho \) represents the distance between the line and the origin, and \(\theta \) represents the angle of the normal (the vector from the origin to the closest point on the line), so that every point \((x, y)\) on the line satisfies

\[ \rho = x\cos \theta + y\sin \theta . \qquad (1) \]

Each block counts the number of pixels on the line corresponding to the \(\rho \) and \(\theta \) assigned to the block according to Eq. (1).

The kernel function calculates the \(Y\)-coordinate from the \(X\)-coordinate and then checks whether the corresponding pixel in the image is an edge pixel. The accumulator is located in the global memory of the GPU so that all threads can access it. The binary image is copied to texture memory to optimize access speed, because it is read very intensively. Figure 4 illustrates the entire procedure of the proposed algorithm. The original image obtained from the UAV is shown on the far left of the upper row. The middle of the upper row shows the image converted to the HSV color space for extracting lines by color classification, and the thresholded binary image is shown on the right. The far left of the lower row plots the Hough space; the white points represent the most probable line positions. The clustering results, which make tracking robust, are shown in the middle of the lower row. Finally, the tracking results are shown on the right. We tracked five lines in each frame.
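The voting kernel can be emulated on the CPU to show what each block and thread computes. In this sketch, which is not the authors' kernel, the outer loop plays the role of the block index, the two inner loops play the role of the 2D thread index over the \(N \times N\) window, and each vote corresponds to an `atomicAdd` on the global-memory accumulator. The function name `vote_window` and the 1-degree \(\theta\) step are hypothetical, and the branch that solves the normal-form equation \(\rho = x\cos\theta + y\sin\theta\) for \(x\) on near-vertical lines is our assumption about why \(L\) is the larger image dimension. The text itself only describes computing \(Y\) from \(X\).

```python
import math
from typing import List


def vote_window(edges: List[List[int]], rho0: int, theta0_deg: int,
                max_disp: int) -> List[List[int]]:
    """CPU emulation of windowed Hough voting.

    edges[y][x] is 1 for edge pixels. acc[i][j] counts votes for
    rho = rho0 - max_disp + i (pixels) and theta = theta0_deg - max_disp + j (degrees).
    """
    h, w = len(edges), len(edges[0])
    L = max(w, h)
    n = 2 * max_disp + 1
    acc = [[0] * n for _ in range(n)]
    for t in range(L):                      # emulated block index
        for i in range(n):                  # emulated thread row: rho offset
            rho = rho0 - max_disp + i
            for j in range(n):              # emulated thread column: theta offset
                th = math.radians(theta0_deg - max_disp + j)
                c, s = math.cos(th), math.sin(th)
                if abs(s) >= abs(c):        # nearer horizontal: x = t, solve for y
                    x, y = t, math.floor((rho - t * c) / s + 0.5)
                else:                       # nearer vertical: y = t, solve for x
                    x, y = math.floor((rho - t * s) / c + 0.5), t
                if 0 <= x < w and 0 <= y < h and edges[y][x]:
                    acc[i][j] += 1          # atomicAdd(&acc[...], 1) on the GPU
    return acc
```

For a horizontal edge row at \(y = 5\) in a 20-pixel-wide image, the cell \(\rho = 5\), \(\theta = 90^\circ\) collects one vote per column, i.e. 20 votes. The branch keeps the divisor \(|\sin\theta|\) or \(|\cos\theta|\) at least \(1/\sqrt{2}\), so no division by zero can occur.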

4 Experimental results and performance analysis

4.1 Experimental results

The computers used for development and experiments were of two different platforms: an x86 machine with an Intel i5-2500 quad-core CPU running at 3.3 GHz and an NVIDIA Quadro 2000D for graphics acceleration, and the TK1, at the time the most recent small-scale SOC-embedded board, with a quad-core 2.3 GHz ARM Cortex-A15 CPU and the TK1 GPU. Both platforms thus used NVIDIA GPUs with CUDA support for acceleration. Mobile GPUs provide affordable performance under strict SWaP constraints. GPU acceleration is particularly well suited for data-parallel tasks such as image processing, signal processing, autonomy, and machine learning.

In general, low-resolution images are used for real-time machine vision because of the lack of computational resources and processing speed, and to satisfy real-time constraints. In our experiments, we used 320\(\times \)240 and 640\(\times \)480 resolutions recorded at 30 frames per second. The testing sets were \(320\times 240\) for the x86 CPU, 320\(\times \)240 for the x86 GPU, 640\(\times \)480 for the TK1 CPU, and 640\(\times \)480 for the TK1 GPU. We measured the line detection time, which corresponds to a full search of one frame, and the time elapsed in tracking the multiple lines extracted by the detection algorithm. Measurements were obtained while varying the search area (a square search block in Hough space from 3\(\times \)3 to 55\(\times \)55) and the number of clusters used to identify lines. The size of the search area is the key parameter for testing processing performance: a larger search area allows more robust tracking of moving lines but requires more computation. The number of clusters is the second major factor that determines the computational load.

We tested four different videos in the experiments. Video clip 1 was captured by our own UAV positioned over a white-lined tennis court and includes rotations and changes in altitude. Clip 2 is a landing sequence of a fixed-wing aircraft on a runway, with two noticeable lines and a slow pace. Clip 3 is our own video that includes rapid changes in both direction and perspective. Clip 4 is another runway sequence, captured with a virtual flight simulator, with three dominant lines and fast motion. Figure 5 shows the four test clips and the line tracking results. In each video, the detected lines were tracked until the end of the clip.

4.2 Performance analysis

Figure 6 shows the average detection time of each machine for the four clips. For a given machine type and resolution, the measured detection time was almost constant, because lines were searched over the entire spatial domain. For the 640\(\times \)480 version of clip 1, the average detection time was 990 ms on the x86 CPU, 245 ms on the x86 GPU, 3020 ms on the TK1 CPU, and 1150 ms on the TK1 GPU. Line detection was executed every 30 frames.

Figure 7 depicts the elapsed tracking time for one frame in each clip. A larger search window and a larger number of clusters are the major causes of performance degradation. Table 1 shows the relation between processing type and the number of clusters. As mentioned in the previous section, the number of clusters used in the detection algorithm strongly influences processing speed. Therefore, the results in Table 1 indicate that the search window size and the number of clusters determine the tracking frame rate. Figure 8 shows the frame rate for detection and tracking of the 320\(\times \)240 version of clip 1. As the search area increases, the tracking time on the CPU increases gradually, whereas the time on the GPU does not increase, owing to the parallel processing capability of the GPU. In this graph, the x86 CPU outperforms the TK1 GPU for small search windows under 35\(\times \)35. This stems from a characteristic of GPU execution: input and output data must be copied between host and device memory, and the bandwidth and latency of the system bus can reduce performance. In practice, small search windows may miss lines during tracking, so the lower GPU performance for small windows is not a critical drawback. The TK1 GPU outperforms the x86 CPU from a search area of 47\(\times \)47 upward. These results indicate that the TK1 GPU can process almost 40 frames per second including detection. In other words, onboard real-time tracking is feasible on this embedded system. When a UAV is controlled by visual information, higher frame rates help provide stable navigation by reducing the timing mismatch with other sensors such as GPS, gyroscopes, and the IMU. Resolution is the main factor influencing detection time; the rate of increase across machine types shows a similar trend for the 320\(\times \)240 clip.

Figure 9 shows the frame rate for detection and tracking of the 640\(\times \)480 version of clip 1. These gains follow from the CUDA architecture: parallel threads can simultaneously process each separate region of Hough space. The experimental results show that these data-parallel tasks are well suited for GPU acceleration. Furthermore, in some cases the TK1 GPU shows better performance than the x86 i5 processor.

Table 2 shows the tracking frame rate for each machine at each resolution. The tracking time is obtained by subtracting the detection time from the total elapsed time. In the mobile GPU entries (bold and bold italic), the GPU achieved acceleration relative to the CPU results. Furthermore, the tracking time on the GPU was less influenced by the search window size, because each search window is processed in parallel by separate processing units. For the maximum window size, the GPU was far faster than the CPU under the same conditions.
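Because detection runs only once every 30 frames, the overall frame rate depends on both the detection time and the per-frame tracking time. The sketch below shows this amortization under the assumption that a detection frame does not additionally run tracking; the function name `effective_fps` and the example timings are hypothetical and are not measurements from this paper.

```python
def effective_fps(t_detect_ms: float, t_track_ms: float, period: int = 30) -> float:
    """Average frame rate when 1 frame in `period` runs detection and the rest run tracking."""
    total_ms = t_detect_ms + (period - 1) * t_track_ms  # time for one detection cycle
    return 1000.0 * period / total_ms
```

With a hypothetical 100 ms detection and 20 ms tracking, one 30-frame cycle takes 100 + 29 × 20 = 680 ms, or about 44.1 frames per second. Because tracking runs on 29 of every 30 frames, the per-frame tracking time usually dominates the overall rate.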

5 Conclusions

In this paper, we presented a fast and robust detection and tracking algorithm, executed on a mobile GPU, to facilitate intelligent UAV flight. In the past, there were several obstacles to real-time processing on small-scale, low-power embedded machines. A computing platform that combines low power consumption with high performance helps to overcome these obstacles. Much of the intermediate processing in the Hough transform and the clustering is well suited for GPU-based implementation. The separate search spaces can be computed independently and simultaneously, which makes them appropriate for parallel implementation. We obtained about a five-times speedup compared with the ARM quad-core Cortex-A15 and, under certain conditions, even faster performance than the x86 quad-core CPU. The experimental results are promising and encourage the development of onboard real-time image processing for intelligent UAVs.

References

van den Braak GJ, Nugteren C, Mesman B, Corporaal H (2011) Fast Hough transform on GPUs: exploration of algorithm trade-offs. In: Advanced concepts for intelligent vision systems. Springer, Berlin Heidelberg

Campoy P, Correa J, Mondragón I, Martínez C, Olivares M, Mejías L, Artieda J (2009) Computer vision onboard UAVs for civilian tasks. J Intell Robotic Syst 54(1–3):105–135

Chen S, Jiang H (2011) Accelerating the Hough transform with CUDA on graphics processing units. In: Department of Computer Science, Arkansas State University

Duda R, Hart P (1972) Use of the Hough transformation to detect lines and curves in pictures. Commun ACM 15(1):11–15

Engel J, Sturm J, Cremers D (2012) Camera-based navigation of a low-cost quadrocopter. In: Intelligent robots and systems (IROS), 2012 IEEE/RSJ international conference on, pp 2815–2821

Feng L, Xiangxu D, Chen BM, Lee Kai-Yew LT (2012) A robust real-time embedded vision system on an unmanned rotorcraft for ground target following. Ind Electron IEEE Trans 59(2):1038–1049

Gómez-Luna J, González-Linares J, Benavides J, Zapata E, Guil N (2011) Parallelization of the generalized Hough transform on GPU. In: Computer Architecture and Electronics Department, University of Córdoba, Spain

Kaiser M, Gans N, Dixon W (2010) Vision-based estimation for guidance, navigation, and control of an aerial vehicle. Aerosp Electron Syst IEEE Trans 46(3):1064–1077

Kalman RE (1960) A new approach to linear filtering and prediction problems. J Fluids Eng 82(1):35–45

Lange S, Sunderhauf N, Protzel P (2009) A vision based onboard approach for landing and position control of an autonomous multirotor UAV in GPS-denied environments. In: Advanced robotics, ICAR 2009, international conference on, pp 22–26

Mejias L, McNamara S, Lai J, Ford J (2010) Vision-based detection and tracking of aerial targets for UAV collision avoidance. In: Intelligent robots and systems (IROS), 2010 IEEE/RSJ international conference on, pp 87–92

Mills S, Pridmore T, Hills M (2003) Tracking in a Hough space with the extended Kalman filter. In: Proceedings of the British machine vision conference. BMVA Press, pp 1–10

Olivares-Mendez M (2010) Fuzzy controller for UAV-landing task using 3D-position visual estimation. In: Comput. Vision Group, Univ. Politec. de Madrid, Madrid, Barcelona, Spain, pp 1–8

Oppenheim A, Schafer R, Buck J (1989) Discrete-time signal processing. Prentice-hall, Englewood Cliffs

Otsu N (1979) A threshold selection method from gray-level histogram. IEEE Trans Syst Man Cybern SMC–9(1):62–66

Sural S, Qian G, Pramanik S (2002) Segmentation and histogram generation using the HSV color space for image retrieval. In: Image processing proceedings, international conference on, IEEE, Vol. 2, pp II-589

Tyan V, Jeon D (2014) Fast multi-line detection and tracking with CUDA for vision-based UAV autopilot. In: IMIS 2014. United Kingdom

Zhihai H, Iyer R, Chandler P (2006) Vision-based UAV flight control and obstacle avoidance. In: American control conference, pp 14–16

Acknowledgments

This research was supported by Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology. (Grant Number: 2012006817).


Additional information

Communicated by A. Jara, M.R. Ogiela, I. You and F.-Y. Leu.


About this article

Cite this article

Jeon, D., Kim, DH., Ha, YG. et al. Image processing acceleration for intelligent unmanned aerial vehicle on mobile GPU. Soft Comput 20, 1713–1720 (2016). https://doi.org/10.1007/s00500-015-1656-y


DOI: https://doi.org/10.1007/s00500-015-1656-y