Abstract

We investigate the minimum cost of a wide class of combinatorial optimization problems over random bipartite geometric graphs in \(\mathbb {R}^d\) where the edge cost between two points is given by a pth power of their Euclidean distance. This includes e.g. the travelling salesperson problem and the bounded degree minimum spanning tree. We establish in particular almost sure convergence, as n grows, of a suitable renormalization of the random minimum cost, if the points are uniformly distributed and \(d \ge 3, 1\le p<d\). Previous results were limited to the range \(p<d/2\). Our proofs are based on subadditivity methods and build upon new bounds for random instances of the Euclidean bipartite matching problem, obtained through its optimal transport relaxation and functional analytic techniques.

1 Introduction

Combinatorial optimization problems on graphs are widespread in operations research, with applications in planning and logistics. Their study is strongly related to algorithm theory and computational complexity theory. The most representative example of such discrete variational problems is the travelling salesperson problem (TSP) [45]: given a set of cities and distances between each pair of them, one asks for the shortest route that visits each city exactly once and returns to the origin city (i.e. a tour). Like many related combinatorial problems and despite its straightforward formulation, the TSP belongs to the class of NP-hard problems. In practical terms, computing an exact solution becomes computationally intractable, as known algorithms perform exponentially many steps in the number of cities.

In real-world situations, there is quite often the need to solve many similar instances of a given combinatorial optimization problem. In that case, additional structure, including geometry and randomness, can be exploited. The Euclidean formulation of the TSP, i.e., when cities are points in \(\mathbb {R}^d\) and distances are given by the Euclidean distance, is still NP-hard [40], but Karp [33] observed that solutions to random instances, i.e., when cities are sampled independently and uniformly, can be efficiently approximated via a partitioning scheme. His proof relies upon the seminal work by Beardwood, Halton and Hammersley [7], where precise asymptotics for optimal costs of a random instance of the problem were first established: given i.i.d. points \((X_i)_{i=1}^n\) distributed according to a probability density \(\rho \) on \(\mathbb {R}^d\), the length \( \mathcal {C}_{\textsf{TSP}}((X_i)_{i=1}^n)\) of the (random) solution to the TSP cycling through such points satisfies the \(\mathbb {P}\)-a.s. limit

$$\begin{aligned} \lim _{n \rightarrow \infty } n^{\frac{1}{d}-1}\, \mathcal {C}_{\textsf{TSP}}\left( (X_i)_{i=1}^n \right) = \beta _{{\text {BHH}}} \int _{\mathbb {R}^d} \rho ^{1-\frac{1}{d}}, \end{aligned}$$(1.1)

where \(\beta _{{\text {BHH}}} = \beta _{{\text {BHH}}}(d) \in (0, \infty )\) is a constant depending on the dimension d only. The scaling \(n^{1-1/d}\) is intuitively explained by the fact that the n cities are connected through paths of typical length \(n^{-1/d}\) (as if they were on a regular grid).
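The grid heuristic behind the scaling \(n^{1-1/d}\) can be checked by hand in dimension \(d=2\). The following sketch is an illustration only: the function name `snake_tour_length` and the choice of a row-by-row tour are assumptions made here and are not taken from [7]. It places \(n=m^2\) points on a regular grid in the unit square and measures a closed tour that sweeps the rows back and forth. The tour is feasible but is not claimed to be optimal.

```python
import math

def snake_tour_length(m):
    """Length of a closed row-by-row ("snake") tour through the m*m grid
    points ((i + 0.5) / m, (j + 0.5) / m) of the unit square."""
    points = []
    for row in range(m):
        # even rows are swept left to right, odd rows right to left
        cols = range(m) if row % 2 == 0 else range(m - 1, -1, -1)
        for col in cols:
            points.append(((col + 0.5) / m, (row + 0.5) / m))
    length = 0.0
    for k in range(len(points)):
        x0, y0 = points[k]
        x1, y1 = points[(k + 1) % len(points)]  # wrap around to close the tour
        length += math.hypot(x1 - x0, y1 - y0)
    return length

for m in (10, 20, 40):
    n = m * m
    # ratio between the tour length and n^{1 - 1/d} with d = 2
    print(n, snake_tour_length(m) / n ** 0.5)
```

For even \(m\) the tour consists of \(m^2-1\) steps of length \(1/m = n^{-1/2}\) plus one closing edge of length \((m-1)/m\), so its length is \(m+1-2/m\) and the printed ratio stays close to 1.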

Building upon these ideas, several authors [39, 46, 47, 53] contributed towards establishing a general theory to obtain limit results of BHH-type, i.e., as in (1.1), for a wide class of random Euclidean combinatorial optimization problems. The theory allows also for more general weights than the Euclidean length, including p-th powers of the Euclidean distance, a variant often motivated by modelling needs. If \(0<p<d\), with a minimal modification of the techniques one obtains BHH-type results as in (1.1), with the scaling replaced by \(n^{1-p/d}\), the constant \(\beta _{{\text {BHH}}}\) now depending on p, d and the specific combinatorial optimization problem, and the integrand \(\rho ^{1-1/d}\) replaced by \(\rho ^{1-p/d}\). For \(p \ge d\), the situation becomes subtler and (1.1) is known for the TSP only if \(p=d\), see [52] and [53, Section 4.3].

Despite the wide applicability of this theory, several classical problems, such as those formulated over two random sets of points, are not covered and require different mathematical tools. The Euclidean assignment problem, also called bipartite matching, is certainly the most representative among these: given two sets of n points \((x_i)_{i=1}^n\), \((y_j)_{j=1}^n \subseteq \mathbb {R}^d\), one defines the matching cost functional as

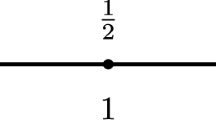

where the minimum is taken among all the permutations \(\sigma \) over n elements. This is often interpreted in terms of optimal planning for the execution of a set of jobs at the positions \(y_j\), to be assigned to a set of workers at the positions \(x_i\). Although the assignment problem belongs to the P complexity class, i.e., an optimal \(\sigma \) can be found in a polynomial number of steps with respect to n [38], the analysis of random instances still shows some interesting behavior in low dimensions. Indeed, if \((X_i)_{i=1}^n\), \((Y_j)_{j=1}^n\) are i.i.d. and uniformly distributed on the cube \((0,1)^d\), it is known [1, 21, 22, 48] that

In particular, for \(d\in \left\{ 1,2 \right\} \) the cost is asymptotically larger than the heuristically motivated \(n^{1-1/d}\). This exceptional scaling is intuitively due to local fluctuations of the distributions of the two families of points.
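The matching cost functional, i.e. the minimum over permutations \(\sigma \) of the sum of the p-th powers \(|x_i - y_{\sigma (i)}|^p\), can be evaluated directly on tiny instances. The sketch below is a brute-force illustration under the assumption that n is very small: it enumerates all \(n!\) permutations, whereas in practice one would use a polynomial-time algorithm such as the Hungarian method. The function name `matching_cost` is hypothetical.

```python
from itertools import permutations
import math

def matching_cost(xs, ys, p=1.0):
    """Brute-force bipartite matching cost: minimum over permutations sigma
    of sum_i |x_i - y_sigma(i)|**p. Only feasible for very small n."""
    assert len(xs) == len(ys)
    n = len(xs)
    best = math.inf
    for sigma in permutations(range(n)):
        cost = sum(math.dist(xs[i], ys[sigma[i]]) ** p for i in range(n))
        best = min(best, cost)
    return best

xs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
ys = [(0.0, 0.1), (1.0, 0.2), (0.3, 1.0)]
print(matching_cost(xs, ys, p=1))  # the identity matching is optimal here
print(matching_cost(xs, ys, p=2))
```

In this example each \(y_j\) is a small perturbation of \(x_j\), so the optimal permutation is the identity and the two costs are approximately 0.6 and 0.14.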

Inspired by the combinatorial approach in [12] for the random Euclidean bipartite matching problem in dimension \(d\ge 3\), Barthe and Bordenave [6] first proposed a general theory to establish results of BHH-type (1.1) for a wide class of random Euclidean combinatorial optimization problems over two sets of n points. Let us point out that the equality in (1.1) is actually only proven for uniform measures, while in general only upper and lower bounds (which are conjectured to coincide) are known. In the case of p-th power weighted distances, the theory developed in [6] applies in the range \(0<p<d/2\), which appears quite naturally in their arguments. The threshold \(p=d/2\) is not merely technical, since in fact (1.1) cannot hold without additional hypotheses on the density \(\rho \). For example, because of fluctuations, a necessary condition is connectedness of the support of \(\rho \). Nevertheless, in the case of the Euclidean bipartite matching problem, it was recently proved [25] that if \(\rho \) is the uniform measure on the unit cube with \(d \ge 3\) and \(p \ge 1\), then

Here \(\beta _{\textsf{M}} \in (0, \infty )\) depends on d and p only. The proof is a combination of classical subadditivity arguments—that originate from [7]—and tools from the theory of optimal transport. In particular, the defect in subadditivity is estimated using the connection between Wasserstein distances and negative Sobolev norms. In this context, the use of this type of estimates can be traced back to a recent PDE ansatz proposed in statistical physics [14]. Since then, it has been successfully used in the mathematical literature [3, 10, 16, 23, 24, 26, 27, 35, 37], even beyond the case of i.i.d. points [11, 28, 30, 51]. We refer to [8, 9, 15] for further statistical physics literature. In fact, the technique in [25] is quite robust and coarser estimates can be used, avoiding the use of PDEs. Still, the results apply only for the Euclidean bipartite matching problem thanks to its connection with optimal transport. The main purpose of this paper is to show that for a quite general class of bipartite combinatorial problems it is actually possible to rely on the good bounds for the matching problem to obtain the analog of (1.1) provided \(p<d\). This is inspired by [13] where a similar idea is used for the TSP and the 2-factor problem when \(p=d=2\).

As alluded to, an important open question left from the theory developed in [6] (see also [20]) is the existence of a limit in (1.1) for general densities. The only result in this direction is [4], which established for \(p=d=2\) that the limit of the expected cost (suitably renormalized) exists if \(\Omega \) is a bounded connected open set, with Lipschitz boundary and \(\rho \) is Hölder continuous and uniformly strictly positive and bounded from above on \(\Omega \). This settled a conjecture from [9] and, more importantly for our purposes, combined subadditivity and PDE arguments with a Whitney-type decomposition to take into account the structure of \(\Omega \) and its boundary. While we do not address this question here, some of the ideas from [4] are further developed in this work.

1.1 Main result

Our aim is to establish limit results for the cost of a wide class of Euclidean combinatorial optimization problems of two random point sets, in the range \(d/2 \le p <d\) for any dimension \(d \ge 3\). This overcomes the limitations of [6], showing that in higher dimensions bipartite problems behave much more similarly to non-bipartite ones. Our general theorem can be stated as follows (a precise description of all the assumptions and notation is given in Sect. 2).

Theorem 1.1

Let \(d \ge 3\), \(p\in [1,d)\) and let \(\textsf{P}= (\mathcal {F}_{n,n})_{n \in \mathbb {N}}\) be a combinatorial optimization problem over complete bipartite graphs such that assumptions A1, A2, A3, A4 and A5 hold and write \(\mathcal {C}_{\textsf{P}}^p( (x_i)_{i=1}^n, (y_j)_{j=1}^n)\) for the optimal cost of the problem over the two sets of n points \((x_i)_{i=1}^n\), \((y_j)_{j=1}^n \subseteq \mathbb {R}^d\), with respect to the Euclidean distance raised to the power p. Then, there exists \(\beta _{\textsf{P}}\in (0, \infty )\) depending on p, d and \(\textsf{P}\) only such that the following hold.

Let \(\Omega \subseteq \mathbb {R}^d\) be a bounded open set and assume that it is either convex or has \(C^2\) boundary. Let \(\rho \) be a Hölder continuous probability density on \(\Omega \), uniformly strictly positive and bounded from above. Given i.i.d. random variables \((X_i)_{i=1}^\infty \), \((Y_j)_{j=1}^\infty \) with common law \(\rho \) we have \(\mathbb {P}\)-a.s. that

Moreover, if \(\rho \) is the uniform density and \(\Omega \) is either a cube or has \(C^2\) boundary, then the above is a \(\mathbb {P}\)-a.s. limit and equality holds.

Our assumptions A1, A2, A3, A4 and in particular A5 are slightly stronger than those introduced in [6, Section 5.3], but it is not difficult to show that all the specific examples discussed in [6] satisfy them. In particular, our results apply to the TSP, the minimum weight connected k-factor problem and the k-bounded degree minimum spanning tree. It is thus fair to say that for compactly supported densities, Theorem 1.1 extends the main results in [6] in the range \(d/2 \le p<d\) for any \(d \ge 3\) and for this reason we do not consider the case \(p<1\).

Remark 1.2

Let us point out that (1.3) also holds in expectation (see Proposition 5.1).

Remark 1.3

Arguing as in [6] (see also [4]) and considering a “boundary” variant of \(\textsf{P}\) it should be possible to adapt the proof of Theorem 1.1 to show that there exists \(\beta _{\textsf{P}}^b>0\) such that

However since we are currently not able to prove that \(\beta _{\textsf{P}}^b=\beta _{\textsf{P}}\) we decided to leave it aside.

Remark 1.4

In fact, our result applies, at least in expectation, to any \(p\)-homogeneous bipartite functional \(\mathcal {C}\) satisfying the subadditivity inequality (5.2) (which is similar to the condition \((\mathcal {S}_p)\) from [6]) and the growth condition (5.3) (somewhat reminiscent of condition \((\mathcal {R}_p)\) from [6]). See Remark 5.2.

Of course, our result applies in particular for the Euclidean assignment problem.

Corollary 1.5

For \(d \ge 3\), \(p\in [1,d)\), let \(\Omega \subseteq \mathbb {R}^d\) be a cube or a bounded connected open set with \(C^2\) boundary and let \(\rho \) be a Hölder continuous probability density on \(\Omega \), uniformly strictly positive and bounded from above. Then, given i.i.d. \((X_i)_{i=1}^\infty \), \((Y_j)_{j=1}^\infty \) with common law \(\rho \), we have \(\mathbb {P}\)-a.s. that

with \(\beta _{\textsf{M}}\) as in (1.2). Moreover, if \(\rho \) is the uniform density and \(\Omega \) has \(C^2\) boundary, then the above is a \(\mathbb {P}\)-a.s. limit and equality holds.

Remark 1.6

In the case of the matching problem, combining ideas from this paper and [25] the conclusion of Corollary 1.5 could be extended to every \(p\ge 1\) (at least in expectation).

1.2 Comments on the proof technique

Our proof leverages the techniques developed for the bipartite matching problem, in particular [4, 25], to carefully estimate the defects in a geometric subadditivity argument. Comparing the approach in [6], which works if \(p<d/2\), with that in [25], which holds instead for any p, a crucial difference is that the errors due to local oscillations in the two distributions of points are mitigated in the latter by spreading them evenly across all the points. This is possible since the optimal transport relaxation allows for general couplings as well as continuous densities, rather than discrete matchings only.

The overall strategy is thus to find a suitable replacement for such operation in the purely combinatorial setting. The starting point is Proposition 3.7 where we prove a subadditivity inequality. The problem is then to estimate the defect in subadditivity. This is achieved by combining the following three key observations.

The first one is to bound from above the cost of the problem over any two point sets \((x_i)_{i=1}^n\), \((y_j)_{j=1}^n\) by the sum of a term of order \(n^{1-p/d}\) plus the bipartite matching cost between the two point sets. This is stated as an assumption (A5), but can be easily checked on many specific problems (Lemma 3.10): being an upper bound, it usually suffices to combine an optimal matching with the solution to an additional non-bipartite combinatorial optimization problem, such as the TSP, to build a feasible solution. This approach was first successfully used in [13] (see also [4]) for the random bipartite TSP in the case \(p=d=2\), where one can simply argue that the main contribution comes from the logarithmic corrections in the matching cost.
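The construction behind this first observation can be illustrated in the simplest case \(p=1\) for the bipartite TSP. The sketch below is an illustration under stated assumptions and is not the argument of Lemma 3.10: the helper names are hypothetical, the points \(x_i\) are assumed to be listed in the order of some (non-bipartite) tour, and `sigma` is any matching. Alternating matching edges with edges to the next \(x\) gives a feasible bipartite tour whose cost, by the triangle inequality, is at most the tour length plus twice the matching length.

```python
import math

def tour_length(xs):
    """Length of the closed tour visiting xs in the given order."""
    n = len(xs)
    return sum(math.dist(xs[i], xs[(i + 1) % n]) for i in range(n))

def matching_length(xs, ys, sigma):
    """Total length of the matching x_i -> y_sigma(i)."""
    return sum(math.dist(xs[i], ys[sigma[i]]) for i in range(len(xs)))

def bipartite_tour_cost(xs, ys, sigma):
    """Cost (p = 1) of the alternating cycle
    x_0 -> y_sigma(0) -> x_1 -> y_sigma(1) -> ... -> x_0."""
    n = len(xs)
    total = 0.0
    for i in range(n):
        y = ys[sigma[i]]
        total += math.dist(xs[i], y)            # matching edge
        total += math.dist(y, xs[(i + 1) % n])  # edge to the next x in the tour
    return total

xs = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
ys = [(0.1, 0.0), (1.0, 0.2), (0.7, 1.0), (0.0, 0.6)]
sigma = [0, 1, 2, 3]
print(bipartite_tour_cost(xs, ys, sigma))
print(tour_length(xs) + 2 * matching_length(xs, ys, sigma))  # upper bound
```

For \(p>1\) the same construction works, at the price of a constant coming from the elementary inequality \((a+b)^p \le 2^{p-1}(a^p+b^p)\).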

The second key observation is that for point sets mostly made of i.i.d. points (while much less is assumed on the remaining ones), it is still possible to obtain good bounds for the matching cost. We refer to Sect. 6 for the precise statements, but the underlying idea is strongly related to bounds for the optimal transport cost in terms of the negative Sobolev norms—thus relying again on the PDE ansatz originally introduced in the statistical physics literature.

The third observation is that, in order to ensure that a small fraction of i.i.d. uniformly distributed points can indeed be found in the subadditivity defect terms, it is enough to keep them out of the optimization procedure on the smaller scales. As usual with those arguments, the proof of existence of the limit is performed first on the Poisson version of the random problem, so, to retain a fraction of points, we perform a thinning procedure.
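The thinning operation mentioned here can be sketched as follows. This is only an illustration of the standard independent thinning of a Poisson point process on the unit cube, not the specific procedure used in the proofs. The helper names, the intensity 1000 and the retention probability 0.05 are assumptions chosen for the example.

```python
import random

def poisson_sample(mean, rng):
    """Sample a Poisson(mean) integer by counting unit-rate exponential
    arrivals in [0, mean] (adequate for moderate values of mean)."""
    count, t = 0, rng.expovariate(1.0)
    while t <= mean:
        count += 1
        t += rng.expovariate(1.0)
    return count

def poisson_points(intensity, d, rng):
    """Poisson point process with the given intensity on the unit cube (0,1)^d."""
    n = poisson_sample(intensity, rng)
    return [tuple(rng.random() for _ in range(d)) for _ in range(n)]

def thin(points, keep_prob, rng):
    """Independent thinning: each point is kept with probability keep_prob."""
    kept, rest = [], []
    for pt in points:
        (kept if rng.random() < keep_prob else rest).append(pt)
    return kept, rest

rng = random.Random(0)
points = poisson_points(1000, 3, rng)
reserved, used = thin(points, 0.05, rng)
print(len(points), len(reserved), len(used))
```

By the thinning property of Poisson processes, the two resulting families are independent Poisson point processes with intensities \(0.05 \cdot 1000\) and \(0.95 \cdot 1000\), which is what makes it possible to set a small fraction of points aside without losing independence.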

Besides these main ideas, plenty of technical modifications with respect to the arguments in [6] and [4, 25] are required, e.g. in order to establish improved subadditivity inequalities (Proposition 3.7) and to extend the Whitney-type decomposition argument from [4] to \(p\ne 2\).

1.3 Further questions and conjectures

Our results raise several questions about costs and properties of solutions to Euclidean random combinatorial optimization problems over two point sets. We list here a few which we believe are worth exploring.

1. Existence of a limit in (1.3) for non-uniform densities is rather easy to conjecture, but so far our techniques do not improve upon [6], hence the problem remains largely open.

2. Our techniques break down if \(p \ge d\) for many reasons. In particular, the idea of leaving out a small fraction of i.i.d. points to improve the estimate of the defect terms seems to fail. It is however natural to conjecture that Theorem 1.1 should hold also in that range.

3. In this work we considered only the case of compactly supported densities \(\rho \). It would be interesting to investigate the case where the support is \(\mathbb {R}^d\). To the best of our knowledge, the only results available so far in this direction are [35, 37] where the correct rates are established for the Gaussian density in the case of the matching problem.

4. The assumptions in [6] are slightly different from ours, although the specific problems considered therein satisfy both. It would be interesting to find examples which satisfy only one set of these, or possibly to simplify our assumptions even further.

5. Many problems, such as the bounded degree minimum spanning tree, but also the bipartite matching problem itself, can be naturally formulated also for two families of points with different numbers of elements: it could be of interest to investigate limit results also in those cases.

6. The cases \(d \in \left\{ 1,2 \right\} \) are necessarily excluded by our analysis, since subadditivity arguments do not apply already for the random bipartite matching problem. It is however already an open question whether the additional logarithmic correction indeed appears in the asymptotic rates for many other problems. As an example, we mention that for the Euclidean minimum spanning tree over two random point sets (without any uniform bound on the degree) no logarithmic corrections appear [19], but the maximum degree is unbounded, hence it is not covered by our results.

7. In the deterministic literature, for the TSP and other NP-hard Euclidean combinatorial optimization problems, polynomial time approximation schemes are known [5] for any (fixed) dimension d, as the number of points grows. Can our approach lead to similar schemes for problems on two families of points, possibly under some mild regularity assumption on their spatial distributions?

1.4 Structure of the paper

In Sect. 2 we first introduce some general notation. We then discuss Whitney-type decompositions and Sobolev spaces, and recall useful known facts on the optimal transport problem, together with some possibly novel ones (Proposition 2.9). We close the section with a variant of the standard subadditivity (Fekete-type) arguments, suited for our purposes, together with some simple concentration inequalities. Section 3 is devoted to the combinatorial optimization problems we consider, discussing in particular the main assumptions that we require and some useful consequences. In Sect. 4 we establish a variant of our main result in the case of Poisson point processes and in Sect. 5 we use it to deduce Theorem 1.1. These two sections in fact rely upon the novel bounds for the Euclidean assignment problem that we finally establish in Sect. 6.

2 Notation and preliminary results

2.1 General notation

Given \(n \in \mathbb {N}\), we write \([n] = \left\{ 1, \ldots , n \right\} \) and \([n]_1 = \left\{ (1,i) \right\} _{i=1}^n\), \([n]_2 = \left\{ (2,i) \right\} _{i=1}^n\), which conveniently define two disjoint copies of [n]. Given a finite set A, we write |A| for the number of its elements, while, if \(A \subseteq \mathbb {R}^d\) is infinite, |A| denotes its Lebesgue measure.

Given a metric space \((\Omega , \textsf{d})\), \(x \in \Omega \), \(A\subseteq \Omega \), we write \(\textsf{d}(x, A) = \min _{y \in A}\left\{ \textsf{d}(x,y) \right\} \) and \({\text {diam}}(A) = \sup _{x,y \in A} \textsf{d}(x,y)\). We endow every set \(\Omega \subseteq \mathbb {R}^d\) with the Euclidean distance. A partition \(\left\{ \Omega _k \right\} _{k=1}^K\) of a set \(\Omega \) is always intended up to a set of Lebesgue measure zero. A rectangle \(R \subseteq \mathbb {R}^d\) is a subset of the form \(R = \prod _{i=1}^d (x_i,x_i+L_i)\), and is said to be of moderate aspect ratio if for every i, j, \(L_i/L_j\le 2\). If \(L_i= L\) for every i, then \(R = Q\) is a cube of side length L. We write \(Q_L = (0,L)^d\). We write \(I_\Omega \) for the indicator function of a set \(\Omega \).

2.2 Families of points

Given a set \(\Omega \), we consider finite ordered families of points \(\textbf{x}= \left( x_i \right) _{i=1}^n \subseteq \Omega \), with \(n \in \mathbb {N}\), letting \(\textbf{x}= \emptyset \) if \(n=0\). For many purposes the order will not be relevant, but working with ordered families allows, e.g., for repetitions (which will be probabilistically negligible anyway). Given a family \(\textbf{x}\subseteq \mathbb {R}^d\), we write \(\mu ^{\textbf{x}} = \sum _{i=1}^n \delta _{x_i}\) for the associated empirical measure and, for every (Borel) \(\Omega \subseteq \mathbb {R}^d\), we let \(\textbf{x}(\Omega ) = \mu ^{\textbf{x}}(\Omega )\). In the special case \(\Omega = \mathbb {R}^d\), we simply write \(|\textbf{x}| = \textbf{x}(\mathbb {R}^d) = \mu ^{\textbf{x}}(\mathbb {R}^d)\) for the total number of points (counted with multiplicity). We also write \(\textbf{x}_\Omega \) for its restriction to \(\Omega \), i.e., the family of all points \(x_i \in \Omega \), so that \(\textbf{x}= \textbf{x}_{\Omega }\) if \(\textbf{x}\subseteq \Omega \) (conventionally, we re-index it over \(i=1, \ldots , \textbf{x}(\Omega )\) with the order inherited from that in \(\textbf{x}\)). Given \(\textbf{x}= \left( x_i \right) _{i=1}^n\), \(\textbf{y}= \left( y_j \right) _{j=1}^m\subseteq \mathbb {R}^d\), their union is \(\textbf{x}\cup \textbf{y}= (x_1, \ldots , x_n, y_1, \ldots , y_m)\). Strictly speaking, the union should be called concatenation, since the operation is in general not commutative.
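The notation for families of points translates directly into operations on ordered tuples. The following sketch uses hypothetical helper names (`count_in`, `restrict`, `union`) and represents a set \(\Omega \) by a membership predicate, which is an assumption made for illustration.

```python
def count_in(family, contains):
    """x(Omega): number of points of the family in Omega, with multiplicity."""
    return sum(1 for pt in family if contains(pt))

def restrict(family, contains):
    """x_Omega: the points lying in Omega, in the order inherited from x."""
    return tuple(pt for pt in family if contains(pt))

def union(x, y):
    """Union of two families as defined in the text: concatenation,
    hence sensitive to the order of the arguments."""
    return tuple(x) + tuple(y)

def in_unit_square(pt):
    return 0 < pt[0] < 1 and 0 < pt[1] < 1

x = ((0.2, 0.3), (1.5, 0.5), (0.2, 0.3))   # a repeated point is allowed
y = ((0.7, 0.7),)
print(count_in(x, in_unit_square))     # 2
print(restrict(x, in_unit_square))     # ((0.2, 0.3), (0.2, 0.3))
print(union(x, y) == union(y, x))      # False
```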

2.3 Whitney partitions

We recall the following partitioning result [4, Lemma 5.1].

Lemma 2.1

Let \(\Omega \subset \mathbb {R}^d\) be a bounded domain with Lipschitz boundary and let \(\mathcal {Q}= \{Q_i\}_i\) be a Whitney partition of \(\Omega \). Then, for every \(\delta >0\) sufficiently small, letting \(\mathcal {Q}_\delta =\{Q_i \,:\, {\text {diam}}(Q_i) \ge \delta \}\), there exists a finite family \(\mathcal {R}_\delta =\{\Omega _j\}_j\) of disjoint open sets such that:

(i) \((\Omega _k)_{k=1}^K = \mathcal {Q}_\delta \cup \mathcal {R}_\delta \) is a partition of \(\Omega \),

(ii) \( |\Omega _k| \sim {\text {diam}}(\Omega _k)^d\) for every \(k=1, \ldots , K\),

(iii) if \(\Omega _k \in \mathcal {Q}_\delta \), then \({\text {diam}}(\Omega _k) \sim \textsf{d}(x, \Omega ^c)\) for every \(x \in \Omega _k\),

(iv) if \(\Omega _k \in \mathcal {R}_\delta \), then \({\text {diam}}(\Omega _k)\sim \delta \) and \(\textsf{d}(x, \Omega ^c) \lesssim \delta \), for every \(x \in \Omega _k\).

Here all the implicit constants depend only on the initial partition \(\mathcal {Q}\) (and not on \(\delta \)).
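To make the structure of such partitions concrete, here is a minimal sketch for the unit square \(\Omega = (0,1)^2\). It is an illustrative construction under assumptions made here and not the one of [4, Lemma 5.1]: layer \(\ell \ge 2\) consists of the dyadic squares of side \(2^{-\ell }\) contained in \([2^{-\ell }, 1-2^{-\ell }]^2\) but not in \([2^{-\ell +1}, 1-2^{-\ell +1}]^2\). The boundary sets \(\mathcal {R}_\delta \) (pieces of size comparable to \(\delta \) covering the remaining strip) are not built.

```python
def whitney_layers(max_level):
    """Whitney-type dyadic decomposition of the unit square (0,1)^2.

    Returns a dict: level l -> list of squares (x, y, side), where (x, y)
    is the lower-left corner and side = 2**-l.
    """
    layers = {}
    for level in range(2, max_level + 1):
        m = 2 ** level
        side = 1.0 / m
        cubes = []
        for i in range(1, m - 1):
            for j in range(1, m - 1):
                # indices 2..m-3 in both directions form the inner square,
                # which is covered by the coarser layers
                in_inner = 2 <= i <= m - 3 and 2 <= j <= m - 3
                if not in_inner:
                    cubes.append((i * side, j * side, side))
        layers[level] = cubes
    return layers

layers = whitney_layers(6)
for level, cubes in layers.items():
    side = 2.0 ** -level
    # smallest distance from a square of this layer to the boundary of (0,1)^2
    dist = min(min(x, y, 1 - x - side, 1 - y - side) for x, y, _ in cubes)
    print(level, len(cubes), dist / side)
```

Layer \(\ell \) contains \(4\cdot 2^{\ell } - 12\) squares, of order \(2^{\ell (d-1)}\) with \(d=2\), and each of them lies at distance exactly \(2^{-\ell }\) from the boundary, in line with properties (ii) and (iii).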

For later use, we collect some useful bounds related to these partitions.

Lemma 2.2

Let \(\Omega \subset \mathbb {R}^d\) be a bounded domain with Lipschitz boundary and let \(\mathcal {Q}= \{Q_i\}_i\) be a Whitney partition of \(\Omega \). Then, for every \(\delta >0\) sufficiently small, letting \((\Omega _k)_{k=1}^K = \mathcal {Q}_\delta \cup \mathcal {R}_\delta \) as in Lemma 2.1, one has that \(|\mathcal {R}_\delta | \lesssim \delta ^{1-d}\) and the following holds:

(1) For every \(\alpha \in \mathbb {R}\),

$$\begin{aligned} \sum _{k=1}^K {\text {diam}}(\Omega _k)^{\alpha } \lesssim _\alpha {\left\{ \begin{array}{ll} 1 &{} \text {if}\,\alpha >d-1,\\ |\log \delta | &{} \text {if}\,\alpha =d-1,\\ \delta ^{1-d+\alpha } &{} \text {if}\,\alpha <d-1. \end{array}\right. } \end{aligned}$$(2.1)

(2) If \(\alpha <0\), then for every \(k =1,\ldots , K\), and \(x \in \Omega _k\),

$$\begin{aligned} \sum _{j=1}^K {\text {diam}}(\Omega _j)^{\alpha } \min \left\{ 1, \left( \frac{{\text {diam}}(\Omega _j)}{\textsf{d}(x, \Omega _j)} \right) ^{d-1} \right\} \lesssim \delta ^{\alpha } |\log (\delta )|. \end{aligned}$$(2.2)

In all the inequalities the implicit constants depend upon \(\alpha \) and \(\mathcal {Q}\) in (2.1) only.

By property (ii), inequality (2.1) also holds for the sum \(\sum _{k=1}^K |\Omega _k|^{\alpha }\), with \(\alpha d\) instead of \(\alpha \).

Proof

Since \(\partial \Omega \) is Lipschitz, it follows from the properties of the partition that, for every \(x \in \Omega \) and \(r \ge s \ge \delta \),

with the implicit constant depending on \(\mathcal {Q}\) only. It follows that \(|\mathcal {R}_\delta | \lesssim \delta ^{1-d}\) and, for every \(\ell \le |\log _2 \delta |\), the number of cubes \(\Omega _k \in \mathcal {Q}_\delta \) with \({\text {diam}}(\Omega _k) \in [2^{-\ell }, 2^{-\ell +1})\) is estimated by \( 2^{\ell (d-1)}\). Therefore, for \(\alpha \in \mathbb {R}\),

Since \(\ell \) is also bounded from below in the summation (e.g. by \(-|\log _2{\text {diam}}(\Omega )|\)), we obtain (2.1).
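The geometric sum behind (2.1) can be made explicit. The display below is a sketch based only on the counting just stated (at most of order \(2^{\ell (d-1)}\) cubes of \(\mathcal {Q}_\delta \) at scale \(2^{-\ell }\), and \(|\mathcal {R}_\delta | \lesssim \delta ^{1-d}\) sets of diameter \(\sim \delta \)). The symbol \(\ell _0\) is notation introduced here for the lower bound on \(\ell \), and the display is not claimed to reproduce the original one verbatim.

```latex
\begin{aligned}
\sum_{k=1}^K \operatorname{diam}(\Omega_k)^{\alpha}
 &\lesssim |\mathcal{R}_\delta|\,\delta^{\alpha}
   + \sum_{\ell=\ell_0}^{|\log_2\delta|} 2^{\ell(d-1)}\,2^{-\ell\alpha}
 \lesssim \delta^{1-d+\alpha}
   + \sum_{\ell=\ell_0}^{|\log_2\delta|} 2^{\ell(d-1-\alpha)},\\
\sum_{\ell=\ell_0}^{|\log_2\delta|} 2^{\ell(d-1-\alpha)}
 &\lesssim_\alpha
 \begin{cases}
 1 & \text{if } \alpha>d-1,\\
 |\log\delta| & \text{if } \alpha=d-1,\\
 \delta^{-(d-1-\alpha)}=\delta^{1-d+\alpha} & \text{if } \alpha<d-1.
 \end{cases}
\end{aligned}
```

In the first two cases the boundary term \(\delta ^{1-d+\alpha }\) is bounded by a constant for small \(\delta \), so it is absorbed; in the third case both terms are of the same order.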

We next prove (2.2). We claim that it follows from the following inequalities, valid for any \(\gamma \in \mathbb {N}\):

and, for \(\beta <d-1\),

Indeed, we can split the summation and use (2.4) and (2.5) to get

Recalling that \({\text {diam}}(\Omega _k) \gtrsim \delta \) and choosing \(\gamma \) so that \(2^{-\gamma } \le \delta \le 2^{-\gamma +1}\) yields (2.2).

In order to prove (2.4) and (2.5) we first notice that, given \(\Omega _k\), \(\Omega _j\) and \(x \in \Omega _k\), we have that, for some constant \(C = C(\mathcal {Q})\),

Indeed, if \(\Omega _j \in \mathcal {R}_\delta \), then \({\text {diam}}(\Omega _j)\lesssim \delta \lesssim {\text {diam}}(\Omega _k)\), hence (2.7) holds. If instead \(\Omega _j \in \mathcal {Q}_\delta \), then we can find \(y \in \Omega _j\) with \(|x-y|\le 2 \textsf{d}(x,\Omega _j)\), so that, by the triangle inequality,

and by property (iii) in Lemma 2.1 we obtain that \({\text {diam}}(\Omega _j) \lesssim \max \left\{ \textsf{d}(x, \Omega _j), {\text {diam}}(\Omega _k) \right\} \), yielding again the desired inclusion.

We now prove (2.4) and (2.5). Let \(\ell _k \le |\log _2 \delta |\) be such that \({\text {diam}}(\Omega _{k}) \in [2^{-\ell _k}, 2^{-\ell _k+1})\). Combining (2.7) and (2.3), we see that, for every \(\ell \le |\log _2\delta |\), there are at most \(2^{(\ell -\ell _k-\gamma )(d-1)}\) sets \(\Omega _j\) such that \(\textsf{d}(x, \Omega _j) \le 2^{-\gamma }{\text {diam}}(\Omega _k)\) and \({\text {diam}}(\Omega _j) \in [2^{-\ell }, 2^{-\ell +1})\). Therefore,

This proves (2.4). To prove (2.5), we split dyadically,

Let us also notice that, if \(\Omega _j \subseteq B(x, C2^{-\ell })\), then necessarily \(\delta \le {\text {diam}}(\Omega _j) \lesssim 2^{-\ell }\) (since \({\text {diam}}(\Omega _j)^d \sim |\Omega _j|\)). Thus for \(\ell '\) with \(2^{-\ell '} \sim 2^{-\ell }\),

using again that \(\ell '\) is bounded from below by a constant depending on \(\mathcal {Q}\) only. Plugging this bound in (2.10), we conclude that

This concludes the proof of (2.5). \(\square \)

2.4 Sobolev norms

Given a bounded domain \(\Omega \subseteq \mathbb {R}^d\) with Lipschitz boundary and \(p \in (1,\infty )\), with Hölder conjugate \(q = p/(p-1)\), we write \(\Vert f \Vert _{L^p(\Omega )}\) for the Lebesgue norm of f, and

for the negative Sobolev norm. We notice in particular that if \(\Vert f\Vert _{W^{-1,p}(\Omega )}<\infty \) then \(\int _\Omega f=0\). In this case we may also restrict the supremum to functions \(\phi \) having also average zero. When it is clear from the context, we will drop the explicit dependence on \(\Omega \) in the norms.

Let us recall that we can bound the \(W^{-1,p}\) norm by the \(L^p\) norm. We give here a proof based on the embedding \(L^{pd/(p+d)}\subset W^{-1,p}\) (for \(p>d/(d-1)\)) which is an elementary alternative to the PDE arguments used in [25, Lemma 3.4].

Lemma 2.3

Let \(\Omega \) be a bounded domain with Lipschitz boundary and let \(f:\Omega \rightarrow \mathbb {R}\) such that \(\int _\Omega f=0\). Then, for every \(p>d/(d-1)\),

Moreover, the implicit constant depends on \(\Omega \) only through the corresponding constant for the Sobolev embedding.

Proof

Let q be the Hölder conjugate of p, \(q^*\) the Sobolev conjugate of q and \(p^*=pd/(p+d)\) the Hölder conjugate of \(q^*\). We then have for every \(\phi \) with \(\Vert \nabla \phi \Vert _{L^q(\Omega )}\le 1\),

Using that \(p^*<p\) and Hölder's inequality concludes the proof of (2.13). \(\square \)
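The exponent bookkeeping in this proof can be verified directly. The display below is a sketch assuming the usual definition \(q^* = dq/(d-q)\) of the Sobolev conjugate (valid for \(q<d\)) and test functions \(\phi \) with zero average, so that the Sobolev-Poincaré inequality applies; it is not claimed to reproduce the original display.

```latex
\begin{aligned}
\frac{1}{q^*} &= \frac{1}{q}-\frac{1}{d} = 1-\frac{1}{p}-\frac{1}{d},
\qquad
\frac{1}{p^*} = 1-\frac{1}{q^*} = \frac{1}{p}+\frac{1}{d} = \frac{p+d}{pd},\\
q<d &\iff \frac{p}{p-1}<d \iff p>\frac{d}{d-1},\\
\int_\Omega f\phi &\le \|f\|_{L^{p^*}(\Omega)}\,\|\phi\|_{L^{q^*}(\Omega)}
 \lesssim \|f\|_{L^{p^*}(\Omega)}\,\|\nabla\phi\|_{L^{q}(\Omega)}
 \le \|f\|_{L^{p^*}(\Omega)}.
\end{aligned}
```

The second line explains the restriction \(p>d/(d-1)\) in the statement of Lemma 2.3.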

As in [4], (2.13) will however not be precise enough when estimating the error in subadditivity in the case of general densities and domains. We will instead rely on gradient bounds for the Green kernel \((G(x,y))_{x,y\in \Omega }\) of the Laplacian with Neumann boundary conditions to obtain sharper estimates. See [2, 3, 23, 35] for related results. Let us however point out that in our case we will not rely on any stochastic cancellation in the form of Rosenthal inequality [43] but will instead use a purely deterministic estimate. We will assume that

where the implicit constant depends uniquely on \(\Omega \).

Remark 2.4

This condition is satisfied for instance if \(\Omega \) is \(C^2\) or convex, see e.g. [50]. Notice that since it is a local condition it also holds for \(Q\backslash \Omega \) where Q is a cube and \(\Omega \) a \(C^2\) open set with \(d(\partial Q,\partial \Omega )>0\).

Remark 2.5

Let us point out that, as in [35], instead of (2.14) it would have been enough to have \(L^p\) bounds (for the same p as for the cost \(\mathcal {C}_{\textsf{P}}^p\)) on the Riesz transform for the Neumann Laplacian. From the available results for the Dirichlet Laplacian [31, 44], we expect that for every Lipschitz domain there is \(p>3\) (depending on the domain) for which these bounds hold. In particular, this would allow us to extend the validity of Theorem 1.1 to every Lipschitz domain when \(d=3\). However, since we were not able to find in the literature the corresponding results for the case of Neumann boundary conditions, we kept the stronger hypothesis (2.14).

We then have

Lemma 2.6

Let \(\Omega \subset \mathbb {R}^d\) be a bounded domain with Lipschitz boundary, such that (2.14) holds and let \(\rho \) be a density bounded above and below on \(\Omega \). For \(\delta >0\) sufficiently small, let \((\Omega _k)_{k=1}^K = \mathcal {Q}_\delta \cup \mathcal {R}_\delta \) as in Lemma 2.1. If there exists \(h>0\) such that \(|b_k|\le h^{\frac{1}{2}} |\Omega _k|^{\frac{1}{2}}\) for \(k=1, \ldots , K\), then for every \(p\ge 1\),

Proof

Set

Let then \(\phi _k\) denote the solution to the equation \(\Delta \phi _k = f_k\), with null Neumann boundary conditions on \(\Omega \), and use as competitor \(\xi =\sum _{k=1}^K B_k \nabla \phi _k\) in the definition of the \(W^{-1,p}\) norm. We get

To bound the last term, we use the integral representation in terms of the Green’s function,

to obtain that, for every \(x\in \Omega \),

Indeed, by (2.14),

Moreover, for \(x\notin \Omega _k\), we get directly from (2.14),

For any \(k=1, \ldots , K\) and \(x \in \Omega _k\), we then estimate

having used inequality (2.2) from Lemma 2.2 with \(\alpha = 1-d/2\).

Therefore, we can split the integration

In combination with (2.16) this concludes the proof of (2.15). \(\square \)

2.5 Optimal transport

Given two positive Borel measures \(\mu \), \(\lambda \) on \(\mathbb {R}^d\) with \(\mu (\mathbb {R}^d) = \lambda (\mathbb {R}^d) \in (0, \infty )\) and finite p-th moments, the optimal transport cost of order \(p\ge 1\) between \(\mu \) and \(\lambda \) is defined as the quantity

where \(\Gamma (\mu , \lambda )\) is the set of couplings between \(\mu \) and \(\lambda \), i.e., finite Borel measures \(\pi \) on the product \(\mathbb {R}^d\times \mathbb {R}^d\) such that their marginals are respectively \(\mu \) and \(\lambda \). Notice that if \(\mu (\mathbb {R}^d) = \lambda (\mathbb {R}^d) = 0\) then \(\textsf{W}^p(\mu , \lambda ) = 0\), while if \(\mu (\Omega ) \ne \lambda (\Omega )\), we conveniently extend the definition setting \(\textsf{W}^p(\mu , \lambda ) = \infty \). Let us recall that the triangle inequality for the Wasserstein distance of order p (which is defined as the p-th root of \(\textsf{W}^p(\mu , \lambda )\)) yields

A straightforward, but useful subadditivity inequality is

valid for any (countable) family of measures \((\mu _k, \nu _k)_{k}\).
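For sums of unit Dirac masses the transport cost reduces to a minimum over permutations (see Remark 3.4 below), so subadditivity can be tested by brute force on small examples. The following Python sketch is only an illustration under that assumption. The helper names `transport_cost` and `is_subadditive` are ours, and we assume that the inequality in question reads \(\textsf{W}^p(\sum _k \mu _k, \sum _k \nu _k) \le \sum _k \textsf{W}^p(\mu _k, \nu _k)\).

```python
from itertools import permutations
from math import dist


def transport_cost(xs, ys, p=1.0):
    """Brute-force W^p between the sums of unit Dirac masses at xs and at ys.

    Assumption (ours): points are tuples of floats, and unequal total masses
    are given infinite cost, following the convention stated in the text.
    """
    if len(xs) != len(ys):
        return float("inf")
    n = len(xs)
    return min(
        sum(dist(xs[i], ys[s[i]]) ** p for i in range(n))
        for s in permutations(range(n))
    )


def is_subadditive(pairs, p=1.0, tol=1e-12):
    """Check W^p(sum mu_k, sum nu_k) <= sum_k W^p(mu_k, nu_k) on a finite family."""
    all_xs = [x for xs, _ in pairs for x in xs]
    all_ys = [y for _, ys in pairs for y in ys]
    lhs = transport_cost(all_xs, all_ys, p)
    rhs = sum(transport_cost(xs, ys, p) for xs, ys in pairs)
    return lhs <= rhs + tol


# Two pairs on the real line: transporting each pair separately costs 1 + 1,
# while the summed measures coincide and can be transported at zero cost.
pairs = [([(0.0,)], [(1.0,)]), ([(1.0,)], [(0.0,)])]
```

The example shows that the inequality can be strict: merging the families allows couplings that are not available when each pair is transported on its own.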

To keep notation simple, we write

and, if a measure is absolutely continuous with respect to Lebesgue measure, we only write its density. For example, \(\textsf{W}^p_{\Omega } \left( \mu , \mu (\Omega )/|\Omega | \right) \) denotes the transportation cost between \(\mu \lnot \Omega \) and the uniform measure on \(\Omega \) with total mass \(\mu (\Omega )\).

For \(q \ge p\), Jensen inequality gives

Our arguments make substantial use of two crucial properties of the optimal transport cost. The first one [25, Lemma 3.1] is a simple consequence of (2.18) and (2.19).

Lemma 2.7

For every \(p\ge 1\), there exists a constant \(C>0\) depending only on p such that the following holds. Let \(\Omega \subseteq \mathbb {R}^d\) be Borel and \((\Omega _k)_{k\in \mathbb {N}}\) be a countable Borel partition of \(\Omega \). Then, for finite measures \(\mu \), \(\lambda \), and \(\varepsilon \in (0,1)\), we have the inequality

where \(\alpha = \mu (\Omega )/\lambda (\Omega )\) and \(\alpha _k = \mu (\Omega _k)/\lambda (\Omega _k)\).

The second one is [4, Lemma 2.2] which gives an upper bound for the Wasserstein distance in terms of a negative Sobolev norm. It follows from the Benamou-Brenier formulation of the optimal transport problem (see also [41, Corollary 3]).

Lemma 2.8

Assume that \(\Omega \subseteq \mathbb {R}^d\) is a bounded connected open set with Lipschitz boundary. If \(\mu \) and \(\lambda \) are measures on \(\Omega \) with \(\mu (\Omega ) = \lambda (\Omega )\), absolutely continuous with respect to the Lebesgue measure and \(\inf _\Omega \lambda >0\), then, for every \(p\ge 1\),

As in many recent works on the matching problem, we will use this inequality to improve on the trivial bound

which holds as soon as \(\mu (\Omega ) = \lambda (\Omega )\). Much of our effort in the proofs will be ultimately to deal with an intermediate situation, where the measures can be decomposed as the sum of a “good” part, i.e., absolutely continuous with smooth density and a “bad” remainder about which not much can be assumed. We prove here a general inequality which could also be of independent interest.

Proposition 2.9

Let \(\Omega \subseteq \mathbb {R}^d\) be a bounded Lipschitz domain, \(\rho \) be a density bounded above and below on \(\Omega \), \(\mu \) be any finite measure on \(\Omega \) and \(h >0\). Then, for every \(p>d/(d-1)\),

where \(\alpha = \frac{\mu (\Omega )}{\rho (\Omega )} +h\). Moreover, this inequality is invariant by rescaling of \(\Omega \).

Proof

By scaling we may assume that \(|\Omega |=1\). Notice that, by the trivial bound

we can assume that \(\mu (\Omega ) \ll h\). Let \(P_t\) be the heat semi-group with null Neumann boundary conditions on \(\Omega \) and set \(\mu _t=P_t\mu \). By triangle inequality (2.18) and (2.22), we have

We now estimate the last term. For this let q be the Hölder conjugate exponent of p, i.e., \(q=p/(p-1) \in (1, d)\) and \(q^* = qd/(d-q)\) be the Sobolev conjugate of q. We first use the triangle inequality and the fact that \(\rho \) is bounded from above and below to estimate

Using that the Sobolev embedding is equivalent to ultra-contractivity i.e. if \(\int _\Omega \phi =0\),

where \(\phi _t = P_t \phi \), we finally estimate for every \(\phi \) with \(\Vert \nabla \phi \Vert _{L^{q}(\Omega )}\le 1\) and \( \int _{\Omega } \phi =0\),

Therefore, by taking the supremum over \(\phi \) we find

Taking the p-th power we find for \(t\le 1\),

Optimizing in t we find \(t^{\frac{p}{2}}=\left(\frac{\mu (\Omega )}{h}\right)^{\frac{(p-1)q}{d}}\) which satisfies \(t\ll 1\) if \(\mu (\Omega )\ll h\). Since \((p-1)q=p\), this concludes the proof of (2.24). \(\square \)

Remark 2.10

Since by Hölder inequality it will be enough for us to apply Proposition 2.9 for p arbitrarily close to d, the condition \(p>d/(d-1)\) will not be a limitation for us. Let us however mention that, in the critical case \(p=d/(d-1)\) one can argue similarly, relying instead on the Moser-Trudinger inequality [18, Remark 1.4], to obtain (in the case \(\rho =1\) and \(\Omega =Q\) a cube for simplicity)

If instead \(1\le p<d/(d-1)\), using the same proof as above but with the inclusion \(W^{1,q}(Q) \subseteq L^\infty (Q)\) and letting \(t \rightarrow 0\) gives the estimate

We close this section with the following result easily adapted from [4, Proposition 2.4] which helps in particular to reduce the transport problem from Hölder to constant densities.

Proposition 2.11

For \(d\ge 1\), \(\alpha \in (0,1)\) and \(\rho _0>0\), there exists \(C = C(\rho _0, d, \alpha )>0\) such that the following holds: for any \(\rho \in C^\alpha ( (0,1)^d)\) with

there exists \(T: (0,1)^d \rightarrow (0,1)^d\) such that \(T_{\sharp } \rho = 1\), with

2.6 A subadditivity lemma

We will need a slight variant of the usual convergence results for subadditive functions, see e.g. [12, 46].

Lemma 2.12

Let \(\alpha , \beta , c>0\), \(f: [1, \infty ) \rightarrow [0, \infty )\) be continuous and such that the following holds: for every \(\eta \in (0,1/2]\), there exists \(C(\eta )>0\) such that, for every \(m \in \mathbb {N}{\setminus }\left\{ 0 \right\} \) and \(L \ge C(\eta )\),

Then \(\lim _{L\rightarrow \infty } f(L) \in [0, \infty )\) exists.

Proof

We use the following fact: for any open interval \((a,b) \subseteq [0, \infty )\), the union

contains a half-line, for some \(A>0\). Indeed, one has \((ma, mb) \cap ((m+1)a, (m+1)b)\ne \emptyset \) if \(mb > (m+1)a\), which holds for every \(m> a/(b-a)\).
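The covering fact can be checked on concrete intervals. The sketch below is a hedged illustration: the function names are ours, and exact arithmetic (integers or `fractions.Fraction`) is recommended, since floating point rounding can misjudge the borderline case \(mb = (m+1)a\).

```python
import math


def overlap_threshold(a, b):
    """Smallest integer m >= 1 with m > a / (b - a), i.e. with m*b > (m+1)*a."""
    if not 0 <= a < b:
        raise ValueError("need 0 <= a < b")
    return max(1, math.floor(a / (b - a)) + 1)


def consecutive_overlap(a, b, m):
    """True if (m*a, m*b) and ((m+1)*a, (m+1)*b) intersect."""
    return m * b > (m + 1) * a


def half_line_start(a, b):
    """A value A such that the union of the intervals (m*a, m*b) contains (A, infinity)."""
    return overlap_threshold(a, b) * a
```

For instance, with \(a=1\), \(b=3/2\) the threshold is \(m=3\): the intervals \((2,3)\) and \((3, 9/2)\) miss the point 3, while from \(m=3\) on consecutive intervals overlap, so the union contains \((3, \infty )\).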

First, we show that f is uniformly bounded. Let \(\eta = 1/2\) and use the fact that both f(L) and \(L^{-\beta }\) are continuous for \(L \in [1/2,2]\), hence bounded, so that by (2.25), for every \(m \ge 1\), \(L \in [1,2]\),

since \(\bigcup _{m= 1}^\infty [m, 2m] = [1, \infty )\), it follows that f is uniformly bounded on \([1, \infty )\). To show that the limit exists (and is finite) we argue that

Given \(\varepsilon \ll 1\), let \(\eta = \eta (\varepsilon ) \in (0,1/2]\) such that \(c\eta ^{\alpha }= \varepsilon \) and \(L_{\varepsilon }>0\) such that \(C(\eta ) L_{\varepsilon }^{-\beta }=\varepsilon \), so that, for every \(L \ge L_\varepsilon \),

Let then \(L^* > \max \left\{ L_\varepsilon , C(\eta ) \right\} \) be such that

By continuity of f, there exists \(a< L^* <b\) with \(a>\max \left\{ L_\varepsilon , C(\eta ) \right\} \) such that the same inequality holds for \(L \in (a,b)\). For every \(m \ge 1\), and \(L \in (a/(1-\eta ), b/(1-\eta ))\), we have \(L \ge \max \left\{ L_\varepsilon , C(\eta ) \right\} \) and \(L(1-\eta ) \in (a,b)\), hence using (2.25) we obtain

Using that \(\cup _{m =1}^\infty (ma/(1-\eta ), mb/(1-\eta ))\) contains a half-line \((A,+\infty )\), it follows that

and the thesis follows letting \(\varepsilon \rightarrow 0\). \(\square \)

2.7 Concentration inequalities

We close this section by recalling some standard concentration inequalities. Let us start with a general definition.

Definition 2.13

We say that a random variable X with \(\mathbb {E}\left[ X \right] =h\) satisfies (algebraic) concentration if for every \(q\ge 1\) there exists \(C(q)\in (0, \infty )\) such that

We then have

Lemma 2.14

Poisson, binomial and hypergeometric random variables satisfy concentration. More precisely, if:

(i) N is a Poisson random variable with parameter \(n \ge 1\), then, for every \(q\ge 1\),

$$\begin{aligned} \mathbb {E}\left[|N-n|^q\right]\lesssim _q n^{\frac{q}{2}}. \end{aligned}$$(2.26)

Hence, for every \(\gamma \in (0,1)\),

$$\begin{aligned} \mathbb {P}\left( N < \gamma n \quad \text {or} \quad N > (1+\gamma ) n \right) \lesssim _{q,\gamma } (1-\gamma )^{-2q} n^{-q}. \end{aligned}$$(2.27)

(ii) B is a binomial random variable with parameters n and \(p\in (0,1)\) (so that \(\mathbb {E}\left[ B \right] = np\)), then, for every \(q \ge 1\),

$$\begin{aligned} \mathbb {E}\left[|B-np|^q\right]\lesssim _q n^{\frac{q}{2}}. \end{aligned}$$(2.28)

(iii) H is a hypergeometric random variable counting the number of red marbles extracted in z draws without replacement from an urn containing u marbles, r of which are red (so that \(\mathbb {E}\left[ H \right] = z r/u\)), then, for every \(q \ge 1\),

$$\begin{aligned} \mathbb {E}\left[ \left| H - zr/u \right| ^q \right] \lesssim _q r^{\frac{q}{2}}. \end{aligned}$$(2.29)

Proof

We only prove concentration in the hypergeometric case, since it is classical for both Poisson and binomial random variables. We may assume that \(r \ge 1\), otherwise there is nothing to prove since \(H = \mathbb {E}\left[ H \right] = 0\). From [29, Theorem 1], we have, for \(\lambda \ge 2\),

where

As usual, writing

yields the bound

which is bounded from above by \(r^{q/2}\), since \(r \ge 1\). \(\square \)
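As a sanity check of the binomial bound (2.28), the central moments can be computed exactly from the probability mass function for moderate n. This is a minimal sketch and not part of the proof: the function names are ours, and the success probability is called `prob` to avoid a clash with the exponent p used elsewhere.

```python
from math import comb


def binomial_abs_moment(n, prob, q):
    """Exact E|B - n*prob|^q for B ~ Binomial(n, prob), by summing over the pmf."""
    mean = n * prob
    return sum(
        comb(n, k) * prob**k * (1 - prob) ** (n - k) * abs(k - mean) ** q
        for k in range(n + 1)
    )


def normalized_moment(n, prob, q):
    """The ratio E|B - n*prob|^q / n^(q/2), which (2.28) asserts is bounded in n."""
    return binomial_abs_moment(n, prob, q) / n ** (q / 2)
```

For \(q=2\) the first function returns the variance \(n\,\texttt{prob}\,(1-\texttt{prob})\), and for fixed q the normalized ratio stays bounded as n grows, in agreement with (2.28).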

3 Combinatorial optimization problems over bipartite graphs

3.1 Graphs

Although we are interested in random combinatorial optimization over Euclidean bipartite graphs, it is useful to recall some general terminology. A (finite, undirected) graph \(G = (V, E)\) is defined by a finite set \(V = V_G\) of vertices (or nodes) and a set of edges \(E = E_G\), which is a collection of unordered pairs \(e = \left\{ x,y \right\} \subseteq V\) with \(x \ne y\). A graph \(G'\) is a subgraph of G and we write \(G' \subseteq G\), if \(V_{G'} \subseteq V_G\) and \(E_{G'}\subseteq E_G\). The induced subgraph over a subset of vertices \(V' \subseteq V_G\) is defined as the subgraph \(G'\) with \(V_{G'} = V'\) and all the edges from \(E_G\) connecting vertices in \(V'\). It will be useful to denote by \(\emptyset \) the empty graph, i.e., \(V = \emptyset \), \(E = \emptyset \), which is a subgraph of any graph G.

Given a vertex \(x \in V\), its neighborhood in G is the set

$$\begin{aligned} \mathcal {N}_G(x) = \left\{ y \in V \, : \, \left\{ x,y \right\} \in E \right\} . \end{aligned}$$

The degree of x in G, \(\deg _G(x)\), is the number of elements in \(\mathcal {N}_G(x)\). Given \(\kappa \in \mathbb {N}\), a graph G is \(\kappa \)-regular if \(\deg _G(x) = \kappa \) for every \(x \in V_G\). We say that a subgraph \(G' \subseteq G\) spans \(V_G\) if \(V_{G'} = V_G\) and \(\mathcal {N}_{G'}(x) \ne \emptyset \) for every \(x \in V_{G'}\). We say that two subgraphs \(G_1\), \(G_2\) of G are disjoint if \(V_{G_1} \cap V_{G_2} =\emptyset \). A graph G is connected if it cannot be decomposed as the union of two disjoint subgraphs \(G = G_1 \cup G_2\), i.e., \(V_G = V_{G_1} \cup V_{G_2}\) with both \(V_{G_1}\), \(V_{G_2} \ne \emptyset \), \(V_{G_1} \cap V_{G_2} = \emptyset \) and \(E_{G} =E_{G_1} \cup E_{G_2}\). Given \(\kappa \in \mathbb {N}\), \(\kappa \ge 1\), we say that a graph G is \(\kappa \)-connected if any subgraph \(G' \subseteq G\) obtained by removing \(\kappa -1\) edges from G is still connected. A cycle is a connected 2-regular graph, a tree is a connected graph which contains no cycles as subgraphs.
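The definitions above translate directly into code. The following Python sketch is one possible encoding, chosen by us for illustration: edges are stored as `frozenset` pairs, and the helper names are ours.

```python
from collections import deque


def edge(x, y):
    """Unordered pair {x, y} with x != y."""
    if x == y:
        raise ValueError("loops are not allowed")
    return frozenset((x, y))


def neighborhood(edges, x):
    """N_G(x): all vertices joined to x by an edge."""
    return {y for e in edges if x in e for y in e if y != x}


def degree(edges, x):
    return len(neighborhood(edges, x))


def is_regular(vertices, edges, kappa):
    return all(degree(edges, x) == kappa for x in vertices)


def is_connected(vertices, edges):
    """Breadth-first search; the empty graph counts as connected."""
    vertices = set(vertices)
    if not vertices:
        return True
    start = next(iter(vertices))
    seen, queue = {start}, deque([start])
    while queue:
        x = queue.popleft()
        for y in neighborhood(edges, x) - seen:
            seen.add(y)
            queue.append(y)
    return seen == vertices


def is_cycle(vertices, edges):
    """Connected and 2-regular; we exclude the empty graph (our convention)."""
    vertices = set(vertices)
    return bool(vertices) and is_connected(vertices, edges) and is_regular(vertices, edges, 2)


# Example: the 4-cycle 0-1-2-3-0.
V = range(4)
E = {edge(i, (i + 1) % 4) for i in range(4)}
```

Removing one edge from `E` leaves a connected path, in line with the fact that a cycle is 2-connected, while removing two opposite edges disconnects it.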

Given two graphs \(G_1\), \(G_2\) and an injective function \(\sigma : V_{G_1} \rightarrow V_{G_2}\), we let \(\sigma (E_1) = \left\{ \left\{ \sigma (x), \sigma (y) \right\} \,: \, \left\{ x,y \right\} \in E_{G_1} \right\} \). If \(\sigma (E_1) \subseteq E_2\), then we say that \(G_1\) embeds into \(G_2\) via \(\sigma \). If \(\sigma \) is bijective and \(\sigma (E_1) = E_2\), then we say that \(G_1\) is isomorphic to \(G_2\) via \(\sigma \).

A graph G is complete if \(E_G\) consists of all the pairs \(\left\{ x,y \right\} \subseteq V\) with \(x \ne y\). The complete graph over \(V = [n]\) is commonly denoted by \(\mathcal {K}_n\). Any complete graph G with n vertices is isomorphic to \(\mathcal {K}_n\). We say that the graph G is bipartite over a partition \(V = X \cup Y\) (i.e., \(X \cap Y = \emptyset \)), if every \(e\in E\) can be written as \(e= \left\{ x,y \right\} \) with \(x\in X\), \(y \in Y\). A graph is complete bipartite if it is bipartite over a partition \(V = X \cup Y\) and every pair \(\left\{ x,y \right\} \) with \(x\in X\), \(y \in Y\) is an edge. For any \(n, m \in \mathbb {N}\), any two complete bipartite graphs with X having n elements and Y having m elements are isomorphic. To fix a representative, we define \(\mathcal {K}_{n,m}\) as the complete bipartite graph over the vertex set \(V = [n]_1 \cup [m]_2\).

We introduce a weight function on edges \(w: E \rightarrow [0, \infty )\), \(w(e) = w(x,y)\). The total weight of G is then

$$\begin{aligned} w(G) = \sum _{e \in E} w(e). \end{aligned}$$

A subgraph \(G' \subseteq G\) of a weighted graph is always intended with the restriction of w on \(E'\). Notice that for the empty graph \(\emptyset \subseteq G\) we have \(w(\emptyset ) = 0\).

We are interested in geometric realizations of graphs, where vertices are in correspondence with points in a metric space \((\Omega , \textsf{d})\), and the weight function is a power of the distance between the corresponding points, with a fixed exponent \(p>0\). Since we consider only complete and complete bipartite graphs, we introduce the following notation. Given \(\textbf{x}= (x_i)_{i=1}^n \subseteq \Omega \), we let \(\mathcal {K}(\textbf{x})\) be the complete graph \(\mathcal {K}_n\) endowed with the weight function \(w(i,j) = \textsf{d}(x_i, x_j)^p\). Similarly, given \(\textbf{x}= (x_i)_{i=1}^n\), \(\textbf{y}= (y_j)_{j=1}^m \subseteq \Omega \), we let \(\mathcal {K}(\textbf{x}, \textbf{y})\) denote the complete bipartite graph \(\mathcal {K}_{n,m}\) endowed with the weight function \(w((1,i), (2,j)) = \textsf{d}(x_i, y_j)^p\). Notice that the points in \(\textbf{x}\) and \(\textbf{y}\) may not be all distinct, but this will in fact occur with probability zero. If all the points are distinct, then we can and will identify the vertex set directly with the set of points \(\textbf{x}\) for \(\mathcal {K}(\textbf{x})\), and with the set of points in \(\textbf{x}\cup \textbf{y}\) for \(\mathcal {K}(\textbf{x}, \textbf{y})\). With this convention, if \(\textbf{x}= \textbf{x}^0 \cup \textbf{x}^1\), \(\textbf{y}= \textbf{y}^0 \cup \textbf{y}^1\), then both \(\mathcal {K}(\textbf{x}^0, \textbf{y}^0)\) and \(\mathcal {K}(\textbf{x}^1, \textbf{y}^1)\) are naturally seen as subgraphs of \(\mathcal {K}(\textbf{x}, \textbf{y})\).

3.2 Combinatorial problems

A combinatorial optimization problem \(\textsf{P}\) on weighted graphs is informally defined by prescribing, for every graph G, a set of subgraphs \(G' \subseteq G\), also called feasible solutions \(\mathcal {F}_{G}\), and, after introducing a weight w, by minimizing \(w(G')\) over all \(G'\in \mathcal {F}_G\).

Our aim is to study problems on random geometric realizations of complete bipartite graphs \(\mathcal {K}_{n,n}\), thus it is sufficient to define a combinatorial optimization problem over complete bipartite graphs as a collection of feasible solutions \(\textsf{P} = ( \mathcal {F}_{n,n})_{ n \in \mathbb {N}}\), with \(\mathcal {F}_{n,n}\) being the feasible solutions on \(\mathcal {K}_{n,n}\). We will mostly consider problems \(\textsf{P}\) that satisfy the following assumptions:

A1 (isomorphism) if \(\sigma \) is any isomorphism of \(\mathcal {K}_{n,n}\) into itself and \(G \in \mathcal {F}_{n,n}\), then \(\sigma (G) = (\sigma (V_G), \sigma (E_G)) \in \mathcal {F}_{n,n}\);

A2 (spanning) for every \(n \in \mathbb {N}\), \(\mathcal {F}_{n,n}\) is not empty and there exists \(\textsf{c}_{{\text {A2}}}>0\) such that, for \(n < \textsf{c}_{{\text {A2}}}\), \(\mathcal {F}_{n,n} = \left\{ \emptyset \right\} \) while for \(n \ge \textsf{c}_{{\text {A2}}}\), every \(G \in \mathcal {F}_{n,n}\) spans \(\mathcal {K}_{n,n}\);

A3 (bounded degree) there exists \(\textsf{c}_{{\text {A3}}}>0\) such that, for every \(n \in \mathbb {N}\) and every feasible solution \(G \in \mathcal {F}_{n,n}\), one has \(\deg _G(x) \le \textsf{c}_{{\text {A3}}}\) for every \(x \in G\).

Given \(\textsf{P} = ( \mathcal {F}_{n,n})_{n \in \mathbb {N}}\), we canonically extend it to graphs \(\mathcal {K}_{n,m}\), with \(n \ne m\), defining \(\mathcal {F}_{n,m}\) as the collection of all graphs \(\sigma (G)\) where \(G\in \mathcal {F}_{z,z}\), \(z = \min \left\{ n,m \right\} \) and \(\sigma \) is an isomorphism of \(\mathcal {K}_{n,m}\) into itself.

In the geometric setting, i.e., when \(\mathcal {K}_{n,m}\) is mapped into \(\mathcal {K}(\textbf{x},\textbf{y})\) with \(\textbf{x}= (x_i)_{i=1}^n\), \(\textbf{y}= (y_j)_{j=1}^m\subseteq \Omega \), with \((\Omega , \textsf{d})\) metric space, we introduce the following notation for the cost of a problem \(\textsf{P}\):

Recalling the definition of \(\mathcal {F}_{n,m}\) if \(n \ne m\), we also have the identity

Remark 3.1

Assumption A2 ensures that, if \(\min \left\{ |\textbf{x}|, |\textbf{y}| \right\} < \textsf{c}_{{\text {A2}}}\), then \(\mathcal {C}_{\textsf{P}}^p(\textbf{x}, \textbf{y}) = 0\).

Remark 3.2

If \((\Omega ', \textsf{d}')\) is a metric space and \(S: \Omega \rightarrow \Omega '\) is Lipschitz, i.e., for some constant \({\text {Lip}}S\) one has \(\textsf{d}'(S(x), S(y)) \le ({\text {Lip}}S) \textsf{d}(x, y)\) for every x, \(y\in \Omega \), then writing \(S(\textbf{x}) = (S(x_i))_{i=1}^n\), \(S(\textbf{y}) = (S(y_j))_{j=1}^m\), we clearly have the inequality

$$\begin{aligned} \mathcal {C}_{\textsf{P}}^p(S(\textbf{x}), S(\textbf{y})) \le ({\text {Lip}}S)^p \, \mathcal {C}_{\textsf{P}}^p(\textbf{x}, \textbf{y}). \end{aligned}$$

Remark 3.3

Similar definitions and assumptions may be given in the non-bipartite case, thus defining combinatorial optimization problems \(\textsf{P} = ( \mathcal {F}_{n})_{n \in \mathbb {N}}\) over complete graphs, as a collection of feasible solutions \(\mathcal {F}_{n}\) over the complete graph \(\mathcal {K}_{n}\).

3.3 Examples

Let us introduce some fundamental examples of these problems.

3.3.1 Assignment problem

The minimum weight bipartite matching problem, also called assignment problem, is defined letting \(\mathcal {F}_{n,n}\) be the set of perfect matchings in \(\mathcal {K}_{n,n}\), i.e., spanning subgraphs induced by a collection of edges which have no vertex in common (if \(n=0\) we simply let \(\mathcal {F}_{n,n} = \left\{ \emptyset \right\} \)). Feasible solutions are in correspondence with permutations \(\sigma \) over [n], letting

When \(n \ne m\), e.g. \(n \le m\), the same correspondence holds with the set of injective maps \(\sigma :[n] \rightarrow [m]\). Therefore, given a weight w on \(\mathcal {K}_{n,m}\), the cost of the assignment problem is

In the geometric case, i.e., on the weighted graph \(\mathcal {K}(\textbf{x}, \textbf{y})\) with \(\textbf{x}= (x_i)_{i=1}^n\), \(\textbf{y}=(y_j)_{j=1}^m \subseteq \Omega \) and \(w\left( (1,i), (2,j) \right) =\textsf{d}(x_i, y_{j} )^p\), this expression becomes

If \(n>m\), then one simply exchanges the roles of n and m.
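For very small instances the geometric assignment cost can be evaluated by enumerating all injective maps. The sketch below is only meant to make the definition concrete: the name `matching_cost` is ours, points are assumed to be tuples of floats in Euclidean space, and the enumeration is factorial in the number of points, so this is not a practical algorithm.

```python
from itertools import permutations
from math import dist


def matching_cost(xs, ys, p=1.0):
    """Minimum over injective maps sigma of sum_i |x_i - y_sigma(i)|^p.

    If xs is the longer family, the roles of xs and ys are exchanged,
    as in the text. Empty families give cost 0.
    """
    if len(xs) > len(ys):
        xs, ys = ys, xs
    n, m = len(xs), len(ys)
    return min(
        sum(dist(xs[i], ys[s[i]]) ** p for i in range(n))
        # permutations(range(m), n) enumerates the injective maps [n] -> [m]
        for s in permutations(range(m), n)
    )
```

For example, with `xs = [(0, 0), (1, 0)]`, `ys = [(0, 1), (1, 1)]` and `p = 2`, the vertical matching is optimal. In practice one would use a polynomial-time method such as the Hungarian algorithm instead of enumeration.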

Remark 3.4

If \(n=m\), Birkhoff’s theorem ensures equivalence between the bipartite matching problem and the optimal transport between the associated empirical measures \(\mu ^\textbf{x}= \sum _{i=1}^n \delta _{x_i}\), \(\mu ^\textbf{y}= \sum _{j=1}^n \delta _{y_j}\), i.e.,

Therefore, using the triangle inequality (2.18), we can bound from above as follows:

for every probability measure \(\lambda \) on \(\mathbb {R}^d\).

3.3.2 Travelling salesperson problem

The travelling salesperson problem (TSP) is usually defined on a general graph by prescribing as feasible solutions the cycles visiting each vertex exactly once (also called Hamiltonian cycles). In the complete bipartite case \(\mathcal {K}_{n,n}\), such cycles exist for every \(n \ge 2\), and assumptions A1, A2 and A3 are also clearly satisfied (letting \(\mathcal {F}_{n,n} = \left\{ \emptyset \right\} \) if \(n\in \left\{ 0,1 \right\} \)). Similarly as in the case of the assignment problem, feasible solutions are in this case in correspondence with pairs of permutations \(\sigma \), \(\tau \) over [n], letting

where we conventionally let \(\tau (n+1) = \tau (1)\) (we will always use summation \({\text {mod}} n\) in such cases). In words, \(\sigma \) and \(\tau \) prescribe the order at which the vertices are visited by the cycle. When \(n \ne m\), e.g. \(n \le m\), the same correspondence holds with injective maps \(\sigma \), \(\tau \) from [n] into [m].

Therefore, given a weight w on \(\mathcal {K}_{n,m}\), the cost of the TSP reads

In the geometric case, i.e., on the weighted graph \(\mathcal {K}(\textbf{x}, \textbf{y})\) with \(\textbf{x}= (x_i)_{i=1}^n\), \(\textbf{y}=(y_j)_{j=1}^m \subseteq \Omega \), this becomes

If \(n>m\), then one simply exchanges the roles of n and m.
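The description of bipartite tours through pairs of permutations can be turned into a brute-force evaluation for tiny instances. This is a hedged sketch: the name `bipartite_tsp_cost` is ours, we only treat the case \(n=m\), and we assume the convention that \(x_{\sigma (i)}\) is joined to \(y_{\tau (i)}\) and to \(y_{\tau (i+1)}\), with indices taken mod n.

```python
from itertools import permutations
from math import dist, inf


def bipartite_tsp_cost(xs, ys, p=1.0):
    """Brute-force cost of the bipartite TSP on K(xs, ys) with |xs| = |ys| = n.

    The tour visits y_tau(1), x_sigma(1), y_tau(2), x_sigma(2), ... and closes
    up at y_tau(1). For n < 2 the only feasible solution is the empty graph.
    """
    n = len(xs)
    if n != len(ys):
        raise ValueError("this sketch only treats the case n = m")
    if n < 2:
        return 0.0
    best = inf
    for sigma in permutations(range(n)):
        for tau in permutations(range(n)):
            cost = sum(
                dist(xs[sigma[i]], ys[tau[i]]) ** p
                + dist(xs[sigma[i]], ys[tau[(i + 1) % n]]) ** p
                for i in range(n)
            )
            best = min(best, cost)
    return best
```

When the two families occupy alternating corners of the unit square, the only tour uses the four sides, so the cost is 4 for every p. The double enumeration is redundant (each tour is counted several times) and is kept only for transparency.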

In the non-bipartite version of the TSP, i.e., on \(\mathcal {K}_n\), feasible solutions are in correspondence with permutations \(\sigma \) over [n], letting

In the geometric case \(\textbf{x}= (x_i)_{i=1}^n \subseteq \Omega \), it becomes

3.3.3 Connected \(\kappa \)-factor problem

The TSP can be generalized in many directions. For example, since a cycle is a connected graph such that every vertex has degree 2, i.e., it is 2-regular, we may instead define as feasible solutions \(\kappa \)-regular spanning connected subgraphs, for a fixed \(\kappa \in \mathbb {N}\), \(\kappa \ge 2\). This defines a non-empty set of feasible solutions \(\mathcal {F}_{n,n}\) over \(\mathcal {K}_{n,n}\) if \(n \ge \kappa \) (otherwise we let \(\mathcal {F}_{n,n} = \left\{ \emptyset \right\} \)) and assumptions A1, A2 and A3 are easily seen to be satisfied. We refer to this problem as the (minimum weight) connected \(\kappa \)-factor problem. A simpler variant is to require that feasible solutions are \(\kappa \)-regular but not necessarily connected: this is simply known as the (minimum weight) \(\kappa \)-factor problem. Let us notice that, for \(\kappa =1\), this reduces to the assignment problem.

Back to the connected \(\kappa \)-factor problem, a simple fact worth noticing, that we will use below, is that any connected \(\kappa \)-regular bipartite graph G is 2-connected, i.e., it remains connected even after removing a single edge. Assume that \(V_G = X \cup Y\), with \(X \cap Y = \emptyset \) and by contradiction let \(x\in X\), \(y \in Y\) be such that \(\left\{ x,y \right\} \in E_G\) and the subgraph \(G' \subseteq G\) with edge set \(E_{G'} = E_G {\setminus } \left\{ x,y \right\} \) is not connected: there are two disjoint subgraphs \(G_1'\), \(G_2'\) with \(x \in V_{G_1'}\), \(y \in V_{G_2'}\) with \(G' = G_1' \cup G_2'\). All the vertices in \(G_1'\) have degree \(\kappa \), except for x, whose degree is \(\kappa -1\). However, if we let \(n_X = |V_{G_1'} \cap X|\), \(n_Y = |V_{G_1'} \cap Y|\), then using the fact that the graph \(G_1'\) is bipartite we can count the number of edges as the sum of the degrees of the vertices in \(V_{G_1'} \cap X\) or equivalently of those in \(V_{G_1'} \cap Y\), which leads to the identity \(\kappa n_X - 1 = \kappa n_Y\), from which \(\kappa (n_X - n_Y ) = 1\), which gives a contradiction.

3.3.4 \(\kappa \)-bounded degree minimum spanning tree

The minimum weight spanning tree (MST) problem is defined by letting feasible solutions be all spanning subgraphs that are trees, i.e., connected and acyclic, whose existence on any given connected graph is guaranteed by standard algorithms. This problem however may not have uniformly bounded degree, thus assumption A3 may not be satisfied. Therefore, we restrict the set of feasible solutions to spanning trees over \(\mathcal {K}_{n,n}\) such that each vertex degree is less than or equal to some fixed \(\kappa \ge 2\) (letting \(\mathcal {F}_{0,0}=\left\{ \emptyset \right\} \)). This problem, known as the \(\kappa \)-bounded degree minimum spanning tree (\(\kappa \)-MST), satisfies assumptions A1, A2 and A3: notice in particular that removing any edge from a Hamiltonian cycle, i.e., a feasible solution for the TSP, gives a spanning tree with degree bounded by 2.

We remark here that the \(\kappa \)-MST problem may be also directly defined over graphs \(\mathcal {K}_{n,m}\), with \(n \ne m\), with a non trivial set of feasible solutions (provided that \(|n-m|\) is not too large). However, also in this case we follow our general convention, so that if \(n \ne m\), the set \(\mathcal {F}_{n,m}\) does not contain spanning trees of \(\mathcal {K}_{n,m}\) but only spanning trees over subgraphs isomorphic to \(\mathcal {K}_{z,z}\) with \(z = \min \left\{ n,m \right\} \).

A simple fact that we will use below is that any \(G \in \mathcal {F}_{n,n}\) contains at least one leaf (i.e., a vertex with degree 1) in \([n]_1\) and one in \([n]_2\). This is because more generally any spanning tree over \(\mathcal {K}_{n,n}\) contains at least one leaf in \([n]_1\) and one in \([n]_2\). Indeed, assume by contradiction that there are no leaves in \([n]_1\). Then, since the tree spans, all the vertices in \([n]_1\) must have degree at least 2 (the graph is connected, hence every vertex has at least degree 1) and since no edges connect pairs of vertices in \([n]_1\), these are all distinct, hence the tree contains at least 2n edges, which contradicts the well-known fact that any tree (not necessarily bipartite) over 2n vertices must have \(2n-1\) edges.

In order to perform our analysis, we introduce two further assumptions that we discuss in the following subsections.

3.4 Local merging

Our analysis relies on a key subadditivity inequality, that ultimately follows by a stability assumption with respect to local merging operations, besides assumptions A1 and A3. Let us give the following general definition.

Definition 3.5

(gluing) Given a graph G and two disjoint subgraphs \(G_1\), \(G_2 \subseteq G\), we say that \(G' \subseteq G\) is obtained by gluing at \(x_1 \in V_{G_1}\), \(x_2 \in V_{G_2}\) if \(V_{G'} = V_{G_1}\cup V_{G_2}\),

and

In words, gluing at \(x_1\), \(x_2\) means that the two subgraphs are joined by (possibly) removing and adding edges connecting \(x_2\) to vertices from the neighborhood of \(x_1\) in \(G_1\), and similarly \(x_1\) to vertices from the neighborhood of \(x_2\) in \(G_2\). In particular, we have that \(\mathcal {N}_{G'}(x) = \mathcal {N}_{G_1}(x)\) for every \(x\in V_{G_1} {\setminus } \left( \mathcal {N}_{G_1}(x_1)\cup \left\{ x_1 \right\} \right) \), and similarly \(\mathcal {N}_{G'}(x) = \mathcal {N}_{G_2}(x)\) for every \(x\in V_{G_2} {\setminus } \left( \mathcal {N}_{G_2}(x_2)\cup \left\{ x_2 \right\} \right) \).

Back to combinatorial optimization problems over bipartite graphs, our assumption is, loosely speaking, that any two (non empty) feasible solutions \(G \in \mathcal {F}_{n,n}\), \(G' \in \mathcal {F}_{n',n'}\), can be glued together yielding a feasible solution \(G'' \in \mathcal {F}_{n+n', n+n'}\). In fact, we also allow adding up to \(\textsf{c}\) edges, but only connecting vertices of G, where \(\textsf{c} \in \mathbb {N}\) is a constant (depending only on the problem \(\textsf{P}\)). Before giving a precise formulation of the assumption, we notice that G and \(G'\) are in general not disjoint: what we mean is that \(G'\) must be suitably “translated”. Precisely, given \(n \in \mathbb {N}\), we introduce the map

defined as

so that G, \(\tau ( G') \subseteq \mathcal {K}_{n+n', n+n'}\) are disjoint.

We consider therefore combinatorial optimization problems \(\textsf{P}\) over bipartite graphs which satisfy the following assumption:

A4 (local merging) there exists \(\textsf{c}_{{\text {A4}}}\ge 0\) such that, for every n, \(n' \in \mathbb {N}\), and \(G\in \mathcal {F}_{n,n}\), \(G'\in \mathcal {F}_{n',n'}\) with both \(G\ne \emptyset \) and \(G' \ne \emptyset \), one can find \(G'' \in \mathcal {F}_{n+n', n+n'}\) obtained by gluing G and \(\tau (G')\) at the vertices (1, 1), \((1,n+1)\) and possibly adding up to \(\textsf{c}_{{\text {A4}}}\) edges from those of \(\mathcal {K}_{n,n}\).

The reason why we also allow up to \(\textsf{c}_{{\text {A4}}}\) additional edges is to include some problems where connectedness may be destroyed by gluing, such as the \(\kappa \)-MST. This should be compared with the merging assumption [6, (A4)], where a bounded number of edges from the whole \(\mathcal {K}_{n+n', n+n'}\) instead is allowed to be added to the union \(G \cup \tau (G')\) (with our notation). Notice however that, in our case, since the extra edges are from \(\mathcal {K}_{n,n}\) it remains true that

which is a key condition that we use below.

All the problems described in the previous section satisfy A4.

Lemma 3.6

The TSP, the connected \(\kappa \)-factor problem (as well as the non connected one) and the \(\kappa \)-MST over complete bipartite graphs satisfy assumption A4.

Proof

Let \(G \in \mathcal {F}_{n,n}\), \(G'\in \mathcal {F}_{n', n'}\) be both non empty. Then (e.g. by assumption A2) \(\deg _{G}(1,1) \ge 1\) but also \(\deg _{\tau (G')}(1, n+1) \ge 1\). The basic idea is to pick \(y \in \mathcal {N}_{G}(1,1)\), \(y' \in \mathcal {N}_{\tau (G')}(1,n+1)\), remove the edges \(\left\{ (1,1), y \right\} \), \(\left\{ (1,n+1), y' \right\} \) and add instead \(\left\{ (1,1), y' \right\} \), \(\left\{ (1,n+1), y \right\} \). This operation does not change the vertex degrees, in particular at (1, 1) and \((1,n+1)\).

For the TSP and more generally the connected \(\kappa \)-factor problem, the resulting graph \(G''\) is connected, because after removing a single edge, both graphs G and \(\tau (G')\) are still connected, and adding the new edges has the effect of connecting the two graphs (hence in this case \(\textsf{c}_{{\text {A4}}}= 0\)).

For the \(\kappa \)-bounded degree MST, we use the fact that the tree \(G \in \mathcal {F}_{n,n}\) must have at least one leaf in the set \([n]_1\) and one in the set \([n]_2\). Therefore, we obtain a connected tree (with degree bounded by \(\kappa \)) if we add also one edge connecting two such leaves (hence in this case \(\textsf{c}_{{\text {A4}}}= 1\)). \(\square \)
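The edge swap used in the proof for the TSP can be tried out on two small cycles. The following Python sketch is an illustration under our own encoding: vertices are pairs \((1,i)\), \((2,j)\), edges are `frozenset` pairs, and all function names are ours. The swap removes \(\left\{ x_1, y \right\} \) and \(\left\{ x_2, y' \right\} \) and adds the crossed edges \(\left\{ x_1, y' \right\} \) and \(\left\{ x_2, y \right\} \).

```python
def edge(x, y):
    return frozenset((x, y))


def glue_by_edge_swap(edges1, edges2, x1, y, x2, y_prime):
    """Glue two disjoint graphs at x1, x2 by exchanging one neighbour of each."""
    if edge(x1, y) not in edges1 or edge(x2, y_prime) not in edges2:
        raise ValueError("y must neighbour x1 in G1 and y_prime must neighbour x2 in G2")
    removed = {edge(x1, y), edge(x2, y_prime)}
    added = {edge(x1, y_prime), edge(x2, y)}
    return ((set(edges1) | set(edges2)) - removed) | added


def degrees(edges):
    deg = {}
    for e in edges:
        for v in e:
            deg[v] = deg.get(v, 0) + 1
    return deg


def is_connected(edges):
    """Connectivity of the graph spanned by the given edges (depth-first search)."""
    adj = {}
    for e in edges:
        a, b = tuple(e)
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    if not adj:
        return True
    start = next(iter(adj))
    seen, stack = {start}, [start]
    while stack:
        for w in adj[stack.pop()] - seen:
            seen.add(w)
            stack.append(w)
    return len(seen) == len(adj)


def four_cycle(offset):
    """The Hamiltonian cycle of K_{2,2} on indices offset+1, offset+2."""
    idx = (offset + 1, offset + 2)
    return {edge((1, i), (2, j)) for i in idx for j in idx}


G = four_cycle(0)          # a tour on K_{2,2}
G_shifted = four_cycle(2)  # a second tour, translated by n = 2
glued = glue_by_edge_swap(G, G_shifted, (1, 1), (2, 1), (1, 3), (2, 3))
```

In this example the glued graph has 8 edges, every vertex keeps degree 2, and the result is connected, i.e., it is a Hamiltonian cycle of \(\mathcal {K}_{4,4}\), whereas the plain union of the two cycles is disconnected.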

3.5 Subadditivity inequality

Using all the assumptions introduced so far, in particular A4, we establish a fundamental subadditivity inequality.

Proposition 3.7

(Approximate subadditivity) Let \(\textsf{P}\) be a combinatorial optimization problem over bipartite graphs satisfying assumptions A1, A2, A3 and A4.

For a metric space \((\Omega ,\textsf{d})\) and a finite partition \(\Omega = \cup _{k=1}^K \Omega _k\), \(K \in \mathbb {N}\),

(i) let \(\textbf{x}^0\), \(\textbf{y}^0 \subseteq \Omega \) be such that \(\min \left\{ |\textbf{x}^0|, |\textbf{y}^0| \right\} \ge \max \left\{ \textsf{c}_{{\text {A2}}}, K \right\} \),

(ii) for every \(k =1, \ldots , K\), let \(\textbf{x}^k\), \(\textbf{y}^k \subseteq \Omega _k\) with \(|\textbf{x}^k| = |\textbf{y}^k| =n_k\), with either \(n_k \ge \textsf{c}_{{\text {A2}}}\) or \(n_k = 0\) (i.e., both families are empty),

(iii) let \(\textbf{z}=(z_k)_{k=1}^K\) with \(z_k \in \Omega _k\), for every \(k=1,\ldots , K\).

Then, the following inequality holds:

The implicit constant depends only upon p, \(\textsf{c}_{{\text {A2}}}\), \(\textsf{c}_{{\text {A3}}}\) and \(\textsf{c}_{{\text {A4}}}\) (in particular not on K).

Remark 3.8

The role played by the points \(\textbf{z}\) is quite marginal, and indeed if \(\textbf{x}^0(\Omega _k) >0\) for every k, then by choosing \(z_k \in \textbf{x}^0_{\Omega _k}\), the term \(\textsf{M}^p(\textbf{z}, \textbf{x}^0)\) vanishes.

Proof

Recalling (3.1), up to replacing \(\textbf{x}^0\), \(\textbf{y}^0\) with subsets \(\textbf{x}'\), \(\textbf{y}'\) with \(|\textbf{x}'| = |\textbf{y}'| = \min \left\{ |\textbf{x}^0|, |\textbf{y}^0| \right\} \), we may also assume that \(|\textbf{x}^0| = |\textbf{y}^0|\). For every \(k=1, \ldots , K\) let \(G_k \subseteq \mathcal {K}(\textbf{x}^k, \textbf{y}^k)\) be a minimizer for \(\textsf{P}\). If \(n_k=0\), then \(G_k =\emptyset \). Otherwise, \(n_k \ge \textsf{c}_{{\text {A2}}}\), so by assumption A2 the graph \(G_k\) is non-empty and, using Markov's inequality, we can choose \(x^k \in \textbf{x}^k\) such that

For the last estimate we used that \(\deg _{G_k}(x^k) \le \textsf{c}_{{\text {A3}}}\).

Similarly, let \(G_0\subseteq \mathcal {K}(\textbf{x}^0, \textbf{y}^0)\) be an (also non-empty) minimizer for \(\mathcal {C}_{\textsf{P}}^p(\textbf{x}^0, \textbf{y}^0)\) and let \(\sigma :\{1, \ldots , K\}\rightarrow \{ 1, \ldots , |\textbf{x}^0|\}\) be an optimal matching between \(\textbf{z}\) and \(\textbf{x}^0\).

We iteratively use assumptions A1 and A4 to define feasible solutions

We begin by letting \(\tilde{G}_0 = G_0\). For \(k =1, \ldots , K\), having already defined \(\tilde{G}_{k-1}\), if \(n_k = 0\), then we simply let \(\tilde{G}_k = \tilde{G}_{k-1}\). Otherwise, we obtain a feasible solution \(\tilde{G}_k\) by gluing \(G_{k}\) with \(\tilde{G}_{k-1}\) at the vertices \(x^k\), \(x_{k}^0\) and adding up to \(\textsf{c}_{{\text {A4}}}\) edges from \(\mathcal {K}(\textbf{x}^k, \textbf{y}^k)\). The fact that we can glue at any such pair of vertices is due to assumption A1: up to isomorphisms, we can assume that \(x^k\) corresponds to the abstract graph vertex (1, 1) and that \(x_{k}^0\) corresponds to \((1, n_k+1)\).

This construction gives the following inequality between the graph weights, if \(n_k \ne 0\):

while if \(n_k = 0\), we simply have \(w(\tilde{G}_k) = w(\tilde{G}_{k-1})\). We bound from above the last two terms in (3.8) as follows: first,

where we used that \(\deg _{x^k}(G_k) \le \textsf{c}_{{\text {A3}}}\). To bound the last term, we notice that at each step of the construction we are locally merging at different points in \(\textbf{x}^0\): since no two such points are adjacent, because the graph is bipartite, using (3.6) by induction yields

which in particular contains at most \(\textsf{c}_{{\text {A3}}}\) elements, since \(G_0\) is feasible. Therefore,

Summing (3.8) over \(k=1,\ldots , K\), we obtain (3.7) because

and, since all the points \(x^0_{\sigma (k)}\) are distinct,

\(\square \)

3.6 Growth/regularity

The last assumption that we introduce for a combinatorial optimization problem \(\textsf{P}\) over bipartite graphs is a general upper bound for the cost when specialized to a geometric graph in the Euclidean cube \((0,1)^d\):

A5 (growth/regularity) There exists \(\textsf{c}_{{\text {A5}}}\ge 0\) such that, for every \(\textbf{x}, \textbf{y}\subseteq (0,1)^d\), we have

$$\begin{aligned} \mathcal {C}_{\textsf{P}}^p(\textbf{x}, \textbf{y}) \le \textsf{c}_{{\text {A5}}}\left( \min \left\{ |\textbf{x}|^{1-\frac{p}{d}}, |\textbf{y}|^{1-\frac{p}{d}} \right\} + \textsf{M}^p(\textbf{x},\textbf{y}) \right) .\end{aligned}$$(3.9)

Remark 3.9

Notice that if \(\Omega \subset (0,1)^d\) then (3.9) applies in particular for \(\textbf{x},\textbf{y}\subseteq \Omega \). By scaling we obtain that for every bounded set \(\Omega \) and every \(\textbf{x},\textbf{y}\subseteq \Omega \),

Using (3.1), we obtain at once that in order to establish that a given problem \(\textsf{P}\) satisfies (3.9) it is enough to consider the case where \(\textbf{x}\), \(\textbf{y}\subseteq (0,1)^d\) have the same number of elements.

Notice that this assumption is of a slightly different nature from the previous ones, as it explicitly refers to the cost for Euclidean realizations of the graph, instead of feasible solutions, and relies as well on the assignment problem. In fact, the constant \(\textsf{c}_{{\text {A5}}}\) depends upon the problem \(\textsf{P}\) but also on the dimension d and the exponent p; since these are fixed throughout our derivations, we do not state this dependence explicitly.

It is well known that quite general arguments, such as the space-filling curve heuristics [46, Chapter 2], lead to an upper bound in terms of \(n^{1-p/d}\) for non-bipartite combinatorial optimization problems over n points in a cube, under very mild assumptions, including those introduced above. Simple examples (e.g. let \(\textbf{x}\) consist of points close to a given vertex of the cube and \(\textbf{y}\) instead be all close to another vertex) show that similar bounds cannot hold for their bipartite counterparts, which explains the second term in the right-hand side of (3.9).
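The corner example can be checked numerically. The sketch below is only an illustration, with parameter values chosen by us: it places \(\textbf{x}\) near one vertex of the cube and \(\textbf{y}\) near the opposite one, and compares the trivial lower bound \(n \cdot (\min \text{distance})^p\), valid for the cost of any perfect matching, with the growth term \(n^{1-p/d}\).

```python
import math
import random

def min_cross_distance(xs, ys):
    """Smallest Euclidean distance between a point of xs and a point of ys."""
    return min(math.dist(x, y) for x in xs for y in ys)

random.seed(1)
d, p, n = 3, 1.0, 200  # illustrative values (assumptions)
# x clustered near the vertex (0,...,0), y clustered near (1,...,1).
xs = [tuple(random.uniform(0.01, 0.1) for _ in range(d)) for _ in range(n)]
ys = [tuple(random.uniform(0.9, 0.99) for _ in range(d)) for _ in range(n)]

# Every edge between xs and ys is at least this long, so any perfect
# matching has p-cost at least n * dmin**p, which is linear in n.
dmin = min_cross_distance(xs, ys)
lower_bound = n * dmin ** p
growth_term = n ** (1 - p / d)
print(lower_bound, growth_term)
```

Since the lower bound grows linearly in n while \(n^{1-p/d}\) is sublinear, no bound of the latter form alone can hold for such configurations.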

To establish assumption A5 in our examples we follow the strategy from [13], where limit results for the random Euclidean bipartite TSP for \(p=d=2\) were first obtained.

Lemma 3.10

The TSP, the connected \(\kappa \)-factor problem (as well as the non-connected one) and the \(\kappa \)-MST problems over complete bipartite graphs satisfy assumption A5 (with a constant \(\textsf{c}_{{\text {A5}}}\) depending on \(\kappa \), p, d only).

Proof

Let us first observe that the cost of the \(\kappa \)-MST problem is always bounded from above by the cost of the minimum weight connected \(\kappa \)-factor problem, since given any connected \(\kappa \)-factor, one can extract from it a spanning tree, whose degree at every vertex is then bounded by \(\kappa \) and whose weight does not exceed that of the factor. Therefore it is sufficient to check that assumption A5 holds with \(\textsf{P}\) being the connected \(\kappa \)-factor problem, for any \(\kappa \ge 2\) (the case \(\kappa =2\) being the TSP).
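The extraction step can be sketched as follows. This is a minimal illustration, assuming a toy connected 3-factor of our own choosing and using breadth-first search (any spanning-tree procedure would do): the resulting tree is a subgraph of the factor, so its degrees are bounded by \(\kappa \) and its weight by that of the factor.

```python
from collections import deque

def spanning_tree(num_vertices, edges):
    """Breadth-first spanning tree of a connected graph given as (u, v) pairs."""
    adj = {v: [] for v in range(num_vertices)}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    seen, tree, queue = {0}, [], deque([0])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in seen:
                seen.add(v)
                tree.append((u, v))
                queue.append(v)
    if len(seen) != num_vertices:
        raise ValueError("graph is not connected")
    return tree

def max_degree(num_vertices, edges):
    """Largest vertex degree in the graph with the given edge list."""
    deg = [0] * num_vertices
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return max(deg)

# Toy connected 3-factor (an assumption): x_i has label i, y_j has label n + j,
# and x_i is joined to y_i, y_{i+1}, y_{i+2}, indices mod n.
n, kappa = 5, 3
factor = [(i, n + (i + l) % n) for i in range(n) for l in range(kappa)]
tree = spanning_tree(2 * n, factor)
print(len(tree), max_degree(2 * n, tree))
```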

For \((\Omega , \textsf{d})\) a general metric space and \(\textbf{x}, \textbf{y}\subseteq \Omega \) we establish first the bound

Combining this with the fact that, when \((\Omega ,\textsf{d})\) is the unit cube \((0,1)^d\) with the Euclidean distance, \(\mathcal {C}_{\textsf{TSP}}(\textbf{x}) \lesssim |\textbf{x}|^{1-p/d}\) (a well-known fact, proved e.g. via space-filling curves), this concludes the proof of (3.9).

Assume without loss of generality that \(|\textbf{x}| = |\textbf{y}| = n \ge \kappa \) and let \(\rho \) be a permutation over [n] that induces an optimal assignment between \(\textbf{x}\) and \(\textbf{y}\). Consider then an optimizer for the TSP over \(\mathcal {K}(\textbf{x})\), which we also identify with a permutation \(\sigma \) over [n]. We then define the feasible solution \(G \in \mathcal {F}_{n,n}\) for the connected \(\kappa \)-factor problem whose edge set is

which generalizes \(E_{\sigma , \tau }\) from (3.5) with \(\tau = \rho \circ \sigma \) in the \(\kappa =2\) case, and as in (3.5) we use the summation \({\text {mod}} n\), i.e., \(i+ \ell = i + \ell - n\) if \(i+\ell >n\). Clearly, any vertex has degree \(\kappa \) and the graph is connected, since \(E_{G} \supset E_{\sigma , \tau }\).

It follows that

Using the triangle inequality for every i and \(\ell \), we bound from above

Summing over i (keeping \(\ell \) fixed) gives

hence, after summing over \(\ell = 0, \ldots , \kappa -1\), we obtain (3.10). \(\square \)
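The construction in the proof can be tested on small instances. The sketch below rests on several assumptions of ours: the edge set is taken to be \(\{x_{\sigma (i)}, y_{\rho (\sigma (i+\ell ))}\}\) for \(i \in [n]\), \(\ell = 0, \ldots , \kappa -1\) (indices mod n), as suggested by the description above; only \(p=1\) is considered; and \(\sigma \), \(\rho \) are arbitrary permutations rather than optimizers, since the triangle-inequality argument does not use optimality. For \(p=1\) the argument gives the explicit bound \(\kappa \) times the assignment cost plus \(\kappa (\kappa -1)/2\) times the tour length.

```python
import math
import random

def kappa_factor_edges(sigma, rho, kappa):
    """Edges (x-index, y-index) = (sigma[i], rho[sigma[i + l]]), indices mod n."""
    n = len(sigma)
    return [(sigma[i], rho[sigma[(i + l) % n]])
            for i in range(n) for l in range(kappa)]

random.seed(2)
n, kappa, d = 7, 3, 3  # illustrative values with n >= kappa
xs = [tuple(random.random() for _ in range(d)) for _ in range(n)]
ys = [tuple(random.random() for _ in range(d)) for _ in range(n)]
sigma = list(range(n))
random.shuffle(sigma)  # a tour on xs (not necessarily optimal)
rho = list(range(n))
random.shuffle(rho)    # an assignment x_i -> y_{rho[i]} (not necessarily optimal)

edges = kappa_factor_edges(sigma, rho, kappa)
weight = sum(math.dist(xs[i], ys[j]) for i, j in edges)
tour = sum(math.dist(xs[sigma[i]], xs[sigma[(i + 1) % n]]) for i in range(n))
matching = sum(math.dist(xs[i], ys[rho[i]]) for i in range(n))
# Triangle inequality, p = 1: each l contributes at most l * tour + matching.
bound = kappa * matching + (kappa * (kappa - 1) // 2) * tour
print(weight, bound)
```

For general \(p\) the same steps apply with an additional constant depending on \(p\) and \(\kappa \), which the sketch does not attempt to track.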

4 Convergence results for Poisson point processes

4.1 Point processes

The aim of this section is to prove the analogue of Theorem 1.1 for Poisson point processes (instead of i.i.d. points).

We define a point process on \(\mathbb {R}^d\) as a random finite family of points \(\mathcal {N}= (X_i)_{i=1}^N \subseteq \mathbb {R}^d\), i.e. an N-tuple of random variables with values in \(\mathbb {R}^d\), where the total number of points N is also random and a.s. finite (if \(N=0\), then \(\mathcal {N}= \emptyset \)). We extend the notation for families of points to point processes (naturally defined for each realization of the random variables): for a process \(\mathcal {N}= \left( X_i \right) _{i=1}^N\), write \(\mu ^\mathcal {N}:= \sum _{i=1}^N \delta _{X_i}\) and, given a Borel \(\Omega \subseteq \mathbb {R}^d\), let \(\mathcal {N}(\Omega ) = \mu ^{\mathcal {N}}(\Omega )\) be the (random) number of variables belonging to \(\Omega \), while \(\mathcal {N}_{\Omega }\) denotes its restriction to \(\Omega \), i.e., the collection of the variables such that \(X_i \in \Omega \) (naturally re-indexed over \(i=1, \ldots , \mathcal {N}(\Omega )\), with the order inherited from the original process). Given two point processes \(\mathcal {N}= (X_i)_{i=1}^N\), \(\mathcal {M}= (Y_j)_{j=1}^M\), their union is \(\mathcal {N}\cup \mathcal {M}= (X_1, \ldots , X_N, Y_1, \ldots , Y_M)\).

Given a finite Borel measure \(\lambda \) on \(\mathbb {R}^d\), a Poisson point process \(\mathcal {N}^\lambda \) with intensity \(\lambda \) can be constructed from a random collection of i.i.d. variables \((X_i)_{i=1}^\infty \) with common law \(\lambda /\lambda (\mathbb {R}^d)\) and, after introducing a further independent Poisson variable \(N^{\lambda }\) with mean \(\lambda (\mathbb {R}^d)\), by considering only the first \(N^{\lambda }\) variables, i.e.,

$$\begin{aligned} \mathcal {N}^\lambda := (X_i)_{i=1}^{N^\lambda }. \end{aligned}$$

A key property of a Poisson point process (with intensity \(\lambda \)) is that, given any countable Borel partition \(\mathbb {R}^d = \cup _{k} \Omega _k\), the variables \((\mathcal {N}^\lambda (\Omega _k))_k\) are independent Poisson variables, each with mean \(\lambda (\Omega _k)\) and, conditionally upon their value, the points in each \(\Omega _k\) are i.i.d. variables with common probability law \(\lambda \llcorner \Omega _k / \lambda (\Omega _k)\). This property can be summarized by stating that the restrictions \((\mathcal {N}^\lambda _{\Omega _k})_{k}\) are independent Poisson point processes, with each \(\mathcal {N}^\lambda _{\Omega _k}\) having intensity given by the restriction \(\lambda \llcorner \Omega _k\).
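The construction above is straightforward to simulate. The following sketch is an illustration under assumptions of ours: the intensity is a multiple of the Lebesgue measure on the unit cube, the partition consists of slabs along the first coordinate, and the function names are hypothetical. The Poisson variable is sampled by counting rate-one exponential arrivals in \([0, \text{mean}]\), which uses only the standard library.

```python
import random

def poisson(mean, rng):
    """Poisson(mean) sample: number of rate-1 exponential arrivals in [0, mean]."""
    count, t = 0, rng.expovariate(1.0)
    while t <= mean:
        count += 1
        t += rng.expovariate(1.0)
    return count

def poisson_process_unit_cube(mean, d, rng):
    """First N of i.i.d. uniform points on the unit cube, N ~ Poisson(mean) independent."""
    n = poisson(mean, rng)
    return [tuple(rng.random() for _ in range(d)) for _ in range(n)]

def restrict(points, k, num_slabs):
    """Restriction to the slab k/num_slabs <= x_1 < (k+1)/num_slabs, order preserved."""
    return [x for x in points if int(x[0] * num_slabs) == k]

rng = random.Random(0)
process = poisson_process_unit_cube(mean=30.0, d=3, rng=rng)
slabs = [restrict(process, k, 4) for k in range(4)]
# The slab counts add up to the total number of points; by the key property
# each count is itself Poisson with mean 30 / 4.
print(len(process), [len(s) for s in slabs])
```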

We will use the well-known thinning operation, which apparently dates back to Rényi [42], to split a Poisson point process \(\mathcal {N}^\lambda \) with intensity \(\lambda \) into two independent Poisson point processes, each containing approximately a given fraction of points: for \(\eta \in [0,1]\), the \(\eta \)-thinning of a Poisson point process \(\mathcal {N}^{\lambda } = (X_i)_{i=1}^{N^\lambda }\) defines the two processes

where \(N^{(1-\eta )\lambda } = \sum _{i=1}^{N^{\lambda }} Z_i\) is defined using a further sequence of i.i.d. Bernoulli random variables \((Z_i)_{i=1}^\infty \) with \(\mathbb {P}(Z_i=1)=1-\eta \) (independent of the variables \((X_i)_i\) and \(N^{\lambda }\)). Clearly, \(\mathcal {N}^{\lambda } = \mathcal {N}^{(1-\eta )\lambda }\cup \mathcal {N}^{\eta \lambda }\), and it is straightforward to prove that the two are independent Poisson point processes with intensities \((1-\eta )\lambda \) and \(\eta \lambda \), respectively.
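Conditionally on a realization of the process, the thinning amounts to an independent coin flip per point. A minimal sketch, with a hypothetical function name and a fixed list of points standing in for the realization (an assumption made to keep the example short):

```python
import random

def eta_thinning(points, eta, rng):
    """Split `points` using i.i.d. Bernoulli marks Z_i with P(Z_i = 1) = 1 - eta.

    Points with Z_i = 1 go to the first list (intensity (1 - eta) * lambda),
    the remaining ones to the second list (intensity eta * lambda).
    """
    kept, removed = [], []
    for x in points:
        if rng.random() < 1.0 - eta:
            kept.append(x)
        else:
            removed.append(x)
    return kept, removed

rng = random.Random(3)
points = [(rng.random(), rng.random(), rng.random()) for _ in range(10000)]
kept, removed = eta_thinning(points, eta=0.25, rng=rng)
# The two parts partition the realization, and about 75% of the points are kept.
print(len(kept), len(removed))
```

The independence of the two resulting processes relies on the total number of points being Poisson distributed, which this sketch does not verify.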

4.2 Statement

We are in a position to state the main result to be proved in this section.

Theorem 4.1

Let \(d \ge 3\), \(p\in [1,d)\) and let \(\textsf{P}= (\mathcal {F}_{n,n})_{n \in \mathbb {N}}\) be a combinatorial optimization problem over complete bipartite graphs such that assumptions A1, A2, A3, A4 and A5 hold. Then, there exists \(\beta _{\textsf{P}} \in (0, \infty )\) (depending on p and d) such that the following holds.

Let \(\Omega \subseteq \mathbb {R}^d\) be a bounded domain with Lipschitz boundary and such that (2.14) holds. Let \(\rho \) be a Hölder continuous probability density on \(\Omega \), uniformly strictly positive and bounded from above. For every \(n\in (0, \infty )\), let \(\mathcal {N}^{n\rho }\), \(\mathcal {M}^{n\rho }\) be independent Poisson point processes with intensity \(n \rho \) on \(\Omega \). Then,

Moreover, if \(\rho \) is the uniform density and \(\Omega \) is a cube or its boundary is \(C^2\), then the limit exists and equals the right-hand side.

After having introduced some general notation and proved some basic facts, we split the proof into four main cases. We deal first with the case of a uniform density on a cube and establish existence of the limit via subadditivity. Then, we consider Hölder densities on a cube and move next to general domains. Finally, we establish existence of the limit for uniform densities on domains with \(C^2\) boundary.

4.3 General facts

Although each case has its distinctive features, the underlying strategy is common and relies on Proposition 3.7 in combination with a preliminary application of the thinning operation. To avoid repetitions and introduce a general notation, we give a description of the construction and show a first lemma which uses the fundamental ideas upon which we elaborate in the next sections.

Let \(\mathcal {N}\), \(\mathcal {M}\) be two independent Poisson point processes on \(\Omega \) with common intensity given by a finite measure \(\lambda \). In our applications, \(\lambda \) is the Lebesgue measure or \(\lambda = n \rho \), but for simplicity we do not specify it here. We apply the \(\eta \)-thinning to \(\mathcal {N}= \mathcal {N}^{1-\eta } \cup \mathcal {N}^{\eta }\), obtaining independent Poisson point processes with respective intensities \((1-\eta )\lambda \), \(\eta \lambda \), and similarly to \(\mathcal {M}= \mathcal {M}^{1-\eta } \cup \mathcal {M}^{\eta }\). Given a finite Borel partition \(\Omega = \bigcup _{k=1}^K \Omega _k\), for each \(k=1, \ldots , K\), we pick a minimizer \(G_k\subseteq \mathcal {K}\left( \mathcal {N}^{1-\eta }_{\Omega _k}, \mathcal {M}^{1-\eta }_{\Omega _k} \right) \) for the problem

Writing

we notice that \(G_k = \emptyset \) if and only if \(Z_k < \textsf{c}_{{\text {A2}}}\) (by Remark 5.5, for \(p>1\) the minimizer \(G_k\) is a.s. unique; for \(p=1\) we can consider a measurable selection).

We define point processes \(\mathcal {U}\), \(\mathcal {V}\) on \(\Omega \) by setting \(\mathcal {U}_{\Omega _k} \subseteq \mathcal {N}^{1-\eta }_{\Omega _k}\), \(\mathcal {V}_{\Omega _k} \subseteq \mathcal {M}^{1-\eta }_{\Omega _k}\), given by all the points, respectively in \(\mathcal {N}^{1-\eta }_{\Omega _k}\) and \(\mathcal {M}^{1-\eta }_{\Omega _k}\), which do not belong to the set of vertices of \(G_k\). In particular, if \(G_k = \emptyset \), then \(\mathcal {U}_{\Omega _k} = \mathcal {N}^{1-\eta }_{\Omega _k}\), \(\mathcal {V}_{\Omega _k} = \mathcal {M}^{1-\eta }_{\Omega _k}\). Notice that by construction the K pairs of processes \(\left( (\mathcal {U}_{\Omega _k}, \mathcal {V}_{\Omega _k}) \right) _{k=1}^K\) are independent, but for any k the two processes \(\mathcal {U}_{\Omega _k}\), \(\mathcal {V}_{\Omega _k}\) are not in general independent. For later use, we prove:

Lemma 4.2

For every \(k=1, \ldots , K\) such that

we have, for every \(q \ge 1\),

Proof

In the event

since \(\eta \in (0,1/2)\), we have that \(Z_k \ge \textsf{c}_{{\text {A2}}}\); hence, by assumption A2, every feasible solution (in particular the optimal solution \(G_k\)) spans a subgraph of \(\mathcal {K}_{ \mathcal {N}^{1-\eta }(\Omega _k), \mathcal {M}^{1-\eta }(\Omega _k)}\) isomorphic to \(\mathcal {K}_{Z_k, Z_k}\), so that

Using (2.26), we have

By the union bound and (2.27) with \(n = (1-\eta ) \lambda (\Omega _k)\), \(\gamma =1/2\), we have

Therefore,