Abstract

We consider \(N\times N\) Hermitian random matrices H consisting of blocks of size \(M\ge N^{6/7}\). The matrix elements are i.i.d. within the blocks, close to a Gaussian in the four moment matching sense, but their distribution varies from block to block to form a block-band structure, with an essential band width M. We show that the entries of the Green’s function \(G(z)=(H-z)^{-1}\) satisfy the local semicircle law with spectral parameter \(z=E+\mathbf {i}\eta \) down to the real axis for any \(\eta \gg N^{-1}\), using a combination of the supersymmetry method inspired by Shcherbina (J Stat Phys 155(3): 466–499, 2014) and the Green’s function comparison strategy. Previous estimates were valid only for \(\eta \gg M^{-1}\). The new estimate also implies that the eigenvectors in the middle of the spectrum are fully delocalized.

1 Introduction

Grassmann integration with supersymmetric (SUSY) methods is ubiquitous in the physics literature of random quantum systems, see e.g. the basic monograph of Efetov [7]. This approach is especially effective for analyzing the Green’s function in the middle of the bulk spectrum with spectral parameter close to the real axis, i.e. precisely in the regime where other methods often fail. The main algebraic strength lies in the fact that Gaussian integrals with Grassmann variables counterbalance the determinants obtained in the partition functions of complex Gaussian integrals. This greatly simplifies algebraic manipulations, as was demonstrated in several papers, see e.g. the proof of the absolutely continuous spectrum on the Bethe lattice by Klein [17] or the bounds on the Lyapunov exponents for random walks in a random environment at the critical energy by Wang [29]. However, in theoretical physics Grassmann integrations are also commonly used as an analytic tool by performing saddle point analysis on the superspace coordinatized by complex and Grassmann variables. Since Grassmann variables lack the concept of size, the rigorous justification of this very appealing idea is notoriously difficult.

Initiated by Spencer (see [26] for a summary) and starting with the paper [4] by Disertori, Pinson and Spencer, only a handful of mathematical papers have succeeded in exploiting this powerful tool in an essentially analytic way. We still lack the mathematical framework of a full-fledged analysis on the superspace that would enable us to translate physics arguments into proofs directly, but a combination of refined algebraic identities from physics (such as the superbosonization formula) and a careful analysis has yielded results that are currently inaccessible with more standard probabilistic methods. In this paper we present such results on random band matrices that surpass a well known limitation of the recently developed probabilistic techniques to prove the local versions of the celebrated Wigner semicircle law. We start by introducing the physical motivation of our model.

The Hamiltonian of quantum systems on a graph with vertex set \(\varGamma \) is a self-adjoint matrix \(H= (h_{ab})_{a,b\in \varGamma }\), \(H=H^*\). The matrix elements \(h_{ab}\) represent the quantum transition rates from vertex a to b. Disordered quantum systems have random matrix elements. We assume they are centered, \(\mathbb {E}h_{ab} =0\), and independent subject to the basic symmetry constraint \(h_{ab} = \bar{h}_{ba}\). The variance \(\sigma _{ab}^2: = \mathbb {E} |h_{ab}|^2\) represents the strength of the transition from a to b and we use a scaling where the norm \(\Vert H\Vert \) is typically of order 1. The simplest case is the mean field model, where \(h_{ab}\) are identically distributed; this is the standard Wigner matrix ensemble [31]. The other prominent example is the Anderson model [2] or random Schrödinger operator, \(H= \varDelta +V\), where the kinetic energy \(\varDelta \) is the (deterministic) graph Laplacian and the potential \(V= ( V_x)_{x\in \varGamma }\) is an on-site multiplication operator with random multipliers. If \(\varGamma \) is a discrete \(\mathsf {d}\)-dimensional torus then only a few matrix elements \(h_{ab}\) are nonzero and they connect nearest neighbor points in the torus, \(\text {dist}(a,b)\le 1\). This is in sharp contrast to the mean field character of the Wigner matrices.

Random band matrices naturally interpolate between the mean field Wigner matrices and the short range Anderson model. They are characterized by a parameter M, called the band width, such that the matrix elements \(h_{ab}\) for \(\text {dist}(a,b)\ge M\) are zero or negligible. If M is comparable with the diameter L of the system then we are in the mean field regime, while \(M\sim 1\) corresponds to the short range model.

The Anderson model exhibits a metal-insulator phase transition: at high disorder the system is in the localized (insulator) regime, while at small disorder it is in the delocalized (metallic) regime, at least in \(\mathsf {d}\ge 3\) dimensions and away from the spectral edges. The localized regime is characterized by exponentially decaying eigenfunctions and off diagonal decay of the Green’s function, while in the complementary regime the eigenfunctions are supported in the whole physical space. In terms of the localization length \(\ell \), the characteristic length scale of the decay, the localized regime corresponds to \(\ell \ll L\), while in the delocalized regime \(\ell \sim L\). Starting from the basic papers [1, 15], the localized regime is well understood, but the delocalized regime is still an open mathematical problem for the \(\mathsf {d}\)-dimensional torus.

Let \(N=L^{\mathsf {d}}\) be the number of vertices in the discrete torus. Since the eigenvectors of the mean field Wigner matrices are always delocalized [13, 14], while the short range models are localized, by varying the parameter M in the random band matrix, one expects a (de)localization phase transition. Indeed, for \(\mathsf {d}=1\) it is conjectured (and supported by non-rigorous supersymmetric calculations [16]) that the system is delocalized for broad bands, \(M\gg N^{1/2}\), and localized for \(M\ll N^{1/2}\). The optimal power 1/2 has not yet been achieved from either side. Localization has been shown for \(M\ll N^{1/8}\) in [23], while delocalization in a certain weak sense for most eigenvectors was proven for \(M\gg N^{4/5}\) in [11]. Interestingly, for a special Gaussian model even the sine kernel behavior of the 2-point correlation function of the characteristic polynomials could be proven down to the optimal band width \(M\gg N^{1/2}\), see [19, 21]. Note that the sine kernel is consistent with delocalization but does not imply it. We remark that our discussion concerns the bulk of the spectrum; the transition at the spectral edge is much better understood. In [25] it was shown with the moment method that the edge spectrum follows the Tracy–Widom distribution, characteristic of the mean field model, for \(M\gg N^{5/6}\), but it yields a different distribution for narrow bands, \(M\ll N^{5/6}\).

Delocalization is closely related to estimates on the diagonal elements of the resolvent \(G(z)=(H-z)^{-1}\) at spectral parameters with small imaginary part \(\eta =\mathsf {Im}z\). Indeed, if \(G_{ii}(E+\mathbf {i}\eta )\) is bounded for all i and all \(E\in {\mathbb R }\), then each \(\ell ^2\)-normalized eigenvector \(\mathbf {u}=(u_i)\) of H is delocalized on scale \(\eta ^{-1}\) in the sense that \(\max _i |u_i|^2 \lesssim \eta \), i.e. \(\mathbf {u}\) is supported on at least \(\eta ^{-1}\) sites. In particular, if \(G_{ii}\) can be controlled down to the scale \(\eta \sim 1/N\), then the system is in the completely delocalized regime.
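
The implication above rests on the spectral decomposition \(\mathsf {Im}\, G_{ii}(E+\mathbf {i}\eta )=\sum _k \eta |u_k(i)|^2/((\lambda _k-E)^2+\eta ^2)\): choosing \(E=\lambda _k\) and keeping only the kth term gives \(|u_k(i)|^2\le \eta \, \mathsf {Im}\, G_{ii}(\lambda _k+\mathbf {i}\eta )\). The following minimal sketch checks this inequality on a toy \(2\times 2\) matrix. The matrix and the function names are illustrative assumptions and do not come from the paper.

```python
import math

# Toy matrix (an illustrative assumption, not from the paper): H = [[0, 1], [1, 0]],
# with eigenvalues -1, +1 and l2-normalized eigenvectors (1, -1)/sqrt(2), (1, 1)/sqrt(2).
EIGENVALUES = [-1.0, 1.0]
EIGENVECTORS = [
    [1 / math.sqrt(2), -1 / math.sqrt(2)],
    [1 / math.sqrt(2), 1 / math.sqrt(2)],
]


def green_diag(i, z):
    """Diagonal resolvent entry G_ii(z) = sum_k |u_k(i)|^2 / (lambda_k - z)."""
    return sum(abs(u[i]) ** 2 / (lam - z)
               for lam, u in zip(EIGENVALUES, EIGENVECTORS))


def delocalization_bound_holds(eta):
    """Check |u_k(i)|^2 <= eta * Im G_ii(lambda_k + i*eta) for every k and i."""
    for lam, u in zip(EIGENVALUES, EIGENVECTORS):
        for i in range(len(u)):
            bound = eta * green_diag(i, complex(lam, eta)).imag
            if abs(u[i]) ** 2 > bound + 1e-12:  # small slack for rounding
                return False
    return True
```

For this toy matrix the bound holds for every \(\eta >0\); in the setting of the paper the nontrivial input is the boundedness of \(G_{ii}\) itself.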

For band matrices with band width M, or even under the more general condition \( \sigma _{ab}^2\le M^{-1}\), the boundedness of \(G_{ii}\) was shown down to scale \(\eta \gg M^{-1}\) in [14] (see also [12]). If \(M\gg N^{1/2}\), it is expected that \(G_{ii}\) remains bounded even down to \(\eta \gg N^{-1}\) which is the typical eigenvalue spacing, the smallest relevant scale in the model. However, the standard approach [12, 14] via the self-consistent equations for the Green’s function does not seem to work for \(\eta \le 1/M\); the fluctuation is hard to control. The more subtle approach using the self-consistent matrix equation in [11] could prove delocalization and the off-diagonal Green’s function profile that are consistent with the conventional quantum diffusion picture, but it was valid only for relatively large \(\eta \), far from \(M^{-1}\). Moment methods, even with a delicate renormalization scheme [24] could not break the barrier \(\eta \sim M^{-1}\) either.

In this paper we attack the problem differently, with supersymmetric (SUSY) techniques. Our main result is that \(G_{ii}(z)\) is bounded, and the local semicircle law holds for any \(\eta \gg N^{-1}\), i.e. down to the optimal scale, if the band width is not too small, \(M\gg N^{6/7}\), but under two technical assumptions. First, we consider a generalization of Wegner’s n-orbital model [22, 30], namely, we assume that the band matrix has a block structure, i.e. it consists of \(M\times M\) blocks and the matrix elements within each block have the same distribution. This assumption is essential to reduce the number of integration variables in the supersymmetric representation, since, roughly speaking, each \(M\times M\) block will be represented by a single supermatrix with 16 complex or Grassmann variables. Second, we assume that the distribution of the matrix elements matches a Gaussian up to four moments in the spirit of [28]. Supersymmetry heavily uses Gaussian integrations; in fact, all mathematically rigorous works on random band matrices with the supersymmetric method assume that the matrix elements are Gaussian, see [4–6, 19–21, 26, 27]. The Green’s function comparison method [14] allows one to compare Green’s functions of two matrix ensembles provided that the distributions match up to four moments and provided that \(G_{ii}\) are bounded. This was an important motivation to reach the optimal scale \(\eta \gg N^{-1}\).

In the next subsections we introduce the model precisely and state our main results. Our supersymmetric analysis was inspired by [20], but our observable, \(G_{ab}\), requires a partly different formalism, in particular we use the singular version of the superbosonization formula [3]. Moreover, our analysis is considerably more involved since we consider relatively narrow bands. In Sect. 1.3, we explain our novelties compared with [20].

1.1 Matrix model

Let \(H_N=(h_{ab})\) be an \(N\times N\) random Hermitian matrix, in which the entries are independent (up to symmetry), centered, complex variables. In this paper, we are concerned with \(H_N\) possessing a block band structure. To define this structure explicitly, we set the additional parameters \(M\equiv M(N)\) and \(W\equiv W(N)\) satisfying

For simplicity, we assume that both M and W are integers. Let \(S=(\mathfrak {s}_{jk})\) be a \(W\times W\) symmetric matrix, which will be chosen as a weighted Laplacian of a connected graph on W vertices. Now, we decompose \(H_N\) into \(W\times W\) blocks of size \(M\times M\) and relabel

where \(j\equiv j(a)\) and \(k\equiv k(b)\) are the spatial indices that describe the location of the block containing \(h_{ab}\) and \(\alpha \equiv \alpha (a)\) and \(\beta \equiv \beta (b)\) are the orbital indices that describe the location of the entry in the block. More specifically, we have

We will call \((j(a),\alpha (a))\) (resp. \((k(b),\beta (b))\)) the spatial-orbital parametrization of a (resp. b). Moreover, we assume

That means, the variance profile of the random matrix \(\sqrt{M}H_N\) is given by

in which each entry represents the common variance of the entries in the corresponding block of \(\sqrt{M}H_N\).
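
The spatial-orbital relabeling and the block variance profile can be sketched as follows. This is only an illustration under stated assumptions: the index convention \(a=(j-1)M+\alpha \) with 1-based indices, and the function names, are choices made here; the variance rule reflects the statement that the blocks of \(\sqrt{M}H_N\) share a common variance given by a \(W\times W\) profile matrix.

```python
def spatial_orbital(a, M):
    """Map a 1-based index a in {1, ..., N} to (j, alpha).

    Assumed convention (hypothetical, for illustration): a = (j - 1) * M + alpha,
    with spatial index j in {1, ..., W} and orbital index alpha in {1, ..., M}.
    """
    j, alpha = divmod(a - 1, M)
    return j + 1, alpha + 1


def entry_variance(a, b, M, profile):
    """Variance of h_ab when sqrt(M) * H has block variance profile `profile`.

    `profile` is a W x W list of lists; all entries of block (j, k) of
    sqrt(M) * H share the variance profile[j-1][k-1], so h_ab has that
    value divided by M.
    """
    j, _ = spatial_orbital(a, M)
    k, _ = spatial_orbital(b, M)
    return profile[j - 1][k - 1] / M
```

For example, with \(M=3\) the indices 1, 2, 3 belong to block 1 and the index 4 is the first orbital of block 2.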

1.2 Assumptions and main results

In the sequel, for some matrix \(A=(a_{ij})\) and some index sets \(\mathsf {I}\) and \(\mathsf {J}\), we introduce the notation \(A^{(\mathsf {I}|\mathsf {J})}\) to denote the submatrix obtained by deleting the ith row and jth column of A for all \(i\in \mathsf {I}\) and \(j\in \mathsf {J}\). We will adopt the abbreviation

In addition, we use \(||A||_{\max }:=\max _{i,j}|a_{ij}|\) to denote the max norm of A. Throughout the paper, we need some assumptions on S.

Assumption 1.1

(On S) Let \(\mathcal {G}=(\mathcal {V},\mathcal {E})\) be a connected simple graph with \(\mathcal {V}=\{1,\ldots , W\}\). Assume that S is a \(W\times W\) symmetric matrix satisfying the following four conditions.

- (i) S is a weighted Laplacian on \(\mathcal {G}\), i.e. for \(i\ne j\), we have \(\mathfrak {s}_{ij}>0\) if \(\{i,j\}\in \mathcal {E}\) and \(\mathfrak {s}_{ij}=0\) if \(\{i,j\}\not \in \mathcal {E}\), and for the diagonal entries, we have

$$\begin{aligned} \mathfrak {s}_{ii}=-\sum _{j:j\ne i}\mathfrak {s}_{ij},\quad \forall \; i=1,\ldots ,W. \end{aligned}$$

- (ii) \(\widetilde{S}\) defined in (1.2) is strictly diagonally dominant, i.e., there exists some constant \(c_0>0\) such that

$$\begin{aligned} 1+2\mathfrak {s}_{ii}>c_0,\quad \forall \; i=1,\ldots , W. \end{aligned}$$

- (iii) For the discrete Green’s functions, we assume that there exist some positive constants C and \(\gamma \) such that

$$\begin{aligned} \max _{i=1,\ldots , W}||(S^{(i)})^{-1}||_{\max }\le CW^{\gamma }. \end{aligned}\tag{1.4}$$

- (iv) There exists a spanning tree \(\mathcal {G}_0=(\mathcal {V},\mathcal {E}_0)\subset \mathcal {G}\), on which the weights are bounded below, i.e. for some constant \(c>0\), we have

$$\begin{aligned} \mathfrak {s}_{ij}\ge c, \quad \forall \; \{i,j\}\in \mathcal {E}_0. \end{aligned}$$

Remark 1.2

From Assumption 1.1 (ii), we easily see that

Later, in Lemma 7.4, we will see that \(||(S^{(i)})^{-1}||_{\max }\le CW^2\) always holds. Hence, we may assume \(\gamma \le 2\).

Example 1.1

Let \(\varDelta \) be the standard discrete Laplacian on the \(\mathsf {d}\)-dimensional torus \([1,\mathfrak {w}]^{\mathsf {d}}\cap \mathbb {Z}^{\mathsf {d}}\), with periodic boundary condition, where \(\mathfrak {w}=W^{1/\mathsf {d}}\). Here by standard we mean the weights on the edges of the box are all 1. Now let \(S=a\varDelta \) for some positive constant \(a<1/(4\mathsf {d})\). It is then easy to check that Assumption 1.1 (i), (ii) and (iv) are satisfied. In addition, if \(\mathsf {d}=1\), it is well known that we can choose \(\gamma =1\) in Assumption 1.1 (iii). For \(\mathsf {d}\ge 3\), one can choose \(\gamma =0\). For \(\mathsf {d}=2\), one can choose \(\gamma =\varepsilon \) for an arbitrarily small constant \(\varepsilon \). See, e.g., [8] for more details.
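
The case \(\mathsf {d}=1\) of this example can be explored numerically. The sketch below builds \(S=a\varDelta \) on a cycle with W vertices and computes \(\max _i||(S^{(i)})^{-1}||_{\max }\), reading \(S^{(i)}\) as S with its ith row and column removed (an assumption about the abbreviation). All function names are hypothetical, and the pure-Python Gauss–Jordan inversion is chosen only to keep the sketch self-contained.

```python
def torus_laplacian_1d(W, a):
    """S = a * Delta on the cycle with W >= 3 vertices: s_{i,i+-1} = a, s_ii = -2a."""
    S = [[0.0] * W for _ in range(W)]
    for i in range(W):
        S[i][(i + 1) % W] = a
        S[i][(i - 1) % W] = a
        S[i][i] = -2 * a
    return S


def delete_row_col(A, i):
    """Submatrix of A with the i-th row and i-th column removed (0-based i)."""
    n = len(A)
    return [[A[r][c] for c in range(n) if c != i] for r in range(n) if r != i]


def inverse(A):
    """Matrix inverse by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    aug = [[float(x) for x in row] + [1.0 if r == c else 0.0 for c in range(n)]
           for r, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [x / p for x in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [x - f * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def max_norm(A):
    """Max norm ||A||_max = max_{i,j} |a_ij|."""
    return max(abs(x) for row in A for x in row)


def green_max_norm(W, a):
    """max_i ||(S^{(i)})^{-1}||_max for S = a * Delta on the W-cycle."""
    S = torus_laplacian_1d(W, a)
    return max(max_norm(inverse(delete_row_col(S, i))) for i in range(W))
```

On the cycle, removing one vertex leaves a path with Dirichlet boundary conditions, whose Green’s function has maximal entry \(W/4\) for even W, so `green_max_norm(W, a)` grows linearly in W, in line with the choice \(\gamma =1\).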

For simplicity, we also introduce the notation

where \(\mathbf {1}_M\) is the M-dimensional vector whose components are all 1 and \(\widetilde{S}\) is the variance matrix in (1.2). It is elementary that

Our assumption on M depends on the constant \(\gamma \) in Assumption 1.1 (iii).

Assumption 1.3

(On M) We assume that there exists a (small) positive constant \(\varepsilon _1\) such that

Remark 1.4

A direct consequence of (1.8) and \(N=MW\) is

In particular, when \(\gamma =1\), one has \(M\gg N^{6/7}\). Actually, through a more involved analysis, (1.8) [or (1.9)] can be further improved. At least for \(\gamma \le 1\), we expect that \(M\gg N^{4/5}\) is enough. However, we will not pursue this direction here.
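
The arithmetic that turns a constraint between M and W into a constraint between M and N can be illustrated as follows. Suppose, purely hypothetically, that a condition of power-law form \(M\ge W^{p}\) holds for some \(p>0\); the exponent p is an illustrative parameter and is not claimed to be the form of (1.8). Then \(W\le M^{1/p}\), so \(N=MW\le M^{(p+1)/p}\), i.e. \(M\ge N^{p/(p+1)}\).

```python
from fractions import Fraction


def bandwidth_exponent(p):
    """Exponent e such that M >= W**p together with N = M * W gives M >= N**e.

    `p` is a hypothetical power-law exponent used only for illustration.
    """
    p = Fraction(p)
    return p / (p + 1)
```

With this bookkeeping, the threshold \(N^{6/7}\) corresponds to \(p=6\) and the threshold \(N^{4/5}\) to \(p=4\).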

Besides Assumption 1.1 on the variance profile of H, we need to impose some additional assumption on the distribution of its entries. To this end, we temporarily employ the notation \(H^g=(h^g_{ab})\) to represent a random block band matrix with Gaussian entries, satisfying (1.1), Assumptions 1.1 and 1.3.

Assumption 1.5

(On distribution) We assume that for each \(a,b\in \{1,\ldots , N\}\), the moments of the entry \(h_{ab}\) match those of \(h_{ab}^g\) up to the 4th order, i.e.

In addition, we assume the distribution of \(h_{ab}\) possesses a subexponential tail, namely, there exist positive constants \(c_1\) and \(c_2\) such that for any \(\tilde{\gamma }>0\),

holds uniformly for all \(a,b=1,\ldots , N\).

The four moment condition (1.10) in the context of random matrices first appeared in [28].

To state our results, we will need the following notion on the comparison of two random sequences, which was introduced in [9, 12].

Definition 1.6

(Stochastic domination) For some possibly N-dependent parameter set \(\mathsf {U}_N\), and two families of random variables \(\mathsf {X}=(\mathsf {X}_N(u): N\in \mathbb {N},u\in \mathsf {U}_{N})\) and \(\mathsf {Y}=(\mathsf {Y}_N(u): N\in \mathbb {N},u\in \mathsf {U}_N)\), we say that \(\mathsf {X}\) is stochastically dominated by \(\mathsf {Y}\), if for all \(\varepsilon '>0\) and \(D>0\) we have

for all sufficiently large \(N\ge N_0(\varepsilon ', D)\). In this case we write \(\mathsf {X}\prec \mathsf {Y}\).
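
A common way to verify stochastic domination is through high moment bounds and Markov’s inequality. Taking the definition in its usual form from [9, 12], a bound of the type \(\mathbb {P}(\mathsf {X}>N^{\varepsilon '}\mathsf {Y})\le N^{-D}\), suppose that \(\mathbb {E}|\mathsf {X}|^{2n}\le N^{C_0}\mathsf {Y}^{2n}\) for a deterministic \(\mathsf {Y}>0\). Markov’s inequality then gives \(\mathbb {P}(|\mathsf {X}|>N^{\varepsilon '}\mathsf {Y})\le N^{C_0-2n\varepsilon '}\), so any \(n\ge (C_0+D)/(2\varepsilon ')\) suffices. The helper names below are hypothetical and the sketch only does this bookkeeping.

```python
import math


def markov_exponent(C0, n, eps):
    """Exponent e in P(|X| > N**eps * Y) <= N**e, given E|X|^(2n) <= N**C0 * Y^(2n)."""
    return C0 - 2 * n * eps


def moment_order_needed(C0, D, eps):
    """Smallest positive integer n with markov_exponent(C0, n, eps) <= -D."""
    return max(1, math.ceil((C0 + D) / (2 * eps)))
```

The smaller \(\varepsilon '\) is, the higher the moment that has to be controlled, which is why moment bounds for every fixed n are needed.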

The set \(\mathsf {U}_N\) is omitted from the notation \(\mathsf {X}\prec \mathsf {Y}\). Whenever we want to emphasize the role of \(\mathsf {U}_N\), we say that \(\mathsf {X}_N(u)\prec \mathsf {Y}_N(u)\) holds for all \(u\in \mathsf {U}_N\). For example, by (1.1) and Assumption 1.5, we have

Note that here \(\mathsf {U}_N=\{u=(a,b): a,b=1,\ldots ,N\}\). In some applications, we also use this notation for random variables without any parameter or with a fixed parameter, i.e. the set of parameters \(\mathsf {U}_N\) plays no role.

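Note that \(\widetilde{S}\) is doubly stochastic. It is known that the empirical eigenvalue distribution of \(H_N\) converges to the semicircle law, whose density function is given by

$$\begin{aligned} \varrho _{sc}(x):=\frac{1}{2\pi }\sqrt{4-x^2}\cdot \mathbf {1}(|x|\le 2), \end{aligned}$$

see [14] for instance. We denote the Green’s function of \(H_N\) by

$$\begin{aligned} G(z)\equiv G_N(z):=(H_N-z)^{-1},\quad z=E+\mathbf {i}\eta ,\quad \eta >0, \end{aligned}$$

and its (a, b) matrix element is \(G_{ab}(z)\). Throughout the paper, we will always use E and \(\eta \) to denote the real and imaginary part of z without further mention. In addition, for simplicity, we suppress the subscript N from the notation of the matrices here and there. The Stieltjes transform of \(\varrho _{sc}(x)\) is

$$\begin{aligned} m_{sc}(z)=\int _{-2}^{2}\frac{\varrho _{sc}(x)}{x-z}\,\mathrm{d}x=\frac{-z+\sqrt{z^2-4}}{2}, \end{aligned}$$

where we chose the branch of the square root with positive imaginary part for \(z\in \mathbb {C}^+\). Note that \(m_{sc}(z)\) is a solution to the following self-consistent equation

$$\begin{aligned} m_{sc}(z)=-\frac{1}{z+m_{sc}(z)}. \end{aligned}$$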

The semicircle law also holds in a local sense, see Theorem 2.3 in [12]. For simplicity, we cite this result with a slight modification adjusted to our assumption.

Proposition 1.7

(Erdős, Knowles, Yau, Yin, [12]) Let H be a random block band matrix satisfying Assumptions 1.1, 1.3 and 1.5. Then, for any fixed small positive constants \(\kappa \) and \(\varepsilon \), we have

Remark 1.8

We remark that Theorem 2.3 in [12] was established under a more general assumption \(\sum _k \sigma _{jk}^2=1\) and \(\sigma _{jk}^2\le C/M\). In particular, the block structure on the variance profile is not needed. In addition, Theorem 2.3 in [12] also covers the edges of the spectrum, which will not be discussed in this paper. We also refer to [14] for a previous result, see Theorem 2.1 therein.
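
The deterministic quantity \(m_{sc}(z)\) entering the local law is easy to evaluate numerically. The sketch below uses the standard closed form \(m_{sc}(z)=(-z+\sqrt{z^2-4})/2\) for the semicircle law on \([-2,2]\), with the square root chosen to have positive imaginary part, and the density \(\varrho _{sc}(x)=\frac{1}{2\pi }\sqrt{(4-x^2)_+}\). The function names are illustrative choices.

```python
import cmath
import math


def m_sc(z):
    """Stieltjes transform of the semicircle law on [-2, 2], for Im z > 0."""
    root = cmath.sqrt(z * z - 4)
    if root.imag < 0:  # pick the branch with positive imaginary part
        root = -root
    return (-z + root) / 2


def rho_sc(x):
    """Semicircle density (1 / (2 pi)) * sqrt((4 - x^2)_+)."""
    return math.sqrt(max(4 - x * x, 0.0)) / (2 * math.pi)


def self_consistent_residual(z):
    """Residual of the quadratic form m^2 + z m + 1 = 0 of the self-consistent equation."""
    m = m_sc(z)
    return abs(m * m + z * m + 1)
```

As \(\eta \downarrow 0\), the value \(\mathsf {Im}\, m_{sc}(E+\mathbf {i}\eta )/\pi \) approaches \(\varrho _{sc}(E)\), which can be checked with the two functions above.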

Our aim in this paper is to extend the local semicircle law to the regime \(\eta \gg N^{-1}\) and replace M with N in (1.15). More specifically, we will work in the following set, defined for arbitrarily small constant \(\kappa >0\) and any sufficiently small positive constant \( \varepsilon _2:=\varepsilon _2(\varepsilon _1)\),

Throughout the paper, we will assume that \(\varepsilon _2\) is much smaller than \(\varepsilon _1\), see (1.8) for the latter. Specifically, there exists some large enough constant C such that \(\varepsilon _2\le \varepsilon _1/C\).

Theorem 1.9

(Local semicircle law) Suppose that H is a random block band matrix satisfying Assumptions 1.1, 1.3 and 1.5. Let \(\kappa \) be an arbitrarily small positive constant and \(\varepsilon _2\) be any sufficiently small positive constant. Then

for all \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\).

Remark 1.10

In fact, (1.17) together with the fact that \(G_{ab}(z)\) and \(m_{sc}(z)\) are Lipschitz functions of z with Lipschitz constant \(\eta ^{-2}\) implies the uniformity of the estimate in z in the following stronger sense

Remark 1.11

The restriction \(|E|\le \sqrt{2}-\kappa \) in (1.16) is technical. We believe the result can be extended to the whole bulk regime of the spectrum, i.e., \(|E|\le 2-\kappa \). The upper bound of \(\eta \) in (1.16) is also technical. However, for \(\eta > M^{-1}N^{\varepsilon _2}\), one can control the Green’s function by (1.15) directly.

Let \(\lambda _1,\ldots ,\lambda _N\) be the eigenvalues of \(H_N\). We denote by \(\mathbf {u}_i:=(u_{i1},\ldots , u_{iN})\) the normalized eigenvector of \(H_N\) corresponding to \(\lambda _i\). From Theorem 1.9, we can also get the following delocalization property for the eigenvectors.

Theorem 1.12

(Complete delocalization) Let H be a random block band matrix satisfying Assumptions 1.1, 1.3 and 1.5. We have

Remark 1.13

We remark that delocalization in a certain weak sense was proven in [11] for an even more general class of random band matrices if \(M\gg N^{4/5}\). However, Theorem 1.12 asserts delocalization for all eigenvectors in a very strong sense (supremum norm), while Proposition 7.1 of [11] stated that most eigenvectors are delocalized in the sense that their substantial support cannot be too small.

1.3 Outline of the proof strategy and novelties

In this section, we briefly outline the strategy for the proof of Theorem 1.9.

The first step, which is the main task of the whole proof, is to establish the following Theorem 1.15, namely, an a priori estimate of the Green’s function in the Gaussian case. For technical reasons, we need the following slight modification of Assumption 1.3 to state the result.

Assumption 1.14

(On M) Let \(\varepsilon _1\) be the small positive constant in Assumption 1.3. We assume

In the regime \(M\ge N(\log N)^{-10}\), we see that (1.17) anyway follows from (1.15) directly.

Theorem 1.15

Assume that H is a Gaussian block band matrix, satisfying Assumptions 1.1 and 1.14. Let n be any fixed positive integer. Let \(\kappa \) be an arbitrarily small positive constant and \(\varepsilon _2\) be any sufficiently small positive constant. There is \(N_0=N_0(n)\), such that for all \(N\ge N_0\) and all \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\), we have

for some positive constant \(C_0\) independent of n and z.

Remark 1.16

A much more delicate analysis can show that the prefactor \(N^{C_0}\) can be improved to some n-dependent constant \(C_n\). We refer to Sect. 12 for further comment on this issue.

Using the definition of stochastic domination in Definition 1.6, a simple Markov inequality shows that (1.21) implies

The proof of Theorem 1.15 is the main task of our paper. We will use the supersymmetry method. We partially rely on the arguments from Shcherbina’s work [20] concerning universality of the local 2-point function and we develop new techniques to treat our observable, the high moment of the entries of G(z), under a more general setting. We will comment on the novelties later in this subsection.

The second step is to generalize Theorem 1.15 from the Gaussian case to more general distributions satisfying Assumption 1.5, via a Green’s function comparison strategy initiated in [14], see Lemma 2.1 below.

The last step is to use Lemma 2.1 and its Corollary 2.2 to prove our main theorems. Using (1.22) above to bound the error term in the self-consistent equation for the Green’s function, we can prove Theorem 1.9 by a continuity argument in z, with the aid of the initial estimate for large \(\eta \) provided in Proposition 1.7. Theorem 1.12 will then easily follow from Theorem 1.9.

The second and the last steps are carried out in Sect. 2. The main body of this paper, Sects. 3–11 is devoted to the proof of Theorem 1.15.

One of the main novelties of this work is to combine the supersymmetry method and the Green’s function comparison strategy to go beyond the Gaussian ensemble, which was so far the only random band matrix ensemble amenable to the supersymmetry method, as mentioned at the beginning. The comparison strategy requires an a priori control on the individual matrix elements of the Green’s function with high probability [see (1.22)]; this is one of our main motivations behind Theorem 1.15.

Although we consider a different observable than [20], many technical aspects of the supersymmetric analysis overlap with [20]. For the convenience of the reader, we now briefly introduce the strategy of [20], and highlight the main novelties of our work.

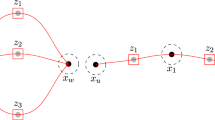

In [20], the author considers the 2-point correlation function of the trace of the resolvent of the Gaussian block band matrix H, with the variance profile \(\widetilde{S}=1+a\varDelta \), under the assumption \(M\sim N\) (note that we use M instead of W in [20] for the size of the blocks). The 2-point correlation function can be expressed in terms of a superintegral of a superfunction \(F(\{\breve{\mathcal {S}}_i\}_{i=1}^W)\) with a collection of \(4\times 4\) supermatrices \(\breve{\mathcal {S}}_i:=\mathcal {Z}^*_i\mathcal {Z}_i\). Here for each \(i\), \(\mathcal {Z}_i=(\varPsi _{1,i},\varPsi _{2,i},\varPhi _{1,i},\varPhi _{2,i})\) is an \(M\times 4\) matrix and \(\mathcal {Z}^*_i\) is its conjugate transpose, where \(\varPsi _{1,i}\) and \(\varPsi _{2,i}\) are Grassmann M-vectors while \(\varPhi _{1,i}\) and \(\varPhi _{2,i}\) are complex M-vectors. Then, by using the superbosonization formula in the nonsingular case (\(M\ge 4\)) from [18], one can transform the superintegral of \(F(\{\breve{\mathcal {S}}_i\}_{i=1}^W)\) to a superintegral of \(F(\{\mathcal {S}_i\}_{i=1}^W)\), where each \(\mathcal {S}_i\) is a supermatrix akin to \(\breve{\mathcal {S}}_i\), but only consists of 16 independent variables (either complex or Grassmann). We will call the integral representation of the observable after using the superbosonization formula the final integral representation. Schematically it has the form

for some functions \(\mathsf {g}(\cdot )\), \(\mathsf {f}_c(\cdot )\) and \(\mathsf {f}_g(\cdot )\), where we used the abbreviation \(\mathcal {S}:=\{\mathcal {S}_i\}_{i=1}^W\), and \(\mathcal {S}_c\) and \(\mathcal {S}_g\) represent the collection of all complex variables and Grassmann variables in \(\mathcal {S}\), respectively. Here, \(\mathsf {g}(\mathcal {S}_c)\) and \(\mathsf {f}_c(\mathcal {S}_c)\) are some complex functions and \(\mathsf {f}_g(\mathcal {S}_g, \mathcal {S}_c)\) will be mostly regarded as a function of the Grassmann variables with complex variables as its parameters. The number of variables (either complex or Grassmann) in the final integral representation then turns out to be of order W, which is much smaller than the original order N. In fact, in [20] it is assumed that \(W=O(1)\) although the author also mentions the possibility to deal with the case \(W\sim N^{\varepsilon }\) for some small positive \(\varepsilon \), see the remark below Theorem 1 therein.

Performing a saddle point analysis for the complex measure \(\exp \{M\mathsf {f}_c(\mathcal {S}_c)\}\), one can restrict the integral to a small vicinity of some saddle point, say, \(\mathcal {S}_c=\mathcal {S}_{c0}\). It turns out that \(\mathsf {f}_c(\mathcal {S}_{c0})=0\) and \(\mathsf {f}_c(\mathcal {S}_c)\) decays quadratically away from \(\mathcal {S}_{c0}\). Consequently, by plugging in the saddle point \(\mathcal {S}_{c0}\), one can estimate \(\mathsf {g}(\mathcal {S}_c)\) by \(\mathsf {g}(\mathcal {S}_{c0})\) directly. However, \(\exp \{M\mathsf {f}_c(\mathcal {S}_c)\}\) and \(\exp \{\mathsf {f}_g(\mathcal {S}_g, \mathcal {S}_c)\}\) have to be expanded around the saddle point. Roughly speaking, in some vicinity of \(\mathcal {S}_{c0}\), one will find that the expansions read

Here \(\mathbf {u}\) is a real vector of dimension O(W), which is essentially a vectorization of \(\sqrt{M}(\mathcal {S}_c-\mathcal {S}_{c0})\); \(\mathsf {e}_c(\mathbf {u})=o(1)\) is some error term; \(\varvec{\rho }\) and \(\varvec{\tau }\) are two Grassmann vectors of dimension O(W). \({\mathbb {H}}\) is a complex matrix [cf. (9.26)], and \(\mathbb {A}\) is a complex matrix with positive-definite Hermitian part [the explicit form of \(\mathbb {A}\) can be read from (8.30)]. Moreover, \(\mathbb {A}\) is closely related to \(\mathbb {H}\) in the sense that the determinant of a certain minor of \(\mathbb {H}\) (after two rows and two columns are removed) is proportional to the square root of the determinant of \( \mathbb {A}\), up to trivial factors. In addition, \(\mathsf {p}(\varvec{\rho },\varvec{\tau },\mathbf {u})\) is the expansion of \(\exp \{\mathsf {f}_g(\mathcal {S}_g, \mathcal {S}_c)-\mathsf {f}_g(\mathcal {S}_g, \mathcal {S}_{c0})\}\), which possesses the form

where \(\mathsf {p}_\ell (\varvec{\rho },\varvec{\tau },\mathbf {u})\) is a polynomial of the components of \(\varvec{\rho }\) and \(\varvec{\tau }\) with degree \(2\ell \), regarding \(\mathbf {u}\) as fixed parameters. Now, keeping the leading order term of \(\mathsf {p}(\varvec{\rho },\varvec{\tau },\mathbf {u})\), and discarding the remainder terms, one can get the final estimate of the integral by taking the Gaussian integral over \(\mathbf {u}, \varvec{\rho }\) and \(\varvec{\tau }\). This completes the summary of [20].
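
The Gaussian integral over the Grassmann vectors can be made concrete on a small example. The sketch below implements a tiny Grassmann algebra and evaluates the Berezin integral of \(\exp \{-\varvec{\rho }'\mathbb {H}\varvec{\tau }\}\) for a small matrix, recovering its determinant. The sign and ordering convention (each pair normalized so that \(\int \mathrm{d}\rho \,\mathrm{d}\tau \,\tau \rho =1\)), the data layout, and all function names are assumptions made for this illustration and need not match the conventions of the paper.

```python
from fractions import Fraction
from math import factorial


def gmul(x, y):
    """Product of Grassmann elements stored as {bitmask: coeff}; generators anticommute."""
    out = {}
    for a, ca in x.items():
        for b, cb in y.items():
            if a & b:  # a repeated generator squares to zero
                continue
            # Sign of reordering: count pairs (i in a, j in b) with i > j.
            swaps, bb = 0, b
            while bb:
                j = (bb & -bb).bit_length() - 1
                swaps += bin(a >> (j + 1)).count("1")
                bb &= bb - 1
            sign = -1 if swaps % 2 else 1
            out[a | b] = out.get(a | b, 0) + sign * ca * cb
    return out


def gexp(x, max_power):
    """exp(x) for a nilpotent even element x, summing x**k / k! up to k = max_power."""
    result = {0: Fraction(1)}
    power = {0: Fraction(1)}
    for k in range(1, max_power + 1):
        power = gmul(power, x)
        for mask, c in power.items():
            result[mask] = result.get(mask, 0) + c / factorial(k)
    return result


def grassmann_gaussian_integral(H):
    """Berezin integral of exp(-rho' H tau) over rho_1, tau_1, ..., rho_n, tau_n."""
    n = len(H)
    x = {}
    for i in range(n):
        for j in range(n):
            # rho_i is generator 2i and tau_j is generator 2j + 1.
            term = gmul({1 << (2 * i): Fraction(1)}, {1 << (2 * j + 1): Fraction(1)})
            for mask, c in term.items():
                x[mask] = x.get(mask, 0) - Fraction(H[i][j]) * c
    top = gexp(x, n).get((1 << (2 * n)) - 1, Fraction(0))
    # Assumed convention: the integral picks the coefficient of prod_i (tau_i rho_i),
    # which is (-1)^n times the coefficient of prod_i (rho_i tau_i).
    return (-1) ** n * top
```

Inserting monomials in \(\varvec{\rho }\) and \(\varvec{\tau }\) into the integrand before integrating produces minors of the matrix instead of the full determinant, which is the mechanism behind Wick’s formula for Grassmann Gaussian integrals.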

Similarly to [20], we also use the superbosonization formula to reduce the number of variables and perform the saddle point analysis on the resulting integral. However, owing to the following three main aspects, our analysis is significantly different from [20].

- (Different observable) Our objective is to compute high moments of a single entry of the Green’s function. By using Wick’s formula (see Proposition 3.1), we express \(\mathbb {E}|G_{jk}|^{2n}\) in terms of a superintegral of some superfunction of the form

$$\begin{aligned} \tilde{F}\left( \{\varPsi _{a,j},\varPsi ^*_{a,j},\varPhi _{a,j}, \varPhi ^*_{a,j}\}_{\begin{array}{c} a=1,2;\\ j=1,\ldots ,W \end{array}}\right) :=\left( \bar{\phi }_{1,q,\beta }\phi _{1,p,\alpha }\bar{\phi }_{2,p,\alpha }\phi _{2,q,\beta }\right) ^nF(\{\breve{\mathcal {S}}_i\}_{i=1}^W) \end{aligned}$$

for some \(p,q\in \{1,\ldots , W\}\) and \(\alpha ,\beta \in \{1,\ldots ,M\}\), where \(\phi _{1,p,\alpha }\) is the \(\alpha \)th coordinate of \(\varPhi _{1,p}\), and the others are defined analogously. Unlike the case in [20], \(\tilde{F}\) is not a function of \(\{\breve{\mathcal {S}}_i\}_{i=1}^W\) only. Hence, using the superbosonization formula to change \(\breve{\mathcal {S}}_i\) to \(\mathcal {S}_i\) directly is not feasible in our case. In order to handle the factor \(\big (\bar{\phi }_{1,q,\beta }\phi _{1,p,\alpha }\bar{\phi }_{2,p,\alpha }\phi _{2,q,\beta }\big )^n\), the main idea is to split off certain rank-one supermatrices from \(\breve{\mathcal {S}}_p\) and \(\breve{\mathcal {S}}_q\) such that this factor can be expressed in terms of the entries of these rank-one supermatrices. Then we use the superbosonization formula not only in the nonsingular case from [18] but also in the singular case from [3] to change and reduce the variables, resulting in the final integral representation of \(\mathbb {E}|G_{jk}|^{2n}\). Though this final integral representation, very schematically, is still of the form (1.23), due to the decomposition of the supermatrices \(\breve{\mathcal {S}}_p\) and \(\breve{\mathcal {S}}_q\), it is considerably more complicated than its counterpart in [20]. In particular, the function \(\mathsf {g}(\mathcal {S}_c)\) differs from its counterpart in [20], and its estimate at the saddle point follows from a different argument.

- (Small band width) In [20], the author considers the case that the band width M is comparable with N, i.e. the number of blocks W is finite. The derivation of the 2-point correlation function is highly nontrivial even with such a large band width. However, our objectives, the local semicircle law and the delocalization of the eigenvectors, can be proved for the case \(M\sim N\) in a similar manner as for the Wigner matrix (\(M=N\)), see [12, 14]. In our work, we consider a much smaller band width in order to go beyond the results in [12, 14], see Assumption 1.3. Several main difficulties stemming from a narrow band width can be heuristically explained as follows.

At first, let us focus on the integral over the small vicinity of the saddle point, in which the exponential functions in the integrand in (1.23) approximately look like (1.24).

We regard the first term in (1.24) as a complex Gaussian measure, of dimension O(W). When \(W\sim 1\), one can discard the error term \(\mathsf {e}_c(\mathbf {u})\) directly and perform the Gaussian integral over \(\mathbf {u}\), due to the fact \(\int \mathrm{d}\mathbf {u}\exp \{-\mathbf {u}'\mathsf {Re}(\mathbb {A})\mathbf {u}\}|\mathsf {e}_c(\mathbf {u})|=o(1)\). However, such an estimate is not allowed when \(W\sim N^{\varepsilon }\) (say), because the normalization of the measure \(\exp \{-\mathbf {u}'\mathsf {Re}(\mathbb {A})\mathbf {u}\}\) might be exponentially larger than that of \(\exp \{-\mathbf {u}'\mathbb {A}\mathbf {u}\}\). In order to handle this issue, in Sect. 8.2, we will do a second deformation of the contours of the variables in \(\mathbf {u}\), following the steepest descent paths exactly, whereby we can transform the complex Gaussian measure to a real one (cf. (8.45)), so that the error term of the integral can be controlled.
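
The size of this obstruction can be seen in a toy case. As an illustrative assumption (this is not the actual matrix \(\mathbb {A}\) of (1.24)), take \(\mathbf {u}\in \mathbb {R}^{d}\) with \(d\sim W\) and \(\mathbb {A}=(1+\mathbf {i}t)I_d\) for a fixed real \(t\ne 0\). Then

$$\begin{aligned} \Big |\int _{\mathbb {R}^d} \mathrm{d}\mathbf {u}\, \exp \{-\mathbf {u}'\mathbb {A}\mathbf {u}\}\Big | =\Big |\frac{\pi }{1+\mathbf {i}t}\Big |^{d/2}=\pi ^{d/2}(1+t^2)^{-d/4}, \qquad \int _{\mathbb {R}^d} \mathrm{d}\mathbf {u}\, \exp \{-\mathbf {u}'\mathsf {Re}(\mathbb {A})\mathbf {u}\}=\pi ^{d/2}. \end{aligned}$$

The ratio of the two normalizations is \((1+t^2)^{d/4}\). It is bounded when \(d\sim 1\), but it is exponentially large in W when \(d\sim W\sim N^{\varepsilon }\), so an o(1) bound on the error term measured against the real-part weight gives no information on its size relative to the complex Gaussian integral.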

Now, we turn to the second term in (1.24). When \(W\sim 1\), there are only finitely many Grassmann variables. Hence, the complex coefficient of each term in the polynomial \(\mathsf {p}(\varvec{\rho }, \varvec{\tau },\mathbf {u})\), which is of order \(M^{-\ell /2}\) for some \(\ell \in \mathbb {N}\) (see (1.25)), actually controls the magnitude of the integral of this term against the Gaussian measure \(\exp \{-\varvec{\rho }'\mathbb {H}\varvec{\tau }\}\). Consequently, in the case \(W\sim 1\), it suffices to keep the leading order term (according to \(M^{-\ell /2}\)), discard the others trivially, and compute the Gaussian integral over \(\varvec{\rho }\) and \(\varvec{\tau }\) explicitly. However, when \(W\sim N^{\varepsilon }\) (say), in light of Wick’s formula (3.2) and the fact that the coefficients are of order \(M^{-\ell /2}\), the order of the integral of each term of \(\mathsf {p}(\varvec{\rho }, \varvec{\tau },\mathbf {u})\) against the Gaussian measure reads \(M^{-\ell /2}\det \mathbb {H}^{(\mathsf {I}|\mathsf {J})}\) for some index sets \(\mathsf {I}\) and \(\mathsf {J}\) and some \(\ell \in \mathbb {N}\). Due to the fact \(W\sim N^{\varepsilon }\), \(\det \mathbb {H}^{(\mathsf {I}|\mathsf {J})}\) is typically exponential in W. Hence, it is much more complicated to determine and compare the orders of the integrals of all \(e^{O(W)}\) terms. In Sect. 9.1, in particular using Assumption 1.1 (iii) and Lemma 9.4, we perform a unified estimate for the integrals of all the terms, rather than simply estimating them by \(M^{-\ell /2}\).

In addition, the analysis of the integral away from the vicinity of the saddle point in our work is also quite different from [20]. Actually, the integral over the complement of the vicinity can be trivially ignored in [20], since each factor in the integrand of (1.23) is of order 1, thus gaining any o(1) factor for the integrand outside the vicinity is enough for the estimate. However, in our case, either \(\exp \{M\mathsf {f}_c(\mathcal {S}_c)\}\) or \(\int \mathrm{d} \mathcal {S}_g \exp \{\mathsf {f}_g(\mathcal {S}_g, \mathcal {S}_c)\}\) is essentially exponential in W. This fact forces us to provide an a priori bound for \(\int \mathrm{d} \mathcal {S}_g \exp \{\mathsf {f}_g(\mathcal {S}_g, \mathcal {S}_c)\}\) in the full domain of \(\mathcal {S}_c\) rather than in the vicinity of the saddle point only. This step will be done in Sect. 6. In addition, in Sect. 7, an analysis of the tail behavior of the measure \(\exp \{M\mathsf {f}_c(\mathcal {S}_c)\}\) will also be performed, in order to control the integral away from the vicinity of the saddle point.

-

(General variance profile \(\widetilde{S}\)) In [20], the author considered the special case \(S=a\varDelta \) with \(a<1/4\mathsf {d}\). We generalize the discussion to more general weighted Laplacians S satisfying Assumption 1.1, which, as a special case, includes the standard Laplacian \(\varDelta \) for any fixed dimension \(\mathsf {d}\).

1.4 Notation and organization

Throughout the paper, we will need some notation. At first, we conventionally use U(r) to denote the unitary group of degree r, and U(1, 1) to denote the group of \(2\times 2\) matrices Q obeying

Furthermore, we denote

Recalling the real part E of z, we will frequently need the following two parameters

Correspondingly, we define the following four matrices

We remark here \(D_\pm \) does not mean “\(D_+\) or \(D_-\)”. In addition, we introduce the matrix

For simplicity, we introduce the following notation for some domains used throughout the paper.

For some \(\ell \times \ell \) Hermitian matrix A, we use \(\lambda _1(A)\le \cdots \le \lambda _\ell (A)\) to represent its ordered eigenvalues. For some possibly N-dependent parameter set \(\mathsf {U}_N\), and two families of complex functions \(\{a_N(u): N\in \mathbb {N}, u\in \mathsf {U}_N\}\) and \(\{b_N(u): N\in \mathbb {N}, u\in \mathsf {U}_N\}\), if there exists a positive constant \(C>1\) such that \(C^{-1}|b_N(u)|\le |a_N(u)|\le C |b_N(u)|\) holds uniformly in N and u, we write \(a_N(u)\sim b_N(u)\). Conventionally, we use \(\{\mathbf {e}_i:i=1,\ldots , \ell \}\) to denote the standard basis of \(\mathbb {R}^{\ell }\), in which the dimension \(\ell \) has been suppressed for simplicity. For some real quantities a and b, we use \(a\wedge b\) and \(a\vee b\) to represent \(\min \{a,b\}\) and \(\max \{a,b\}\), respectively.

Throughout the paper, \(c, c', c_1, c_2, C, C', C_1, C_2\) represent some generic positive constants that are possibly n-dependent and may differ from line to line. In contrast, we use \(C_0\) to denote some generic positive constant independent of n.

The paper is organized as follows. In Sect. 2, we prove Theorem 1.9 and Theorem 1.12, assuming Theorem 1.15. The proof of Theorem 1.15 will be done in Sects. 3–11. More specifically, in Sect. 3, we use the supersymmetric formalism to represent \(\mathbb {E}|G_{ij}|^{2n}\) in terms of a superintegral, in which the integrand can be factorized into several functions; Sect. 4 is devoted to a preliminary analysis of these functions; Sects. 5–10 are responsible for different steps of the saddle point analysis, whose organization will be further clarified at the end of Sect. 5; Sect. 11 is devoted to the final proof of Theorem 1.15, by summing up the discussions in Sects. 3–10. In Sect. 12, we make a comment on how to remove the prefactor \(N^{C_0}\) in (1.21). At the end of the paper, we also collect some frequently used symbols in a table, for the convenience of the reader.

2 Proofs of Theorem 1.9 and Theorem 1.12

Assuming Theorem 1.15, we prove Theorems 1.9 and 1.12 in this section. At first, (1.21) can be generalized to the generally distributed matrix with the four moment matching condition via the Green’s function comparison strategy.

Lemma 2.1

Assume that H is a random block band matrix, satisfying Assumptions 1.1, 1.5 and 1.14. Let \(\kappa \) be an arbitrarily small positive constant and \(\varepsilon _2\) be any sufficiently small positive constant. There is \(N_0=N_0(n)\), such that for all \(N\ge N_0\) and all \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\), we have

for some positive constant \(C_0\) uniform in n and z.

By the definition of stochastic domination in Definition 1.6, we can get the following corollary immediately.

Corollary 2.2

Under the assumptions of Lemma 2.1, we have

In the sequel, at first, we prove Lemma 2.1 from Theorem 1.15 via the Green’s function comparison strategy. Then we prove Theorem 1.9, using Lemma 2.1. Finally, we will show that Theorem 1.12 follows from Theorem 1.9 simply.

2.1 Green’s function comparison: Proof of Lemma 2.1

To show (2.1), we use Lindeberg’s replacement strategy to compare the Green’s functions of the Gaussian case and the general case. That means, we will replace the entries of \(H^g\) by those of H one by one, and compare the Green’s functions step by step. Choose and fix a bijective ordering map

Then we use \(H_k\) to represent the \(N\times N\) random Hermitian matrix whose \((\imath ,\jmath )\)th entry is \(h_{\imath \jmath }\) if \(\varpi (\imath ,\jmath )\le k\), and is \(h^g_{\imath \jmath }\) otherwise. Especially, we have \(H_0=H^g\) and \(H_{\varsigma (N)}=H\). Correspondingly, we define the Green’s functions by

Fix k and denote

Then, we write

where \(H_k^0\) is obtained via replacing \(h_{ab}\) and \(h_{ba}\) by 0 in \(H_k\) (or replacing \(h^g_{ab}\) and \(h^g_{ba}\) by 0 in \(H_{k-1}\)). In addition, we denote

Set \(\varepsilon _3\equiv \varepsilon _3(\gamma ,\varepsilon _1)\) to be a sufficiently small positive constant, satisfying (say)

where \(\gamma \) is from Assumption 1.1 (iii) and \(\varepsilon _1\) is from (1.8). For simplicity, we introduce the following parameters for \(\ell =1,\ldots , \varsigma (N)\) and \(\imath ,\jmath =1,\ldots , N\),

where C is a positive constant. Here we used the notation \(\delta _{\mathsf {I}\mathsf {J}}=1\) if two index sets \(\mathsf {I}\) and \(\mathsf {J}\) are the same and \(\delta _{\mathsf {I}\mathsf {J}}=0\) otherwise. It is easy to see that for \(\eta \le M^{-1}N^{\varepsilon _2}\), we have

by using (1.9). Now, we compare \(G_{k-1}(z)\) and \(G_k(z)\). We will prove the following lemma.

Lemma 2.3

Suppose that the assumptions in Lemma 2.1 hold. Additionally, we assume that for some sufficiently small positive constant \(\varepsilon _3\) satisfying (2.5),

Let \(n\in \mathbb {N}\) be any given integer. Then, if

we also have

for any \(k=1,\ldots , \varsigma (N)\).

Proof of Lemma 2.3

Fix k and omit the argument z from now on, but all formulas are understood to hold for all \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2) \). At first, under the conditions (2.8) and (2.9), we show that

To see this, we use the expansion with (2.4)

which implies that for a sufficiently small \(\varepsilon '>0\) and a sufficiently large constant \(D>0\)

where the first step follows from (1.13), (2.8), Definition 1.6 and the trivial bound \(\eta ^{-1}\) for the Green’s functions. Now, using (2.9), (2.7) and Hölder inequality, we have

In addition, for sufficiently small \(\varepsilon '\), it is easy to check that there exists a constant \(c>0\) such that

for \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\), in light of the fact \(M\gg N^{\frac{4}{5}}\), cf. (1.9). Substituting (2.13) and (2.14) into (2.12) and choosing D to be sufficiently large, we can easily get the bound (2.11).

Now, recall (2.4) again and expand \(G_{k-1}(z)\) and \(G_k(z)\) around \(G_k^0(z)\), namely

We always choose m to be sufficiently large, depending on \(\varepsilon _3\) but independent of N. Then, we can write

where

At first, by taking m sufficiently large, from (2.8) and (1.13), we have the trivial bound

For \(\mathsf {R}_{\ell ,\imath \jmath }\) and \(\mathsf {S}_{\ell ,\imath \jmath }\), we split the discussion into the off-diagonal case and the diagonal case. In the case of \(\imath \ne \jmath \), we keep the first and the last factors of the terms in the expansions of \(((G_k^0 \mathsf {V}_{ab})^{\ell } G_k^0)_{\imath \jmath }\) and \(((G_k^0 \mathsf {W}_{ab})^{\ell } G_k^0)_{\imath \jmath }\), namely, \((G_k^0)_{\imath \jmath '}\) and \((G_k^0)_{\imath '\jmath }\) for some \(\imath ',\jmath '=a\) or b, and bound the factors in between by using (1.13) and (2.8), resulting in the bound

For \(\imath =\jmath \), we only keep the first factor of the terms in the expansions of \(((G_k^0 \mathsf {V}_{ab})^{\ell } G_k^0)_{\imath \imath }\) and \(((G_k^0 \mathsf {W}_{ab})^{\ell } G_k^0)_{\imath \imath }\), and bound the others by using (1.13) and (2.8), resulting in the bound

Observe that, in case \(\imath \ne \jmath \), if \(\{\imath ,\jmath \}\ne \{a,b\}\), at least one of \((G_k^0)_{\imath \jmath '}\) and \((G_k^0)_{\imath '\jmath }\) is an off-diagonal entry of \(G_k^0\) for \(\imath ',\jmath '=a\) or b.
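
The expansions of \(G_{k-1}\) and \(G_k\) around \(G_k^0\) used above are instances of the iterated resolvent identity. As a sketch in generic notation (the symbols A and V are placeholders introduced here, and the sign and normalization conventions of (2.4) and (2.15) may differ), for matrices A and V with A and \(A+V\) invertible and any integer \(m\ge 0\),

$$\begin{aligned} (A+V)^{-1}=\sum _{\ell =0}^{m}(-1)^{\ell }(A^{-1}V)^{\ell }A^{-1}+(-1)^{m+1}(A^{-1}V)^{m+1}(A+V)^{-1}, \end{aligned}$$

which follows by iterating \((A+V)^{-1}=A^{-1}-A^{-1}V(A+V)^{-1}\). With \(A=H_k^0-z\) one has \(A^{-1}=G_k^0\). If V is supported on the (a, b)th and (b, a)th entries, then in the \((\imath ,\jmath )\)th entry of \((G_k^0V)^{\ell }G_k^0\) only the first and the last Green’s function factors carry the indices \(\imath \) and \(\jmath \), while all factors in between are entries of \(G_k^0\) with both indices in \(\{a,b\}\). This is the structure exploited in the bounds for \(\mathsf {R}_{\ell ,\imath \jmath }\) and \(\mathsf {S}_{\ell ,\imath \jmath }\).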

Now we compare the 2nth moment of \(|(G_{k-1})_{\imath \jmath }|\) and \(|(G_k)_{\imath \jmath }|\). At first, we write

By substituting the expansion (2.16) into (2.21), we can write

where \(\mathbf {A}(\imath ,\jmath )\) is the sum of the terms which depend only on \(H_k^0\) and the first four moments of \(h_{ab}\), and \(\mathbf {R}_d(\imath ,\jmath )\) is the sum of all the other terms. We claim that \(\mathbf {R}_d(\imath ,\jmath )\) satisfies the bound

for some positive constant C independent of n. Now, we verify (2.23). According to (2.11) and the fact that the sequence \(\mathsf {R}_{1,\imath \jmath },\ldots , \mathsf {R}_{m,\imath \jmath }, \widetilde{\mathsf {R}}_{m+1,\imath \jmath }\), as well as \(\mathsf {S}_{1,\imath \jmath },\ldots , \mathsf {S}_{m,\imath \jmath }, \widetilde{\mathsf {S}}_{m+1,\imath \jmath }\), decreases by a factor \(N^{\varepsilon _3}/\sqrt{M}\) in magnitude, it is not difficult to check the leading order terms of \(\mathbf {R}_{k-1}(\imath ,\jmath )\) are of the form

with some \(p,q_\ell ,q'_\ell \in \mathbb {N}\) such that

and the leading order terms of \(\mathbf {R}_{k}(\imath ,\jmath )\) possess the same form (with \(\mathsf {R}\) replaced by \(\mathsf {S}\)). Every other term has at least 6 factors of \(h_{ab}\) or \(h_{ab}^g\) or their conjugates, thus their sizes are typically controlled by \(M^{-3}(N\eta )^{-n}\), i.e. they are subleading. Hence, it suffices to bound (2.24).

Now, the five factors of \(h_{ab}\) or \(h_{ba}\) within the \(\mathsf {R}_{\ell ,\imath \jmath }\)’s in (2.24) are independent of the rest and estimated by \(M^{-5/2}\). For the remaining factors from \(G^0_k\), we use (2.11) to bound 2n of them and use (2.8) to bound the rest. In the case that \(\imath \ne \jmath \) and \(\{\imath ,\jmath \}\ne \{a,b\}\), by the discussion above, we must have an off-diagonal entry of \(G_k^0\) in the product \((G_k^0)_{\imath \jmath '} (G_k^0)_{\imath ' \jmath }\) for any choice of \(\imath ',\jmath '=a\) or b. Then, in the bound for \(\mathsf {R}_{\ell ,\imath \jmath }\) in (2.19), for each \((G_k^0)_{\imath \jmath '} (G_k^0)_{\imath '\jmath }\), we keep the off-diagonal entry and bound the other by \(N^{\varepsilon _3}\) from assumption (2.8). Hence, by using (2.19) and (2.25), we see that for some \(\imath _r,\jmath _r\in \{\imath ,\jmath ,a,b\}\) with \(\imath _r\ne \jmath _r, r=1,\ldots , \sum (q_\ell +q'_\ell )\), the following bound holds

where the last step follows from (2.11) and Hölder’s inequality. In case of \(\imath \ne \jmath \) but \(\{\imath ,\jmath \}=\{a,b\}\), we keep an entry in the product \((G_k^0)_{\imath \jmath '} (G_k^0)_{\imath ' \jmath }\) and bound the other by \(N^{\varepsilon _3}\). We remark here in this case the entry being kept can be either diagonal or off-diagonal. Consequently, for some \(\imath _r,\jmath _r\in \{\imath ,\jmath ,a,b\},r=1,\ldots ,\sum (q_\ell +q'_\ell )\), we have the bound

by using (2.11) and Hölder’s inequality again. Hence, we have shown (2.23) in the case of \(\imath \ne \jmath \). For \(\imath =\jmath \), it is analogous to show

by using (2.11), (2.20) and Hölder’s inequality. Hence, we verified (2.23). Consequently, by Assumption 1.5, (2.22) and (2.23) we have

which, together with the assumption (2.9) for \(\mathbb {E}|(G_{k-1})_{\imath \jmath }|^{2n}\) and the definition of \(\widehat{\varTheta }_{\ell ,\imath \jmath }\)’s in (2.6), yields (2.10). This completes the proof of Lemma 2.3. \(\square \)

To show (2.1), we also need the following lemma.

Lemma 2.4

Suppose that the assumptions in Lemma 2.1 hold. Fix the indices \(a,b\in \{1,\ldots , N\}\). Let \(H^0\) be a matrix obtained from H with its (a, b)th entry replaced by 0. Then, if for some \(\eta _0\ge 1/N\) there holds

then we also have

Proof of Lemma 2.4

The proof is almost the same as the discussion on pages 2311–2312 in [10]. For the convenience of the reader, we sketch it below. At first, according to the discussion below (4.28) in [10], for any \(\imath ,\jmath =1,\ldots , N\), we have

Now, we set \(k_1:=\max \{k: 2^k\eta <\eta _0\}\) and \(k_2:=\max \{k: 2^k\eta <1\}\). According to our assumption, both \(k_1\) and \(k_2\) are of the order \(\log N\). Now, we have

where in the second step, we used the fact that the function \(y\mapsto y\mathsf {Im}G_{\ell \ell } (E+\mathbf {i}y)\) is monotonically increasing, the condition (2.29) and the fact \(\eta \le \eta _0\). Hence, we conclude the proof of Lemma 2.4. \(\square \)
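
The monotonicity used in the second step can be checked from the spectral decomposition. Writing \(\lambda _k\) and \(\mathbf {u}_k=(u_{k1},\ldots ,u_{kN})\) for the eigenvalues and normalized eigenvectors of the Hermitian matrix in question (the same notation as in Sect. 2.3), we have for \(y>0\)

$$\begin{aligned} y\,\mathsf {Im}G_{\ell \ell }(E+\mathbf {i}y)=\sum _{k=1}^N|u_{k\ell }|^2\frac{y^2}{(\lambda _k-E)^2+y^2}, \end{aligned}$$

and each summand is nondecreasing in y, since \(y^2/(c^2+y^2)=1-c^2/(c^2+y^2)\) for any real c. In particular, for \(\eta \le \eta _0\) this gives \(\mathsf {Im}G_{\ell \ell }(E+\mathbf {i}\eta )\le (\eta _0/\eta )\,\mathsf {Im}G_{\ell \ell }(E+\mathbf {i}\eta _0)\), which is how a bound at scale \(\eta _0\) is transferred to the smaller scale \(\eta \) at the cost of the factor \(\eta _0/\eta \).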

Now, with Theorem 1.15, Lemmas 2.3 and 2.4, we can prove Lemma 2.1.

Proof of Lemma 2.1

The proof relies on the following bootstrap argument, namely, we show that once

holds for \(\eta \ge \eta _0\) with \(\eta _0\in [N^{-1+\varepsilon _2+\varepsilon _3},M^{-1}N^{\varepsilon _2}]\), it also holds for \(\eta \ge \eta _0N^{-\varepsilon _3}\) for any \(\varepsilon _3\) satisfying (2.5). Assuming (2.30) holds for \(\eta \ge \eta _0\), we see that

Consequently, for \(\eta \ge \eta _0\), we also have

Therefore, (2.29) holds. Then, by Lemma 2.4, we see that (2.8) holds for \(\eta \ge \eta _0N^{-\varepsilon _3}\). Furthermore, by Lemma 2.3 and Theorem 1.15 for \(G_0\), i.e. the Gaussian case, one can get that for any given n,

Note that since (2.32) holds for any given n, we get (2.30) for \(M^{-1}N^{\varepsilon _2}\ge \eta \ge \eta _0N^{-\varepsilon _3}\).

Now we start from \(\eta _0=M^{-1}N^{\varepsilon _2}\). By Proposition 1.7 we see that (2.30) holds for all \(\eta \ge \eta _0\). Then we can use the bootstrap argument above finitely many times to show (2.30) holds for all \(\eta \ge N^{-1+\varepsilon _2}\). Consequently, we have (2.8) for all \(\eta \ge N^{-1+\varepsilon _2}\). Then, Lemma 2.1 follows from Lemma 2.3 and Theorem 1.15 immediately. \(\square \)
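
That finitely many iterations suffice is a short count. After K applications of the bootstrap step, the threshold has moved from \(\eta _0=M^{-1}N^{\varepsilon _2}\) to \(\eta _0N^{-K\varepsilon _3}\), and

$$\begin{aligned} M^{-1}N^{\varepsilon _2}N^{-K\varepsilon _3}\le N^{-1+\varepsilon _2}\quad \Longleftrightarrow \quad K\ge \frac{\log (N/M)}{\varepsilon _3\log N}. \end{aligned}$$

Since \(1\le M\le N\), the right-hand side is at most \(1/\varepsilon _3\). Hence \(K=\lceil 1/\varepsilon _3\rceil \) iterations, a number independent of N, are enough, up to shortening the last step so that the threshold stays in the admissible range \([N^{-1+\varepsilon _2+\varepsilon _3},M^{-1}N^{\varepsilon _2}]\) before it is applied.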

2.2 Proof of Theorem 1.9

Without loss of generality we can assume that \(M\le N(\log N)^{-10}\), otherwise, Proposition 1.7 implies (1.17) immediately. We only need to consider the diagonal entries \(G_{ii}\) below, since the bound for the off-diagonal entries of G(z) is implied by (2.1) directly. For simplicity, we introduce the notation

To bound \(\varLambda _d\), a key technical input is the estimate for the quantity

which is given in the following lemma.

Lemma 2.5

Suppose that H satisfies Assumptions 1.1, 1.5 and 1.14. We have

for all \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\).

The proof of Lemma 2.5 will be postponed. Using Lemma 2.5, we see that, with high probability, (2.33) is a small perturbation of the self-consistent equation of \(m_{sc}\), i.e. (1.14), considering \(\sum _{a}\sigma _{ai}^2=1\). To control \(\varLambda _d\), we use a continuity argument from [12].

We remind here that in the sequel, the parameter set of the stochastic dominance is always \(\mathbf {D}(N,\kappa ,\varepsilon _2)\), without further mention. We need to show that

and first we claim that it suffices to show that

Indeed, if (2.36) were proven, we see that with high probability either \(\varLambda _d>N^{-\frac{\varepsilon _2}{4}}\) or \(\varLambda _d\prec (N\eta )^{-\frac{1}{2}}\le N^{-\frac{\varepsilon _2}{2}}\) for \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\). That means, there is a gap in the possible range of \(\varLambda _d\). Now, choosing \(\varepsilon \) in (1.15) to be sufficiently small, we are able to get for \(\eta =M^{-1}N^{\varepsilon _2}\),

By the fact that \(\varLambda _d\) is continuous in z, we see that with high probability, \(\varLambda _d\) can only stay in one side of the range, namely, (2.35) holds. The rigorous details of this argument involve considering a fine discrete grid of the z-parameter and using that G(z) is Lipschitz continuous (albeit with a large Lipschitz constant \(1/\eta \)). The details are found in Section 5.3 of [12].

Hence, what remains is to verify (2.36). The proof of (2.36) is almost the same as that for Lemma 3.5 in [14]. For the convenience of the reader, we sketch it below without reproducing the details. We set

We also denote  .

By the assumption \(\varLambda _d\le N^{-\frac{\varepsilon _2}{4}}\), we have


Now we rewrite (2.33) as

By using (2.34), Lemma 5.1 in [14], and the assumption \(\varLambda _d\le N^{-\frac{\varepsilon _2}{4}}\), we can show that

One can refer to the derivation of (5.14) in [14] for more details. Averaging over i for (2.39) and (2.40) leads to

where

Plugging (2.38) and (2.34) into (2.42) yields

Using (2.43), the fact \(|\bar{m}(z)-m_{sc}(z)|\le \varLambda _d\le N^{-\frac{\varepsilon _2}{4}}\), and Lemma 5.2 in [14], to (2.41), we have

where in the first step we have used the fact that \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\) is away from the edges of the semicircle law. Now, combining (2.39), (2.40) and (2.41) yields

We just take the above identity as the definition of \(\mathsf {w}_i\). Analogously, we set  . Then (2.42) and (2.45) imply


where the second step follows from the fact \(|z+m_{sc}(z)|\ge 1\) in \(\mathbf {D}(N,\kappa ,\varepsilon _2)\) (see (5.1) in [14] for instance), (2.43) and (2.44), and in the last step we used (2.44) again.

Now, using the fact \(m_{sc}^2(z)=(m_{sc}(z)+z)^{-2}\) (see (1.14)), we rewrite (2.45) in terms of the matrix \(\mathcal {T}\) introduced in (1.6) as  . Consequently, we have


Then for \(z\in \mathbf {D}(N,\kappa ,\varepsilon _2)\), using (1.7) and Proposition A.2 (ii) in [12] (with \(\delta _-=1\) and \(\theta >c\)), we can get

Plugging (2.48) and (2.46) into (2.47) yields

where the second step follows from (2.34). Then (2.38) further implies that  , which together with (2.44) and (2.34) also implies


Hence

Therefore, we completed the proof of Theorem 1.9.

Proof of Lemma 2.5

At first, recalling the notation defined in (1.3), we denote the Green’s function of \(H^{(i)}\) as

with a little abuse of notation. For simplicity, we omit the variable z from the notation below. At first, we recall the elementary identity by Schur’s complement, namely,

where we used the notation \(\mathbf {h}_i^{\langle i\rangle }\) to denote the ith column of H, with the ith component deleted. Now, we use the identity for \(a,b\ne i\) (see Lemma 4.5 in [12] for instance),

By using (1.11) and the large deviation estimate for the quadratic form (see Theorem C.1 of [12] for instance), we have

which implies that

where we have used the fact that \(\sum _{a}\sigma _{ai}^2=1\) in the first inequality above. Plugging (1.22) and (2.52) into (2.50) and using Corollary 2.2 we obtain

which implies

In addition, (1.22), (2.50) and (2.53) lead to the fact that

Now, using (2.33), (2.49), (2.51) and (2.54), we can see that

Therefore, we completed the proof of Lemma 2.5. \(\square \)

2.3 Proof of Theorem 1.12

With Theorem 1.9, we can prove Theorem 1.12 routinely. At first, according to the uniform bound (1.18), we have

which implies that

due to the fact that \(m_{sc}(z)\sim 1\). Recalling the normalized eigenvector \(\mathbf {u}_i=(u_{i1},\ldots , u_{iN})\) corresponding to \(\lambda _i\), and using the spectral decomposition, we have

For any \(|\lambda _i|\le \sqrt{2}-\kappa \), we set \(E=\lambda _i\) on the r.h.s. of (2.57) and use (2.56) to bound the l.h.s. of it. Then we obtain \({||\mathbf {u}_{i}||^2_\infty }/{\eta }\prec 1\). Choosing \(\eta =N^{-1+\varepsilon _2}\) above and using the fact that \(\varepsilon _2\) can be arbitrarily small, we can get (1.19). Hence, we completed the proof of Theorem 1.12.
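
The spectral decomposition step can be written out as follows. This is a sketch of the standard argument, and the exact form of (2.57) may differ. For \(z=E+\mathbf {i}\eta \) with \(\eta >0\) and any index j,

$$\begin{aligned} \mathsf {Im}G_{jj}(E+\mathbf {i}\eta )=\sum _{k=1}^N\frac{\eta \,|u_{kj}|^2}{(\lambda _k-E)^2+\eta ^2}\ge \frac{|u_{ij}|^2}{\eta }\qquad \text {at } E=\lambda _i, \end{aligned}$$

since all summands are nonnegative and the term \(k=i\) equals \(|u_{ij}|^2/\eta \) when \(E=\lambda _i\). Taking the maximum over j gives \(||\mathbf {u}_i||_\infty ^2/\eta \le \max _j\mathsf {Im}G_{jj}(\lambda _i+\mathbf {i}\eta )\), and bounding the right-hand side by (2.56) with \(\eta =N^{-1+\varepsilon _2}\) yields \(||\mathbf {u}_i||_\infty ^2\prec N^{-1+\varepsilon _2}\).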

3 Supersymmetric formalism and integral representation for the Green’s function

In this section, we will represent \(\mathbb {E}|G_{ij}(z)|^{2n}\) for the Gaussian case by a superintegral. The final representation is stated in (3.31). We adopt the convention that any real integration variable below ranges over \(\mathbb {R}\), unless specified otherwise.

3.1 Gaussian integrals and superbosonization formulas

Let \(\varvec{\phi }=(\phi _1,\ldots , \phi _k)'\) be a vector of complex components, \(\varvec{\psi }=(\psi _1,\ldots ,\psi _k)'\) be a vector of Grassmann components. In addition, let \(\varvec{\phi }^*\) and \(\varvec{\psi }^*\) be the conjugate transposes of \(\varvec{\phi }\) and \(\varvec{\psi }\), respectively. We recall the following well-known formulas for Gaussian integrals.

Proposition 3.1

(Gaussian integrals or Wick’s formulas)

-

(i)

Let \(\mathrm {A}\) be a \(k\times k\) complex matrix with positive-definite Hermitian part, i.e. \(\mathsf {Re}\mathrm {A}>0\). Then for any \(\ell \in \mathbb {N}\), and \(i_1,\ldots , i_\ell , j_1,\ldots , j_\ell \in \{1,\ldots , k\}\), we have

$$\begin{aligned} \int \prod _{a=1}^k\frac{\mathrm{d}\mathsf {Re}\phi _a \mathrm{d}\mathsf {Im}\phi _a}{\pi }\;\exp \{-\varvec{\phi }^*\mathrm {A}\varvec{\phi }\}\prod _{b=1}^\ell \bar{\phi }_{i_b}\phi _{j_b}=\frac{1}{\det \mathrm {A}} \; \sum _{\sigma \in \mathbb {P}(\ell )} \prod _{b=1}^\ell (\mathrm {A}^{-1})_{j_b,i_{\sigma (b)}}, \end{aligned}$$ (3.1)
where \(\mathbb {P}(\ell )\) is the permutation group of degree \(\ell \).

-

(ii)

Let \(\mathrm {B}\) be any \(k\times k\) matrix. Then for any \(\ell \in \{ 0,\ldots , k\}\), any \(\ell \) distinct integers \(i_1,\ldots , i_\ell \) and another \(\ell \) distinct integers \(j_1,\ldots , j_\ell \in \{1,\ldots , k\}\), we have

$$\begin{aligned} \int \prod _{a=1}^k\mathrm{d}\bar{\psi }_a \mathrm{d}\psi _a\; \exp \{-\varvec{\psi }^*\mathrm {B}\varvec{\psi }\}\prod _{b=1}^\ell \bar{\psi }_{i_b}\psi _{j_b} =(-1)^{\ell +\sum _{\alpha =1}^\ell (i_\alpha +j_\alpha )}\det \mathrm {B}^{(\mathsf {I}|\mathsf {J})}, \end{aligned}$$ (3.2)
where \(\mathsf {I}=\{i_1,\ldots , i_\ell \}\), and \(\mathsf {J}=\{j_1,\ldots , j_\ell \}\).
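
As a sanity check, both formulas can be verified by hand in the simplest case \(k=1\). The scalars a and b below are introduced only for this example, with \(\mathrm {A}=a\), \(\mathsf {Re}\,a>0\), and \(\mathrm {B}=b\); we also assume that \(\mathrm {B}^{(\mathsf {I}|\mathsf {J})}\) denotes the submatrix of \(\mathrm {B}\) with the rows and columns indexed by \(\mathsf {I}\) and \(\mathsf {J}\) removed, and that an empty determinant equals 1. For \(\ell =1\), formula (3.1) predicts \(\frac{1}{a}\cdot a^{-1}=a^{-2}\), and indeed, in polar coordinates \(\phi =re^{\mathbf {i}\theta }\) and for real \(a>0\),

$$\begin{aligned} \int \frac{\mathrm{d}\mathsf {Re}\phi \, \mathrm{d}\mathsf {Im}\phi }{\pi }\; e^{-a|\phi |^2}|\phi |^2=\int _0^\infty 2r^3e^{-ar^2}\,\mathrm{d}r=\int _0^\infty se^{-as}\,\mathrm{d}s=\frac{1}{a^2}, \end{aligned}$$

and the case of complex a with \(\mathsf {Re}\,a>0\) follows by analytic continuation. For the Grassmann integral, nilpotency gives \(\exp \{-b\bar{\psi }\psi \}=1-b\bar{\psi }\psi \). The case \(\ell =0\) of (3.2) reads \(\int \mathrm{d}\bar{\psi }\mathrm{d}\psi \,(1-b\bar{\psi }\psi )=b\), which fixes the normalization \(\int \mathrm{d}\bar{\psi }\mathrm{d}\psi \,\bar{\psi }\psi =-1\). For \(\ell =1\) and \(i_1=j_1=1\) we then get

$$\begin{aligned} \int \mathrm{d}\bar{\psi }\mathrm{d}\psi \;(1-b\bar{\psi }\psi )\,\bar{\psi }\psi =\int \mathrm{d}\bar{\psi }\mathrm{d}\psi \;\bar{\psi }\psi =-1=(-1)^{1+(1+1)}\det \mathrm {B}^{(\{1\}|\{1\})}, \end{aligned}$$

in agreement with the sign factor in (3.2).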

Now, we introduce the superbosonization formula for superintegrals. Let \(\varvec{\chi }=(\chi _{ij})\) be an \(\ell \times r\) matrix with Grassmann entries, \(\mathbf {f}=(f_{ij})\) be an \(\ell \times r\) matrix with complex entries. In addition, we denote their conjugate transposes by \(\varvec{\chi }^*\) and \(\mathbf {f}^*\) respectively. Let F be a function of the entries of the matrix

Let \(\mathcal {A}(\varvec{\chi },\varvec{\chi }^*)\) be the Grassmann algebra generated by \(\chi _{ij}\)’s and \(\bar{\chi }_{ij}\)’s. Then we can regard F as a function defined on a complex vector space, taking values in \(\mathcal {A}(\varvec{\chi },\varvec{\chi }^*)\). Hence, we can and do view \(F(\mathcal {S}(\mathbf {f},\mathbf {f}^*;\varvec{\chi },\varvec{\chi }^*))\) as a polynomial in \(\chi _{ij}\)’s and \(\bar{\chi }_{ij}\)’s, in which the coefficients are functions of \(f_{ij}\)’s and \(\bar{f}_{ij}\)’s. Under this viewpoint, we state the assumption on F as follows.

Assumption 3.2

Suppose that \(F(\mathcal {S}(\mathbf {f},\mathbf {f}^*;\varvec{\chi },\varvec{\chi }^*))\) is a holomorphic function of \(f_{ij}\)’s and \(\bar{f}_{ij}\)’s if they are regarded as independent variables, and F is a Schwartz function of \(\mathsf {Re}f_{ij}\)’s and \(\mathsf {Im}f_{ij}\)’s, by which we mean that all of the coefficients of \(F(\mathcal {S}(\mathbf {f},\mathbf {f}^*;\varvec{\chi },\varvec{\chi }^*))\), as functions of \(f_{ij}\)’s and \(\bar{f}_{ij}\)’s, possess the above properties.

Proposition 3.3

(Superbosonization formula for the nonsingular case, [18]) Suppose that F satisfies Assumption 3.2. For \(\ell \ge r\), we have

where \(\mathbf {x}=(x_{ij})\) is a unitary matrix; \(\mathbf {y}=(y_{ij})\) is a positive-definite Hermitian matrix; \(\varvec{\omega }\) and \(\varvec{\xi }\) are two Grassmann matrices, and all of them are \(r\times r\). Here

and \(\mathrm{d}\hat{\mu }(\cdot )\) is defined by

under the parametrization induced by the eigendecomposition, namely,

Here \(\mathrm{d}\mu (V)\) is the Haar measure on \(\mathring{U}(r)\), and \(\varDelta (\cdot )\) is the Vandermonde determinant. In addition, the integral w.r.t. \(\mathbf {x}\) ranges over U(r), that w.r.t. \(\mathbf {y}\) ranges over the cone of positive-definite matrices.

For the singular case, i.e. \(r>\ell \), we only state the formula for the case of \(r=2\) and \(\ell =1\), which is enough for our purpose. We refer to formula (11) in [3] for the result in a more general setting.

Proposition 3.4

(Superbosonization formula for the singular case, [3]) Suppose that F satisfies Assumption 3.2. If \(r=2\) and \(\ell =1\), we have

where y is a positive variable; \(\mathbf {x}\) is a 2-dimensional unitary matrix; \(\varvec{\omega }=(\omega _1,\omega _2)'\) and \(\varvec{\xi }=(\xi _1,\xi _2)\) are two vectors with Grassmann components. In addition, \(\mathbf {w}\) is a unit vector, which can be parameterized by

Moreover, the differentials are defined as

In addition, the integral w.r.t. \(\mathbf {x}\) ranges over U(2).

3.2 Initial representation

For \(a=1,2\) and \(j=1,\ldots ,W\), we set

For each j and each \(a\), \(\varPhi _{a,j}\) is a vector with complex components, and \(\varPsi _{a,j}\) is a vector with Grassmann components. In addition, we use \(\varPhi ^*_{a,j}\) and \(\varPsi ^*_{a,j}\) to represent the conjugate transposes of \(\varPhi _{a,j}\) and \(\varPsi _{a,j}\) respectively. Analogously, we adopt the notation \(\varPhi ^*_{a}\) and \(\varPsi ^*_{a}\) to represent the conjugate transposes of \(\varPhi _{a}\) and \(\varPsi _{a}\), respectively. We have the following integral representation for the moments of the Green’s function.

Lemma 3.5

For any \(p,q=1,\ldots , W\) and \(\alpha , \beta =1,\ldots , M\), we have

where

Proof

By using Proposition 3.1 (i) with \(\ell =n\) and Proposition 3.1 (ii) with \(\ell =0\), we can get (3.5). \(\square \)

3.3 Averaging over the Gaussian random matrix

Recall the variance profile \(\widetilde{S}\) in (1.2). Now, we take the expectation of the Green’s function, i.e. average over the random matrix. By an elementary Gaussian integral, we get

where \(J:=\text {diag}(1,-1)\) and \(Z:=\text {diag}(z,\bar{z})\), and for each \(j=1,\ldots , W\), the matrices \(\breve{X}_j, \breve{Y}_j, \breve{\varOmega }_j\) and \(\breve{\varXi }_j\) are \(2\times 2\) blocks of a supermatrix, namely,

Remark 3.6

The derivation of (3.6) from (3.5) is quite standard. We refer to the proof of (2.14) in [20] for more details and will not reproduce it here.

3.4 Decomposition of the supermatrices

From now on, we split the discussion into the following three cases

-

(Case 1): Entries in the off-diagonal blocks, i.e. \(p\ne q\),

-

(Case 2): Off-diagonal entries in the diagonal blocks, i.e. \(p=q, \quad \alpha \ne \beta \),

-

(Case 3): Diagonal entries, i.e. \(p=q, \quad \alpha =\beta \).

For each case, we will perform a decomposition for the supermatrix \(\breve{\mathcal {S}}_j\) (\(j=p\) or q). For a vector \(\mathbf {v}\) and some index set \(\mathsf {I}\), we use \(\mathbf {v}^{\langle \mathsf {I}\rangle }\) to denote the subvector obtained by deleting the ith component of \(\mathbf {v}\) for all \(i\in \mathsf {I}\). Then, we adopt the notation

Here, for \(\mathsf {A}=\breve{X}_j, \breve{Y}_j, \breve{\varOmega }_j\) or \(\breve{\varXi }_j\), the notation \(\mathsf {A}^{\langle \mathsf {I}\rangle }\) is defined via replacing \(\varPhi _{a,j}, \varPsi _{a,j}, \varPhi _{a,j}^*\) and \(\varPsi _{a,j}^*\) by \(\varPhi _{a,j}^{\langle \mathsf {I}\rangle }, \varPsi _{a,j}^{\langle \mathsf {I}\rangle }, (\varPhi _{a,j}^*)^{\langle \mathsf {I}\rangle }\) and \((\varPsi _{a,j}^*)^{\langle \mathsf {I}\rangle }\), respectively, for \(a=1,2\), in the definition of \(\mathsf {A}\). In addition, the notation \(\mathsf {A}^{[i]}\) is defined via replacing \(\varPhi _{a,j}, \varPsi _{a,j}, \varPhi _{a,j}^*\) and \(\varPsi _{a,j}^*\) by \(\phi _{a,j,i}, \psi _{a,j,i}, \bar{\phi }_{a,j,i}\) and \(\bar{\psi }_{a,j,i}\) respectively, for \(a=1,2\), in the definition of \(\mathsf {A}\). Moreover, for \(\mathsf {A}=\breve{\mathcal {S}}_j, \breve{X}_j, \breve{Y}_j, \breve{\varOmega }_j\) or \(\breve{\varXi }_j\), we will simply abbreviate \(\mathsf {A}^{\langle \{a,b\}\rangle }\) and \(\mathsf {A}^{\langle \{a\}\rangle }\) by \(\mathsf {A}^{\langle a,b\rangle }\) and \(\mathsf {A}^{\langle a\rangle }\), respectively. Note that \(\breve{\mathcal {S}}_j^{[i]}\) is of rank-one.

Recalling the spatial-orbital parametrization for the rows or columns of H, it is easy to see from the block structure that for any \(\alpha ,\alpha '\in \{1,\ldots , M\}\), exchanging the \((j,\alpha )\)th row with the \((j,\alpha ')\)th row and simultaneously exchanging the corresponding columns will not change the distribution of H.
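As a sanity check of this symmetry at the level of second moments only, the sketch below builds a hypothetical variance profile that depends on the block indices alone and verifies that it is unchanged when two indices inside the same block are swapped simultaneously in rows and columns. The values of `W`, `M` and the profile `s` are placeholders, not the profile of the paper; invariance of the full distribution additionally uses that the entries are i.i.d. within each block.

```python
W, M = 3, 4  # hypothetical number of blocks and block size

# Hypothetical symmetric block-level variance profile s[j][k].
s = [[0.50, 0.25, 0.25],
     [0.25, 0.50, 0.25],
     [0.25, 0.25, 0.50]]

def index(j, alpha):
    """Flatten the spatial-orbital pair (j, alpha), both 0-based, into a row index."""
    return j * M + alpha

def variance(a, b):
    """Variance of the (a, b) entry; it depends only on the blocks containing a and b."""
    return s[a // M][b // M]

def swap_within_block(j, alpha, alpha_prime):
    """Permutation of {0, ..., W*M-1} exchanging (j, alpha) and (j, alpha')."""
    perm = list(range(W * M))
    a, b = index(j, alpha), index(j, alpha_prime)
    perm[a], perm[b] = perm[b], perm[a]
    return perm

perm = swap_within_block(1, 0, 2)
n = W * M

# The profile is invariant under simultaneous row and column exchange.
profile_invariant = all(variance(perm[a], perm[b]) == variance(a, b)
                        for a in range(n) for b in range(n))
# Diagonal entries are mapped to diagonal entries, so the Hermitian structure is respected.
diagonal_preserved = all((perm[a] == perm[b]) == (a == b)
                         for a in range(n) for b in range(n))
```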

For Case 1, due to the symmetry mentioned above, we can assume \(\alpha =\beta =1\). Then we extract two rank-one supermatrices from \(\breve{\mathcal {S}}_p\) and \(\breve{\mathcal {S}}_q\) such that the quantities \(\bar{\phi }_{2,p,1}\phi _{1,p,1}\) and \(\bar{\phi }_{1,q,1}\phi _{2,q,1}\) can be expressed in terms of the entries of these supermatrices. More specifically, we decompose the supermatrices

Consequently, we can write

For Case 2, due to symmetry, we can assume that \(\alpha =1, \beta =2\). Then we extract two rank-one supermatrices from \(\breve{\mathcal {S}}_p\), namely,

Consequently, we can write

Finally, for Case 3, due to symmetry, we can assume that \(\alpha =1\). Then we extract only one rank-one supermatrix from \(\breve{\mathcal {S}}_p\), namely,

Consequently, we can write

Since the discussions for all three cases are similar, we will only present the details for Case 1. More specifically, in the remaining part of this section and in Sects. 4 to 10, we will only treat Case 1. In Sect. 11, we will sum up the discussions in the previous sections and explain how to adapt them to Case 2 and Case 3, resulting in a final proof of Theorem 1.15.

3.5 Variable reduction by superbosonization formulae

We will work with Case 1. Recall the decomposition (3.7). We use the superbosonization formulae to reduce the number of variables. We shall treat \(\breve{\mathcal {S}}_k (k\ne p,q)\) and \(\breve{\mathcal {S}}_j^{\langle 1\rangle } (j=p,q)\) on an equal footing and use the formula (3.3) with \(r=2,\ell =M\) for the former and \(r=2,\ell =M-1\) for the latter, while we separate the terms \(\breve{\mathcal {S}}_j^{[1]} (j=p,q)\) and use the formula (3.4). For simplicity, we introduce the notation

Accordingly, we will use \(\widetilde{X}_j, \widetilde{\varOmega }_j, \widetilde{\varXi }_j\) and \(\widetilde{Y}_j\) to denote four blocks of \(\widetilde{\mathcal {S}}_j\). With this notation, we can rewrite (3.6) with \(\alpha =\beta =1\) as

where the first factor \((\cdots )^n\) of the integrand is the observable and all other factors constitute a normalized measure, written in a somewhat complicated form according to the decomposition from Sect. 3.4.

Now, we apply the superbosonization formulae (3.3) and (3.4) to the supermatrices \(\breve{\mathcal {S}}_k (k\ne p,q), \breve{\mathcal {S}}_j^{\langle 1\rangle }\) and \(\breve{\mathcal {S}}_j^{[1]} (j=p,q)\) one by one, to change to the reduced variables as

Here, for \(j=1,\ldots , W, X_j\) is a \(2\times 2\) unitary matrix; \(Y_j\) is a \(2\times 2\) positive-definite matrix; \(\varOmega _j=(\omega _{j,\alpha \beta })\) and \(\varXi _j=(\xi _{j,\alpha \beta })\) are \(2\times 2\) Grassmann matrices. For \(k=p\) or \(q, X_k^{[1]}\) is a \(2\times 2\) unitary matrix; \(y_k^{[1]}\) is a positive variable; \(\varvec{\omega }_{k}^{[1]}=(\omega _{k,1}^{[1]}, \omega _{k,2}^{[1]})'\) is a column vector with Grassmann components; \(\varvec{\xi }_{k}^{[1]}=(\xi _{k,1}^{[1]},\xi _{k,2}^{[1]})\) is a row vector with Grassmann components. In addition, for \(k=p,q\),

Then by using superbosonization formulae, we arrive at the representation

where we used the notation \(\mathbf {y}^{[1]}:=(y_p^{[1]},y_q^{[1]}), \mathbf {w}^{[1]}:=(\mathbf {w}_p^{[1]}, \mathbf {w}_q^{[1]})\). The differentials are defined by

Now we change the variables as \(X_jJ\rightarrow X_j,Y_jJ \rightarrow B_j,\varOmega _jJ\rightarrow \varOmega _j, \varXi _jJ\rightarrow \varXi _j\) and perform the scaling \(X_j\rightarrow -MX_j, B_j\rightarrow MB_j, \varOmega _j\rightarrow \sqrt{M} \varOmega _j\) and \(\varXi _j\rightarrow \sqrt{M}\varXi _j\). Consequently, we can write

where the functions in the integrand are defined as

with

In (3.17), the regions of \(X_j\)’s and \(X_k^{[1]}\)’s are all U(2), and those of \(B_j\)’s are the set of the matrices A satisfying \(AJ>0\). We remind the reader here that if we parametrize the unitary matrix \(X_j\) according to its eigendecomposition, the scaling \(X_j\rightarrow -MX_j\) is equivalent to changing the contour of the eigenvalues of \(X_j\) from the unit circle \(\varSigma \) to \(\frac{1}{M}\varSigma \), up to the orientation. Afterwards, we deformed the contour back to \(\varSigma \) in (3.17). This is possible since the only singularity of the integrand in (3.17) in the variables of the eigenvalues of \(X_j\) is at 0; cf. the matrix \(X_j^{-1}\) in the factor \(\mathcal {P}( \varOmega , \varXi , X, B)\).
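The contour deformation used here is an instance of Cauchy's theorem: for a function whose only singularity is at 0, the integral over the circle \(\frac{1}{M}\varSigma \) equals the integral over \(\varSigma \). The following sketch checks this numerically for a toy integrand \(f(z)=e^{z}/z^{3}\), chosen only for illustration and unrelated to the actual integrand of (3.17); the value of `M` and the number of quadrature nodes are likewise arbitrary.

```python
import cmath
import math

def circle_integral(f, radius, n=4096):
    """Trapezoidal approximation of the counterclockwise integral of f over |z| = radius."""
    total = 0j
    for k in range(n):
        theta = 2 * math.pi * k / n
        z = radius * cmath.exp(1j * theta)
        dz = 1j * z * (2 * math.pi / n)  # dz = i z d(theta)
        total += f(z) * dz
    return total

def f(z):
    """Toy integrand whose only singularity is a pole at z = 0."""
    return cmath.exp(z) / z**3

M = 10  # hypothetical scale factor
small_circle = circle_integral(f, 1.0 / M)  # contour (1/M) * Sigma
unit_circle = circle_integral(f, 1.0)       # contour Sigma

# Residue of f at 0 is 1/2, so both integrals equal 2*pi*i * (1/2) = pi*i.
expected = 1j * math.pi
```

Both contours give the same value because the region between them contains no singularity of the integrand.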

3.6 Parametrization for X, B

Similarly to the discussion in [20], we start with some preliminary parameterization. First, we perform the eigendecomposition

where

Further, we introduce

In particular, we have \(V_1=T_1=I\). Now, we parameterize \(P_1, Q_1, V_j\) and \(T_j\) for all \(j=2,\ldots , W\) as follows

Under the parametrization above, we can express the corresponding differentials as follows.

where

In addition, for simplicity, we do the change of variables

Note that the Berezinian of such a change is 1. After this change, \(\mathcal {P}( \varOmega , \varXi , X,B,\mathbf {y}^{[1]},\mathbf {w}^{[1]})\) turns out to be independent of \(P_1\) and \(Q_1\).

To adapt to the new parametrization, we change the notation

We recall here that K(X) does not depend on \(P_1\) and, likewise, L(B) does not depend on \(Q_1\). Moreover, according to the change (3.27), we have

and

Consequently, using (3.26), from (3.17) we can write

where we introduced the notation

In (3.31), the regions of \(V_j\)’s are all \(\mathring{U}(2)\), and those of \(T_j\)’s are all \(\mathring{U}(1,1)\). Observe that all Grassmann variables are inside the integrand of the integral \(\mathsf {A}(\hat{X}, \hat{B}, V, T)\). Hence, (3.31) separates the saddle point calculation from the observable \(\mathsf {A}(\hat{X}, \hat{B}, V, T)\).

To facilitate the discussions in the remaining part, we introduce some additional terms and notation here. Henceforth, we will employ the notation \((X^{[1]})^{-1}=\{(X^{[1]}_p)^{-1}, (X^{[1]}_q)^{-1}\}\) and \((\mathbf {y}^{[1]})^{-1}=\{(y_p^{[1]})^{-1}, (y_q^{[1]})^{-1}\}\) for the collection of inverse matrices and reciprocals, respectively. For a matrix or a vector A under discussion, we will use the term A-variables to refer to all the variables parametrizing it. For example, \(\hat{X}_j\)-variables means \(x_{j,1}\) and \(x_{j,2}\), and \(\hat{X}\)-variables refer to the collection of all \(\hat{X}_j\)-variables. Analogously, we can define the terms T-variables, \(\mathbf {y}^{[1]}\)-variables, \(\varOmega \)-variables and so on. We use another term A-entries to refer to the non-zero entries of A. Note that \(\hat{X}_j\)-variables are just \(\hat{X}_j\)-entries. However, for \(T_j\), they are different, namely,

Analogously, we will also use the term T-entries to refer to the collection of all \(T_j\)-entries. Then V-entries, \(\mathbf {w}^{[1]}\)-entries, etc. are defined in the same manner. It is easy to check that \(Q_1^{-1}\)-entries are the same as \(Q_1\)-entries, up to a sign, and likewise \(T_j^{-1}\)-entries are the same as \(T_j\)-entries, for all \(j=2,\ldots , W\).

Moreover, to simplify the notation, we make the convention here that we will frequently use a dot to represent all the arguments of a function. That means, for instance, we will write \(\mathcal {P}( \varOmega , \varXi , \hat{X}, \hat{B}, V, T)\) as \(\mathcal {P}(\cdot )\) if there is no confusion. Analogously, we will also use the abbreviation \(\mathcal {Q}(\cdot ), \mathcal {F}(\cdot ), \mathsf {A}(\cdot )\), and so on.

Let \(\mathbf {a}:=\{a_1,\ldots , a_\ell \}\) be a set of variables. We will adopt the notation

to denote the class of all multivariate polynomials \(\mathfrak {p}(\mathbf {a})\) in the arguments \(a_1,\ldots , a_\ell \) such that the following three conditions are satisfied: (i) The total number of the monomials in \(\mathfrak {p}(\mathbf {a})\) is bounded by \(\kappa _1\); (ii) the coefficients of all monomials in \(\mathfrak {p}(\mathbf {a})\) are bounded by \(\kappa _2\) in magnitude; (iii) the power of each \(a_i\) in each monomial is bounded by \(\kappa _3\), for all \(i=1,\ldots , \ell \). For example, \(5b_{j,1}^{-1}+3b_{j,1}t_j^2+1\in \mathfrak {Q}\big (\{b_{j,1}^{-1}, b_{j,1}, t_j\}; 3, 5, 2\big )\). In addition, we define the subset of \(\mathfrak {Q}(\mathbf {a}; \kappa _1, \kappa _2, \kappa _3)\), namely,

consisting of those polynomials in \(\mathfrak {Q}(\mathbf {a}; \kappa _1, \kappa _2, \kappa _3)\) such that the degree is bounded by \(\kappa _3\), i.e. the total degree of each monomial is bounded by \(\kappa _3\). For example \(5b_{j,1}^{-1}+3b_{j,1}t_j^2+1\in \mathfrak {Q}_{\text {deg}}\big (\{b_{j,1}^{-1}, b_{j,1}, t_j\}; 3, 5, 3\big )\).
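The two membership conditions can be made concrete with a small check. The sketch below is illustrative only: it encodes a polynomial as a dictionary from exponent tuples to coefficients, with the arguments \(b_{j,1}^{-1}, b_{j,1}, t_j\) treated as three independent variables as in the examples above, and the function names `in_Q` and `in_Q_deg` are hypothetical.

```python
def in_Q(poly, kappa1, kappa2, kappa3):
    """Check conditions (i)-(iii): number of monomials, coefficient size, power of each argument."""
    if len(poly) > kappa1:
        return False
    if any(abs(coeff) > kappa2 for coeff in poly.values()):
        return False
    return all(power <= kappa3 for exponents in poly for power in exponents)

def in_Q_deg(poly, kappa1, kappa2, kappa3):
    """Membership in the subclass where the total degree of each monomial is bounded by kappa3."""
    return (in_Q(poly, kappa1, kappa2, kappa3)
            and all(sum(exponents) <= kappa3 for exponents in poly))

# Arguments, in order: (b_{j,1}^{-1}, b_{j,1}, t_j).
# Polynomial: 5 * b^{-1} + 3 * b * t^2 + 1
example = {
    (1, 0, 0): 5,  # 5 * b_{j,1}^{-1}
    (0, 1, 2): 3,  # 3 * b_{j,1} * t_j^2
    (0, 0, 0): 1,  # constant term
}

in_class = in_Q(example, 3, 5, 2)              # each individual power is at most 2
in_degree_class = in_Q_deg(example, 3, 5, 3)   # total degree of b * t^2 is 3
not_in_degree_2 = in_Q_deg(example, 3, 5, 2)   # fails: total degree 3 exceeds 2
```

The last line shows why the two classes differ: the monomial \(3b_{j,1}t_j^2\) has each individual power at most 2 but total degree 3.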

4 Preliminary discussion on the integrand

In this section, we perform a preliminary analysis on the factors of the integrand in (3.17). Recall the matrix \(\mathfrak {I}\) defined in (1.29).

4.1 Factor \(\exp \{-M(K(\hat{X},V)+L(\hat{B},T))\}\)

Recall the parametrization of \(\hat{B}_j, \hat{X}_j, T_j\) and \(V_j\) in (3.23) and (3.25), as well as the matrices defined in (1.28). According to the discussion in [20], there are three types of saddle points of this function, namely,

- Type I : For each j, \(\displaystyle (\hat{B}_j, T_j,\hat{X}_j)=(D_{\pm }, I, D_{\pm })\quad \text {or} \quad (D_{\pm }, I, D_{\mp })\),

  \(\theta _j\in \mathbb {L}, ~~~~v_j=0\) if \(\hat{X}_j=\hat{X}_1\), and \(v_j=1\) if \(\hat{X}_j\ne \hat{X}_1\).

- Type II : For each j, \(\displaystyle (\hat{B}_j, T_j,\hat{X}_j)=(D_{\pm }, I, D_{+})\) and \(V_j\in \mathring{U}(2)\).

- Type III : For each j, \(\displaystyle (\hat{B}_j, T_j,\hat{X}_j)=(D_{\pm }, I, D_{-})\) and \(V_j\in \mathring{U}(2)\).

(Actually, since \(\theta _j\) and \(v_j\) vary on continuous sets, it would be more appropriate to use the term saddle manifolds.) We will see that the main contribution to the integral (3.17) comes from some small vicinities of the Type I saddle points. First, by the definition in (3.24), we have \(V_1=I\). If we regard \(\theta _j\)’s in the parametrization of \(V_j\)’s as fixed parameters, it is easy to see that there are in total \(2^W\) choices of Type I saddle points. Furthermore, the contributions from all the Type I saddle points are the same, since one can always do the transform \(V_j\rightarrow \mathfrak {I} V_j\) or \((\hat{X}_j, P_j)\rightarrow (\mathfrak {I}\hat{X}_j\mathfrak {I}, \mathfrak {I}P_j)\) for several j to change one saddle to another. That means, for Type I saddle points, it suffices to consider

- Type I’ : For each j, \(\displaystyle (\hat{B}_j, T_j,\hat{X}_j, V_j)=(D_{\pm }, I, D_{\pm }, I)\).

Therefore, the total contribution to the integral (3.17) from all Type I saddle points is \(2^W\) times that from the Type I’ saddle point.

Following the discussion in [20], we will show in Sect. 5 that both \(K(\hat{X},V)-K(D_{\pm }, I)\) and \(L(\hat{B},T)-L(D_{\pm }, I)\) have positive real parts, bounded by some positive quadratic forms from below, which allows us to perform the saddle point analysis. In addition, it will be seen that in a vicinity of the Type I’ saddle point, \(\exp \{-M(K(\hat{X},V)+L(\hat{B},T))\}\) is approximately Gaussian.

4.2 Factor \(\mathcal {Q}( \varOmega , \varXi , \varvec{\omega }^{[1]},\varvec{\xi }^{[1]}, P_1, Q_1, X^{[1]}, \mathbf {y}^{[1]},\mathbf {w}^{[1]})\)

The function \(\mathcal {Q}(\cdot )\) contains both the \( \varOmega , \varXi \)-variables from \(\mathcal {P}(\cdot )\), and the \(P_1, Q_1, X^{[1]},\mathbf {y}^{[1]}, \mathbf {w}^{[1]}\)-variables from \(\mathcal {F}(\cdot )\). In addition, note that in the integrand in (3.17), \(\mathcal {Q}(\cdot )\) is the only factor containing the \(\varvec{\omega }^{[1]}\) and \(\varvec{\xi }^{[1]}\)-variables. Hence, we can compute the integral

The explicit formula for \(\mathsf {Q}( \cdot )\) is complicated and irrelevant for us. From (3.30) and the definition of the Grassmann integral, it is not difficult to see that \(\mathsf {Q}( \cdot )\) is a polynomial in the \((X^{[1]})^{-1}, (\mathbf {y}^{[1]})^{-1}, \mathbf {w}^{[1]}, P_1, Q_1, \varOmega \) and \(\varXi \)-entries. In principle, for each monomial in the polynomial \(\mathsf {Q}(\cdot )\), we can combine the Grassmann variables with \(\mathcal {P}(\cdot )\) and then perform the integral over the \(\varOmega \) and \(\varXi \)-variables, while we combine the complex variables with \(\mathcal {F}(\cdot )\) and perform the integral over the \(X^{[1]}, \mathbf {y}^{[1]}, \mathbf {w}^{[1]}, P_1\) and \(Q_1\)-variables. A formal discussion on \(\mathsf {Q}(\cdot )\) will be given in Sect. 6.1. However, the terms from \(\mathsf {Q}(\cdot )\) turn out to be irrelevant in our proof. Therefore, in the arguments with \(\mathsf {Q}(\cdot )\) involved, a typical strategy that we will adopt is as follows: we first neglect \(\mathsf {Q}(\cdot )\) and perform the discussion on \(\mathcal {P}(\cdot )\) and \(\mathcal {F}(\cdot )\) separately; at the end, we make the necessary comments on how to slightly modify the discussions to take \(\mathsf {Q}(\cdot )\) into account.
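The mechanism by which a Grassmann integral of an exponential produces a polynomial in the remaining variables can be seen in a toy computation. The sketch below is not the function \(\mathcal {Q}(\cdot )\) of the paper: it uses a hypothetical exponent \(a\,\xi \omega +\xi \eta +\tau \omega \) with a commuting number a, integrates out \(\xi \) and \(\omega \), and keeps \(\eta ,\tau \) as the remaining Grassmann variables. The sign conventions (\(\int \mathrm{d}\theta \,\theta =1\), acting from the left) and all function names are assumptions of this illustration.

```python
from fractions import Fraction

# A Grassmann polynomial is a dict {sorted tuple of generator indices: coefficient}.
XI, OMEGA, ETA, TAU = 0, 1, 2, 3

def add(p, q):
    """Sum of two Grassmann polynomials."""
    result = dict(p)
    for mono, coeff in q.items():
        result[mono] = result.get(mono, 0) + coeff
    return {m: c for m, c in result.items() if c != 0}

def multiply(p, q):
    """Product, using anticommutativity and theta^2 = 0."""
    result = {}
    for mono_p, coeff_p in p.items():
        for mono_q, coeff_q in q.items():
            if set(mono_p) & set(mono_q):
                continue  # a repeated generator gives zero
            seq = mono_p + mono_q
            inversions = sum(1 for i in range(len(seq))
                             for j in range(i + 1, len(seq)) if seq[i] > seq[j])
            sign = -1 if inversions % 2 else 1
            key = tuple(sorted(seq))
            result[key] = result.get(key, 0) + sign * coeff_p * coeff_q
    return {m: c for m, c in result.items() if c != 0}

def exp(p, n_generators):
    """Exponential of an even element without constant term; the series terminates."""
    result = {(): Fraction(1)}
    term = {(): Fraction(1)}
    for k in range(1, n_generators + 1):
        term = {m: c / k for m, c in multiply(term, p).items()}
        if not term:
            break
        result = add(result, term)
    return result

def berezin(p, g):
    """Integral over generator g: move g to the front of each monomial, then drop it."""
    result = {}
    for mono, coeff in p.items():
        if g in mono:
            pos = mono.index(g)
            rest = mono[:pos] + mono[pos + 1:]
            result[rest] = result.get(rest, 0) + (-1) ** pos * coeff
    return {m: c for m, c in result.items() if c != 0}

a = Fraction(5, 2)  # hypothetical commuting coefficient
# Exponent a*xi*omega + xi*eta + tau*omega; note tau*omega = -omega*tau in sorted order.
exponent = {(XI, OMEGA): a, (XI, ETA): Fraction(1), (OMEGA, TAU): Fraction(-1)}

integrand = exp(exponent, 4)
reduced = berezin(berezin(integrand, XI), OMEGA)  # equals a + eta*tau
```

Under these conventions the result is \(a+\eta \tau \): a polynomial in the commuting coefficient and in the Grassmann variables that were not integrated out.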

4.3 Factor \(\mathcal {P}( \varOmega , \varXi , \hat{X}, \hat{B}, V, T)\)