Abstract

Stochastic models of synaptic plasticity must confront the corrosive influence of fluctuations in synaptic strength on patterns of synaptic connectivity. To solve this problem, we have proposed that synapses act as filters, integrating plasticity induction signals and expressing changes in synaptic strength only upon reaching filter threshold. Our earlier analytical study calculated the lifetimes of quasi-stable patterns of synaptic connectivity with synaptic filtering. We showed that the plasticity step size in a stochastic model of spike-timing-dependent plasticity (STDP) acts as a temperature-like parameter, exhibiting a critical value below which neuronal structure formation occurs. The filter threshold scales this temperature-like parameter downwards, cooling the dynamics and enhancing stability. A key step in this calculation was a resetting approximation, essentially reducing the dynamics to one-dimensional processes. Here, we revisit our earlier study to examine this resetting approximation, with the aim of understanding in detail why it works so well by comparing it, and a simpler approximation, to the system’s full dynamics consisting of various embedded two-dimensional processes without resetting. Comparing the full system to the simpler approximation, to our original resetting approximation, and to a one-afferent system, we show that their equilibrium distributions of synaptic strengths and critical plasticity step sizes are all qualitatively similar, and increasingly quantitatively similar as the filter threshold increases. This increasing similarity is due to the decorrelation in changes in synaptic strength between different afferents caused by our STDP model, and the amplification of this decorrelation with larger synaptic filters.

1 Introduction

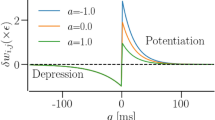

Spike-timing-dependent plasticity (STDP; Markram et al. 1997; Bi and Poo 1998; Zhang et al. 1998; Froemke and Dan 2002; Roberts and Bell 2002; Harvey and Svoboda 2007; Caporale and Dan 2008)—the biphasic, graded change in synaptic efficacy depending on the relative timing of pre- and postsynaptic spiking—is typically understood to imply that single synapses can express finely graded changes in synaptic strength, with this assumption implicit in many models (Song et al. 2000; van Rossum et al. 2000; Castellani et al. 2001; Senn et al. 2001; Sjöström and Nelson 2002; Burkitt et al. 2004; Bi and Rubin 2005; Rubin et al. 2005; Bender et al. 2006). Yet some experimental evidence suggests that synapses may occupy only discrete states of synaptic strength (Montgomery and Madison 2002, 2004; O’Connor et al. 2005a, b; Bartol et al. 2015) or may change their strengths only in discrete, all-or-none jumps (Petersen et al. 1998; Yasuda et al. 2003; Bagal et al. 2005; Sobczyk and Svoboda 2007). One way to resolve this apparent contradiction is to propose that a single synapse may express only fixed-amplitude steps in synaptic strength, with the probability but not amplitude of change depending on spike timing (Appleby and Elliott 2005). The classic, graded biphasic STDP curve (Bi and Poo 1998) then emerges as an average change over multiple synapses for a single spike-pair presentation or at a single synapse over multiple spike-pair presentations (Appleby and Elliott 2005).

Any probabilistic or stochastic model of synaptic plasticity faces the challenge posed by destabilising fluctuations in synaptic strength. When considering, for example, neuronal development (Purves and Lichtman 1985), fluctuations can lead to a change in the patterns of synaptic connectivity acquired through activity-dependent competitive dynamics in the developing primary visual cortex (V1). In particular, the segregated afferent input to V1 neurons, in which one eye or the other dominates in the control of V1 neurons (Hubel and Wiesel 1962), can be destabilised by fluctuations, so that the two afferents repeatedly switch control of a target cell over some characteristic, average time scale (Appleby and Elliott 2006; Elliott 2008). Reducing the size of the all-or-none steps in synaptic strength can control these fluctuations, but if the plasticity step size must be very small to control fluctuations, then models become biologically implausible and, furthermore, synaptic strengths become for all practical purposes graded rather than discrete (Elliott 2008). We have instead proposed that synapses act as low-pass filters, integrating their plasticity induction signals before expressing a step change in synaptic strength (Elliott 2008; Elliott and Lagogiannis 2009). These “integrate-and-express” models powerfully control fluctuations without having to resort to implausible parameter choices or assumptions.

Previously we extensively analysed three such models of synaptic filtering operating in concert with our model of STDP (Elliott 2011b). We found that the plasticity step size plays the role of a temperature-like parameter, with smaller step sizes corresponding to lower temperatures. Structure formation (segregated states) emerges only below a critical plasticity step size, akin to a Curie point or critical temperature. Synaptic filtering “cools” the dynamics, with the filter threshold scaling back the actual plasticity step size into an effective step size, where this scaling is linear over a range of parameters. This analysis was performed using the master equation for the strengths of two afferents (representing inputs via the lateral geniculate nucleus from the two eyes) and its Fokker–Planck equation limit. In order to write down the master equation, it was necessary to make several approximations, but the key one for the purposes of analytical tractability was to assume that the occurrence of a plasticity step in one afferent resets the filter states in all afferents. This resetting or renewal approximation essentially reduces a random walk in multiple dimensions (afferents) to a set of one-dimensional random walks, one for each afferent. The afferents’ dynamics are then coupled only through the common postsynaptic firing rate of their target cell. We argued although did not demonstrate that this approximation works because our stochastic model of STDP, together with synaptic filtering, decorrelates synaptic strength changes across multiple afferents, with simultaneous changes becoming increasingly unlikely with larger filter thresholds.

Using this resetting or renewal approximation is essential in obtaining analytical results that are key to understanding the stability and lifetimes of patterns of neuronal connectivity acquired during development, and thus to revealing the role of the plasticity step size as a temperature-like parameter. Understanding in detail why this approximation works so well is therefore of central importance, not just to our own models but to other stochastic models in which this approximation could be employed. Our purpose here is thus to examine this approximation in detail by comparing and contrasting results obtained with it to those obtained from the full synaptic dynamics without it, and to those obtained with other, simpler approximations. Doing so shows when and why the approximation works. To achieve this, we consider the full dynamics of two afferents in their joint STDP and filter state space, obtaining the equilibrium joint probability distribution for their strengths. This distribution determines whether or not segregated states of afferent connectivity exist as the outcome of the process of neuronal development. We compare these full results to those from our earlier study and to the results from a model that may be regarded as intermediate between the full and earlier models. Regardless of which of these three models we use, all results are qualitatively and often quantitatively very similar, especially for larger filters, confirming the validity of the central approximation used in our earlier model and verifying our explanation of it in terms of decorrelating changes in synaptic strength. We also compare some of our results to simulation, particularly in relation to the lifetimes of segregated states of afferent connectivity. Most of our results must be obtained by the numerical solution of large, two-dimensional linear systems, so we restrict to a study of just the simplest possible filter model. 
However, the validation of our earlier key approximation by these numerical methods permits the full analytical power of that approximation to be deployed.

The remainder of our paper is organised as follows: In the next section, we introduce in some detail the methods and approach that we take for a single afferent (or synapse). Section 3 then builds on these methods to consider two afferents, extending the machinery of Sect. 2 to the three models that we examine. Then in Sect. 4, we present our numerical and simulation results for these models, comparing and contrasting them. Finally, in Sect. 5 we briefly discuss our results.

2 Recapitulation of synaptic dynamics of one afferent

Before considering two or more afferents in the next section, we first consider our previous analysis (Elliott 2011b) of the dynamics of one afferent synapsing on a target cell. We do this in some detail to provide orientation; to set up the formalism and notation; to describe our switch-based model of STDP for synaptic plasticity induction and our simplest filter-based model for synaptic plasticity expression; to clarify conceptual issues that previously were implicit or unclear; and to extend some of our previous results from specific to general cases. Mathematically and notationally speaking, the two-afferent case considered in Sect. 3 is much more elaborate and involved than the one-afferent case considered in this section. We therefore also discuss the one-afferent case at some length so that the major issues and approximations involved are behind us when we turn to the mathematically harder but conceptually identical two-afferent case.

2.1 Plasticity induction by tristate STDP switch

Fig. 1 Synaptic state transitions induced by spike-timing-dependent plasticity in the tristate switch model. Circles indicate STDP-related synaptic states and either solid or dashed lines transitions between them. Solid lines labelled “pre” or “post” represent transitions caused by pre- or postsynaptic spikes, respectively, while dashed lines labelled “\(\gamma _+\)” or “\(\gamma _-\)” represent stochastic decay processes. Lines additionally labelled “\(\Uparrow \)” or “\(\Downarrow \)” indicate transitions in which synaptic plasticity (potentiation or depression, respectively) is induced. In panel A, the return of the tristate switch to the OFF state after each plasticity-inducing transition is explicitly represented. In panel B, we instead replace these plasticity-inducing transitions with transitions into fictitious absorbing states that enable the calculation of first passage time densities for plasticity induction processes.

Our tristate switch model of STDP (Appleby and Elliott 2005) postulates that any given synapse resides in one of three STDP-related synaptic states, as illustrated in Fig. 1A. We refer to these states as the “UP” state, the “OFF” state, and the “DOWN” state (see Footnote 1). Transitions between these three states are driven by pre- or postsynaptic spikes, as well as by two stochastic decay processes. When in the OFF state, the occurrence of a presynaptic spike will drive the synapse to the UP state, while a postsynaptic spike will cause a transition to the DOWN state. From the UP state, a postsynaptic spike will drive the synapse back to the OFF state, inducing a fixed-amplitude increment of \(T_+\) in synaptic strength. The sequence of transitions OFF \(\rightarrow \) UP \(\rightarrow \) OFF driven by a presynaptic followed by a postsynaptic spike pair accounts for the potentiating side of the usual biphasic STDP curve (Bi and Poo 1998), although not the dependence of the amplitude on the spike-time difference. Similarly, when in the DOWN state, a presynaptic spike triggers a transition back to the OFF state, inducing a fixed-amplitude decrement of \(T_-\) in synaptic strength. The sequence of transitions OFF \(\rightarrow \) DOWN \(\rightarrow \) OFF driven by a postsynaptic followed by a presynaptic spike pair accounts for the depressing side of the usual STDP curve, although again not the dependence of the amplitude on the spike-time difference.

To account for the spike-time dependence in both cases, we suppose that a synapse in the UP state will stochastically decay back to the OFF state, and similarly a synapse in the DOWN state will stochastically decay back to the OFF state. Although a single spike pair (either presynaptic followed by postsynaptic or postsynaptic followed by presynaptic) will always induce a fixed-amplitude jump (or no change) in synaptic strength, multiple such spike pairs at the same synapse, or the same spike pair across different synapses between the same pre- and postsynaptic neurons, will then induce graded changes in synaptic strength (at the same synapse or across all synapses between afferent and target). This is because the stochastic decay processes are assumed to be independent between different spike-pair instances at the same synapse or for the same spike-pair instance across different synapses. For example, a 10-ms time difference between a presynaptic spike followed by a postsynaptic spike may on one occasion induce a potentiation step because the postsynaptic spike occurs before the decay process, but on another may not because the decay process occurs first. Although the two stochastic decay processes could be characterised by any probability density functions (PDFs), for simplicity we take them to be exponential distributions with rate \(\lambda _+\) for the UP \(\rightarrow \) OFF decay process and \(\lambda _-\) for the DOWN \(\rightarrow \) OFF decay process. These decay rates \(\lambda _\pm \) translate into time scales \(\tau _\pm = 1 / \lambda _\pm \) characterising the widths of the two sides of the biphasic STDP window.
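
The emergence of a graded window from all-or-none steps can be illustrated with a minimal sketch. It assumes an isolated spike pair (no other spikes intervene), exponential decay with the time scales \(\tau _+ = 12\) ms and \(\tau _- = 20\) ms used as standard values in this work, and a unit step size. The function names, the treatment of exactly simultaneous spikes as inducing nothing, and the random seed are illustrative assumptions and not part of the model definition.

```python
import math
import random

TAU_PLUS_MS = 12.0   # UP -> OFF decay time scale (1 / lambda_+)
TAU_MINUS_MS = 20.0  # DOWN -> OFF decay time scale (1 / lambda_-)


def induction_probability(dt_ms):
    """Probability that an isolated spike pair induces a plasticity step.

    dt_ms = t_post - t_pre. The step is induced only if the second spike
    arrives before the exponential decay back to OFF has occurred.
    """
    if dt_ms > 0:    # pre then post: the switch must still be UP
        return math.exp(-dt_ms / TAU_PLUS_MS)
    if dt_ms < 0:    # post then pre: the switch must still be DOWN
        return math.exp(dt_ms / TAU_MINUS_MS)
    return 0.0       # assumption: simultaneous spikes induce nothing


def sample_step(dt_ms, rng, step=1.0):
    """One all-or-none outcome: +step, -step or 0, never a graded value."""
    if rng.random() < induction_probability(dt_ms):
        return step if dt_ms > 0 else -step
    return 0.0


rng = random.Random(0)
n_pairs = 20000
# Average over many presentations of the same 10-ms pre-before-post pair.
mean_change = sum(sample_step(10.0, rng) for _ in range(n_pairs)) / n_pairs
print(mean_change, math.exp(-10.0 / TAU_PLUS_MS))
```

Each sampled outcome is a fixed-amplitude jump or no change at all, while the average over presentations approaches the graded value \(\exp (- \Delta t / \tau _+)\) for this spike-time difference.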

We have not indicated how a synapse in the UP state responds to a presynaptic spike, nor how a synapse in the DOWN state responds to a postsynaptic spike. Variants of the basic tristate switch model can be constructed depending on whether or not a spike of the appropriate type resets the stochastic decay process of the corresponding state (Appleby and Elliott 2006). Here we consider the simplest and most natural model, which assumes that a synapse in the UP state does not respond at all to a presynaptic spike, and similarly for the DOWN state and a postsynaptic spike. However, when all inter-event processes are exponentially distributed, the memorylessness of the exponential process ensures that all these variant models collapse down to one model.

It is therefore convenient to assume that pre- and postsynaptic spike processes are governed by Poisson processes of rates that we write for brevity as \(\lambda _\pi \) and \(\lambda _p\), respectively. Inter-spike intervals for the same spike type are then exponentially distributed. With the assumption of Poisson spiking, we may show that the parameter \(\gamma = (T_+ \lambda _-)/(T_- \lambda _+) = (T_+ \tau _+) / (T_- \tau _-)\) must satisfy the condition \(\gamma < 1\) to prevent potentiation dominating depression (Appleby and Elliott 2005). Furthermore, we may also show that activity-dependent competitive dynamics naturally arise between different afferents synapsing on the same target cell for particular ranges of \(\gamma \) (Appleby and Elliott 2006; Elliott 2008). Moreover, these competitive dynamics depend on higher-order spike interactions (e.g. spike triplets and quadruplets) that our STDP model automatically accommodates without additional postulates (Appleby and Elliott 2005, 2007). We will set \(T_+ = T_-\) so that synaptic strengths occupy points on a lattice, and previously we used the choice \(\gamma = 3/5\) with \(T_\pm = T\) to ensure the presence of competitive synaptic dynamics (Elliott 2011b). We take \(\lambda _- = 50\) Hz (or \(\tau _- = 20\) ms) as a standard choice from this earlier work, which therefore implies that \(\lambda _+ \approx 83\) Hz (or \(\tau _+ = 12\) ms).
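
The quoted value of \(\lambda _+\) follows from the definition of \(\gamma \) by simple arithmetic, shown here as a worked step using only the parameter values stated above:

```latex
\gamma = \frac{T_+ \lambda_-}{T_- \lambda_+} = \frac{3}{5}, \quad T_+ = T_-
\;\Longrightarrow\;
\lambda_+ = \frac{\lambda_-}{\gamma} = \frac{5}{3} \times 50~\text{Hz} \approx 83.3~\text{Hz},
\qquad
\tau_+ = \gamma \, \tau_- = \frac{3}{5} \times 20~\text{ms} = 12~\text{ms}.
```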

We have been careful to label the transitions in Fig. 1A according to the processes that cause them rather than according to the rates at which they occur. This is because a plasticity induction signal that is generated by the spike-driven UP \(\rightarrow \) OFF or DOWN \(\rightarrow \) OFF transition will trigger a change in the synapse’s state, whether that be its strength or some other, internal synaptic state. Even for a fixed presynaptic spike firing rate \(\lambda _\pi \), if an induction signal causes an immediate change in synaptic strength, then the postsynaptic spike firing rate \(\lambda _p\) will change. Hence, the rates of the transitions in Fig. 1A may change because of the induction processes triggered by these very transitions, even when \(\lambda _\pi \) is fixed. The induction processes are formally first passage time (FPT) processes in which, starting from any given STDP switch state at time \(t = 0\) s, a postsynaptic-spike-driven UP \(\rightarrow \) OFF transition occurs for the first time, or a presynaptic-spike-driven DOWN \(\rightarrow \) OFF transition occurs for the first time, with in each case an induction event of the other type having not already occurred. Prior to an induction signal being generated for the first time, the rates of the processes in Fig. 1A cannot change unless \(\lambda _\pi \) changes. Once an induction signal has been generated, the rate \(\lambda _p\) could change even if \(\lambda _\pi \) does not. We must therefore focus on the FPT processes by which the STDP switch mechanism generates plasticity induction signals, as these signals drive further synaptic changes.

To compute the FPT densities for the induction signals generated by the tristate STDP switch, we introduce the fictitious absorbing states \(\hbox {UP}^*\) and \(\hbox {DOWN}^*\) and replace the spike-driven transitions UP \(\rightarrow \) OFF and DOWN \(\rightarrow \) OFF in Fig. 1A with the transitions UP \(\rightarrow \) \(\hbox {UP}^*\) and DOWN \(\rightarrow \) \(\hbox {DOWN}^*\), respectively, shown in Fig. 1B. In Fig. 1B, any transitions between the standard DOWN, OFF and UP switch states are then by construction transitions that have occurred without an induction signal having been generated. However, probability will accumulate in the fictitious absorbing states over time, with the rate of change of the probabilities of these two states corresponding precisely to the FPT densities for the occurrence of plasticity induction signals. Ordering the switch states including the fictitious absorbing states as \(\{ \textrm{DOWN}^*, \textrm{DOWN}, \textrm{OFF}, \textrm{UP}, \textrm{UP}^*\}\), we first define the \(3 \times 3\) matrix

\[ \mathbb {S}_0 = \begin{pmatrix} -(\lambda _\pi + \lambda _-) & \lambda _p & 0 \\ \lambda _- & -(\lambda _\pi + \lambda _p) & \lambda _+ \\ 0 & \lambda _\pi & -(\lambda _p + \lambda _+) \end{pmatrix} \tag{1} \]

as the generating matrix for transitions purely within the \(\{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\) states excluding transitions to the fictitious states. Defining also the two vectors

\[ \underline{v}^- = \left( \lambda _\pi , 0, 0 \right) ^{\textrm{T}} \quad \text{and} \quad \underline{v}^+ = \left( 0, 0, \lambda _p \right) ^{\textrm{T}}, \tag{2} \]

the \(5 \times 5\) generating matrix for all transitions including those to the fictitious states is then given by

\[ \mathbb {S}_*= \begin{pmatrix} 0 & (\underline{v}^-)^{\textrm{T}} & 0 \\ \underline{0} & \mathbb {S}_0 & \underline{0} \\ 0 & (\underline{v}^+)^{\textrm{T}} & 0 \end{pmatrix}. \tag{3} \]

We have written the matrix in schematic block form, so the central three elements of the first and last rows contain the components of \(\underline{v}^-\) and \(\underline{v}^+\), respectively; the central \(3 \times 3\) submatrix is just \(\mathbb {S}_0\); all elements of the first and last columns are zero; the vector \(\underline{0}\) denotes a vector of zeros (in this case with three components); and a superscript \(\textrm{T}\) denotes the transpose.
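
As a concrete check of this block structure, the following sketch assembles the generating matrices from the transitions described for Fig. 1B and confirms that every column of the \(5 \times 5\) matrix sums to zero, as probability conservation requires. It assumes the convention that columns index the source state and rows the destination state. The firing rates of 10 Hz and the function name `switch_generators` are hypothetical choices made only for illustration.

```python
def switch_generators(lam_pre, lam_post, lam_plus, lam_minus):
    """Return (S0, v_minus, v_plus, S_star) as nested lists.

    State order is DOWN, OFF, UP for S0 and DOWN*, DOWN, OFF, UP, UP*
    for S_star. Columns are source states, rows are destination states.
    """
    S0 = [
        # from DOWN               from OFF                from UP
        [-(lam_pre + lam_minus),  lam_post,               0.0],        # to DOWN
        [lam_minus,               -(lam_pre + lam_post),  lam_plus],   # to OFF
        [0.0,                     lam_pre,                -(lam_post + lam_plus)],  # to UP
    ]
    v_minus = [lam_pre, 0.0, 0.0]   # DOWN -> DOWN* on a presynaptic spike
    v_plus = [0.0, 0.0, lam_post]   # UP -> UP* on a postsynaptic spike

    # First and last columns are zero because DOWN* and UP* are absorbing.
    S_star = [[0.0] + v_minus + [0.0]]
    S_star += [[0.0] + row + [0.0] for row in S0]
    S_star += [[0.0] + v_plus + [0.0]]
    return S0, v_minus, v_plus, S_star


# Hypothetical firing rates (Hz); decay rates follow the standard choices.
S0, v_minus, v_plus, S_star = switch_generators(
    lam_pre=10.0, lam_post=10.0, lam_plus=250.0 / 3.0, lam_minus=50.0
)
column_sums = [sum(row[j] for row in S_star) for j in range(5)]
print(column_sums)
```

The column sums of the central block alone are negative for the DOWN and UP columns, which reflects the leakage of probability into the fictitious absorbing states.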

The transition matrix \(\mathbb {P}(t)\) that describes the transition probabilities between the five states in Fig. 1B in a time t is then the solution of the standard forward Chapman–Kolmogorov equation \(\textrm{d} \mathbb {P}(t)/\textrm{d}t = \mathbb {S}_*\mathbb {P}(t)\), subject to the initial condition \(\mathbb {P} (0) = \mathbb {I}\), with \(\mathbb {I}\) being the identity matrix. If \(\lambda _\pi \) and thus \(\lambda _p\) are constant, then we obtain the standard matrix exponential solution \(\mathbb {P}(t) = \exp \left( \mathbb {S}_*\, t \right) \). It is easy to see that the matrix exponential in this case takes the form

\[ \exp \left( \mathbb {S}_*\, t \right) = \begin{pmatrix} 1 & (\underline{v}^-)^{\textrm{T}} \int _0^t \textrm{d}\tau \, \exp \left( \mathbb {S}_0 \, \tau \right) & 0 \\ \underline{0} & \exp \left( \mathbb {S}_0 \, t \right) & \underline{0} \\ 0 & (\underline{v}^+)^{\textrm{T}} \int _0^t \textrm{d}\tau \, \exp \left( \mathbb {S}_0 \, \tau \right) & 1 \end{pmatrix}, \tag{4} \]

where the integral terms arise by simplifying the sum \(\sum _{n=1}^\infty \mathbb {S}_0^{n-1} \, (t^n/n!)\). If \(\lambda _\pi \) and thus \(\lambda _p\) are not constant, then the matrix exponentials in Eq. (4) are replaced throughout by time-ordered matrix exponentials. Indexing the standard STDP switch states with letters such as X and Y, where \(X, Y \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\), the FPT densities \(G_Y^\pm (t)\) for potentiating and depressing induction signals at time t starting from switch state Y are given by:

\[ G_Y^-(t) = \frac{\textrm{d}}{\textrm{d}t} \left[ \, \mathbb {P}(t) \right] _{\textrm{DOWN}^*, Y} \quad \text{and} \quad G_Y^+(t) = \frac{\textrm{d}}{\textrm{d}t} \left[ \, \mathbb {P}(t) \right] _{\textrm{UP}^*, Y}, \tag{5} \]

where we index matrix elements according to the associated states rather than numerically. Hence, writing the vectors \(\underline{G}^\pm (t) = \left( G_\textrm{DOWN}^\pm (t), G_\textrm{OFF}^\pm (t), G_\textrm{UP}^\pm (t) \right) ^{\textrm{T}}\), we have

\[ \underline{G}^\pm (t) = \exp \left( \mathbb {S}_0^{\textrm{T}} \, t \right) \underline{v}^\pm . \tag{6} \]

We must have that \(G_Y^\pm (0) \equiv 0\) because an induction signal cannot be generated if no time has elapsed, so Eq. (6) is valid for \(t > 0\) s and not at \(t = 0\) s. We may confirm that the FPT densities satisfy the differential equations

\[ \frac{\textrm{d} \underline{G}^\pm (t)}{\textrm{d}t} = \mathbb {S}_0^{\textrm{T}} \, \underline{G}^\pm (t) + \underline{v}^\pm \, \delta (t), \tag{7} \]

subject to these initial conditions, \(\underline{G}^\pm (0) = \underline{0}\). The Dirac delta function \(\delta (t)\) gives the discontinuous behaviours of the \(G_Y^\pm (t)\) at \(t = 0\) s. The inhomogeneous terms in Eq. (7) correspond to absorbing boundary conditions that act via \(\delta (t)\) at the instant that a transition to the relevant fictitious absorbing state occurs. The appearance of the transposed matrix \(\mathbb {S}_0^{\textrm{T}}\) rather than \(\mathbb {S}_0\) in Eq. (7) reflects the fact that FPT processes must be computed by keeping the final state (here \(\hbox {DOWN}^*\) or \(\hbox {UP}^*\) in Eq. (5)) fixed and allowing a variable initial state Y, rather than vice versa. Hence, they arise from the backward rather than the forward Chapman–Kolmogorov equation, with backward processes indicated by post- rather than pre-multiplication by \(\mathbb {S}_0\). As \(\underline{G}^\pm (t)\) are vectors and not matrices, post-multiplication by \(\mathbb {S}_0\) must become pre-multiplication by its transpose, \(\mathbb {S}_0^{\textrm{T}}\).

Equation (7) may alternatively be written down by other, standard methods (see, e.g. van Kampen 1992). However, by using the fictitious absorbing states and the matrix \(\mathbb {S}_*\), we can just read off from the matrix \(\mathbb {P}(t)\) not only the FPT densities \(G_Y^\pm (t)\) but also the transition probabilities between the standard switch states \(\{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\) without a transition to the fictitious absorbing states \(\{ \textrm{DOWN}^*, \textrm{UP}^*\}\) having occurred. We represent these by the elements of a matrix \(\mathbb {F}(t)\), where \(\mathbb {F}(t)\) is just the central \(3 \times 3\) submatrix of \(\mathbb {P}(t)\), so

\[ \mathbb {F}(t) = \exp \left( \mathbb {S}_0 \, t \right) \tag{8} \]

or its time-ordered equivalent. We write its elements as \(F_Y^X(t) = \left[ \, \mathbb {F}(t) \right] _{X,Y}\), for the transition probability from switch state Y to switch state X in time t, without a transition to \(\hbox {DOWN}^*\) or \(\hbox {UP}^*\) having occurred. Asymptotically these transition probabilities must vanish, so \(F_Y^X(t) \rightarrow 0\) as \(t \rightarrow \infty \), because an induction process is inevitable given enough time: all probability finally accumulates in the top and bottom rows of \(\mathbb {P}(t)\). The matrix elements \(F_Y^X(t)\) and the FPT densities \(G_Y^\pm (t)\) are related by the equality:

\[ \sum _X F_Y^X(t) = 1 - \int _0^t \textrm{d}\tau \left[ G_Y^+(\tau ) + G_Y^-(\tau ) \right] , \tag{9a} \]

with the sum running over \(X \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\), because the left-hand side is the probability of having not transitioned from the three standard switch states to the fictitious absorbing states, while the right-hand side is the complement of the probability of having already been absorbed by them. This probability is just the waiting time distribution for either of these FPT processes to occur. Alternatively, Eq. (9a) is just the statement that each column sum of \(\mathbb {P}(t)\) must be unity. The further equalities

follow directly from Eqs. (4), (5) and (8).

We will require the rates of the FPT processes defined by the pair of mutually exclusive density functions \(G_Y^\pm (t)\) for any given Y, considered purely in mathematical terms rather than as processes that arise from some underlying mechanism. The rates of these processes are determined from the probability distribution for the number of occurrences of these events in some time t, where immediately upon the occurrence of one of them, we start waiting for the next. Let \(N(\nu _+, \nu _-; t)\) be the probability of \(\nu _\pm \) occurrences of the events associated with the densities \(G_Y^\pm (t)\), respectively. Then, we have

for \(\nu _\pm \ge 0\) with boundary conditions \(N(-1, \nu _-; t) = 0\) and \(N(\nu _+, -1; t) = 0\), where \(\delta _{a,b}\) is the Kronecker delta symbol. The inhomogeneous term on the right-hand side involves the waiting time distribution for either event. If neither has occurred in time t, then \(\nu _\pm \) must both be zero with this waiting time probability. The homogeneous terms essentially count the events as they first occur at some time \(\tau \), with further events having to occur in the remaining time \(t - \tau \) to give the required number of events \(\nu _\pm \) at time t. By taking the Laplace transform, with \(\widehat{f}(s)\) denoting the transform of f(t), and defining the probability generating function (PGF)

we obtain

The rates \(r_Y^\pm (t)\) of the induction signals are just the derivatives of the expectation values of \(\nu _\pm \). It is more convenient to consider the derivatives of these rates, so \(\rho _Y^\pm (t) = \textrm{d} r_Y^\pm (t)/\textrm{d}t\). Since \(\widehat{\rho }_Y^\pm (s) = s \, \widehat{r}_Y^{\, \pm } (s)\), where we have used the fact that \(r_Y^\pm (0) = 0\) Hz (cf. \(G_Y^\pm (0) = 0\)), we get

with inverse relations

For any escape or FPT processes, we always obtain this relationship between the \(\widehat{\rho }\)’s and the \(\widehat{G}\)’s, but with the denominators extended to sums over all exit processes into all possible absorbing states. The rate derivatives \(\rho _Y^\pm (t)\) are useful because we can obtain the asymptotic behaviour of the rates \(r_Y^\pm (t)\) from the Laplace transforms of \(\rho _Y^\pm (t)\). In particular,

using \(r_Y^\pm (0) \equiv 0\) Hz. As \(\widehat{\rho }_Y^\pm (s) = s \, \widehat{r}_Y^{\, \pm } (s)\), this result is an example of a standard Tauberian theorem, namely that \(\lim _{s \rightarrow 0} s \, \widehat{f} (s) = \lim _{t \rightarrow \infty } f(t)\) (see, e.g. Feller 1967, vol. II).
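
The small-\(s\) limit behind this result can be sketched as follows. The sketch assumes the standard renewal-theory form of the relation between the \(\widehat{\rho }\)'s and the \(\widehat{G}\)'s for two mutually exclusive exit processes, and introduces for illustration the splitting probabilities \(\pi _Y^\pm = \widehat{G}_Y^\pm (0)\) and the mean waiting time \(\langle t \rangle _Y\) for either event. These two symbols are our own shorthand here rather than established notation.

```latex
\widehat{\rho}_Y^{\pm}(s)
  = \frac{s \, \widehat{G}_Y^{\pm}(s)}{1 - \widehat{G}_Y^{+}(s) - \widehat{G}_Y^{-}(s)},
\qquad
1 - \widehat{G}_Y^{+}(s) - \widehat{G}_Y^{-}(s) = s \, \langle t \rangle_Y + O(s^2),
\\[1ex]
r_Y^{\pm}(\infty) = \lim_{s \to 0} \widehat{\rho}_Y^{\pm}(s)
  = \frac{\pi_Y^{\pm}}{\langle t \rangle_Y},
\qquad
\pi_Y^{+} + \pi_Y^{-} = 1 .
```

The expansion of the denominator uses only the fact that one of the two events is certain to occur eventually, so the factor of \(s\) in the numerator cancels and the limit is finite.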

Before considering how a synapse might respond to the plasticity induction signals generated by the STDP switch, it is instructive to reconsider the dynamics in Fig. 1A. Although we have argued that the spike-driven UP \(\rightarrow \) OFF and DOWN \(\rightarrow \) OFF transitions can lead to changes in the rates of these very transitions because of synaptic plasticity, we can instead consistently define the system in Fig. 1A by considering the scenario in which a synapse just ignores plasticity induction signals. The rates of the transitions in Fig. 1A may then be time-dependent only in virtue of any time-dependence of the presynaptic spike firing rate and not because of any changes that the transitions in Fig. 1A may themselves trigger. The generating matrix for the transitions in Fig. 1A is then given by:

\[ \mathbb {S} = \begin{pmatrix} -(\lambda _\pi + \lambda _-) & \lambda _p & 0 \\ \lambda _\pi + \lambda _- & -(\lambda _\pi + \lambda _p) & \lambda _p + \lambda _+ \\ 0 & \lambda _\pi & -(\lambda _p + \lambda _+) \end{pmatrix}, \]

which is just the matrix \(\mathbb {S}_0\) with the spike-driven UP \(\rightarrow \) OFF and DOWN \(\rightarrow \) OFF transitions included. If the presynaptic spike firing rate \(\lambda _\pi \) and thus the postsynaptic spike firing rate \(\lambda _p\) do not depend on time, then we may examine the equilibrium distribution of the STDP switch states. Denoting this distribution by the vector \(\underline{\sigma }\) with components \(\sigma _X\), \(X \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\), \(\underline{\sigma }\) is the normalised null right eigenvector of \(\mathbb {S}\), so that \(\mathbb {S} \, \underline{\sigma } = \underline{0}\), where \(\underline{\sigma } \cdot \underline{1} = 1\), with \(\underline{1}\) being a vector of unit components and the dot denotes the dot product. In equilibrium, the rate \(r^+\) of potentiating induction signals is just the probability that the STDP switch is in the UP state multiplied by the rate \(\lambda _p\) of the postsynaptic-spike-driven UP \(\rightarrow \) OFF transition. Thus, \(r^+ = \lambda _p \, \sigma _{\, \textrm{UP}}\). Similarly, \(r^- = \lambda _\pi \, \sigma _{\, \textrm{DOWN}}\). These equilibrium rates \(r^\pm \) in fact agree precisely with an explicit calculation of the asymptotic rates \(r_{\textrm{OFF}}^\pm (\infty ) = \widehat{\rho }^\pm _{\textrm{OFF}} (0)\) via the FPT densities \(G_{\textrm{OFF}}^\pm (t)\) discussed above, when the spike firing rates \(\lambda _\pi \) and \(\lambda _p\) are of course taken to be fixed. Notice, however, that \(r_{\textrm{OFF}}^\pm (\infty )\) are by construction the asymptotic rates of FPT induction signals specifically starting from an initial OFF state. The dependence of the rates \(r_Y^\pm (t)\) on their initial state Y is in general never lost, not even in the asymptotic limit when it exists (e.g. 
\(r_{\textrm{DOWN}}^+(\infty ) \ne r_{\textrm{OFF}}^+(\infty )\)), whereas essentially by definition the equilibrium vector \(\underline{\sigma }\) retains no memory of the initial distribution of the switch states. The use of an equilibrium distribution to calculate particular asymptotic FPT induction rates in this way provides a powerful and simple tool that will be extremely useful later.
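
The agreement between the equilibrium rates and the asymptotic FPT rates from the OFF state can be checked numerically with a short sketch. It assumes fixed, hypothetical firing rates of 10 Hz and evaluates each asymptotic rate as a splitting probability divided by the mean waiting time, which is the standard renewal-theory limit for repeated events drawn from the same pair of densities. The helper `solve` and all variable names are illustrative.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(A[i]) + [b[i]] for i in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - tail) / M[i][i]
    return x


lam_pre, lam_post = 10.0, 10.0            # hypothetical firing rates (Hz)
lam_minus, lam_plus = 50.0, 250.0 / 3.0   # decay rates (Hz)

# Generator without induction transitions; state order DOWN, OFF, UP.
S0 = [
    [-(lam_pre + lam_minus), lam_post, 0.0],
    [lam_minus, -(lam_pre + lam_post), lam_plus],
    [0.0, lam_pre, -(lam_post + lam_plus)],
]
v_minus = [lam_pre, 0.0, 0.0]
v_plus = [0.0, 0.0, lam_post]

# Time integrals of exp(S0^T t) reduce to solves with -S0^T.
neg_S0T = [[-S0[j][i] for j in range(3)] for i in range(3)]
pi_plus = solve(neg_S0T, v_plus)             # P(potentiating signal first | Y)
pi_minus = solve(neg_S0T, v_minus)           # P(depressing signal first | Y)
mean_fpt = solve(neg_S0T, [1.0, 1.0, 1.0])   # mean time to either signal

# Equilibrium of the switch that ignores its own induction signals.
S = [list(row) for row in S0]
S[1][0] += lam_pre    # spike-driven DOWN -> OFF
S[1][2] += lam_post   # spike-driven UP -> OFF
sigma = solve([S[0], S[1], [1.0, 1.0, 1.0]], [0.0, 0.0, 1.0])

r_plus_eq, r_minus_eq = lam_post * sigma[2], lam_pre * sigma[0]
r_plus_off = pi_plus[1] / mean_fpt[1]     # asymptotic rate starting from OFF
r_minus_off = pi_minus[1] / mean_fpt[1]
r_plus_down = pi_plus[0] / mean_fpt[0]    # asymptotic rate starting from DOWN
print(r_plus_eq, r_plus_off, r_plus_down)
```

With these parameter values the OFF-state rates reproduce the equilibrium rates, while the rate obtained by starting every waiting period in the DOWN state differs, consistent with the persistent dependence on the initial state noted above.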

2.2 Synaptic filtering of plasticity induction signals

Fig. 2 Changes in synaptic filter state triggered by the synaptic plasticity induction signals generated by the STDP tristate switch mechanism. Circles labelled with a particular value of I indicate the five possible filter states for the specific choice of \(\varTheta = 3\); the two circles labelled \(\pm \varTheta ^*\) in panel B represent absorbing states enabling FPT calculations. The transitions are labelled \(\mathscr {I}^+\) and \(\mathscr {I}^-\) to indicate potentiating and depressing induction signals, respectively. The additional labels \(\Uparrow \) and \(\Downarrow \) indicate transitions in which the expression of synaptic plasticity is triggered.

The plasticity induction signals generated by the STDP switch may lead to the immediate expression of a change in synaptic strength of the corresponding synapse. However, because of the stochastic nature of our STDP model, fluctuations can destabilise developmentally-relevant patterns of synaptic connectivity (Appleby and Elliott 2006; Elliott 2008). These fluctuations can be suppressed by taking the plasticity step size T to be very, in fact implausibly small, which slows down the overall rate of synaptic plasticity and therefore allows synaptic self-averaging to occur (Appleby and Elliott 2006; Elliott 2008). Alternatively, we have proposed that synapses integrate synaptic plasticity induction signals before expressing synaptic plasticity. These integrate-and-express models of synaptic plasticity allow synapses to act as low-pass filters, suppressing high-frequency noise in plasticity induction signals while passing any low-frequency trends in them (Elliott 2008; Elliott and Lagogiannis 2009). Many such models are possible, but here we consider only the simplest. Any given synapse is postulated to instantiate in its molecular machinery the state of a discrete synaptic filter. We have discussed elsewhere how a synapse might do this (Elliott 2011a). A potentiating induction signal leads to an increment in filter state, while a depressing induction signal leads to a decrement. When the filter reaches an upper threshold, an increase in synaptic strength of T (or, more generally, \(T_+\)) is expressed, while if it reaches a lower threshold, a decrease of T (or \(T_-\)) is expressed. Upon reaching either threshold, the filter is then also reset to some other state, which we take to be the zero filter state. Filter states can then be represented by integers, with the upper threshold at some integer value \(+ \varTheta _+ > 0\) and the lower threshold at some other integer value \(- \varTheta _- < 0\), where \(\varTheta _\pm > 0\). 
We will take the two thresholds to be symmetrical with respect to the zero filter state, so that \(\varTheta _\pm = \varTheta \) for some common value \(\varTheta \). Filter states are indexed by letters such as I and J, where \(I,J \in \{ -(\varTheta - 1), \ldots , 0, \ldots + (\varTheta - 1) \}\). The filter state performs a one-step random walk on this discrete set with absorbing boundaries at \(\pm \varTheta \). The one-step transitions are driven by the spike-induced UP \(\rightarrow \) OFF and DOWN \(\rightarrow \) OFF transitions from the STDP tristate switch, whose FPT densities we calculated above. Synaptic plasticity is expressed at the absorbing boundaries at \(\pm \varTheta \), at which the filter is then reset to the zero state. Figure 2A illustrates the transitions of such a synaptic filter for the particular case of \(\varTheta = 3\), explicitly showing the resetting of the filter to the zero state at threshold.
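
The filter rule just described can be sketched in a few lines of code. The following is a minimal illustration only: the function name `run_filter` and the encoding of potentiating and depressing induction signals as `+1` and `-1` are assumptions made here, and the timing of the signals (set by the STDP switch) is ignored.

```python
from typing import Iterable, List, Tuple


def run_filter(signals: Iterable[int], theta: int) -> Tuple[int, List[int]]:
    """Integrate induction signals in a symmetric filter with thresholds +/- theta.

    signals: sequence of +1 (potentiating) or -1 (depressing) induction signals.
    Returns the final filter state and the list of expressed steps
    (+1 for a potentiating step, -1 for a depressing step).
    """
    state = 0
    expressed: List[int] = []
    for sig in signals:
        if sig not in (1, -1):
            raise ValueError("induction signals must be +1 or -1")
        state += sig  # one-step random walk on the filter states
        if state == theta:  # upper threshold: express potentiation, reset
            expressed.append(1)
            state = 0
        elif state == -theta:  # lower threshold: express depression, reset
            expressed.append(-1)
            state = 0
    return state, expressed
```

For \(\varTheta = 3\), three consecutive potentiating signals express one potentiating step and return the filter to zero, whereas an alternating sequence never reaches threshold and so expresses nothing.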

Because synaptic plasticity is expressed at either filter threshold, we have the familiar issue of the transition rates in Fig. 2A changing due to these very transitions even when \(\lambda _\pi \) is fixed. In Fig. 2B, we therefore explicitly represent the thresholds as absorbing boundary states so that for fixed \(\lambda _\pi \) and thus fixed \(\lambda _p\), the transition rates are fixed. The temporal dynamics of the expression of synaptic plasticity are therefore determined by the FPT process for escape to these absorbing boundaries. To obtain the FPT densities for filter threshold events, we write down a renewal equation governing the transitions in joint synaptic STDP switch and filter states (see, e.g. Cox 1962, for a discussion of renewal equations). Let \(F_{J, Y}^{I, X}(t)\) be the probability for the transition from switch state Y and filter state J to switch state X and filter state I in time t without either filter threshold having been reached. Then, we have the renewal equation

for \(| I | < \varTheta \) and \(| J | < \varTheta \), subject to the boundary conditions \(F_{\pm \varTheta ,Y}^{I, X}(t) = 0\) (see Footnote 2). As convolution integrals typically appear in renewal equations, we will usually use the standard notation

for brevity. The interpretation of Eq. (17) is straightforward. The inhomogeneous term on the right-hand side allows for the possibility that an induction signal is not generated by the STDP switch in time t. In this case, the filter state cannot change (hence \(\delta _J^I\)), with the switch state possibly changing but not via a spike-driven induction process (hence \(F_Y^X(t)\)). The homogeneous terms allow for the possibility that an induction signal is first generated at some time \(0< \tau < t\). This may be a potentiating (hence \(G_Y^+(\tau )\)) or depressing (hence \(G_Y^-(\tau )\)) induction signal. The initial filter state J will then have increased to \(J+1\) or decreased to \(J-1\), respectively, but in either case, the STDP switch will be in the OFF state immediately after the induction signal has been generated at time \(\tau \). In the remaining time \(t-\tau \), the synapse must then transition from filter states \(J \pm 1\) and the OFF switch state to the final states I and X (hence \(F_{J {\pm } 1, \textrm{OFF}}^{I, X} (t-\tau )\)). The imposed boundary conditions exclude the possibility that threshold is reached in either of these two induction cases, as by definition \(F_{J, Y}^{I, X}(t)\) is the transition probability without threshold being reached. These three possibilities of no induction signal or a first induction signal of either potentiating or depressing type are mutually exclusive and exhaustive, so they just sum on the right-hand side.
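
For reference, a renewal equation consistent with this interpretation can be written out explicitly. The form below is reconstructed from the verbal description (one inhomogeneous term and two homogeneous induction terms) rather than copied from the typeset equation, so the ordering and layout of the terms are assumptions:

\[
F_{J, Y}^{I, X}(t) = \delta _J^I \, F_Y^X(t) + \int _0^t \textrm{d}\tau \, G_Y^+(\tau ) \, F_{J+1, \textrm{OFF}}^{I, X}(t-\tau ) + \int _0^t \textrm{d}\tau \, G_Y^-(\tau ) \, F_{J-1, \textrm{OFF}}^{I, X}(t-\tau ),
\]

where the convolution shorthand is taken to be \((f *g)(t) = \int _0^t \textrm{d}\tau \, f(\tau ) \, g(t-\tau )\), so that the two integrals are \(G_Y^\pm *F_{J \pm 1, \textrm{OFF}}^{I, X}\).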

Equation (17) integrates out the internal STDP switch transitions by focusing only on the induction densities rather than considering the detailed internal switch dynamics by which the induction signals are generated. It therefore integrates out the “microscopic” switch dynamics and works with the “mesoscopic” dynamics of filter transitions; the “macroscopic” dynamics discussed later will be the changes in strength triggered by filter threshold processes. To confirm that the non- or semi-Markovian mesoscopic renewal dynamics of Eq. (17) are completely equivalent to the underlying Markovian microscopic dynamics, we can differentiate Eq. (17) and use Eq. (7) for the induction densities \(G_Y^\pm (t)\). After a little algebra we obtain

where the sums are over \(Z \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\), and \(v^\pm _Y\) are the components of \(\underline{v}^\pm \). The expression multiplying \(\delta _J^I\) on the right-hand side is just the relevant element of \(\textrm{d} \mathbb {F}(t)/\textrm{d}t - \mathbb {F}(t) \, \mathbb {S}_0\). This vanishes identically because \(\mathbb {F}(t)\) satisfies the forward equation \(\textrm{d} \mathbb {F}(t)/\textrm{d}t = \mathbb {S}_0 \, \mathbb {F}(t)\), and hence, it also satisfies the backward equation \(\textrm{d} \mathbb {F}(t)/\textrm{d}t = \mathbb {F}(t) \, \mathbb {S}_0\). The first term on the right-hand side is also just a matrix multiplication written out element-wise, again with post- rather than pre-multiplication by \(\mathbb {S}_0\). This matrix gives transitions in STDP switch state without induction processes being generated, so the filter states do not change. The remaining two terms on the right-hand side account for the induction processes, in which the STDP switch returns to the OFF state and an increment or decrement in filter state occurs, with the imposed boundary conditions excluding the possibility of either filter threshold being reached. Equation (18) is just the backward form of the microscopic dynamics including all switch state transitions. We obtain the backward rather than forward form because the renewal equation in Eq. (17) can only be written in the backward form, in which the final states are fixed.

We can use the renewal equation in Eq. (17) to derive equations for the FPT densities \(G_{J, Y}^{\pm \varTheta }\) for the escape through filter thresholds \(\pm \varTheta \) from the initial filter state J and switch state Y. Using the equivalent relationship between \(F_{J, Y}^{I, X} (t)\) and \(G_{J, Y}^{\pm \varTheta } (t)\) as that between \(F_Y^X (t)\) and \(G_Y^\pm (t)\) in Eq. (9a), after taking the Laplace transform we obtain

Similar equations are satisfied by \(\widehat{G}_{J, Y}^{\, \pm \varTheta } (s)\) individually, of which Eq. (19) is their sum. These equations are just

subject to the absorbing boundary conditions \(\widehat{G}_{\pm \varTheta , \textrm{OFF}}^{\pm \varTheta } (s) = 1\) (same signs) and \(\widehat{G}_{\mp \varTheta , \textrm{OFF}}^{\pm \varTheta }(s) = 0\) (opposite signs). It is clear that if we have solved for \(\widehat{G}_{J, \textrm{OFF}}^{\pm \varTheta } (s)\), the density for escape starting from the OFF switch state, then the other densities \(\widehat{G}_{J, Y}^{\pm \varTheta } (s)\) for \(Y = \textrm{DOWN}\) and \(Y = \textrm{UP}\) follow immediately. Setting \(Y= \textrm{OFF}\) in Eq. (20), we obtain simple second-order recurrence relations, whose solutions are

where

Substituting the expressions in Eq. (21) into Eq. (20) at once gives all the \(\widehat{G}_{J, Y}^{\pm \varTheta }(s)\). An equation very similar to Eq. (13) can then be used to obtain the Laplace transforms of the derivatives of the expression rates, \(\widehat{\rho }_{J, Y}^{\pm \varTheta }(s)\). The expressions for \(\widehat{\rho }_{0, \textrm{OFF}}^{\pm \varTheta }(s)\), namely

are particularly simple, and are of fundamental importance here, as we will see later.
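
The structure of the second-order recurrence can be checked numerically. The sketch below assumes that, for \(Y = \textrm{OFF}\), the recurrence takes the renewal form \(\widehat{G}_{J}^{\pm \varTheta } = a \, \widehat{G}_{J+1}^{\pm \varTheta } + b \, \widehat{G}_{J-1}^{\pm \varTheta }\), with \(a\) and \(b\) standing for the Laplace-transformed induction densities \(\widehat{G}_{\textrm{OFF}}^{+}(s)\) and \(\widehat{G}_{\textrm{OFF}}^{-}(s)\) at some fixed \(s\), together with the absorbing boundary conditions stated above. The function names are hypothetical, and the system is solved by plain Gaussian elimination rather than by the closed-form solutions.

```python
from typing import List, Tuple


def solve_linear(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def escape_transforms(a: float, b: float, theta: int) -> Tuple[List[float], List[float]]:
    """Solve g_J = a*g_{J+1} + b*g_{J-1} for escape through +theta and -theta.

    Index k of each returned list corresponds to filter state J = k - (theta - 1).
    """
    n = 2 * theta - 1
    mat = [[0.0] * n for _ in range(n)]
    rhs_up = [0.0] * n
    rhs_down = [0.0] * n
    for k in range(n):
        mat[k][k] = 1.0
        if k + 1 < n:
            mat[k][k + 1] = -a
        else:
            rhs_up[k] = a  # step from J = theta - 1 onto the upper boundary
        if k - 1 >= 0:
            mat[k][k - 1] = -b
        else:
            rhs_down[k] = b  # step from J = -(theta - 1) onto the lower boundary
    return solve_linear(mat, rhs_up), solve_linear(mat, rhs_down)
```

At \(s = 0\), where \(a + b = 1\), the two solutions are the splitting probabilities for escape through the upper and lower thresholds, and they sum to unity for every initial filter state.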

We see from Eq. (17) that the various probabilities \(P_{J, Y}^{\, I, X}(t)\) for \(Y \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\) are controlled by the particular \(Y = \textrm{OFF}\) case, so by the probability \(P_{J, \textrm{OFF}}^{\, I, X} (t)\). This is also reflected in the escape densities \(G_{J, Y}^{\pm \varTheta }(t)\), all of which are controlled by \(G_{J, \textrm{OFF}}^{\pm \varTheta }(t)\). Starting from any initial switch state Y, one of the two possible initial induction densities \(G_Y^\pm (t)\) will drive the first induction event. However, all subsequent induction events will then be driven by the densities \(G_{\textrm{OFF}}^\pm (t)\), so starting from the OFF switch state. These OFF \(\rightarrow \) OFF switch transitions that trigger induction events are the key processes that drive changes in synaptic filter state. This is the reason for the control of filter transition probabilities and the corresponding filter threshold densities by the particular \(Y = \textrm{OFF}\) case. We have stressed the importance of these OFF \(\rightarrow \) OFF switch transitions before (Elliott 2010).

Synaptic plasticity is expressed by a synapse when its filter reaches threshold, but we may again consider a consistent scheme in which expression does not occur. Provided that \(\lambda _\pi \) and thus \(\lambda _p\) are fixed, the rates of the transitions in Fig. 2A are then fixed. In this case, we can consider the probability \(P_{J, Y}^{\, I, X}(t)\) for transitions between joint switch and filter states including the threshold processes and the subsequent resetting of the filter to the zero state (\(F_{J, Y}^{I, X}(t)\) excludes the possibility of reaching threshold and thus of resetting). The renewal dynamics become

Boundary conditions are not required. Rather, the two factors \(1 - \delta _J^{\pm (\varTheta - 1)}\) remove the upper and lower threshold process terms when \(J = \pm (\varTheta - 1)\). They would lead to the incorrect terms \(P_{\pm \varTheta , \textrm{OFF}}^{\, I, X}\) in this equation. Instead, these terms are replaced by the two terms multiplied by the two factors \(\delta _J^{\pm (\varTheta - 1)}\). These terms correctly reset the filter state to zero at threshold, giving the two different \(P_{0, \textrm{OFF}}^{\, I, X}\) contributions.
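
For reference, renewal dynamics with resetting that match this description can be sketched as follows. The form is reconstructed from the text (the factors \(1 - \delta _J^{\pm (\varTheta - 1)}\) and \(\delta _J^{\pm (\varTheta - 1)}\) and the two \(P_{0, \textrm{OFF}}^{\, I, X}\) contributions), with \(*\) denoting convolution over \([0, t]\); the ordering of the terms is an assumption:

\[
\begin{aligned}
P_{J, Y}^{\, I, X} = {} & \delta _J^I \, F_Y^X + \left( 1 - \delta _J^{+(\varTheta - 1)} \right) G_Y^+ *P_{J+1, \textrm{OFF}}^{\, I, X} + \left( 1 - \delta _J^{-(\varTheta - 1)} \right) G_Y^- *P_{J-1, \textrm{OFF}}^{\, I, X} \\
& + \delta _J^{+(\varTheta - 1)} \, G_Y^+ *P_{0, \textrm{OFF}}^{\, I, X} + \delta _J^{-(\varTheta - 1)} \, G_Y^- *P_{0, \textrm{OFF}}^{\, I, X} .
\end{aligned}
\]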

Unlike \(F_{J, Y}^{I, X}(t)\), which vanish as \(t \rightarrow \infty \) because filter threshold processes are inevitable given enough time, the transition probabilities \(P_{J, Y}^{\, I, X}(t)\) do not in general vanish in the equilibrium limit. Taking the Laplace transform of Eq. (24), we can use the Tauberian theorem that \(\lim _{s \rightarrow 0} s \, \widehat{P}_{J, Y}^{\, I, X} (s) = \lim _{t \rightarrow \infty } P_{J, Y}^{\, I, X} (t)\) to obtain

where we have used the fact that

Taking as usual \(Y = \textrm{OFF}\) and defining the \((2\varTheta -1) \times (2\varTheta -1)\) matrix \(\mathbb {E}\) with elements

where we index the elements according to the corresponding filter state, Eq. (25) can be written in the form

For any given final switch state X, this equation just expresses an element-wise matrix multiplication involving a matrix of asymptotic transition probabilities for filter states and the matrix \(\mathbb {E}\). We again have post-multiplication by the generating matrix \(\mathbb {E}\) because of the backward form of renewal equations. In this backward form, Eq. (28) encodes the trivial fact that the rows of the asymptotic filter state transition matrix (for fixed final switch state X) are proportional to the null left eigenvector of \(\mathbb {E}\), or just \(\underline{1}^{\textrm{T}}\). For the columns, we must consider the forward form of Eq. (28), which just entails pre-multiplication by \(\mathbb {E}\). Hence, all columns are the same (because of the row proportionality), with any given column proportional to the null right eigenvector of \(\mathbb {E}\). Write this as \(\underline{\varepsilon }\), where \(\underline{\varepsilon } \cdot \underline{1} = 1\), so that \(\underline{\varepsilon }\) is normalised to a probability distribution, and write its components as \(\varepsilon _I\), again indexed by filter state. In any given equilibrium filter state I with probability \(\varepsilon _I\), the system can reside in any of the three switch states \(X \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\). Their equilibrium probability distribution is just \(\sigma _X\). So, we must have \(P_{J, \textrm{OFF}}^{\, I, X} (\infty ) = \varepsilon _I \, \sigma _X\). This equilibrium result must be independent of the particular initial choice of \(Y = \textrm{OFF}\), which can be confirmed directly from Eq. (28). Hence, we have

as the asymptotic transition probabilities for joint filter and switch states.

An explicit calculation of the components \(\varepsilon _I\) gives

which may be verified by checking that \(\mathbb {E} \, \underline{\varepsilon } = \underline{0}\). In equilibrium, the conditional rate of the expression of a potentiating step, conditional on the switch state being in the OFF state, is just the probability of the filter being in state \(I = +(\varTheta - 1)\) at equilibrium, \(\varepsilon _{\varTheta -1}\), multiplied by the asymptotic rate of an induction step starting from the OFF switch state, \(r_{\textrm{OFF}}^+(\infty )\). Similarly for the expression of a depressing step. Hence, these conditional equilibrium rates are just \(r_{\textrm{OFF}}^\pm (\infty ) \, \varepsilon _{\pm (\varTheta - 1)}\) (same signs). They agree exactly with the asymptotic rates \(r_{0, \textrm{OFF}}^{\pm \varTheta } (\infty ) = \widehat{\rho }_{0, \textrm{OFF}}^{\pm \varTheta } (0)\) obtained from the FPT densities for filter thresholds being reached starting from the OFF switch state and the zero filter state given in Eq. (23). We have

We note again that although the asymptotic rates \(r_{0, \textrm{OFF}}^{\pm \varTheta } (\infty )\) explicitly depend on starting from the zero filter state, the equilibrium rates \(r_{\textrm{OFF}}^\pm (\infty ) \, \varepsilon _{\pm (\varTheta - 1)}\) have by definition forgotten about the initial filter state.
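
The equilibrium filter distribution and the associated expression rates can be illustrated numerically. The sketch below assumes, purely for illustration, that induction signals arrive at constant rates `r_plus` and `r_minus`, so that the filter with resetting is an ordinary continuous-time Markov chain; the generator built here is a stand-in for \(\mathbb {E}\) under that assumption, not the matrix defined in Eq. (27), and the function names are hypothetical.

```python
from typing import List


def solve_linear(matrix: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        tail = sum(aug[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def equilibrium_filter_distribution(r_plus: float, r_minus: float, theta: int) -> List[float]:
    """Null right eigenvector of the filter generator, normalised to sum to one.

    Index k corresponds to filter state I = k - (theta - 1); threshold events
    reset the filter to the zero state (index theta - 1).
    """
    n = 2 * theta - 1
    zero = theta - 1
    gen = [[0.0] * n for _ in range(n)]  # gen[i][j] = rate from state j to state i
    for j in range(n):
        up = j + 1 if j + 1 < n else zero  # reset on reaching +theta
        down = j - 1 if j - 1 >= 0 else zero  # reset on reaching -theta
        gen[j][j] -= r_plus + r_minus
        gen[up][j] += r_plus
        gen[down][j] += r_minus
    gen[n - 1] = [1.0] * n  # replace one balance equation by normalisation
    rhs = [0.0] * (n - 1) + [1.0]
    return solve_linear(gen, rhs)
```

In the symmetric case \(r^+ = r^- = r\), this gives the triangular distribution \(\varepsilon _I = (\varTheta - |I|)/\varTheta ^2\), so the equilibrium potentiating expression rate \(r \, \varepsilon _{\varTheta - 1}\) is \(r / \varTheta ^2\).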

2.3 Expressing changes in synaptic strength

We are now in a position to write down a renewal equation for joint changes in synaptic strength, filter and switch states. A synapse’s strength is assumed to take only discrete values, with a uniform spacing of T between consecutive strengths. We refer to a synapse’s strength state rather than its strength, indexing the strength state by letters such as A and B. As we consider only excitatory synapses, strengths cannot be negative, so \(A,B \in \{ 0, 1, 2, \ldots \}\). The strength of a synapse in strength state B is just TB. It will be convenient to employ an upper bound on synaptic strengths, but only for the purposes of obtaining numerical solutions of equations rather than as a hard bound to prevent run-away excitation. Analytically this upper bound is not required and we can often ignore it. When required, we take \(A,B \in \{ 0, 1, \ldots , N-1, N \}\), with N denoting the highest strength state available. We will always take N large enough, when required, so that it does not have a significant impact on our results. For a postsynaptic cell with just one synaptic input, as we are currently considering, its spike rate \(\lambda _p\) is a function of the presynaptic spike rate \(\lambda _\pi \) and the strength state B of its synaptic input. We set

where \(\lambda _{\textrm{spont}}\) is a spontaneous postsynaptic spike firing rate whose presence is essential to ensure the existence of non-trivial equilibrium patterns of synaptic connectivity (Elliott 2011b). The switch and filter state transitions considered above depend on both the presynaptic spike firing rate and the postsynaptic spike firing rate. They therefore depend explicitly on the synaptic strength state via \(\lambda _p\). If a synapse is in strength state B, we denote this dependence by writing, for example, the FPT densities as \(G_{J, Y; \, B}^{\pm \varTheta } (t)\) and \(G_{Y; \,B}^\pm (t)\) rather than the earlier \(G_{J, Y}^{\pm \varTheta } (t)\) and \(G_Y^\pm (t)\), and other related quantities are similarly modified.

The renewal equation for the transition probability from joint synaptic strength state B, filter state J and switch state Y to joint states A, I and X in time t is, for \(0<B<N\),

For \(B=0\), we replace the depressed term \(P_{B - 1, 0, \textrm{OFF}}^{\, A, I, X}\) on the right-hand side with \(P_{0, 0, \textrm{OFF}}^{\, A, I, X}\) to enforce a reflecting boundary condition at the lowest strength state, while with an upper boundary at \(B=N\), we replace the potentiated term \(P_{B+1, 0, \textrm{OFF}}^{\, A, I, X}\) with \(P_{N, 0, \textrm{OFF}}^{\, A, I, X}\). These reflecting boundaries enforce saturation dynamics in synaptic strength. Notice that despite this saturation, the switch and filter states are still returned to the OFF and zero states, respectively. We assume that the dynamics of switch and filter state transitions are not explicitly sensitive to a synapse’s current strength state (they are implicitly sensitive, but only via the dependence of \(\lambda _p\) on synaptic strength). Thus, a threshold filter transition caused by an induction signal triggered by the STDP switch will lead to an expression signal regardless of the synapse’s current strength state. The filter and STDP switch will then reset. Whether or not the synapse can actually act on the expression signal and implement a change in its strength state should not affect the filter and switch processes.
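
For reference, a renewal equation with the structure described here can be sketched as follows, for \(0< B < N\) and with \(*\) denoting convolution over \([0, t]\). It is reconstructed from the terms named in the text (the potentiated and depressed terms \(P_{B \pm 1, 0, \textrm{OFF}}^{\, A, I, X}\) and the filter threshold densities), so its exact layout is an assumption:

\[
P_{B, J, Y}^{\, A, I, X}(t) = \delta _B^A \, F_{J, Y; \, B}^{I, X}(t) + \left( G_{J, Y; \, B}^{+ \varTheta } *P_{B+1, 0, \textrm{OFF}}^{\, A, I, X} \right) (t) + \left( G_{J, Y; \, B}^{- \varTheta } *P_{B-1, 0, \textrm{OFF}}^{\, A, I, X} \right) (t) .
\]

The first term covers the case in which no threshold is reached in time t, so the strength state cannot change; the other two cover a first threshold event of potentiating or depressing type, after which the filter and switch restart from the zero and OFF states.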

For a single synapse, Eq. (33) is exact, with no approximations having been made to obtain it. It integrates out the details of the underlying filter and switch processes by considering only the filter threshold densities. We may again confirm by differentiation that the macroscopic strength dynamics of Eq. (33) correctly describe the mesoscopic filter and microscopic switch dynamics that generate the expression and induction signals leading to macroscopic strength changes. The equation essentially describes a one-step random walk on a finite or semi-infinite set of strength states between reflecting boundaries, where the expression densities drive the steps. Although the detailed sub-macroscopic dynamics have been integrated out, Eq. (33) retains vestiges of them by referencing the initial filter and switch states J and Y, respectively. It is clear, however, that the fundamental process is driven by the transitions starting from the OFF switch state and the zero filter state. We have already discussed the significance of the OFF \(\rightarrow \) OFF switch transitions. The zero \(\rightarrow \) zero filter state transitions are also fundamental in the same way. This is reflected in the presence of the terms \(P_{B \pm 1, 0, \textrm{OFF}}^{\, A, I, X}(t)\) in Eq. (33). Once the first expression step has occurred, the system has forgotten its initial filter and switch states, and the key dynamics are then driven by the expression processes from the joint zero filter and OFF switch states back to these states.

If we were to solve Eq. (33), we would first set \(J = 0\) and \(Y = \textrm{OFF}\) to obtain a recurrence relation in B for \(P_{B, 0, \textrm{OFF}}^{\, A, I, X}(t)\), and then the general forms \(P_{B, J, Y}^{\, A, I, X}(t)\) would follow. The key transition probabilities of interest are therefore \(P_{B, 0, \textrm{OFF}}^{\, A, I, X}(t)\), which control all the others. Furthermore, we will be concerned with the long-term, equilibrium behaviour of \(P_{B, 0, \textrm{OFF}}^{\, A, I, X}(t)\) (or some suitable version thereof), and this must become independent of the initial joint state. Therefore, we may without loss of generality simply set \(J = 0\) and \(Y = \textrm{OFF}\) in Eq. (33). Since we are interested only in the macroscopic dynamics of the evolution of synaptic strength states, we can also just sum over the final switch state X and filter state I, to obtain the marginal transition probability to strength state A from strength state B. By writing \(\mathscr {P}_B^{\, A}(t) = \sum _{I, X} P_{B, 0, \textrm{OFF}}^{\, A, I, X} (t)\), using the relationship between \(\sum _{I, X} F_{J, Y; \, B}^{I, X}(t)\) and \(G_{J, Y; \, B}^{\pm \varTheta }(t)\), taking the Laplace transform and then expressing \(\widehat{G}_{J, Y; \, B}^{\pm \varTheta } (s)\) in terms of \(\widehat{\rho }_{J, Y; \, B}^{\pm \varTheta }(s)\), Eq. (33) becomes

with obvious modifications at the reflecting boundaries. Since \(\mathscr {P}_B^{\, A} (0) = \delta _B^A\), we recognise the left-hand side as the Laplace transform of \(\textrm{d} \mathscr {P}_B^{\, A}(t)/\textrm{d}t\). Undoing the Laplace transform, we have

This is a backward equation for the evolution of the strength state transition probabilities \(\mathscr {P}_B^{\, A}(t)\) involving the memory kernels \(\rho _{0, \textrm{OFF}; \, B}^{\pm \varTheta } (t)\).

Since we have a backward equation, it is extremely tempting to move immediately to its forward form, which normally involves the trivial step of pre- rather than post-multiplying by the transition matrix implied by Eq. (35). In a backward equation, the final (joint) state is fixed and we evolve backwards in time to the probability distribution of the initial (joint) state. In a forward equation, the initial (joint) state is fixed and we evolve forwards in time to the probability distribution of the final (joint) state. Critically, however, in obtaining Eq. (35), we have summed over the final switch state X and filter state I and set their initial states to \(J = 0\) and \(Y = \textrm{OFF}\), since \(\mathscr {P}_B^{\, A} (t) = \sum _{I, X} P_{B, 0, \textrm{OFF}}^{\, A, I, X} (t)\). Although moving between the forward and backward Chapman–Kolmogorov equations for the evolution of the full transition probability \(P_{B, J, Y}^{\, A, I, X} (t)\) does involve the trivial transposition of the transition operator, no such move is therefore possible for the marginalised and restricted transition probability \(\mathscr {P}_B^{\, A} (t)\).

Nevertheless, Eq. (35) highlights the centrality of the internal state transitions in which, starting from the OFF switch and zero filter states, a synapse first expresses a change in strength state via an upper or lower filter threshold process, returning immediately back to the OFF switch and zero filter states. The memory kernels \(\rho _{0, \textrm{OFF}; \, B}^{\pm \varTheta } (t)\) integrate out all the internal details by which these two OFF & zero \(\rightarrow \) OFF & zero strength-changing transitions occur. As we are only interested in these internal dynamics insofar as they drive changes in strength state, we can elevate the memory kernels \(\rho _{0, \textrm{OFF}; \, B}^{\pm \varTheta } (t)\) to a fundamental status. We can therefore consider them as defining a one-step non- or semi-Markovian random walk on the set of strength states, inducing the transition probability \(P_B^{\, A} (t)\) between these strength states, where these strength states are now considered as structureless and irreducible. The backward equation describing this random walk is then of course identical to Eq. (35):

Clearly, \(P_B^{\, A} (t)\) and \(\mathscr {P}_B^{\, A} (t)\) evolve identically, but this identity is established not by equivalence but by formal correspondence. However, unlike \(\mathscr {P}_B^{\, A} (t)\), for which we cannot move immediately to the forward equation, the move to the forward Chapman–Kolmogorov equation for \(P_B^{\, A} (t)\) is now indeed just a trivial matter of matrix transposition. Although there is a formal correspondence between \(P_B^{\, A} (t)\) and \(\mathscr {P}_B^{\, A} (t)\), this difference emphasises the deep conceptual gulf between these transition probabilities: \(P_B^{\, A} (t)\) knows nothing about the internal states, while \(\mathscr {P}_B^{\, A} (t) = \sum _{I, X} P_{B, 0, \textrm{OFF}}^{\, A, I, X} (t)\) has simply marginalised over the final internal states and restricted to specific initial internal states.

The forward equation for \(P_B^{\, A} (t)\) is just

We have re-ordered the products in Eq. (37) compared to Eq. (36) because, in a forward equation, the expression step is the last rather than the first step. Equation (37) is the general form for \(0< A < N\). For \(A = 0\), we replace \(\rho _{0, \textrm{OFF}; \, A - 1}^{+ \varTheta } *P_B^{\, A-1}\) by \(\rho _{0, \textrm{OFF}; \, 0}^{- \varTheta } *P_B^{\, 0}\), which replaces a potentiating step from the non-existent \(A = -1\) state with the reflected depressing step from the \(A=0\) state itself. There is of course then a cancellation of the two \(A=0\) depression terms in Eq. (37), but explicitly including the reflection process is conceptually clearer. If we also have an upper, reflecting boundary at \(A = N\), then we would replace \(\rho _{0, \textrm{OFF}; \, A + 1}^{- \varTheta } *P_B^{\, A + 1}\) by \(\rho _{0, \textrm{OFF}; \, N}^{+ \varTheta } *P_B^{\, N}\), which now replaces a depressing step from the non-existent \(A=N+1\) state with the reflected potentiating step from the \(A=N\) state itself.
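
For reference, a forward equation with the terms and boundary replacements just described can be sketched as follows, for \(0< A < N\). It is reconstructed from the products quoted in the text, so the arrangement of the loss terms is an assumption:

\[
\frac{\textrm{d} P_B^{\, A}(t)}{\textrm{d}t} = \rho _{0, \textrm{OFF}; \, A - 1}^{+ \varTheta } *P_B^{\, A-1} + \rho _{0, \textrm{OFF}; \, A + 1}^{- \varTheta } *P_B^{\, A+1} - \left( \rho _{0, \textrm{OFF}; \, A}^{+ \varTheta } + \rho _{0, \textrm{OFF}; \, A}^{- \varTheta } \right) *P_B^{\, A} .
\]

With the \(A = 0\) replacement, the gain term \(\rho _{0, \textrm{OFF}; \, 0}^{- \varTheta } *P_B^{\, 0}\) cancels against the matching loss term, leaving only the potentiating loss and the depressing gain from \(A = 1\), as expected at a reflecting boundary.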

The forward equation in Eq. (37) for the transition probabilities \(P_B^{\, A}(t)\) is of course just equivalent to the master equation for the evolution of the strength states themselves, starting from the definite initial strength state B. We write the probability of strength state A as \(P_{A; \, 0, \textrm{OFF}} (t)\), where we add the zero and OFF labels to remind ourselves that these are state probabilities driven exclusively by the two OFF & zero \(\rightarrow \) OFF & zero strength-changing transitions. We stress that these are merely labels, and do not indicate internal states. This distinction will be vital in Sect. 3. The master equation is just

again with the same boundary modifications. We can take the Laplace transform of Eq. (38) to remove the convolution structure and solve directly for \(\widehat{P}_{A; \, 0, \textrm{OFF}}(s)\), obtaining \(P_{A; \, 0, \textrm{OFF}}(t)\) for any finite time t, at least in principle even when \(\lambda _\pi \) is time-dependent. Here we are mainly concerned with the equilibrium structure of the probability distribution of synaptic strength states rather than the process of evolution towards this equilibrium distribution. This focus is equivalent to considering the outcome (or the multiple possible outcomes) of neuronal development rather than the temporal dynamics of neuronal development. For the unrealistic case of fixed \(\lambda _\pi \), \(P_{A; \, 0, \textrm{OFF}}(t)\) has a well-defined equilibrium limit. However, for the realistic case of time-dependent \(\lambda _\pi \), an equilibrium limit in the conventional sense does not in general exist. This limit would have to be defined by considering the ensemble of all possible patterns of \(\lambda _\pi (t)\), taking the ensemble average \(\langle P_{A; \, 0, \textrm{OFF}}(t) \rangle _{\lambda _\pi }\) over these patterns, and finally taking the limit \(t \rightarrow \infty \). Whether this calculation is feasible remains to be seen. We must instead resort to approximation methods to separate time scales, which we discuss next.

2.4 Separation of time scales

For the first approximation, we consider the behaviour of the rates \(r_{\textrm{OFF}; \, B}^\pm (t)\) of induction signals generated by the STDP switch mechanism, starting from the OFF state. Assuming that \(\lambda _\pi \) is fixed and with \(\lambda _p\) therefore also fixed for processes not involving a change in synaptic strength state B, the asymptotic behaviour of the induction rates is in fact achieved very quickly. For the typical presynaptic spike rate \(\lambda _\pi \) that we consider below and with \(\lambda _- = 50\) Hz (or \(\tau _- = 20\) ms) and \(\lambda _+ \approx 83\) Hz (or \(\tau _+ = 12\) ms), asymptotic behaviour is achieved within around 10 ms (Elliott 2011b). This time scale is much shorter than the time scale on which natural stimulus patterns change in the visual system, for example. This latter time scale is determined by the average fixation time between saccades, and so is perhaps of order at least 100 ms. Hence, using a fixed spike firing rate \(\lambda _\pi \) to determine the time scale on which the induction rates attain their asymptotic behaviours is a consistent procedure, because the spike firing rate is indeed essentially fixed during the much longer fixation periods. In addition, as 10 ms is much shorter than typical stimulus times and certainly much shorter than any relevant time scales involving synaptic plasticity (see, e.g. Elliott 2011a), to a good approximation we may regard \(r_{\textrm{OFF}; \, B}^\pm (t)\) as behaving like a scaled Heaviside step function, instantaneously jumping from 0 Hz at \(t=0\) s to its asymptotic rate for \(t > 0\) s. Thus, we write \(r_{\textrm{OFF}; \, B}^\pm (t) \approx r_{\textrm{OFF}; \, B}^\pm (\infty ) \, H(t)\), where for concreteness we define \(H(t) = 0\) for \(t \le 0\) s and \(H(t) = 1\) for \(t > 0\) s. We then also have \(\rho _{\textrm{OFF}; \, B}^\pm (t) = \textrm{d} r_{\textrm{OFF}; \, B}^\pm (t)/\textrm{d}t \approx r_{\textrm{OFF}; \, B}^\pm (\infty ) \, \delta (t)\). 
We saw earlier that the asymptotic FPT induction rates \(r_{\textrm{OFF}; \, B}^\pm (\infty )\) match exactly the induction rates \(r_B^+ = \lambda _p \, \sigma _{\, \textrm{UP}}\) and \(r_B^- = \lambda _\pi \, \sigma _{\, \textrm{DOWN}}\) obtained from the equilibrium distribution \(\underline{\sigma }\) of switch states. We therefore simplify the notation by stripping off the subscript referring to the OFF switch state in these asymptotic induction rates, and instead just write \(r_B^\pm (t) \approx r_B^\pm \, H(t)\) and \(\rho _B^\pm (t) \approx r_B^\pm \, \delta (t)\). The Laplace transforms of the switch FPT densities for \(Y = \textrm{OFF}\) in Eq. (14) then become the simple expressions (again stripping off the subscript OFF)

so that \(G_B^\pm (t) = r_B^\pm \, \exp [ - (r_B^+ + r_B^-) \, t ]\), which are just exponentially distributed densities. An explicit calculation of the asymptotic or equilibrium rates \(r_B^\pm \) gives

which are just Eqs. (2.5) and (2.6) in Elliott (2011b) with \(s \rightarrow 0\) (or in the notation there, \(p \rightarrow 0\)).
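
The exponential densities quoted above fix their Laplace transforms directly. As a short worked step, using only \(G_B^\pm (t) = r_B^\pm \, \exp [ - (r_B^+ + r_B^-) \, t ]\) as stated in the text:

\[
\widehat{G}_B^\pm (s) = \int _0^\infty \textrm{d}t \, \textrm{e}^{-s t} \, r_B^\pm \, \textrm{e}^{- (r_B^+ + r_B^-) \, t} = \frac{r_B^\pm }{s + r_B^+ + r_B^-} .
\]

Setting \(s = 0\) gives the probabilities \(r_B^\pm / (r_B^+ + r_B^-)\) that the next induction signal is potentiating or depressing, and these sum to unity, as they must for a process in which an induction signal is eventually generated.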

For the second approximation, we consider the expression rates \(r_{0, \textrm{OFF}; \, B}^{\pm \varTheta } (t)\) rather than the induction rates \(r_{\textrm{OFF}; \, B}^\pm (t)\). As we now strip off the subscript OFF on \(r_{\textrm{OFF}; \, B}^\pm (t)\), we do the same on \(r_{0, \textrm{OFF}; \, B}^{\pm \varTheta } (t)\) and related functions, but it will be essential to retain reference to the initial filter state in Sect. 3, so we do not also strip off the subscript 0. Equation (21) gives the Laplace transforms of the expression densities \(\widehat{G}_{0; \, B}^{\pm \varTheta } (s)\) (setting \(J = 0\)), where in the expressions for \(\varPhi ^\pm (s)\) we use the approximation in Eq. (39) for the Laplace transformed induction densities. Because the Laplace transform of a probability density function is, up to the sign of s, just the moment generating function (MGF) of the distribution, we can use \(\widehat{G}_{0; \, B}^{\pm \varTheta } (-s)\) to obtain the mean time to express any change in synaptic strength state. Direct calculation shows this to be

For \(\varTheta = 1\), this time is well under one second for our typical choice of \(\lambda _\pi \) and with \(\lambda _p\) determined by direct synaptic drive (with \(\lambda _- = 50\) Hz and \(\lambda _+ \approx 83\) Hz). However, as \(\varTheta \) increases, the mean expression time increases. For \(\varTheta = 5\), it is typically a few seconds; for \(\varTheta = 10\), around ten seconds; for \(\varTheta = 20\), it approaches one minute; for \(\varTheta = 40\), a few minutes; and for \(\varTheta = 80\), closer to ten minutes. Choices of \(\varTheta \) that are too large are unrealistic from a biological point of view (Elliott 2011a), with \(\varTheta = 80\) likely too large but \(\varTheta = 40\) acceptable. For reasonable choices of \(\varTheta \) then, neither too small nor too large, filter threshold processes occur on time scales ranging from around ten seconds up to several minutes. These time scales are much longer than typical stimulus times driven by fixation periods of perhaps around 100 ms or somewhat more. Thus, in Eqs. (17) and (24) (with \(Y = \textrm{OFF}\)) for the filter dynamics, the induction rates \(r_B^\pm \) appearing via Eq. (39) cannot be regarded as fixed because the stimuli change on a time scale at least one order of magnitude, and perhaps even two orders of magnitude, faster than any filter threshold process. However, we may exploit the fact that the time scales are so different to make a second approximation. We assume that the filter dynamics in Eqs. (17) and (24) sample the changing induction rates \(r_B^\pm \) sufficiently often that we can replace the time-dependent rates \(r_B^\pm \) with their fixed ensemble averages \(\langle r_B^\pm \rangle \), where the average is taken over all possible stimulus patterns. This is an adiabatic approximation that allows a separation of processes occurring on two rather different time scales (Elliott 2011b).
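
The growth of the mean expression time with \(\varTheta \) can be illustrated with a short calculation. The sketch below assumes fixed induction rates and exponentially distributed induction times, so that the filter is a continuous-time random walk between absorbing thresholds; the closed form used is the standard gambler's-ruin result for that walk and is not claimed to be the typeset form of Eq. (41). The function names are hypothetical, and the second function is only an independent cross-check.

```python
def mean_expression_time(r_plus: float, r_minus: float, theta: int) -> float:
    """Mean time to reach either threshold +/- theta from the zero filter state."""
    total = r_plus + r_minus
    if abs(r_plus - r_minus) < 1e-12 * total:
        return theta ** 2 / total  # symmetric limit of the expression below
    ratio = (r_minus / r_plus) ** theta
    return theta / (r_plus - r_minus) * (1.0 - ratio) / (1.0 + ratio)


def mean_time_by_linear_solve(r_plus: float, r_minus: float, theta: int) -> float:
    """Cross-check: solve the mean first passage time equations directly.

    The equations are total*m_J - r_plus*m_{J+1} - r_minus*m_{J-1} = 1 with
    m = 0 at both thresholds, solved by the tridiagonal (Thomas) algorithm.
    """
    n = 2 * theta - 1
    total = r_plus + r_minus
    upper = [0.0] * n  # modified super-diagonal coefficients
    rhs = [0.0] * n  # modified right-hand side
    upper[0] = -r_plus / total
    rhs[0] = 1.0 / total
    for k in range(1, n):
        denom = total + r_minus * upper[k - 1]
        upper[k] = -r_plus / denom
        rhs[k] = (1.0 + r_minus * rhs[k - 1]) / denom
    m = [0.0] * n
    m[n - 1] = rhs[n - 1]
    for k in range(n - 2, -1, -1):
        m[k] = rhs[k] - upper[k] * m[k + 1]
    return m[theta - 1]  # entry for the zero filter state
```

In the symmetric limit the mean time is \(\varTheta ^2 / (r^+ + r^-)\), so it grows quadratically with the threshold. For instance, a purely hypothetical total induction rate of 10 Hz would give 10 s for \(\varTheta = 10\) and 160 s for \(\varTheta = 40\), the same orders of magnitude as the times quoted above.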

To average over the stimulus patterns of m afferents with spike rates \(\lambda _i\), \(i = 1, \ldots , m\), we use our earlier methods (Elliott 2011b). We write \(\lambda _i = \mu + \alpha _i\) and define the first- and second-order statistics of the fluctuations \(\alpha _i\) by

\[ \langle \alpha _i \rangle = 0, \qquad \langle \alpha _i \, \alpha _j \rangle = \varsigma ^2 \, \mathfrak {r}_{i j}, \tag{42} \]

so that \(\mu \) is the common mean spike firing rate, \(\varsigma ^2\) is the common variance in each afferent’s spike firing rate, and \(\mathfrak {r}_{i j}\) is the correlation coefficient between any pair of afferents’ spike firing rates; for \(i \ne j\), we take a common correlation coefficient \(\mathfrak {r}\), although we usually just set it to zero, i.e. \(\mathfrak {r}_{i j} = \delta _{i j}\). In simulation, we take \(\lambda _i \in \{ \mu - \varsigma , \mu + \varsigma \}\) with equal probability, with suitable pairwise correlations where necessary. Provided that \(\varsigma / \mu \) is sufficiently small, we showed that this restricted set of simulated spike firing rates fully captures an analytical ensemble average over any spike firing rate distribution satisfying the statistics in Eq. (42) (Elliott 2011b). Simulations are defined by firing epochs of duration 1000 ms. Spike firing rates are constant within each epoch and change between them. We take \(\mu = 50\) Hz and allow a standard deviation \(\varsigma \) of 25% around this mean value, in accordance with our previous protocols (Elliott 2011b).
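The two-valued sampling of spike firing rates described above can be sketched as follows. This is a minimal illustration for the uncorrelated case only (\(\mathfrak {r} = 0\) for \(i \ne j\)); the function names `sample_rates` and `two_point_moments` are hypothetical, and the construction of pairwise correlations is not attempted here.

```python
import random


def sample_rates(m, mu=50.0, rel_sd=0.25, rng=random):
    """Draw one stimulus pattern for m afferents.

    Each afferent independently fires at mu - s or mu + s with equal
    probability, where s = rel_sd * mu. Rates are in Hz and would be held
    constant for the duration of one firing epoch.
    """
    s = rel_sd * mu
    return [mu + s * rng.choice((-1.0, 1.0)) for _ in range(m)]


def two_point_moments(mu=50.0, rel_sd=0.25):
    """Exact mean and variance of the two-valued rate distribution."""
    s = rel_sd * mu
    values = (mu - s, mu + s)
    mean = sum(values) / 2.0
    variance = sum((v - mean) ** 2 for v in values) / 2.0
    return mean, variance


if __name__ == "__main__":
    rng = random.Random(0)                 # seeded only for repeatability
    print(sample_rates(4, rng=rng))        # one pattern for four afferents
    print(two_point_moments())             # mean mu and variance s**2
```

By construction the two-valued distribution has mean \(\mu \) and variance \(\varsigma ^2\), which is why it can stand in for any rate distribution with the same first- and second-order statistics when the fluctuations are small.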

The asymptotic and adiabatic approximations simplify the filter dynamics. For example, setting \(Y = \textrm{OFF}\) and summing over \(X \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\) in Eq. (17) to obtain the transition probability between filter states without threshold events, \(F_{J; \, B}^I(t)\) (again stripping off the OFF subscript and adding the strength state B), we obtain

again with boundary conditions \(F_{\pm \varTheta ; \, B}^I (t) = 0\). We have obtained this by employing the standard trick of taking the Laplace transform, using Eq. (39), and then inverting the transform. The memory kernels \(\rho _B^\pm (t)\) would otherwise have appeared under convolution integrals on the right-hand side of Eq. (43), but these integrals collapse because, with the asymptotic approximation, we have \(\rho _B^\pm (t) \approx r_B^\pm \, \delta (t)\). Then, with the adiabatic approximation, \(r_B^\pm \) are replaced by their averages \(\langle r_B^\pm \rangle \). A similar simplification of Eq. (24) also occurs. Equation (43) can be solved explicitly. Writing it as the matrix equation \(\textrm{d} \mathbb {F}_B(t) / \textrm{d}t = \mathbb {F}_B(t) \, \mathbb {E}_0\), the matrix elements \([ \, \mathbb {E}_0 ]_{I,J}\) are

The matrix \(\mathbb {E}_0\) is just the earlier matrix \(\mathbb {E}\), whose elements are given in Eq. (27), but lacking the filter resetting terms and having been ensemble averaged. Because \(\mathbb {E}_0\) is a tridiagonal matrix, its eigen-decomposition is standard, and so the filter transition probabilities without threshold processes can be explicitly computed (van Kampen 1992; see also Elliott and Lagogiannis 2012). We obtain

where we have not simplified the expression any further so that the left and right eigenvectors and associated eigenvalues of \(\mathbb {E}_0\) can be read off from this form. Because the filter threshold FPT densities are in general given by (cf. Eqs. (9b) and (9c))

or \(\widehat{G}_{J; \, B}^{\pm \varTheta } (s) = \widehat{\rho }_B^\pm (s) \widehat{F}_{J; \, B}^{\pm (\varTheta - 1)} (s)\), which may be verified by directly solving the Laplace-transformed Eq. (17) (after setting \(Y = \textrm{OFF}\) and summing over \(X \in \{ \textrm{DOWN}, \textrm{OFF}, \textrm{UP} \}\)) and using Eq. (21), we can just write down explicit forms for \(G_{J; \, B}^{\pm \varTheta } (t)\) using Eq. (45). With the asymptotic approximation, the integral in Eq. (46) collapses, and the adiabatic approximation then gives

Although these are explicit solutions without using the Laplace transform, the Laplace-transformed versions are often simpler to use. With the asymptotic and adiabatic approximations, the results in Eq. (21) retain their given forms, but with \(\varPhi _B^\pm (s)\) in Eq. (22) replaced by

The same replacement also occurs in Eq. (23) for \(\widehat{\rho }_{0; \, B}^{\pm \varTheta } (s)\).
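To make the remark about the standard eigen-decomposition of a tridiagonal matrix concrete, the following sketch builds a matrix of the same tridiagonal type as \(\mathbb {E}_0\) and checks the textbook closed-form eigenvalues and right eigenvectors of a tridiagonal Toeplitz matrix against it. The layout is an assumption: the element in row I and column \(I + 1\) is taken to be a constant step-up rate `r_plus`, the element in column \(I - 1\) a constant step-down rate `r_minus`, and the diagonal is their negated sum. The rate values are hypothetical, and the left eigenvectors are not shown.

```python
import math


def build_generator(theta, r_plus, r_minus):
    """Tridiagonal matrix over the interior filter states -theta+1, ..., theta-1."""
    n = 2 * theta - 1
    E = [[0.0] * n for _ in range(n)]
    for i in range(n):
        E[i][i] = -(r_plus + r_minus)      # total rate of leaving state i
        if i + 1 < n:
            E[i][i + 1] = r_plus           # step up by one filter state
        if i - 1 >= 0:
            E[i][i - 1] = r_minus          # step down by one filter state
    return E


def eigenpair(theta, r_plus, r_minus, k):
    """k-th eigenvalue and right eigenvector, for k = 1, ..., 2*theta - 1."""
    n = 2 * theta - 1
    angle = k * math.pi / (n + 1)
    lam = -(r_plus + r_minus) + 2.0 * math.sqrt(r_plus * r_minus) * math.cos(angle)
    ratio = math.sqrt(r_minus / r_plus)
    vec = [ratio ** j * math.sin(j * angle) for j in range(1, n + 1)]
    return lam, vec


def residual(E, lam, vec):
    """Largest component of E @ vec - lam * vec in absolute value."""
    n = len(vec)
    return max(
        abs(sum(E[i][j] * vec[j] for j in range(n)) - lam * vec[i])
        for i in range(n)
    )


if __name__ == "__main__":
    theta, r_plus, r_minus = 5, 3.0, 2.0   # hypothetical values
    E = build_generator(theta, r_plus, r_minus)
    for k in range(1, 2 * theta):
        lam, vec = eigenpair(theta, r_plus, r_minus, k)
        print(k, lam, residual(E, lam, vec))
```

All eigenvalues are strictly negative, which reflects the steady loss of probability from the interior filter states through the absorbing thresholds at \(\pm \varTheta \).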

Although the filter dynamics collapse down to a Markov process with the asymptotic approximation, the strength dynamics do not collapse in this way. With the asymptotic and adiabatic approximations, for completeness we restate the master equation in Eq. (38) for strength states,

where the Laplace transforms of \(\rho _{0; \, B}^{\pm \varTheta }(t)\) are given in Eq. (23) (dropping the subscript OFF and adding the B index), with \(\varPhi _B^\pm (s)\) given by Eq. (48). We have also stripped off the OFF label on \(P_{A; \, 0, \textrm{OFF}} (t)\) to leave \(P_{A; \, 0} (t)\), because of the asymptotic approximation. The two approximations have simplified the memory kernels \(\rho _{0; \, B}^{\pm \varTheta }(t)\), but they are still present, so that the strength transitions continue to be governed by non- or semi-Markovian dynamics determined by non-exponential waiting times driven by the filter threshold FPT densities.

2.5 Equilibrium strength distribution

As discussed, although we can solve Eq. (49) at least for the Laplace transform of \(P_{A; \, 0}(t)\) and thus obtain the full time-dependence, we are more interested in the asymptotic behaviour of these probabilities, giving their equilibrium structure. With the adiabatic approximation in particular, the equilibrium limit is well-defined. Taking the Laplace transform of Eq. (49) and then again using the standard Tauberian theorem for the equivalence of the \(s \rightarrow 0\) and \(t \rightarrow \infty \) limits, the resulting equation for the equilibrium distribution, \(P_{A; \, 0} (\infty )\), is

with appropriate changes for the reflecting boundary conditions at \(A=0\) and possibly at \(A=N\). Because \(r_{0; \, A}^{\pm \varTheta } (\infty ) = \widehat{\rho }_{0; \, A}^{\pm \varTheta } (0)\), from Eq. (23) we have the explicit forms

for the coefficients in this recurrence relation for \(P_{A; \, 0} (\infty )\). By swapping the positions of the two \(r_{0; \, A}^{\pm \varTheta } (\infty ) \, P_{A; \, 0} (\infty )\) terms in Eq. (50), it is manifest that if the \(P_{A; \, 0} (\infty )\) for \(A > 0\) satisfy the relationship

then Eq. (50) is immediately satisfied. The \(A=0\) boundary case \(P_{\, 0; \, 0} (\infty )\) is used to normalise the distribution. This observation generates the usual solution for the equilibrium distribution of a general one-dimensional one-step random walk on a finite or semi-infinite set of states (van Kampen 1992), where for \(A>0\),

The product is absent for the \(A = 1\) case.
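The detailed-balance construction of the equilibrium distribution of a one-step random walk can be sketched numerically as follows. The callables `up` and `down` stand in for the equilibrium expression rates of potentiating and depressing steps out of each strength state; they are hypothetical placeholders, not the model's rates, and the walk is taken to have reflecting boundaries at both ends of a finite set of states.

```python
def equilibrium(up, down, n_states):
    """Equilibrium distribution of a one-step random walk on states 0..n_states-1.

    up(a) is the rate of the step a -> a + 1 and down(a) the rate of the step
    a -> a - 1. Detailed balance, P[a-1] * up(a-1) = P[a] * down(a), fixes each
    P[a] relative to P[0]; P[0] is then set by normalisation.
    """
    weights = [1.0]
    for a in range(1, n_states):
        weights.append(weights[-1] * up(a - 1) / down(a))
    total = sum(weights)
    return [w / total for w in weights]


def balance_residual(p, up, down):
    """Largest violation of the full (global) balance equation over all states."""
    n = len(p)
    worst = 0.0
    for a in range(n):
        inflow = 0.0
        outflow = 0.0
        if a > 0:                          # exchange with the state below
            inflow += p[a - 1] * up(a - 1)
            outflow += p[a] * down(a)
        if a < n - 1:                      # exchange with the state above
            inflow += p[a + 1] * down(a + 1)
            outflow += p[a] * up(a)
        worst = max(worst, abs(inflow - outflow))
    return worst


if __name__ == "__main__":
    # Hypothetical state-dependent rates, for illustration only.
    toy_up = lambda a: 1.0 + 0.5 * a
    toy_down = lambda a: 0.2 * a * a + 0.5
    p = equilibrium(toy_up, toy_down, 26)
    print(p[:5], balance_residual(p, toy_up, toy_down))
```

Checking the full balance equation separately, rather than only the pairwise relationship used to build the distribution, mirrors the argument above that the pairwise relationship is sufficient for the equilibrium equation to hold.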

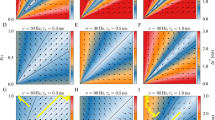

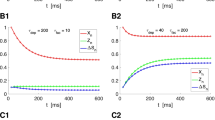

Fig. 3 Equilibrium probability distribution of synaptic strength for a single afferent synapsing on a target cell. The distribution \(P_{A; \, 0} (\infty )\) is plotted against the synapse’s strength TA for various choices of the plasticity step size T as indicated. In panel A, we take \(\varTheta = 1\), while in panel B, we take \(\varTheta = 2\). The strength TA takes only the discrete values dictated by the strength state A scaled by T, so that in the displayed interval [0, 1] there are \(1 + 1/T\) discrete data points per line. The parameters are: \(\mu = 50\) Hz, \(\varsigma / \mu = 1/4\), \(\lambda _- = 50\) Hz (or \(\tau _- = 20\) ms), \(\lambda _+ = \lambda _- / \rho \) where \(\rho = 3/5\), and \(\lambda _{\textrm{spont}} = 0.1\) Hz.

In Fig. 3, we plot the equilibrium distribution of the strength of a single afferent synapsing on a target cell for two different choices of \(\varTheta \) and different plasticity step sizes T. We see that for \(\varTheta = 1\) and \(T = 1/25\), the equilibrium distribution is tightly concentrated around the \(A=0\) state. As T reduces, the distribution first broadens, then develops a maximum away from \(A=0\), and finally sharpens up around this new maximum. A maximum of a probability distribution corresponds to a quasi-stable state that is frequently occupied by a system. If there are multiple maxima, then over time the system occupies all of them, with fluctuations driving transitions between them. As a distribution sharpens up around multiple maxima, the mean passage time for transitions between these maxima increases. It may increase to the extent that any individual maximum is effectively stable, because the fluctuations that drive transitions between the maxima develop on time scales that can for all practical purposes be regarded as infinitely long, or at least vastly longer than any relevant biological time scale. For \(\varTheta = 2\), we observe the same behaviour as for \(\varTheta = 1\), but a larger value of T for \(\varTheta = 2\) corresponds, roughly speaking, to a smaller value of T for \(\varTheta = 1\), in terms of the overall profile of the equilibrium probability distribution.

We have taken \(\lambda _{\textrm{spont}} = 0.1\) Hz in Fig. 3. Using instead \(\lambda _{\textrm{spont}} = 0\) Hz, the equilibrium distribution collapses down to \(P_{A; \, 0} (\infty ) = \delta _{\, 0}^A\), so that it is entirely concentrated on the \(A=0\), zero strength state. From Eq. (40), we see that if \(\lambda _p = 0\) Hz, then \(r_A^\pm = 0\) Hz, so that synaptic plasticity switches off in the switch model of STDP. In the absence of spontaneous postsynaptic activity, the postsynaptic spike firing rate can only become zero in the \(A=0\) state. Hence, the \(A=0\) state is an absorbing state in the absence of spontaneous postsynaptic activity, so we must allow a small, non-zero level of postsynaptic spontaneous activity to prevent this uninteresting case from arising. The equilibrium distribution is insensitive to the precise value of \(\lambda _{\textrm{spont}}\) provided that it is not taken to be too large.

We denote by \(T_{\textrm{C}}\) the critical value of T that corresponds to the first appearance of a maximum of \(P_{A; \, 0} (\infty )\) for some \(A > 0\) as T is reduced. This value \(T_{\textrm{C}}\) corresponds to a value of T that induces a bifurcation in the number of maxima of \(P_{A; \, 0} (\infty )\). For \(\varTheta = 1\), we previously calculated an explicit expression for \(T_{\textrm{C}}\) (Elliott 2011b). That calculation took a continuum limit, regarding the synaptic strength \(S=TA\) as a continuous rather than discrete quantity in Eq. (53); replaced a sum by an integral in order to evaluate the logarithm of the product appearing in Eq. (53); and for simplicity used only \(\lambda _\pi = \mu \). Repeating this calculation for general \(\varTheta \), we find that

where

For smaller values of \(\varTheta \), \(T_{\textrm{C}}\) scales nearly linearly with \(\varTheta \), but as \(\varTheta \) increases further, \(T_{\textrm{C}}\) asymptotes to a value independent of \(\varTheta \), where the asymptotic value is

We have observed this behaviour previously (Elliott 2011b) but not formally derived it, because previously we derived Eq. (54) only for the specific \(\varTheta = 1\) case. Fig. 4 shows the dependence of the location of the \(A>0\) maximum of \(P_{A; \, 0} (\infty )\) on T, and the dependence of the corresponding value of \(T_{\textrm{C}}\) on \(\varTheta \). For \(\varTheta \lessapprox 8\), we see that the analytical expression for \(T_{\textrm{C}}\) in Eq. (54) agrees very closely with the value of \(T_{\textrm{C}}\) obtained by numerical methods. The agreement is still reasonable for larger values of \(\varTheta \), but the approximation method used to obtain Eq. (54) requires considering both \(S = TA\) and T to be small, so it starts to break down as \(T_{\textrm{C}}\) increases above around 0.1.
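One way in which a critical step size could be located numerically is sketched below: test a discrete distribution for a local maximum away from the zero strength state, and bisect on T for the point at which such a maximum first appears. The function names and the callable `distribution`, which is assumed to return the (possibly unnormalised) equilibrium distribution for a given T, are hypothetical; the sketch further assumes a single transition between `t_low` (maximum present) and `t_high` (maximum absent), and is not a description of the numerical method actually used for Fig. 4.

```python
def interior_maximum(p):
    """Return the state A > 0 at the largest local maximum of p, or 0 if none exists."""
    best = 0
    for a in range(1, len(p)):
        left = p[a - 1]
        right = p[a + 1] if a + 1 < len(p) else float("-inf")
        if p[a] > left and p[a] >= right:
            if best == 0 or p[a] > p[best]:
                best = a
    return best


def critical_step_size(distribution, t_low, t_high, tol=1e-6):
    """Bisect for the step size at which a maximum at A > 0 first appears.

    Assumes distribution(t_low) has a maximum away from A = 0, that
    distribution(t_high) does not, and that there is one transition between.
    """
    while t_high - t_low > tol:
        mid = 0.5 * (t_low + t_high)
        if interior_maximum(distribution(mid)) > 0:
            t_low = mid                    # maximum still present: move up
        else:
            t_high = mid                   # maximum gone: move down
    return 0.5 * (t_low + t_high)


if __name__ == "__main__":
    # Hypothetical three-state family with a maximum at A = 2 only for T < 0.3.
    toy = lambda t: [1.0, 0.5, 0.8 - t]
    print(critical_step_size(toy, 0.0, 1.0))
```

Because the strength states are discrete, a bisection of this kind resolves the critical value only up to the granularity with which the supplied distribution depends on T.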

Fig. 4 Scaling of critical synaptic plasticity step size with filter threshold for a single afferent synapsing on a target cell. In panel A, we plot the strength state \(A > 0\) corresponding to a maximum of \(P_{A; \, 0} (\infty )\) away from the zero strength state, as a function of the plasticity step size T, for different choices of threshold \(\varTheta \) as indicated. As T is reduced, the value at which A suddenly jumps from \(A=0\) to some value \(A>0\) corresponds to a bifurcation. In panel B, we plot this critical value \(T_{\textrm{C}}\) as a function of \(\varTheta \), where we obtain \(T_{\textrm{C}}\) either numerically from the exact expression for \(P_{A; \, 0} (\infty )\) (black line) or via the analytical approximation discussed in the main text (red line). Parameter choices correspond to those used in Fig. 3.