Abstract

Over the last decades, computer modeling has evolved from a supporting tool for engineering prototype design to an ubiquitous instrument in non-traditional fields such as medical rehabilitation. This area comes with unique challenges, e.g. the complex modeling of soft tissue or the analysis of musculoskeletal systems. Conventional modeling approaches like the finite element (FE) method are computationally costly when dealing with such models, limiting their usability for real-time simulation or deployment on low-end hardware, if the model at hand cannot be simplified without losing its expressiveness. Non-traditional approaches such as surrogate modeling using data-driven model order reduction are used to make complex high-fidelity models more widely available regardless. They often involve a dimensionality reduction step, in which the high-dimensional system state is transformed onto a low-dimensional subspace or manifold, and a regression approach to capture the reduced system behavior. While most publications focus on one dimensionality reduction, such as principal component analysis (PCA) (linear) or autoencoder (nonlinear), we consider and compare PCA, kernel PCA, autoencoders, as well as variational autoencoders for the approximation of a continuum-mechanical system. In detail, we demonstrate the benefits of the surrogate modeling approach on a complex musculoskeletal system of a human upper-arm with severe nonlinearities and physiological geometry. We consider both, the model’s deformation and the internal stress as the two main quantities of interest in a FE context. By doing so we are able to create computationally low-cost surrogate models which capture the system behavior with high approximation quality and fast evaluations.

Similar content being viewed by others

1 Introduction

With the growing availability of virtual and augmented reality (AR/VR) devices, interest in scientific applications for this type of hardware is also increasing across different research fields, e.g. immersive data and simulation analysis or computer aided surgery [13, 4]. Placing the user in an environment that allows for direct, intuitive interaction with data and models, having them properly represented in the same space as the user, or providing crucial information directly in a persons field of vision to aid them in the task they are performing, holds great potential for disciplines, which are—so far—primarily experienced through regular screens. In particular, musculoskeletal system models with realistic muscle geometries and material behavior are relevant for physiotherapy applications, where the muscle’s shape, deformation, as well as their inner stress are important. The stress can be used to derive the force output and the joint torques which are the main quantities of interest in a physiotherapy context but also many other mechanical applications. However, as comfort of the wearer, and thus weight and size of the device, are primary concerns for the manufacturers, the computational resources an AR/VR headset can provide are quite limited.

Previously, the musculoskeletal biomechanics simulations that were able to be executed on resource-poor systems or meet real-time performance requirements usually relied on multibody systems using so-called lumped parameter models like Hill-type muscles for the muscle tissue [22]. Lumped parameter models capture the macroscopic behavior of the muscle tissue in an arrangement of discrete elastic, dampening and contractile elements. Consequently, they can be used for forward simulations up to the scale of full-body models modelling the skeleton as multibody systems. While very performant and able to exhibit some key characteristics of muscles they only offer a one-dimensional representation [39].

Realistic muscle geometries like the three-dimensional distribution of stress and deformation or contact mechanics cannot be addressed with this type of model. For these purposes a continuum-mechanical material description in combination with the finite-element method (FE) is well suited for dealing with the large deformations encountered in human soft tissues, like skeletal muscles. These capabilities do, however, come at a considerable computational cost, making them undesirable for any type of time-sensitive simulation or evaluation heavy application like inverse problem solving musculoskeletal biomechanics [18].

However, a new generation of data-based surrogate models from the field of data-driven model order reduction (MOR) enables to transfer the knowledge from complex high-fidelity models to highly efficient and trainable algorithms. Most publications in this context focus on academic problems rather than practical, application-oriented examples. For example, [18, 14, 30] deal among others with the Burgers’ equation, while other publications consider the Navier–Stokes equation [21] or other fluid dynamics problems [38]. Publications that are closer to application, in the field of structural dynamics, mostly concentrate on crash simulation models [19, 26, 24] or external excited structures and micromirrors [15, 10]. Human body models, on the contrary, are rarely considered in data-based surrogate modeling approaches but come with specific challenges compared to other structural dynamical systems. In particular, the model considered in this publication implements a highly nonlinear material model, with physiological muscle and bone geometries, causing uniquely complex input output behavior for a mechanical system. Parts of the data from this model have already been successfully approximated using a sparse interpolation scheme involving cubic B-splines [43].

Related publications from the field of structural dynamics [19, 26, 27, 24] show that the derived surrogate models are capable of approximating the systems displacement, i.e. spatial configuration, well. That said, other physical quantities such as stress have been neglected so far or were only calculated in a post-processing step using standard FE tools like [15]. Even if the methods are often attributed a general validity, in reality there are non-negligible differences and associated requirements between the quantities to be approximated.

Consequently, the success of a surrogate model approach for this application is not guaranteed and proofs to be challenging. It is not clear which surrogate modeling approach is suited for which quantity and which application. Hence, we not only want to showcase to which extent surrogate models can be applied to the aforementioned problem but also present a variety of different algorithms along with a comprehensive comparison and design guidance. In this way, we hope to contribute to the work of other researchers tackling similar problems. In detail, we focus on data-driven non-intrusive model order reduction (MOR).

1.1 Data-driven model order reduction

In addition to classical machine learning, the field of model order reduction provides a broad library of methods for the efficient approximation of high-dimensional systems. Aside from classical linear techniques like modal or Krylov reduction and parametrized model reduction, as well as classical nonlinear techniques such as collocation-based methods or optimized global cubature methods [3, 12], data-driven approaches have become a popular solution and close the gap to machine learning. Their benefits include their ability to handle black- and gray-box models and to capture nonlinear behavior. Furthermore, the disadvantage of having to manipulate simulation code is avoided. Unfortunately, this generally requires a costly offline phase and physical behavior can often no longer be guaranteed.

A concept, which many of the data-driven MOR approaches share, is the idea of transforming a high-dimensional state onto a low-dimensional space (reduction) where the relationship between input variables and a low-dimensional representation of the state is learned (regression). Many publications in this context are focused on the use of specific choice of reduction and regression algorithms but obviously there are endless possible combinations. To account for all of them and to understand this idea as a general and modular framework, we will refer to this type of method as low-rank regression (LRR). An example for this can be found in [21], where proper orthogonal decomposition (POD) is combined with a neural network to extract a low-dimensional subspace from data first and predict the reduced dynamics of a parametrized partial differential equation second. The authors refer to this certain approach as POD-NN.

When linear reduction techniques reach their limits regarding approximation quality, nonlinear techniques can provide better dimensionality reduction capabilities. In [38], kernel proper orthogonal decomposition is used as nonlinear extension of POD in combination with neural networks to approximate parametrized partial differential equations. Another popular nonlinear dimensionality reduction approach are neural networks in the form of autoencoders (AE) [29]. They are composed of a decoder that maps the high-dimensional data onto a low-dimensional representation and an encoder which follows the task to reconstruct the full data from this reduced representation. In [14], for example, deep convolutional autoencoders are used along with feed-forward neural networks as surrogate models in cardiac electrophysiology. Other applications of convolutional autoencoders in the context of MOR can be found in [17, 30]. Convolutional neural networks are a popular choice for the task of reduction as they possess a lower number of trainable parameters compared to fully connected neural networks. Moreover, for structured, grid-like data, they are able to detect local patterns using filters. Unfortunately, they are not suited for finite element models which in general are not spatially discretized as grid. This can be remedied by using graph convolutions [11], which are compared to a fully connected neural network in the context of MOR in [18]. Graph convolutional autoencoders have been applied in the field of computer vision in combination with mesh sampling operations to reconstruct 3D faces [34] from a low-dimensional space. Moreover, many combinations of different reduction methods are possible: autoencoders which complement a linear subspace [41] or a series of linear and nonlinear reduction methods, where the first method reduces the system to a certain degree, followed by further reduction by the second method, see for example [10].

In contrast to the aforementioned methods which try to approximate the reduced system using a black-box model, other approaches aim to discover low-dimensional governing equations. In [9] an autoencoder is used to find a low-dimensional space in which the governing equations of the system are identified using sparse regression on a library of suitable function candidates. This approach can also be extended to discover hidden variables by adding time-delay embedding, see [6]. In the context of structural dynamics, this kind of approach was used to discover low-dimensional equations of a beam, which can then be used for continuation [10].

1.2 Scope and overview

In this work, we use the LRR framework to approximate the behavior of a complex three-dimensional musculoskeletal biomechanics model in the form of a human arm. In doing so, we not only consider its motion in form of node displacements but also develop surrogate models that directly predict the resulting internal stress. For the investigations we put our focus on the identification of low-dimensional coordinates describing the system states. In particular, we aim to investigate the advantages and disadvantages of linear and nonlinear reduction methods for application to various physical quantities in continuum-mechanical models and derive according recommendations. Thereby, we compare multiple reduction techniques, namely, the frequently used principal component analysis (PCA), its nonlinear extension kernel principal component analysis (KPCA) as well as fully-connected classical autoencoders (AE) and variational autoencoders (VAE) as further nonlinear alternatives. For this purpose, reference data is obtained from a high-fidelity FE model based on different muscle activation levels. With this data the reduction algorithms are fitted and used to create a low-dimensional representation of the data. The relation between muscle activation and reduced coordinates is approximated using Gaussian process (GP) regression. For the approximation of the arm’s full behavior, the GP ’s predictions are transformed back into original space.

The theoretical background can be found in Sect. 2, the resulting algorithm and its implementation in Sect. 3, while the results for the arm model are presented and discussed in Sect. 4 followed by the conclusion in Sect. 5.

The contributions of our work can be summarized as:

-

1.

We develop a sophisticated and precise surrogate model for a complex human arm model that is both real-time capable and accurate even for a five-dimensional parameter dependency.

-

2.

We apply the LRR framework to the challenging and often overlooked but important quantity of stress, demonstrating its effectiveness and limits.

-

3.

We use KPCA as a rarely used nonlinear alternative of PCA compared to autoencoders in the LRR framework and provide a comparison of all mentioned reduction methods.

-

4.

We provide guidance for the selection of reduction techniques based on their ability to reconstruct and generalize data accurately.

2 Methods

The underlying approximation method used in this paper relies on a coordinate transformation to find a low-dimensional description of the system and on a regression algorithm to learn the behavior of the system. Although we start with introducing the specific human upper-arm model, the used framework is explained in general so that it can be adapted and applied to other problems.

2.1 Model

Muscles are the driving organs, i.e. the actuators, of the human body. Together with soft tissues such as tendons, they are essential for the proper interaction and functioning of the human organism. For example, muscle activations have a great impact in car crashes, see [31]. It can stiffen extremities, reduce the load on certain parts of the body, and has a huge impact on the likelihood of injuries.

The considered human upper-arm model consists of the radius and ulna bones for the lower arm and the humerus for the upper-arm, see Fig. 1.

The elbow joint connecting them is modelled as a simple hinge joint. It contains five muscle actuating this joint, two extensors and three flexors. Namely these are the m. triceps brachii and the m. anconeus, as well as the m. biceps brachii, the m. brachialis and the m. brachioradialis. The geometry of all these components is modelled after the Visible Human Male dataset [2]. In [5], a previous iteration of this upper limb model is described and details regarding the constitutive and material modelling are pointed out. Additionally a manuscript on a new version of the model is to be submitted shortly [5]. Since the model used here heavily relates to the aforementioned one, we will primarily describe parts of the model that differ from the previous version, and those which are most relevant to this work. The upper head of the humerus bone, normally connected to the shoulder, is fixed in an upright position, making the elbow joint the only mechanical degree of freedom in the model.

The input of the model consists of a vector of activation parameters \({\varvec{\mu }}\in \mathcal {M}\subseteq \mathbb {R}^{5}\). Each activation \(\mu _j \in [0,1]\) with \(j=1,...,5\) is associated with one muscle in the model, representing the percentage of the maximum possible active stress currently output by the muscle.

The overall stress in the j-th muscle can be written as a sum of isotropic and anisotropic contributions \({\varvec{\sigma }}_\text {iso}\) and \({\varvec{\sigma }}_\text {aniso}\), of which the latter can be subdivided further into active and passive stresses \({\varvec{\sigma }}_\text {active}\) and \({\varvec{\sigma }}_\text {passive}\):

The variables \(\gamma _M, \ \gamma _{ST} \in [0,1]\) are scale factors describing the tissue, with \(\gamma _M,\ \gamma _{ST}>0\) being muscle, \(\gamma _M =0, \ 0<\gamma _{ST}\le 1\) tendon, and \(\gamma _M=\gamma _{ST}=0\) soft tissues, e.g. fat. The active stress is defined as

In this context, \(\sigma _\text {max}\) is the maximum possible active stress for the j-th muscle. This occurs at the optimal fibre length \(\lambda _f^\text {opt}\). More information on this, as well as all other material parameters of the model, can be found in Appendix A in Table 6.

The steepness of the force-length-relation of the muscle fibre is given by \(\Gamma _\text {asc}\) and \(\nu _\text {asc}\) for the ascending arm of the relation, and \(\Gamma _\text {desc}\) and \(\nu _\text {desc}\) for the descending arm. \(\lambda _f\) is the muscle fibre stretch in the current configuration. \(\textbf{M}=\textbf{a}_0\otimes \textbf{a}_0\) is defined as by the fibre orientation in the reference configuration \(\textbf{a}_0\), with \(\otimes \) being the dyadic product.

Furthermore the passive anisotropic stress can be written as

where \(c_3\) and \(c_4\) are material parameters. On top of these anisotropic contributions, the muscles also exhibit some isotropic behavior which is given as

with

Again, \(c_1\) and \(c_2\) are material parameters. The invariants \(I_1, I_2, I_3, I_4\) can be derived from the Cauchy-Green stress tensor \(\textbf{C}\):

For the purposes of the approximation methods applied in this work the model described above serves as high-fidelity model and is mathematically defined as \({\varvec{F}}:\mathcal {M}\rightarrow \mathcal {Z}\subseteq \mathbb {R}^{N}\). It possess unknown since not accessible internal dynamics. The quantities of interest in this work, on the contrary, are accessible as simulation results. They include the motion of the arm in form of its (node-wise) vectorized displacements \({\varvec{q}}\in \mathcal {Q}\subseteq \mathbb {R}^{N_{\text {disp}}}\) with

where \({\varvec{q}}_m=\begin{bmatrix} q_{m,x}&q_{m,y}&q_{m,z} \end{bmatrix}^T\) represents the x,- y-, and z-displacement of the m-th node. The simulation results furthermore contain the (element-wise) stress load of the muscle tissue. For the latter, we consider the equivalent stress values \({\varvec{\sigma }}\in \mathcal {G}\subseteq \mathbb {R}^{N_{\text {stress}}}\) according to von Mises

as it offers advantage to combine all stress components into a single value. The dimension of the displacements \(N_{\text {disp}}=48212\cdot 3=144636\) corresponds to the product of the number of nodes \(n_\text {i}=48212\) and features per node \(n_\text {f}=3\), while the dimension of the von Mises stress simply equals the number of elements \(N_{\text {stress}}=169278\). In the following explanations, we will not distinguish whether the displacements \({\varvec{q}}\) or the stress \({\varvec{\sigma }}\) is the quantity of interest as it makes no difference for the description of the underlying approach. Correspondingly, we introduce the variable \({\varvec{z}}\in \mathcal {Z}\subseteq \mathbb {R}^N\) as general state vector, which can describe an arbitrary physical quantity.

2.2 Problem definition

First, we want to present the problem at hand in general terms, since the framework itself is formulated in a general way. However, the explicit implementation must address the individual challenges problems. Details on this follow in the implementation 3 and discussion Sect. 4.2. Generally speaking, we pursue the goal of creating an efficient but accurate surrogate model \(\tilde{{\varvec{F}}}\) for a computationally expensive high-fidelity model \({\varvec{F}}\). A major problem encountered in surrogate modeling of sophisticated models is their sheer dimensionality. In the case of finite element models, this high dimensionality arises from the underlying modeling method and not from the necessity to describe the system with so many degrees of freedom. Fortunately, this often results in high-dimensional systems that can be expressed with only a few intrinsic coordinates, instead of thousands or millions, by a suitable coordinate transformation.

Consequently, our surrogate modeling approach relies on two fundamental steps. The first step involves the identification of a suitable coordinate transformation \({\varvec{\Psi }}: \mathcal {Z}\rightarrow \mathcal {Z}_{r}\) mapping the system state \({\varvec{z}}\) onto its reduced low-dimensional equivalent \(\bar{{\varvec{z}}}\in \mathcal {Z}_{r}\subseteq \mathbb {R}^{r}\) where \(r \ll N\). A reverse coordinate transformation from reduced to full space \({\varvec{\Psi }}^{\dagger }: \mathcal {Z}_{r}\rightarrow \mathcal {Z}\) ensures physical interpretability of the results.

The second step involves the approximation of the system behavior in reduced space, i.e. of the reduced coordinates \(\bar{{\varvec{z}}}\). Therefore, we seek a function \({\varvec{\Phi }}: \mathcal {M}\rightarrow \mathcal {Z}_{r}\) that approximates the relationship \(\bar{{\varvec{z}}}\approx \tilde{\bar{{\varvec{z}}}}= {\varvec{\Phi }}({\varvec{\mu }})\) between quantities from the parameter space \(\mathcal {M}\) and reduced system behavior. The abstract workflow can be seen in Fig. 2.

Low-rank regression architecture. Offline stage: evaluate FOM \({\varvec{F}}({\varvec{\mu }})\), fit \({\varvec{\Psi }}\), \({\varvec{\Psi }}^{\dagger }\), and \({\varvec{\Phi }}\). Online stage: evaluate surrogate model \({\varvec{\Psi }}^{\dagger }\circ {\varvec{\Phi }}({\varvec{\mu }})\). Validation: compare quantities \({s}_{\text {rec}}\), \(s_{\text {regr}}\), and \(s_{\text {appr}}\)

It is important to keep in mind that besides the approximation itself, the surrogate’s purpose is to be executable under time- and resource constraints. Therefore, the computation time of the surrogate \(\Delta T_{\tilde{{\varvec{F}}}}\), which is used as a general indicator of the computational effort required, must be significantly lower, than that of the original model \(\Delta T_{{\varvec{F}}}\).

Taking all mentioned considerations into account, the overall goal can be formulated in a mathematical sense resulting in an optimization problem

In this context, the function \(\mathcal {L}\) is some measure of approximation quality comparing the results from the surrogate \(\tilde{{\varvec{z}}}\) with the reference results \({\varvec{z}}\). The optimization problem (1) is minimized over \({\varvec{\Psi }}\) and \({\varvec{\Psi }}^{\dagger }\) to find the best low-dimensional representation of the system and over \({\varvec{\Phi }}\) to find the best approximation of the reduced system coordinates. Furthermore, the parameters \({\varvec{\mu }}\in \mathcal {M}\) are taken into account to guarantee a satisfying approximation in the complete considered parameter space \(\mathcal {M}\).

In the following, \({\varvec{\Psi }}\) will be called reduction mapping and \({\varvec{\Psi }}^{\dagger }\) accordingly reconstruction mapping. Furthermore, an approximation of a quantity is always indicated by a tilde as in \(\tilde{{\varvec{z}}}\), whereas a reduced quantity is represented by a bar, e.g., \(\bar{{\varvec{z}}}\), as listed in Table 1, where the most important variables of this work are summarized. Parameter dependencies \({\varvec{z}}({\varvec{\mu }})\) are only mentioned where it helps the comprehensibility and neglected otherwise.

2.3 Dimensionality reduction

For an optimal low-dimensional representation of the system states \(\bar{{\varvec{z}}}={\varvec{\Psi }}({\varvec{z}})\) a coordinate transformation (reduction mapping) \({\varvec{\Psi }}: \mathcal {Z}\rightarrow \mathcal {Z}_{r}\) and its reverse transformation (reconstruction mapping) \({\varvec{\Psi }}^{\dagger }: \mathcal {Z}_{r}\rightarrow \mathcal {Z}\) are sought. Their function composition \(\breve{{\varvec{z}}}={\varvec{\Psi }}^{\dagger }\circ {\varvec{\Psi }}({\varvec{z}})={\varvec{\Psi }}^{\dagger }(\bar{{\varvec{z}}})\) yields the reconstructed state.

There exist numerous possibilities to derive the reduction and reconstruction mapping in the field of model order reduction [20, 8]. In the light of data-based approaches, snapshot-based methods are a popular choice. They assemble \(\kappa \) high-fidelity simulation samples, so-called snapshots, in the snapshot matrix

where \({\varvec{z}}_{{k}}={\varvec{z}}({\varvec{\mu }}_{k})\). The individual snapshots rely on the discrete and finite parameter set \(\mathcal {M}_\kappa = \{{\varvec{\mu }}_1,...,{\varvec{\mu }}_\kappa \} \subseteq \mathcal {M}\). As long as there are enough samples in the resulting subspace \({\mathcal {S}_{\mathcal {M}_\kappa }=\text {span}\{{\varvec{Z}}\}}\), it is supposed that this subspace approximates the discrete solution manifold \(\mathcal {S}_{\mathcal {M}}=\{{\varvec{z}}({\varvec{\mu }}) \ \vert \ {\varvec{\mu }}\in \mathcal {M}\}\). This, in turns, ensures that if \({\varvec{\Psi }}\) and \({\varvec{\Psi }}^{\dagger }\) are found using \(\mathcal {S}_{\mathcal {M}_\kappa }\) they can be applied to \(\mathcal {S}_{\mathcal {M}}\) as well.

A widespread methodology to find the reduced coordinates are linear reduced-basis methods, see [33]. They rely on the mathematical principle of projection and approximate the full state using a linear combination of reduced basis vectors \(\{{\varvec{v}}_1,...,{\varvec{v}}_r\}\subseteq \mathcal {Z}\). In case of a Galerkin projection this yields

with \({\varvec{V}}=\begin{bmatrix} {\varvec{v}}_1&...&{\varvec{v}}_r\end{bmatrix}\). Using such methods can result in a poor reconstruction or a relatively large number of required modes \(r\) due to the restriction to describe the state as a linear combination. Hence, we use besides principal component analysis as a representative of linear projection-based reduction methods, nonlinear alternatives in the form of kernel principal component analysis as well as classical and variational autoencoders.

Principal component analysis

Principal component analysis, which is also known as proper orthogonal decomposition [44], constructs a projection matrix \({\varvec{V}}\) of orthonormal basis vectors, also referred to as principal components for which the maintained variance under projection remains maximal, see [23]. With this method the projection matrix is either found by solving an eigenvalue problem for the covariance matrix of the snapshot matrix \({\varvec{Z}}\) or applying a singular value decomposition directly to it. The resulting eigenvectors or singular vectors are sorted in descending order of their eigen- or singular values and only the \(r\) most dominant ones \(\{{\varvec{v}}_l\}_{l=1}^r\) are kept to form the reduction matrix \({\varvec{V}}_{\text {PCA}} = \begin{bmatrix}{\varvec{v}}_1,&...&{\varvec{v}}_r\end{bmatrix}\).

Kernel principal component analysis

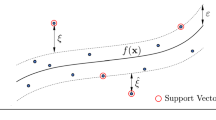

Kernel principal component analysis was introduced in [40] as a nonlinear extension of PCA. A more recent overview can be found in [16]. The underlying idea is to transform the state onto a higher dimensional feature space \(\mathcal {F}\subseteq \mathbb {R}^\eta \) with \(\eta \gg N\) where it is more likely to obtain linear separability and perform the PCA there. Direct computations in this high-dimensional space are avoided by using the so-called kernel trick.

In contrast to PCA, the principal components themselves are not calculated explicitly in KPCA but only the projections of the states onto those components. Consequently, no backprojection matrix is available and the reconstruction mapping \({\varvec{\Psi }}^{\dagger }_{\text {kPCA}}(\bar{{\varvec{z}}})\) is usually obtained via kernel ridge regression [7].

Autoencoder

Autoencoders (AE) are a special type of artificial neural network and have already been introduced as a nonlinear alternative to PCA in the early 90’s [29]. They aim to learn the identity mapping \({\varvec{\Xi }}: \mathcal {Z}\rightarrow \mathcal {Z}\) with \(\tilde{{\varvec{z}}}={\varvec{\Xi }}({\varvec{z}})\) using a bottleneck in their architecture as exemplified in Fig. 3a. This bottleneck ensures that the network learns a reduced representation of the input variables within the network. Thus, AEs can be split at their narrowest point into two functional parts: the encoder \({\varvec{\Psi }}_{\text {ae}}\) and the decoder \({\varvec{\Psi }}^{\dagger }_{\text {ae}}\).

One problem in classical autoencoders is that they lack regularization of the reduced/latent space and continuity. Hence, points that are close to each other in the reduced space can result in very different reconstructions. Moreover, the reduced space is not easy to interpret and cannot be assigned to any concrete effects in the full space. To circumvent these problems variational autoencoders [25, 36] can be used.

Variational autoencoder

In contrast to regular autoencorders, the input of variational autoencoders (VAEs) is not transformed into a single reduced coordinate but into a distribution over the reduced space, see Fig. 3b. Often, the prior probability distribution (prior) over the reduced/latent variables \(p(\bar{{\varvec{z}}})\) is assumed to be Gaussian \(\mathcal {N}({\varvec{0}}, {\varvec{I}})\) with zero mean and unit variance.

To reconstruct the original data, a sample is drawn from the reduced/latent distribution \(\bar{{\varvec{z}}}\sim \mathcal {N}({\varvec{\nu }}, {\varvec{\delta }})\) and then transformed by the decoder network. As a result, the variables in the reduced space have continuity, i.e. close points in the reduced space also give similar reconstructions, and each point within the distribution should give a meaningful reconstruction.

Convolutional neural networks, which are often used to reduce the number of trainable parameters compared to fully-connected networks, generalize well by detecting local patterns, but rely on regular grid-like structured data, which FE data is usually not.

Therefore, in this work, we exclusively use fully-connected autoencoders which are still applicable, given the system size of the human upper-arm model. It should be noted, however, that one possible solution to apply convolutions to FE models may be found in graph convolutions [11].

2.4 Regression

All presented dimensionality reduction techniques, PCA, KPCA, AE, and VAE, offer the possibility to identify suitable transformations to represent the system states \({\varvec{z}}\) in a low-dimensional manifold and thus form the foundation for further procedure. The reduction not only retains just the most important system features but also enables highly efficient training of regression algorithms. Thereby, the search for a function \({\varvec{\Phi }}: \mathcal {M}\rightarrow \mathcal {Z}_{r}\) approximating the relationship from simulation parameters to reduced system behavior \({\varvec{\mu }}\rightarrow \bar{{\varvec{z}}}\) with \(\tilde{\bar{{\varvec{z}}}}={\varvec{\Phi }}({\varvec{\mu }})\) is simplified.

As we only rely on data in this work, many regression algorithms can be used for this task. However, data generation is often an expensive process, especially in the case of complex FE models. Hence, whenever the offline phase is resource-limited, an algorithm that can cope with less data is preferable. Gaussian process regression is well-known to perform well in data-poor regimes and was successfully applied in other publication [26, 24] in the context of LRR. Consequently, they are utilized in this publication as well because the used dataset only contains relatively few samples.

Gaussian processes The basic idea of Gaussian Processes (GPs) is to limit the space of functions fitting the given data by imposing a prior on the functions first and condition them with available observations to receive the posterior probability distribution, see [35]. This approach relies on multivariate Gaussian distributions which are defined by a mean \({\varvec{\nu }}\) and a covariance matrix \({\varvec{\Sigma }}\). According to this, Gaussian Processes are completely defined by a mean function \({\varvec{m}}({\varvec{x}})\) and a covariance or rather kernel function \({\varvec{k}}({\varvec{x}}_i,{\varvec{x}}_j)\) for some inputs \({\varvec{x}}\). Thus, a GP can be defined as

where \({\varvec{K}}\) is the kernel matrix obtained by applying the kernel function element-wise to all inputs. The selected kernel function implies a distribution over functions, giving a higher probability to those satisfying certain properties such as smoothness to a greater extent.

3 Implementation

The presented framework consists of two successive steps. The first one is a coordinate transformation to obtain a low-dimensional representation from high-dimensional data and the second is the approximation of the system behavior in the reduced space. For the corresponding tasks, individual algorithms have been presented. However, the entire framework is structured generically so that a wide variety of further suitable algorithms exists. Therefore, in the following the variables \({\varvec{\Psi }}\) and \({\varvec{\Psi }}^{\dagger }\) are used for reduction and reconstruction in general.

For the implementation of PCA, KPCA, and GP it is relied on the software library scikit-learn [32], whereas the autoencoders are implemented using TensorFlow [1]. The high-fidelity simulations were conducted using the commercial FEM tool LS-DYNA and the resulting data is provided to the interested reader in [28]. This dataset contains the model’s muscle activations, displacements, as well as processed von Mises stress. The toolbox to generate the presented surrogate models, however, is still in progress and will be published at a later date.

3.1 Algorithm

The algorithm can be divided into an offline and an online phase. While data creation and surrogate modeling take place in the former one, the evaluation of the surrogate for new unseen parameters is part of the latter one.

In the offline phase, which is summarized in Algorithm 1, a finite set of simulation parameter \(\mathcal {M}_\kappa =\{{\varvec{\mu }}_1,...,{\varvec{\mu }}_{\kappa }\}\) from the parameter domain \(\mathcal {M}\) is created using some sampling strategy. Based on the selected parameter, the high-fidelity model is evaluated and its results are assembled for further processing. The following steps of the offline phase involve (i) calculation of coordinate transformation for dimensionality reduction and subsequently (ii) fitting of the regression algorithm to approximate the reduced system behavior.

To solve the task of dimensionality reduction, the high-fidelity simulation results are assembled in the dataset

which contains the full states as in- and output and can be rewritten as snapshot matrix (2) Based on this data, the reduction mapping \({\varvec{\Psi }}\) and the reconstruction mapping \({\varvec{\Psi }}^{\dagger }\) are fitted. Once these mappings are found the regression dataset can be composed by reducing the system states \(\bar{{\varvec{z}}}={\varvec{\Psi }}({\varvec{z}})\) resulting in

It is composed of the individual simulation parameters, i.e. the muscle activations as inputs and the corresponding reduced system states obtained by the reduction algorithms as output. Subsequently, the regression algorithm \({\varvec{\Phi }}\) is trained using \({\varvec{D}}_{\text {regr}}\) as data basis. The composition of the fitted reconstruction mapping \({\varvec{\Psi }}^{\dagger }\) along with the regression model \({\varvec{\Phi }}\) yields the complete surrogate model

It is used in the online phase to predict the system behavior, see Algorithm 2. In other words, the regression model is evaluated on new (unseen) simulation parameters to receive an approximation of the respective reduced states followed by a transformation back into the high-dimensional physical space. For an overview of the entire workflow including the offline as well as the online phase of the algorithm please refer once again to Fig. 2.

3.2 Error quantities

It is crucial to not only consult the complete surrogate model but also its components to validate its overall approximation quality and gain insights into the individual sources of error. Hence, besides the overall approximation error, also the error induced by the reduction and the regression algorithm are investigated.

For this purpose, three relative performance scores are introduced which allow a reliable assessment. In addition, absolute error measurements serve as physical interpretable quantities.

Relative performance scores In this paper, different reduction methods and their impact on the approximation are explored. A well-suited quantity to consider their impact isolated from the other sources of error is introduced as reconstruction score following the calculation rule

It compares a state \({\varvec{z}}\) with its reconstruction \(\breve{{\varvec{z}}}={\varvec{\Psi }}^{\dagger }\circ {\varvec{\Psi }}({\varvec{z}})\). This score, as well as the two following ones, reaches its optimum with a value of 1, whereas a value of 0 corresponds to no prediction at all. It is to be noted that \({s}_{\text {rec}}\) can become negative as the reconstruction can be arbitrarily poor.

Similar to the isolated reconstruction score, the regression score

validates the performance only of the regression algorithm by comparing the prediction \(\tilde{\bar{{\varvec{z}}}}={\varvec{\Phi }}({\varvec{\mu }})\) with the reduced reference state \(\bar{{\varvec{z}}}\). This is the only performance measurement taking place in the reduced space while all other measurements are calculated in the full physical space.

The composition of both parts, i.e. the overall approximation quality, is measured by the so-called approximation score

where \(\tilde{{\varvec{z}}}={\varvec{\Psi }}^{\dagger }({\varvec{\Phi }}({\varvec{\mu }}))\) is the overall approximation of the reference \({\varvec{z}}\). To visualize the individual scores, the connection between the compared quantities is shown in Fig. 2.

While the presented scores can all be assessed for a single simulation, their mean value over all simulations

is used to compare several simulations at once.

Absolute error measures In addition, to the relative scores, absolute error measures are consulted for the overall approximation. Therefore, the sample wise 2-norm

of the error between the features of the \(m\)-th node/element \({\varvec{z}}_m\) and their approximation \(\tilde{{\varvec{z}}}_m\) is used. Furthermore, its mean

and maximum value

over all nodes/elements are used for comparisons. Note that in case of displacements, where \({\varvec{z}}_m={\varvec{q}}_m\) corresponds to the coordinates of a node, the 2-norm \(e_{\text {dist}}({\varvec{q}}_m, \tilde{{\varvec{q}}}_m)=e_{\text {2}}({\varvec{q}}_m, \tilde{{\varvec{q}}}_m)\) represents the Euclidean node distance of the \(m\)-th node \({\varvec{q}}_m\) and its approximation \(\tilde{{\varvec{q}}}_m\).

For the element-wise scalar valued stress samples, \(e_{\text {stress}}(\sigma _m, \tilde{\sigma }_m)=e_{\text {2}}(\sigma _m, \tilde{\sigma }_m)\) just matches the absolute difference between the von Mises stress of the \(m\)-th element and its approximation \(\tilde{\sigma }_m\).

As last performance measure, the required computational time for one sample \(\Delta T\) is used to account for the resource savings achieved by the surrogate models.

3.3 Surrogates for displacement and stress

The implementation of a surrogate model that captures the displacements of the model and one that captures the stress is very similar and follows the presented approach in both cases. Nevertheless, both quantities, i.e. \({\varvec{q}}\) and \({\varvec{\sigma }}\) live in such different spaces that each requires its own reduction algorithm. Hence, for each quantity an individual dataset must be assembled following (3). This yields \({\varvec{D}}_{\text {red}}^{\text {disp}}=\{({\varvec{q}}_n, {\varvec{q}}_n) \}_{n=1}^{\kappa }\) for the displacements and \({\varvec{D}}_{\text {red}}^{\text {stress}}=\{({\varvec{\sigma }}_n, {\varvec{\sigma }}_n)\}_{n=1}^{\kappa }\) for the von Mises stress.

For the subsequent regression, a single algorithm could be used to predict values of both quantities. However, we use a single regression algorithm per variable for the sake of clarity. This, in turn, requires individual data sets \({\varvec{D}}_{\text {regr}}^{\text {disp}}=\{ ({\varvec{\mu }}_n, \bar{{\varvec{q}}}_n) \}_{n=1}^{\kappa }\) and \({\varvec{D}}_{\text {regr}}^{\text {stress}}=\{ ({\varvec{\mu }}_n, \bar{{\varvec{\sigma }}}_n) \}_{n=1}^{\kappa }\) for the approximation of the reduced displacements \(\bar{{\varvec{q}}}\) and reduced stress \(\bar{{\varvec{\sigma }}}\).

4 Results

For the creation of the high-fidelity, i.e. finite element simulation results, 1053 muscle activations \({\varvec{\mu }}\in \mathbb {R}^{5}\) are equidistantly sampled on the parameter domain \(\mathcal {M}=[0,1]\times [0,1]\times [0,1]\times [0,1]\times [0,1]\) as simulation parameters. A value of 1 corresponds to full muscle activation, whereas a value of 0 corresponds to no muscle activation. The amount of samples was chosen to be rather small to reduce the overall number of FE model evaluations, since calculating one activation state can take minutes on a high-performance computing system with 64 processors in parallel. Based on these muscle activations, the finite element simulation was evaluated, resulting in 1053 samples for displacement \({\varvec{q}}\) and stress \({\varvec{\sigma }}\), which are used to build the training data sets following (3) to fit the models. Another \(100\) parameters \({\varvec{\mu }}\) are randomly sampled, using a uniform distribution, on the parameter domain. These serve as a test set. Note that using a uniform distribution for the sampling is not necessarily ideal. Some measurements of muscle activation of the biceps during repeated arm flexion and extension at certain frequencies and at arbitrary speeds revealed the muscle activation to be more normally distributed with a mean of 0.5 to 0.6, rather than uniformly. This is to say, the uniform distribution does not affect the measured approximation quality w.r.t the FE model presented here, but considering the actual parameter distribution, or even correlations between some inputs, known as co-activation, could further improve surrogate model quality, without significantly increasing the number of FE evaluations. An adaptive sampling strategy is planned for future iterations of this work, but for the purposes of the proof-of-concept data-set used here, there was no reason to discriminate between different areas of the input parameter space at the time. A particular challenge in surrogate modeling for this simulation lies in the relatively poor data basis, due to its prohibitive computational cost. The following results (with exception of the high-fidelity simulations) were produced on an Apple M1 Max with a 10-Core CPU, 24-Core GPU, and 64 GB of RAM.

In our previous studies [26, 27], linear reduction methods have proven to be suitable for the low-dimensional representation of displacements obtained by elastic multibody and FE models. A similar conclusion can be reached for the arm model by considering the singular values \({\varvec{\varsigma }}\) of the centered snapshot matrix \(\hat{{\varvec{Z}}}\). The contribution of a reduced basis vector of the PCA to the total reconstruction of the original quantity is reflected by the magnitude of the corresponding singular value. That means if only a few singular values contribute significantly, it is possible to represent a quantity with only a few reduced basis vectors without losing much information. If this is in contrast not the case, the reduction either requires numerous reduced basis vectors or introduces a remarkable error. This is closely related to the Kolmogorov n-width ([42, 30])

which quantifies the optimal linear trial subspace by describing the largest distance between any point in the solution manifold \(\mathcal {S}_{\mathcal {M}}\) for all parameters and all n-dimensional subspaces \(\mathcal {Z}_{n}\subseteq \mathcal {Z}\). Supposing that the given system has unique solutions for all parameter samples, the intrinsic dimension of the solution space is at the most equal to the number of parameters, or number of parameters plus one for dynamical systems.

Consequently, if a linear subspace were sufficient to represent a given system, i.e. if the system possessed a fast decaying Kolmogorov n-width, we would expect that a satisfactory low-dimensional representation of the system can be achieved by using as many PCA modes as there are intrinsic dimensions. For the given human arm model which is parameterized by a five-dimensional parameter space \(\mathcal {M}\subseteq \mathbb {R}^{5}\), this means that having the most dominant behavior within the first five modes would justify the use of a linear subspace. The course of the singular values \(\hat{{\varvec{\varsigma }}}\) is considered in Fig. 4 to investigate the suitability of a linear subspace. For a suitable presentation, the singular values are scaled by the sum of singular values, i.e. \(\hat{\varsigma }^{(l)}={\varsigma ^{(l)}} \mathbin {/} {\sum _{i=1}^{\kappa }\varsigma ^{(i)}}\). On the one hand, the first five PCA modes for the displacements, indeed, capture almost 90% of the behavior of all data. On the other hand, considering the results of the stress data it becomes apparent that the singular values do not decrease as fast as it is the case for the displacements and single singular values are not that dominant. Accordingly, the first five only capture about 60%.

In this context, it is to be noted that this does not result from considering the von Mises stress instead of single stress components. The individual components \({\varvec{\sigma }}_{x},\ {\varvec{\sigma }}_{y},\ {\varvec{\sigma }}_{z},\ {\varvec{\sigma }}_{xy},\ {\varvec{\sigma }}_{yz},\ {\varvec{\sigma }}_{zx}\) possess a similar behavior as shown in Fig. 4. Accordingly, it seems to be more difficult to represent the stress data in a low-dimensional linear subspace in general compared to the displacements. As to how the added complexity of the stress data might arise, please refer to the discussion, i.e. Sect. 4.2, for more details. Furthermore, we expect that our results for the von Mises stress are transferable to the individual stress components.

Hyperparameters Another noteworthy aspect in this context is that a reduced coordinate \(\bar{z}^{(l)}\) belonging to a reduced basis vector \({\varvec{v}}_l\) with a relatively small corresponding singular value \(\varsigma ^{(l)}\) tends to possess less assignable characteristics and relations. Thus, they are often more difficult to approximate by regression algorithms. This in turn results in a preference for a few meaningful coordinates over many less meaningful coordinates for the following investigation, not only because of their dimensionality but also because of their predictability. The reduced system size \(r\) of the surrogate models is set to the same value for PCA, KPCA, AE, and VAE to guarantee a fair comparison. In case of the displacement, the reduced system size is chosen so that a reconstruction score above \({s}_{\text {rec}}>0.99\) is achieved using PCA resulting in a dimension of \(r_{\text {disp}}=10\). For stress, on the contrary, it is set to \(r_{\text {stress}}=13\) resulting in a reconstruction score above \({s}_{\text {rec}}>0.95\) using PCA.

Based on this preselected parameter, the individual hyperparameters of the different reduction algorithms are tuned using a grid search on the k-cross-validated training data set (3). As for PCA no further hyperparameter than the reduced system size r exists, only the hyperparameter for KPCA, AE, and VAE are listed in Table 2 and Table 3, respectively. Both autoencoders share the same underlying architecture to ensure a fair comparison.

For KPCA, a polynomial kernel function

is chosen and the scaled exponential linear unit (SELU)

with \(\beta =1.051\) and \(\alpha =1.673\) serves as activation function in the autoencoder.

For each fitted reduction algorithm, the dataset (4) is assembled and the regression algorithm is trained. Usually, the regression task is solved by a subnetwork in the case of autoencoders. However, we want to focus on the comparison of the individual reduction methods in the following investigation and want to examine them as independently as possible from the regression algorithm. Hence, the same regression algorithm is used for PCA, KPCA, AE, and VAE to enable comparability of their results within the context of LRR. In detail, a Gaussian process regression algorithm with consistent hyperparameters for all three reduction algorithms is used, see Table 4.

Sampling the reduced space As first comparison of the reduction algorithms, we present the impact that variations in the reduced space have in the original space. Therefore, the first entry \(\bar{z}^{(1)}\) of the reduced state is varied around its mean value \(\bar{z}^{(1), \text {mean}}=\frac{1}{\kappa }\sum _{j=1}^{\kappa }\bar{z}^{(1)}_{j}\) with \( {\varvec{z}}\in {\varvec{D}}_{\text {red}}\) occurring in the training data set. It is sampled within the range of the standard variance \(\bar{z}^{(1), \text {std}}\). Please note that the mean and standard variance obviously differ for different reduction algorithms. For an isolated view all other entries \(\bar{z}^{(2)},...,\bar{z}^{(r)}\) remain zero. The resulting reduced states are then transformed into a reconstructed arm \(\breve{{\varvec{z}}}^{(l)}={\varvec{\Psi }}^{\dagger }([\bar{z}^{(1)},0,...,0]^T)\). which is shown in Fig. 5 for each reduction algorithm.

In the case of PCA, this representation corresponds to the visualization of the first reduced basis vector. It can be observed that the first reduced basis vector of the PCA is described by a motion during which the arm is raised. A similar arm raise can be observed for the KPCA but simultaneously a non-physical muscle deformation occurs, especially in the brachioradialis. For the autoencoder, non-physical behavior can be observed especially in the lower-arm where it comes to bizarre deformations of the muscle and bone tissue. Comparing this to the results of the VAE, the benefits of regularizing the reduced space become apparent. The reconstructed arm of the VAE exhibits such non-physical behavior to a much lesser extent and is mainly characterized by a slight lowering of the lower-arm. It is not surprising that the arm raising occurs in all reduction algorithms as it is one of the most dominant motions. However, the individual modes from the PCA are sorted by importance and are orthogonal, which leads to the first reduced basis vector being an isolated motion of the most important movement without any other effect occurring. For the AE, the modes are in general neither orthogonal nor sorted by importance and thus different effects can occur simultaneously. Besides this more abstract representation of the impact of reduced coordinates, concrete performance measurements are presented in the following section.

4.1 Performance measurements

The fitted reduction \({\varvec{\Psi }}\) and regression algorithms \({\varvec{\Phi }}\) are used in the online algorithm 2 to approximate the displacement \({\varvec{q}}\) and stress \({\varvec{\sigma }}\) of the arm on the test data set, i.e. on unseen muscle activations \({\varvec{\mu }}\). The results are summarized in Table 5 where \({s}_{\text {rec}}\) is used for assessing of the isolated reduction algorithms, \(s_{\text {regr}}\) for the isolated regression algorithm, and \(s_{\text {appr}}\) for an overall impression. Moreover, the overall simulations averaged mean \(\hat{e}_{2}^{\text {mean}}=\frac{1}{\kappa }\sum _{j=1}^{\kappa }{e}_{2,j}^{\text {mean}}\) and maximum \(\hat{e}_{2}^{\text {max}}=\frac{1}{\kappa }\sum _{j=1}^{\kappa }{e}_{2,j}^{\text {max}}\) errors provide a physical interpretable metric and the computational time \(\Delta T\) enables a comparison of the computation time.

It confirms that the stress data is more difficult to reconstruct, given that \({s}_{\text {rec}}\) is consistently worse for stress than for displacement. In this context, it is noticeable that the KPCA reaches the best reconstruction score \({s}_{\text {rec}}\) and is accordingly most capable of identifying a low-dimensional representation of the data. Regarding the regression score \(s_{\text {regr}}\), the GP that is trained on the data reduced by PCA and KPCA performs very similarly. This suggests that the identified reduced coordinates are similarly learnable. Overall, the combination of an AE and GP yields the best results regarding the approximation score \(s_{\text {appr}}\) as well as most of the physical error metrics. The variational autoencoder achieves worse results for \(s_{\text {regr}}\) and consequently its reduced coordinates seem to be more difficult to approximate. However, the VAE is at the same time more robust against these errors in the reduced space and almost reaches the same quality as the original AE but with a more interpretable and regularized reduced space.

Regarding the computational time, the surrogate models using autoencoders are fastest followed by PCA, whereas KPCA is outperformed by far. The different computational times are only partially meaningful as the reduction algorithms are implemented using different software packages. While scikit-learn [32] is used for PCA and KPCA, TensorFlow [1] is used for the autoencoders. In an even comparison, PCA should be faster than the autoencoders as it contains less parameters. Nevertheless, all surrogate models can predict the arm behavior within milliseconds and thus are suitable for real-time applications. It should be noted, that the computational times presented in this work are the averaged times for the approximation off a single sample. When several samples are calculated at once the required time per sample decreases significantly.

For a more detailed elaboration of the results, the distribution of the performance scores of all test simulations are considered in the following paragraph. On the one hand, this is intended to illustrate the relationships between the reduction, regression, and overall approximation performance and, on the other hand, to depict the differences between the individual reduction methods. For this purpose, the distribution of the relative performance scores of the different reduction algorithms are shown in Fig. 6. There a boxplot is used as representation and a visual explanation is given in Fig. 6a. It consists of a box which is divided into quartiles so that 25% of the values are above the median value (upper quartile) and 25% below (lower quartile). Furthermore, the box is extended by lines, so-called whiskers, which are chosen to have a maximum length of \(1.5*IQR\). In this context, the interquartile range IQR is the distance between the upper and lower quartiles. Outliers not covered inside the range of the whiskers are visualized as individual points.

Considering the achieved results for the node displacements shown in Fig. 6b, the isolated reconstruction score attests the KPCA ’s ability to represent the data in a low-dimensional space without much loss of information. Furthermore, the reduced coordinates resulting from PCA and KPCA seem to be identically challenging to learn as the distribution of the regression score achieved by the GP is almost identical. The overall approximation quality mainly suffers from the error induced by the regression as it deviates largely to the reconstruction score. Interestingly, the overall approximation score using the KPCA is influenced to a larger extent as it is the case for the PCA and accordingly seems to be less robust against disturbances in the reduced space with the chosen hyper parameters. For the autoencoders, a better regression score is achieved than for PCA and KPCA and they perform comparably similar.

Considering the scores achieved for the approximation of the arm’s stress, as done in Fig. 6c, the impression changes. The reduced coordinates obtained via PCA and KPCA seem to be easier to learn than for the autoencoders and thus they result in better regression scores but at the same time they still contribute to the overall approximation. Quite the opposite is shown by the results for the autoencoders, which reveal that the reduced coordinates are difficult to learn but errors in the reduced space propagate to a much lesser extent to the overall approximation. Consequently, the approximation score \(s_{\text {appr}}\) stays close to the reconstruction score \({s}_{\text {rec}}\). This is especially evident for the VAE, for which the worst regression score but the best approximation is achieved. Hence, it seems to be very robust against errors introduced by the regression what may be explained by the fact that it is trained to reconstruct quantities sampled from a latent distribution. Although the AE does in most cases not place first in either of the reconstruction and regression categories, it still achieves the slightly better overall approximation for displacements as well as stress. The reason for this is that the errors of the individual categories do not affect the overall result to such an extent as is the case for KPCA which is sensitive to errors in the regression.

Comparing the displacement and stress data, two things are noteworthy. As expected the stress data poses the greater challenge for the reconstruction. Hence, the overall approximation is mainly limited by the reconstruction whereas the regression is the decisive factor for the displacements. At the same time, however, it must be noted that although worse results are achieved for the stress on average, there a less outliers than it is the case for the displacements. In addition to the distribution of the scores, the interested reader can view the performance scores for all individual test simulation in Appendix B in Fig. 11 for a more detailed view.

Besides the evaluation criteria already presented, physical errors are considered in the following in order to contextualize them in real terms. In Fig. 7a, the mean \(e_{\text {2}}^{\text {mean}}\) defined in Eq. (9) and the maximum reached Euclidean node distance \(e_{\text {2}}^{\text {max}}\) defined in Eq. (10) are shown for the three reduction algorithms.

All of them manage to stay below a mean node distance of 1.75 mm for all 100 test simulations. PCA, AE, and VAE are within a similar range but KPCA performs worst. The same applies for the maximum node distance. The rather large values for the KPCA compared to the moderate mean values suggests significant outliers in the approximation of single nodes or areas.

PCA is not able to provide a low mean error for the stress data as visualized in Fig. 7b. Here, it can be seen that all nonlinear reduction methods have a significant advantage. On the contrary, for the maximum error PCA yield a similar accuracy and all algorithms are within a similar range. This suggests that PCA is good at capturing local areas of high peaks, but does not perform that well for the entire model.

In addition to the numeric criteria presented so far, a visual assessment is given in the following to provide information about the most challenging parts of the arm model. A random set of seven simulations is picked from the test set and shown in Fig. 8. The finite element simulation results, i.e. the supposed ground truth, is shown at the top with the corresponding muscle activation of that simulation visualized as bar plot above. The results achieved by the three surrogate models are placed below with the nodes colored by their individual node distance \(e_{\text {2}}\).

It becomes particularly apparent which arm regions are most difficult to reproduce. This includes the complete forearm including the bones radius and ulna as well as the brachioradialis muscle on the one hand, and the biceps brachii on the other hand. In particular, the forearm is the part with the largest displacement so it is not surprising to find him in the worst approximated parts.

With the individual surrogate models, it is particularly noticeable that the one which relies on PCA and KPCA seem to have larger problems reproducing the triceps brachii. Nevertheless, the error in this region is low for all surrogates. The combination of KPCA and GP, furthermore, leads to large errors in the human arm for some muscle activations. For example, the complete biceps brachii is worse approximated in the last three visualized simulations. In contrast to the motion of the arm, the areas of high loading/large stress occur at different points for the stress.

As expected, the highest stress occurs primarily in the tendons, while only minor stress is found in the muscles as visualized in Fig. 9 where the stress distributions obtained from the FE model are presented at the top. Below of the reference solution, the approximation of the surrogate models colored by the respective errors are plotted. The largest discrepancies therefore unsurprisingly occur in the regions of highest stress. However, it is remarkable that the PCA-based surrogate has larger areas of notable errors than the other surrogate models.

Visualization of the stress of test simulations comparing the reference results (top) with their approximations using PCA, KPCA, AE, and VAE (from top to bottom). The reference is colored with respect to the stress occurring in the individual element, while the approximations are colored with respect to the corresponding error

4.2 Discussion

The presented results demonstrate that the modular LRR algorithm can create versatile surrogate models. They enable evaluation in real-time scenarios as they possess a very low computational time in the range of milliseconds and also allow to use them on resource-constrained hardware due to the savings in computational complexity. This is achieved for a high-dimensional human arm model although the present model possesses unusual challenges. On the one hand, the model is defined by extremely nonlinear material behavior which is accompanied by a highly complex three-dimensional physiological geometry. On the other hand, the surrogate approach is build upon a data-poor regime as the number of simulations is limited by the computational complexity of the FE model. Furthermore, besides the approximation of the muscle’s spatial configuration the surrogate models also need to capture the stress distribution which is more difficult to capture as revealed by the results.

Figure 4 showed the singular values of the displacement to decrease significantly faster than those of the stress components. This is due to the distribution of these quantities across the model. In the FE model, the displacements are solved for first, at the nodal points of the mesh. Based on these displacements the stresses can be calculated according to the material model for the muscle tissue. Part of this order of operations is that the displacements adhere to continuity criteria the stresses are not subject to. Thus, discontinuities or singularities can be encountered at the edges of mesh elements, which require a finer grained mesh in the affected area to avoid them. Even in less severe cases, the stresses can be assumed to be distributed less cleanly than the displacements. Furthermore the muscle material model in use here is highly nonlinear, which also adds to the stresses being more difficult to approximate. Note that this is not supposed to imply these effects to be present to a major extent with the geometry and mesh in use in this particular work. It rather serves to give an intuition for what effects might contribute to a more complex distribution of the stress in FE in general.

In comparing the different methods for the dimensionality reduction of the FE data exciting differences are revealed. As expected PCA performs well for displacements and is the simplest in its application. The results for the KPCA, on the other hand, are contradictory. On the one hand, no other reduction algorithm reconstructed the data that well from the reduced/latent space. On the other hand, it is more sensitive to errors in the reduced/latent space introduced by the regression algorithm so that the overall approximation suffers significantly. There may be other kernel functions and hyperparameters with which those hurdles can be surmounted but none of the kernel function and hyperparameter combinations tested during the grid search yielded better results. The autoencoder delivers an overall satisfactory result except for the more expensive training phase. Although a fully connected AE was used, contrary to expectations, we were able to succeed in a data-poor regime. Variational autoencoder have proven to be almost similar performant as the classical autoencoder but with a more interpretable and robust reduced/latent space.

Even though slightly better results were obtained with the nonlinear reduction methods than with the linear PCA, the differences are marginal for the displacements. For the stress, on the contrary, it could be shown that PCA was outperformed considering the mean error. However, the nonlinear methods did not achieve the significant advantage over the linear methods expected for the stress data. This could be due, among other things, to the fact that the autoencoders were trained with relatively little data.

Overall, it can be said that the LRR algorithm is suitable for approximating high-dimensional quantities that are difficult to represent. Depending on the intended use, the individual components must be carefully selected. The Gaussian process regression was successfully combined with all reduction techniques. As one scope of this work is to compare the reduction methods, the GP is not optimized for the individual combinations and consequently introduced a non neglectable error to the overall approximation. With further hyperparameter-tuning the overall error could be further decreased. Moreover, even though GPs worked well in previous literature, other regression methods such as neural networks have also shown satisfying results. For the dimensionality reduction, on the contrary, the results hardly depend on the underlying problem. While all algorithms worked well for data like displacements they introduced a larger error when it comes to the reconstruction of stress data. The best results for stress are still achieved by the nonlinear methods, i.e. KPCA, AE and VAE. However, since the first method has been found to be prone to outliers, the latter is generally preferable.

Besides the approximation quality measures, soft factors can also play a role in the choice of the reduction algorithm. Differences can be found, especially during the offline phase but also regarding the consumption of resources. While PCA usually works right away, KPCA AE, and VAE require a more extensive hyperparameter tuning including the choice of kernel function for the KPCA and the network architecture for the AE and VAE. The latter also require a computational expensive training phase while the former consumes the most memory for evaluation. Thus, the comparison of the different methods was successful as we were able to highlight some relevant differences between them. The results can therefore serve as a guide for the selection of the dimensionality reduction method and help other researchers to find the most appropriate method for their specific application. Furthermore, we have shown that there are combinations of dimensionality reduction and regression methods capable of approximating the complex musculoskeletal FE model considered in this work.

5 Conclusion

Real-time evaluation of complex continuum mechanical problems has not been feasible for a long time. In particular, musculoskeletal simulation models such as the complex human arm considered in this work impose unique challenges, including highly nonlinear material behavior accompanied by complex three-dimensional physiological geometry. With today’s resources and tools, however, real-time evaluation of such systems is possible. Especially, machine learning methods can serve as extremely fast enabler for surrogate models. With the combination of dimensionality reduction and a Gaussian process regressor we were able to create accurate yet real-time capable surrogate models. In this process, not only often considered physical quantities like displacements but also often neglected stress values have been approximated. The latter turned out to be more challenging to represent in a low dimension. Most likely because the calculation of the stresses, in contrast to the displacements, is not subject to continuity criteria. Accordingly, nonlinear reduction techniques in the form of kernel principal component analysis, autoencoder, and variational autoencoder are implemented besides the linear reduction in the form of the principal component analysis. All methods are able to process the displacements satisfactorily. For the challenging reduction of the stress data the nonlinear reduction methods slightly outperformed the linear one. With a greater data basis this effect may be amplified. Nonetheless, the stress behavior of the complex arm model could be satisfyingly and quickly approximated by the surrogate models. As soon as disturbances in the reduced coordinates occur, differences between them become apparent. This means errors induced by the Gaussian process approximating the reduced states can lead to non neglectable errors in the overall approximation, especially for the KPCA-based surrogates. Overall, the autoencoder-based surrogate yielded the best results while the one using PCA performs well albeit its simple application.

We summarize the effects of the various reduction techniques:

-

(i)

Principal Component Analysis: PCA Captivates with its simplicity and is computationally the most favorable method in the offline and online phase as neither expensive hyperparameter tuning nor an expensive training phase is necessary. However, PCA can reach its limits when linear reduction, i.e. a linear combination of reduced basis vectors, is a too great restriction.

-

(ii)

Kernel Principal Component Analysis: KPCA yields the best reconstruction from coordinates transformed into the reduced space. However, it is more sensitive to errors in the reduced space and thus leads to larger overall errors. No hyperparameters are found which were able to reconstruct well and robust. The offline phase including the hyperparameter tuning is expensive and the memory consumption non neglectable.

-

(iii)

Autoencoder: AEs provided the best overall approximation and still are fast. These advantages are bought by means of an expensive training phase. Furthermore, they require a lot of data which may limits their applicability for certain problems.

-

(iv)

Variational Autoencoder: VAEs regularize the reduced space leading to more interpretable reduced coordinates without losing much reconstruction capability compared to the normal autoencoder. Otherwise they behave similarly.

Data availability

The datasets generated during and/or analyzed during the current study are available in the DaRUS repository, see [28].

References

Abadi, M., Agarwal, A., Barham, P., et al.: TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. https://www.tensorflow.org/, software available from tensorflow.org (2015)

Ackerman, M.J.: The visible human project. Proc. IEEE 86(3), 504–511 (1998). https://doi.org/10.1109/5.662875

An, S.S., Kim, T., James, D.L.: Optimizing cubature for efficient integration of subspace deformations. ACM Trans. Gr. 27(5), 165:1-165:10 (2008). https://doi.org/10.1145/1457515.1409118

Arjomandi Rad, A., Vardanyan, R., Thavarajasingam, S.G., et al.: Extended, virtual and augmented reality in thoracic surgery: a systematic review. Interact. Cardiovasc. Thorac. Surg. 34(2), 201–211 (2022)

Avci, O., Röhrle, O.:Determining a musculoskeletal system’s pre-stretched state using continuum-mechanical forward modelling and joint range optimization. To be submitted shortly to: Biomechanics and Modeling in Mechanobiology (2023)

Bakarji, J., Champion, K., Kutz, J.N., et al.: Discovering governing equations from partial measurements with deep delay autoencoders (2022). https://doi.org/10.48550/ARXIV.2201.05136

Bakır, G.H., Weston, J., Schölkopf, B.: Learning to find pre-images. Adv. Neural. Inf. Process. Syst. 16, 449–456 (2004)

Benner, P., Schilders, W., Grivet-Talocia, S., et al.: Model Order Reduction: Volume 3 Applications. De Gruyter, Berlin (2021). https://doi.org/10.1515/9783110499001

Champion, K., Lusch, B., Kutz, J.N., et al.: Data-driven discovery of coordinates and governing equations. Proc. Natl. Acad. Sci. 116(45), 22445–22451 (2019). https://doi.org/10.1073/pnas.1906995116

Conti, P., Gobat, G., Fresca, S., et al.: Reduced order modeling of parametrized systems through autoencoders and sindy approach: continuation of periodic solutions (2022). arXiv preprint arXiv: 2211.06786 [cs.LG]

Defferrard, M., Bresson, X., Vandergheynst, P.: Convolutional neural networks on graphs with fast localized spectral filtering. Adv. Neural Inf. Process. Syst. 29, 29 (2016). arXiv: 1606.09375 [cs.LG]

Farhat, C., Avery, P., Chapman, T., et al.: Dimensional reduction of nonlinear finite element dynamic models with finite rotations and energy-based mesh sampling and weighting for computational efficiency. Int. J. Numer. Meth. Eng. 98(9), 625–662 (2014). https://doi.org/10.1002/nme.4668

Fleck, P., Calepso, A.S., Hubenschmid, S., et al.: Ragrug: a toolkit for situated analytics. IEEE Trans. Vis. Comput. Gr. (2022)

Fresca, S., Dede’, L., Manzoni, A.: A comprehensive deep learning-based approach to reduced order modeling of nonlinear time-dependent parametrized PDEs. J. Sci. Comput. 87(2), 1. https://doi.org/10.1007/s10915-021-01462-7 (2021)

Fresca, S., Gobat, G., Fedeli, P., et al.: Deep learning-based reduced order models for the real-time simulation of the nonlinear dynamics of microstructures. Int. J. Numer. Meth. Eng. 123(20), 4749–4777 (2022). https://doi.org/10.1002/nme.7054

García-González, A., Huerta, A., Zlotnik, S., et al.: A kernel principal component analysis (kpca) digest with a new backward mapping (pre-image reconstruction) strategy (2000). arXiv: 2001.01958 [math.NA]

Gonzalez, FJ., Balajewicz, M.: Deep convolutional recurrent autoencoders for learning low-dimensional feature dynamics of fluid systems (2018). arXiv: 1808.01346 [math.DS]

Gruber, A., Gunzburger, M., Ju, L., et al.: A comparison of neural network architectures for data-driven reduced-order modeling. Comput. Methods Appl. Mech. Eng. 393(114), 764 (2022). https://doi.org/10.1016/j.cma.2022.114764

Guennec, Y.L., Brunet, J.P., Daim, F.Z., et al.: A parametric and non-intrusive reduced order model of car crash simulation. Comput. Methods Appl. Mech. Eng. 338, 186–207 (2018). https://doi.org/10.1016/j.cma.2018.03.005

Guo, M., Hesthaven, J.S.: Data-driven reduced order modeling for time-dependent problems. Comput. Methods Appl. Mech. Eng. 345, 75–99 (2019). https://doi.org/10.1016/j.cma.2018.10.029

Hesthaven, J.S., Ubbiali, S.: Non-intrusive reduced order modeling of nonlinear problems using neural networks. J. Comput. Phys. 363, 55–78 (2018). https://doi.org/10.1016/j.jcp.2018.02.037

Hill, A.V.: The heat of shortening and the dynamic constants of muscle. Proc. R. Soc. Lond. Ser. B-Biol. Sci. 126(843), 136–195 (1938). https://doi.org/10.1098/rspb.1938.0050

Hotelling, H.: Analysis of a complex of statistical variables into principal components. J. Educ. Psychol. 24(6), 417–441 (1933). https://doi.org/10.1037/h0071325

Kaps, A., Czech, C., Duddeck, F.: A hierarchical kriging approach for multi-fidelity optimization of automotive crashworthiness problems. Struct. Multidiscip. Optim. 65(4), 1 (2022). https://doi.org/10.1007/s00158-022-03211-2

Kingma, D.P., Welling, M.: Auto-encoding variational bayes (2013). arXiv: 1312.6114 [stat.ML]

Kneifl, J., Grunert, D., Fehr, J.: A non-intrusive nonlinear model reduction method for structural dynamical problems based on machine learning. Int. J. Numer. Meth. Eng. (2021). https://doi.org/10.1002/nme.6712

Kneifl, J., Hay, J., Fehr, J.: Real-time human response prediction using a non-intrusive data-driven model reduction scheme (2021b). arXiv: 2110.13583v1 [math.DS]

Kneifl, J., Rosin, D., Okan, A., et al.: Continuum-Mechanical Forward Simulation Results of a Human Upper-limb Model Under Varying Muscle Activations (2022). https://doi.org/10.18419/darus-3302

Kramer, M.A.: Nonlinear principal component analysis using autoassociative neural networks. AIChE J. 37(2), 233–243 (1991). https://doi.org/10.1002/aic.690370209

Lee, K., Carlberg, K.T.: Model reduction of dynamical systems on nonlinear manifolds using deep convolutional autoencoders. J. Comput. Phys. 404(108), 973 (2020). https://doi.org/10.1016/j.jcp.2019.108973

Östh, J., Larsson, E., Jakobsson, L.: Human body model muscle activation influence on crash response. In: Proceedings of the IRCOBI Conference (2022). http://www.ircobi.org/wordpress/downloads/irc22/pdf-files/22101.pdf

Pedregosa, F., Varoquaux, G., Gramfort, A., et al.: Scikit-learn: machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011)

Quarteroni, A., Manzoni, A., Negri, F.: Reduced Basis Methods for Partial Differential Equations: An Introduction. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-15431-2

Ranjan, A., Bolkart, T., Sanyal, S.: et al (2018) Generating 3d faces using convolutional mesh autoencoders. In: Proceedings of the European Conference on Computer Vision (ECCV), pp. 704–720. https://doi.org/10.1007/978-3-030-01219-9_43

Rasmussen, CE.: Gaussian processes in machine learning. In: Summer School on Machine Learning, pp. 63–71 (2003). Springer. https://doi.org/10.1007/978-3-540-28650-9_4

Rezende, D.J., Mohamed, S., Wierstra, D.: Stochastic backpropagation and approximate inference in deep generative models. In: Xing, E.P., Jebara, T. (eds) Proceedings of the 31st International Conference on Machine Learning, Proceedings of Machine Learning Research, Vol. 32, pp 1278–1286. PMLR, Bejing (2014). https://proceedings.mlr.press/v32/rezende14.html

Röhrle, O., Sprenger, M., Schmitt, S.: A two-muscle, continuum-mechanical forward simulation of the upper limb. Biomech. Model. Mechanobiol. 16(3), 743–762 (2017). https://doi.org/10.1007/s10237-016-0850-x