Abstract

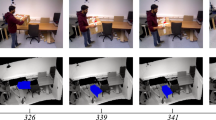

Numerous depth image-based rendering (DIBR) algorithms have been proposed to synthesize virtual views for free viewpoint television (FTV). However, inaccuracies in the depth map cause visual artifacts in the synthesized view. In this paper, we propose a novel virtual view synthesis framework. We incorporate a trilateral depth filter that combines local texture information, spatial proximity, and color similarity to remove ghost contours by rectifying the misalignment between the depth map and its associated color image. To further enhance the quality of the synthesized virtual views, we partition the scene into 3D object segments based on the color image and depth map. Each 3D object segment is warped and blended independently, which avoids mixing pixels that belong to different parts of the scene. The evaluation results indicate that the proposed method significantly improves the quality of the synthesized virtual views compared with other methods, and that the synthesized views are qualitatively very similar to the ground truth. The method also performs well on real-world scenes.
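The abstract does not give the exact form of the trilateral filter, so the following is only a minimal sketch of the idea under stated assumptions. It uses Gaussian kernels for spatial proximity and color similarity. The texture term is hypothetical: it down-weights neighbors lying in strongly textured regions (local intensity standard deviation), on the assumption that depth is least reliable near strong color edges. It uses a weighted median rather than a weighted mean, so no intermediate depth values are created at object boundaries. All names and parameter values (`trilateral_depth_filter`, `local_std`, `sigma_s`, `sigma_c`, `sigma_t`) are illustrative and are not taken from the paper.

```python
import numpy as np


def local_std(gray, radius=1):
    """Per-pixel intensity standard deviation over a (2r+1)x(2r+1) window,
    used here as a simple proxy for local texture (an assumption)."""
    h, w = gray.shape
    k = 2 * radius + 1
    pad = np.pad(gray, radius, mode="edge")
    # Stack every shifted copy of the image: shape (k*k, h, w).
    windows = np.stack([pad[dy:dy + h, dx:dx + w] for dy in range(k) for dx in range(k)])
    return windows.std(axis=0)


def trilateral_depth_filter(depth, color, radius=3, sigma_s=2.0, sigma_c=10.0, sigma_t=10.0):
    """Hypothetical weighted-median depth filter guided by spatial, color and texture weights.

    depth: (H, W) depth map; color: (H, W, 3) color image associated with it.
    """
    depth = np.asarray(depth, dtype=np.float64)
    color = np.asarray(color, dtype=np.float64)
    texture = local_std(color.mean(axis=2))
    h, w = depth.shape
    out = np.empty_like(depth)

    # Spatial-proximity kernel over the full window, indexed by offset + radius.
    oy, ox = np.mgrid[-radius:radius + 1, -radius:radius + 1]
    spatial = np.exp(-(ox ** 2 + oy ** 2) / (2 * sigma_s ** 2))

    for y in range(h):
        for x in range(w):
            # Window clipped to the image borders.
            y0, y1 = max(0, y - radius), min(h, y + radius + 1)
            x0, x1 = max(0, x - radius), min(w, x + radius + 1)
            ws = spatial[y0 - y + radius:y1 - y + radius, x0 - x + radius:x1 - x + radius]
            # Color similarity to the center pixel.
            dc = np.linalg.norm(color[y0:y1, x0:x1] - color[y, x], axis=2)
            wc = np.exp(-dc ** 2 / (2 * sigma_c ** 2))
            # Texture term (hypothetical): trust neighbors in smooth regions more.
            wt = np.exp(-texture[y0:y1, x0:x1] ** 2 / (2 * sigma_t ** 2))

            weights = (ws * wc * wt).ravel()
            values = depth[y0:y1, x0:x1].ravel()
            order = np.argsort(values)
            cum = np.cumsum(weights[order])
            if cum[-1] <= 0:
                out[y, x] = depth[y, x]  # no usable support: keep the original value
                continue
            # Weighted median: first sorted value whose cumulative weight reaches half.
            idx = np.searchsorted(cum, 0.5 * cum[-1])
            out[y, x] = values[order][idx]
    return out
```

On a toy pair where the color edge lies between columns 7 and 8 but the depth edge lies one column to the right (between 8 and 9), this sketch moves the depth discontinuity onto the color edge: column 8 takes the depth of the color-similar, untextured pixels to its right. The double loop is written for clarity, not speed.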



Acknowledgments

This work is supported by the National Natural Science Foundation of China (No. 61300131) and the National Key Technology Research and Development Program of China (No. 2013BAK03B07).


Cite this article

Liu, J., Li, C., Fan, X. et al. View synthesis with 3D object segmentation-based asynchronous blending and boundary misalignment rectification. Vis Comput 32, 989–999 (2016). https://doi.org/10.1007/s00371-016-1228-x
