Abstract

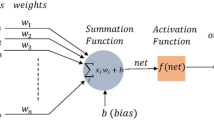

Multi-layer perceptrons (MLPs) remain popular for a wide range of classification tasks, but the gradient-based schemes typically used to train them have drawbacks, including a tendency to become trapped in local optima. Population-based metaheuristic methods have been successfully employed to address this. Among these, the Lévy flight distribution (LFD) algorithm, which explores the search space through random walks based on a Lévy distribution, has shown good potential for solving complex optimisation problems. LFD generates each random walk from two main components: the step length of the walk and the movement direction. In this paper, we propose a novel MLP training algorithm based on LFD for neural network-based pattern classification. We encode the network’s parameters (i.e., its weights and bias terms) into a candidate solution for LFD and employ the classification error as the fitness function. LFD then optimises the network parameters to yield an MLP that performs well on the classification task at hand. In an extensive set of experiments on a number of benchmark classification problems, we compare our proposed algorithm with a number of other approaches, including both classical training algorithms and other metaheuristics. The obtained results clearly demonstrate the superiority of our LFD training algorithm.
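The encoding and fitness function described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation and does not reproduce the full LFD update rules. It assumes a single hidden layer with tanh activation, draws heavy-tailed steps with Mantegna's algorithm (a common way to approximate Lévy-distributed steps, chosen here as an assumption), and uses a simple greedy acceptance rule in place of LFD's own position updates. All function names and parameters (`n_params`, `predict`, `fitness`, `levy_step`, `levy_random_search`, `beta`, `scale`) are hypothetical.

```python
import math
import random


def n_params(n_in, n_hidden, n_out):
    """Length of the flat vector holding all weights and bias terms."""
    return n_hidden * (n_in + 1) + n_out * (n_hidden + 1)


def predict(vector, x, n_in, n_hidden, n_out):
    """Decode a flat candidate solution into a one-hidden-layer MLP and classify x."""
    pos = 0
    hidden = []
    for _ in range(n_hidden):
        w, b = vector[pos:pos + n_in], vector[pos + n_in]
        pos += n_in + 1
        hidden.append(math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b))
    scores = []
    for _ in range(n_out):
        w, b = vector[pos:pos + n_hidden], vector[pos + n_hidden]
        pos += n_hidden + 1
        scores.append(sum(wi * hi for wi, hi in zip(w, hidden)) + b)
    # Predicted class is the index of the largest output score.
    return max(range(n_out), key=scores.__getitem__)


def fitness(vector, samples, labels, shape):
    """Classification error in [0, 1]; lower is better."""
    wrong = sum(predict(vector, x, *shape) != y for x, y in zip(samples, labels))
    return wrong / len(samples)


def levy_step(rng, beta=1.5):
    """One signed heavy-tailed step via Mantegna's algorithm (assumed choice)."""
    sigma_u = (
        math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
        / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))
    ) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # avoid a division by zero in the (very unlikely) exact-zero case
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_random_search(samples, labels, shape, pop_size=10, iters=200,
                       scale=0.1, seed=0):
    """Simplified population search with Lévy-like steps and greedy acceptance.

    This stands in for LFD only for illustration; it is not the LFD algorithm.
    """
    rng = random.Random(seed)
    dim = n_params(*shape)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    fit = [fitness(p, samples, labels, shape) for p in pop]
    for _ in range(iters):
        for i in range(pop_size):
            # The magnitude of each draw is the step length; its sign is the direction.
            cand = [w + scale * levy_step(rng) for w in pop[i]]
            f = fitness(cand, samples, labels, shape)
            if f <= fit[i]:
                pop[i], fit[i] = cand, f
    best = min(range(pop_size), key=fit.__getitem__)
    return pop[best], fit[best]


if __name__ == "__main__":
    # Toy XOR-style data, used only to exercise the code.
    X = [[0, 0], [0, 1], [1, 0], [1, 1]]
    y = [0, 1, 1, 0]
    best, err = levy_random_search(X, y, shape=(2, 3, 2))
    print("training error of best candidate:", err)
```

Because the fitness function only needs forward passes, no gradients are computed, which is what lets a population-based search sidestep the local-optima issue of gradient-based training mentioned in the abstract. The error here is measured on the training samples; evaluating generalisation would require a held-out set.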


Data Availability

The datasets used during the current study are available in the UCI Machine Learning repository: https://archive.ics.uci.edu/ml/index.php.



About this article

Cite this article

Bojnordi, E., Mousavirad, S.J., Pedram, M. et al. Improving the Generalisation Ability of Neural Networks Using a Lévy Flight Distribution Algorithm for Classification Problems. New Gener. Comput. 41, 225–242 (2023). https://doi.org/10.1007/s00354-023-00214-5


DOI: https://doi.org/10.1007/s00354-023-00214-5