Abstract

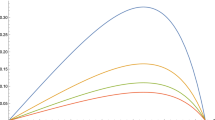

We consider the harvesting of a population in a stochastic environment whose dynamics in the absence of harvesting is described by a one-dimensional diffusion. Using ergodic optimal control, we find the optimal harvesting strategy that maximizes the asymptotic yield of harvested individuals. To our knowledge, ergodic optimal control has not been used before to study harvesting strategies. However, it is a natural framework because the optimal harvesting strategy will never be such that the population is harvested to extinction; instead, the harvested population converges to a unique invariant probability measure. When the yield function is the identity, we show that the optimal strategy has a bang–bang property: there exists a threshold \(x^*>0\) such that whenever the population is under the threshold the harvesting rate must be zero, whereas when the population is above the threshold the harvesting rate must be at the upper limit. We provide upper and lower bounds on the maximal asymptotic yield, and explore via numerical simulations how the harvesting threshold and the maximal asymptotic yield change with the growth rate, maximal harvesting rate, or the competition rate. We also show that, if the yield function is \(C^2\) and strictly concave, then the optimal harvesting strategy is continuous, whereas when the yield function is convex the optimal strategy is of bang–bang type. This shows that one cannot always expect bang–bang type optimal controls.

Notes

We thank the anonymous referee who has brought the paper to our attention.

The assumption may not apply in models with large institutional investors.

An ecological model extension that would link this literature to our model would consider optimal extraction policy to maximise a time discounted concave total-yield function when there are at least two populations, situated in different environments with no growth limitation.

\(|\mu (x) - \mu (0)| < M|x|\) for some real \(M>0\) and all sufficiently small \(x\ne 0\), as \(\mu \) is locally Lipschitz by assumption. Therefore \(|g(x) - g(0) + \mu (0)x| = |x|\,|\mu (x)-\mu (0)| < Mx^2\), so g is differentiable at 0. Moreover, \(g'(0) = - \mu (0)\), and \(\mu (0)>0\) by assumption, so \(x_\iota \ne 0\).

References

Abakuks A (1979) An optimal hunting policy for a stochastic logistic model. J Appl Probab 16(2):319–331

Arapostathis A, Borkar VS, Ghosh MK (2012) Ergodic control of diffusion processes. Cambridge University Press, Cambridge

Alvarez LHR (2000) Singular stochastic control in the presence of a state-dependent yield structure. Stoch Process Appl 86(2):323–343

Abakuks A, Prajneshu (1981) An optimal harvesting policy for a logistic model in a randomly varying environment. Math Biosci 55(3–4):169–177

Alvarez LHR, Shepp LA (1998) Optimal harvesting of stochastically fluctuating populations. J Math Biol 37(2):155–177

Beddington JR, May RM (1977) Harvesting natural populations in a randomly fluctuating environment. Science 197(4302):463–465

Berg C, Pedersen HL (2006) The Chen–Rubin conjecture in a continuous setting. Methods Appl Anal 13(1):63–88

Berg C, Pedersen HL (2008) Convexity of the median in the gamma distribution. Ark Mat 46(1):1–6

Braumann CA (2002) Variable effort harvesting models in random environments: generalization to density-dependent noise intensities. Math Biosci 177–178:229–245 (Deterministic and stochastic modeling of biointeraction (West Lafayette, IN, 2000))

Benaïm M, Schreiber SJ (2009) Persistence of structured populations in random environments. Theor Popul Biol 76(1):19–34

Borodin AN, Salminen P (2012) Handbook of Brownian motion-facts and formulae. Birkhäuser, Basel

Dennis B, Patil GP (1984) The gamma distribution and weighted multimodal gamma distributions as models of population abundance. Math Biosci 68(2):187–212

Drèze J, Stern N (1987) The theory of cost-benefit analysis. Handb Pub Econ 2:909–989

Evans SN, Hening A, Schreiber SJ (2015) Protected polymorphisms and evolutionary stability of patch-selection strategies in stochastic environments. J Math Biol 71(2):325–359

Ethier SN, Kurtz TG (2009) Markov processes: characterization and convergence. Wiley, New York

Evans SN, Ralph PL, Schreiber SJ, Sen A (2013) Stochastic population growth in spatially heterogeneous environments. J Math Biol 66(3):423–476

Gard TC (1984) Persistence in stochastic food web models. Bull Math Biol 46(3):357–370

Gard TC (1988) Introduction to stochastic differential equations. M. Dekker, New York

Gard TC, Hallam TG (1979) Persistence in food webs. I. Lotka-Volterra food chains. Bull Math Biol 41(6):877–891

Gulland JA (1971) The effect of exploitation on the numbers of marine animals. In: Proceedings of the advanced study institute on dynamics of numbers in populations, pp 450–468

Hening A, Kolb M (2018) Quasistationary distributions for one-dimensional diffusions with singular boundary points (submitted). arXiv:1409.2387

Hening A, Nguyen D (2018) Coexistence and extinction for stochastic Kolmogorov systems. Ann Appl Probab. arXiv:1704.06984

Hening A, Nguyen D (2018) Persistence in stochastic Lotka-Volterra food chains with intraspecific competition (preprint). arXiv:1704.07501

Hening A, Nguyen D (2018) Stochastic Lotka-Volterra food chains. J Math Biol. arXiv:1703.04809

Hening A, Nguyen D, Yin G (2018) Stochastic population growth in spatially heterogeneous environments: the density-dependent case. J Math Biol 76(3):697–754

Hutchings JA, Reynolds JD (2004) Marine fish population collapses: consequences for recovery and extinction risk. AIBS Bull 54(4):297–309

Khasminskii R (2012) Stochastic stability of differential equations. In: Rozovskiĭ B, Glynn PW (eds) Stochastic modelling and applied probability, vol 66, 2nd edn. Springer, Heidelberg (With contributions by G. N. Milstein and M. B. Nevelson)

Kokko H (2001) Optimal and suboptimal use of compensatory responses to harvesting: timing of hunting as an example. Wildl Biol 7(3):141–150

Leigh EG (1981) The average lifetime of a population in a varying environment. J Theor Biol 90(2):213–239

Lande R, Engen S, Saether B-E (1995) Optimal harvesting of fluctuating populations with a risk of extinction. Am Nat 145(5):728–745

Ludwig D, Hilborn R, Walters C (1993) Uncertainty, resource exploitation, and conservation: lessons from history. Ecol Appl 3:548–549

Lungu EM, Øksendal B (1997) Optimal harvesting from a population in a stochastic crowded environment. Math Biosci 145(1):47–75

May RM, Beddington JR, Horwood JW, Shepherd JG (1978) Exploiting natural populations in an uncertain world. Math Biosci 42(3–4):219–252

Mas-Colell A, Whinston MD, Green JR (1995) Microeconomic theory, vol 1. Oxford University Press, New York

Merton RC (1969) Lifetime portfolio selection under uncertainty: the continuous-time case. Rev Econ Stat 51:247–257

Merton RC (1971) Optimum consumption and portfolio rules in a continuous-time model. J Econ Theory 3(4):373–413

Primack RB (2006) Essentials of conservation biology. Sinauer Associates, Sunderland

Reiter J, Panken KJ, Le Boeuf BJ (1981) Female competition and reproductive success in northern elephant seals. Anim Behav 29(3):670–687

Roth G, Schreiber SJ (2014) Persistence in fluctuating environments for interacting structured populations. J Math Biol 69(5):1267–1317

Schreiber SJ, Benaïm M, Atchadé KAS (2011) Persistence in fluctuating environments. J Math Biol 62(5):655–683

Shaffer ML (1981) Minimum population sizes for species conservation. Bioscience 31(2):131–134

Smith JB (1978) An analysis of optimal replenishable resource management under uncertainty. Digitized Theses, Paper 1074

Schreiber SJ, Ryan ME (2011) Invasion speeds for structured populations in fluctuating environments. Theor Ecol 4(4):423–434

Traill LW, Bradshaw CJA, Brook BW (2007) Minimum viable population size: a meta-analysis of 30 years of published estimates. Biol Conserv 139(1):159–166

Tyson R, Lutscher F (2016) Seasonally varying predation behavior and climate shifts are predicted to affect predator-prey cycles. Am Nat 188(5):539–553

Turelli M (1977) Random environments and stochastic calculus. Theor Popul Biol 12(2):140–178

Acknowledgements

We thank two anonymous referees for very insightful comments and suggestions that led to major improvements.

Additional information

Dang H. Nguyen was in part supported by the National Science Foundation under Grant DMS-1207667 and is also supported by an AMS Simons travel grant. Tak Kwong Wong was in part supported by the HKU Seed Fund for Basic Research under the Project Code 201702159009, and the Start-up Allowance for Croucher Award Recipients.

Appendices

Appendix A: Proofs

In this appendix we present the framework of ergodic optimal control and prove the main results of our paper.

For any \(v\in \mathfrak U_{sm}\), denote the unique invariant probability measure of X(t) on \(\mathbb {R}_{++}\) by \(\pi _v\) if it exists. Define

Let \(p>0\). Since \(\lim _{x\rightarrow \infty }\mu (x)=-\infty \), there exist constants \( k_{1p}, k_{2p}>0\) such that

By Dynkin’s formula

Thus,

As a result, the family of occupation measures

is tight. If X(t) has an invariant probability measure on \(\mathbb {R}_{++}\), then \(\left( \Pi _{x,t}^v\right) _{t\ge 0}\) converges weakly to \(\pi _v\) because the diffusion is nondegenerate. This convergence and the uniform integrability (A.2) imply that

If X(t) has no invariant probability measures on \(\mathbb {R}_{++}\), then the Dirac measure with mass at 0 is the only invariant probability measure of X(t) on \(\mathbb {R}_+\). Moreover, any weak-limit of \(\left( \Pi _{x,t}^v\right) _{t\ge 0}\) as \(t\rightarrow \infty \) is an invariant probability measure of X(t) (Ethier and Kurtz 2009, Theorem 9.9 or Evans et al. 2015, Proposition 8.4). Thus, \(\left( \Pi _{x,t}^v\right) _{t\ge 0}\) converges weakly to the Dirac measure \(\delta _0\) as \(t\rightarrow \infty \). Because of (A.2) and \(\Phi (0)=0\), we have

Thus, we always have

Define

It will be shown later that \(\rho ^*>0\) whenever the population without harvesting persists, i.e. when \(\mu (0)-\sigma ^2/2>0\).

Theorem A.1

Suppose \(\mu (0)-\sigma ^2/2>0\), \(\mu (\cdot )\) satisfies Assumption 2.1 and \(\Phi (\cdot )\) satisfies Assumption 2.2. There exists a stationary Markov strategy \(v^*\in \mathfrak U_{sm}\) such that \(\pi _{v^*}\) exists and \(\rho _{v^*}=\rho ^*\). Moreover, for any admissible control h(t), we have

Proof

By (A.2) and since \(\Phi \) has a subpolynomial growth rate we can conclude that

Moreover, since \(\mu (0)-\sigma ^2/2>0\), the population does not go extinct in the absence of harvesting, and therefore \(\rho ^*>0\). On the other hand, since \(\Phi \) is continuous and \(\Phi (0)=0\), we get that \(\Phi (x)<\rho ^*\) for x sufficiently small. This fact combined with (A.5) implies the existence of an optimal Markov strategy \(v^*\) according to Arapostathis et al. (2012, Theorem 3.4.5, Theorem 3.4.7). \(\square \)

Theorem A.2

Suppose \(\mu (0)-\sigma ^2/2>0\), \(\mu (\cdot )\) satisfies Assumption 2.1 and \(\Phi (\cdot )\) satisfies Assumption 2.2. The HJB equation

admits a classical solution \(V^*\in C^2(\mathbb {R}_+)\) satisfying \(V^*(1)=0\) and \(\rho =\rho ^*> 0\). The solution \(V^*\) of (A.6) has the following properties:

a) For any \(p\in (0,1)\),

$$\begin{aligned} \lim _{x\rightarrow \infty }\dfrac{V^*(x)}{x^{p}}=0. \end{aligned}$$ (A.7)

b) The function \(V^*\) is increasing, that is

$$\begin{aligned} V^*_x\ge 0,\, x\in \mathbb {R}_{++}. \end{aligned}$$ (A.8)

A Markov control v is optimal if and only if it satisfies

almost everywhere in \(\mathbb {R}_+\).

Proof

Consider the optimal control problem with the yield function

for some fixed \(x\in \mathbb {R}_{++}\) and \(h\in \mathfrak {U}\). Note that this is the \(\alpha \)-discounted optimal control problem. Pick any \(0<x_1<x_2<\infty \) and let \(X^{x_1}\), \(X^{x_2}\) be the solutions to the controlled diffusion

with initial values \(x_1\), \(x_2\) respectively. Note that we are using a fixed admissible control h(t) which is the same for any initial value. In particular, the control h(t) here is not a Markov control, since a Markov control in general depends on the initial value. Since \(\mu (\cdot )\) is continuous and decreasing, for \(y_1, y_2>0\), there exists \(\xi (y_1, y_2)>0\) depending continuously on \(y_1, y_2\) such that \(\mu (y_1)-\mu (y_2)=-\xi (y_1, y_2)(\ln y_1-\ln y_2)\). Using Itô’s Lemma we have

which in turn yields

Therefore, if \(x_2>x_1\), we get that

This implies that \(J_h(\cdot )\) is an increasing function. Therefore, the optimal yield

is also increasing. By Arapostathis et al. (2012, Lemma 3.7.8), there is a function \(V^*\in C^2(\mathbb {R}_{++})\) satisfying (A.6) for a number \(\rho \) such that

Moreover,

for some sequence \((\alpha _n)_{n\in \mathbb {N}}\) that satisfies \(\alpha _n\rightarrow 0\) as \(n\rightarrow \infty \). This implies that \(V^*\) is an increasing function, i.e.

For any continuous function \(\psi :\mathbb {R}_{++}\mapsto \mathbb {R}\) satisfying

we have from (A.1) and Arapostathis et al. (2012, Lemma 3.7.2) that \({\mathbb {E}}^v_x |\psi (X(t))|\) exists and satisfies

and

where \(\xi _R=\inf \{t\ge 0: X(t)> R \text { or } X(t)<R^{-1}\}.\) Moreover, by using Arapostathis et al. (2012, Lemma 3.7.2) again we get that

where

and \(\tau _R:=\inf \{t\ge 0: X(t)\le R\}.\)

By Arapostathis et al. (2012, Formula 3.7.48), we have the estimate

which implies

Now, pick any \(\varepsilon >0\) and divide (A.16) on both sides by \(x^{p+\varepsilon }\). We get

and by letting \(x\rightarrow \infty \)

This implies, since p and \(\varepsilon >0\) are arbitrary, Eq. (A.7). Let \(\chi :\mathbb {R}_{++}\mapsto [0,1]\) be a continuous function satisfying \(\chi (x)=0\) if \(x<\frac{1}{2}\) and \(\chi (x)=1\) if \(x\ge 1\). Then \(\psi (x):=V^*(x)\chi (x)\) satisfies (A.12) because of (A.16). On the other hand, since \(V^*(x)\) is increasing and \(V^*(1)=0\), then \(V^*(x)\le 0\) when \(x\le 1\). Thus, we have

Let \(v^*\) be the measurable function satisfying (A.9).

By Dynkin’s formula

Letting \(R\rightarrow \infty \), we obtain from the monotone convergence theorem and (A.14) that

Letting \(t\rightarrow \infty \) and using (A.13) and (A.3), we have

This and (A.17) imply that \(\rho =\rho ^*=\rho _{v^*}\).

By the arguments from Arapostathis et al. (2012, Theorem 3.7.12), we can show that v is an optimal control if and only if (A.9) is satisfied. \(\square \)
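The monotonicity step in the proof above rests on a comparison: two solutions started from \(x_1<x_2\) and driven by the same admissible control h(t) and the same Brownian path remain ordered. The following Python sketch illustrates this numerically. It is only an illustration under stated assumptions, none of which are taken from the paper: the controlled diffusion is assumed to have the form \(dX = X(\mu (X)-h(t))\,dt + \sigma X\,dB\), the drift is taken to be logistic, \(\mu (x)=\mu _0-\kappa x\), and the parameter values, the open-loop control `h_of_t`, and the Euler scheme on \(\ln X\) are hypothetical choices.

```python
import numpy as np


def h_of_t(t):
    """Hypothetical open-loop control, bounded between 0 and 0.6."""
    return 0.3 * (1.0 + np.sin(t))


def coupled_paths(x_lo, x_hi, mu0=1.0, kappa=1.0, sigma=0.5,
                  T=10.0, dt=1e-3, seed=0):
    """Simulate two paths of dX = X(mu(X) - h(t)) dt + sigma X dB
    that share the same noise and the same control, with mu(x) = mu0 - kappa*x."""
    rng = np.random.default_rng(seed)
    n = int(round(T / dt))
    dB = rng.normal(0.0, np.sqrt(dt), size=n)  # shared Brownian increments
    lo = np.empty(n + 1)
    hi = np.empty(n + 1)
    lo[0], hi[0] = x_lo, x_hi
    for k in range(n):
        h = h_of_t(k * dt)  # same control value for both paths
        for path in (lo, hi):
            x = path[k]
            # Euler step on ln X, which keeps the path strictly positive
            path[k + 1] = x * np.exp(
                (mu0 - kappa * x - h - 0.5 * sigma**2) * dt + sigma * dB[k]
            )
    return lo, hi


lo, hi = coupled_paths(0.5, 2.0)
print("ordering preserved:", bool(np.all(hi >= lo)))
```

Because the control does not depend on the state, the difference \(\ln X^{x_2}-\ln X^{x_1}\) evolves without a noise term, which is what makes the ordering visible path by path in the simulation.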

When \(\Phi \) is the identity mapping, Eq. (A.9) becomes

which implies

Our main result is the following theorem.

Theorem 2.1

Assume that \(\Phi (x)=x, x\in (0,\infty )\) and that the population survives in the absence of harvesting, that is \(\mu (0)-\frac{\sigma ^2}{2}>0\). Furthermore assume that the drift function \(\mu (\cdot )\) satisfies Assumption 2.1. The optimal control (the optimal harvesting strategy) v has the bang–bang form

for a unique \(x^*\in (0,\infty )\). Furthermore, we have the following upper bound for the optimal asymptotic yield

Remark A.1

If \(V_x^*(x)=1\) then we note that (A.18) does not provide any information about v(x). However, in this case we can set the harvesting rate equal to anything since the yield function will not change. This is because our diffusion is non-degenerate and changing the values of the drift on a set of zero Lebesgue measure does not change the distribution of X.
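To make the threshold strategy of Theorem 2.1 concrete, the following Python sketch estimates the long-run yield of a bang–bang strategy by simulation. It is a minimal illustration under assumptions that are not taken from the paper: the harvested diffusion is assumed to be \(dX = X(\mu (X)-v(X))\,dt+\sigma X\,dB\) with the logistic drift \(\mu (x)=\mu _0-\kappa x\), the parameter values are arbitrary, the threshold passed to the function is a free input rather than the optimal \(x^*\), and the time average over a finite horizon only approximates the asymptotic yield.

```python
import numpy as np


def bang_bang_rate(x, threshold, M):
    """Threshold strategy: rate 0 at or below the threshold, rate M above it."""
    return M if x > threshold else 0.0


def asymptotic_yield(threshold, M, mu0=1.0, kappa=1.0, sigma=0.5,
                     x0=1.0, T=200.0, dt=1e-2, seed=1):
    """Time-averaged harvest (1/T) * integral of v(X) X dt for the identity yield,
    computed along one simulated path (Euler scheme on ln X)."""
    rng = np.random.default_rng(seed)
    n = int(round(T / dt))
    dB = rng.normal(0.0, np.sqrt(dt), size=n)
    x, harvested = x0, 0.0
    for k in range(n):
        h = bang_bang_rate(x, threshold, M)
        harvested += h * x * dt  # yield collected during this step
        x *= np.exp((mu0 - kappa * x - h - 0.5 * sigma**2) * dt + sigma * dB[k])
    return harvested / T


# Compare a few hypothetical thresholds for a fixed maximal rate M
for thr in (0.2, 0.5, 0.8):
    print(thr, asymptotic_yield(thr, M=2.0))
```

The value assigned exactly at the threshold is immaterial, in line with Remark A.1; the sketch sets it to 0.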

We split up the proof of Theorem 2.1 into a few propositions. It is immediate to see that the HJB equation (A.6) becomes

Sketch of proof of Theorem 2.1

Since the optimal control is given by (A.18) we need to analyze the properties of the function \(V_x^*\) which by (A.19) satisfies a first order ODE. The analysis of this is split up into several propositions. Note that the ODE governing \(V_x^*\) is different, depending on whether \(V_x^*>1\) or \(V_x^*\le 1\).

In Proposition A.1 we analyze the ODE for when \(V_x^*\le 1\) and find its asymptotic behavior close to 0. Using this we can show in Proposition A.2 that one cannot have an \(\eta >0\) such that \(V_x^*(x)\le 1\) for all \(x\in (0,\eta ]\).

Similarly, in Proposition A.3 we show that there can exist no \(\zeta >0\) such that \(V_x^*(x)\ge 1\) for all \(x\ge \zeta \).

In Proposition A.4 we explore the possible ways \(V_x^*\) can cross the line \(y=1\) and find using soft arguments that there can be at most 3 crossings. Finally, we show that actually there must be exactly one crossing of \(y=1\) by \(V_x^*\) and that this crossing has to be from above. This combined with (A.18) completes the proof. \(\square \)
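For orientation, the two regimes mentioned in the sketch can be written out explicitly. The display below is a reconstruction, not a quotation: it assumes that the HJB equation for the identity yield has the form \(\rho = \frac{\sigma ^2x^2}{2}V_{xx}+x\mu (x)V_x+\max _{u\in [0,M]}xu(1-V_x)\), which is consistent with the coefficients \(\beta \) and \(\gamma \) stated in the proofs of Propositions A.1 and A.3. Writing \(\varphi :=V_x^*\), one then gets

$$\begin{aligned} \varphi '(x)+\frac{2(\mu (x)-M)}{\sigma ^2x}\,\varphi (x)&=\frac{2(\rho -Mx)}{\sigma ^2x^2}\quad \text {where } \varphi (x)\le 1 \text { (harvesting at rate } M),\\ \varphi '(x)+\frac{2\mu (x)}{\sigma ^2x}\,\varphi (x)&=\frac{2\rho }{\sigma ^2x^2}\quad \text {where } \varphi (x)>1 \text { (no harvesting)}. \end{aligned}$$

Both equations give the same value of \(\varphi '\) at a point where \(\varphi =1\), so under this assumption \(\varphi \) is continuously differentiable across the switch.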

Proposition A.1

Assume \(\mu (\cdot )\) is locally Lipschitz on \([0,\infty )\). Then any solution \(\varphi _2\) of the ODE

satisfies

Proof

It follows from the method of integrating factors that the solution to the ODE (A.20) is

where the non-homogeneous term is \(\beta (y):=\frac{2(\rho -My)}{\sigma ^2 y^2}\), and the integrating factor is

for \(\gamma (y):=\frac{2(\mu (y) -M)}{\sigma ^2 y}\), and arbitrary \(x_0, x_1\in (0,\infty )\). Since \(\mu \) is locally Lipschitz at \(x=0\), there are constants \(L, K>0\) such that for any \(x\in [0,L]\), \(|\mu (x)-\mu _0|\le K x\), where \(\mu _0:=\mu (0)\). From now on, we choose \(x_1:=L\) (or any number between 0 and L). We have, for any \(x\in [0,x_1]\),

This implies that as \(x\rightarrow 0^+\),

On the other hand, if we choose \(x_0>0\) sufficiently close to 0 such that \(\rho -Mx>0\) and (A.23) holds for all \(x\in (0,x_0)\), then we have, for any \(0<x<x_0\),

where the constants \(C_i\) are given by

Now, using the asymptotic properties (A.23) and (A.24), we can analyze the limit of \(\varphi _2\) as follows.

Case 1: \(\mu _0 < M\).

In this case, we get from (A.23) and (A.24) that

Thus, we can apply l’Hôpital’s rule and obtain

since \(\rho >0\). This shows the limit (A.21).

Case 2: \(M\le \mu _0 \le M + \frac{\sigma ^2}{2}\).

For this range of \(\mu _0\), it follows from (A.23) and (A.24) again that

but \(\lim _{x\rightarrow 0^+} \zeta (x)\) exists and is finite. Hence, we can obtain the limit (A.21) by passing to the limit \(x\rightarrow 0^+\) in the solution formula (A.22).

Case 3: \(\mu _0 > M + \frac{\sigma ^2}{2}\).

In this final case, it follows from (A.23) and (A.24) that \(\lim _{x\rightarrow 0^+} \zeta (x) = 0\) and

exists and is finite. If \(J\ne 0\), then passing to the limit \(x\rightarrow 0^+\) in the solution formula (A.22) will imply the limit (A.21). Otherwise, we can apply l’Hôpital’s rule and do the same computations we did in (A.25). This proves the limit (A.21).

Putting together Cases 1, 2 and 3 completes the proof. \(\square \)
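A heuristic way to see where the three cases come from is the following leading-order computation. It assumes that the solution formula has the standard integrating-factor form and uses only the definitions of \(\beta \) and \(\gamma \) and the bound \(|\mu (x)-\mu _0|\le Kx\) from the proof; it is a sketch, not a replacement for the estimates (A.23) and (A.24). Here \(\asymp \) means bounded above and below by positive constant multiples as \(x\rightarrow 0^+\).

$$\begin{aligned} \varphi _2(x)&=\frac{1}{\zeta (x)}\left( \zeta (x_0)\varphi _2(x_0)+\int _{x_0}^x\beta (y)\zeta (y)\,dy\right) ,\qquad \zeta (x)=\exp \left( \int _{x_1}^x\gamma (y)\,dy\right) ,\\ \zeta (x)&\asymp x^{2(\mu _0-M)/\sigma ^2},\qquad \beta (x)\zeta (x)\asymp x^{2(\mu _0-M)/\sigma ^2-2}. \end{aligned}$$

Thus \(\zeta (x)\rightarrow \infty \) when \(\mu _0<M\), while \(\zeta \) stays bounded when \(\mu _0\ge M\). The integral of \(\beta \zeta \) near 0 converges exactly when \(2(\mu _0-M)/\sigma ^2-2>-1\), that is, when \(\mu _0>M+\frac{\sigma ^2}{2}\). These are the thresholds separating Cases 1, 2 and 3.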

Proposition A.2

There does not exist any \(\eta >0\) such that \(V_x^*(x)\le 1, x\in (0,\eta ]\).

Proof

We will argue by contradiction. Assume there exists \(\eta >0\) such that \(V_x^*(x)\le 1, x\in (0,\eta ]\). Then by (A.19) we get that \(V_x^*\) follows the ODE (A.20) for all \(x\in (0,\eta )\). Making use of Proposition A.1 we get that

which contradicts that \(V_x^*\ge 0\) or that \(V_x^*(x)\le 1, x\in (0,\eta ]\). The proof is complete. \(\square \)

The above Proposition shows that the scenario from Fig. 5 cannot happen.

Fig. 5. If \(V^*_x\) crosses \(y=1\) from below at \(x_0\), and it has not crossed from above before, then we get a contradiction by Proposition A.2.

Proposition A.3

There does not exist any \(\chi >0\) such that \(V_x^*(x)\ge 1\) for all \(x\ge \chi \).

Proof

Once again we will argue by contradiction. Assume there exists \(\chi >0\) such that \(V_x^*(x)\ge 1\) for all \(x\ge \chi \). By (A.19) \(V_x^*\) will follow the ODE

for all \(x\ge \chi \). As a result we get just as in Proposition A.1

where the non-homogeneous term is \(\beta (y):=\frac{2\rho }{\sigma ^2 y^2}\), and the integrating factor is

for \(\gamma (y):=\frac{2\mu (y)}{\sigma ^2 y}\), and arbitrary \(x_0, x_1\in (\chi ,\infty )\). Under Assumption 2.1 we can see that there exist constants \(L>0\) and \(c>0\) such that \(\mu (y) < -c\) for all \(y>L\), and hence, \(\int _{L}^x \frac{\mu (y)}{y} \;dy \le -c \int _{x_1}^x \frac{1}{y} \;dy = -c (\ln x - \ln x_1) \rightarrow -\infty \) as \(x\rightarrow \infty \). If we choose \(c> \frac{\sigma ^2}{2}\), \(x_1:=L\) we get

as \(x\rightarrow \infty \). If

then by (A.27) and the positivity of \(\zeta \) one has

which contradicts the growth condition (A.7). Therefore we need

Note that in this case

This implies, since \(\zeta (x)>0\), that for \(x>x_0\)

which contradicts the assumption that \(V_x^*(x)\ge 1\) for all \(x\ge \chi \). \(\square \)

The above Proposition shows that the scenario from Fig. 6 is not possible.

Fig. 6. An impossible scenario, by Proposition A.3.

Set \(g(x):=\rho - x\mu (x)\). By assumption \(p(x):=x\mu (x)\) has a unique maximum and \(\mu \) is locally Lipschitz and decreasing with \(\lim _{x\rightarrow \infty } \mu (x)=-\infty \). This implies that g(x) has a unique minimum for some \(x_\iota \in (0,\infty )\) (see footnote 4 in the Notes). If \(g(x_\iota )<0\) then g intersects the x axis in exactly two points \(0<\alpha _1<\alpha _2<\infty \). If \(g(x_\iota )>0\) there is no intersection of g with the x axis. Finally, if \(g(x_\iota )=0\) there is exactly one intersection and this happens at \(x=x_\iota \).
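As a worked instance of the three cases above, the following Python sketch computes \(x_\iota \), \(g(x_\iota )\) and the roots of g for a logistic drift. The choice \(\mu (x)=\mu _0-\kappa x\) and the numerical values are assumptions made for illustration; with this drift, \(g(x)=\kappa x^2-\mu _0x+\rho \) is a quadratic, so everything is available in closed form.

```python
import math


def g_roots_logistic(rho, mu0, kappa):
    """Return (x_iota, g_min, roots) for g(x) = rho - x*(mu0 - kappa*x).

    Completing the square gives g(x) = kappa*(x - x_iota)**2 + g_min.
    """
    x_iota = mu0 / (2.0 * kappa)            # minimizer of g, maximizer of x*mu(x)
    g_min = rho - mu0**2 / (4.0 * kappa)    # value g(x_iota)
    if g_min > 0:
        roots = ()                          # g never touches the x axis
    elif g_min == 0:
        roots = (x_iota,)                   # single tangency at x_iota
    else:
        half_width = math.sqrt(-g_min / kappa)
        roots = (x_iota - half_width, x_iota + half_width)  # alpha_1 < alpha_2
    return x_iota, g_min, roots


print(g_roots_logistic(rho=0.1875, mu0=1.0, kappa=1.0))
```

For \(\rho >0\) the smaller root is strictly positive, which matches the ordering \(0<\alpha _1<\alpha _2<\infty \) in the text.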

Proposition A.4

The function \(V^*_x\) crosses the line \(y=1\) at most three times. More specifically, we have the following possibilities:

(I) If \(g(x_\iota )<0\) then

(i) For \(0\le x<\alpha _1\) the function \(V_x^*\) can pass the line \(y=1\) at most once and the crossing has to be from below.

(ii) For \(x>\alpha _2\) the function \(V_x^*\) can pass the line \(y=1\) at most once and the crossing has to be from below.

(iii) For \(\alpha _1<x<\alpha _2\) the function \(V_x^*\) can pass the line \(y=1\) at most once and the crossing has to be from above.

(II) If \(g(x_\iota )>0\) then the function \(V_x^*\) can pass the line \(y=1\) at most once and the crossing has to be from below.

(III) If \(g(x_\iota )=0\) then \(V_x^*\) can cross the line \(y=1\) at most three times. In particular, the possible crossing(s) in \((0,x_\iota ) \cup (x_\iota ,\infty )\) must be from below.

(IV) If \(V_x^*\) crosses the line \(y=1\) at \(x_0\) then we cannot have \(\varepsilon >0\) such that \(V_x^*=1\) on \((x_0,x_0+\varepsilon )\). In other words, the intersections have to be at separate points and we cannot ‘stick’ to \(y=1\).

Proof

It follows from the HJB Eq. (A.6) with \(\varphi :=V_x\) that if \(\varphi (x_0)=1\), then we have

Therefore, when \(\varphi \) crosses the line \(y=1\), we obtain some information from g. More precisely, we can infer the following:

(I) When \(g(x_\iota )<0\) the function \(g(x) = \rho - x\mu (x)\) has exactly two zeros at \(\alpha _1, \alpha _2\) with \(0<\alpha _1<\alpha _2<\infty \).

(i) For \(0\le x<\alpha _1\) we have \(g(x) > 0\), hence \(\varphi \) is only allowed to cross the line \(y=1\) from below in this region;

(ii) for \(x>\alpha _2\) we have \(g(x) > 0\), hence \(\varphi \) is only allowed to cross the line \(y=1\) from below in this region;

(iii) for \(\alpha _1<x<\alpha _2\), \(g(x)<0\) and \(\varphi \) is only allowed to cross the line \(y=1\) from above in this region.

(II) If \(g(x_\iota )>0\) then \(g(x)> 0\) for all \(x\in \mathbb {R}_+\). The function \(V_x^*\) can pass the line \(y=1\) at most once and the crossing has to be from below.

(III) If \(g(x_\iota )=0\) then g(x) has a unique intersection with the x axis at \(x_\iota \). As a consequence \(g(x)\ge 0\) and the function \(V_x^*\) can pass the line \(y=1\) at most thrice: at most once from below in the region \(x<x_\iota \), at most once from below in the region \(x>x_\iota \) and at most once from above or from below at the point \(x= x_\iota \).

(IV) Since \(x\mu (x)\) is never constant on an interval, it is clear that for any \((u,v)\subset \mathbb {R}_+\) we cannot have \(V_x^*=1\) for all \(x\in (u,v)\).

\(\square \)
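The crossing rule used in this proof can be made explicit. As a sketch, assume (consistently with the coefficients \(\beta \) and \(\gamma \) given in the proof of Proposition A.1) that where \(V_x^*\le 1\) the function \(\varphi =V_x^*\) solves \(\varphi '+\gamma \varphi =\beta \) with \(\gamma (x)=\frac{2(\mu (x)-M)}{\sigma ^2x}\) and \(\beta (x)=\frac{2(\rho -Mx)}{\sigma ^2x^2}\). Evaluating at a point \(x_0\) with \(\varphi (x_0)=1\) gives

$$\begin{aligned} \varphi '(x_0)=\frac{2(\rho -Mx_0)}{\sigma ^2x_0^2}-\frac{2(\mu (x_0)-M)}{\sigma ^2x_0}=\frac{2(\rho -x_0\mu (x_0))}{\sigma ^2x_0^2}=\frac{2g(x_0)}{\sigma ^2x_0^2}. \end{aligned}$$

The terms containing M cancel, so the slope of \(\varphi \) at a crossing has the sign of \(g(x_0)\): positive where \(g>0\) (crossing from below), negative where \(g<0\) (crossing from above), and zero at a root of g.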

Remark A.2

By the analysis above one can note that at the intersection points (or roots) \(\alpha _{1,2}\) of the function g(x) with the x axis the derivative of \(\varphi \) is 0. This makes it more complicated to say, in case there is a crossing at a root, if the crossing is from above or from below. However, this does not require us to change our arguments. For example, if there is a crossing from below on \(0\le x<\alpha _1\) and there is a crossing at \(x=\alpha _1\) then the crossing at \(\alpha _1\) is necessarily from above. This then implies that there can be no crossing for \(x\in (\alpha _1,\alpha _2)\) because in this region the crossing has to be from above and there cannot be two crossings from above in a row.

Proof of Theorem 2.1

A direct consequence of Proposition A.4 is that \(V_x^*\) can cross the line \(y=1\) at most three times. We also know, given the at most two possible solutions \(\alpha _{1,2}\) of the equation \(g(x)=0\) how these crossings have to happen. Next, we eliminate all but one possibility.

i) If we get a crossing from below in \((0,\alpha _1)\) this means that there exists \(\eta >0\) such that for all \(x\in (0,\eta )\) we have \(V_x^*(x)=\varphi _2(x) \le 1\). This is not possible by Proposition A.2. As such there can be no crossings in \((0,\alpha _1)\).

ii) If we have a crossing from below in \((\alpha _2,\infty )\) then there is \(\zeta >0\) such that for all \(x\ge \zeta \)

$$\begin{aligned} V_x^*(x)=\varphi _1(x)\ge 1. \end{aligned}$$

This is not possible by Proposition A.3. Therefore, there are no crossings in \( (\alpha _2,\infty )\).

iii) We cannot have that \(V_x^*(x)\ge 1\) for all \(x\in (0,\infty )\) because then we get a contradiction by Proposition A.3. Similarly, we cannot have \( V_x^*(x)\le 1\) for all \(x\in (0,\infty )\) since we get a contradiction by Proposition A.2.

iv) If \(g(x_\iota )>0\) then, in principle, there could be at most one crossing and this would have to be from below. But this creates a contradiction by either using Proposition A.2 or Proposition A.3. If there is no crossing then we get a contradiction by (iii) above.

v) If \(g(x_\iota )=0\) then

(a) If there is no crossing, then we get a contradiction by part iii) above.

(b) If there are two crossings then we get contradictions from either Proposition A.2 or Proposition A.3.

(c) If there are three crossings then we must have a crossing from below in \((0,x_\iota )\), one from above at \(x=x_\iota \) and one from below in \((x_\iota ,\infty )\). This yields a contradiction because of Proposition A.2.

(d) If there is just one crossing and the crossing is from below then we get a contradiction by Proposition A.3.

vi) By parts i)–v) we get that there is exactly one crossing of the line \(y=1\), that this crossing is from above and that the crossing happens at a point in the interval \([\alpha _1,\alpha _2]\) when \(g(x_\iota )<0\) or at \(x_\iota \) if \(g(x_\iota )=0\).

This, together with (A.18), implies that the optimal strategy is of bang–bang type

Moreover, one can see that \(g(x_\iota )\le 0\) which in turn forces

\(\square \)

Appendix B: Optimal harvesting with concave and convex yields: proofs

This appendix shows that for a class of yield functions \(\Phi \) one can get continuous optimal harvesting strategies. By contrast, when \(\Phi \) is the identity, Theorem 2.1 shows that the optimal harvesting strategy is of bang–bang type and is therefore discontinuous. One might wonder under which conditions on \(\Phi \) the optimal harvesting strategies will be continuous (Fig. 7).

We proved in Theorem A.2 that the HJB equation

admits a classical solution \(V^*\in C^2(\mathbb {R}_+)\) satisfying \(V^*(1)=0\) and \(\rho =\rho ^*> 0\).

For any given \(\Phi \), we define

where A is a shorthand for \(V_x^*\), that is,

For any fixed x, we can see A as a constant. Using these shorthands, we can rewrite the HJB equation as

where \(L:=xM\). A direct computation yields

because \(\Phi (0) = 0\). Therefore, the critical point(s) will be given by \(\omega _c = [\Phi ']^{-1}(A)\), and

If \(\Phi \) is assumed to be strictly concave, the maximum on the right hand side of (B.1) can be found easily because \(F''=\Phi ''\).

Theorem 3.1

Suppose Assumption 2.1 holds and the yield function satisfies

(1) \(\Phi \in C^2(\mathbb {R}_+)\),

(2) \(\Phi \) is strictly concave.

Then the optimal harvesting strategy is continuous and given by

Furthermore, the HJB equation for the system becomes

Proof

Assume that \(\Phi \) is \(C^2\) and strictly concave. Then \(\Phi ''\le 0\) and \(\Phi '\) is strictly decreasing, so its inverse is well-defined on the range of \(\Phi '\). As a result, whenever A lies in this range, we have a unique critical point, which is a maximum, \(\omega _c = [\Phi ']^{-1}(A)\). A standard calculus result yields

where we used the fact that \(F(0)=0\) and the concavity of \(\Phi \) in the last equality.

Depending on the maximum point, we have the corresponding optimal Markov control:

because v is the solution to

In conclusion, in this case, v depends on \(A:=\dfrac{d V^*}{d x}(x)\) continuously. Hence, since \(V^*\in C^2\left( \mathbb {R}_+\right) \) we conclude that v is continuous.

The HJB Eq. (A.6) becomes

\(\square \)
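The continuity statement of Theorem 3.1 can be illustrated with a concrete strictly concave yield. The Python sketch below uses \(\Phi (\omega )=\ln (1+\omega )\), which is an example chosen here and not one from the paper; it satisfies \(\Phi (0)=0\), is \(C^2\) on \(\mathbb {R}_+\) and has \(\Phi '(\omega )=1/(1+\omega )\). The function name and the explicit clipping to \([0,xM]\) are assumptions about how the maximizer of \(\Phi (\omega )-A\omega \) over \(\omega \in [0,xM]\) translates into a harvesting rate \(v=\omega /x\).

```python
def optimal_rate_log_yield(x, A, M):
    """Rate u in [0, M] maximizing Phi(x*u) - A*x*u for Phi(w) = ln(1 + w).

    x : population size (must be positive)
    A : value of V_x at x
    M : maximal harvesting rate
    """
    if x <= 0:
        raise ValueError("x must be positive")
    L = x * M                      # upper end of the interval for w = x*u
    if A <= 0:
        w = L                      # objective is increasing on [0, L]
    else:
        w_c = 1.0 / A - 1.0        # critical point: 1/(1 + w) = A
        w = min(max(w_c, 0.0), L)  # project the critical point onto [0, L]
    return w / x


# The rate moves continuously from M down to 0 as A increases
for A in (0.25, 0.4, 0.5, 0.8, 1.0, 2.0):
    print(A, optimal_rate_log_yield(1.0, A, M=2.0))
```

In contrast with the identity yield, the rate here takes intermediate values between 0 and M, and small changes in A produce small changes in the rate.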

The case when the yield function \(\Phi \) is convex is qualitatively similar to the case when the yield function is linear, and the optimal solution is of the bang–bang type. We can improve Theorem 2.1 as follows.

Theorem 3.2

Assume that \(\Phi :\mathbb {R}_+\rightarrow \mathbb {R}_+\) is weakly convex, \(\Phi \) grows at most polynomially, \(\Phi \in C^1(\mathbb {R}_+)\) and the population survives in the absence of harvesting, that is \(\mu (0)-\frac{\sigma ^2}{2}>0\). Furthermore assume that the drift function \(\mu (\cdot )\) satisfies the following modification of Assumption 2.1:

(i) \(\mu \) is locally Lipschitz.

(ii) \(\mu \) is decreasing.

(iii) As \(x\rightarrow \infty \) we have \(\mu (x)\rightarrow -\infty \).

(iv) The function

$$\begin{aligned} G(x)= \Phi (xM)\left( 1-\frac{2}{\sigma ^2}\mu (x)\right) - xM\Phi '(xM) \end{aligned}$$ (3.2)

has a unique extreme point in \((0,\infty )\) which is a minimum, and is not constant on any interval \((u,v)\subset \mathbb {R}_+\).

If the assumptions (i)–(iii) hold, the optimal control has a bang–bang form (i.e., the harvesting rate is either 0 or the maximal M). If assumptions (i)–(iv) hold, the optimal harvesting strategy v has a bang–bang form with one threshold

for some \(x^*\in (0,\infty )\).

Proof

This proof is similar to the proof of Theorem 2.1 from Appendix A. By (A.9)

Dropping the common terms gives

With \(x>0\), the right hand side is a weakly convex function of u, so one of the end points of the interval U achieves the maximum. This already shows that the optimal control is bang–bang, but says nothing else of the shape of v(x). Since \(\Phi (0) = 0\), we get

This implies

The function \(\Phi (\cdot )\) is weakly convex; therefore, for \(\alpha \in (0,1)\), \(\Phi (\alpha x + (1-\alpha ) y ) \le \alpha \Phi (x) + (1-\alpha )\Phi (y)\). By assumption, it is also continuous and positive valued. Taking \(y=0\) and using \(\Phi (0)=0\), we get, for \(\alpha \in (0,1)\), \(\alpha \Phi (xM) \ge \Phi (\alpha xM)\), or equivalently \(\Phi (xM) \ge \frac{1}{\alpha } \Phi (\alpha xM)\), or equivalently \(\frac{\Phi (xM)}{xM} \ge \frac{\Phi (\alpha xM)}{\alpha xM}\) if \(x,M>0\). Therefore \(\frac{\Phi (xM)}{xM}\) must be positive and monotonically increasing in x for \(M>0\), \(x>0\). In particular \(\Phi '(0) = \lim _{x\rightarrow 0^+} \frac{\Phi (xM)}{xM}\) exists and is greater than or equal to 0. The HJB equation (A.6) becomes

One can easily modify the proofs from Appendix A to show the following four propositions:

Proposition A.5

Assume \(\mu , \Phi \) satisfy the assumptions of Theorem 3.2. Then any solution \(\varphi _2\) of the ODE

satisfies

Proof

Proceed similarly to the proof of Proposition A.1, replacing the definition of \(\beta \) by \(\beta (y):= \frac{2(\rho - \Phi (My))}{\sigma ^2 y^2}\). This time,

For \(y \in [0,x_0]\), we have \(\Phi '(0) \le \frac{\Phi (My)}{My} \le \frac{\Phi (Mx_0)}{Mx_0}\), so

For a general positive constant N,

where the integration constants are given by

Now the case-by-case analysis of Proposition A.1 can be repeated similarly because the constants of the dominant terms in the expression above do not depend on N. \(\square \)

Proposition B.2

There does not exist any \(\eta >0\) such that \(V_x^*(x)\le \frac{\Phi (xM)}{xM}, x\in (0,\eta ]\).

Proof

Noting that \(\sup _{x\in (0,\eta ]} \frac{\Phi (xM)}{xM} = \frac{\Phi (\eta M)}{\eta M}\), the proof is similar to the proof of Proposition A.2, relying on the application of Proposition A.5 to Eq. (B.3). \(\square \)

Proposition B.3

There does not exist any \(\chi >0\) such that \(V_x^*(x)\ge \frac{\Phi (xM)}{xM}\) for all \(x\ge \chi \).

Proof

It follows the proof of Proposition A.3 without change, because \(\frac{\Phi (xM)}{xM} \ge 0\). \(\square \)

Proposition B.4

The function \(V^*_x\) intersects the curve \(\frac{\Phi (xM)}{xM}\) at most three times on \([0,\infty )\).

Proof

By (B.2) if we set \(f_x:=\varphi \), then at the intersections \(x:\; \varphi (x) = \frac{\Phi (xM)}{xM}\) we have

from the HJB equation. Now we want to compare \(\varphi _x\) with \(\left( \frac{\Phi (xM)}{xM}\right) '\) whenever there is a crossing, to infer the direction from which \(\varphi \) is crossing. To do that, consider the equation \(\varphi _x = \left( \frac{\Phi (xM)}{xM}\right) '\). Substituting and simplifying gives us the condition \(G(x) + \frac{2M\rho }{\sigma ^2} = 0\) where G(x) is defined in (3.2). Since G(x) has only one extremum by assumption, this equation has zero, one or two solutions. When there are two solutions, say \(\alpha _1, \alpha _2\), any intersection of \(\varphi \) with \(\frac{\Phi (xM)}{xM}\) for \(x \in (\alpha _1,\alpha _2)\) will have to be with \(\varphi \) coming from above, as \(\varphi _x <0\) in that interval. Using similar arguments to those in Proposition A.4, this implies, together with the condition on G from (3.2), that \(\varphi \) can intersect \(\frac{\Phi (xM)}{xM}\) at most three times. \(\square \)

The rest of the proof also mirrors the one of Theorem 2.1. Apply the four results above and find again that the optimal control is bang–bang with a single threshold \(x^*\),

for some \(x^*\in (0,\infty )\) (see Fig. 8). \(\square \)
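The first step of this proof, that a weakly convex function on an interval attains its maximum at an end point, can be sketched in a few lines of Python. The function name and the example yield \(\Phi (\omega )=\omega ^2\) are hypothetical choices for illustration; the sketch assumes, as in the proof, that the quantity being maximized over \(u\in [0,M]\) is \(\Phi (xu)-Axu\) with \(A=V_x^*(x)\) and \(\Phi (0)=0\), so that the value at \(u=0\) is 0.

```python
def optimal_rate_convex(x, A, M, phi):
    """Endpoint rule for maximizing phi(x*u) - A*x*u over u in [0, M].

    For convex phi with phi(0) = 0 the maximum sits at u = 0 (value 0)
    or at u = M (value phi(x*M) - A*x*M), so comparing the two suffices.
    Ties are resolved in favor of u = M.
    """
    L = x * M
    value_at_M = phi(L) - A * L
    return M if value_at_M >= 0.0 else 0.0


def square(w):
    """Hypothetical convex yield with square(0) = 0."""
    return w * w


# Harvest at rate M exactly when A <= phi(x*M)/(x*M); here that ratio equals x*M
for x in (0.25, 0.5, 1.0):
    print(x, optimal_rate_convex(x, A=1.0, M=2.0, phi=square))
```

Equivalently, the rule harvests at the maximal rate when \(V_x^*(x)\le \frac{\Phi (xM)}{xM}\), which is the comparison curve appearing in Propositions B.2 to B.4.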

Cite this article

Hening, A., Nguyen, D.H., Ungureanu, S.C. et al. Asymptotic harvesting of populations in random environments. J. Math. Biol. 78, 293–329 (2019). https://doi.org/10.1007/s00285-018-1275-1

DOI: https://doi.org/10.1007/s00285-018-1275-1