Abstract

Online social networks allow the collection of large amounts of data about the influence between users connected by a friendship-like relationship. When distributing items among agents forming a social network, this information allows us to exploit network externalities that each agent receives from his neighbors that get the same item. In this paper we consider Friends-of-Friends (2-hop) network externalities, i.e., externalities that not only depend on the neighbors that get the same item but also on neighbors of neighbors. For these externalities we study a setting where multiple different items are assigned to unit-demand agents. Specifically, we study the problem of welfare maximization under different types of externality functions. Let n be the number of agents and m be the number of items. Our contributions are the following: (1) We show that welfare maximization is APX-hard; we show that even for step functions with 2-hop (and also with 1-hop) externalities it is NP-hard to approximate social welfare better than (1−1/e). (2) On the positive side we present (i) an \(O(\sqrt n)\)-approximation algorithm for general concave externality functions, (ii) an O(log m)-approximation algorithm for linear externality functions, and (iii) a \(\frac {5}{18}(1-1/e)\)-approximation algorithm for 2-hop step function externalities. We also improve the result from [7] for 1-hop step function externalities by giving a \(\frac {1}{2}(1-1/e)\)-approximation algorithm.

1 Introduction

Assume you have to form a committee and need to decide whom to choose as a member. It seems like a good strategy to select members from your network that are well-connected to the whole field so that not only the knowledge of the actual members but also of their whole network can be called upon when needed. Along the same vein assume you want to play a multiplayer online game but you do not have enough friends who are willing to play with you. Then it is a good idea to ask these friends to contact their friends whether they are willing to play as well. Both these settings can be modeled by a social network graph and in both settings not the direct (or 1-hop) neighbors alone, but instead the 1-hop neighbors in combination with the neighbors of neighbors (or 2-hop neighbors) are the decisive factor. Note that the 2-hop neighborhoods cannot be modeled by 1-hop neighborhoods through the insertion of an additional edge (to the neighbor of the neighbor) as we require that every participating neighbor of a neighbor is adjacent to a participating neighbor. In the above example, we can only get the opinion of a contact of a contact if we asked the contact before. In the same way, the participation of a friend of a friend will only be possible if there is a participating friend that invites him.

There has been a large body of work by social scientists and, in the last decade, also by computer scientists (see e.g., the influential paper by Kempe, Kleinberg, and Tardos [26] and its citations) to model and analyze the effect of 1-hop neighborhoods. The study of 2-hop neighborhoods has received much less attention (see e.g., [15, 25]). This is surprising as a recent study [20] of the Facebook network shows that the median Facebook user has 31k people as “friends of friends” and due to some users with very large friend lists, the average number of friends-of-friends reaches even 156k. Thus, even if each individual friend of a friend has only a small influence on a Facebook user, in aggregate the influence of the friends-of-friends might be large and should not be ignored.

We, therefore, initiate the study of the influence of 2-hop neighborhoods in the popular assignment setting, where items are assigned to users whose values for the item depend on who else in their neighborhood has the item. There is a large body of work on mechanisms and pricing strategies for this problem with a single [1, 3, 5, 6, 9, 10, 16, 19, 21, 28] or multiple items [2, 7, 12, 18, 24, 30–32] when the valuation function of a user depends solely on the 1-hop neighborhood of a user and the user itself. All this work assumes that there is an infinite supply of items (of each type if there are different items) and the users have unit-demand, that is, they want to buy only one item. This is frequently the case, for example, if the items model competing products or if the user has to make a binary decision between participating or not participating. In the above examples, this requirement would model that each user can only be in one committee or play one game at a time.

Thus, we study the allocation of items to users in a setting with 2-hop network externalities, where the valuation that a user derives from an item depends on herself, her 1-hop neighborhood, and her 2-hop neighborhood, with the goal of maximizing the social welfare of the allocation. The prior work most closely related to ours is that of Bhalgat et al. [7], who study the multi-item setting with 1-hop externality functions and give approximation algorithms based on LP-relaxations and randomized rounding for different classes of externality functions. For linear externalities they give a 1/64-approximation algorithm, and for step function externalities they get an approximation ratio of (1−1/e)/16≈0.04. Additionally, they present a \(2^{O(d)}\)-approximation algorithm for convex externalities that are bounded by polynomials of degree d and a polylogarithmic approximation algorithm for submodular externalities.

1.1 Our Results

The Model

Let G = (V, E) be an undirected graph modeling the social network. Consider any agent j ∈ V who receives item i ∈ I, and let \(S_{ij} \subseteq V \setminus \{j\}\) denote the (2-hop) support of agent j for item i: this is the set of agents who contribute towards the valuation of j. Specifically, an agent j′ ∈ V ∖ {j} belongs to the set \(S_{ij}\) iff j′ gets item i and the following condition holds: either j′ is a neighbor of j (i.e., (j, j′) ∈ E), or j and j′ have a common neighbor j″ who also gets item i. The valuation received by agent j is equal to \(\lambda_{ij} \cdot f_{ij}(|S_{ij}|)\), where \(\lambda_{ij}\) is the agent’s intrinsic valuation and \(f_{ij}(|S_{ij}|)\) is her 2-hop externality for item i. The goal is to compute an assignment of items to the agents that maximizes the social welfare, which is defined as the sum of the valuations obtained by the agents.
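The model above can be made concrete with a short Python sketch. It is only an illustration under stated assumptions: the graph is stored as a dictionary of adjacency sets, and the names `support`, `social_welfare`, `intrinsic`, and `externality` are hypothetical helpers introduced here, not notation from the paper.

```python
def support(graph, assignment, j):
    """Return the 2-hop support of agent j for the item that j holds."""
    item = assignment[j]
    # 1-hop supporters: neighbors of j that hold the same item.
    one_hop = {k for k in graph[j] if assignment.get(k) == item}
    # 2-hop supporters: same-item agents adjacent to a same-item neighbor of j.
    two_hop = {
        l
        for k in one_hop
        for l in graph[k]
        if l != j and assignment.get(l) == item
    }
    return one_hop | two_hop


def social_welfare(graph, assignment, intrinsic, externality):
    """Sum of intrinsic[(i, j)] * externality(i, j, |S_ij|) over all agents."""
    total = 0.0
    for j, item in assignment.items():
        size = len(support(graph, assignment, j))
        total += intrinsic[(item, j)] * externality(item, j, size)
    return total


# Path a - b - c: c supports a only while the middle agent b holds the same item.
graph = {"a": {"b"}, "b": {"a", "c"}, "c": {"b"}}
print(support(graph, {"a": 1, "b": 1, "c": 1}, "a"))
print(support(graph, {"a": 1, "b": 2, "c": 1}, "a"))
```

The second call illustrates why an extra edge between a and c would not capture the model: once b switches to another item, c no longer contributes to a.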

We study three types of 2-hop externality functions, namely concave, linear and step function externalities.

Step-Function Externalities

Consider a game that requires a minimum or fixed number of players (larger than two), e.g., Bridge or Canasta; in this case the externality is a step function. For step functions we show that it is NP-hard to approximate the social welfare better than a factor of (1−1/e) (Theorem 9). The result holds for 1-hop and 2-hop externalities. We also show that the problem remains APX-hard when the number of items is restricted to 2 (Theorem 10). Then we give a 5/18⋅(1−1/e)≈0.17-approximation algorithm for 2-hop step function externalities (Theorem 6). Our technique also leads to a combinatorial (1−1/e)/2≈0.3-approximation algorithm for 1-hop step function externalities (Theorem 7), improving the approximation ratio of the LP-based algorithm in [7].

Linear Externalities

We show that social welfare maximization for linear 2-hop externality functions is APX-hard (Theorem 5) and give an O(log m)-approximation algorithm (Theorem 3). Moreover, for linear externality functions we can relax the unit-demand requirement. Specifically, we can handle the setting where each user j can be assigned up to \(c_j\) different items (a user still cannot be assigned the same item twice), where \(c_j\) is a parameter given in the input.

Concave Externalities

We give an \(O(\sqrt {n})\)-approximation algorithm when the externality functions \(f_{ij}(\cdot)\) are concave and monotone (Theorem 1) and show that the hardness results for step functions extend to this setting (Theorem 2). That is, it is NP-hard to approximate the social welfare within a factor of (1−1/e), and the problem remains APX-hard when only two different items are considered.

Extensions

Our algorithms for linear and concave externalities can be further generalized to allow a weighting of 2-hop neighbors so that 2-hop neighbors have a lower weight than 1-hop neighbors. This can be useful if it is important that the influence of 2-hop neighbors does not completely dominate the influence of the 1-hop neighbors.

Techniques

The main challenge in dealing with 2-hop externalities is as follows. Fix an agent j who gets an item i, and let \(V_i \subseteq V\) denote the set of all agents who get item i. Recall that agent j’s externality is given by \(f_{ij}(|S_{ij}|)\), where the set \(S_{ij}\) is called the support of agent j. The problem is that \(|S_{ij}|\), as a function of \(V_i \setminus \{j\}\), is not submodular. This is in sharp contrast with the 1-hop setting, where the support for the agent’s externality comes only from the set of her 1-hop neighbors who receive item i.
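A three-node path is enough to see the lack of submodularity. The sketch below is illustrative only: `support_size` is a hypothetical helper, and the instance (a path j, k, l) is chosen here for the example. Adding l to the empty set gains nothing, while adding l to {k} gains one supporter, so marginal gains increase rather than diminish.

```python
def support_size(adj, j, holders):
    """|S_ij| when exactly the agents in `holders` (besides j) hold j's item."""
    one_hop = adj[j] & holders
    two_hop = {l for k in one_hop for l in adj[k] if l in holders and l != j}
    return len(one_hop | two_hop)


# Path j - k - l: l can support j only through the common neighbor k.
adj = {"j": {"k"}, "k": {"j", "l"}, "l": {"k"}}

gain_small = support_size(adj, "j", {"l"}) - support_size(adj, "j", set())
gain_large = support_size(adj, "j", {"k", "l"}) - support_size(adj, "j", {"k"})

# Submodularity would require gain_small >= gain_large.
print(gain_small, gain_large)
```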

All the mechanisms in [7] use the same basic approach: First solve a suitable LP-relaxation and then round its values independently for each item i. In the 2-hop setting, however, the lack of submodularity of the support size (as described above) leads to many dependencies in the rounding step. Nevertheless, we show how to extend the technique in [7] to achieve the approximation algorithm for linear 2-hop externality functions, using a novel LP. We further give a simple combinatorial algorithm with an approximation guarantee of \(O(\sqrt {n})\) for 2-hop concave externalities. For this, we show that either an \({\Omega }(1/\sqrt {n})\)-fraction of the optimal social welfare comes from a single item, or we can reduce our problem to a setting with 1-hop step function externalities by losing a \((1-{\Omega }(1/\sqrt {n}))\)-fraction of the objective.

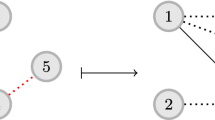

Our approach for 2-hop step functions is different. We use a novel decomposition of the graph into a maximal set of disjoint connected sets of size 3, 2, and 1. We say an assignment is consistent if it assigns all the nodes (i.e., users) in the same connected set the same item. We first show that restricting to consistent assignments reduces the maximum welfare by at most a factor of 5/18. We then show that finding the optimal consistent assignment is equivalent to maximizing social welfare in a scenario where agents are not unit-demand, do not influence each other, and have valuation functions that are fractionally subadditive in the items they get assigned. For the latter we use the (1−1/e)-approximation algorithm by Feige [14].

1.2 Related Work

As discussed earlier the prior work that is most closely related to our work is the work by Bhalgat et al. [7], that studies a similar setting where multiple different items are assigned to unit-demand agents with 1-hop externalities.

Besides that, there is a large body of work on pricing strategies for selling an item in social networks that exploit 1-hop network externalities to maximize the revenue of a seller [1, 5, 10, 16, 21, 28], most prominently the work of Hartline et al. [21]. In these settings one typically first sells products at a discount to some customers in order to later exploit their positive influence on the other customers. As the revenue maximization problems in the different models are computationally hard, one resorts to approximations that are based on randomized selection of customers and/or submodular function maximization for selecting an initial set of customers who get the product for free.

The work of Anshelevich et al. [4] studies friends-of-friends benefits in a network formation game setting, where each node of a social network has to decide how to spend its resources to cultivate relationships with its friends. The strength of a relationship is then determined by the resources the two nodes put into it. The utility of a node is calculated from the strength of the relationships to its friends and the strength of the relationships between its friends and friends-of-friends. Anshelevich et al. consider two concrete functions for calculating the strength of relationships, namely Sum and Min, and study equilibria and the price of anarchy.

Seeman and Singer [29] consider friends-of-friends for adaptive seeding in social networks. The problem they study is to select some nodes of the graph as seeds, e.g., as early adopters of a new technology who then promote it to the other nodes. As not all nodes are always available as early adopters, Seeman and Singer propose a 2-phase strategy for selecting seeds. In the first phase, a part of the budget is spent to select some of the initially available nodes as seeds, and each of their neighbors becomes available as an early adopter in the second phase (with some probability). In the second phase, the remaining budget is spent among these friends-of-friends such that information diffusion through word-of-mouth processes is maximized.

Dobzinski et al. [11] gave an incentive-compatible combinatorial auction for complement-free bidders that achieves an \(O(\sqrt {m})\)-approximation of the optimal item allocation. They distinguish whether most of the social welfare comes from bidders that get more than \(\sqrt {m}\) items or from bidders who get fewer than \(\sqrt {m}\) items. Their auction computes two assignments, one that is good in the first case and one that is good in the latter case, and uses the one with higher social welfare. This idea is somewhat similar to our \(O(\sqrt {n})\)-approximation algorithm for concave externalities, which computes two assignments: one that is an \(O(\sqrt {n})\)-approximation if in an optimal assignment most of the social welfare comes from users with large support, and another that is an \(O(\sqrt {n})\)-approximation if in an optimal assignment most of the social welfare comes from users with small support. However, our sub-procedures for computing the two assignments are not directly related to the sub-procedures used in [11].

2 Notations and Preliminaries

We are given a simple undirected graph G = (V, E) with |V| = n nodes. Each node j ∈ V in this graph is an agent, and there is an edge (j, j ′) ∈ E iff the agents j and j ′ are friends with each other. There is a set of m items I = {1,…,m}. Each item is available in unlimited supply, and the agents have unit-demand, i.e., each agent wants to get at most one item. An assignment \(\mathcal {A}: V \rightarrow I\) specifies the item received by every agent, and under this assignment, \(u_{j}(\mathcal {A},G)\) gives the valuation of an agent j ∈ V. Our goal is to find an assignment that maximizes the social welfare \({\sum }_{j \in V} u_{j}(\mathcal {A},G)\), i.e., the sum of the valuations of the agents.

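Let \({F_{j}^{1}}(G)\), \({F_{j}^{2}}(G)\) and \(F_{j}^{\leq 2}(G)\) denote, respectively, the 1-hop neighborhood (the nodes adjacent to j), the 2-hop neighborhood (the nodes other than j that are not adjacent to j but share a neighbor with j), and the 2-neighborhood \(F_{j}^{\leq 2}(G) = {F_{j}^{1}}(G) \cup {F_{j}^{2}}(G)\) of a node j ∈ V.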

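Define \(V_{i}(\mathcal {A},G) = \{j \in V : \mathcal {A}(j) = i\}\) to be the set of agents who receive item i ∈ I under the assignment \(\mathcal {A}\). Let

\[ {N_{j}^{1}}(i,\mathcal {A},G) = {F_{j}^{1}}(G) \cap V_{i}(\mathcal {A},G) \]

denote the 1-hop neighbors of j who receive item i under the assignment \(\mathcal {A}\). Further, let

\[ {N_{j}^{2}}(i,\mathcal {A},G) = \left\{ j^{\prime } \in {F_{j}^{2}}(G) \cap V_{i}(\mathcal {A},G) : F_{j^{\prime }}^{1}(G) \cap {N_{j}^{1}}(i,\mathcal {A},G) \neq \emptyset \right\} \]

denote the 2-hop neighbors of j who receive item i under the assignment \(\mathcal {A}\) and are adjacent to some node in \({N_{j}^{1}}(i,\mathcal {A},G)\).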

The support of an agent j ∈ V for item i ∈ I is defined as \(S_{ij}(\mathcal {A},G) = {N_{j}^{1}}(i,\mathcal {A},G) \cup {N_{j}^{2}}(i,\mathcal {A},G)\). This is the set of agents contributing towards the valuation of j for item i. Let \(\lambda_{ij}\) be the intrinsic valuation of agent j for item i, and let \(f_{ij}(|S_{ij}(\mathcal {A},G)|)\) be the externality of the agent for the same item. The agent’s valuation from the assignment \(\mathcal {A}\) is given by the following equality.

\[ u_{j}(\mathcal {A},G) = \lambda_{ij} \cdot f_{ij}(|S_{ij}(\mathcal {A},G)|), \quad \text{where } i = \mathcal {A}(j). \]

We consider three types of externalities in this paper.

Definition 1

In concave externality it holds that \(f_{ij}(t)\) is a monotone and concave function of t, with \(f_{ij}(0) = 0\), for every item i ∈ I and agent j ∈ V.

Definition 2

In linear externality it holds that for all j ∈ V, i ∈ I and every nonnegative integer t, we have \(f_{ij}(t) = t\).

We extend the step function definition of [7] as follows to 2-hop neighborhoods. In Appendix D we generalize it further to p-hop neighborhoods for p ≥ 2.

Definition 3

For integer s ≥ 1, in s-step function externality it holds that for all j ∈ V, i ∈ I and every nonnegative integer t, we have \(f_{ij}(t) = 1\) if t ≥ s and \(f_{ij}(t) = 0\) otherwise.

We omit the symbol G from these notations if the underlying graph is clear from the context. All the missing proofs from Sections 4 and 5 appear in the Appendix.

3 An \(O(\sqrt {n})\)-Approximation for Concave Externalities

For the rest of this section, we fix the underlying graph G, and assume that the agents have concave externalities as per Definition 1. We also fix the intrinsic valuations \(\lambda_{ij}\) and the externality functions \(f_{ij}(\cdot)\).

- Let \(\mathcal {A}^{*} \in \arg \max _{\mathcal {A}} \left (\sum \nolimits _{j \in V} u_{j}(\mathcal {A}) \right )\) be an assignment that maximizes the social welfare, and let \({\textsc {Opt}} = \sum \nolimits _{j \in V} u_{j}(\mathcal {A}^{*})\) be the optimal social welfare.

- Let \(X^{*} = \{j \in V : |S_{\mathcal {A}^{*}(j),j}(\mathcal {A}^{*})| \geq \sqrt {n}\}\) be the set of agents with support size at least \(\sqrt {n}\) under the assignment \(\mathcal {A}^{*}\).

- Let \(Y^{*} = V \setminus X^{*}\) be the set of agents with support size less than \(\sqrt {n}\) under the assignment \(\mathcal {A}^{*}\).

Since \(X^{*}\) and \(Y^{*}\) partition the set of agents V, at least half of the social welfare under \(\mathcal {A}^{*}\) comes either from (1) the agents in \(X^{*}\), or from (2) the agents in \(Y^{*}\). Lemma 1 shows that in the former case there is a uniform assignment, where every agent gets the same item, that retrieves a \(1/(2\sqrt {n})\)-fraction of the optimal social welfare. We consider the latter case in Lemma 2, and reduce it to a problem with 1-hop externalities.

The main idea behind Lemma 1 is that in \(\mathcal {A}^{*}\) at most \(\sqrt {n}\) different items can each be assigned to at least \(\sqrt {n}\) nodes. Thus, picking among these items the one that contributes the most to the social welfare and assigning it to all nodes gives a \(\sqrt {n}\)-approximation for the social welfare of \(X^{*}\).

Lemma 1

If \({\sum }_{j \in X^{*}} u_{j}(\mathcal {A}^{*}) \geq {\textsc {Opt}}/2\) , then there is an item i∈I such that \({\sum }_{j \in V} u_{j}(\mathcal {A}^{i}) \geq {\textsc {Opt}}/(2\sqrt {n})\) , where \(\mathcal {A}^{i}\) is the assignment that gives item i to every agent in V, that is, \(\mathcal {A}^{i}(j) = i\) for all j∈V.

Proof

Define the set of items \(I(X^{*}) = \bigcup _{j \in X^{*}} \{\mathcal {A}^{*}(j)\}\).

We claim that \(|I(X^{*})| \leq \sqrt {n}\). To see why the claim holds, let \(V_{i}^{*} = \{ j \in V : \mathcal {A}^{*}(j) = i\}\) be the set of agents who receive item i under \(\mathcal {A}^{*}\). Now, fix any item i ∈ I(X ∗), and note that, by definition, there is an agent j ∈ X ∗ with \(\mathcal {A}^{*}(j) = i\). Thus, we have \(|V_{i}^{*}| \geq |S_{ij}(\mathcal {A}^{*})| \geq \sqrt {n}\) . We conclude that \(|V_{i}^{*}| \geq \sqrt {n}\) for every item i ∈ I(X ∗). Since \({\sum }_{i \in I(X^{*})} |V_{i}^{*}| \leq |V| = n\) , it follows that \(|I(X^{*})| \leq \sqrt {n}\). To conclude the proof of the lemma, we now make the following observations.

The lemma holds since \({\textsc {Opt}}/(2\sqrt {n})\leq \sum \limits _{j \in X^{*}} u_{j}(\mathcal {A}^{*})/\sqrt {n} \leq \underset {i \in I(X^{*})}{\max } \left (\sum \limits _{j \in V} u_{j}(\mathcal {A}^{i})\right )\). □
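The intermediate steps behind this chain are not shown in the text above; the following is a reconstruction from the surrounding definitions, offered as a sketch rather than as the authors' exact derivation. It uses that, for an agent j with \(\mathcal{A}^{*}(j) = i\), the uniform assignment \(\mathcal{A}^{i}\) can only enlarge the support of j, so monotonicity of \(f_{ij}\) gives \(u_{j}(\mathcal{A}^{i}) \geq u_{j}(\mathcal{A}^{*})\); it also assumes that all valuations are nonnegative.

```latex
\begin{align*}
\sum_{j \in X^{*}} u_{j}(\mathcal{A}^{*})
  &= \sum_{i \in I(X^{*})} \; \sum_{j \in X^{*} \cap V_{i}^{*}} u_{j}(\mathcal{A}^{*}) \\
  &\leq \sum_{i \in I(X^{*})} \; \sum_{j \in V} u_{j}(\mathcal{A}^{i})
     && \text{(monotonicity, nonnegativity)} \\
  &\leq |I(X^{*})| \cdot \max_{i \in I(X^{*})} \sum_{j \in V} u_{j}(\mathcal{A}^{i}) \\
  &\leq \sqrt{n} \cdot \max_{i \in I(X^{*})} \sum_{j \in V} u_{j}(\mathcal{A}^{i})
     && \text{(since } |I(X^{*})| \leq \sqrt{n}\text{)}.
\end{align*}
```

Dividing by \(\sqrt{n}\) and using the assumption of the lemma on the left-hand side yields the stated bound.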

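For every item i ∈ I and agent j ∈ V, we now define the externality function \(g_{ij}(t)\) and the valuation function \(\hat {u}_{j}(\mathcal {A})\).

\[ g_{ij}(t) = \begin{cases} f_{ij}(1) & \text{if } t \geq 1, \\ 0 & \text{otherwise,} \end{cases} \qquad \hat {u}_{j}(\mathcal {A}) = \lambda_{ij} \cdot g_{ij}\left (|{N_{j}^{1}}(i,\mathcal {A})|\right ) \quad \text{where } i = \mathcal {A}(j). \]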

Clearly, for every assignment \(\mathcal {A}: V \rightarrow I\), we have \(0 \leq {\sum }_{j \in V} \hat {u}_{j}(\mathcal {A}) \leq {\sum }_{j \in V} u_{j}(\mathcal {A})\). Also note that the valuation function \(\hat {u}_{j}(\cdot)\) depends only on the 1-hop neighborhood of the agent j. Specifically, if an agent j gets an item i, then her valuation \(\hat {u}_{j}(\mathcal {A})\) is \(\lambda_{ij} \cdot f_{ij}(1)\) if at least one of her 1-hop neighbors also gets the same item i, and zero otherwise. Bhalgat et al. [7] gave an LP-based O(1)-approximation for finding an assignment \(\mathcal {A} : V \rightarrow I\) that maximizes the social welfare in this setting (also see Section 5 for a combinatorial algorithm). In the lemma below, we show that if the agents in \(Y^{*}\) contribute sufficiently towards Opt under the assignment \(\mathcal {A}^{*}\), then by losing an \(O(\sqrt {n})\)-factor in the objective, we can reduce our original problem to the one where the externalities are \(g_{ij}(\cdot)\) and the valuations are \(\hat {u}_{j}(\cdot)\).

The intuition behind Lemma 2 is that for nodes with a support smaller than \(\sqrt {n}\), the concavity of \(f_{ij}\) implies that the externality \(g_{ij}(t) = f_{ij}(1)\) gives a \(\sqrt {n}\)-approximation of \(f_{ij}(t)\).

Lemma 2

If \({\sum }_{j \in Y^{*}} u_{j}(\mathcal {A}^{*}) \geq {\textsc {Opt}}/2\) , then \({\sum }_{j \in V} \hat {u}_{j}(\mathcal {A}^{*}) \geq {\textsc {Opt}}/(2\sqrt {n})\).

Proof

Consider a node \(j \in Y^{*}\) that makes a nonzero contribution towards the objective (i.e., \(u_{j}(\mathcal {A}^{*}) > 0\)) and suppose that it gets item i (i.e., \(\mathcal {A}^{*}(j) = i\)). Since \(u_{j}(\mathcal {A}^{*}) > 0\), we have \(S_{ij}(\mathcal {A}^{*}) = {N_{j}^{1}}(i,\mathcal {A}^{*}) \cup {N_{j}^{2}}(i,\mathcal {A}^{*}) \neq \emptyset \), which in turn implies that \({N_{j}^{1}}(i,\mathcal {A}^{*}) \neq \emptyset \), because every node in \({N_{j}^{2}}(i,\mathcal {A}^{*})\) is adjacent to some node in \({N_{j}^{1}}(i,\mathcal {A}^{*})\). Thus, we have \(\hat {u}_{j}(\mathcal {A}^{*}) = \lambda _{ij} \cdot f_{ij}(1)\). Since \(|S_{ij}(\mathcal {A}^{*})| \leq \sqrt {n}\) and \(f_{ij}(\cdot)\) is a concave function with \(f_{ij}(0) = 0\), we have \(f_{ij}(1) \geq f_{ij}(|S_{ij}(\mathcal {A}^{*})|)/|S_{ij}(\mathcal {A}^{*})| \geq f_{ij}(|S_{ij}(\mathcal {A}^{*})|)/\sqrt {n}\). Multiplying both sides of this inequality by \(\lambda_{ij}\), we conclude that \(\hat {u}_{j}(\mathcal {A}^{*}) \geq u_{j}(\mathcal {A}^{*})/\sqrt {n}\) for all agents \(j \in Y^{*}\) with \(u_{j}(\mathcal {A}^{*}) > 0\). In contrast, if \(u_{j}(\mathcal {A}^{*}) = 0\), then the inequality \(\hat {u}_{j}(\mathcal {A}^{*}) \geq u_{j}(\mathcal {A}^{*})/\sqrt {n}\) is trivially true. Thus, summing over all \(j \in Y^{*}\), we infer that \({\sum }_{j \in Y^{*}} \hat {u}_{j}(\mathcal {A}^{*},G) \geq {\sum }_{j \in Y^{*}} u_{j}(\mathcal {A}^{*},G)/\sqrt {n} \geq {\textsc {Opt}}/(2\sqrt {n})\). The lemma now follows since \({\sum }_{j \in V} \hat {u}_{j}(\mathcal {A}^{*},G) \geq {\sum }_{j \in Y^{*}} \hat {u}_{j}(\mathcal {A}^{*},G)\). □
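The concavity step used in this proof can be spelled out in one line. The following worked inequality is a standard argument, written here for an integer support size \(t \geq 1\) and any concave function f with \(f(0) = 0\); the symbol t is shorthand introduced for this illustration.

```latex
\[
f(1) \;=\; f\!\left(\tfrac{1}{t}\cdot t + \left(1-\tfrac{1}{t}\right)\cdot 0\right)
\;\geq\; \tfrac{1}{t}\, f(t) + \left(1-\tfrac{1}{t}\right) f(0)
\;=\; \frac{f(t)}{t}.
\]
```

For instance, with \(f(t) = \sqrt{t}\) and \(t = 4\), this gives \(f(1) = 1 \geq 2/4\).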

The Algorithm for Concave Externalities

Algorithm 1 computes two assignments, the first in accordance with Lemma 1 to give an \(O(\sqrt {n})\)-approximation if most of the social welfare is by X ∗, the second in accordance with Lemma 2 to give an \(O(\sqrt {n})\)-approximation if most of the social welfare is by Y ∗, and then returns the assignment that has higher social welfare and thus achieves an \(O(\sqrt {n})\)-approximation in any case.
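Since the listing of Algorithm 1 is not reproduced in the text, the following Python sketch follows only the prose description above. All function names are hypothetical, and `brute_force_one_hop` is an exponential-time stand-in (usable on tiny instances only) for the O(1)-approximation algorithm for the 1-hop setting; it is not the algorithm of [7] or of Section 5.

```python
from itertools import product


def welfare(adj, assignment, lam, f):
    """Social welfare under 2-hop externalities f(i, j, t)."""
    total = 0.0
    for j, i in assignment.items():
        one_hop = {k for k in adj[j] if assignment[k] == i}
        two_hop = {l for k in one_hop for l in adj[k]
                   if l != j and assignment[l] == i}
        total += lam[(i, j)] * f(i, j, len(one_hop | two_hop))
    return total


def surrogate_welfare(adj, assignment, lam, f):
    """1-hop surrogate: lam * f(1) if some neighbor shares the item, else 0."""
    return sum(
        lam[(i, j)] * f(i, j, 1)
        for j, i in assignment.items()
        if any(assignment[k] == i for k in adj[j])
    )


def brute_force_one_hop(adj, items, lam, f):
    """Hypothetical placeholder solver: tries every assignment."""
    agents = list(adj)
    best, best_value = None, -1.0
    for choice in product(items, repeat=len(agents)):
        candidate = dict(zip(agents, choice))
        value = surrogate_welfare(adj, candidate, lam, f)
        if value > best_value:
            best, best_value = candidate, value
    return best


def concave_two_hop(adj, items, lam, f, solve_one_hop=brute_force_one_hop):
    # Candidate 1 (case of Lemma 1): the best uniform assignment.
    uniform = max(({j: i for j in adj} for i in items),
                  key=lambda a: welfare(adj, a, lam, f))
    # Candidate 2 (case of Lemma 2): a good assignment for the 1-hop surrogate.
    surrogate = solve_one_hop(adj, items, lam, f)
    # Return whichever candidate has the higher true (2-hop) welfare.
    return max((uniform, surrogate), key=lambda a: welfare(adj, a, lam, f))
```

Both candidates are evaluated with the true 2-hop welfare, so the returned assignment is at least as good as either one.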

Theorem 1

Algorithm 1 gives an \(O(\sqrt {n})\) -approximation for social welfare under 2-hop, concave externalities.

Proof

Recall the notation introduced at the beginning of Section 3 and let \(\mathcal {A}\) be the assignment returned by Algorithm 1. Since the set of agents V is partitioned into \(X^{*} \subseteq V\) and \(Y^{*} = V \setminus X^{*}\), either \({\sum }_{j \in X^{*}} u_{j}(\mathcal {A}^{*}) \geq {\textsc {Opt}}/2\) or \({\sum }_{j \in Y^{*}} u_{j}(\mathcal {A}^{*}) \geq \textsc {Opt}/2\). In the former case, Lemma 1 guarantees that \({\sum }_{j \in V} u_{j}(\mathcal {A}) \geq {\sum }_{j \in V} u_{j}(\mathcal {A}^{\prime }) \geq \textsc {Opt}/(2\sqrt {n})\). In the latter case, by Lemma 2 we have \( {\sum }_{j \in V} u_{j}(\mathcal {A}) \geq {\sum }_{j \in V} u_{j}(\mathcal {A}^{\prime \prime }) \geq {\sum }_{j \in V} \hat {u}_{j}(\mathcal {A}^{\prime \prime }) \geq {\sum }_{j \in V} \hat {u}_{j}(\mathcal {A}^{*})/\alpha \geq \textsc {Opt}/(2\alpha \sqrt {n}) \), where α is the approximation ratio of the algorithm used for solving the 1-step setting. We conclude that the social welfare returned by our algorithm is always within an \(O(\sqrt {n})\)-factor of the optimal social welfare. □

Finally, we discuss APX-hardness results for concave externalities. Notice that 1-step function externalities are indeed concave externalities and thus each hardness result for 1-step function externalities is also a hardness result for concave functions. The following theorem is immediate by the corresponding results for 1-step function externalities in Appendix E.

Theorem 2

The problem of maximizing social welfare under concave externalities is APX-hard, that is there is no polynomial-time \(1-\frac {1}{e}+\epsilon \) -approximation algorithm (unless P = NP). It remains APX-hard even for the case with only two items, i.e., it is NP-hard to approximate better than a factor of \(\frac {23}{24}\).

4 An O(log m)-Approximation for Linear Externalities

In this section, we give an O(log m)-approximation for linear externalities by an LP relaxation and a randomized rounding scheme.

To this end consider the LP below. Here, the variable α(i, j, k) indicates if both the agents j ∈ V and \(k \in {F^{1}_{j}}\) received item i ∈ I. If this variable is set to one, then agent j gets one unit of externality from agent k. Similarly, the variable β(i, j, l) indicates if both the agents \(j \in V, l \in V \cap {F_{j}^{2}}\) received item i ∈ I and there is at least one agent \(k \in {F_{j}^{1}} \cap {F_{l}^{1}}\) who also received the same item. If this variable is set to one, then agent j gets one unit of externality from agent l. The variables y(i, j) indicate if an agent j ∈ V receives item i ∈ I. If this variable is set to one, then agent j receives item i. Clearly, the total valuation of agent j for item i is given by \( {\sum }_{k \in V \cap {F_{j}^{1}}} \lambda _{ij} \cdot \alpha (i,j,k) + {\sum }_{l \in V \cap {F_{j}^{2}}} \lambda _{ij} \cdot \beta (i,j,l) \). Summing over all the items and all the agents in V, we see that the LP-objective encodes the social welfare of the set V.

Constraint 7 states that an agent can get at most one item. Constraint 6 says that if α(i, j, k)=1, then both y(i, j) and y(i, k) must also be equal to one. Constraint 4 states that if β(i, j, l)=1, then both y(i, j) and y(i, l) must also be equal to one. Finally, note that if an agent l ∈ V contributes one unit of 2-hop externality to an agent j ∈ V for an item i ∈ I, then there must be some agent \(k \in {F_{j}^{1}} \cap {F_{l}^{1}}\) who received item i. This condition is encoded in constraint 5. Thus, we have the following lemma.
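The LP itself is not reproduced in the text above. The block below is a reconstruction from the variable and constraint descriptions, with the constraint numbers (4) to (7) matching the references in the text; the exact form of the objective and of the bound constraints is an assumption.

```latex
\begin{align*}
\max \quad & \sum_{i \in I} \sum_{j \in V} \lambda_{ij}
   \Big( \sum_{k \in F_j^1} \alpha(i,j,k) + \sum_{l \in F_j^2} \beta(i,j,l) \Big) \\
\text{s.t.} \quad
 & \beta(i,j,l) \leq y(i,j), \;\; \beta(i,j,l) \leq y(i,l)
   && \forall i \in I,\, j \in V,\, l \in F_j^2 \tag{4} \\
 & \beta(i,j,l) \leq \sum_{k \in F_j^1 \cap F_l^1} y(i,k)
   && \forall i \in I,\, j \in V,\, l \in F_j^2 \tag{5} \\
 & \alpha(i,j,k) \leq y(i,j), \;\; \alpha(i,j,k) \leq y(i,k)
   && \forall i \in I,\, j \in V,\, k \in F_j^1 \tag{6} \\
 & \sum_{i \in I} y(i,j) \leq 1
   && \forall j \in V \tag{7} \\
 & 0 \leq y(i,j),\, \alpha(i,j,k),\, \beta(i,j,l) \leq 1 .
\end{align*}
```

Any integral assignment yields a feasible 0/1 solution of this program with objective equal to its social welfare, which is the content of the relaxation claim.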

Lemma 3

The LP is a valid relaxation for social welfare under 2-hop, linear externalities.

Before proceeding towards the rounding scheme, we perform a preprocessing step as described in the next lemma.

Lemma 4

In polynomial time, we can get a feasible solution to the LP that gives an O(log m) approximation to the optimal objective, and ensures that each α(i, j, k), β(i, j, l) ∈ {0, γ} for some real number γ ∈ [0, 1], and that each y(i, j) ≤ γ.

Proof

We compute the optimal solution of the LP, and partition the α(i, j, k) and β(i, j, l) variables into two groups (large and small) depending on whether they are greater than or less than \(1/m^{2}\). By losing at most a 1/m fraction of the objective, we can set all the small variables to zero. To see this, suppose that the claim is false, i.e., the contribution of these small variables exceeds a 1/m fraction of the total objective. Then we can scale up all these small variables by a factor of m, set all the large α(i, j, k), β(i, j, l)’s to zero, and set every y(i, j) to 1/m. This will satisfy all the constraints, and the total contribution towards the objective by the erstwhile small variables will get multiplied by m, which, in turn, will imply that their new contribution actually exceeds the optimal objective. Thus, we reach a contradiction.

We discretize the range \([1/m^{2},1]\) in powers of two, thereby creating O(log m) intervals, and accordingly, we partition the large variables into O(log m) groups. The variables in the same group are within a factor 2 of each other. By losing an O(log m) factor in the approximation ratio, we select the group that contributes the most towards the LP-objective. Let all the variables in this group lie in the range [γ, 2γ]. We now make the following transformation. All the α(i, j, k), β(i, j, l)’s in this group are set to γ. This way we lose another factor of at most 2 in the LP-objective. All the remaining α(i, j, k), β(i, j, l)’s are set to zero. At this stage, we have ensured that each α(i, j, k), β(i, j, l) ∈ {0, γ}. Finally, we set y(i, j) ← min(y(i, j), γ) for each i ∈ I, j ∈ V. Since each α(i, j, k), β(i, j, l) ∈ {0, γ}, this transformation does not violate the feasibility of the solution. □
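The preprocessing in this proof can be sketched in a few lines of Python. This is an illustration under assumptions: the LP solution is passed in as plain dictionaries, `weights` holds the objective coefficient of each α/β variable, and the function name `bucket_round` is hypothetical. Taking γ as the smallest value in the chosen group is one simple way to realize the range [γ, 2γ].

```python
import math


def bucket_round(values, weights, y, m):
    """Round alpha/beta values to {0, gamma} and cap y at gamma.

    values:  dict variable -> LP value in [0, 1]
    weights: dict variable -> objective coefficient
    y:       dict (item, agent) -> LP value
    m:       number of items
    """
    threshold = 1.0 / m ** 2
    buckets = {}
    for var, val in values.items():
        if val < threshold:
            continue  # small variables are set to zero
        # Bucket b holds values in (2^-(b+1), 2^-b], so values in one
        # bucket differ by less than a factor of two.
        b = int(math.floor(-math.log2(val)))
        buckets.setdefault(b, []).append(var)
    if not buckets:
        return 0.0, {var: 0.0 for var in values}, {key: 0.0 for key in y}
    # Keep the bucket with the largest contribution to the objective.
    best = max(buckets,
               key=lambda b: sum(weights[v] * values[v] for v in buckets[b]))
    chosen = set(buckets[best])
    gamma = min(values[v] for v in chosen)
    rounded = {var: (gamma if var in chosen else 0.0) for var in values}
    capped_y = {key: min(val, gamma) for key, val in y.items()}
    return gamma, rounded, capped_y
```

Because all surviving values lie in \([1/m^{2}, 1]\), the bucket index ranges over O(log m) values, which is where the logarithmic loss comes from.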

Note that after the processing according to Lemma 4 there might exist i, j, k, and l such that y(i, k) and β(i, j, l) both equal γ, but α(i, j, k) equals 0. In this case we could increase the value of the solution by setting α(i, j, k) to γ but this is not necessary for our rounding procedure as it ignores both the α as well as the β values.

We now present the rounding scheme for the LP (see Algorithm 2). Here, the set \(W_{i}\) denotes the set of agents that have not yet been assigned any item when the rounding scheme enters the For loop for item i (see Step 3). Note that the sets \(T_{i}\) might overlap, but these conflicts are resolved in Line 8 by intersecting \(T_{i}\) with \(W_{i}\), which is disjoint from all previous sets \(T_{i^{\prime }}\), i′ < i.
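The listing of Algorithm 2 is not reproduced in the text, so the sketch below is inferred from how the proofs of Lemmas 5 to 7 use it: a threshold \(\eta_i\) drawn uniformly from [0, 1], a round that is active only if \(\eta_i \leq \gamma/6\), and inclusion of agent j in \(T_i\) with probability y(i, j)/γ. These details, and the name `round_lp`, are assumptions rather than the authors' exact pseudocode.

```python
import random


def round_lp(agents, items, y, gamma, rng=None):
    """Randomized rounding sketch; returns a partial map agent -> item.

    y[(i, j)] <= gamma are the preprocessed LP values.
    """
    if rng is None:
        rng = random.Random()
    assignment = {}
    if gamma <= 0:
        return assignment
    for i in items:
        # W_i: agents without an item when the loop reaches item i.
        unassigned = [j for j in agents if j not in assignment]
        eta = rng.random()
        if eta > gamma / 6:
            continue  # T_i stays empty in this round
        # Each agent joins T_i independently with probability y(i, j) / gamma.
        t_i = {j for j in agents
               if rng.random() < y.get((i, j), 0.0) / gamma}
        # Conflicts are resolved by keeping only T_i intersected with W_i.
        for j in unassigned:
            if j in t_i:
                assignment[j] = i
    return assignment
```

Under these assumptions an agent j lands in \(T_i\) with probability \((\gamma/6) \cdot y(i,j)/\gamma = y(i,j)/6\), which matches the expectation used in the proof of Lemma 5. Agents left without an item can be given an arbitrary one without decreasing the welfare.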

Lemma 5

For all t ∈ V and all i ∈ I, we have \(\mathbf {P}[t \in W_{i}] \geq 5/6\). Thus, \(\mathbf {P}[\{t_{1}, t_{2}, t_{3}\} \subseteq W_{i}] \geq 1/2\) for all \(t_{1}, t_{2}, t_{3} \in V\).

Proof

Fix any node t ∈ V and any item i ∈ I, and consider an indicator random variable \({\Gamma }_{i^{\prime }t}\) that is set to one iff \(t \in T_{i^{\prime }}\). It is easy to verify that \(\mathbf {E}[{\Gamma }_{i^{\prime }t}] = y(i^{\prime },t)/6\) for all items i ′ ∈ I. By constraint 7 and linearity of expectation, we thus have: \( \mathbf {E}[{\sum }_{i^{\prime } < i} {\Gamma }_{i't}] = {\sum }_{i^{\prime } < i} y(i^{\prime },t)/6 \leq 1/6 \). Applying Markov’s inequality, we get \( \mathbf {P}[{\sum }_{i^{\prime } < i} {\Gamma }_{i't} = 0] \geq 5/6 \). In other words, with probability at least 5/6, we have that \(t \notin T_{i^{\prime }}\) for all i ′ < i. Under this event, we must have t ∈ W i .

We have \(\mathbf {P}[t \notin W_{i}] \leq 1/6\) for all \(t \in \{t_{1}, t_{2}, t_{3}\}\), and thus \(\mathbf {P}[\{t_{1}, t_{2}, t_{3}\} \subseteq W_{i}] \geq 1/2\) follows from applying the union bound over these three events. □
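The two probabilistic steps of this proof can be written out explicitly. The block below is a worked version of the Markov and union-bound arguments, using that the sum of the indicators is a nonnegative integer.

```latex
\begin{align*}
\mathbf{P}\Big[\sum_{i' < i} \Gamma_{i't} \geq 1\Big]
  &\leq \mathbf{E}\Big[\sum_{i' < i} \Gamma_{i't}\Big] \leq \frac{1}{6}
  && \text{(Markov's inequality)}, \\
\mathbf{P}\big[\{t_1, t_2, t_3\} \not\subseteq W_i\big]
  &\leq \sum_{r=1}^{3} \mathbf{P}[t_r \notin W_i] \leq 3 \cdot \frac{1}{6} = \frac{1}{2}
  && \text{(union bound)}.
\end{align*}
```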

In the first step, when we find a feasible solution to the LP in accordance with Lemma 4, we lose a factor of O(log m) in the objective. Below, we will show that the remaining steps in the rounding scheme result in a loss of at most a constant factor in the approximation ratio.

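For all items i ∈ I, nodes j ∈ V, and nodes \(k \in {F_{j}^{1}}\), \(l \in {F_{j}^{2}}\), we define the random variables X(i, j, k) and Y(i, j, l). Their values are determined by the outcome \(\mathcal {A}\) of our randomized rounding.

\[ X(i,j,k) = \begin{cases} 1 & \text{if } \mathcal {A}(j) = \mathcal {A}(k) = i, \\ 0 & \text{otherwise,} \end{cases} \qquad Y(i,j,l) = \begin{cases} 1 & \text{if } \mathcal {A}(j) = \mathcal {A}(l) = i \text{ and } \mathcal {A}(k) = i \text{ for some } k \in {F_{j}^{1}} \cap {F_{l}^{1}}, \\ 0 & \text{otherwise.} \end{cases} \]

Now, the valuation of any agent j ∈ V from the (random) assignment \(\mathcal {A}\) is:

\[ u_{j}(\mathcal {A}) = \sum _{i \in I} \lambda _{ij} \cdot \left ( \sum _{k \in {F_{j}^{1}}} X(i,j,k) + \sum _{l \in {F_{j}^{2}}} Y(i,j,l) \right ). \]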

We will analyze the expected contribution of the rounding scheme to each term in the LP-objective. Towards this end, we prove the following lemmas.

Lemma 6

For all \(i \in I, j \in V, k \in {F_{j}^{1}}\) , we have \(\mathbf {E}_{\mathcal {A}}[X(i,j,k)] \geq \delta \cdot \alpha (i,j,k)\) , where δ > 0 is a sufficiently small constant.

Proof

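Fix an item i ∈ I, a node j ∈ V and a node \(k \in {F_{j}^{1}}\). By Lemma 4 we have that α(i, j, k) ∈ {0, γ} and y(i, j) ≤ γ, y(i, k) ≤ γ. If α(i, j, k) = 0 the lemma is trivially true. Otherwise suppose for the rest of the proof that α(i, j, k) = γ. From constraint (6) it follows that also y(i, j) = y(i, k) = γ. Now, we have:

\[ \mathbf {E}_{\mathcal {A}}[X(i,j,k)] = \mathbf {P}[j,k \in W_{i} \cap T_{i}] = \mathbf {P}[j,k \in W_{i}] \cdot \mathbf {P}[\eta _{i} \leq \gamma /6 \, | \, j,k \in W_{i}] \cdot \mathbf {P}[j,k \in T_{i} \, | \, (\eta _{i} \leq \gamma /6) \wedge (j,k \in W_{i})]. \]

Now we can use the following (in)equalities: \(\mathbf {P}[j,k \in W_{i}] \geq 1/2\) by Lemma 5; \(\mathbf {P}[\eta _{i} \leq \gamma /6 \, | \, j,k \in W_{i}] = \gamma /6\) as \(\eta _{i}\) is independent of \(W_{i}\); and \(\mathbf {P}[j,k \in T_{i} \, | \, (\eta _{i} \leq \gamma /6) \wedge (j,k \in W_{i})] = 1\) as y(i, j) = y(i, k) = γ and thus in the event that \(\eta _{i} \leq \gamma /6\) the algorithm adds j, k to \(T_{i}\) with probability 1. Hence \(\mathbf {E}_{\mathcal {A}}[X(i,j,k)] \geq \gamma /12 = \delta \cdot \alpha (i,j,k)\) for δ = 1/12.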

□
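Spelled out, the three (in)equalities listed in the proof of Lemma 6 multiply to the bound below. This is a reconstruction under the assumption that X(i, j, k) is the indicator of the event that both j and k are placed in \(T_{i}\); the explicit value δ = 1/12 is our reading of the argument and is not a constant named in the text.

$$\mathbf{P}[X(i,j,k) = 1] \geq \mathbf{P}[j,k \in W_{i}] \cdot \mathbf{P}[\eta_{i} \leq \gamma/6 \mid j,k \in W_{i}] \cdot \mathbf{P}[j,k \in T_{i} \mid (\eta_{i} \leq \gamma/6) \wedge (j,k \in W_{i})] \geq \frac{1}{2} \cdot \frac{\gamma}{6} \cdot 1 = \frac{\gamma}{12} = \frac{1}{12} \cdot \alpha(i,j,k) $$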

Lemma 7

For all \(i \in I, j \in V, l \in {F_{j}^{2}}\) , we have \(\mathbf {E}_{\mathcal {A}}[Y(i,j,l)] \geq \delta \cdot \beta (i,j,l)\) , where δ is a sufficiently small constant.

Proof

Fix an item i ∈ I, a node j ∈ V and a node \(l \in {F_{j}^{2}}\). By Lemma 4 we have that β(i, j, l) ∈ {0, γ} and y(i, j) ≤ γ, y(i, l) ≤ γ. If β(i, j, l) = 0 the lemma is trivially true. Otherwise suppose for the rest of the proof that β(i, j, l) = γ which, given constraint 4, implies that also y(i, j) = y(i, l) = γ.

Let \(\mathcal {E}_{i}\) be the event that η i ≤ γ/6 (see Steps 5 & 6 in Algorithm 2). Let Z(i, k) be an indicator random variable that is set to one iff node \(k \in {F_{j}^{1}} \cap {F_{l}^{1}}\) is included in the set T i by our rounding scheme (see Step 7 in Algorithm 2). We have:

Thus, conditioned on the event \(\mathcal {E}_{i}\), the expected number of common neighbors of j and l who are included in the set T i is given by

The above inequality is by constraint (5) of the LP. Note that conditioned on the event \(\mathcal {E}_{i}\), the random variables Z(i, k) are mutually independent. Thus, applying Chernoff bound on (11), we infer that with constant probability, at least one common neighbor of j and l will be included in the set T i . To be more precise, define \(T_{i,j,l} = T_{i} \cap {F_{j}^{1}} \cap {F_{l}^{1}}\). For some sufficiently small constant δ 1, we have:

Let \(\mathcal {E}_{i,j,l}\) be the event that the following two conditions hold simultaneously: (a) \(T_{i,j,l} \neq \emptyset \), and (b) the nodes j, l, and an arbitrary node from \(T_{i,j,l}\) are included in \(W_{i}\). Now, (12) and Lemma 5 imply that \( \mathbf {P}[\mathcal {E}_{i,j,l} \, | \, \mathcal {E}_{i}] \geq \delta _{2} \) for \(\delta _{2} = \delta _{1}/2\). Putting all these observations together, we obtain that \(\mathbf {P}[Y(i,j,l) = 1] = \mathbf {P}[\mathcal {E}_{i}] \cdot \mathbf {P}[\mathcal {E}_{i,j,l} \, | \, \mathcal {E}_{i}] \cdot \mathbf {P}[ j,l \in T_{i} \, | \, \mathcal {E}_{i,j,l} \cap \mathcal {E}_{i}] \geq \gamma /6 \cdot \delta _{2} \cdot 1 = \delta \cdot \gamma = \delta \cdot \beta (i,j,l)\) for \(\delta = \delta _{2}/6\). □

Theorem 3

The rounding scheme in Algorithm 2 gives an O(log m)-approximation for social welfare under 2-hop, linear externalities.

Proof

In the first step, when we find a feasible solution to the LP in accordance with Lemma 4, we lose a factor of O(log m) in the objective. At the end of the remaining steps, the expected valuation of an agent j ∈ V is given by:

The first equality follows from linearity of expectation, while the second equality follows from Lemma 6 and Lemma 7. Thus, the expected valuation of any agent in V is within a constant factor of the fractional valuation of the same agent under the feasible solution to the LP obtained at the end of Step 1 (see Algorithm 2). Summing over all the agents in V, we get the theorem. □

We can generalize the above approach to the following setting: Each user j is given an integer \(c_{j}\) and can be assigned up to \(c_{j}\) different items (each at most once). The valuation of an agent j for such an assignment \(\mathcal {A}\) is then given by \(u_{j}(\mathcal {A},G) = {\sum }_{i \in \mathcal {A}(j)}\lambda _{i,j} \cdot f_{i,j}(|S_{i,j}(\mathcal {A},G)|)\), with unchanged definitions of \(\lambda _{i,j}\), \(f_{i,j}\) and \(S_{i,j}(\mathcal {A},G)\). To generalize the LP we replace, for each node j, the constraint \({\sum }_{i} y(i,j) \leq 1\) by the constraint \({\sum }_{i} y(i,j) \leq c_{j}\), add the constraint y(i, j) ≤ 1 for each item i and node j, and adapt the proof of Lemma 5. We give the modified proof in Appendix A.

Finally, we show NP-hardness for linear externalities, not only in the 2-hop setting but also for 1-hop. The proof is provided in Appendix C.Footnote 3

Theorem 4

Maximizing social welfare under linear externalities is NP-hard.

Moreover, if we consider 2-hop externality functions we get APX-hardness for linear externalities. The proof is provided in Appendix E.

Theorem 5

Maximizing social welfare with linear externalities in a 2-neighborhood is APX-hard even for the case with 2 items, i.e., it is NP-hard to approximate better than a factor of \(\frac {143}{144}\).

5 Constant Factor Approximation for Step Function Externalities

In this section, our goal is to maximize social welfare when agents have general step function externalities, i.e., an agent needs a certain number of 1- and 2-hop neighbors having the same product to receive externality. We will show that no constant factor approximation is possible unless a bound on the number of 2-neighbors an agent needs for receiving externality is given. We consider the case of 2-step function externalities, where only two neighbors are needed (see Definition 3) and give a \(\frac {5}{18} \cdot (1-1/e)\)-approximation algorithm for this problem. Moreover, as an upper bound we show that there is no polynomial time \(1-\frac {1}{e}+\epsilon \)-approximation algorithm (unless P = NP). Notice that if we consider step functions that just require one neighbor the problem reduces to the 1-hop step function scenario in [7]. However, our algorithm gives a \(\frac {1}{2} \cdot (1-1/e)\)-approximation for this scenario, improving the result in [7].

In the following we assume 2-step function externalities. Let \(G_{V^{\prime }}\) denote the subgraph induced by V ′ ⊆ V. For the rest of this section, the term “triple” will refer to any (unordered) set of three nodes T = {j 1, j 2, j 3} such that G T is connected. Similarly, the term “pair” will refer to any (unordered) set of two nodes {j 1, j 2} that are connected by an edge in E.

We first compute a maximal collection of mutually disjoint triples in the graph G. We denote this collection by \(\mathcal {T}\), and let \(V(\mathcal {T}) = \bigcup _{T \in \mathcal {T}} T \subseteq V\). The graph \(G_{V \setminus V(\mathcal {T})}\), by definition, consists of a mutually disjoint collection of pairs (say \(\mathcal {P}\)) and a set of isolated nodes (say B). We thus have the following lemma.

Lemma 8

In G = (V, E), there is no edge that connects a node j ∈ B with another node in B or with a node belonging to a pair in \(\mathcal {P}\) . Furthermore, there is no edge that connects two nodes j,j ′ belonging to two different pairs \(P, P^{\prime } \in \mathcal {P}\).
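The decomposition into \(\mathcal {T}\), \(\mathcal {P}\) and B can be sketched as follows. This is a minimal illustration and not the paper's own procedure: the function name `decompose`, the greedy order, and the requirement that node labels be sortable are assumptions made here. One pass over the nodes suffices, because every connected triple contains a node with two neighbors inside the triple, and the set of still-free neighbors of a node only shrinks.

```python
def decompose(nodes, edges):
    """Greedily split a graph into disjoint connected triples, pairs, and isolated nodes."""
    adj = {v: set() for v in nodes}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)

    used = set()
    triples = []
    # Try each node as the "center" of a triple: it needs two free neighbors.
    for v in nodes:
        if v in used:
            continue
        free = sorted(u for u in adj[v] if u not in used)
        if len(free) >= 2:
            triple = frozenset((v, free[0], free[1]))
            triples.append(triple)
            used |= triple

    # What is left has maximum degree one: single edges and isolated nodes.
    pairs, isolated = [], []
    for v in nodes:
        if v in used:
            continue
        free = [u for u in adj[v] if u not in used]
        if free:
            pairs.append(frozenset((v, free[0])))
            used |= {v, free[0]}
        else:
            isolated.append(v)
            used.add(v)
    return triples, pairs, isolated
```

On the path 1-2-3-4-5 with an extra isolated node 6, this yields the triple {1, 2, 3}, the pair {4, 5}, and B = {6}, in line with Lemma 8.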

Definition 4

An assignment \(\mathcal {A}\) is consistent iff two agents get the same item whenever they belong to the same triple or the same pair. To be more specific, for all j, j ′ ∈ V, we have that \(\mathcal {A}(j) = \mathcal {A}(j^{\prime })\) if either (a) j, j ′ ∈ T for some triple \(T \in \mathcal {T}\) or (b) \(\{j,j^{\prime }\} \in \mathcal {P}\).

The next lemma shows that by losing a factor of 5/18 in the approximation ratio, we can focus on maximizing the social welfare via a consistent assignment.

Lemma 9

The social welfare from the optimal consistent assignment is at least (5/18) ⋅ Opt, where Opt is the maximum social welfare over all assignments.

The proof of Lemma 9 exploits Algorithm 3 that converts an assignment \(\mathcal {A}^{*}\) that gives maximum social welfare into a consistent assignment \(\mathcal {A}\). First, for each triple \(\{j_{1}, j_{2}, j_{3}\} \in \mathcal {T}\), the algorithm picks one of the items \(\mathcal {A}^{*}(j_{1}), \mathcal {A}^{*}(j_{2}), \mathcal {A}^{*}(j_{3})\) uniformly at random, and assigns that item to all the three agents j 1, j 2, j 3. Second, for each pair \(\{j_{1}, j_{2}\} \in \mathcal {P}\), we consider the items \(\mathcal {A}^{*}(j_{1}), \mathcal {A}^{*}(j_{2})\) and let j 1 be the agent with larger \(u_{j}(\mathcal {A}^{*})\). If there is an adjacent triple that was assigned item \(\mathcal {A}^{*}(j_{1})\), then we assign item \(\mathcal {A}^{*}(j_{1})\) to both agents. Otherwise we assign \(\mathcal {A}^{*}(j_{2})\) to both agents. Finally, the remaining agents (those who are in B) get the same items as in \(\mathcal {A}^{*}\).
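The conversion just described can be sketched in code. This is a hedged illustration of the prose description only, not a transcription of Algorithm 3: the name `make_consistent`, the argument layout (`adj` as neighbor sets, `opt` for \(\mathcal {A}^{*}\), `util` for \(u_{j}(\mathcal {A}^{*})\)), and the choice to sample a triple member uniformly and copy its item are assumptions made here. Sampling a member gives each of the three items probability at least 1/3, which is all the analysis uses.

```python
import random


def make_consistent(adj, opt, util, triples, pairs, rng=random):
    """Turn an assignment `opt` into a consistent one (sketch of the described steps)."""
    assign = dict(opt)  # agents in B keep their item from opt

    # Step 1: every triple copies the item of one uniformly chosen member.
    for triple in triples:
        donor = rng.choice(sorted(triple))
        for j in triple:
            assign[j] = opt[donor]

    # Step 2: every pair prefers the item of its higher-utility agent j1,
    # but only if some adjacent triple ended up with that item.
    for pair in pairs:
        j1, j2 = sorted(pair, key=lambda j: util[j], reverse=True)
        supported = any(
            assign[next(iter(triple))] == opt[j1]
            for triple in triples
            if any(adj[j] & triple for j in pair)
        )
        item = opt[j1] if supported else opt[j2]
        assign[j1] = assign[j2] = item
    return assign
```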

Proof (Lemma 9)

Let \(\mathcal {A}^{*}\) be an assignment (not necessarily consistent) that gives maximum social welfare. It is easy to see that Algorithm 3 returns a consistent assignment \(\mathcal {A}\). In the following we show that also \(\mathbf {E}\left [{\sum }_{j\in V} u_{j}(\mathcal {A})\right ] \geq 5/18 \cdot {\sum }_{j\in V} u_{j}(\mathcal {A}^{*})\). To this end we will show that \(\mathbf {E}\left [{\sum }_{j\in T} u_{j}(\mathcal {A})\right ] \geq 5/18 \cdot {\sum }_{j\in T} u_{j}(\mathcal {A}^{*})\) for each tuple \(T \in \mathcal {T} \cup \mathcal {P} \cup B\); we consider the following three cases.

- Case 1 (T ∈ B):

Let T = {j} and \(\mathcal {A}^{*}(j) = i\). Since j ∈ B, it always gets the same item under \(\mathcal {A}\), i.e., \(\mathcal {A}(j) = i\). Now, if \(u_{j}(\mathcal {A}^{*}) = 0\), then the claim is trivially true. Otherwise it must be the case that \(\mathcal {A}^{*}(j^{\prime }) = i\) for some neighbor j ′ of j. Since j ∈ B, this neighbor j ′ must be part of some triple \(T \in \mathcal {T}\) (see Lemma 8). With probability at least 1/3 all the three nodes in T are assigned item i under \(\mathcal {A}\) and at least two nodes of T are in the 2-hop neighborhood of j. In that event j gets the same valuation as in \(\mathcal {A}^{*}\), and we have that \(\mathbf {E}[u_{j}(\mathcal {A})] \geq (1/3) \cdot u_{j}(\mathcal {A}^{*})\).

- Case 2 (\(T \in \mathcal {P}\)):

Consider the pair T = {j 1, j 2} with \(\mathcal {A}^{*}(j_{1}) = i\) and \(\mathcal {A}^{*}(j_{2}) = i^{\prime }\). If \(u_{j_{1}}(\mathcal {A}^{*}) = 0\) or \(u_{j_{2}}(\mathcal {A}^{*}) = 0\), we can exclude this node from our analysis and proceed as in case 1. Otherwise consider two cases.

- Assume i = i ′. Then it must be the case that there exists a node j ′∉T with \(\mathcal {A}^{*}(j^{\prime }) = i\) such that j ′ is either a neighbor of j 1 or a neighbor of j 2. Since \(\{j_{1},j_{2}\} \in \mathcal {P}\), this agent j ′ must be part of some triple \(T^{\prime } \in \mathcal {T}\) (see Lemma 8). Consider the event that all the three nodes in T ′ are assigned item i under \(\mathcal {A}\); given this event we have \(u_{j}(\mathcal {A}) = u_{j}(\mathcal {A}^{*})\) for j ∈ {j 1, j 2}. It follows that \(\mathbf {E}[u_{j}(\mathcal {A})] \geq (1/3) \cdot u_{j}(\mathcal {A}^{*})\).

- Assume i≠i ′ and w.l.o.g. \(u_{j_{1}}(\mathcal {A}^{*}) \geq u_{j_{2}}(\mathcal {A}^{*})\). Then there exists a neighbor \(j_{1}^{\prime } \not \in T\) of j 1 with \(\mathcal {A}^{*}(j_{1}^{\prime }) = i\) and a neighbor \(j_{2}^{\prime } \not \in T\) of j 2 with \(\mathcal {A}^{*}(j_{2}^{\prime }) = i^{\prime }\). Since \(\{j_{1},j_{2}\} \in \mathcal {P}\), these agents must be part of some triples \(T_{1}, T_{2} \in \mathcal {T}\) (see Lemma 8). Note that T 1 and T 2 can be identical. Let \(\mathcal {E}_{1}\) be the event that all the three nodes in T 1 are assigned item i under \(\mathcal {A}\), and \(\mathcal {E}_{2}\) be the event that all the three nodes in T 2 are assigned item i ′ under \(\mathcal {A}\). Let \(\mathcal {E}^{c}_{1}\) be the complementary event of \(\mathcal {E}_{1}\); then we have:

$$\mathbf{E}\left[{\sum}_{j\in T} u_{j}(\mathcal{A})\right] \geq \mathbf{P}[\mathcal{E}_{1}] \cdot u_{j_{1}}(\mathcal{A}^{*}) + \mathbf{P}[\mathcal{E}_{2} \cap \mathcal{E}^{c}_{1}] \cdot u_{j_{2}}(\mathcal{A}^{*}) $$

Notice that the events \(\mathcal {E}_{2}\) and \(\mathcal {E}^{c}_{1}\) are not necessarily independent (there might be a triple that contains agents \(j_{1}^{*},j_{2}^{*}\) with \(\mathcal {A}^{*}(j_{1}^{*})=i\) and \(\mathcal {A}^{*}(j_{2}^{*})=i^{\prime }\)), but can be only positively correlated. Thus, we can use \(\mathbf {P}[\mathcal {E}_{2}] (1 - \mathbf {P}[{\mathcal {E}}_{1}])\) to lower bound \(\mathbf {P}[\mathcal {E}_{2} \cap \mathcal {E}^{c}_{1}]\).

$$\begin{array}{@{}rcl@{}} \mathbf{E}\left[{\sum}_{j\in T} u_{j}(\mathcal{A})\right] &\geq& \mathbf{P}[\mathcal{E}_{1}] \cdot u_{j_{1}}(\mathcal{A}^{*}) + \mathbf{P}[\mathcal{E}_{2}] (1 - \mathbf{P}[{\mathcal{E}}_{1}]) \cdot u_{j_{2}}(\mathcal{A}^{*})\\ &\geq& 1/3 \cdot u_{j_{1}}(\mathcal{A}^{*}) + 2/9 \cdot u_{j_{2}}(\mathcal{A}^{*}) \end{array} $$

For the last inequality we use \(\mathbf {P}[\mathcal {E}_{1}] \geq 1/3\), \(\mathbf {P}[\mathcal {E}_{2}] \geq 1/3\) and \(u_{j_{1}}(\mathcal {A}^{*}) \geq u_{j_{2}}(\mathcal {A}^{*})\). It then follows that \(\mathbf {E}[{\sum }_{j\in T} u_{j}(\mathcal {A})] \geq 5/18 \cdot (u_{j_{1}}(\mathcal {A}^{*}) + u_{j_{2}}(\mathcal {A}^{*}))= 5/18 \cdot {\sum }_{j\in T} u_{j}(\mathcal {A}^{*})\) (again by \(u_{j_{1}}(\mathcal {A}^{*}) \geq u_{j_{2}}(\mathcal {A}^{*})\)).

- Case 3 (\(T \in \mathcal {T}\)):

Consider the triple T = {j 1, j 2, j 3}. For each j ∈ T, with probability at least 1/3, all three nodes are assigned item \(\mathcal {A}^{*}(j)\) under \(\mathcal {A}\), and in this event we have \(u_{j}(\mathcal {A}) \geq u_{j}(\mathcal {A}^{*})\). It follows that \(\mathbf {E}[{\sum }_{j\in T} u_{j}(\mathcal {A})] \geq 1/3 \cdot {\sum }_{j\in T} u_{j}(\mathcal {A}^{*})\).

Now, we take the sum of the above inequalities \(\mathbf {E}[{\sum }_{j\in T} u_{j}(\mathcal {A})] \geq 5/18 \cdot {\sum }_{j\in T} u_{j}(\mathcal {A}^{*})\) for each tuple \(T \in \mathcal {T} \cup \mathcal {P} \cup B\), and by linearity of expectation infer that the expected social welfare under the consistent assignment \(\mathcal {A}\) is within a factor of 5/18 of the optimal social welfare. This concludes the proof of the lemma. □
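The arithmetic behind the constant 5/18 in the second subcase of Case 2 can be checked directly. Write \(u_{1} = u_{j_{1}}(\mathcal {A}^{*})\), \(u_{2} = u_{j_{2}}(\mathcal {A}^{*})\), \(p_{1} = \mathbf {P}[\mathcal {E}_{1}]\) and \(p_{2} = \mathbf {P}[\mathcal {E}_{2}]\); this shorthand is introduced here only for the check. Using \(p_{2} \geq 1/3\), the bound \(p_{1} u_{1} + p_{2}(1-p_{1}) u_{2}\) is at least \(\frac {1}{3} u_{2} + p_{1}(u_{1} - \frac {1}{3} u_{2})\), which is nondecreasing in \(p_{1}\) because \(u_{1} \geq u_{2}\); setting \(p_{1} = 1/3\) gives \(\frac {1}{3} u_{1} + \frac {2}{9} u_{2}\). Finally:

$$\frac{1}{3} u_{1} + \frac{2}{9} u_{2} - \frac{5}{18}(u_{1} + u_{2}) = \frac{1}{18}(u_{1} - u_{2}) \geq 0 $$

Cases 1 and 3 give the factor 1/3, which is larger than 5/18, so 5/18 is the binding constant.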

Next, we will give a (1−1/e)-approximation algorithm for finding a consistent assignment of items that maximizes the social welfare. Along with Lemma 9, this will imply the main result of this section (see Theorem 6).

We use the term “resource” to refer to either a pair \(P \in \mathcal {P}\) or an agent j ∈ B. Let \(\mathcal {R} = \mathcal {P} \cup B\) denote the set of all resources. We say that a resource \(r \in \mathcal {R}\) neighbors a triple \(T \in \mathcal {T}\) iff in the graph G = (V, E) either (a) \(r = \{j,j^{\prime }\} \in \mathcal {P}\) and some node in {j, j ′} is adjacent to some node in T, or (b) r = j ∈ B and j is adjacent to some node in T. We slightly abuse the notation (see Section 2) and for a triple \(T \in \mathcal {T}\) let \(N(T) \subseteq \mathcal {R}\) denote the set of resources that are neighbors of T.

By definition, every consistent assignment ensures that if two agents belong to the same triple in \(\mathcal {T}\) (resp. the same pair in \(\mathcal {P}\)), then both of them get the same item. We say that the item is assigned to a triple (resp. resource). Note that the triples do not need externality from outside. To be more specific, the contribution of a triple \(T \in \mathcal {T}\) to the social welfare is always equal to \({\sum }_{j \in T} \lambda _{i,j}\), where i is the item assigned to T. Resources, however, do need outside externality, which by Lemma 8 can come only from a triple in \(\mathcal {T}\).

Lemma 10

In a consistent assignment, if a resource \(r \in \mathcal {R}\) makes a positive contribution to the social welfare, then it neighbors some triple \(T_{r} \in \mathcal {T}\) , and both the resource r and the triple T r receive the same item.

Proof

If a resource contributes a nonzero amount to the social welfare, then it must receive nonzero externality from the assignment. By Lemma 8, such externality can come only from a triple in \(\mathcal {T}\). The lemma follows. □

Thus, given a consistent assignment \(\mathcal {A}\) consider the following mapping \(T_{\mathcal {A}}(r)\) of a resource \(r \in \mathcal {R}\) to triples in \(\mathcal {T}\) in accordance with Lemma 10: If the resource r makes zero contribution towards the social welfare (a case not covered by the lemma), then we let \(T_{\mathcal {A}}(r)\) be any arbitrary triple from \(\mathcal {T}\). Otherwise \(T_{\mathcal {A}}(r)\) denotes an (arbitrary) neighboring triple of \(\mathcal {T}\) that receives the same item as r. We say that the triple \(T_{\mathcal {A}}(r)\) claims the resource r.

For ease of exposition, let λ i, r (T) be the valuation of the resource r when both the resource and the triple T that claims it get item i ∈ I, i.e.,

Now, any consistent assignment \(\mathcal {A}\) can be interpreted as follows. Under such an assignment, every triple \(T \in \mathcal {T}\) claims the subset of the resources \(S_{T} = \{r \in \mathcal {R} \mid T_{\mathcal {A}}(r) = T\}\); the subsets corresponding to different triples being mutually exclusive. A triple T and the resources in S T all get the same item (say i ∈ I). The valuation obtained from them is \(u_{T}(S_{T}, i) = {\sum }_{j \in T} \lambda _{i,j} + {\sum }_{r \in S_{T}} \lambda _{i,r}(T).\)

If our goal is to maximize the social welfare, then, naturally, for every triple T, we will pick the item that maximizes \(u_{T}(S_{T}, i)\), thereby extracting a valuation of \(u_{T}(S_{T}) = \max _{i} u_{T}(S_{T}, i)\). The next lemma shows that this function is fractionally subadditive. Footnote 4

Lemma 11

The function u T (S T ) is fractionally subadditive in S T .

Proof

Note that the function u T (S T , i) is additive in S T , for every item i ∈ I. The lemma follows from the fact that the maximum of a set of linear functions is fractionally sub-additive. □

The preceding discussion shows that the problem of computing a consistent assignment for welfare maximization is equivalent to the following setting. We have a collection of triples \(\mathcal {T}\) and a set of resources \(\mathcal {R}\). We will distribute these resources amongst the triples, i.e., every triple T will get a subset \(S_{T} \subseteq \mathcal {R}\), and these subsets will be mutually exclusive. The goal is to maximize the sum \({\sum }_{T \in \mathcal {T}} u_{T}(S_{T})\), where the functions \(u_{T}(\cdot )\) are fractionally subadditive. By a celebrated result of Feige [14], we can get a (1−1/e)-approximation algorithm for this problem if we can implement the following subroutine (called demand oracle) in polynomial time: Each resource r is given a “cost” p(r) and we need to determine for each triple T a set of resources \(S^{*}_{T}\) that maximizes \(u_{T}(S_{T})-{\sum }_{r\in S_{T}} p(r)\) over all sets \(S_{T}\). Such a demand oracle can be implemented in polynomial time using a simple greedy algorithm for each T and each item i: Add a resource r to \(S^{*}_{T}\) iff \(\lambda _{i,r}(T) > p(r)\); among the resulting candidate sets, one per item, keep the one with the largest value. The result of the approximation algorithm assigns each triple T a subset \(S_{T}\) and we then pick the item i that maximizes \(u_{T}(S_{T}, i)\) over all items i. Together with Lemma 9, this implies the theorem stated below.
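The greedy demand oracle for a single triple T can be sketched as below. The function name `demand_oracle` and the dictionary layout are assumptions made for illustration: `triple_value[i]` stands for \({\sum }_{j \in T} \lambda _{i,j}\), `resource_value[i][r]` stands for \(\lambda _{i,r}(T)\) (resources that are absent are treated as contributing nothing), and `price[r]` stands for p(r).

```python
def demand_oracle(triple_value, resource_value, price):
    """Return (surplus, item, resources) maximizing u_T(S, i) minus the prices of S."""
    best = None
    for item, base in triple_value.items():
        values = resource_value.get(item, {})
        # For a fixed item u_T(., i) is additive, so each resource is judged on its own.
        chosen = {r for r, v in values.items() if v > price.get(r, 0)}
        surplus = base + sum(values[r] - price.get(r, 0) for r in chosen)
        if best is None or surplus > best[0]:
            best = (surplus, item, chosen)
    return best
```

Because the per-item objective is additive in the resources, the threshold test is optimal for each item, and taking the best item handles the outer maximum in \(u_{T}(S_{T}) = \max _{i} u_{T}(S_{T}, i)\). The running time is linear in the number of (item, resource) entries.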

Theorem 6

We can get a polynomial-time \(\frac {5}{18} \cdot (1-1/e)\) -approximation algorithm for the problem of maximizing social welfare under 2-step function externalities.

The algorithm can be easily adapted for 1-hop step function externalities. The difference is that instead of computing a maximal collection \(\mathcal {T}\) of mutually disjoint triples, one computes a maximal collection of mutually disjoint pairs.

Theorem 7

We can get a polynomial-time \(\frac {1}{2} \cdot (1-1/e)\) -approximation algorithm for maximizing social welfare under 1-step function externalities.

In Appendix D we further generalize the above theorems to s-step functions in s-hop neighborhoods for s ≥ 2.

Finally, we present our hardness results for step functions. By a reduction from Max Independent Set (see Appendix E) we can show that, for unbounded s, there is no constant factor approximation. The main idea is that we modify the graph such that we replace each edge by a path of length three and each of the original nodes j wants a different item, while j can only get positive externalities when having a support of 2δ j (δ j the node degree of j). The valuations of the newly introduced nodes are set to 0. That is, nodes that are adjacent in the original graph have two common nodes in the 2-neighborhood in the constructed graph, want different items, need all their neighbors as support, and thus only one of them can have positive valuation.
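The graph modification used in this reduction can be illustrated with a short sketch. Only the structural part is shown (replacing each edge by a path of length three and setting the required support to \(2\delta _{j}\)); the names `subdivide` and `two_neighborhood` and the tuple labels for the auxiliary nodes are assumptions made here, and items and valuations are omitted.

```python
def subdivide(nodes, edges):
    """Replace every edge (u, v) by a path u - a - b - v with two fresh nodes."""
    new_nodes = list(nodes)
    new_edges = []
    degree = {v: 0 for v in nodes}
    for u, v in edges:
        a, b = ("aux", u, v, 0), ("aux", u, v, 1)
        new_nodes += [a, b]
        new_edges += [(u, a), (a, b), (b, v)]
        degree[u] += 1
        degree[v] += 1
    # Each original node needs its whole 2-neighborhood as support.
    threshold = {v: 2 * degree[v] for v in nodes}
    return new_nodes, new_edges, threshold


def two_neighborhood(node, edges):
    """All nodes at distance one or two from `node`."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    first = adj.get(node, set())
    second = set().union(*(adj[u] for u in first))
    return (first | second) - {node}
```

For a triangle, every original node ends up with exactly four nodes in its 2-neighborhood, matching the threshold \(2\delta _{j} = 4\), and two originally adjacent nodes share exactly the two auxiliary nodes on their common path.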

Theorem 8

For any ε > 0 the problem of maximizing social welfare under arbitrary s-step function externalities is not approximable within \(O(n^{1/4-\epsilon })\) unless NP = P, and not approximable within \(O(n^{1/2-\epsilon })\) unless NP = ZPP.

Second, we show that maximizing social welfare under 2-step function externalities is APX-hard and thus no PTAS can exist. This is by a reduction from Max Coverage. The APX-hardness for the setting with only two items is by a reduction from MAX-SAT.

Theorem 9

The problem of maximizing social welfare under 2-step function externalities is APX-hard, in particular, there is no polynomial time \(1-\frac {1}{e}+\epsilon \) -approximation algorithm (unless P = NP).

Furthermore, the problem remains APX-hard (although with a larger constant) even if there are only two items.

Theorem 10

Maximizing social welfare with s-step function externalities in a p-neighborhood (p ≥ 1) is APX-hard even for the case with 2 items, i.e., it is NP-hard to approximate better than a factor of \(\frac {23}{24}\).

6 Conclusion

In this work we considered friends-of-friends externalities in a setting where multiple items are assigned to unit-demand agents and one aims to maximize social welfare. We considered three kinds of externality functions, i.e., concave, linear, and step functions, and gave approximation as well as hardness of approximation results. In particular, (1) for concave externalities we gave an \(O(\sqrt {n})\)-approximation algorithm, (2) for linear externalities we gave an O(log m)-approximation algorithm, and (3) for 2-step function externalities we gave a \(\frac {5}{18}(1-1/e)\)-approximation algorithm. We complemented these algorithms by hardness results showing that maximizing welfare for any of these externalities is APX-hard. Moreover, we improved results for the 1-hop setting, by (a) an improved algorithm for 1-hop step function externalities and (b) a correction and improvements of the complexity results for 1-hop externalities.

We identify the following directions for future research. First, there are gaps between the approximation ratios of our algorithm and the hardness results we provided. Narrowing these gaps by better algorithms and stronger upper bounds is an obvious direction for future research. Second, beyond the types of externalities studied in this work there are more general notions of externalities, like submodular externalities, that received attention in the 1-hop setting [7]. Another question for future research is how to extend our results to these types of externalities. Finally, friends-of-friends externalities are also relevant for different problem settings with network externalities. For instance one can consider strategic agents and the problem of maximizing social welfare in an incentive-compatible way [7], or the problem of revenue maximization when selling a product [21].

Notes

This is also true for the results in [7]. In both results, the assumption is that the valuation functions are additive over the items.

A function is fractionally subadditive if it can be expressed as the maximum of additive functions [14].

Notice that all these observations hold for both, our definition of linear externalities and the slightly different definition of linear externalities in [7].

References

Akhlaghpour, H., Ghodsi, M., Haghpanah, N., Mirrokni, V.S., Mahini, H., Nikzad, A.: Optimal Iterative Pricing over Social Networks. In: 6th WINE, pp. 415–423 (2010)

Alon, N., Feldman, M., Procaccia, A.D., Tennenholtz, M.: A note on competitive diffusion through social networks. Inf. Process. Lett. 110(6), 221–225 (2010)

Anari, N., Ehsani, S., Ghodsi, M., Haghpanah, N., Immorlica, N., Mahini, H., Mirrokni, V.S.: Equilibrium pricing with positive externalities. Theor. Comput. Sci. 476, 1–15 (2013)

Anshelevich, E., Bhardwaj, O., Usher, M.: Friend of my friend: Network formation with two-hop benefit. In: Vöcking, B. (ed.) Algorithmic Game Theory - 6th International Symposium, SAGT 2013, Aachen, Germany, October 21-23, 2013. Proceedings, volume 8146 of Lecture Notes in Computer Science, pp. 62–73. Springer (2013)

Arthur, D., Motwani, R., Sharma, A., Ying, X.: Pricing Strategies for Viral Marketing on Social Networks. In: 5th WINE, pp. 101–112 (2009)

Bensaid, B., Lesne, J.-P.: Dynamic monopoly pricing with network externalities. Int. J. of Industrial Organization 14(6), 837–855 (1996)

Bhalgat, A., Gollapudi, S., Munagala, K.: Mechanisms and Allocations with Positive Network Externalities. In: 13th EC, pp. 179–196 (2012)

Bhattacharya, S., Dvořák, W., Henzinger, M., Starnberger, M.: Welfare Maximization with Friends-of-Friends Network Externalities. In: Mayr, E.W., Ollinger, N. (eds.) 32nd International Symposium on Theoretical Aspects of Computer Science (STACS 2015), volume 30 of Leibniz International Proceedings in Informatics (LIPIcs), pp. 90–102, Dagstuhl, Germany, 2015. Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik

Bhattacharya, S., Korzhyk, D., Conitzer, V.: Computing a Profit-Maximizing Sequence of Offers to Agents in a Social Network. In: 8th WINE, pp. 482–488 (2012)

Cigler, L., Dvořák, W., Henzinger, M., Starnberger, M.: Limiting price discrimination when selling products with positive network externalities. In: Liu, T.-Y., Qi, Q., Ye, Y. (eds.) Web and Internet Economics - 10th International Conference, WINE 2014, Beijing, China, December 14-17, 2014. Proceedings, volume 8877 of Lecture Notes in Computer Science, pp. 44–57. Springer (2014)

Dobzinski, S., Nisan, N., Schapira, M.: Approximation algorithms for combinatorial auctions with complement-free bidders. Math. Oper. Res. 35(1), 1–13 (2010)

Dubey, P., Garg, R., Meyer, B.D.: Competing for Customers in a Social Network: The Quasi-Linear Case. In: 2nd WINE, pp. 162–173 (2006)

Feige, U.: A threshold of ln n for approximating set cover. J. ACM 45(4), 634–652 (1998)

Feige, U.: On maximizing welfare when utility functions are subadditive. SIAM J. Comput. 39(1), 122–142 (2009)

Feld, S.L.: Why your friends have more friends than you do. American J. of Sociology 96(6), 1464–1477 (1991)

Fotakis, D., Siminelakis, P.: On the Efficiency of Influence-And-Exploit Strategies for Revenue Maximization under Positive Externalities. In: 8th WINE, pp. 270–283 (2012)

Gold, E.M.: Complexity of automaton identification from given data. Inf. Control. 37(3), 302–320 (1978)

Goyal, S., Kearns, M.: Competitive Contagion in Networks. In: 44th STOC, pp. 759–774 (2012)

Haghpanah, N., Immorlica, N., Mirrokni, V.S., Munagala, K.: Optimal auctions with positive network externalities. ACM Trans. Economics and Comput. 1(2), 13:1–13:24 (2013)

Hampton, K.N., Goulet, L.S., Marlow, C., Rainie, L.: Why most Facebook users get more than they give. Pew Internet & American Life Project (2012)

Hartline, J., Mirrokni, V.S., Sundararajan, M.: Optimal Marketing Strategies over Social Networks. In: 17th WWW, pp. 189–198 (2008)

Håstad, J.: Clique is hard to approximate within \(n^{1-\epsilon }\). Electronic Colloquium on Computational Complexity (ECCC), 4(38) (1997)

Håstad, J.: Some optimal inapproximability results. J. ACM 48(4), 798–859 (2001)

He, X., Kempe, D.: Price of Anarchy for the N-Player Competitive Cascade Game with Submodular Activation Functions. In: 9th WINE, pp. 232–248 (2013)

Jackson, M.O., Rogers, B.W.: Meeting strangers and friends of friends: How random are social networks?. Am. Econ. Rev. 97(3), 890–915 (2007)

Kempe, D., Kleinberg, J.M., Tardos, É.: Maximizing the Spread of Influence through a Social Network. In: 9th KDD, pp. 137–146 (2003)

Khuller, S., Moss, A., Naor, J.: The budgeted maximum coverage problem. Inf. Process. Lett. 70(1), 39–45 (1999)

Mirrokni, V.S., Roch, S., Sundararajan, M.: On Fixed-Price Marketing for Goods with Positive Network Externalities. In: 8th WINE, pp. 532–538 (2012)

Seeman, L., Singer, Y.: Adaptive Seeding in Social Networks. In: 54th Annual IEEE Symposium on Foundations of Computer Science, FOCS 2013, 26-29 October, 2013, Berkeley, CA, USA, pp. 459–468. IEEE Computer Society (2013)

Simon, S., Apt, K.R.: Choosing Products in Social Networks. In: 8th WINE, pp. 100–113 (2012)

Takehara, R., Hachimori, M., Maiko, S.: A comment on pure-strategy Nash equilibria in competitive diffusion games. Inf. Process. Lett. 112(3), 59–60 (2012)

Tzoumas, V., Amanatidis, C., Markakis, E.: A Game-Theoretic Analysis of a Competitive Diffusion Process over Social Networks. In: 8th WINE, pp. 1–14 (2012)

Acknowledgments

Open access funding provided by University of Vienna. A preliminary version of this paper has been presented at the 32nd International Symposium on Theoretical Aspects of Computer Science (STACS 2015) [8]. The authors want to thank the anonymous referees of the current paper and the preceding STACS paper for their comments which helped to improve the presentation.

The research leading to these results has received funding from the European Research Council under the European Union’s Seventh Framework Programme (FP/2007-2013) under grant agreement no. 317532 and from the Vienna Science and Technology Fund (WWTF) through project ICT10-002. The authors are also grateful to Parinya Chalermsook for helpful discussions on “hardness of approximation”.

Appendices

Appendix A: Multi-Demand Setting for Linear Externalities

We show how to adapt the proof of Lemma 5 to the more general setting. Recall that each user j can be assigned up to \(c_{j}\) different items (each at most once). The rounding scheme is slightly adapted for the multi-demand setting as follows: the sets \(W_{i}\) are replaced by multi-sets \(W_{i}\) such that \(W_{0}\) contains \(c_{j}\) copies of each j ∈ V; in Step 4, when subtracting a set \(T_{i-1}\), we delete only one copy of each \(j \in T_{i-1}\).

Lemma 12

For every node t ∈ V and every i ∈ I, we have P[t ∈ W i ] ≥ 5/6. Thus, P[{t 1, t 2, t 3} ⊆ W i ] ≥ 1/2 for all t 1, t 2, t 3 ∈ V.

Proof

Fix any node j ∈ V and any item i ∈ I, and consider an indicator random variable \({\Gamma }_{i^{\prime }j}\) that is set to one iff \(j \in T_{i^{\prime }}\). It is easy to check that

for all items i ′ ∈ I. By constraint 7 and linearity of expectation, we thus have:

Applying Markov’s inequality, we get \(\mathbf {P}[{\sum }_{i^{\prime } < i} {\Gamma }_{i^{\prime }j} < c_{j}] \geq 5/6\). In other words, with probability at least 5/6, fewer than \(c_{j}\) items have been assigned to j when item i is processed. Under this event, we must have \(j \in W_{i}\). The lemma follows. □
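For orientation, the Markov step in the proof of Lemma 12 can be written out as follows. This is a reconstruction under two assumptions carried over from the unit-demand proof of Lemma 5 and from the generalized LP: that \(\mathbf {E}[{\Gamma }_{i^{\prime }j}] = y(i^{\prime },j)/6\) still holds, and that the relevant constraint now reads \({\sum }_{i} y(i,j) \leq c_{j}\).

$$\mathbf{E}\left[{\sum}_{i^{\prime} < i} {\Gamma}_{i^{\prime}j}\right] = {\sum}_{i^{\prime} < i} \frac{y(i^{\prime},j)}{6} \leq \frac{c_{j}}{6}, \qquad \mathbf{P}\left[{\sum}_{i^{\prime} < i} {\Gamma}_{i^{\prime}j} \geq c_{j}\right] \leq \frac{c_{j}/6}{c_{j}} = \frac{1}{6} $$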

Appendix B: p-hop Externalities

In this section we generalize our notation for the p-hop case. For p ≥ 2, let the p-hop neighborhood \({F_{j}^{p}}\) and the p-neighborhood \(F_{j}^{\le p}\) be defined as follows.

Then the p-hop support \({N_{j}^{p}}(i, \mathcal {A})\) and the p-support \(S^{p}_{ij}(\mathcal {A},G)\) are defined as

Appendix C: Hardness for Linear Externalities

Bhalgat et al. [7] state that “The welfare maximization problem for linear externality and complete graphs is MaxSNP-hard”. As on complete graphs there is no difference between 1-hop, 2-hop and in general p-hop linear externalities this would imply MaxSNP hardness for our setting. Unfortunately, as we explain next, their claim is not true. There is a quite simple method to find the optimal assignment in the case of a complete graph. Consider only the assignments that pick one item i ∈ I and assign it to every agent, and select the optimal assignment among them. This can be done in linear time in the number of items m and the number of agents n. Lemma 13 shows that such an assignment is also optimal among all possible assignments. Assuming NP ≠P the incorrectness of Theorem 3.1 in [7] follows.

Lemma 13

Given convex functions \(f_{i,j}\!:\mathbb {N}_{0}\rightarrow \mathbb {R}_{\ge 0}\) with \(f_{i,j}(0) = 0\) for all \(i \in I := \{1,\dots,m\}\) and all j ∈ V, and a partition of V into m sets \(S_{1},\dots,S_{m}\) (i.e., \(\bigcup _{i\in I}S_{i}=V\) and \(S_{i}\cap S_{i^{\prime }}=\emptyset \) for all \(i \neq i^{\prime} \in I\)), it holds that \({\sum }_{i\in I}{\sum }_{j\in S_{i}}f_{i,j}(|S_{i}|)\le \max _{i\in I}{\sum }_{j\in V}f_{i,j}(|V|)\).

Proof

For the proof we set n = |V| and define \(i^{*}\in \arg \max _{i\in I}{\sum }_{j\in V}f_{i,j}(|V|)\).

Inequality (13) follows from the convexity of \(f_{i,j}(\cdot)\) and \(f_{i,j}(0) = 0\). Equality (14) holds because \(\frac {|S_{i}|}{n}\) does not depend on j. Inequality (15) follows from the non-negativity of \(f_{i,j}(\cdot)\). For Inequality (16) observe that for any fixed i ∈ I it holds that \({\sum }_{j\in V}f_{i,j}(n)\le {\sum }_{j\in V}f_{i^{*},j}(n)\) by the definition of \(i^{*}\). Further, \({\sum }_{j\in V}f_{i^{*},j}(n)\) does not depend on i, which implies the left-hand side of Equality (17). For the right-hand side of Equality (17) recall that \(S_{1},\dots,S_{m}\) is a partition of V, and thus \({\sum }_{i\in I} |S_{i}|=|V|=n\). This gives \({\sum }_{j\in V}f_{i^{*},j}(n)\), which is by definition \(\max _{i\in I}{\sum }_{j\in V}f_{i,j}(|V|)\). □
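The chain of estimates labelled (13) to (17) can be reconstructed from these justifications. The following is a sketch based only on the text of the proof; the convexity step uses \(f_{i,j}(t) \le (1-\frac{t}{n}) f_{i,j}(0) + \frac{t}{n} f_{i,j}(n) = \frac{t}{n} f_{i,j}(n)\) for \(0 \le t \le n\).

```latex
\begin{align}
\sum_{i\in I}\sum_{j\in S_i} f_{i,j}(|S_i|)
  &\le \sum_{i\in I}\sum_{j\in S_i} \frac{|S_i|}{n}\, f_{i,j}(n) \tag{13}\\
  &= \sum_{i\in I} \frac{|S_i|}{n} \sum_{j\in S_i} f_{i,j}(n) \tag{14}\\
  &\le \sum_{i\in I} \frac{|S_i|}{n} \sum_{j\in V} f_{i,j}(n) \tag{15}\\
  &\le \sum_{i\in I} \frac{|S_i|}{n} \sum_{j\in V} f_{i^{*},j}(n) \tag{16}\\
  &= \Bigl(\sum_{j\in V} f_{i^{*},j}(n)\Bigr)\cdot\frac{1}{n}\sum_{i\in I}|S_i|
   = \sum_{j\in V} f_{i^{*},j}(n). \tag{17}
\end{align}
```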

Notice that the valuation of an agent with linear externality is indeed a convex function \(f_{i,j}\) with \(f_{i,j}(0) = 0\), and in a complete graph the externality of a node depends only on the number of nodes having the same item. Moreover, if no one has the item, the valuation an agent gets from this item is clearly 0. Thus the above lemma implies that there is an optimal assignment assigning the same item to all nodes. From that the following theorem follows immediately.

Theorem 11

The welfare maximization problem for linear externalities and complete graphs can be solved in polynomial time.
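As an illustration of the method behind Theorem 11, the sketch below evaluates the m uniform assignments and, on a tiny instance, compares the best one with an exhaustive search over all assignments. The callback `f(i, j, t)`, the function names and the toy weights in `lam` are assumptions made for this example; they are not part of the paper.

```python
from itertools import product

def best_uniform_assignment(f, agents, items):
    """Return (item, welfare) of the best assignment giving one item to all agents.

    f(i, j, t) is a hypothetical callback: the valuation of agent j for item i
    when t agents (including j) hold item i on the complete graph.
    """
    n = len(agents)
    best_item, best_welfare = None, float("-inf")
    for i in items:  # m candidate assignments, each evaluated in O(n)
        welfare = sum(f(i, j, n) for j in agents)
        if welfare > best_welfare:
            best_item, best_welfare = i, welfare
    return best_item, best_welfare

def brute_force_welfare(f, agents, items):
    """Maximum welfare over all m**n assignments (tiny instances only)."""
    best = float("-inf")
    for choice in product(items, repeat=len(agents)):
        counts = {i: choice.count(i) for i in items}
        welfare = sum(f(i, j, counts[i]) for j, i in zip(agents, choice))
        best = max(best, welfare)
    return best

# Toy instance with linear valuations f_{i,j}(t) = lam[i][j] * t (made-up weights).
lam = {"a": [3, 1, 2], "b": [1, 4, 2]}

def linear(i, j, t):
    return lam[i][j] * t

agents, items = [0, 1, 2], ["a", "b"]
item, welfare = best_uniform_assignment(linear, agents, items)
exhaustive = brute_force_welfare(linear, agents, items)
```

On this instance the uniform search and the exhaustive search agree, as Lemma 13 predicts for convex valuations with \(f_{i,j}(0)=0\).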

However, on general graphs the problem becomes NP-hard. We next show this NP-hardness in a generalized version of Theorem 4. That is, we consider p-hop linear externalities (p ≥ 1), i.e., the support of an agent j ∈ V for item i ∈ I is defined as \(S^{p}_{ij}(\mathcal {A},G)\) (see Appendix B). To this end we consider a simpler version of Max Coverage (where all weights \(w_{i}\) are set to 1), which is still NP-hard [13, 27].

Definition 5

The input to the Max Coverage problem is a set of domain elements D = {1,2,…,n}, a collection \(\mathcal {S}= \{E_{1},\dots , E_{m}\}\) of subsets of D and a positive integer k. The goal is to find a collection \(\mathcal {S}^{\prime }\subseteq \mathcal {S}\) of cardinality k maximizing \(\left | \bigcup _{E \in \mathcal {S}^{\prime }} E\right |\).
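To make Definition 5 concrete, here is a minimal exhaustive solver for very small instances. It is only meant to illustrate the objective; the function name and the example sets are assumptions, and the running time is exponential in k.

```python
from itertools import combinations

def max_coverage_brute_force(subsets, k):
    """Return (value, indices) of a best collection of exactly k subsets.

    subsets is a list of Python sets over the domain D; the value is the
    number of domain elements covered by the union of the chosen subsets.
    """
    best_value, best_choice = -1, ()
    for choice in combinations(range(len(subsets)), k):
        covered = set().union(*(subsets[i] for i in choice))
        if len(covered) > best_value:
            best_value, best_choice = len(covered), choice
    return best_value, best_choice

# Example instance: D = {1, 2, 3, 4}, four subsets, k = 2.
example = [{1, 2}, {2, 3}, {4}, {1, 2, 3}]
value, chosen = max_coverage_brute_force(example, 2)
```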

Theorem 4

The problem of maximizing social welfare under p-hop linear function externalities is NP-hard for p ≥ 1.

Proof

The proof is by a reduction from the NP-hard problem Max Coverage (see Definition 5). The reduction maps an instance \((D,\mathcal {S},k)\) of the Max Coverage problem to our problem as follows. It constructs a graph G (cf. Fig. 1) with a node set V containing \(X = \{x_{1},\dots,x_{k}\}\), \(Y = \{y_{1},\dots,y_{k}\}\), D and \(\{e^{h}_{i,j} \mid i\in D, 1\leq j\leq k, 1\leq h < p \}\). The undirected edges are given by

- \(\{(x_{l}, y_{l}) \mid 1 \leq l \leq k\}\);

- \(\{(e^{h}_{i,j},e^{h+1}_{i,j}) \mid i\in D, 1\leq j\leq k, 1\leq h< p-1 \}\); and

- if p > 1, the edges \(\{(i,e^{1}_{i,j}), (e^{p-1}_{i,j},y_{j})\mid i\in D, 1\leq j\leq k\}\); otherwise, if p = 1, the edges \(\{(i, y_{j}) \mid i \in D, 1 \leq j \leq k\}\).
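The construction above can be sketched in code to check the neighborhood sizes that the proof of Lemma 14 relies on. The node encoding (tuples such as `("x", l)`) and the function names are assumptions for this example, and the p-neighborhood is interpreted here as the set of nodes at distance at most p, excluding the node itself.

```python
from collections import deque

def build_reduction_graph(n, k, p):
    """Adjacency dict of the reduction graph for |D| = n, budget k and hop count p."""
    adj = {}

    def add_edge(u, v):
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)

    for l in range(1, k + 1):
        add_edge(("x", l), ("y", l))
    for i in range(1, n + 1):
        for j in range(1, k + 1):
            if p == 1:
                add_edge(("d", i), ("y", j))
            else:
                # Path d_i - e^1_{i,j} - ... - e^{p-1}_{i,j} - y_j.
                add_edge(("d", i), ("e", 1, i, j))
                for h in range(1, p - 1):
                    add_edge(("e", h, i, j), ("e", h + 1, i, j))
                add_edge(("e", p - 1, i, j), ("y", j))
    return adj

def nodes_within(adj, source, p):
    """Nodes at distance 1..p from source, found by breadth-first search."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if dist[u] == p:
            continue  # do not expand beyond p hops
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return set(dist) - {source}

n, k, p = 3, 2, 3
graph = build_reduction_graph(n, k, p)
x_size = len(nodes_within(graph, ("x", 1), p))
d_size = len(nodes_within(graph, ("d", 1), p))
```

Under this interpretation a node in X sees 1 + (p − 1)·n nodes and a node in D sees k·p nodes, matching the counts used in the proof of Lemma 14.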

The items are given by \(\{E_{i,l}\mid E_{i} \in \mathcal {S}, 1 \leq l \leq k \}\). Finally the intrinsic values of an agent j ∈ D for getting item \(E_{i,l}\) are given by

while the intrinsic values of an agent \(x_{j}\) are given by

and for all other agents j and items i the weight \(\lambda_{i,j} = 0\).

Lemma 14

The above reduction maps each instance of the Max Coverage problem to a network of agents with linear function externalities in p-neighborhoods such that for the value \(opt_{MC}\) of the optimal selection for the Max Coverage instance and the social welfare \(opt_{SW}\) of the optimal item assignment it holds that \(opt_{SW} = k^{2} n p (1 + (p-1)n) + p \cdot opt_{MC}\).

Proof

(1) We first show that \(opt_{SW} \geq k^{2} n p (1+(p-1)n) + p \cdot opt_{MC}\): W.l.o.g., we assume that an optimal coverage \(Opt_{MC}\) is given by \(\{E_{1},\dots,E_{k}\}\). Consider the following assignment \(\mathcal {A}\). For each 1 ≤ j ≤ k, the nodes \(x_{j}\), \(y_{j}\) and \(\{e^{h}_{i,j} \mid i\in D, 1\leq h < p \}\) are assigned the item \(E_{j,j}\). If an element d ∈ D is covered by \(Opt_{MC}\), i.e., it is contained in some \(E_{l} \in Opt_{MC}\), then d is assigned the item \(E_{l,l}\) (if there are several such \(E_{l}\), pick the one with the lowest index). Otherwise, if d is not covered by \(Opt_{MC}\), then item \(E_{1,1}\) is assigned to d. Now consider the social welfare of \(\mathcal {A}\). First of all, by construction, only the nodes in X and D contribute social welfare. A node x ∈ X has a p-neighborhood of \(1+(p-1)\cdot n\) many nodes, and all of its p-neighbors get the same item. Thus, x has a valuation of \(n \cdot k \cdot p \cdot (1+(p-1)\cdot n)\). As |X| = k, the set X has a social welfare of \(n \cdot k^{2} \cdot p \cdot (1+(p-1)\cdot n)\). Now consider d ∈ D. If d is covered by an \(E_{l} \in Opt_{MC}\), i.e., \(d \in E_{l}\), then it was assigned the item \(E_{l,l}\). Moreover, \(E_{l,l}\) is also assigned to the nodes \(\{e^{h}_{d,l} \mid 1\leq h < p \}\) and \(y_{l}\), which are in the p-neighborhood of d. Thus each covered d ∈ D has valuation ≥ p and therefore \(opt_{SW} \geq k^{2} n p (1+(p-1)n) + p \cdot opt_{MC}\).

(2) It remains to show that \(opt_{SW} \leq k^{2} n p (1+(p-1)n) + p \cdot opt_{MC}\): We first show that in an optimal assignment \(Opt_{SW}\) each \(x_{l} \in X\) must have valuation \(n \cdot k \cdot p \cdot (1+(p-1)\cdot n)\), i.e., \(x_{l}\) is assigned one of the items \(\{E_{i,l}\mid E_{i} \in \mathcal {S}\}\), and the nodes \(\{e^{h}_{i,l} \mid i\in D, 1\leq h < p \}\) and \(y_{l}\) are assigned the same item. Towards a contradiction assume that there is an assignment \(\mathcal {A}\) with one x ∈ X that has lower valuation. Then the social welfare of X in \(\mathcal {A}\) is bounded by \(n k^{2} p (1+(p-1)n) - n k p\). Each d ∈ D has a p-neighborhood of size \(k \cdot p\). Thus the social welfare of D in \(\mathcal {A}\) is bounded by \(n \cdot k \cdot p\). We have that the social welfare of \(\mathcal {A}\) is bounded by \(n k^{2} p (1+(p-1)n)\). But from (1) we know that \(opt_{SW} \geq k^{2} n p (1+(p-1)n) + p \cdot opt_{MC}\), where \(opt_{MC} \geq 1\) for all non-trivial instances. We obtain the desired contradiction. Thus we know that in \(Opt_{SW}\) the social welfare of X is given by \(n \cdot k^{2} \cdot p \cdot (1+(p-1)\cdot n)\).

Now consider a d ∈ D. The p-neighborhood of d consists of connected sets \(\{e^{h}_{d,j} \mid 1\leq h \leq p-1\} \cup \{y_{j}\}\) for 1 ≤ j ≤ k. By the above observation, for each set \(\{e^{h}_{d,j} \mid 1\leq h \leq p-1\} \cup \{y_{j}\}\) all nodes are assigned the same item in \(Opt_{SW}\), but all sets are assigned different items (as they belong to different \(x_{j}\)). Hence the valuation of d is p if it gets an item \(E_{i,l}\) with \(d \in E_{i}\) and one of its neighbor sets gets the same item; otherwise d has valuation 0. We obtain that \(opt_{SW} = k^{2} n p (1+(p-1)n) + p \cdot |\{d \in D \mid d \text{ has positive valuation in } Opt_{SW}\}|\). Finally, we can construct a k-covering by choosing the sets \(E_{i}\) corresponding to the items assigned to X. By the construction, this k-covering covers all d ∈ D with positive valuation and thus \(opt_{MC} \geq |\{d \in D \mid d \text{ has positive valuation in } Opt_{SW}\}|\). Hence we obtain \(opt_{SW} \leq k^{2} n p (1+(p-1)n) + p \cdot opt_{MC}\). □

By the above lemma, the presented reduction faithfully maps the decision version of Max Coverage, where one asks whether \(opt_{MC} \geq C\), to a decision version of welfare maximization, where one asks whether \(opt_{SW} \geq k^{2} n p (1+(p-1)n) + p \cdot C\). Moreover, it can be performed in polynomial time, and thus Theorem 4 follows from the corresponding result for Max Coverage [13, 27]. □

Appendix D: Generalization of Step-Function Externalities

We can generalize the result in Section 5 as follows (Recall the definitions from Appendix B).

Definition 6

In an s-step externality function in a p-neighborhood, for all j ∈ V, i ∈ I and \(\mathcal {A} : V \rightarrow I\) it holds that \(u_{j}(\mathcal {A},G) = \lambda _{\mathcal {A}(j),j} \cdot f_{\mathcal {A}(j),j}(|S^{p}_{\mathcal {A}(j),j}(\mathcal {A},G)|)\), where \(f_{ij}(t)\) is 1 if t ≥ s and 0 otherwise.

Note that we can assume p ≤ s, as the p-hop neighborhood cannot be reached with fewer than p nodes. We show next how to generalize the algorithm of the previous setting to s-step externality functions in s-neighborhoods. We leave it as an open question to give an approximation algorithm for s-step externality functions in p-neighborhoods for arbitrary p ≤ s. Moreover, the algorithm can also be applied to scenarios where different nodes j have different \(s_{j}\)-step functions. Then the parameter s is set to the maximum of all \(s_{j}\). The generalized algorithm works as follows: Analogous to triples in Section 5, we compute a maximal collection of mutually disjoint connected sets of size s + 1 in the graph and decompose the remaining graph into maximal collections of connected sets of size s,…,1. The definition of a consistent assignment carries over directly to this new decomposition of the graph, and Lemma 9 can be generalized as follows.

Lemma 15

The social welfare from the optimal consistent assignment is at least \(\left (1- (\frac {s}{s+1})^{s} \right )/s \cdot {\textsc {Opt}}\), where Opt is the maximum social welfare over all assignments under s-step function externalities in an s-neighborhood.
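For orientation, the factor in Lemma 15 can be evaluated exactly for small s. The helper below is a sketch with a made-up function name; for s = 2 it returns 5/18, the constant appearing in the 2-hop step-function result stated in the abstract, and for s = 1 it returns 1/2.

```python
from fractions import Fraction

def consistent_assignment_factor(s):
    """Exact value of (1 - (s / (s + 1))**s) / s as a Fraction."""
    return (1 - Fraction(s, s + 1) ** s) / s

factors = {s: consistent_assignment_factor(s) for s in (1, 2, 3)}
```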

Proof

Let \(\mathcal {A}^{*}\) be an assignment (not necessarily consistent) that gives maximum social welfare. We convert it into a (random) consistent assignment \(\mathcal {A}\) as follows. First, for each connected component T of size s + 1, we pick one of the items \(\{\mathcal {A}^{*}(j) \mid j \in T\}\) uniformly at random, and assign that item to all agents in T. The events corresponding to different connected components are mutually independent. Second, for each connected component T of size ≤ s, we consider agents j ∈ T such that there is an adjacent component of size s + 1 which was assigned item \(\mathcal {A}^{*}(j)\). Among those agents we select the one with maximal \(u_{j}(\mathcal {A}^{*})\) and assign the item \(\mathcal {A}^{*}(j)\) to all agents in T. It is easy to see that the resulting assignment \(\mathcal {A}\) is consistent. We claim that \(\mathbf {E}[{\sum }_{j\in T} u_{j}(\mathcal {A})] \geq \left (1- (\frac {s}{s+1})^{s} \right )/s \cdot {\sum }_{j\in T} u_{j}(\mathcal {A}^{*})\) for each connected component T. To prove this claim, we consider two cases.

Case 1 (T is a component of size s + 1): Consider an arbitrary j ∈ T. With probability at least 1/(s + 1), all nodes in T are assigned item \(\mathcal {A}^{*}(j)\) under \(\mathcal {A}\), and in this event we have \(u_{j}(\mathcal {A}) \geq u_{j}(\mathcal {A}^{*})\). It follows that \(\mathbf {E}[u_{j}(\mathcal {A})] \geq (1/(s+1)) \cdot u_{j}(\mathcal {A}^{*})\) and thus also

Case 2 (T is a component of size k ≤ s): Consider a component \(T = \{j_{1}, j_{2},\dots,j_{k}\}\) whose nodes are ordered such that \(u_{j_{\ell}}(\mathcal {A}^{*})\) is non-increasing in ℓ. Let \(\mathcal {E}_{\ell }\) be the event that all nodes in T and all agents of an adjacent (s + 1)-component are assigned item \(\mathcal {A}^{*}(j_{\ell })\) under \(\mathcal {A}\); then

W.l.o.g. assume that \(\mathcal {A}^{*}(j) \not = \mathcal {A}^{*}(j^{\prime })\) for j, j ′ ∈ T, otherwise sum the utilities of the two nodes and consider them as one. Moreover, we assume that \(u_{j}(\mathcal {A}^{*})>0\) for all j ∈ T; that is, we ignore nodes that do not contribute to the social welfare of \(\mathcal {A}^{*}\).

The probability \(\mathbf {P}[\mathcal {E}_{\ell }]\) can be split into two parts. First, the probability \(p_{\ell}\) that item \(\mathcal {A}^{*}(j_{\ell })\) is assigned to an adjacent (s + 1)-component under the condition that none of the higher valued items is assigned to an adjacent (s + 1)-component. Second, the probability \(q_{\ell}\) that none of the higher valued items is assigned to an adjacent (s + 1)-component. We then have \(\mathbf {P}[\mathcal {E}_{\ell }] = \mathbf {P}[\mathcal {E}_{\ell } \cap ({\textstyle \bigcup _{t<\ell } \mathcal {E}_{t}})^{c}] = p_{\ell } \cdot q_{\ell }\). These probabilities are given as follows.

We can compute \(q_{\ell}\) for ℓ > 1 by the recursion \(q_{\ell} = q_{\ell-1} \cdot (1-p_{\ell-1})\). Then, as \(q_{1} = 1\), we obtain that \(q_{\ell }={\textstyle {\prod }_{t<\ell }(1-p_{t})}\).
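The recursion can be checked numerically. The sketch below (function name and test values are assumptions) computes the \(q_{\ell}\) from a list of \(p_{\ell}\) and confirms the telescoping identity \({\sum }_{\ell \le k} p_{\ell } q_{\ell } = 1 - {\prod }_{t \le k}(1-p_{t})\), which for \(p_{\ell} = 1/(s+1)\) evaluates to \(1 - (\frac{s}{s+1})^{k}\).

```python
from fractions import Fraction

def q_values(p):
    """q[0] = 1 and q[l] = q[l-1] * (1 - p[l-1]) (0-based indices)."""
    q = [Fraction(1)]
    for p_prev in p[:-1]:
        q.append(q[-1] * (1 - p_prev))
    return q

def total_probability(p):
    """Sum of p_l * q_l over all l, i.e. the probability that some event occurs."""
    return sum(pl * ql for pl, ql in zip(p, q_values(p)))

s, k = 2, 2
p = [Fraction(1, s + 1)] * k
q = q_values(p)
total = total_probability(p)
```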

Claim 1: It holds that \(p_{\ell} \geq 1/(s+1)\) for all 1 ≤ ℓ ≤ k.

Proof

As \(u_{j_{\ell }}(\mathcal {A}^{*})>0\), there exists an agent j ′ in an adjacent (s + 1)-component T ′ with \(\mathcal {A}^{*}(j^{\prime })=\mathcal {A}^{*}(j_{\ell })\). Thus with probability at least 1/(s + 1) all agents in T ′ are assigned item \(\mathcal {A}^{*}(j_{\ell })\) by \(\mathcal {A}\). This concludes the proof of Claim 1. □

Using \(p_{\ell}\) and \(q_{\ell}\), the lower bound for the expected social welfare of \(\mathcal {A}\) can be rewritten as follows.

Claim 2: For a lower bound we can assume that \(p_{\ell} = 1/(s+1)\) for all 1 ≤ ℓ ≤ k.

Proof

Towards a contradiction assume there are indices ℓ where \(p_{\ell} > 1/(s+1)\) and setting them to 1/(s + 1) would not result in a lower bound. Let ℓ ′ be the largest such index.

If ℓ ′ = k, there is just one summand containing \(p_{k}\), namely \(p_{k} \cdot {\textstyle {\prod }_{t<k} (1-p_{t})} \cdot u_{j_{k}}(\mathcal {A}^{*})\). This summand is monotone in \(p_{k}\), and thus picking the minimal possible value 1/(s + 1) (Claim 1) would only decrease the sum and result in a lower bound. Thus we can assume that ℓ ′ < k. We consider the summands in which \(p_{\ell ^{\prime }}\) occurs in (18) and apply the assumption that \(p_{\ell}\) equals 1/(s + 1) for ℓ > ℓ ′.

Now, as the \(u_{j_{\ell }}(\mathcal {A}^{*})\) are ordered non-increasingly and k ≤ s, the above sum is monotone in \(p_{\ell ^{\prime }}\) and thus can be lower bounded by replacing \(p_{\ell ^{\prime }}\) with 1/(s + 1). This concludes the proof of Claim 2. □

By Claim 2 we obtain the following lower bound.

This concludes the analysis of case 2.

Now, we take the sum of the inequalities for each component, and by linearity of expectation infer that the expected social welfare under the consistent assignment \(\mathcal {A}\) is within a factor of

of the optimal social welfare. This concludes the proof of the lemma. □

The remaining arguments carry through without any changes.

Theorem 12