Abstract

A variety of approaches has been developed to deal with uncertain optimization problems. Often, they start with a given set of uncertainties and then try to minimize the influence of these uncertainties. The reverse view is to first set a budget for the price one is willing to pay and then find the most robust solution. In this article, we aim to unify these inverse approaches to robustness. We provide a general problem definition and a proof of the existence of its solution. We study properties of this solution such as closedness, convexity, and boundedness. We also provide a comparison with existing robustness concepts such as the stability radius, the resilience radius, and the robust feasibility radius. We show that the general definition unifies these approaches. We conclude with an example that demonstrates the flexibility of the introduced concept.


1 Introduction

In many real-world problems, one does not know exactly the input data of a formulated optimization problem. This may be due to the fact that we are working with forecasts, predictions, or simply unavailable information. To cope with this, it is essential to treat the given data as uncertain. In principle, there are two different ways to treat uncertainty. Either one knows some distribution of the uncertainty, or not. In the first case, this information can be used for the mathematical optimization problem, while in the second case, no additional information is given. Both approaches are widely used in many real-world applications, such as energy management, finance, scheduling, and supply chain management. For a detailed overview of possible applications of robust optimization, we refer to Bertsimas et al. (2011). In this article, we focus mainly on problems without information about the distribution of uncertainty.

Fixing the uncertain scenario to solve the corresponding optimization problem may yield a solution that is infeasible for other scenarios of the uncertainty set. Therefore, one tries to find solutions that are feasible for all possible scenarios of the uncertainty set. The problem of finding an optimal solution, i.e. the solution with the best objective function value, among these feasible solutions is called the robust counterpart (cf. Ben-Tal et al. 2009).

There are many surveys on robust optimization, such as Ben-Tal et al. (2009) or Bertsimas et al. (2011). For tractability reasons, the focus is often limited to robust linear or robust conic optimization. Robust optimization in the context of semi-infinite optimization can be found, for instance, in Goberna et al. (2013), while López and Still (2007); Vázquez et al. (2008); Stein (2012); Stein and Still (2003) consider general solution methods. For applications and results on robust nonlinear optimization, we refer to a survey by Leyffer et al. (2020).

The question of how to construct a suitable uncertainty set is essential for real-world applications. This has been addressed, for example, in Bertsimas et al. (2004) and Bertsimas and Brown (2009). However, the exact size of the uncertainty set is often difficult to determine in practice (cf. Gorissen et al. 2015). Nevertheless, this choice has a great influence on the actual solution (see, for example, Bertsimas and Sim 2004; Chassein and Goerigk 2018a, b). A closely related question is which subset of the uncertainty set is covered by a given solution. Considering a larger uncertainty set may lead to overly conservative solutions, since more and more scenarios have to be considered. This trade-off between the probability of violation and the effect on the objective function value of the nominal problem is called the price of robustness and was introduced by Bertsimas and Sim (2004). Many robust concepts that have been formulated and analyzed in recent years try to deal with the price of robustness in order to avoid or reduce it.

Bertsimas and Sim (2003, 2004) defined the Gamma robustness approach, where the uncertainty set is reduced by cutting out less likely scenarios. The concept of light robustness was first defined by Fischetti and Monaci (2009) and later generalized by Schöbel (2014). Given a tolerable loss for the optimal value of the nominal solution, one tries to minimize the grade of infeasibility over all scenarios of the uncertainty set. Another approach to try to avoid overly conservative solutions is to allow a second stage decision. Ben-Tal et al. (2004) introduced the idea of adjustable robustness, where the set of variables is divided into here-and-now variables and wait-and-see variables. While the former need to be chosen before the uncertainty is revealed, the latter need to be chosen only after the realization is known. For a survey on adjustable optimization, we refer to Yanıkoğlu et al. (2019).

In this article, we pursue a different approach to address the price of robustness, which we call inverse robustness. The main idea is to reverse the perspective of the approaches described above. Instead of finding a solution that minimizes (or maximizes) the objective function under a given set of uncertainties, we want to find a solution that maximizes, for example, the size of the considered set of uncertainties while respecting a bound on the objective function. In this way, we do not have to fix the uncertainty set a priori and then accept the resulting loss in objective value. Instead, we can set the price we are willing to pay and then find the most robust solution within this budget. Furthermore, the study of the above approaches is often limited to the robust linear case. We want to define inverse robustness in a more general way and study the concept also for nonlinear problems.

Our approach is related to the inverse perspective to robust optimization introduced by Chassein and Goerigk (2018a). They considered a generalization of robust optimization problems where only the shape of the uncertainty set is given, but not its actual size. Later, they extended their results to finding a solution that performs well on average over different uncertainty set sizes (cf. Chassein and Goerigk 2018b). Especially for the linear case, concepts have been introduced to measure the robustness of a given solution. The stability radius and resilience radius of a solution can be seen as measures for a fixed solution of how much the uncertain data can deviate from a nominal value while still being an (almost) optimal solution. For a more detailed discussion of resilience we refer to Weiß (2016). Both concepts can be seen as properties of a given solution, and the shape of the uncertainty set must be specified in advance. A similar concept has been studied in the area of facility location problems. Labbé et al. (1991) presented an approach to compute the sensitivity of a facility location problem. Several publications (Carrizosa and Nickel 2003; Carrizosa et al. 2015; Ciligot-Travain and Traoré 2014; Blanquero et al. 2011) investigate the question of how to find a solution that is least sensitive, and thus deal with a concept quite similar to resilience. We will show that finding a point that maximizes the stability radius or the resilience radius, given a budget on the objective, can be seen as a special case of inverse robust optimization. However, the general definition of inverse robustness provides more flexibility. First, it allows defining measures that can include distributional information about the uncertainty. Second, the shape of the considered uncertainty set is not restricted to given shapes, but can be more complex.

The outline of the article is as follows. In Sect. 2 we define the inverse robust optimization problem (IROP) and discuss properties of this approach using two examples. In the next section we investigate the existence and structural properties of the solutions to the inverse robust optimization problem. In Sect. 4 we discuss different possible choices and descriptions for the cover space that contains all potential uncertainty sets. Afterwards, we compare our general definition with other inverse robustness concepts in Sect. 5. In Sect. 6 we provide and discuss an extended example. Finally, we conclude the article with a brief outlook.

2 The inverse robust optimization problem

In this article, we consider parametric optimization problems given by

$$\begin{aligned} ({\mathcal {P}}_u) \qquad \min _{x \in X} \ f(x,u) \quad \text {s.t.} \quad g(x,u) \le 0, \end{aligned}$$

depending on an uncertain parameter \(u\in {\mathbb {R}}^m\). We assume that \(f(\cdot ,u),g(\cdot ,u): X \rightarrow {\mathbb {R}}\) are at least continuous functions w.r.t. x for each fixed parameter u, which is also called a scenario, belonging to an uncertainty set \({U}\subseteq {\mathbb {R}}^m\). The set \(X \subseteq {\mathbb {R}}^n\) is given by further restrictions on x that do not depend on u. For simplicity, we consider only one constraint that depends on the uncertain parameter u. However, the following results generalize to multiple constraints by considering their maximum. We assume that there is a special scenario \({\bar{u}}\in {U}\) called the nominal scenario. This could be the average of the scenarios, or the most likely scenario. The nominal problem \(({\mathcal {P}}_{{\bar{u}}})\) is defined as follows:

$$\begin{aligned} ({\mathcal {P}}_{{\bar{u}}}) \qquad \min _{x \in X} \ f(x,{\bar{u}}) \quad \text {s.t.} \quad g(x,{\bar{u}}) \le 0. \end{aligned}$$

We call the objective function value of the optimization problem for the nominal scenario above the nominal objective value and denote it as \(f^*\). Throughout this article we assume that at least the nominal problem has a feasible solution and the nominal objective value \(f^*\) is well-defined.

The idea of the inverse robust optimization problem (IROP) is to allow a nonnegative deviation \(\epsilon \ge 0\) from the nominal objective value in order to cover the uncertainty set \({U}\) as much as possible. We refer to the deviation as the budget. The task to cover \({U}\) as much as possible needs a more precise interpretation. For this, we define a cover space \({\mathcal {W}}\subseteq 2^{U}\) and a merit function \(V:{\mathcal {W}}\rightarrow {\mathbb {R}}\) which maps every set in the cover space to a value in \({\mathbb {R}}\). With this, we obtain an instance of the IROP as follows:

$$\begin{aligned} ({\mathcal {P}}_\text {IROP}) \qquad \max _{x \in X,\, W \in {\mathcal {W}}} \quad&V(W) \\ \text {s.t.} \quad&f(x,u) \le f^* + \epsilon \quad \forall u \in W, \qquad (3) \\&g(x,u) \le 0 \quad \forall u \in W, \qquad (4) \\&{\bar{u}}\in W. \qquad (5) \end{aligned}$$

We call constraint (3) the budget constraint and constraint (4) the feasibility constraint of the IROP.

Depending on the uncertainty set \({U}\), it may be possible to choose a budget \(\epsilon \) such that the entire uncertainty set can be covered. This case is rather uninteresting, since the budget constraint becomes irrelevant. We will mostly focus on the case where it is a limiting constraint, and given a budget \(\epsilon \), we cannot cover the entire uncertainty set \({U}\). The idea in inverse robustness is to choose a large uncertainty set \({U}\) just as a ground set for the uncertainty. The actual uncertainty set W covered is determined by the optimization problem and depends on the chosen budget \(\epsilon \).

Please note that it is a non-trivial task to define a merit function V and a cover space \({\mathcal {W}}\), since the optimal solution and the tractability depend on it. A bad choice can even lead to an ill-posed problem due to Vitali’s theorem (cf. Halmos 1950). However, this should not be seen as a drawback. These two objects make the definition of an inverse robust optimization problem very general. The merit function can be simply the volume, but can also contain information about the distribution of the uncertain parameter u. The cover space can either consist of sets with a concrete shape, such as ellipses or boxes, or it can also be a generic set system like a \(\sigma \)-algebra.

In Sect. 4 we will discuss some concrete choices of the cover space. In Sect. 3 we are going to show some general statements about the existence and shape of solutions for \(({\mathcal {P}}_\text {IROP})\). One property we want to emphasize here is that the existence of a feasible solution is relatively easy to guarantee. As long as \(\{{\bar{u}}\}\in {\mathcal {W}}\), there is a feasible solution, as we assumed that the nominal problem is well-defined.

Before turning to the theoretical investigation of the inverse robust optimization problem, we present two examples that show properties of the problem and the difference to the classical robust counterpart.

2.1 Dependency on budget

The first example illustrates that the solution of an inverse robust optimization problem does not depend on the choice of the uncertainty set \({U}\) in general, but instead on the available budget \(\epsilon \ge 0\). To this end, we focus on the following parametric optimization problem

where we consider a parameterized uncertainty set \({U}(a) = [0,a]\) with \(a \ge 1\). Choosing \({\overline{u}}= 0\) leads to the nominal solution

The corresponding inverse robust optimization problem with \({\mathcal {W}}= \{[0,d], d \in [0,a]\}\) and the merit function \(V(W) = vol(W)\) has the form

As we will see later, due to Lemmas 3.5–3.7, considering the cover space \({\mathcal {B}}({U}(a))\) would lead to an equivalent problem.

The inverse robust optimization problem has the solution \(x^*= d^* = \min \left\{ -\frac{1}{2} + \sqrt{\frac{1}{4} + \epsilon },2,a\right\} \). If we choose a large parameter a or a small budget \(\epsilon \), this solution is independent of the uncertainty set parameter \(a \ge 1\) and is thus insensitive to modeling errors in the specification of \({U}\).
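The closed form above can be evaluated directly to see that, for a moderate budget, the covered set does not change with the parameter a. The following Python lines are only a sketch that evaluates the stated formula; the function name `inverse_robust_radius` and the chosen budget values are illustrative assumptions.

```python
import math


def inverse_robust_radius(eps: float, a: float) -> float:
    """Evaluate d* = min{-1/2 + sqrt(1/4 + eps), 2, a} from the closed form in the text."""
    return min(-0.5 + math.sqrt(0.25 + eps), 2.0, a)


# Illustrative budget: -1/2 + sqrt(1/4 + 3/4) = 1/2, which is below every a >= 1.
budget = 0.75
radii = [inverse_robust_radius(budget, a) for a in (1.0, 1.5, 2.0, 10.0)]
print(radii)  # the same radius for every choice of a

# A very large budget is capped by the bound 2 or by a itself.
print(inverse_robust_radius(6.0, 10.0), inverse_robust_radius(6.0, 1.0))
```

Only when the budget is large enough for one of the caps 2 or a to become active does the choice of the ground set matter.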

On the contrary, the corresponding strict robust optimization problem

has the solution \(x^*(a) = a\) and \(f^*(a) = a^2+a\) for \(a \in [1,2]\) and no solution for \(a > 2\). Because of this dependence, it is crucial in classical robust optimization to think about the specification of \({U}\) beforehand, whereas in inverse robust optimization a solution always exists.

2.2 Extreme scenarios

In the next example we want to study the effect of extreme scenarios that can occur especially in nonlinear optimization. We consider for \(u \in [0,1]\) the following parameterized optimization problem

If we consider the nominal scenario \({\overline{u}} = 0\), then the nominal objective value is \(f^*=0\). For a chosen budget \(\epsilon \ge 0\) and the same cover space as before, we obtain the following inverse robust optimization problem:

The optimal solution is given by \(x^*=\min \{ \epsilon ,1\}\) and \(d^*=\min \{\epsilon ^{\frac{1}{100}},1\}\). On the other hand, choosing an uncertainty set \({U}=[0,a]\) with \(a \in [0,1]\) before solving the classical robust counterpart

leads to the optimal solution \(x^*=a^{100}\).

A very conservative choice in classical robust optimization would be to choose \(a=1\) which would also lead to a high price for robustness and \(x^*=1\) as optimal robust solution. In inverse robustness we would first choose a budget \(\epsilon \), for example \(\epsilon =0.1\). The price of robustness we would pay is fixed. The maximal uncertainty set we can cover with this budget has a size of \(d^*=0.1^{1/100} \approx 0.977\). This means that we only need to pay a price of 0.1, but cover more than \(95\%\) of the area of the original uncertainty set.

Choosing a smaller a priori set with \(a=0.5\) leads to a very small price to pay to achieve robustness, namely \(\frac{1}{2^{100}}\). However, if one is ready to pay more for robustness, e.g. \(\epsilon = 0.001\), one can cover more than \(90\%\) of the area of the original uncertainty set, which is a large part of all scenarios.
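The numbers quoted in this example follow from the two closed forms \(d^*=\min \{\epsilon ^{1/100},1\}\) and \(x^*=a^{100}\). A small Python sketch, with function names chosen here purely for illustration, reproduces them:

```python
def covered_fraction(eps: float) -> float:
    """d* = min{eps^(1/100), 1}: share of [0, 1] covered with budget eps."""
    return min(eps ** (1.0 / 100.0), 1.0)


def robust_price(a: float) -> float:
    """x* = a^100: objective value of the classical robust counterpart for U = [0, a]."""
    return a ** 100


for eps in (0.1, 0.001):
    print(f"budget {eps}: covered fraction {covered_fraction(eps):.4f}")

print(f"a = 0.5: price of robustness {robust_price(0.5):.3e}")
print(f"a = 1.0: price of robustness {robust_price(1.0):.1f}")
```

The steep growth of \(a^{100}\) near \(a=1\) is what makes the last few percent of the uncertainty set so expensive to cover.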

The reason for this phenomenon is that \(u=1\) is, for this problem, an extreme scenario. Covering it has a high price in optimality. In the inverse robust formulation we tend to leave out extreme scenarios and try to find a good solution on the remainder.

These first two examples show two differences to a robust counterpart. First, the solution depends directly on the price we are willing to pay to achieve robustness and not on the a priori choice of the uncertainty set. Second, the inverse robust optimization will leave out extreme scenarios, making it a less conservative approach for robust optimization.

3 Existence and structure of solutions

After the formal introduction and two motivational examples, this section is devoted to properties of the solutions of the problems. We are first especially interested in the existence of a solution and then give some statements about structural properties of the solution. To illustrate that the solution does not always have to exist, we start with a simple example. We consider for an uncertain scenario \(u \in {\mathbb {R}}^2\) the following optimization problem:

If we choose as a nominal scenario \({\bar{u}}=0\), then the optimal solution is given by \({\bar{x}}=0\). As a cover space \({\mathcal {W}}\) we consider unit balls with an arbitrary norm

and as merit function the volume \(V(W):=vol(W)\). If we now allow for a budget \(\epsilon >0\), we obtain the following inverse robust optimization problem

All feasible solutions have a volume strictly less than \(\epsilon ^2\). The sequence \((0,W(k,\epsilon ))_{k\in \mathbb {N}}\) is feasible for the inverse robust optimization problem and \(vol(W(k,\epsilon ))\) is monotonically increasing and converges towards \(\epsilon ^2\). This means that in this simple example no optimal solution exists. For this example, the main issue for existence is the choice of the cover space \({\mathcal {W}}\) and not the optimization variables.

After this negative example we now introduce statements that ensure the existence of a solution. Motivated by the above example, we make for the next statements some basic assumptions about the cover space \({\mathcal {W}}\) and the merit function. Given a compact subset \(C\subseteq {U}\), we denote the set of all compact subsets of C by \({\mathcal {K}}(C)\).

Assumption 3.1

We assume that the cover space \({\mathcal {W}}\) satisfies the following conditions:

1. For any \(W \in {\mathcal {W}}\), we know that \(\overline{W} \in {\mathcal {W}}\), where \(\overline{W}\) denotes the closure of W.

2. \({\mathcal {K}}(C) \cap {\mathcal {W}}\) is complete with respect to the Hausdorff-metric \(d_H\) for any compact subset \(C\subseteq {U}\).

3. \(\{{\overline{u}}\} \in {\mathcal {W}}\).

In the following we let \({\tilde{{\mathcal {W}}}}{:}{=}{\mathcal {K}}({U})\cap {\mathcal {W}}\). Note that, if \({U}\) is itself compact, it suffices to check the second condition in Assumption 3.1 for \(C={U}\).
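To make the Hausdorff-metric \(d_H\) used in Assumption 3.1 more tangible, the following Python sketch computes it for finite point sets. This is only an illustration under the assumption that the compact sets are represented by finitely many points and that distances are Euclidean; the function name is hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, ...]


def hausdorff_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Hausdorff distance between two non-empty finite point sets (Euclidean norm)."""

    def directed(p_set: Sequence[Point], q_set: Sequence[Point]) -> float:
        # Largest distance from a point of p_set to its nearest point in q_set.
        return max(min(math.dist(p, q) for q in q_set) for p in p_set)

    return max(directed(a, b), directed(b, a))


# Endpoints of [0, 1 - 1/k] versus endpoints of [0, 1]; the distance is 1/k.
limit_set = [(0.0,), (1.0,)]
for k in (2, 4, 8):
    w_k = [(0.0,), (1.0 - 1.0 / k,)]
    print(k, hausdorff_distance(w_k, limit_set))
```

In this toy sequence the sets converge to the limit set in \(d_H\), which is the kind of convergence the completeness condition refers to.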

Assumption 3.2

Given a cover space \({\mathcal {W}}\subseteq 2^{U}\), we assume that the merit function \(V: {\mathcal {W}}\rightarrow {\mathbb {R}}\) satisfies the following conditions:

1. \(V: {\tilde{{\mathcal {W}}}} \rightarrow {\mathbb {R}}\) is upper semi-continuous w.r.t. the topology induced by the Hausdorff-metric and

2. \(V(W_1) \le V(W_2)\) for all \(W_1, W_2 \in {\mathcal {W}}\) with \(W_1 \subseteq W_2\).

In the remainder of this section we study how the structure of the parametric problem \(({\mathcal {P}}_u)\) influences an optimally chosen set \(W^* \in {\mathcal {W}}\). We start with a theorem that ensures the existence of a solution of \(({\mathcal {P}}_\text {IROP})\).

Theorem 3.3

Let \({U}\subseteq {\mathbb {R}}^m\) be a compact uncertainty set, let \(f,g: X \times {U}\rightarrow {\mathbb {R}}\) be continuous functions w.r.t. \((x,u) \in X \times {U}\), let \(X \subseteq {\mathbb {R}}^n\) be a compact set, and let the cover space \({\mathcal {W}}\subseteq 2^{U}\) and the merit function V fulfill Assumptions 3.1 and 3.2. Then there exists a maximizer \((x^*,W^*) \in X \times {\mathcal {W}}\) of \(({\mathcal {P}}_\text {IROP})\), where \(W^*\) is a compact set.

Proof

First we show that if a solution exists, then the corresponding solution set \(W^*\) is a compact set. Let \(\overline{W}\) be the closure of a set \(W \in {\mathcal {W}}\). Because of Assumption 3.1, we know that \(\overline{W} \in {\mathcal {W}}\) holds. Due to the continuity of f, g w.r.t. u we can also conclude that for any feasible \((x,W) \in {\mathcal {F}}\), where

$$\begin{aligned} {\mathcal {F}}{:}{=}\left\{ (x,W) \in X \times {\mathcal {W}}\mid (x,W) \text { satisfies the constraints (3)--(5)} \right\} \end{aligned}$$

holds, also \((x,\overline{W}) \in {\mathcal {F}}\) is feasible. Since we assumed that \(V(W_1) \le V(W_2)\) for any \(W_1,W_2 \in {\mathcal {W}}\) with \(W_1 \subseteq W_2\), we can reduce the search space of the original optimization problem to the space of closed elements of the cover space \({\mathcal {W}}\). As the uncertainty set \({U}\) was assumed to be compact, we reduce the search space to the space of compact elements of the cover space which is by definition \({\tilde{{\mathcal {W}}}}\).

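In a second step, we show that the feasible set

$$\begin{aligned} \tilde{{\mathcal {F}}} {:}{=}{\mathcal {F}}\cap \left( X \times {\tilde{{\mathcal {W}}}}\right) \end{aligned}$$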

is compact and non-empty. As \({U}\) is compact, \({\mathcal {K}}({U})\) is also a compact set itself (see Footnote 1). Because we assumed that \({\tilde{{\mathcal {W}}}}\) is complete w.r.t. the Hausdorff-metric \(d_H\), we know that it is closed and therefore compact, too. Consequently, the set \(X \times {\tilde{{\mathcal {W}}}}\) is a compact set as the Cartesian product of two compact sets.

Next we prove that \(\tilde{{\mathcal {F}}}\) is a closed set. To do so, we consider a convergent sequence \((x_{k},W_{k})_{{k} \in {\mathbb {N}}} \subseteq \tilde{{\mathcal {F}}}\) with limit \((x^*,W^*) \in X \times {\tilde{{\mathcal {W}}}}\). We have to show that \((x^*,W^*) \in \tilde{{\mathcal {F}}}\). We do this by showing that the constraints (3)–(5) are satisfied.

- As \((x_{k},W_{k}) \in \tilde{{\mathcal {F}}}\), we know that \({\overline{u}}\in W_{k}\) holds for all \({k} \in {\mathbb {N}}\) and consequently \({\overline{u}}\in \bigcap _{{k} \in {\mathbb {N}}} W_{k} \subseteq W^*\) as \(d_H(W_{k},W^*) \rightarrow 0\), where \(d_H\) is the Hausdorff-metric.

- Fix an arbitrary \(u^* \in W^*\). As \(\lim _{{k} \rightarrow \infty } d_H(W_{k},W^*) = 0\), we can find a sequence \((u_{k})_{{k} \in \mathbb {N}}\) with \(u_{k} \in W_{k}\) for all \({k} \in \mathbb {N}\) and \(u_{k} \rightarrow u^*\). By continuity of g and feasibility of \((x_{k}, W_{k})\) for all \({k} \in \mathbb {N}\) we get:

  $$\begin{aligned} g(x^*,u^*) = \lim _{{k} \rightarrow \infty } g(x_{k},u_{k}) \le \lim _{{k} \rightarrow \infty } \max _{u \in W_{k}} g(x_{k},u) \le 0. \end{aligned}$$

  As \(u^* \in W^*\) was chosen arbitrarily this implies \(\max _{u \in W^*} g(x^*,u) \le 0\).

- We can argue the same way as for the feasibility constraint (4) to show: \(\max _{u \in W^*} f(x^*,u) \le f^* + \epsilon \).

This means that all constraints are satisfied and \((x^*,W^*) \in \tilde{{\mathcal {F}}}\). As the sequence \((x_{k},W_{k})_{{k} \in {\mathbb {N}}}\) was arbitrarily chosen, we showed that \(\tilde{{\mathcal {F}}}\) is closed.

In total we know that the feasible set \(\tilde{{\mathcal {F}}}\) is compact as a closed subset of a compact set.

Because V was assumed to be upper semi-continuous w.r.t. W on \({\tilde{{\mathcal {W}}}}\), we can ensure the existence of a maximizer of \(({\mathcal {P}}_\text {IROP})\). Note that the feasible set \({\mathcal {F}}\) is non-empty as the choice \((x^*,\{{\bar{u}}\})\) is feasible by definition of \(f^*\) for all budgets \(\epsilon \ge 0\). \(\square \)

In the statement above we assumed that \({U}\) is compact. We will now drop this assumption but demand that the function V is a finite measure on a \(\sigma \)-algebra.

Theorem 3.4

Assume that \({\mathcal {W}}\) is a \(\sigma \)-algebra on \({U}\) and \(V: {\mathcal {W}}\rightarrow {\mathbb {R}}\) is a finite measure. Let X be a compact set, \(f,g: X\times {U}\rightarrow {\mathbb {R}}\) be continuous functions and let Assumption 3.1 hold. Moreover, assume that there is a sequence of compact sets \(C_k \in {\mathcal {W}}, k\in \mathbb {N}\), such that \(C_{k}\subseteq C_{k+1}\) for \(k\in \mathbb {N}\) and \(\bigcup _{k\in \mathbb {N}} C_k = {U}\). Then there exists a maximizer \((x^*,W^*) \in X \times {\mathcal {W}}\) of \(({\mathcal {P}}_\text {IROP})\).

Proof

As in the proof of Theorem 3.3 we can restrict our consideration to closed sets in \({\mathcal {W}}\). Note that by assumption the feasible set of \(({\mathcal {P}}_\text {IROP})\) is non-empty and we consider a finite measure, which fulfills Assumption 3.2 (2) by definition and guarantees that the objective is bounded. Thus the supremum \(V^*\) exists and we can find a sequence of feasible elements \((x_{l},W_{l})_{{l} \in \mathbb {N}}\) such that

$$\begin{aligned} \lim _{{l} \rightarrow \infty } V(W_{l}) = V^*. \qquad (6) \end{aligned}$$

As X is assumed to be compact, we can find a subsequence which converges towards an \(x^* \in X\). We can assume for the remainder that \(\lim _{{l}\rightarrow \infty } {x_l} = x^*\). As we consider a finite measure we can find for each \(\delta > 0\) a \(k \in \mathbb {N}\) such that

$$\begin{aligned} V({U}\setminus C_k) \le \delta . \qquad (7) \end{aligned}$$

Now \({\mathcal {K}}(C_k)\cap {\mathcal {W}}\) is, as in the proof above, again a compact set, which implies that for a fixed k the sequence \((W_{l} \cap C_k)_{{l} \in \mathbb {N}}\) has an accumulation point \(W^*_k\). W.l.o.g. we assume that this accumulation point is unique. Otherwise, we switch notations to the corresponding subsequence.

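As \(W_k^*\) is a compact set and V is a finite measure, we conclude using Fatou’s Lemma that

$$\begin{aligned} V(W_k^*) \ge \limsup _{{l} \rightarrow \infty } V(W_{l} \cap C_k). \end{aligned}$$

Because of Eq. (7) we moreover know that \(V(W_{l} \cap C_k) \ge V(W_{l}) -\delta \) for all \({l} \in \mathbb {N}\). Together with Eq. (6) we obtain

$$\begin{aligned} V(W_k^*) \ge \lim _{{l} \rightarrow \infty } V(W_{l}) - \delta = V^* - \delta . \end{aligned}$$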

It is easy to check that \((x^*,W^*)\) is feasible, where we let \(W^* = \bigcup _{k\in {\mathbb {N}}} W^*_{k}\). As \({\mathcal {W}}\) is a \(\sigma \)-algebra we can guarantee \(W^* \in {\mathcal {W}}\) and by the continuity of measures we have \(V(W^*)= \lim _{{l} \rightarrow \infty } V(W_{l}) = V^*\) such that \((x^*,W^*)\) is a maximizer of \(({\mathcal {P}}_\text {IROP})\). \(\square \)

After ensuring the existence of a solution, we can ask which properties of the original problem described by f, g and \({U}\) induce which structure of \(W^*\). One property that we will use later in the discussion of an example problem in Sect. 6 is the inheritance of convexity.

Lemma 3.5

If a given IROP instance has a maximizer \((x^*,W^*)\), the functions \(f(x^*,\cdot )\), \(g(x^*,\cdot )\) are convex w.r.t. \(u \in conv({U})\), where \(conv({U})\) denotes the convex hull of \({U}\), the merit function V satisfies Assumption 3.2, and the cover space satisfies \({\tilde{W}}^* {:}{=}conv(W^*) \cap {U}\in {\mathcal {W}}\), then the decision \((x^*, {\tilde{W}}^*)\) is also a maximizer of the problem.

Proof

Let us denote the optimal solution of the IROP instance as \((x^*,W^*)\). We argue by showing that the choice \((x^*,{\tilde{W}}^*)\) satisfies \(V(W^*) \le V({\tilde{W}}^*)\) and that this choice is feasible w.r.t. the inverse robust constraints.

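By definition we know \(W^* \subseteq {\tilde{W}}^* \subseteq {U}\) and by Assumption 3.2 that implies \(V(W^*) \le V({\tilde{W}}^*)\). In order to prove that \({\tilde{W}}^*\) is feasible, we choose any arbitrary \(u \in {\tilde{W}}^*\). By the definition of \({\tilde{W}}^*\) there exist \(w_1,w_2 \in W^*, \lambda \in [0,1]\) such that

$$\begin{aligned} u = \lambda w_1 + (1-\lambda ) w_2 \end{aligned}$$

holds. Due to the convexity of f w.r.t. \(w \in {U}\) and the feasibility of \(W^*\) we know that

$$\begin{aligned} f(x^*,u) \le \lambda f(x^*,w_1) + (1-\lambda ) f(x^*,w_2) \le f^* + \epsilon \end{aligned}$$

holds as well. Since \(u \in {\tilde{W}}^*\) was chosen arbitrarily we know that

$$\begin{aligned} f(x^*,u) \le f^* + \epsilon \quad \forall u \in {\tilde{W}}^*. \end{aligned}$$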

Analogously we show \(g(x^*,u) \le 0 \ \forall u \in {\tilde{W}}^*\). Furthermore we know that \({\bar{u}}\in W^* \subseteq {\tilde{W}}^*\) and consequently \({\tilde{W}}^*\) is feasible and the claim holds. \(\square \)
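The convexity argument in this proof can be checked numerically in one dimension, where the convex hull of finitely many points is simply the interval between the smallest and the largest point. The sketch below is an illustration only: the functions `u ** 2` and `1 - u ** 2`, the point sets, and the bounds are hypothetical choices, and the grid test is a sampled check rather than a proof.

```python
from typing import Callable, Sequence


def hull_stays_feasible(
    points: Sequence[float],
    h: Callable[[float], float],
    bound: float,
    samples: int = 1001,
) -> bool:
    """Check on a grid whether h(u) <= bound holds on conv(points) = [min, max]."""
    if any(h(w) > bound for w in points):
        raise ValueError("the given points must satisfy the bound themselves")
    lo, hi = min(points), max(points)
    grid = [lo + (hi - lo) * i / (samples - 1) for i in range(samples)]
    # Small tolerance to absorb floating-point rounding at the interval ends.
    return all(h(u) <= bound + 1e-12 for u in grid)


# Convex case: feasibility on the points carries over to the whole interval.
print(hull_stays_feasible([-0.4, 0.1, 0.5], lambda u: u ** 2, 0.25))

# Non-convex case: both end points are feasible, but u = 0 violates the bound.
print(hull_stays_feasible([-0.4, 0.5], lambda u: 1.0 - u ** 2, 0.9))
```

The second call shows why convexity in u cannot simply be dropped from the lemma.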

Next we will show that the continuity of the describing functions f, g w.r.t. u will imply the (relative) closedness of \(W^*\) (w.r.t. \({U}\)).

Lemma 3.6

If a given IROP instance has a maximizer \((x^*,W^*)\), the functions \(f(x^*,\cdot )\), \(g(x^*,\cdot )\) are continuous w.r.t. u, the merit function V satisfies Assumption 3.2, and the cover space satisfies \({\tilde{W}}^* {:}{=}\overline{W^*} \cap {U}\in {\mathcal {W}}\), where \(\overline{W^*}\) denotes the closure of \(W^*\), then the decision \((x^*,{\tilde{W}}^*)\) is also a maximizer of the problem.

Proof

Let us denote the optimal solution of the inverse robust problem as \((x^*,W^*)\). We will argue by showing that the choice \((x^*,{\tilde{W}}^*)\) satisfies \(V(W^*) \le V({\tilde{W}}^*)\) and that this choice is feasible w.r.t. the inverse robust constraints.

By definition we know \(W^* \subseteq {\tilde{W}}^* \subseteq {U}\) and by Assumption 3.2 this implies \(V(W^*) \le V({\tilde{W}}^*)\). Next we show that \({\tilde{W}}^*\) is feasible:

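To do so, we choose any arbitrary \(u \in {\tilde{W}}^*\). By the definition of \({\tilde{W}}^*\) there exists a sequence \((w_{k})_{{k} \in {\mathbb {N}}} \subseteq W^*\) such that

$$\begin{aligned} \lim _{{k} \rightarrow \infty } w_{k} = u \end{aligned}$$

holds. Due to the continuity of f w.r.t. \(w \in {U}\) we know that

$$\begin{aligned} f(x^*,u) = \lim _{{k} \rightarrow \infty } f(x^*,w_{k}) \le f^* + \epsilon \end{aligned}$$

holds as well. Since \(u \in {\tilde{W}}^*\) was chosen arbitrarily we know that

$$\begin{aligned} f(x^*,u) \le f^* + \epsilon \quad \forall u \in {\tilde{W}}^*. \end{aligned}$$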

Analogously we show \(g(x^*,u) \le 0 \ \forall u \in {\tilde{W}}^*\). Furthermore we know that \({\bar{u}}\in W^* \subseteq {\tilde{W}}^*\) and consequently \({\tilde{W}}^*\) is feasible and the claim holds. \(\square \)

Last, but not least, we will specify conditions for the boundedness of \(W^*\). In the following statement we call a function \(f:{\mathbb {R}}^n \rightarrow {\mathbb {R}}\) coercive, if

$$\begin{aligned} \lim _{\Vert x\Vert \rightarrow \infty } f(x) = \infty . \end{aligned}$$

Lemma 3.7

If a given IROP instance has a maximizer \((x^*,W^*)\) and \(h(x^*,\cdot ) {:}{=}\max \{f(x^*,\cdot ), g(x^*,\cdot )\}\) is a coercive function w.r.t. u or \({U}\) is bounded, then the set \(W^*\) is bounded.

Proof

For the sake of contradiction, we assume that \(W^*\) is unbounded. If \({U}\) is bounded, this is a contradiction to \(W^* \subseteq {U}\). If \(h(x^*,\cdot )\) is coercive and \(W^*\) is unbounded, then there exists a sequence \((w_{k})_{{k} \in {\mathbb {N}}}\) such that \(w_{k} \in W^*\) and \(\lim _{{k} \rightarrow \infty } \Vert w_{k}\Vert = \infty \). As \((x^*,W^*)\) is assumed to be a maximizer and therefore is feasible, we conclude that

$$\begin{aligned} h(x^*,w_{k}) \le \max \{f^* + \epsilon , 0\} \quad \forall {k} \in {\mathbb {N}} \end{aligned}$$

holds. This contradicts the coercivity of \(h(x^*,\cdot )\) that guarantees for every sequence \((u_{k})_{{k} \in {\mathbb {N}}} \subseteq {U}\) with \(\Vert u_{k}\Vert \rightarrow \infty \)

$$\begin{aligned} \lim _{{k} \rightarrow \infty } h(x^*,u_{k}) = \infty . \end{aligned}$$

This settles the proof. \(\square \)

4 Choice of cover space

Before we can set up a concrete instance of the inverse robust optimization problem \(({\mathcal {P}}_\text {IROP})\), we need to specify the cover space \({\mathcal {W}}\). This section illustrates some example cover spaces which satisfy Assumption 3.1, such as the whole power set, the Borel-\(\sigma \)-algebra of the uncertainty set, or parameterized families of subsets. These cover spaces can be used together with Theorem 3.3 to guarantee the existence of a solution of \(({\mathcal {P}}_\text {IROP})\).

The whole power set. At first we consider the whole power set \({\mathcal {W}}= 2^{U}\) and show that it satisfies Assumption 3.1. We assume that the uncertainty set \({U}\) is compact. We then know that for an arbitrary \(W \in 2^{U}\) the closure \(\overline{W} \subseteq {U}\) and therefore \(\overline{W} \in {\mathcal {W}}\). This means that the first condition of Assumption 3.1 holds. The second condition

$$\begin{aligned} {\mathcal {K}}({U}) \cap 2^{U}= {\mathcal {K}}({U}) \text { is complete w.r.t. } d_H \end{aligned}$$

holds because \({U}\) is compact (see Hausdorff 1957). The last condition holds because \({\overline{u}}\in {U}\) and therefore \(\{{\overline{u}}\} \in 2^{U}= {\mathcal {W}}\). Consequently, \({\mathcal {W}}= 2^{U}\) satisfies Assumption 3.1 for any compact uncertainty set \({U}\subseteq {\mathbb {R}}^m\). Because the power set is in a sense big enough to contain a solution for \(({\mathcal {P}}_\text {IROP})\), it is not surprising that it satisfies Assumption 3.1. One application of the whole powerset can be in the discrete case, where \({U}\) is finite. Then one could wish to maximize the number of scenarios covered. In the next steps we gradually decrease the size of the cover space.
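For the discrete case mentioned above, with the power set as cover space and the number of covered scenarios as merit function, the largest feasible set for a fixed x consists of all scenarios that satisfy the budget and feasibility constraints, because the merit function is monotone. The following Python sketch enumerates a grid of candidate decisions under that observation. The functions `f` and `g`, the scenario list, and the grid are hypothetical toy data and are not taken from the article.

```python
from typing import Callable, List, Optional, Sequence, Tuple


def best_discrete_cover(
    xs: Sequence[float],
    scenarios: Sequence[float],
    nominal: float,
    f: Callable[[float, float], float],
    g: Callable[[float, float], float],
    f_star: float,
    eps: float,
) -> Tuple[Optional[float], List[float]]:
    """Brute-force search for the decision x that covers the most scenarios."""
    best_x: Optional[float] = None
    best_w: List[float] = []
    for x in xs:
        # Largest admissible set for this x: every scenario meeting both constraints.
        w = [u for u in scenarios if f(x, u) <= f_star + eps and g(x, u) <= 0.0]
        # The nominal scenario must be covered; keep the first strict improvement.
        if nominal in w and len(w) > len(best_w):
            best_x, best_w = x, w
    return best_x, best_w


# Hypothetical toy instance: minimize x subject to u <= x, nominal scenario 0.
x_grid = [k / 10 for k in range(11)]
x_best, w_best = best_discrete_cover(
    xs=x_grid,
    scenarios=[0.0, 0.2, 0.5, 0.9],
    nominal=0.0,
    f=lambda x, u: x,
    g=lambda x, u: u - x,
    f_star=0.0,
    eps=0.5,
)
print(x_best, w_best)
```

In this toy instance the scenario 0.9 is left out because covering it would exceed the budget of 0.5.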

Borel-\(\sigma \)-algebra. A more suitable choice, especially if we want to consider measures, is a \(\sigma \)-algebra. We are interested in the cover space \({\mathcal {W}}= {\mathcal {B}}({U})\) where \({\mathcal {B}}({U})\) denotes the Borel-\(\sigma \)-algebra on the closed set \({U}\).

By definition the Borel-\(\sigma \)-algebra contains all closed subsets of \({U}\), especially all compact sets and \(\{{\overline{u}}\}\). This means that the first and last condition of Assumption 3.1 hold if \({U}\) is a closed set. As the Borel-\(\sigma \)-algebra on a closed set contains also all compact sets, the completeness condition then also follows. This means that for a closed set \({U}\) Assumption 3.1 is satisfied.

The additional assumptions of Theorem 3.4 on a cover space \({\mathcal {W}}\) also hold for the Borel-\(\sigma \)-algebra. As the sequence \((C_k)_{k \in \mathbb {N}}\) of compact sets, one can take the closed balls around the nominal scenario \({\overline{u}}\) with increasing radius \(k\in \mathbb {N}\).

Sets described by continuous inequality constraints Another step towards a numerically more controllable cover space is done by considering

In this cover space, each element is described by a continuous inequality constraint on \({U}\). Specifying

we can guarantee that \(W(\delta _{{\overline{u}}}) = \{{\overline{u}}\}\) is in \({\mathcal {W}}\). Furthermore, the inclusion \({\mathcal {K}}({U}) \subseteq {\mathcal {W}}\) holds as for any compact set \(A \in {\mathcal {K}}({U})\) the distance function

is continuous. Because for a compact A the points satisfying \(\delta _A(u) \le 0\) are exactly the points \(u \in A\), we can conclude that \(A = W(\delta _A) \in {\mathcal {W}}\) holds for an arbitrary \(A \in {\mathcal {K}}({U})\).

Consequently, \({\mathcal {W}}\) satisfies Assumption 3.1.

Sets described by a family of continuous inequality constraints Last, but not least, we consider cover spaces that are induced by elements of a design space \(D \subseteq {\mathbb {R}}^q\). Using so-called design variables \(d \in D\), we focus on the cover space induced by the sets

where \(v(\cdot ,d): {U}\rightarrow {\mathbb {R}}\) is a continuous function w.r.t. \(u \in {U}\) for all \(d \in D\). Consequently, all sets W(d) are closed for any \(d \in D\), so that the first condition of Assumption 3.1 is fulfilled by construction. The other two conditions do not hold automatically; they depend on the choice of the function v and the set D. As a simple positive example, consider \(v(u,d) = \Vert u-{\overline{u}}\Vert - d\) for an arbitrary norm \(\Vert \cdot \Vert \). This way the function v induces the elements \(W(d) = B_d({\overline{u}}):= \{u \in {\mathbb {R}}^m \mid \Vert u-{\overline{u}}\Vert \le d\}\) for \(d \in D\). The choice \(d = 0\) ensures \(\{{\overline{u}}\} \in {\mathcal {W}}\). Choosing \(D=[0,r]\) for some \(r \ge 0\) then ensures that \({\mathcal {W}}\) satisfies Assumption 3.1. Ellipsoidal sets can be covered analogously.
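The ball-shaped cover sets can be illustrated with a minimal Python sketch. It assumes the Euclidean norm (the text allows any norm) and a two-dimensional nominal scenario; the names `v`, `in_cover` and the sample points are chosen here for illustration only.

```python
import math

def v(u, d, u_bar):
    """Describing function v(u, d) = ||u - u_bar|| - d (Euclidean norm assumed)."""
    return math.dist(u, u_bar) - d

def in_cover(u, d, u_bar):
    """u lies in W(d) = B_d(u_bar) exactly when v(u, d) <= 0."""
    return v(u, d, u_bar) <= 0.0

u_bar = (1.0, 2.0)  # hypothetical nominal scenario

# d = 0 yields the singleton {u_bar}: the nominal scenario is covered, a nearby point is not.
only_nominal = in_cover(u_bar, 0.0, u_bar) and not in_cover((1.0, 2.1), 0.0, u_bar)

# A larger design value d covers more scenarios.
nested = in_cover((1.0, 2.1), 0.5, u_bar)
```

Replacing `math.dist` by another norm, or by a weighted norm for ellipsoids, changes only the function `v`.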

However, it is possible to construct examples where there exists no solution to \(({\mathcal {P}}_\text {IROP})\). Consider, for example, \({U}=[-1,1]\), \({\overline{u}}=0\), \(D=[0,1]\) and

We then have \(W(d)=[-d,0]\) for all \(d \in [0,1)\), whereas for \(d=1\) one obtains \(W(1)=[-1,1]\). Let \(X=[-1,1]\), let the objective function be \(f(x,u){:}{=}x\) and the constraint be \(g(x,u){:}{=}0.5-x+u\), and consider the corresponding inverse robust problem. Here, we can find a feasible point (x, d) for every \(d \in [0,1)\). If we take the length of the interval W(d) as a merit function, we are interested in the choice \(d = 1\). Because (x, 1) is infeasible for every \(x \in [-1,1]\), there exists no solution to \(({\mathcal {P}}_\text {IROP})\).
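The missing maximizer can be made tangible with a small Python sketch. It hard-codes the sets W(d) exactly as described in the text (the describing function v itself is not reproduced), checks robust feasibility of the constraint at the worst-case scenario, and uses the nominal optimum x = 0.5, which meets the budget constraint for every budget of at least zero. The grid over X and the sequence of design values are assumptions made for illustration.

```python
def cover(d):
    """Interval W(d) from the text: [-d, 0] for d < 1 and [-1, 1] for d = 1."""
    return (-d, 0.0) if d < 1.0 else (-1.0, 1.0)

def g(x, u):
    return 0.5 - x + u

def robust_feasible(x, d):
    lo, hi = cover(d)
    # g is increasing in u, so the worst case over W(d) is the right endpoint.
    return g(x, hi) <= 0.0

def length(d):
    lo, hi = cover(d)
    return hi - lo

# Grid over X = [-1, 1]; no grid point is robustly feasible for d = 1.
xs = [-1.0 + 2.0 * i / 200 for i in range(201)]
feasible_at_1 = any(robust_feasible(x, 1.0) for x in xs)

# For d -> 1 from below the point x = 0.5 stays feasible and the length tends to 1.
ds = [1.0 - 10.0 ** (-k) for k in range(1, 8)]
lengths = [length(d) for d in ds if robust_feasible(0.5, d)]
```

The feasible lengths approach 1 without reaching it, while the only design that would do better, d = 1, admits no feasible x.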

This is not surprising. The choice of a finite dimensional design space \(D \subseteq {\mathbb {R}}^q\) with \(q \in \mathbb {N}\) reduces the inverse robust optimization problem to a general semi-infinite problem (GSIP) as we can rewrite \(({\mathcal {P}}_\text {IROP})\) in this case as:

For GSIP it is well known that the solution might not exist. For a more detailed discussion we refer to Stein (2003). A survey of GSIP solution methods is given in Stein (2012).

One possibility to ensure the existence of a solution and to design discretization methods is to assume the existence of a fixed compact set \(Z\subseteq {\mathbb {R}}^{{\tilde{m}}}\) and a continuous transformation map \(t: D\times Z \rightarrow {\mathbb {R}}^m\) such that for every \(d \in D\) we have

In this case the GSIP reduces to a standard semi-infinite optimization problem and a solution can be guaranteed by assuming compactness of X. This idea is used by the transformation based discretization method introduced in Schwientek et al. (2020).
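The effect of such a transformation can be sketched in Python for interval-shaped cover sets. The choices Z = [-1, 1], t(d, z) = u_bar + d z and the toy constraint g(x, u) = u - x are assumptions for illustration and are not taken from the article. Because Z no longer depends on d, a single fixed grid on Z discretizes every set W(d).

```python
U_BAR = 0.0                                        # hypothetical nominal scenario
Z_GRID = [-1.0 + 2.0 * i / 100 for i in range(101)]  # fixed discretization of Z = [-1, 1]

def t(d, z):
    """Transformation map with t(d, Z) = [U_BAR - d, U_BAR + d] = W(d)."""
    return U_BAR + d * z

def g(x, u):
    """Toy constraint g(x, u) = u - x <= 0 (hypothetical)."""
    return u - x

def feasible_discretized(x, d):
    # The index set Z_GRID is the same for every design d: a standard
    # semi-infinite constraint, discretized on a fixed grid.
    return all(g(x, t(d, z)) <= 0.0 for z in Z_GRID)
```

For this toy constraint the discretized check agrees with the exact condition x >= U_BAR + d, because the grid contains the endpoint z = 1.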

5 Comparison to other robustness approaches

As we have pointed out in the introduction, there exist several concepts similar to inverse robustness. Here we briefly discuss how the stability radius, the resilience radius and the radius of robust feasibility fit into the context of inverse robustness.

5.1 Stability radius and resilience radius

The stability radius measures, for a fixed solution, how much the uncertain parameter can deviate from a nominal value while the solution remains (almost) optimal. There are many publications regarding the stability radius in the context of (linear) optimization. For an overview, we refer to Weiß (2016).

Let \(\bar{x} \in {\mathbb {R}}^n\) denote an optimal solution to a parameterized optimization problem with fixed parameter \({\bar{u}}\in {U}\) of the form

where the set of feasible solutions is denoted by \(X \subseteq {\mathbb {R}}^n\). The solution \(\bar{x}\) is called stable if there exists a \(\rho > 0\) such that \(\bar{x}\) is \(\epsilon \)-optimal, i.e. \(f(\bar{x}, u) \le f(x, u) + \epsilon \) for all feasible solutions \(x\in X\) with an \(\epsilon \ge 0\), for all uncertainty scenarios \(u\in B_\rho (\bar{u})\). The stability radius is given as the largest such value \(\rho \). Altogether, it can be calculated for a given solution \(\bar{x} \in X\) and a budget \(\epsilon \ge 0\) by

To compare this concept with the concept of inverse robustness, we let the uncertainty set be \({U}{:}{=}{\mathbb {R}}^m\) and define \({{\mathcal {W}}{:}{=}\{ W(d) {:}{=}B_d({\bar{u}}) \mid d \in [0, \infty )\}}\). Instead of considering the volume vol(W(d)), we can simply use the radius as merit function \(V(W(d))=d\).

Further, we consider the so-called regret (see e.g. Inuiguchi and Sakawa 1995). For a scenario \(u\in {U}\) let \(f^*(u) {:}{=}\min _{x\in X} f(x,u)\). If we now take \(f(x,u)-f^*(u)\) as objective function and consider a budget \(\epsilon >0\), then we obtain the following inverse robust optimization problem:

The difference to the problem \((\mathcal {P}_{\text {SR}})\) is that we no longer consider a fixed point \(\bar{x}\), but allow the problem to find a point such that the regret is minimal. If we denote the optimal solution of \((\mathcal {P}_{\text {SR}})\) by \(\rho ^*\) and the optimal solution of \((\mathcal {P}_{\text {IROP,SR}})\) by \(x^*,d^*\) we obtain the following inequality:

To obtain an equality one could replace the feasible set X by a set containing only the nominal solution \(\bar{x}\). In this case we can exactly model the problem \((\mathcal {P}_{\text {SR}})\) as an inverse robust optimization problem.
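The relation between the two radii can be checked numerically on a toy instance. The following Python sketch assumes a two-element feasible set X = {0, 1}, a hypothetical objective `f`, the nominal scenario 0, the budget 0.15 and a radius cap `R_MAX` (in this instance the inverse robust radius is unbounded). It approximates each radius on a grid and is meant as an illustration under these assumptions, not as the authors' method.

```python
X = [0, 1]                           # hypothetical two-element feasible set
U_BAR, EPS, R_MAX = 0.0, 0.15, 2.0   # nominal scenario, budget, radius cap

def f(x, u):
    """Hypothetical objective: x = 0 is optimal at u = 0, x = 1 is flatter in u."""
    return abs(u) if x == 0 else 0.1 + 0.2 * abs(u)

def regret(x, u):
    """Regret f(x, u) - f*(u) with f*(u) = min over X."""
    return f(x, u) - min(f(y, u) for y in X)

def largest_radius(x, steps=400, samples=200, tol=1e-12):
    """Largest grid radius r with regret(x, u) <= EPS on [U_BAR - r, U_BAR + r]."""
    best = 0.0
    for i in range(steps + 1):
        r = R_MAX * i / steps
        ball = [U_BAR - r + 2.0 * r * j / samples for j in range(samples + 1)]
        if any(regret(x, u) > EPS + tol for u in ball):
            break
        best = r
    return best

x_bar = min(X, key=lambda x: f(x, U_BAR))    # nominal optimum
rho_star = largest_radius(x_bar)             # stability radius of the fixed x_bar
d_star = max(largest_radius(x) for x in X)   # inverse robust radius over all x
```

In this instance the grid value of `rho_star` lies slightly below the exact stability radius 0.3125, while `d_star` hits the cap because the decision x = 1 never exceeds the regret budget; allowing the decision to change can therefore only enlarge the radius.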

While the stability radius compares a fixed decision \(\bar{x}\) with all other feasible choices \(x \in X\), the resilience radius allows changing the former optimal decision to gain feasibility. For an introduction to this topic we again refer to Weiß (2016). Given a budget w.r.t. the objective value, the resilience radius searches for the biggest ball centered at a given uncertainty scenario that satisfies feasibility with respect to the original problem. If we denote the optimal solution of a parameterized optimization problem with fixed parameter \(\bar{u}\) again by \(\bar{x}\), then \(\bar{x}\) is called B-feasible for some budget \(B \in {\mathbb {R}}\) and some scenario \(u \in {U}\) if \({f(\bar{x},u)}\) is lower than B.

Then, the resilience ball of a B-feasible solution \(\bar{x}\) around a fixed scenario \({\bar{u}}\in {U}\) is defined as the ball with the largest radius \(\rho \ge 0\) such that \(\bar{x}\) is B-feasible for all scenarios in this ball. Finally, the resilience radius is the biggest radius of a resilience ball around some \(x \in X\) and can be calculated by solving the following optimization problem:

Letting, as above, \({\mathcal {W}}{:}{=}\{ W(d) {:}{=}B_d({\bar{u}}) \mid d \in [0, \infty )\}\) and \(V(W(d))=d\), the problem is directly equivalent to the following inverse robust optimization problem:

where \(\epsilon {:}{=}B-f(\bar{x},\bar{u})\).

5.2 Radius of robust feasibility

The radius of robust feasibility is a measure on the maximal ’size’ of an uncertainty set under which one can ensure the feasibility of the given optimization problem. It is discussed for example in the context of convex programs (Goberna et al. 2016), linear conic programs (Goberna et al. 2021) and mixed-integer programs (Liers et al. 2021). For a recent survey on the radius of robust feasibility, we refer to Goberna et al. (2022).

The radius of robust feasibility \(\rho _{RFF}\) is defined as

where

with \(U_\alpha {:}{=}(\bar{A},\bar{b}) + \alpha Z\) for nominal values \(\bar{A}\in {\mathbb {R}}^{m\times n}, \bar{b}\in {\mathbb {R}}^{m}\) and Z being a compact and convex set. Since we are only interested in the feasibility of \((\text {PR}_\alpha )\), we can replace its objective function by 0. Therefore, given a fixed, convex, compact set Z we can compute the radius of robust feasibility by solving the following optimization problem:

with \(U_\alpha {:}{=}(\bar{A},\bar{b}) + \alpha Z\). To compare this concept to the concept of inverse robustness, we define \(W(d) {:}{=}{\bar{u}}+ dZ\) as subsets of \({U}{:}{=}{\mathbb {R}}^{mn + m}\) characterized by \(d \in D {:}{=}[0, \infty )\). Furthermore, we let \({\mathcal {W}}{:}{=}\{W(d)\mid d \in [0,\infty )\}\) and use the merit function \(V(W(d)) {:}{=}d\). Since we do not consider an objective function, we drop the budget constraint (or have the always satisfied constraint \(0 \le 0+\epsilon \)). Thus, given a nominal scenario \({\bar{u}}{:}{=}(\bar{A},\bar{b}) \in {U}\) and a function \(g(x, (A,b)) {:}{=}Ax - b\), we obtain the inverse robust problem

We see that this way to calculate the radius of robust feasibility can be interpreted as a special inverse robust optimization problem, where we are searching for sets of the form \({\bar{u}}+ \alpha Z\) and where we are not interested in the budget constraint. The radius of robust feasibility allows us to analyze problems without any pre-defined values such as the given budget \(\epsilon \ge 0\) or the nominal objective value \(f^*\). However, the fixed structure of the set Z is rather restrictive, and we do not know how the objective value of a solution x with a large radius \(\alpha \) deviates from the nominal objective value.
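For a one-dimensional toy system, the radius of robust feasibility can be approximated by bisection on \(\alpha \). The Python sketch below assumes the nominal constraints x <= 1 and -x <= 0, the box Z = [-1, 1]^4 acting independently on every entry of (A, b), and a grid search over x; all of these are illustrative assumptions. For a scalar decision, the worst case of a row (a, b) over the box is a x + alpha |x| - b + alpha.

```python
ROWS = [(1.0, 1.0), (-1.0, 0.0)]          # nominal rows (a_i, b_i): x <= 1 and -x <= 0
X_GRID = [i / 1000 for i in range(1001)]  # robustly feasible x must be nominally feasible, so x in [0, 1]

def worst_case(a, b, x, alpha):
    """Maximum of (a + alpha*za)*x - (b + alpha*zb) over |za| <= 1, |zb| <= 1."""
    return a * x + alpha * abs(x) - b + alpha

def robust_feasible(alpha, tol=1e-12):
    """True if some grid point x satisfies every row for all scenarios in U_alpha."""
    return any(all(worst_case(a, b, x, alpha) <= tol for a, b in ROWS)
               for x in X_GRID)

def radius_of_robust_feasibility(lo=0.0, hi=1.0, iters=50):
    """Bisection on alpha; valid because the sets U_alpha are nested (0 lies in Z)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if robust_feasible(mid):
            lo = mid
        else:
            hi = mid
    return lo

rho = radius_of_robust_feasibility()
```

For this instance a hand calculation gives the feasible interval [alpha/(1-alpha), (1-alpha)/(1+alpha)] for x, which is non-empty exactly for alpha <= 1/3; the bisection reproduces this value up to the tolerance.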

6 A bi-criteria problem

We have seen that some already existing concepts can be interpreted as specific inverse robust problems, such as the resilience radius and the radius of robust feasibility. On the one hand, the concept of inverse robustness unites these approaches into a bigger framework allowing questions to be answered in a more generic context. On the other hand, new problem formulations arise easily as we will see in this final example. To this end, we consider a bi-criteria optimization problem with an inequality constraint as the original problem. We assume that both objectives as well as the constraint are influenced by an uncertainty for which we have distributional information. In detail, we focus on the problem

Please note that the constraint is linear with respect to the decision parameter \(x \in {\mathbb {R}}\), but nonlinear in the uncertainty \(u \in {U}\), such that a solution for a nominal scenario can be easily computed, while the analysis of the behavior with respect to the uncertainty is not trivial. Fixing the nominal scenario \({\overline{u}}= 0\), we can compute the Pareto-front \(F^*\) as

After considering the original problem using a fixed nominal scenario, we now state the inverse robust problem. We allow a generic budget \(\epsilon = (\epsilon _1,\epsilon _2)^\top \in {\mathbb {R}}_{\ge 0}^2\) and fix a point on the Pareto-front, i.e. \(f^* = (-2,4)^\top \in F^*\).

Additionally, we assume that our uncertainty is given by a normally distributed random variable \(u \sim {\mathcal {N}}(0,1)\). Consequently, we focus on the cover space \({\mathcal {W}}={\mathcal {B}}({\mathbb {R}})\), where \({\mathcal {B}}({\mathbb {R}})\) denotes the \(\sigma \)-algebra of Borel-measurable sets of \({\mathbb {R}}\). We want to maximize the probability of uncertainties we can handle while not losing more than \(\epsilon \) from our solution \(f^*\), which leads to:

As this formulation is numerically challenging, we use the statements from Sect. 3 to reformulate it. Although all statements are formulated for only one objective, it is easy to check that they carry over to the case of multiple objectives and can be used to investigate the present example.

As \(f_1\) is decreasing and \(f_2\) is increasing w.r.t. x, we can substitute \(X ={\mathbb {R}}\) by a compact interval \({\tilde{X}}\) depending on the budget \(\epsilon \). By Theorem 3.4, an optimal solution \((x^*,W^*)\) then exists and we can replace the supremum of the last problem by a maximum.

As \({\mathcal {B}}({\mathbb {R}})\) is too large as a search space, we substitute it by the set of intervals \(W(d) {:}{=}[d_1,d_2]\) defined by elements of the design space \(D {:}{=}\{ d \in {\mathbb {R}}^2 \mid d_1 \le d_2\}\). Since \(f_1(x,\cdot ), f_2(x,\cdot ), g(x,\cdot ) \) are convex functions w.r.t. \(u \in {\mathbb {R}}\) for any \(x \in {\mathbb {R}}\), we can use Lemma 3.5. As the describing functions \(f_1,f_2,g\) are continuous w.r.t. u we can use Lemma 3.6 and by Lemma 3.7 we focus on a bounded solution set as \(h(x,u) = \max \{f_1(x,u),f_2(x,u),g(x,u)\}\) is a coercive function w.r.t. u for any arbitrary \(x \in {\mathbb {R}}\). Consequently, the choice of designs \(W(d) = [d_1,d_2], d_1,d_2 \in {\mathbb {R}}\) to search for a convex, closed, bounded set in \({\mathbb {R}}\) is appropriate and leads to the same solution as considering all \(W \in {\mathcal {B}}({\mathbb {R}})\).

We collect this simplification in the following proposition; a proof is given in the Appendix.

Proposition 6.1

(Reduced problem reformulation) The inverse robust example problem can be simplified to the reduced inverse robust example problem given as:

Furthermore, this problem is a convex optimization problem w.r.t. \((x,d)^\top \in X \times D\) and has a solution for all \(\epsilon \in {\mathbb {R}}^2_{\ge 0}\).

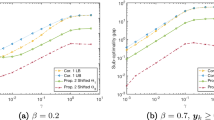

The solutions corresponding to the budgets \(\epsilon _i \in \{0, 0.5, 1,\ldots ,5\}, i=1,2\) are visualized in Fig. 1 where the optimal objective values of \(({\mathcal {P}}_{\text {red}})\) are shown. We see that without budget (meaning \(\epsilon _1=\epsilon _2 = 0\)) the solution does not allow any uncertainty, i.e. \(W(d^*) = \{0\}\). If we allow deviations from the nominal values \(f_1^*\) or \(f_2^*\), we gain more robustness by increasing \(\epsilon _1\) at first. For each \(\epsilon _2\) there is an \(\epsilon _1'\) such that for \(\epsilon _1 \ge \epsilon _1'\) the solution does not change anymore. A proof of this can be found in Proposition A.1 in the “Appendix”. For larger \(\epsilon _2\) the objective value converges towards \({\mathbb {P}}(u\le 1) \approx 0.841\). We can understand this as on the one hand the decision

is feasible for \(({\mathcal {P}}_{\text {red}})\) with the budgets \(\epsilon _1=0\) and \(\epsilon _{2,k} = -2k(1-\exp (1-\frac{1}{k}))+k\) for large \(k \in \mathbb {N}\). On the other hand, \({\mathbb {P}}(u\le 1)\) is an upper bound for IROP by the definition of the equivalent problem \(({\mathcal {P}}_{\text {red}})\). Consequently, the objective value has to converge towards \({\mathbb {P}}(u\le 1)\) for \(\epsilon _2 \rightarrow \infty \).
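The convergence towards \({\mathbb {P}}(u\le 1)\) can be checked numerically. The Python sketch below uses the constraints of the reduced problem as they appear in (A4), (A5) and the feasibility constraint of the Appendix, with the budgets added on the right-hand sides. The displayed decision is not reproduced in this text, so `candidate` is one choice that is consistent with the stated budgets and should be read as an assumption: d_2 = 1 - 1/k, d_1 = -k and x chosen such that the feasibility constraint is active.

```python
import math

def Phi(t):
    """Standard normal distribution function P(u <= t)."""
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))

def candidate(k):
    """Assumed sequence of decisions (x_k, d_1, d_2) for k >= 3."""
    d2 = 1.0 - 1.0 / k
    x = (math.exp(d2) - 1.0) / (1.0 - d2)   # makes the feasibility constraint active
    return x, -float(k), d2

def eps2(k):
    """Budget from the text: eps_{2,k} = -2k(1 - exp(1 - 1/k)) + k."""
    return -2.0 * k * (1.0 - math.exp(1.0 - 1.0 / k)) + k

def is_feasible(x, d1, d2, eps1, eps2_value, tol=1e-9):
    return (-x + d2 <= -2.0 + eps1 + tol                    # first budget constraint
            and 2.0 * x - d1 <= 4.0 + eps2_value + tol      # second budget constraint
            and x * (d2 - 1.0) + math.exp(d2) - 1.0 <= tol  # feasibility constraint
            and d1 <= 0.0 <= d2 and x >= 0.0)

def objective(d1, d2):
    """Probability mass of the interval [d1, d2] under N(0, 1)."""
    return Phi(d2) - Phi(d1)

values = []
for k in (3, 10, 100, 1000):
    x, d1, d2 = candidate(k)
    if is_feasible(x, d1, d2, 0.0, eps2(k)):
        values.append(objective(d1, d2))
```

The collected objective values increase with k and stay below `Phi(1.0)`, in line with the upper bound discussed above.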

Some of the optimal solution sets \(W^*\) and the robustified decisions \(x^*\) can be seen in Figs. 2 and 3 for different values of \(\epsilon \). In contrast to the resilience ball and stability radius approach, the solution sets of this inverse robust problem do not satisfy an ordering w.r.t. \(\subseteq \) if \(\epsilon \) increases component-wise. This behavior can be seen in Fig. 2 and is caused by a change of the decision \(x^*(\epsilon )\). In return, higher objective values are achievable.

7 Conclusion

Given a parameterized optimization problem, a corresponding nominal scenario, and a budget, one can ask for a solution that is close to optimal with respect to the objective function value of the nominal optimization problem, while being feasible for as many scenarios as possible.

In this article, we introduced an optimization problem to compute the best coverage of a given uncertainty set. In Sect. 2 we introduced the inverse robust optimization problem (IROP) and discussed some differences to worst-case robustness using two simple examples. In the next section we then investigated the existence of solutions and some structural properties of the solutions. In Sect. 4 we discussed different cover spaces that satisfy the assumptions needed for the given structural results of Sect. 3. After comparing IROP with the stability radius, the resilience radius, and the radius of robust feasibility in Sect. 5, we provided an example in Sect. 6 that demonstrates the flexibility of the concept of inverse robustness.

This flexibility could be investigated in future research in the light of other robustness concepts. Interesting examples are a comparison to Gamma-robustness or the application of the approach to adjustable robustness. As the last example showed, it should in principle also be possible to apply the concept in a multicriteria setting. In Sect. 2 we discussed the differences to worst-case robust optimization using two simple examples. In future research it will be interesting to compare worst-case robust optimization and inverse robustness using real-world examples.

Availability of data and materials

Not applicable.

Code Availability

Not applicable.

Notes

In the set of subsets of \({\mathbb {R}}^m\) using the topology induced by the Hausdorff-metric, see Hausdorff (1957).

References

Ben-Tal A, Goryashko A, Guslitzer E, Nemirovski A (2004) Adjustable robust solutions of uncertain linear programs. Math Program 99(2):351–376

Ben-Tal A, El Ghaoui L, Nemirovski A (2009) Robust optimization. Princeton series in applied mathematics, vol 28. Princeton University Press, Princeton

Bertsimas D, Brown DB (2009) Constructing uncertainty sets for robust linear optimization. Oper Res 57(6):1483–1495

Bertsimas D, Sim M (2003) Robust discrete optimization and network flows. Math Program 98(1–3):49–71

Bertsimas D, Sim M (2004) The price of robustness. Oper Res 52(1):35–53

Bertsimas D, Pachamanova D, Sim M (2004) Robust linear optimization under general norms. Oper Res Lett 32(6):510–516

Bertsimas D, Brown DB, Caramanis C (2011) Theory and applications of robust optimization. SIAM Rev 53(3):464–501

Blanquero R, Carrizosa E, Hendrix EM (2011) Locating a competitive facility in the plane with a robustness criterion. Eur J Oper Res 215(1):21–24

Carrizosa E, Nickel S (2003) Robust facility location. Math Methods Oper Res 58(2):331–349

Carrizosa E, Ushakov A, Vasilyev I (2015) Threshold robustness in discrete facility location problems: a bi-objective approach. Optim Lett 9(7):1297–1314

Chassein A, Goerigk M (2018) Variable-sized uncertainty and inverse problems in robust optimization. Eur J Oper Res 264(1):17–28

Chassein A, Goerigk M (2018) Compromise solutions for robust combinatorial optimization with variable-sized uncertainty. Eur J Oper Res 269(2):544–555

Ciligot-Travain M, Traoré S (2014) On a robustness property in single-facility location in continuous space. TOP 22(1):321–330

Fischetti M, Monaci M (2009) Light robustness. In: Ahuja RK, Möhring RH, Zaroliagis CD (eds) Robust and online large-scale optimization: models and techniques for transportation systems. Springer, Berlin, pp 61–84

Goberna MA, Jeyakumar V, Li G, López MA (2013) Robust linear semi-infinite programming duality under uncertainty. Math Program 139(1):185–203

Goberna MA, Jeyakumar V, Li G, Linh N (2016) Radius of robust feasibility formulas for classes of convex programs with uncertain polynomial constraints. Oper Res Lett 44(1):67–73

Goberna MA, Jeyakumar V, Li G (2021) Calculating radius of robust feasibility of uncertain linear conic programs via semi-definite programs. J Optim Theory Appl 54:1–26

Goberna MA, Jeyakumar V, Li G, Vicente-Pérez J (2022) The radius of robust feasibility of uncertain mathematical programs: a survey and recent developments. Eur J Oper Res 296(3):749–763

Gorissen BL, Yanıkoğlu İ, den Hertog D (2015) A practical guide to robust optimization. Omega 53:124–137

Halmos PR (1950) Measure theory. Graduate texts in mathematics, vol 18. Springer, New York

Hausdorff F (1957) Set theory. Chelsea Press, New York

Inuiguchi M, Sakawa M (1995) Minimax regret solution to linear programming problems with an interval objective function. Eur J Oper Res 86(3):526–536

Labbé M, Thisse J-F, Wendell RE (1991) Sensitivity analysis in minisum facility location problems. Oper Res 39(6):961–969

Leyffer S, Menickelly M, Munson T, Vanaret C, Wild SM (2020) A survey of nonlinear robust optimization. INFOR Inf Syst Oper Res 58(2):342–373

Liers F, Schewe L, Thürauf J (2021) Radius of robust feasibility for mixed-integer problems. INFORMS J Comput 34:243–261

López M, Still G (2007) Semi-infinite programming. Eur J Oper Res 180(2):491–518

Schöbel A (2014) Generalized light robustness and the trade-off between robustness and nominal quality. Math Methods Oper Res 80(2):161–191

Schwientek J, Seidel T, Küfer K-H (2020) A transformation-based discretization method for solving general semi-infinite optimization problems. Math Methods Oper Res 93(1):83–114

Stein O (2003) Bi-level strategies in semi-infinite programming. Nonconvex optimization and its applications, vol 71. Springer, New York

Stein O (2012) How to solve a semi-infinite optimization problem. Eur J Oper Res 223(2):312–320

Stein O, Still G (2003) Solving semi-infinite optimization problems with interior point techniques. SIAM J Control Optim 42(3):769–788

Vázquez FG, Rückmann J-J, Stein O, Still G (2008) Generalized semi-infinite programming: a tutorial. J Comput Appl Math 217(2):394–419

Weiß C (2016) Scheduling models with additional features—synchronization, pliability and resiliency. Ph.D. thesis, University of Leeds

Yanıkoğlu İ, Gorissen BL, den Hertog D (2019) A survey of adjustable robust optimization. Eur J Oper Res 277(3):799–813

Funding

Open Access funding enabled and organized by Projekt DEAL.

Author information

Contributions

All authors contributed in the conceptualization and writing to the presented research. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Appendix A: Properties of Example 6

Proof of Proposition 6.1

As discussed in Sect. 6 it is enough to consider bounded intervals. Thus, we know that the problem is equivalent to

This problem can be reformulated by computing the maxima within the budget and feasibility constraints

To determine the maximal argument in the feasibility constraint, we used the identity \(\partial _u g(x,u) = x + \exp (u)\) and the fact that \(0 \in [d_1,d_2]\) implies that \(g(x,0) = -x \le 0\) is a necessary condition for a feasible choice of x. Therefore \(\partial _u g(x,u) > 0\) holds for all feasible choices of x and \(u \in {\mathbb {R}}\). In a last step, we obtain the maximizer \(d_2\) by considering \(g(x,u) = g(x,d_1) + \int _{d_1}^u \partial _u g(x,w) \, dw\).
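The monotonicity argument can be written out explicitly. The closed form of g used here is inferred from the two facts stated above, \(\partial _u g(x,u) = x + \exp (u)\) and \(g(x,0) = -x\), and should be read as a reconstruction under that assumption:

$$\begin{aligned} g(x,u)&= g(x,0) + \int _0^u \bigl (x + \exp (w)\bigr ) \, dw = -x + xu + \exp (u) - 1 = x(u-1) + \exp (u) - 1,\\ \max _{u \in [d_1,d_2]} g(x,u)&= g(x,d_2) = x(d_2-1) + \exp (d_2) - 1 \quad \text {for } x \ge 0. \end{aligned}$$

The second line uses that \(g(x,\cdot )\) is strictly increasing whenever \(x \ge 0\), so the maximum over the interval is attained at its right endpoint.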

The last constraint also shows that \(d_2 \le 1\). Otherwise if \(d_2>1\) we would violate the feasibility constraint with \(x\ge 0\) via

This means that we receive the following equivalent problem

The objective function is concave in \(d_1 \in (-\infty ,0]\) and \(d_2\in [0,\infty )\). The nonlinear constraint is convex in x and \(d_2\), as \(x \ge 0\) and \(d_2 \in [0,1]\). Since all other constraints are linear w.r.t. \((x,d) \in {\mathbb {R}}^3\), the reduced problem is a convex optimization problem. The existence of a solution is guaranteed by Theorem 3.4. \(\square \)

For the following proposition, denote for a given budget \(\epsilon \) the optimal solution of the reduced problem \(({\mathcal {P}}_{\text {red}})\) by \(x^*(\epsilon ),d_1^*(\epsilon )\) and \(d_2^*(\epsilon )\).

Proposition A.1

(Behavior w.r.t. increasing budgets) Fixing \(\epsilon _0 {:}{=}(0,0)^\top \) leads to the solution \(x^* = 2, d^* = (0,0)^\top \) and therefore \(V(W(d^*(\epsilon _0))) = 0\). For any fixed \(\epsilon _1 \ge 0\) we get:

- \(\lim _{\epsilon _2 \rightarrow \infty } x^*(\epsilon ) = \infty \),
- \(\lim _{\epsilon _2 \rightarrow \infty } d^*_1(\epsilon ) = -\infty \),
- \(\lim _{\epsilon _2 \rightarrow \infty } d_2^*(\epsilon ) = 1\).

For any fixed \(\epsilon _2 \ge 0\) and \(\epsilon _1 \ge {\bar{\epsilon }}_1 {:}{=}3\) the second budget constraint and the feasibility constraint are active. Since the feasibility constraint is independent of \(\epsilon \), it will not change w.r.t. an increasing budget and therefore we obtain

- \(\lim _{\epsilon _1 \rightarrow \infty } x^*(\epsilon ) = x^*({\bar{\epsilon }}_1,\epsilon _2)\),
- \(\lim _{\epsilon _1 \rightarrow \infty } d^*_1(\epsilon ) = d_1^*({\bar{\epsilon }}_1,\epsilon _2)\),
- \(\lim _{\epsilon _1 \rightarrow \infty } d_2^*(\epsilon ) = d_2^*({\bar{\epsilon }}_1,\epsilon _2)\).

Proof of Proposition A.1

(i) Case \(\epsilon = (0,0)^\top \). Given the budget \(\epsilon {:}{=}(0,0)^\top \), the reduced inverse robust example problem can be formulated as:

$$\begin{aligned} \max _{x \in {\mathbb {R}}, d_1,d_2 \in {\mathbb {R}}} \,&{\mathbb {P}}(u\le d_2) - {\mathbb {P}}(u\le d_1) \\ \text { s.t. } \,&-x+d_2 \le -2 , \qquad \text {(A4)}\\&2x-d_1 \le 4, \qquad \text {(A5)}\\&x(d_2 - 1) + \exp (d_2) - 1\le 0,\\&d_1 \le 0,\\&0 \le d_2,\\&0 \le x. \end{aligned}$$

Considering the budget constraints (A4) and (A5), we conclude

$$\begin{aligned} x \in \left[ 2+ d_2, 2 + \frac{d_1}{2} \right] . \end{aligned}$$

Since \(d_1 \le 0, d_2 \ge 0\) has to hold, it follows directly

$$\begin{aligned} x = 2 \; \wedge \; d_1 = 0 \; \wedge \; d_2 = 0. \end{aligned}$$

Since this is the only feasible point, it is also the optimal solution of the given problem.

(ii) Case \(\epsilon _2 \rightarrow \infty \). We have seen in Sect. 6 that for \(\epsilon _1=0\) and \(\epsilon _2\) going to infinity there is a sequence of feasible points such that the objective value converges towards \({\mathbb {P}}(u\le 1)\). This means that for the optimal objective value we have

$$\begin{aligned} \lim _{\epsilon _2 \rightarrow \infty } {\mathbb {P}}(u\in [d_1^*(\epsilon ),d_2^*(\epsilon )]) = {\mathbb {P}}(u\in (-\infty ,1]). \end{aligned}$$

This is only possible if

$$\begin{aligned}&\lim _{\epsilon _2 \rightarrow \infty } d^*_1(\epsilon ) = -\infty ,\\&\lim _{\epsilon _2 \rightarrow \infty } d^*_2(\epsilon ) = 1. \end{aligned}$$

Considering the feasibility constraint, we obtain

$$\begin{aligned} x^*(\epsilon ) \ge \frac{\exp (d_2^*(\epsilon ))-1}{1-d_2^*(\epsilon )}. \end{aligned}$$

This shows that we have \(\lim _{\epsilon _2 \rightarrow \infty } x^*(\epsilon )=\infty \).

(iii) Case \(\epsilon _1 \rightarrow \infty \). Let us fix an arbitrary \(\epsilon _2 \ge 0\). If we analyze the reduced inverse robust example problem again, we can rewrite its first budget constraint as

$$\begin{aligned} d_2 \le -2+\epsilon _1+x. \end{aligned}$$

We know that the variable \(d_2\) is bounded above by 1, and we already mentioned that a feasible x has to satisfy \(x \ge 0\). Consequently, the first budget constraint is fulfilled for all \(\epsilon _1 \ge 3\).

Because \(\epsilon _1\) only occurs in the first budget constraint of the reduced inverse robust example problem, we know that for \(\epsilon _1 \ge 3\) the solution of the problem instance depends only on the choice of \(\epsilon _2 \ge 0\), which proves the claim.
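Written as a single chain of inequalities, the argument of this case uses only the bounds \(d_2 \le 1\) and \(x \ge 0\) stated above:

$$\begin{aligned} d_2 \le 1 = -2 + 3 + 0 \le -2 + \epsilon _1 + x \quad \text {for all } \epsilon _1 \ge 3 \text { and } x \ge 0. \end{aligned}$$

Hence the first budget constraint is redundant as soon as \(\epsilon _1 \ge 3\), and increasing \(\epsilon _1\) further cannot change the feasible set.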

\(\square \)

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Berthold, H., Heller, T. & Seidel, T. A unified approach to inverse robust optimization problems. Math Meth Oper Res 99, 115–139 (2024). https://doi.org/10.1007/s00186-023-00844-x

DOI: https://doi.org/10.1007/s00186-023-00844-x