Abstract

This paper extends the matrix exponential spatial specification to panel data models. The matrix exponential spatial panel specification produces estimates and inferences comparable to those from conventional spatial panel models, but has computational advantages. We present a maximum likelihood approach to estimating this spatial model specification and compare the results with those from the fixed effects spatial autoregressive panel model.


Notes

The spatial weight matrix is assumed to be constant through time.

Note that \(\mathbf{Q}\) is symmetric.

This is discussed extensively in Baltagi (2008).

Here \(\mathbf{j}_p\) is a vector of ones and \(p\) is the dimension of \(\varvec{\beta }\).

References

Anselin L (1988) Spatial econometrics: methods and models. Kluwer Academic Publishers, Dordrecht

Baltagi BH (2008) Econometric analysis of panel data, 4th edn. Wiley, London

Elhorst P (2003) Specification and estimation of spatial panel data models. Int Reg Sci Rev 26:244–268

Haining R (2004) Spatial data analysis: theory and practice. Cambridge University Press, Cambridge

Horn R, Johnson C (1988) Matrix analysis. Cambridge University Press, Cambridge

Hsiao C (2003) Analysis of panel data, 2nd edn. Cambridge University Press, Cambridge

Kapoor M, Kelejian H, Prucha I (2007) Panel data models with spatially correlated error components. J Econ 140:97–130

Kelejian H, Prucha I (2002) A generalized moments estimator for the autoregressive parameter in a spatial model. Int Econ Rev 40(2):509–533

LeSage JP, Pace K (2007) A matrix exponential spatial specification. J Econ 140:190–214

Ord J (1975) Estimation methods for models of spatial interaction. J Am Stat Assoc 70:120–126

Silva AR, Alves PF (2007) Computational algorithm for estimation of panel data models with two fixed effects. Braz J Math Stat 25(2):19–32


Appendix

Appendix

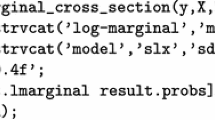

1.1 The FESAR estimation

Usually, \(\mathbf{X}\) contains a column of ones for estimating the intercept. The fixed effects \(\mathbf{c}\) are restricted so that \(\mathbf{j}_N ' \mathbf{c}=0\). Thus, the fixed effect \(c_i\) is interpreted as the difference between the mean of the \(i\)th cross-sectional unit and the overall mean.
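A toy illustration of the normalization \(\mathbf{j}_N ' \mathbf{c}=0\), assuming for simplicity a balanced panel whose only regressor is the intercept; the numbers are made up. In that case the intercept estimate is the overall mean, and each \(c_i\) is the unit mean minus the overall mean, so the effects sum to zero.

```python
import numpy as np

# Hypothetical balanced panel: N = 3 units (rows) observed over T = 4 periods (columns).
y = np.array([
    [2.0, 3.0, 4.0, 3.0],
    [5.0, 6.0, 5.0, 4.0],
    [1.0, 0.0, 2.0, 1.0],
])

overall_mean = y.mean()          # intercept when X contains only a column of ones
unit_means = y.mean(axis=1)      # time average of each unit i
c = unit_means - overall_mean    # fixed effects satisfying j_N' c = 0

# overall_mean == 3.0 and c == [0.0, 2.0, -2.0], whose sum is zero
```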

In practice, the intercept plays no role under the within transformation, because all effects that are constant through time are swept out. Therefore, model (1) can be rewritten as

The likelihood function of the FESAR model considering the within transformation is

Concentrating out \(\varvec{\beta }\) and \(\sigma ^2\), we obtain a likelihood function in \(\rho \) alone. For a given \(\rho \), let \(\tilde{\varvec{\beta }}=(\mathbf{X'QX})^{-1}\mathbf{X'QSy}\) and \(\tilde{\sigma }^2=\frac{1}{ NT }\mathbf{y'S'PSy}\), where \(\mathbf{P}\) is the orthogonal projection matrix \(\mathbf{P} = \mathbf{I}_{ NT } - \mathbf{QX}(\mathbf{X'QX})^{-1}\mathbf{X'Q}\). Substituting these into the likelihood, the concentrated likelihood for \(\rho \) is given by:
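The concentrated quantities in this paragraph can be computed directly, as in the Python sketch below. It assumes that observations are stacked period by period (all \(N\) units for \(t=1\), then \(t=2\), and so on), which is the ordering implied by \(\mathbf{I}_T\otimes \mathbf{W}\). It also assumes the standard one-way within matrix \(\mathbf{Q}=\mathbf{I}_{NT}-\frac{1}{T}(\mathbf{J}_T\otimes \mathbf{I}_N)\) with \(\mathbf{J}_T=\mathbf{j}_T\mathbf{j}_T'\). For a given \(\rho \), \(\tilde{\varvec{\beta }}\) is evaluated as \((\mathbf{X'QX})^{-1}\mathbf{X'QSy}\), and \(\tilde{\sigma }^2\) as \((\mathbf{QSy})'\mathbf{P}(\mathbf{QSy})/NT\), that is, the quadratic form with \(\mathbf{y}\) replaced by its within-transformed version. The helper names, the ring-shaped weight matrix, and the random data are illustrative only.

```python
import numpy as np


def ring_weights(n):
    """Hypothetical row-standardized ring contiguity matrix (two neighbours per unit)."""
    w = np.zeros((n, n))
    for i in range(n):
        w[i, (i - 1) % n] = w[i, (i + 1) % n] = 0.5
    return w


def within_matrix(n, t):
    """Assumed one-way within matrix Q = I_NT - (1/T)(J_T kron I_N), data stacked by period."""
    return np.eye(n * t) - np.kron(np.ones((t, t)) / t, np.eye(n))


def concentrated_estimates(rho, y, x, w, t):
    """beta_tilde(rho) and sigma2_tilde(rho) for the within-transformed FESAR model."""
    n = w.shape[0]
    q = within_matrix(n, t)
    s = np.eye(n * t) - rho * np.kron(np.eye(t), w)              # S = I_NT - rho (I_T kron W)
    qx, qsy = q @ x, q @ (s @ y)
    beta = np.linalg.solve(qx.T @ qx, qx.T @ qsy)                 # (X'QX)^{-1} X'QSy, using Q'Q = Q
    p = np.eye(n * t) - qx @ np.linalg.solve(qx.T @ qx, qx.T)     # orthogonal projection P
    sigma2 = qsy @ p @ qsy / (n * t)                              # (QSy)' P (QSy) / NT
    return beta, sigma2, q


rng = np.random.default_rng(0)
n, t = 6, 5
w = ring_weights(n)
x = rng.normal(size=(n * t, 2))   # regressors must vary over time within units
y = rng.normal(size=n * t)
beta, sigma2, q = concentrated_estimates(0.3, y, x, w, t)
```

Because \(\mathbf{Q}\) is symmetric and idempotent, \(\mathbf{X'QX}=(\mathbf{QX})'(\mathbf{QX})\), so every product can be formed from the transformed data alone.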

Note that \(\mathbf{I}_{ NT }-\rho (\mathbf{I}_T\otimes \mathbf{W})=\mathbf{I}_T \otimes (\mathbf{I}_N-\rho \mathbf{W})\), and therefore, \(|\mathbf{S}|=| \mathbf{I}_{T}\otimes (\mathbf{I}_N-\rho \mathbf{W}) | = | \mathbf{I}_N - \rho \mathbf{W}|^T \). So, we can write (14) as
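A quick numerical check of the identity \(|\mathbf{S}|=| \mathbf{I}_N - \rho \mathbf{W}|^T\), using a hypothetical ring-shaped, row-standardized \(\mathbf{W}\) and an arbitrary \(\rho \). The practical point is that only an \(N\times N\) determinant is needed instead of an \(NT\times NT\) one.

```python
import numpy as np

# Hypothetical setting: N = 5 units on a ring, T = 4 periods, rho = 0.4.
n, t, rho = 5, 4, 0.4
w = np.zeros((n, n))
for i in range(n):
    w[i, (i - 1) % n] = w[i, (i + 1) % n] = 0.5       # row-standardized ring contiguity

a = np.eye(n) - rho * w                               # I_N - rho W
s = np.eye(n * t) - rho * np.kron(np.eye(t), w)       # S = I_NT - rho (I_T kron W)

sign_s, logdet_s = np.linalg.slogdet(s)               # log|S| from the NT x NT matrix
sign_a, logdet_a = np.linalg.slogdet(a)               # log|I_N - rho W| from an N x N matrix
print(logdet_s, t * logdet_a)                         # the two values agree
```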

Using eigenvalues, (15) can be written as

where \(\omega _i\) is the \(i\)th eigenvalue of the matrix \(\mathbf{W}\).
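The eigenvalue form rests on \(\log | \mathbf{I}_N - \rho \mathbf{W}| = \sum _{i=1}^{N}\log (1-\rho \omega _i)\): the eigenvalues are computed once, after which each likelihood evaluation costs only \(O(N)\). The Python sketch below checks this against a direct log-determinant for a hypothetical row-standardized \(\mathbf{W}\) (units on a line, so \(\mathbf{W}\) is not symmetric). Complex logarithms are used so that the same code also covers a \(\mathbf{W}\) with complex conjugate eigenvalue pairs, whose contributions sum to a real number.

```python
import numpy as np

# Hypothetical W: row-standardized contiguity of N = 8 units on a line (not symmetric).
n = 8
adj = np.zeros((n, n))
for i in range(n - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0
w = adj / adj.sum(axis=1, keepdims=True)

omega = np.linalg.eigvals(w).astype(complex)   # eigenvalues omega_i, computed once


def log_det(rho):
    """log|I_N - rho W| = sum_i log(1 - rho * omega_i); conjugate pairs give a real total."""
    return np.sum(np.log(1.0 - rho * omega)).real


for rho in (-0.5, 0.2, 0.9):
    print(rho, log_det(rho), np.linalg.slogdet(np.eye(n) - rho * w)[1])
```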

Note that

because

Consequently, the likelihood for \(\rho \) is

Hence, \(\rho \) can be estimated by the iterative Newton-Raphson scheme

where
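One possible implementation of this Newton-Raphson iteration is sketched below in Python. It relies on several assumptions: observations stacked period by period; the one-way within matrix \(\mathbf{Q}=\mathbf{I}_{NT}-\frac{1}{T}(\mathbf{J}_T\otimes \mathbf{I}_N)\); and the concentrated log-likelihood, with constants dropped, \(\ell (\rho )=-\frac{NT}{2}\log \tilde{\sigma }^2(\rho )+T\sum _i\log (1-\rho \omega _i)\). Because the within residual of \(\mathbf{QSy}\) is linear in \(\rho \), \(NT\,\tilde{\sigma }^2(\rho )=a-2b\rho +c\rho ^2\), so both derivatives are available in closed form. These derivative formulas are derived for this sketch, not copied from the paper. Newton-Raphson can move toward a minimum when started where the profile is convex. For that reason the sketch starts from the least-squares value \(b/c\), the minimizer of \(\tilde{\sigma }^2(\rho )\), where the profile is locally concave. The simulated data, ring weight matrix, and function names are hypothetical.

```python
import numpy as np


def ring_weights(n):
    """Hypothetical row-standardized ring contiguity matrix (two neighbours per unit)."""
    w = np.zeros((n, n))
    for i in range(n):
        w[i, (i - 1) % n] = w[i, (i + 1) % n] = 0.5
    return w


def fesar_pieces(y, x, w, t):
    """Quantities of the concentrated likelihood that do not depend on rho."""
    n = w.shape[0]
    q = np.eye(n * t) - np.kron(np.ones((t, t)) / t, np.eye(n))   # one-way within matrix
    qx, qy = q @ x, q @ y
    qwy = q @ (np.kron(np.eye(t), w) @ y)                          # within-transformed spatial lag
    e0 = qy - qx @ np.linalg.lstsq(qx, qy, rcond=None)[0]          # residual of Qy on QX
    el = qwy - qx @ np.linalg.lstsq(qx, qwy, rcond=None)[0]        # residual of QWy on QX
    omega = np.linalg.eigvals(w).astype(complex)                   # eigenvalues of W, computed once
    return e0 @ e0, e0 @ el, el @ el, omega, n * t


def profile_loglik(rho, a, b, c, omega, nt, t):
    """-(NT/2) log sigma2(rho) + T * sum_i log(1 - rho * omega_i), constants dropped."""
    sigma2 = (a - 2.0 * b * rho + c * rho**2) / nt
    return -0.5 * nt * np.log(sigma2) + t * np.sum(np.log(1.0 - rho * omega)).real


def newton_rho(a, b, c, omega, nt, t, tol=1e-10, max_iter=50):
    """Newton-Raphson iterations rho <- rho - l'(rho) / l''(rho), started at b / c."""
    rho = float(np.clip(b / c, -0.9, 0.9))   # minimizer of sigma2(rho); profile concave there
    for _ in range(max_iter):
        ssr = a - 2.0 * b * rho + c * rho**2
        grad = -nt * (c * rho - b) / ssr - t * np.sum(omega / (1.0 - rho * omega)).real
        hess = (-nt * (c * ssr - 2.0 * (c * rho - b) ** 2) / ssr**2
                - t * np.sum(omega**2 / (1.0 - rho * omega) ** 2).real)
        step = grad / hess
        rho -= step
        if abs(step) < tol:
            return rho
    raise RuntimeError("Newton-Raphson did not converge")


# Simulated one-way FESAR panel (hypothetical values), stacked period by period.
rng = np.random.default_rng(42)
n, t, rho_true = 30, 10, 0.5
w = ring_weights(n)
x = rng.normal(size=(n * t, 2))
unit_effects = rng.normal(size=n)
rhs = x @ np.array([1.0, -2.0]) + np.tile(unit_effects, t) + rng.normal(scale=0.5, size=n * t)
y = np.linalg.solve(np.eye(n * t) - rho_true * np.kron(np.eye(t), w), rhs)

a, b, c, omega, nt = fesar_pieces(y, x, w, t)
rho_hat = newton_rho(a, b, c, omega, nt, t)
```

Since \(a\), \(b\), \(c\), and the eigenvalues are computed once, each iteration costs \(O(N)\) regardless of \(T\).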

If the model is to be estimated with two fixed effects (individual and time), the matrix \(\mathbf{Q}\) is replaced by

and the estimation procedure is otherwise the same as in the one-way fixed effects case.
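A sketch of the two-way case follows. It assumes that the replacement matrix takes the usual balanced-panel form \(\mathbf{Q}=\mathbf{I}_{NT}-\frac{1}{T}(\mathbf{J}_T\otimes \mathbf{I}_N)-\frac{1}{N}(\mathbf{I}_T\otimes \mathbf{J}_N)+\frac{1}{NT}\mathbf{J}_{NT}\), with observations stacked period by period. The Python code checks that this matrix subtracts unit means and period means and adds back the overall mean. It also checks that the matrix removes both unit-specific and period-specific constants. The data are arbitrary.

```python
import numpy as np


def two_way_within(n, t):
    """Assumed two-way within matrix for a balanced panel stacked period by period."""
    return (np.eye(n * t)
            - np.kron(np.ones((t, t)) / t, np.eye(n))    # removes unit means
            - np.kron(np.eye(t), np.ones((n, n)) / n)    # removes period means
            + np.ones((n * t, n * t)) / (n * t))         # adds back the overall mean


n, t = 4, 3
q2 = two_way_within(n, t)
y = np.arange(n * t, dtype=float) ** 2      # arbitrary data, stacked period by period
panel = y.reshape(t, n)                     # rows = periods, columns = units
manual = panel - panel.mean(axis=0) - panel.mean(axis=1, keepdims=True) + panel.mean()
```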

1.2 Properties of the MESPS maximum likelihood estimators

Let the likelihood of the model \(\mathbf{Sy}=\mathbf{X}\varvec{\beta }+\varvec{\epsilon }\) be

since \(|\mathbf{S}|=1\), with \(\varvec{\theta }=(\varvec{\beta }, \gamma ,\alpha )\).
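The property \(|\mathbf{S}|=1\) follows from the identity \(|e^{\alpha \mathbf{W}}|=e^{\alpha \,\mathrm{tr}(\mathbf{W})}\) together with \(\mathrm{tr}(\mathbf{W})=0\), since a spatial weight matrix has a zero diagonal. The Python sketch below checks this numerically. It assumes the panel form \(\mathbf{S}=\mathbf{I}_T\otimes e^{\alpha \mathbf{W}}\) and computes the exponential with a truncated Taylor series (a choice made for this example). The weight matrix and \(\alpha \) are hypothetical.

```python
import numpy as np


def expm_series(a, terms=25):
    """Truncated Taylor series of the matrix exponential: sum_{k < terms} a^k / k!."""
    result = np.eye(a.shape[0])
    term = np.eye(a.shape[0])
    for k in range(1, terms):
        term = term @ a / k          # a^k / k!, built recursively
        result = result + term
    return result


# Hypothetical W: row-standardized line contiguity, so diag(W) = 0 and tr(W) = 0.
n, t, alpha = 6, 3, -0.7
adj = np.zeros((n, n))
for i in range(n - 1):
    adj[i, i + 1] = adj[i + 1, i] = 1.0
w = adj / adj.sum(axis=1, keepdims=True)

s_n = expm_series(alpha * w)          # exp(alpha W) for one period
s = np.kron(np.eye(t), s_n)           # assumed panel form S = I_T kron exp(alpha W)
sign, logdet = np.linalg.slogdet(s)   # sign = 1 and log|S| = alpha * T * tr(W) = 0
```

The inverse is also cheap: \(e^{-\alpha \mathbf{W}}\) undoes \(e^{\alpha \mathbf{W}}\), so no linear system has to be solved to simulate from the model.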

Let \(H(\varvec{\theta })=- l (\mathbf{y},\varvec{\theta })\) and \(G(\varvec{\theta })=\frac{\partial }{\partial \varvec{\theta }} H(\varvec{\theta })\). A first-order Taylor expansion of \(G\) around the true parameter value \(\varvec{\theta }_0\) gives

Evaluating at \(\varvec{\theta }=\hat{\varvec{\theta }}_{ML}\) and neglecting the remainder term \( o \), we obtain \(G(\hat{\varvec{\theta }}_{ML})=G(\varvec{\theta }_0 )+\frac{\partial }{\partial \varvec{\theta }}G(\varvec{\theta }_0)(\hat{\varvec{\theta }}_{ML} -\varvec{\theta }_0) \).

Note that \(G(\hat{\varvec{\theta }}_{ML})=0\), since this is the first-order optimality condition.

Rewriting \(G(\hat{\varvec{\theta }}_{ML})=G(\varvec{\theta }_0 )+\frac{\partial }{\partial \varvec{\theta }}G(\varvec{\theta }_0)(\hat{\varvec{\theta }}_{ML} -\varvec{\theta }_0) \), we have

Here \( I (\varvec{\theta })=\left( - \frac{\partial ^2}{\partial \varvec{\theta } \partial \varvec{\theta }'} l (\mathbf{y},\varvec{\theta })\right) ^{-1}\) is the inverse of the observed information matrix, which in large samples approximates the inverse of the Fisher information matrix. Rearranging,

Notice that \( l (\mathbf{y},\varvec{\theta })=\sum _{i=1}^{ NT } \log \; f(y_i)\) and \(E \left[ \frac{\partial }{\partial \varvec{\theta }}\log \;f(y_i) \right] =0\) because

and

The central limit theorem then applies in the sense that
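To make the asymptotic argument concrete, the Python sketch below fits a pooled MESS panel model \(\mathbf{Sy}=\mathbf{X}\varvec{\beta }+\varvec{\epsilon }\) by maximum likelihood. It then estimates the covariance of \(\hat{\varvec{\theta }}_{ML}\) by inverting the negative numerical Hessian, which is the quantity playing the role of \( I (\varvec{\theta })\) above. The sketch makes the following assumptions for illustration:

- \(\mathbf{S}=\mathbf{I}_T\otimes e^{\alpha \mathbf{W}}\).
- The parameter vector is ordered as \((\varvec{\beta },\sigma ^2,\alpha )\), with the variance parameterized directly as \(\sigma ^2\) (the paper's \(\gamma \) may be parameterized differently).
- There are no fixed effects.
- The matrix exponential is computed by a truncated series.
- \(\alpha \) is found by grid search.
- The data are simulated.

Because \(|\mathbf{S}|=1\), maximizing the likelihood in \(\alpha \) reduces to minimizing the residual sum of squares, with no log-determinant to evaluate. This is one source of the computational savings of the specification.

```python
import numpy as np


def expm_series(a, terms=25):
    """Truncated Taylor series of the matrix exponential."""
    result, term = np.eye(a.shape[0]), np.eye(a.shape[0])
    for k in range(1, terms):
        term = term @ a / k
        result = result + term
    return result


def ring_weights(n):
    """Hypothetical row-standardized ring contiguity matrix."""
    w = np.zeros((n, n))
    for i in range(n):
        w[i, (i - 1) % n] = w[i, (i + 1) % n] = 0.5
    return w


def apply_s(alpha, y, w, t):
    """S y with S = I_T kron exp(alpha W), data stacked period by period."""
    n = w.shape[0]
    return (y.reshape(t, n) @ expm_series(alpha * w).T).ravel()


def loglik(theta, y, x, w, t):
    """Gaussian log-likelihood of S y = X beta + eps; log|S| = 0, so no Jacobian term."""
    p = x.shape[1]
    beta, sigma2, alpha = theta[:p], theta[p], theta[p + 1]
    e = apply_s(alpha, y, w, t) - x @ beta
    return -0.5 * e.size * np.log(2.0 * np.pi * sigma2) - 0.5 * (e @ e) / sigma2


def fit(y, x, w, t, grid):
    """Concentrate out beta and sigma2, then choose alpha minimizing the SSR on a grid."""
    best = None
    for alpha in grid:
        sy = apply_s(alpha, y, w, t)
        beta = np.linalg.lstsq(x, sy, rcond=None)[0]
        ssr = float(np.sum((sy - x @ beta) ** 2))
        if best is None or ssr < best[0]:
            best = (ssr, alpha, beta)
    ssr, alpha, beta = best
    return np.concatenate([beta, [ssr / y.size, alpha]])


def numerical_hessian(f, theta, h=1e-4):
    """Central-difference Hessian of a scalar function f at theta."""
    k = theta.size
    hess = np.zeros((k, k))
    for i in range(k):
        for j in range(k):
            ei, ej = np.zeros(k), np.zeros(k)
            ei[i], ej[j] = h, h
            hess[i, j] = (f(theta + ei + ej) - f(theta + ei - ej)
                          - f(theta - ei + ej) + f(theta - ei - ej)) / (4.0 * h * h)
    return hess


rng = np.random.default_rng(1)
n, t, alpha_true = 30, 8, -0.8
w = ring_weights(n)
x = np.column_stack([np.ones(n * t), rng.normal(size=n * t)])
rhs = x @ np.array([1.0, 2.0]) + rng.normal(scale=0.5, size=n * t)
y = apply_s(-alpha_true, rhs, w, t)    # y = S^{-1}(X beta + eps), as exp(-aW) = exp(aW)^{-1}

theta_hat = fit(y, x, w, t, np.linspace(-2.0, 2.0, 2001))
hess = numerical_hessian(lambda th: loglik(th, y, x, w, t), theta_hat)
cov = np.linalg.inv(-hess)    # inverse observed information: estimated asymptotic covariance
se = np.sqrt(np.diag(cov))    # standard errors for (beta, sigma2, alpha)
```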


Cite this article

Figueiredo, C., da Silva, A.R. A matrix exponential spatial specification approach to panel data models. Empir Econ 49, 115–129 (2015). https://doi.org/10.1007/s00181-014-0862-2
