Abstract

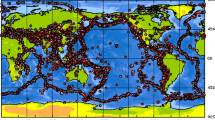

We present a simple method for long- and short-term earthquake forecasting (estimating earthquake rate per unit area, time, and magnitude). For illustration we apply the method to the Pacific plate boundary region and the Mediterranean area surrounding Italy and Greece. Our ultimate goal is to develop forecasting and testing methods to validate or falsify common assumptions regarding earthquake potential. Our immediate purpose is to extend the forecasts we made starting in 1999 for the northwest and southwest Pacific to include somewhat smaller earthquakes and then adapt the methods to apply in other areas. The previous forecasts used the CMT earthquake catalog to forecast magnitude 5.8 and larger earthquakes. Like our previous forecasts, the new ones here are based on smoothed maps of past seismicity and assume spatial clustering. Our short-term forecasts also assume temporal clustering. An important adaptation in the new forecasts is to abandon the use of tensor focal mechanisms. This permits use of earthquake catalogs that reliably report many smaller quakes with no such mechanism estimates. The result is that we can forecast earthquakes at higher spatial resolution and down to a magnitude threshold of 4.7. The new forecasts can be tested far more quickly because smaller events are considerably more frequent. Also, our previous method used the focal mechanisms of past earthquakes to estimate the preferred directions of earthquake clustering, however the method made assumptions that generally hold in subduction zones only. The new approach escapes those assumptions. In the northwest Pacific the new method gives estimated earthquake rate density very similar to that of the previous forecast.

Similar content being viewed by others

1 Introduction

The importance of earthquake forecasting for seismic hazard and risk estimation and the difficulty of resolving basic differences in forecast models have motivated an international effort. How can we establish standards for reporting and testing earthquake forecasts? One significant effort began in California, wherein the Regional Earthquake Likelihood Models (RELM) project published a dozen models for earthquake rate density and a likelihood-based method for prospective testing (Field, 2007; Schorlemmer and Gerstenberger, 2007). The various models included ones with strongly varying emphasis based on past earthquakes, fault geometry and slip rates, and geodetic strain rates. Some assume that large earthquakes reduce the probability of similar ones soon thereafter, while others assume the opposite. While testing awaits further time and more events, the Collaboratory for Study of Earthquake Predictability (CSEP) is extending the tests to several natural laboratories around the globe. CSEP will also test short-term forecasts updated daily.

Our technique is to establish a statistical model which fits the catalog of earthquake times, locations, and seismic moments, and subsequently to base forecasts on this model. While most components of the model have been tested (Kagan and Knopoff, 1987; Kagan, 1991; Jackson and Kagan, 1999; Kagan and Jackson, 2000), some require further exploration and can be modified as our research progresses. Our purpose in this work is to extend a clustering model that we used to make testable forecasts over large regions of the western Pacific (ibid.) to short-term forecasts and additional test laboratories.

Our previous forecast model was based on constructing a map of smoothed rates of past earthquakes. We used the CMT catalog (Ekström et al., 2005) because it employs relatively consistent methods and reports tensor focal mechanisms. The focal mechanisms allow us to estimate the fault plane orientation for past earthquakes, through which we can identify a preferred direction for future events. Using the forecasted tensor focal mechanism, it is possible in principle to calculate an ensemble of seismograms for each point of interest on the earth’s surface.

However, the CMT catalog imposes some restrictions: It only began in 1977, and from that date is complete only for earthquake magnitudes of about 5.8 and larger. Here we experiment with a forecast based on the US Geological Survey PDE catalog (US Geological Survey, 2008) instead. While that catalog does not report focal mechanisms routinely, it uses relatively stable methods and reliably reports earthquakes about one magnitude unit smaller than does the CMT. Preliminary tests indicate that the PDE catalog gives similar results to the CMT and can thus enable credible forecasts for tests in the CSEP effort.

Both the CMT and PDE are global catalogs employing data from worldwide seismic networks. We advocate use of global catalogs even in CSEP test laboratories where regional catalogs with lower magnitude thresholds are available. Why? Global catalogs are more homogeneous than local catalogs, and lack spatial boundary effects which greatly complicate analysis of local catalogs. Moreover, local seismicity may be dominated by a few aftershock sequences of strong events, such as the m 7.5 1952 Kern County and the m 7.3 1992 Landers earthquakes in southern California. Explosions and earthquakes caused by volcanic and geothermal activity are more likely to contaminate earthquake records in local and regional catalogs.

The PDE catalog has significant advantages over that of the CMT. The PDE has a longer observation period (the surface wave magnitude M S was determined starting from the middle of 1968), and a lower magnitude threshold (m t ). Depending on time period and region, the threshold is of the order 4.5–4.7 (Kagan, 2003), i.e., much lower than the CMT catalog threshold (around 5.4–5.8). This means that the forecast estimates can be practically obtained for all global seismic areas. The PDE reports earthquake hypocenters, which can be estimated far more precisely than the moment centroid locations reported by the CMT catalog.

On the other hand, the PDE catalog has a few drawbacks when compared to the CMT data set. For one, the PDE catalog generally lacks the focal mechanism solutions. Also, the PDE reports a somewhat inconsistent mix of different magnitudes (local, body wave, surface wave, moment, etc.) with less accuracy than the moment magnitude inferred from the CMT catalog. Moreover, the PDE magnitudes are influenced by strong systematic effects and biases (Kagan, 2003). Another drawback is that the hypocenter, which the PDE catalog uses for representing location, could be at the edge of the rupture zone for a large earthquake. The moment centroid, reported by the CMT, more meaningfully describes the location even though the centroid is generally more uncertain than the hypocenter.

Our forecasting method involves several steps which we have performed here but which still deserve more research. These steps include the following.

-

Select an earthquake catalog, test it for homogeneity in time and space, and investigate its accuracy and magnitude threshold (Kagan, 2003).

-

Determine the distribution of hypocentral distances between all pairs of earthquakes used to normalize the spatial earthquake distribution in the branching model of earthquake occurrence (Kagan, 1991, Eq. (27); 2007).

-

Using likelihood analysis, estimate the parameters of the clustering model and their uncertainties for use in the short-term forecast model (Kagan et al., 2010).

-

Optimize the smoothing kernel parameters of the long-term forecast using the first part of the catalog as a learning set and the second part as a control set (Kagan and Jackson, 1994).

-

Use determined parameters of the branching model for a short-term forecast of earthquake occurrence.

-

Write a computer program to combine the results of the steps above into an earthquake forecast algorithm, such that the program can be run by an independent party with a clearly identified source of future earthquake data (Jackson and Kagan, 1999; Kagan and Jackson, 2000).

-

Finally, test the forecast using independent data to ensure that the program works as intended (Kagan and Jackson, 2000; Kagan, 2009).

The program we propose in this paper should be considered as a scientific demonstration project; implementing the technique for the real-life earthquake forecast would require additional work.

2 Methods and Data

For our long-term model we factor the rate density into spatial and magnitude distributions, assuming they are mutually independent. We estimate the spatial distribution using all earthquakes above the assumed completeness threshold in the catalog. For the magnitude distribution, we assume a tapered Gutenberg–Richter distribution (Bird and Kagan, 2004; Kagan et al., 2010). At any place, the spatial rate serves as a multiplicative constant, proportional to the “a-value” of the magnitude distribution. Thus, any forecast based on a catalog with a given lower magnitude threshold applies as well to larger earthquakes. We test the models over all magnitudes above the threshold, and because large earthquakes are expected to be less frequent, they count more than smaller ones for the likelihood test. In principle this method would allow us to forecast earthquakes smaller than the threshold magnitude. But we choose not to do so because the smoothing kernels that determine our spatial distribution are influenced indirectly by earthquake magnitudes. Thus, catalogs with smaller earthquakes provide higher spatial resolution.

In our previous papers (Kagan and Jackson, 1994, 2000; Jackson and Kagan, 1999) we studied earthquake distributions and clustering for the global CMT catalog of moment tensor inversions compiled by Ekström et al. (2005). The CMT catalog now contains more than 28,000 earthquake entries for the period 1977/1/1 to 2007/12/31. This catalog characterizes earthquake size by the scalar seismic moment M. Here we use the scalar seismic moment directly, but for easy comparison we convert it into an approximate moment magnitude using the relationship

(Hanks and Kanamori, 1979; Hanks, 1992), where M is measured in Newton m (Nm). Note that various authors use slightly different values for the final constant (here 9.0) so the magnitude values we use in tables and diagrams may not be fully consistent with values used by others.

The PDE (preliminary determinations of epicenters) worldwide catalog is published by the USGS (US Geological Survey, 2008). In spite of its name, the catalog is distributed in final form with a few months latency. When we wrote this paper, the catalog was in final form through the end of 2007. However, truly preliminary information on earthquakes is usually available with a delay of just a few minutes.

The PDE catalog reports earthquake size using several magnitude scales, providing the body wave (m b ) and surface wave (M S ) magnitudes for most moderate and large events since 1965 and 1968, respectively. The moment magnitude (m w ) estimate has been added recently. To construct our smoothed seismicity maps, we need a single standard magnitude estimate for each earthquake. Ideally, we would like to convert all other magnitude estimates into moment magnitude and use that as a standard. Unfortunately, we have not found a reliable way to do that (see Kagan, 2003). Kagan (1991) used a weighted average of several different magnitude scales but found the results not fully satisfying, especially now since several additional magnitudes are reported.

In this work we decided to use as standard the maximum magnitude among those shown for each earthquake. For smaller moderate earthquakes it is usually m b , since other magnitude estimates are unavailable. For larger events M S magnitude would be selected; for even larger recent earthquakes the maximum magnitude most likely is m w .

3 Forecast Models

3.1 Long-term Rate Density Estimates

We have developed a long-term forecast procedure based on earthquake data alone (Kagan and Jackson, 1994, 2000). Our procedure is based on the smoothing of past earthquake locations. The degree of spatial smoothing is controlled by the kernel function

where r is epicentroid distance, r s is the scale parameter. The scaling distance r s may depend on many factors including tectonic environment, Earth structure, earthquake location accuracy, and so on. Thus, one should determine it in principle for each forecast region.

Recently similar techniques based on smoothing of earthquake locations have been applied to long-term forecasts in California and other regions (Rhoades and Evison, 2005, 2006; Rhoades, 2007; Helmstetter et al., 2007; Schorlemmer and Gerstenberger, 2007; Console et al., 2010; Kagan and Jackson, 2010).

For the CMT catalog, we use the same values for a long-term smoothing kernel as Kagan and Jackson (2000, see their Eq. 3): the spatial scale parameter, r s is 15 km for the Northwest Pacific (NW), and the azimuthal concentration factor (δ in Eq. 6, ibid.) is 100.

In Figure 1 we display the NW Pacific long-term rate density for magnitudes of 5.8 and above based on the CMT catalog. A similar forecast based on the PDE catalog with the same time period and magnitude threshold as the CMT catalog is shown in Figure 2. For the PDE catalog we apply an isotropic kernel function with r s = 15 km (2). Both forecasts are similar in appearance. Given that the magnitude threshold is lower for the PDE catalog (m t = 5.0), the forecast in Figure 3 shows more details since many more earthquakes were used in computation. More detailed maps are, in principle, preferable; however, to evaluate the forecast performance quantitatively we need to perform a statistical analysis of the forecasts and earthquakes that occurred after the forecasts have been issued.

Our procedure allows us to optimize the parameters by choosing those r s values which best predict the second part of a catalog from the first part. Kagan and Jackson (1994) subdivided the catalog into two equal parts, whereas Kagan and Jackson (2000) used the CMT catalog for 1977–1996 as the ‘training’ part and as the test catalog the data for 1997–1998. In this paper earthquakes of 2004–2006 are used as the control set.

Based on the optimized parameters, we simulate thousands of earthquake catalogs to compare with the observed test catalog, and evaluate the likelihood function for both types of catalogs (Kagan and Jackson, 1994). In the simulations, earthquakes are assumed to occur at the centers of grid cells with the rates defined by the forecast. We normalize the cell rates and simulate a random number uniformly distributed in the interval [0, 1]. The random number corresponding to a particular segment of the cumulative normalized rate curve defines the cell where an event occurs. We obtain one synthetic catalog by repeating this procedure n times. The simulated catalogs, by construction, are consistent with the forecast model. If the observed catalog is also consistent, its likelihood score should be in the middle range of the simulated values.

Several statistics can be used to characterize a forecast and its fit to a future earthquake occurrence (Kagan, 2009). For an inhomogeneous Poisson process in which n points are observed (x 1,…,x n ) in a region A, the log-likelihood can be written as (Daley and Vere-Jones, 2003, Eq. 7.1.2)

where λ(x i ) is the process rate (density) at a point x i .

The log-likelihood of an inhomogeneous Poisson process is normally compared to a similar log-likelihood, L 0, calculated for a Poisson process with constant intensity (ξ) to obtain the log-likelihood ratio (Daley and Vere-Jones, 2003, Ch. 7; Schorlemmer et al., 2007)

In our calculations we normalize both rates (λ, ξ) by the observed event number n, hence the integral term in (4) is zero.

Kagan and Knopoff (1977) suggested measuring the performance of the earthquake prediction algorithm by first evaluating the likelihood ratio to test how well a model approximates an earthquake occurrence. In particular, they estimated the information score, \({\hat I}\), per one event by

where \(\ell - \ell_0\) is the log-likelihood ratio, n is the number of earthquakes in a catalog, log2 is used to obtain the score measured in the Shannon bits of information, and λ i is the rate of earthquake occurrence according to a stochastic model.

In this work we compute the information score

where λ k is the forecasted rate for the actual epicenter (epicentroid) locations. In the above formulas a spatially uniform Poisson process is assumed as the null hypothesis. This hypothesis, of course, cannot be considered ‘sensible’ (Stark, 1997), the actual distribution of seismicity is highly inhomogeneous. Thus, we use the actual forecast model as another null hypothesis and compare it with the record of predicted earthquakes.

To evaluate the distribution of events in a forecast model we simulate them by a Monte-Carlo procedure. In simulated catalogs we generate multiple \(({\mathcal N} = 10,000)\) sets of n events and calculate the rate for cell centers as the earthquake location

and

The I 3 values characterize the information gain which is obtained from smoothed seismicity maps, as compared to randomly selecting a point from a region on a spherical surface.

The standard deviation of the log-likelihood for the set of independent n events is (Kagan, 2009)

where ν2 is the second moment of the likelihood score and n is the earthquake number.

In Table 1 we display an example of r s determination for the NW Pacific region. To compare the CMT and PDE catalogs, we selected the time period 1977–2003 for calculating a forecast table and next the earthquakes of 2004–2006 to test and optimize our spatial kernel (2).

The best r s value for forecast purposes corresponds to I 3 − I 2 = 0, i.e., when the score for earthquakes which occurred in 2004–2006 equals the average score obtained by simulating events with the smoothed forecast spatial distribution. (Kagan and Jackson, 1994, Fig. 4 discuss in more detail interpretation of I 3 − I 2 differences.) Therefore, the optimal estimates of r s for both catalogs are between 10 and 15 km.

Italy and its surrounding seismicity forecast: Latitude limits from 36.0°N to 48.0°N, longitude limits from 7.0°E to 19.0°E. The forecast is calculated at 121 × 121 grid. Colors show the rate density of shallow (depth less or equal to 70 km) earthquake occurrence calculated using the PDE 1969–2003 catalog; nine m ≥ 4.7 earthquakes for 2004–2006 are shown in white

We could test both forecasts to infer which is preferable on statistical grounds (Schorlemmer et al., 2007). In such a test, simulated earthquake sets from one forecast may be tested for agreement with another model and vice versa. These more extensive tests are the subject of our further work and are not discussed here.

To illustrate how the long-term method can be applied to arbitrary areas of the Earth, in Figure 4 we show the forecast for Italy and surrounding areas and in Figure 6 that for Greece and surrounding areas. In Figure 5 we display the distribution of likelihood scores for observed and simulated catalogs for the forecast model as shown in Figure 4. We simulate earthquake locations with r s = 5.0 km, each time calculating the likelihood function and comparing the function value with that obtained for the real catalog in 2004–2006. We normalize the distributions by dividing the values by the standard deviation [see Eq. (9) and Table 1] so the plotted cumulative curve has a unit standard deviation. From the plot it is clear that r s = 5.0 km is close to optimal, since the curve for observed earthquakes intersects the solid vertical line near the middle.

Dashed line is a cumulative curve of the log-likelihood function differences for 2004–2006 simulated earthquakes (see Fig. 4). The function is normalized to have a unit standard deviation. The red solid line is the Gaussian curve with a zero mean and unit standard deviation. Curves on the right from the Gaussian curve correspond to simulations that are worse than the observed earthquake distribution; curves on the left correspond to simulations that are better than the observed earthquake distribution

Greece and its surrounding seismicity forecast: Latitude limits from 34.0°N to 42.0°N, longitude limits from 19.0°E to 29.0°E. The forecast is calculated at 121 × 121 grid. Colors show the rate density of shallow (depth less or equal to 70 km) earthquake occurrence calculated using the PDE 1969–2003 catalog; 15 m ≥ 5 earthquakes for 2004–2006 are shown in white

3.2 Short-term Rate Density Estimates

The assumptions we make in constructing our initial branching model of earthquake occurrence have been summarized in Kagan and Knopoff (1987), Kagan (1991), and Kagan and Jackson (2000). A similar model called the ETAS (Epidemic Type Aftershock Sequence) was proposed by Ogata (1988, 1998), as well as by Ogata and Zhuang (2006).

In both our model and ETAS, seismicity is approximated by a Poisson cluster process, in which clusters or sequences of earthquakes are statistically independent, although individual earthquakes in the cluster are triggered. The clusters are assumed to form a spatially inhomogeneous Poisson point process with a constant temporal rate. The major assumption regarding the interrelationships between events within a cluster is that the propagation of an earthquake rupture is closely approximated by a stochastic space–time critical branching process. Under this assumption there is a sole trigger for any given dependent event. As shown below, the space–time distribution of interrelated earthquake sources within a sequence is controlled by simple relations justified by analyzing available statistical data on seismicity.

Recently Console and Murru (2001), Console et al. (2006a, b, 2007) and many other researchers have applied the ETAS branching models to Japan, Greece, California, and Italy. Lombardi and Marzocchi (2007) and Marzocchi and Lombardi (2008) have applied the ETAS model to two global catalogs of large earthquakes. Kagan et al. (2010, Section 6.2) discuss certain drawbacks of the ETAS model in its parametrization of seismicity parameters. These defects may influence the implementation and quality of the forecast programs based on the ETAS model.

The short-term forecast in this work was carried out according to the technique described by Kagan and Jackson (2000, Section 3.1.2). Examples of the short-term forecast are shown in several of our papers (ibid., Kagan and Knopoff, 1987; Molchan and Kagan, 1992; Jackson and Kagan, 1999; Helmstetter et al., 2006; Kagan et al., 2003, 2007).

We used the values of parameters obtained during the likelihood function search (Kagan et al., 2010, Table 4) obtained for the full PDE catalog, m ≥ 5.0: the branching coefficient μ = 0.141, the parent productivity exponent a = 0.63, the time decay exponent θ = 0.28, and the horizontal location error ερ = 9.5 km. From other tables by Kagan et al. (2010) we see that the values of the earthquake clustering parameters are similar for subduction zones (trenches), active continental zones and plate interiors. These regions are of interest for evaluating seismic hazard.

Figure 7 shows a short-term forecast for the Northwest Pacific region. The map is more detailed than similar ones obtained with the help of the CMT catalog such as in Fig. 5 by Jackson and Kagan (1999) or Fig. 5.4 by Kagan et al. (2003). More general comparison of both forecasts based on the PDE and the CMT catalogs is complicated by the issue of close-in-time aftershocks (Kagan et al., 2010, Section 6.1). As with our long-term forecast, these tests will be subjects of our future work and are not discussed here.

3.3 Forecasts Comparison

In Table 2 we display numerically the forecasts based on the CMT and PDE catalogs for an East-West profile north of the Phillipines. Kagan and Jackson (2000) showed the same area in their Table 1. Columns 3 and 6 show the rate of events per unit area at or above the magnitude thresholds of two catalogs, m t = 5.8 for the CMT and m t = 5.0 for the PDE catalog. The earthquake rate sum for the PDE catalog is \(670 \times 10^{-9}\,\hbox{Eq/day}\times\,\hbox{km}^2\), while for the CMT catalog it is equal to \(94 \times 10^{-9}\ \hbox{Eq/day}\times\,\hbox{km}^2\). The ratio of the two rates is 7.1, close to the value of 6.3 expected for a magnitude threshold difference of 0.8 from the Gutenberg–Richter magnitude distribution with a b value of 1.0. The same sum for Table 1 of Kagan and Jackson (2000) is \(117 \times 10^{-9}\ \hbox{Eq/day}\times\,\hbox{km}^2\), close to the present value of \(94 \times 10^{-9}\ \hbox{Eq/day}\times\,\hbox{km}^2\). This slight difference may result from the fact that here we are using data up to the end of 2007 for estimating rate density.

The short-term forecast rates are more variable. The PDE and CMT catalogs produce similar rate densities (columns 4 and 7 of Table 2), but the 2008 forecast using the CMT differs from the corresponding value from 1999. Also, the ratio of long- and short-term rates is dissimilar for both catalogs, a difference mostly due to lower occurrence rates for the CMT catalog because of its higher magnitude threshold. Why is the ratio of long- and short-term rates higher in Table 1 by Kagan and Jackson (2000)? At that time (ibid.) we selected the region of high current activity to demonstrate high short-term rates due to nearby earthquakes also close-in-time.

4 Conclusions

We demonstrated a technique for calculating and evaluating long- and short-term earthquake rate forecasts in practically any seismically active region of the Earth. This advance was possible after our statistical analysis of the global earthquake occurrence (Kagan et al., 2010) that yielded parameter values necessary for a forecast program. The forecast tables can be tested both retrospectively and prospectively, using the methods developed by us and other researchers. In using the PDE rather than the CMT catalog, we give up moment tensor information but gain a lower magnitude threshold, a longer observation period, and consequently more earthquakes on which to base the forecast. Our comparison of forecasts based on the CMT and PDE in the NW Pacific revealed that the PDE forecasts are quantitatively similar to and produce higher resolution than the CMT. Thus, our present forecast program represents a significant progress when compared to our Pacific forecast (Kagan and Jackson, 2000).

References

Bird, P. and Kagan, Y.Y. (2004), Plate-tectonic analysis of shallow seismicity: Apparent boundary width, beta, corner magnitude, coupled lithosphere thickness, and coupling in seven tectonic settings, Bull. Seismol. Soc. Am. 94(6), 2380–2399 (plus electronic supplement).

Console, R. and Murru, M. (2001), A simple and testable model for earthquake clustering, J. Geophys. Res. 106, 8699–8711.

Console, R., Murru, M. and Catalli, F. (2006a), Physical and stochastic models of earthquake clustering, Tectonophysics, 417, 141–153.

Console, R., Rhoades, D.A., Murru, M., Evison, F.F., Papadimitriou, E.E. and Karakostas, V.G. (2006b), Comparative performance of time-invariant, long-range and short-range forecasting models on the earthquake catalog of Greece, J. Geophys. Res. 111, B09304, doi:10.1029/2005JB004113.

Console, R., Murru, M., Catalli, F. and Falcone, G. (2007), Real-Time forecasts through an earthquake clustering model constrained by the rate-and- state constitutive law: Comparison with a purely stochastic ETAS model, Seism. Res. Lett. 78(1), 49–56.

Console, R., Jackson, D.D. and Kagan, Y.Y. (2010), Using the ETAS model for catalog declustering and seismic background assessment, Pure Appl. Geophys., this issue.

Daley, D.J. and Vere-Jones, D. An Introduction to the Theory of Point Processes, Springer-Verlag, New York (2003), 2nd ed., vol. 1, 469 pp.

Ekström, G., Dziewonski, A.M., Maternovskaya, N.N. and Nettles, M. (2005), Global seismicity of 2003, Centroid-moment-tensor solutions for 1087 earthquakes, Phys. Earth Planet. Inter., 148(2–4), 327–351.

Field, E.H. (2007), Overview of the Working Group for the Development of Regional Earthquake Likelihood Models (RELM), Seism. Res. Lett. 78(1), 7–16.

Hanks, T.C. (1992), Small earthquakes, tectonic forces, Science 256, 1430–1432.

Hanks, T.C. and Kanamori, H. (1979), A moment magnitude scale J. Geophys. Res. 84, 2348–2350.

Helmstetter, A., Kagan, Y.Y. and Jackson, D.D. (2006), Comparison of short-term and time-independent earthquake forecast models for southern California, Bull. Seismol. Soc. Amer. 96(1), 90-106.

Helmstetter, A., Kagan, Y. Y. and Jackson, D. D. (2007), High-resolution time-independent grid-based forecast for M ≥ 5 earthquakes in California, Seism. Res. Lett. 78(1), 78–86.

Jackson, D.D. and Kagan, Y.Y. (1999), Testable earthquake forecasts for 1999, Seism. Res. Lett. 70(4), 393–403.

Kagan, Y.Y. (1991), Likelihood analysis of earthquake catalogs, Geophys. J. Int. 106(1), 135–148.

Kagan, Y.Y. (2003), Accuracy of modern global earthquake catalogs, Phys. Earth Planet. Inter. (PEPI) 135(2–3), 173–209.

Kagan, Y.Y. (2007), Earthquake spatial distribution: The correlation dimension, Geophys. J. Int. 168(3), 1175–1194.

Kagan, Y.Y. (2009), Testing long-term earthquake forecasts: Likelihood methods and error diagrams, Geophys. J. Int., 177(2), 532–542, doi:10.1111/j.1365-246X.2008.04064.x.

Kagan, Y.Y., Bird, P. and Jackson, D.D. (2010), Earthquake patterns in diverse tectonic zones of the Globe, Pure Appl. Geophys., this issue.

Kagan, Y.Y. and Jackson, D.D. (1994), Long-term probabilistic forecasting of earthquakes, J. Geophys. Res. 99(B7), 13,685–13,700.

Kagan, Y.Y. and Jackson, D.D. (2000), Probabilistic forecasting of earthquakes, Geophys. J. Int. 143(2), 438–453.

Kagan, Y.Y. and Jackson, D.D. (2010), Short- and long-term earthquake forecasts for California and Nevada Pure Appl. Geophys., this issue.

Kagan, Y.Y., Jackson, D.D. and Rong, Y.F. (2007), A testable five-year forecast of moderate and large earthquakes in southern California based on smoothed seismicity, Seism. Res. Lett. 78(1), 94–98.

Kagan, Y.Y., Rong, Y.F. and Jackson, D.D. Probabilistic forecasting of seismicity, Chapter 5.2 in Earthquake Science and Seismic Risk Reduction, NATO Science Series IV: Earth and Environmental Sciences, vol. 32, eds. F. Mulargia and R.J. Geller, pp. 185–200 (Kluwer, Dordrecht, The Netherlands. 2003).

Kagan, Y.Y. and Knopoff, L. (1977), Earthquake risk prediction as a stochastic process, Phys. Earth Planet. Inter., 14(2), 97−108.

Kagan, Y.Y. and Knopoff, L. (1987), Statistical short-term earthquake prediction, Science 236(4808), 1563–1567.

Lombardi, A.M. and Marzocchi, W. (2007), Evidence of clustering and nonstationarity in the time distribution of large worldwide earthquakes, J. Geophys. Res. 112, B02303, doi:10.1029/2006JB004568.

Marzocchi, W. and Lombardi, A.M. (2008), A double branching model for earthquake occurrence, J. Geophys. Res. 113, B08317, doi:10.1029/2007JB005472.

Molchan, G.M. and Kagan, Y.Y. (1992), Earthquake prediction and its optimization, J. Geophys. Res. 97(B4), 4823–4838.

Ogata, Y. (1988), Statistical models for earthquake occurrence and residual analysis for point processes, J. Am. Statist. Assoc. 83, 9–27.

Ogata, Y. (1998), Space–time point-process models for earthquake occurrences, Ann. Inst. Statist. Math. 50(2), 379–402.

Ogata, Y. and Zhuang, J.C. (2006), Space–time ETAS models and an improved extension, Tectonophysics 413(1–2), 13–23.

Rhoades, D.A. (2007), Application of the EEPAS Model to Forecasting Earthquakes of Moderate Magnitude in Southern California, Seism. Res. Lett. 78(1), 110–115.

Rhoades, D.A. and Evison, F.F. (2005), Test of the EEPAS forecasting model on the Japan earthquake catalog, Pure Appl. Geophys. 162(6–7), 1271–1290.

Rhoades, D.A. and Evison, F.F. (2006), The EEPAS forecasting model and the probability of moderate-to-large earthquakes in central Japan, Tectonophysics, 417(1–2), 119–130.

Schorlemmer, D. and Gerstenberger, M.C. (2007), RELM testing Center, Seism. Res. Lett. 78(1), 30–35.

Schorlemmer, D., Gerstenberger, M.C., Wiemer, S., Jackson, D.D. and Rhoades, D.A. (2007), Earthquake likelihood model testing, Seism. Res. Lett. 78(1), 17–29.

Stark, P.B., (1997), Earthquake prediction: The null hypothesis, Geophys. J. Int. 131(3), 495–499.

US Geological Sturvey, Preliminary determinations of epicenters (PDE), (2008), US Dep. of Inter., Natl. Earthquake Inf. Cent., http://neic.usgs.gov/neis/epic/epic.html or http://neic.usgs.gov/neis/epic/code_catalog.html.

Acknowledgments

The authors appreciate support from the National Science Foundation through grants EAR 04-09890 and EAR-0711515, as well as from the Southern California Earthquake Center (SCEC). SCEC is funded by NSF Cooperative Agreement EAR-0529922 and the US Geologic Survey (USGS) Cooperative Agreement 07HQAG0008. We thank Kathleen Jackson for significant improvements in the text. Comments by two anonymous reviewers and by the Associate Editor D. Rhoades have been helpful in the revision of the manuscript. Publication 1245, SCEC.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Kagan, Y.Y., Jackson, D.D. Earthquake Forecasting in Diverse Tectonic Zones of the Globe. Pure Appl. Geophys. 167, 709–719 (2010). https://doi.org/10.1007/s00024-010-0074-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00024-010-0074-4