Abstract

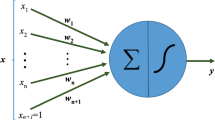

A constructive algorithm for feed-forward neural networks is proposed that uses node splitting in the hidden layers to build large networks from smaller ones. The small network forms an approximate model of a set of training data, and the split creates a larger, more powerful network that is initialised with the approximate solution already found. Because the smaller network cannot adequately model the system that generated the data, those hidden nodes whose weight vectors cover regions of the input space where the model needs more detail begin to oscillate. These nodes are identified and split in two, with principal component analysis used to place the two new nodes on the two main modes of the oscillating weight vector. Nodes are selected for splitting either by principal component analysis of the oscillating weight vectors or by examining the Hessian matrix of second derivatives of the network error with respect to the weights.
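The splitting step can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation. It assumes that each hidden node has a recorded history of its input weight vector (for example, snapshots taken after each pattern presentation during on-line training). It treats the node with the largest variance along its first principal component as the most strongly oscillating one. It places the two replacement nodes one standard deviation either side of the mean along that component, and gives each half of the original outgoing weights. All function names (`select_node_to_split`, `split_node`, and so on) are hypothetical, and the Hessian-based selection criterion is not shown.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def mean_vector(history: Sequence[Sequence[float]]) -> Vector:
    """Average of the weight-vector snapshots recorded for one hidden node."""
    n, dim = len(history), len(history[0])
    return [sum(w[i] for w in history) / n for i in range(dim)]


def covariance(history: Sequence[Sequence[float]], mu: Vector) -> Matrix:
    """Covariance of the snapshots about their mean (normalised by n)."""
    n, dim = len(history), len(mu)
    cov = [[0.0] * dim for _ in range(dim)]
    for w in history:
        d = [w[i] - mu[i] for i in range(dim)]
        for i in range(dim):
            for j in range(dim):
                cov[i][j] += d[i] * d[j] / n
    return cov


def principal_component(cov: Matrix, iters: int = 500) -> Tuple[float, Vector]:
    """Leading eigenvalue and eigenvector of a symmetric PSD matrix.

    Uses power iteration restarted from every basis vector, so at least one
    start is not orthogonal to the leading eigenvector; the start with the
    largest Rayleigh quotient (variance along v) is kept.
    """
    dim = len(cov)
    best_lam, best_v = 0.0, [1.0] + [0.0] * (dim - 1)
    for k in range(dim):
        v = [1.0 if i == k else 0.0 for i in range(dim)]
        for _ in range(iters):
            u = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
            norm = math.sqrt(sum(x * x for x in u))
            if norm == 0.0:
                break  # this start lies in the null space of cov
            v = [x / norm for x in u]
        lam = sum(v[i] * cov[i][j] * v[j] for i in range(dim) for j in range(dim))
        if lam > best_lam:
            best_lam, best_v = lam, v
    return best_lam, best_v


def oscillation_score(history: Sequence[Sequence[float]]) -> float:
    """Variance of a node's weight vector along its main mode of oscillation."""
    mu = mean_vector(history)
    lam, _ = principal_component(covariance(history, mu))
    return lam


def select_node_to_split(histories: Sequence[Sequence[Sequence[float]]]) -> int:
    """Index of the hidden node whose weight vector oscillates the most."""
    scores = [oscillation_score(h) for h in histories]
    return max(range(len(scores)), key=scores.__getitem__)


def split_node(history: Sequence[Sequence[float]], out_weights: Sequence[float]):
    """Replace one hidden node by two nodes placed along its principal component.

    Assumption (not taken from the paper): the new input weight vectors sit one
    standard deviation either side of the mean, and each new node inherits half
    of the outgoing weights, so the first-order change in the output cancels.
    """
    mu = mean_vector(history)
    lam, v = principal_component(covariance(history, mu))
    step = math.sqrt(lam)
    w_a = [m + step * x for m, x in zip(mu, v)]
    w_b = [m - step * x for m, x in zip(mu, v)]
    half = [0.5 * o for o in out_weights]
    return (w_a, half), (w_b, list(half))
```

For instance, a node whose snapshots alternate between (1, 1) and (-1, -1) has a principal-component variance of 2, so it is selected ahead of a node whose weights have settled. Splitting it yields new weight vectors at approximately (1, 1) and (-1, -1), the two modes it was oscillating between. The power iteration is used only to keep the sketch dependency-free; a practical implementation would call a proper symmetric eigensolver.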

About this article

Cite this article

Wynne-Jones, M. Node splitting: A constructive algorithm for feed-forward neural networks. Neural Comput & Applic 1, 17–22 (1993). https://doi.org/10.1007/BF01411371

DOI: https://doi.org/10.1007/BF01411371