Abstract

The objective of this study is to evaluate the accuracy of AI in the diagnosis of early cervical cancer using a systematic evaluation/meta-analysis approach and a comprehensive search of published literature. A comprehensive computer search of foreign language databases such as PubMed/MEDLINE, Embase, Cochrane Library, and IEEE; and Chinese databases such as China Knowledge Network, Wan fang Data Knowledge Platform, and Wipu.com (VIP) was conducted to retrieve reports on diagnostic accuracy of AI in early cervical cancer included between 1946 and December 2022. The literature was screened according to inclusion and exclusion criteria, and the quality of the included literature was evaluated using the QUADAS-2 quality evaluation chart. 2 × 2 diagnostic data in text were extracted and complete data were calculated using Review Manager 5.3. Heterogeneity between studies was analyzed using Stata SE 15.0 software with Meta Di Sc 1.4 and causes of heterogeneity were sought. A total of 42 data sets were included in the study of AI for the identification of benign and malignant cervical vitreous nodules, with a combined Sen value of 0.90; a combined Spe value of 0.90; a combined + LR value of 9.0; a combined −LR combined value was 0.11; DOR combined value was 83; and AUC was 0.96. The Fagan plot suggested a 50% pre-test probability and a 90% post-test probability of confirming diagnosis when the AI model diagnosed a glassy nodule positively, and a 10% probability of misdiagnosing the nodule when the result was negative. A total of 34 data sets were included in the study to determine benignity and malignancy of solid cervical nodules by AI, showing a combined Sen value of 0.92; a combined Spe value of 0.93; a combined + LR value of 13.37; a combined −LR combined value of 0.08; DOR combined value of 164; AUC of 0.97. The Fagan plot suggested a 50% pre-test probability and a 93% post-test probability of confirming the diagnosis of a solid cervical nodule when the AI model was positive, and an 8% probability of misdiagnosing the nodule when the result was negative. The results of likelihood ratio dot plots suggest that the use of an AI model for cervical detection in the clinical setting has a good exclusionary diagnostic power. Summing up the accuracy and specificity of the A1 model for diagnosis of early cervical cancer, accuracy for diagnosis of solid cervical nodules (0.90) > diagnosis of cervical nodules (0.92), and specificity for diagnosis of solid cervical nodules (0.90) > diagnosis of cervical nodules (0.93). The AI model is highly accurate in diagnosing early cervical cancer and has high clinical diagnostic value. The accuracy of the AI model in diagnosing solid nodules in the cervical region was higher than diagnosing ground glass nodules in the cervical region. The labeling method, image pre-processing method, and feature learning method affected the accuracy of the AI model in diagnosing early cervical cancer, while the choice of learning image library and validation database did not usually affect the accuracy of the model.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Cervical cancer is one of the deadliest gynecological malignancies in the world, with more than half a million new cases each year. According to Global Cancer Statistics Report 2020, the number one cancer causing death among women in 157 out of 185 countries in the world is breast cancer, and the second most common cancer is cervical cancer, with more than 600,000 new cases (6.5%) and 340,000 deaths (7.7%) reported worldwide in 2020 [1]. In China, cervical cancer accounts for about 110,000 cases (5.2%) and 59,000 deaths (5.0%), making it the sixth most prevalent cancer among women [2]. Due to the heavy burden of this disease, the World Health Organization (WHO) adopted in 2020 a global strategy to eliminate cervical cancer by 2030 through quality cervical screening, i.e., to reduce the incidence of cervical cancer to below 4 per 100,000. The long window period also provides time and opportunity for cervical cancer screening, which can be done through timely and effective cervical screening measures to detect cervical lesions and obtain early treatment [3]. As cervical cancer prevention and control efforts have been aggressively pursued, more and more precancerous cervical lesions have been detected, reducing the risk of cervical cancer and cervical cancer death by approximately 80–90% [4]. Over the years, global authorities have continued to update cervical cancer screening methods and ages to find a more integrated approach, with several important guidelines being updated. As early as 1988, the American Cancer Society (ACS) recommended that the initial population for cervical screening should be women with a history of sexual intercourse or who had reached adulthood (18 years of age), and in 2002 ACS recommended screening for women who had been sexually active for at least three years and were over 21 years of age. In 2012, cytology should be recommended every three years between the ages of 21 and 29 years, with combined screening recommended for women over 30 years of age [5]. In 2016, ACOG stated in its cervical cancer screening and prevention guidelines that FDA-approved HPV testing may be used for primary cervical screening in women aged 25 years and older, but that cytologic screening alone and combined screening is also recommended (Level B evidence). In July 2020, ACS, in its latest guidelines for cervical cancer screening, recognized the importance of primary HPV testing in screening and, for the first time, raised the age of cervical cancer screening to 25 years, with primary HPV testing preferred every 5 years between 25 and 65 years [6]. 1 primary HPV test every 5 years (strongly recommended) [7]. In July 2021, WHO recommended a change in the exact mode of initial screening to HPV DNA testing and the age of women starting screening, for the general population, from 30 years of age, using HPV DNA as initial screening and for women with human immunodeficiency virus infection from 25 years of age screening is initiated.

The emergence of AI-based cervical nodule detection systems based on deep learning has become an inevitable trend, which is a big step forward toward precision medicine [8]. The AI cervical nodule-assisted diagnosis system is based on automatically extracted computer data about cervical nodules and can quickly detect nodules and respond to nodule information including nodule location, density, and size, and predict their benign and malignant risk [9]. Many reports have shown that cervical nodule-assisted detection systems can improve the detection rate and efficiency of radiologists in detecting cervical nodules, and that use of AI software in medical imaging can reduce pressure on physicians, thus assisting them in accurate diagnosis and treatment [10]. However, the role of AI in clinical practice is still at a trial and research stage, and its performance deserves further evaluation [11]. Expanding and diversifying datasets, maintenance, external validation, cross-validation, regulatory approvals, metrics evaluation, benchmarking, clinical trials, peer review, ethical considerations, interpretability, monitoring, robustness testing, expert input, implementation, specific clinical contexts, transparency, and professional education are all part of the validation process for AI models in healthcare. AI is unique in its ability to transform image interpretation from a subjective, qualitative task to an objective, quantitative one, with its efficient mechanism for processing complex images [12]. However, the achievements of AI in the diagnosis of early cervical cancer are manifold, and the development of AI models has been greatly hampered by confusion caused by the variety of AI models and the diversity of methods. The present study will address these issues by conducting a systematic evaluation and meta-analysis of AI models for diagnosis of early cervical cancer [13]. The main topics include (1) evaluation of Sen and specificity of the AI model for diagnosis of cervical and cervical solid nodules, respectively, and (2) evaluation of Sen and specificity of the labeling method, image pre-processing method, feature learning method, and image library in diagnosis, respectively.

2 Information and Methods

2.1 Search Strategy

Foreign language databases, Chinese databases such as China Knowledge Network (CNKI), Wan Fang, and Wei Pu, to find diagnostic reports published between 1946 and December 2022 on diagnostic accuracy of A1 in early cervical cancer accuracy of report [14]. There were no language restrictions. We developed a search strategy combining keywords and medical subject terms (Me SH)/free words and will use the following expressions.

#1 “AI” [Mesh].

#2 “Machine learning model”.

#3 “Machine learning algorithms” [Title/Abstract].

#4 “Algorithms” [Title/Abstract].

#5 “Machine learning” [Title/Abstract].

#6 “Deep learning” [Title/Abstract].

#7 “Computational Approach”.

#8 “Automated-computer aided”.

#9 “Convolutional neural network”.

#10 “Artificial neuronal network”.

#11 “Support Vector Machine”.

#12 OR/#1-#11.

#13 cervical* [Mesh] [Title/Abstract].

#14 Pulmonary* [Title/Abstract].

#15 chest* [Title/Abstract].

#16 bronchial* [Title/Abstract].

#17 OR/#13-#16.

#18 nodule* [Mesh] [Title/Abstract].

#19 cancer* [Mesh] [Title/Abstract].

#20 tumor*[Title/Abstract].

#21 neoplasm* [Title/Abstract].

#22 lesion*[Title/Abstract].

#23 Carcinoma* [Title/Abstract].

#24 OR/#18-#23.

#25 #17 AND #24.

#26 #12 AND #25.

2.2 Eligibility and Exclusion Criteria

2.2.1 Inclusion Criteria

Independent assessment will be performed by 2 reviewers. Inclusion criteria are as follows: (1) At least one AI model is included to be used for diagnosis of early cervical cancer. (2) The data are complete containing sample size, Sen, specificity, or sufficient information to construct a 2*2 outcome table. (3) The gold standard for Sen and specificity reference is tissue biopsy. (4) The article generally conforms to the STARD (Standards for the Reporting of Diagnostic Accuracy Studies) statement.

2.2.2 Exclusion Criteria

-

(1)

Studies for which data were not available or could not be calculated in text, appendices, or after contacting the lead author were excluded.

-

(2)

Excluded were reviews, case controls, case reports, reviews, conference abstracts, animal studies, and other types of articles.

-

(3)

Articles with cases lacking pathological gold standard confirmation of results were excluded.

2.2.3 Study Selection and Data Extraction

Articles will be independently screened by two researchers based on title and abstract, and those that do not meet the criteria will be eliminated [15]. If there is a difference between the two researchers’ evaluations, disagreement will be resolved through discussion and, if necessary, submitted to a third researcher for independent review [16]. Following this initial stage, all remaining articles will be reviewed in full by two fellows independently, and these two fellows will determine the final study by inclusion or exclusion from unsatisfactory literature [17].

2.3 Quality Assessment

The study used the QUADAS-2 tool to assess the quality of included diagnostic accuracy literature, with two reviewers independently assessing the risk of bias for each included study [18]. The QUADAS-2 tool evaluates the patient selection, index test, reference standard, and flow/timing domains to assess the quality of diagnostic accuracy studies in systematic reviews. Consider particular elements for each domain. It has an impact on the overall caliber and dependability of the data used in systematic reviews and meta-analyses to support diagnostic accuracy. When using the QUADAS-2 tool, reviewers were asked to evaluate the risk of bias sequentially according to the four components described on the website. In the second stage, the QUADAS-2 tool is used to determine the level of risk of bias, and information in the text is used to determine the level of “High risk”, “Unclear risk”, or “Low risk”. If all items in one of the modules are scored as ‘Yes’, then a low risk of bias can be concluded; if one of the answers in one of the modules is ‘No’, then a possible risk of bias can be assessed and reviewer needs to further use guidelines provided in Stage 2 to determine the risk of bias. The reviewer needs to use the guidelines provided in Stage 2 to determine the risk of bias [19]. If literature does not provide the necessary information to make a judgment, then an ‘Unclear’ classification is applied. Disagreements, if any, will need to be resolved through discussion and consensus. The final risk of bias and quality score is presented in the form of a quality assessment chart. Decision-making is substantially influenced by quality evaluations in diagnostic trials, with high-quality studies having an impact on clinical practice, guideline creation, health policy, and budget allocation. Making educated decisions based on the information provided is ensured by rigorous and transparent processes.

2.4 Assessment of Heterogeneity

Heterogeneity was checked by visually inspecting forest plots of Sen and specificity for each study, as well as SROC curves associated with individual study results. Finding the ideal cutoff threshold for a diagnostic test is the goal of the threshold effect analysis used in investigations of diagnostic accuracy. The SROC curve shows graphically how test performance changes with thresholds, and the spot on the curve with the highest performance corresponds to the ideal threshold. The extracted data were entered into StataSE15.0 software and the ‘mid as’ command was used to perform relevant statistical analyses. Using bivariate box plots, included studies were tested for heterogeneity using Q-tests and P-statistics, with heterogeneity indicated if p < 0.1 or 12 > 50%, and insignificant heterogeneity if p > 0.1 and I2 < 50%. The ability to recognize study heterogeneity is essential for meta-analyses and systematic reviews. Cochrane's Q statistic, I2 statistic, forest plots, subgroup analysis, meta-regression, sensitivity analysis, and Baujat plot are examples of common techniques. To analyze the source of heterogeneity, the presence or absence of a threshold effect could be determined by visually determining the presence or absence of a “shoulder–arm shape” in included studies through scatter distribution of the SROC curve. Numerous sources of variance, intricate relationships, limited sample sizes, insufficient reporting, and publication bias are some of the causes of the confusion around the significance of labeling techniques in research heterogeneity. More investigation is required. Meta Di Sc 1.4 was used to calculate Spearman’s correlation coefficient and distribution of the ratio of diagnoses to the ratio of combined diagnoses for rechecking the presence of a threshold effect. In research on diagnostic accuracy, techniques like Spearman’s correlation coefficient and the ratio of diagnoses are employed to assess the threshold effect. The best diagnostic threshold for clinical decision-making is determined by measuring sensitivity and specificity, comparing positive diagnoses at various thresholds, and performing these measurements. If combined results suggest no heterogeneity, then either a fixed-effects model or a random-effects model can be used to validate combined data. If heterogeneity is determined to be due to non-threshold effects, effects can be combined using a random-effects model. Due to its capacity to take into account heterogeneity, offer conservative estimates of effect sizes, and improve analytical reliability against extreme or outlier research, a random-effects model is used for data analysis. It is consistent with the reasonable supposition that genuine impact sizes varied between studies as a result of variations in demographics, locations, or methodology.

2.5 Data Analysis

The data were imported into Stata SE 15.0 and merged using the ‘mid as’ command to integrate Sen, Spe, + LR, −LR, DOR, DS, etc., plotting SROC and calculating diagnostic metrics such as AUC, Fagan plots and likelihood ratio dot plots to determine accuracy. Sen, Spe, + LR, and −LR are common diagnostic test accuracy indicators; DOR values usually range from 0 to infinity, with larger values indicating better test identification. Through standardization, efficiency, improved picture quality, feature extraction, consistency, data integration, machine learning, and AI, and decreased reader fatigue, automatic image processing increases diagnostic accuracy, specifically sensitivity (Spe). It guarantees reliable, repeatable analysis, minimizes human error, and combines data from diverse sources for thorough analysis. When DOR < 1 it means that the test is incorrectly designed, probably due to the presence of more negative tests; the DS value is usually proportional to the AUC value and is often used for comparison with other diagnostic modalities; AUC is calculated by plotting SROC curve to reflect accuracy of diagnostic test and is usually artificially divided into five bands: 0.90 to 1 (excellent), 0.80 to The post-test probabilities were evaluated by Fagan plots to simulate improvement of pre-test probabilities by diagnostic approach; likelihood ratio dot plots were used to visualize likelihood ratios of diagnostic models. 10, LRN < 0.1 can be excluded and confirmed, RUQ: LRP > 10, LRN > 0.1 can be confirmed only, LLQ: LRP < 10, LRN < 0.1 can be excluded only, RLQ: LRP < 10, LRN > 0.1 can be excluded and confirmed neither.

2.6 Publication Bias

Dee k’s funnel plot was plotted by Stata SE 15.0 to assess the publication bias of included studies. A visual tool for meta-analysis known as Dee K’s funnel plot forms a symmetric funnel shape with the x-axis standing for effect size and the y-axis signifying study accuracy. Each point on the scatterplot represents different research. The closer the angle between the line in the plot and the X-axis is to 90°, the more publication bias exists; at the same time, p-value analysis was combined with p < 0.05 to indicate statistical significance and publication bias.

3 Results

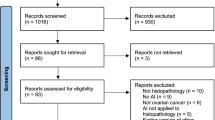

The search form was imported into databases to be searched on demand, and a total of 4719 subject-related papers were obtained, including 4077 papers in English and 642 papers in Chinese, all papers were imported into EndNote X9 software to eliminate duplicates 346 papers, and then titles and abstracts of remaining papers were browsed to exclude 3901 papers that did not meet research direction, and then full papers were obtained and read through Internet. The final total number of included studies was 68 (in addition to 8 groups of data from included literature), as shown in Fig. 1. 42 groups of data were published between 2015 and 2020, including 42 groups of data for AI identification of benign and malignant cervical nodules, and 34 groups of data for AI identification of benign and malignant solid cervical nodules [20]. The data were published between 2015 and 2020, with 42 sets of data for AI to identify benign and 34 sets of data for AI to identify solid cervical nodules [20].

The QUADAS-2 graphical display template for assessing the quality of diagnostic trials provided on the QUADAS-2 website was used to evaluate the quality of included studies in turn and to automatically generate. By methodically evaluating patient selection, index test, reference standard, flow, and timing, the QUADAS-2 graphical display template improves diagnostic trial quality evaluation. It offers clear direction, improves openness, calls for expert judgment, and supports well-informed decision-making. The responses were “Yes”, “Unclear” or “No” according to descriptions and landmark questions in each item. The risk of bias and clinical suitability of content is then rated as ‘High risk’, ‘Unclear risk’, or ‘Low risk’, and the graphic display template automatically generates green, orange, and blue colors of the quality assessment chart. The results of the quality assessment show that the overall quality of the included studies is high. The basic characteristics of the included studies are shown in Fig. 2, Tables 1 and 2.

A total of 42 studies were included in the study of AI for the identification of benign and malignant cervix. Through a systematic search, screening, full-text review, publication bias detection, data extraction, quality assessment, interpretation, meta-analysis, statistical analysis, inclusion criteria, reporting, and peer review, the AI study for differentiating between benign and malignant cervix conditions uses 42 studies. The calculated four-compartment tables were imported into StataSE15.0 software and correlation analyses were performed using the “mi das” command. The bivariate box plot showed that six studies were located outside the graph (Fig. 3) and 12 values for combined Spe were 99.12%, p = 0; 12 values for combined Sen were 99.36%, P = 0, suggesting heterogeneity of studies. To analyze the results, it was first necessary to analyze the threshold effect. The results showed that there was no "shoulder–arm" distribution of dotted lines in the SROC curve plan, suggesting that there was no threshold effect in this group of included studies; in addition, Spearman’s correlation coefficient calculated by Meta Di Sc 1.4 was − 0.387, p = 0.092 (p > 0.05), and DOR forest plot showed that distribution of ratio of diagnoses to ratio of combined diagnoses was not linear, so above two results also indicated that there was heterogeneity in included studies due to non-threshold effects. A Diagnostic Odds Ratio (DOR) forest plot, which displays study-specific DORs, an overall DOR, and quantitative indicators of heterogeneity, is crucial in a diagnostic accuracy meta-analysis. In addition to providing numerical insights into the level of heterogeneity and identifying outliers, it graphically evaluates possible heterogeneity and highlights aspects other than threshold effects. In summary, results suggest heterogeneity in the results of included articles and considering possible heterogeneity due to non-threshold effects, we will use a random-effects model to combine effect values in data analysis.

The forest plots are shown in Fig. 4, yielding a combined Sen value of 0.90 and a combined Spe value of 0.90; Fig. 5 shows a combined DOR value of 83 and a combined DS value of 4.42; Fig. 6 shows a combined + LR value of 9.0 and a combined −LR value of 0.11. The combined + LR value was 9.0 and the combined −LR value was 0.11; the SROC curve was plotted (Fig. 7) and the area under the curve AUC was 0.96 (95% CI 0.93, 0.97). The Fagan plot (Fig. 8) suggests a 50% pre-test probability and a 90% post-test probability of confirming diagnosis when the AI model diagnoses the cervix as positive, and a 10% probability of misdiagnosing nodule when the result is negative. The Fagan plot is a useful tool for evaluating the effects of the model’s test outcomes on pre-test and post-test probability when used in the context of an AI model for cervix detection. This graphical tool aids medical professionals in diagnosing patients, risk assessment, and outcomes communication. The results of likelihood ratio dot plots (Fig. 9) indicate that the use of an AI model for cervical detection in clinical practice has weak exclusionary diagnostic power.

To further analyze heterogeneity, we explored sources of heterogeneity in the Meta-analysis of AI models for diagnosing cervix from the pre-processing method and model structure of AI models, and conducted meta-regression for labeling method, image pre-processing method, feature learning method, and image library, respectively (Fig. 10). The results showed that three variables of the label generation method, image pre-processing method, and feature learning method could generate heterogeneity. To determine how these factors affect outcomes, a meta-regression analysis entails defining the research question, gathering data, standardizing it, performing an initial meta-analysis, performing meta-regression for each factor, assessing heterogeneity, determining confounders, conducting sensitivity analyses, and reporting findings. The labeling method was divided into supervised learning groups, semi-supervised learning groups, and unsupervised learning groups. The results are shown in Table 2. r was lower in the unsupervised learning group and semi-supervised learning group than before, but Spe—98.07% in the supervised learning group—was not changed from the unsupervised group, so it is not clear whether labeling method whether it was a source of heterogeneity in the study. Notably, results of subgroup analysis showed an improvement in Sen: 0.94 (95% CI 0.91, 0.95) and Spe: 0.96 (95% CI 0.93, 0.98) for the semi-supervised group, as well as improvements in −LR, + LR, DOR, and AUC. The image pre-processing methods were divided into manual and automatic image processing. The results are shown in Table 2. 12 was lower in the automatic image processing group than in the medium manual processing group, so the image pre-processing method may be a source of study heterogeneity. In this work, meta-analysis, heterogeneity assessment, sensitivity analysis, and detailed reporting are used to compare the effects of low-value automatic image processing and medium manual processing on diagnostic accuracy. Due to elements including picture quality, complexity, algorithmic efficacy, human operator experience, data characteristics, and assessment methodology, automatic image processing only received a lower score of 12 than manual image processing. The automatic image processing group showed an improvement in all diagnostic accuracy indicators, especially in Spe: 0.93 (95% CI 0.89, 0.95). The feature learning method was divided into a deep learning group and a shallow learning group. Deep learning models, similar to deep neural networks, can deal with complicated data patterns and need a lot of training data. While shallow learning methods, such as logistic regression, need less data and human feature building, they are more adaptable to fluctuations. When analyzing heterogeneity, researchers should take into account the kind of their data. The deep learning group included 25 studies and the shallow learning group included 17 studies. The subgroups were tested for heterogeneity in turn and effect sizes were combined, and results are shown in Table 2. In a meta-analysis, subgroups are identified, heterogeneity tests are run, the results are interpreted, a technique is selected, effect sizes are combined, robustness is evaluated, publication bias is evaluated, and clinical practice-relevant conclusions are drawn. In addition, the deep learning group showed an improvement in all diagnostic accuracy indicators such as Sen, Spe, −LR, + LR, DOR, and AUC. The image library was divided into the LIDC/IDRI group, its database group, and the combined group. The image library is divided into three groups: database group, which contains data from other medical image databases; LIDC/IDRI group, which consists of lung CT scan images for lung nodule analysis; and merged group, which combines data from both groups for thorough medical image analysis and research, guaranteeing data accuracy and dependability. The LIDC/IDRI group included 22 studies, the database group included 12 studies, and the combined group included 7 studies. In image library analysis, using LIDC/IDRI and one’s database groups improves data diversity, model generalization, discovery of unusual conditions, cross-dataset validation, diagnostic precision, research validity, dependability, and clinical application.

To further analyze sources of heterogeneity in included studies, Sen analysis was conducted using Stata SE 15.0 (Fig. 11). After removing each study individually, the impact analysis plot and outlier detection plot revealed the possibility of heterogeneity in six articles, but the goodness of fit and binary normality suggested that fitted images largely overlapped with the null line, indicating that combined results were relatively stable.

Publication bias was evaluated for included studies by producing Dee ‘s funnel plot (Fig. 12) via StataSE15.0 and results suggested p = 0.10 (p > 0.05) suggesting no publication bias.

4 Discussion

Cervical cancer is the fourth most common malignancy in women worldwide, and its main pathological types include squamous cell carcinoma of the cervix and adenocarcinoma of the cervix, which has caused disease and death in millions of women over past decades [23]. About 311,000 women died of cervical cancer in 2018, and about 29,500 women die of cervical cancer each year in China. The age of cervical cancer patients is gradually becoming younger, with precancerous lesions in patients under 35 years of age accounting for almost half of the total [22]. Although the launch of the bivalent HPV vaccine in China has increased the rate of HPV vaccination in Chinese women and fundamentally improved the future incidence of cervical cancer, cervical cancer is still a major threat to Chinese women due to the short time since its launch and low awareness of vaccination among Chinese women. The main cause of death in cervical cancer patients is the depth of infiltration of lesion and occurrence of lymph node metastasis. To reduce mortality and improve the prognosis of women with cervical cancer, early diagnosis, and accurate prognosis prediction are essential to guide clinical treatment, but treatment of cervical cancer has still not improved in past decades. There is an urgent need to explore an effective indicator to suggest prognosis and pathological features that will facilitate. Tumor stage, size, lymph node involvement, histological type, HPV status, invasion depth, p16 expression, immunological response, patient age, and general health are variables that affect the prognosis of cervical cancer. These variables support illness severity evaluation, treatment planning, and outcome prediction. There is an urgent need to explore an effective indicator to suggest prognostic and pathological features that will facilitate the selection of targeted therapies for cervical cancer and provide strong theoretical support for future cervical cancer treatment and prognosis prediction [21]. Enhance research quality by reviewing prior studies, enhancing methodologies, carrying out pilot studies, standardizing data, utilizing peer review, implementing open scientific practices, offering training, securing financing, working with specialists, and ensuring ethical oversight. After years of exploration in China, combining our clinical experience and foreign big data studies, an Expert Consensus on Issues Related to Cervical Cancer Screening and Abnormal Management in China was not created until 2017 to guide clinical work. By learning from European and American screening guidelines and big data analysis, CSCCP in China recommended cervical cancer screening for women aged 25–30 years [24]. Recommendations for cervical cancer screening for women between the ages of 25 and 30 are based on elements including rarity and the self-resolving nature of HPV infections. Early detection might result in needless interventions and higher healthcare expenses. HPV immunization for adolescents is advised. A healthcare professional should be consulted before making any healthcare decisions. However, at this stage, for various reasons, younger women in China, especially those under 25 years of age, are also very active in cervical cancer screening, leading to an increase in number of women under 25 years of age who are screened for cervical cancer compared to previous period, possibly due to following reasons. On one hand, they tend to request cervical cancer screening on their initiative due to their high health expectations and higher compliance than other age groups, increasing the number of universal and opportunistic cervical cancer screenings. On the other hand, it has been documented that women who receive the HPV vaccine have a lower risk of developing high-grade cervical lesions compared to those who do not receive the HPV vaccine and that the HPV vaccine reduces the incidence of cervical lesions and cervical cancer. As more young women learn about the long-term benefits of HPV vaccination for women’s health, more women of the right age want to be vaccinated against HPV, and some of them have a history of sexual activity or are even married with children and have not yet been vaccinated against HPV. Most vaccination facilities require a cervical cancer screening report from the recipient, so they will go to the hospital to be screened for cervical cancer on their initiative. Due to the disparity in medical standards between different institutions in China and the specificity of women under 25 years of age, it is difficult for many health professionals to consistently follow guidelines for post-screening management, or to misinterpret test results and over-treat a transient HPV infection that did not need to be over-treated, while patients who should be referred for immediate colposcopy are hesitant to do so, resulting in patients losing out on treatment. The best time to treat patients is lost. In clinical practice, fear of cervical cancer in young women increases the rate of colposcopy biopsies due to unnecessary referrals for colposcopy, which in turn increases the rate of overdiagnosis. Young women have special characteristics of colposcopy images, and there is currently a lack of multicenter, large sample, and prospective research in China on whether it is possible to minimize colposcopy biopsy rates, quickly and efficiently detect hidden high-grade cervical lesions, and reduce the harm of overtreatment or missed diagnosis to women in their reproductive period. The use of evidence-based recommendations, patient involvement, risk assessment tools, routine screening programs, advanced technology, second opinions, early detection awareness, monitoring, surveillance, education, patient advocacy, and quality assurance are just a few of the strategies being used by healthcare providers to lessen overtreatment and missed diagnoses in women of reproductive age.

Meta-analysis of AI model for diagnosis of cervix showed a combined Sen of 90% and a combined specificity of 90%, indicating a 10% miss rate and a 10% misdiagnosis rate, respectively; combined value of + LR was 9.0, indicating that true positive rate of positive nodules diagnosed by AI model was 9 times higher, and combined value of −LR was 0.11, indicating that negative nodules diagnosed by AI model were 0.11 times more likely to be false negative. The DOR was 83 and AUC was 0.96, indicating that the accuracy of diagnosis of the cervix was high; assuming a pre-test probability of 50%, the post-test probability of a diagnosis of the cervix by AI model showed a 90% probability of confirming diagnosis when test result was positive, and a 10% probability of misdiagnosing nodule when the result was negative, indicating that A1 model was effective in the diagnosis of cervix. Consequences might include overdiagnosis, missed diagnoses, and significant clinical, emotional, and financial effects for AI models with a 90% positive diagnosis rate and a 10% mistake rate. Those making decisions should evaluate this probability and take into account techniques like confirmatory testing and risk tolerance analysis. The likelihood ratios of individual studies were summarized in likelihood ratio dot plots, suggesting that the AI model was more evenly distributed across four quadrants of dot plots and that combined results were in the RLQ quadrant, indicating that the AI model was weak in detecting cervical nodules and prone to false-positive and false-negative results. Due to elements including data consistency, standard data collection, strong AI algorithms, enough sample size, controlled variables, rigorous assessment techniques, and random-effects models, the study’s diagnostic accuracy was not greatly impacted by the database used. The bivariate box plots and r values of Spe suggest heterogeneity in the study. To investigate the source of heterogeneity, Meta-regression was conducted for several underlying variables such as labeling method, image pre-processing method, feature learning method, and image library, respectively. The results showed that three variables, namely the label generation method, image pre-processing method, and feature learning method, were likely to generate heterogeneity.

This study also has some limitations: first, the proportion of malignant tumors in the original study sample included in the article was much higher, which may lead to overfitting and more optimistic results, so results still need prospective large sample studies to confirm conclusions; second, for literature where complete diagnostic data were not available in main text, we were still unable to obtain them by downloading original supplementary data and contacting original authors. Third, the study conducted a comprehensive search of relevant databases, but only included literature in English and Chinese, which had some impact on the systematicity of the study; fourth, original studies were mainly retrospective, and the quality of original studies would affect the quality of systematic evaluation.

5 Conclusions

According to the study, feature learning techniques and picture pre-processing might increase heterogeneity. Due to human label input and repeated validation learning, the diagnostic accuracy of the semi-supervised and autonomous image processing groups increased. Deep learning’s complex algorithms and logical framework led to better diagnostic outcomes. The accuracy of diagnosis was unaffected by database selection. Sen analysis, which excluded trials with higher heterogeneity, indicated steady combined results.

Using 42 data sets, the study examined the utility of AI for differentiating between benign and malignant cervical vitreous lesions. When the AI model was positive, the findings indicated a high accuracy rate of 50% pre-test probability and 90% post-test likelihood of confirming a diagnosis. The study also discovered that the AI model’s exclusionary diagnostic power for cervical detection was high. When detecting solid nodules in the cervical area as opposed to ground glass nodules, the AI model’s accuracy was greater. The labeling approach, picture pre-processing method, and feature learning method all had an impact on the AI model’s accuracy.

Code Availability

Not applicable.

Data Availability

No datasets were generated or analyzed during the current study.

Change history

03 June 2024

This article has been retracted. Please see the Retraction Notice for more detail: https://doi.org/10.1007/s44196-024-00540-6

References

Yu, Y., Wang, J., Tan, Y., Wan, H., Zheng, N., He, Z., Mao, L., Ren, W., Lin, Z., He, G., Chen, Y.: 1136p a clinically applicable cervical cancer artificial intelligence screening system for accurate cytopathological diagnosis: a multicenter population-based study and randomized controlled trial. Ann. Oncol. 32, S925–S926 (2021)

Chen, Y.M.: Artificial intelligence assisted computational tomographic detection of lung nodules for prognostic cancer examination: a large-scale clinical trial. Biomedicines 11, 147 (2023)

Olson, L.G., Throne, R.D., Rusnak, E.I., Gannon, J.P.: Force-based stiffness mapping for early detection of breast cancer. Inverse Probl. Sci. Eng. 29, 1–35 (2021)

Nishimura, H., Ping, T.Y., Oguntade, H., Kennedy, C.E., Narasimhan, M.: Hpv self-sampling for cervical cancer screening: a systematic review of values and preferences. Br. Med. J. Glob. Health 6, e003743 (2021)

Zhang, H., Chen, C., Ma, C., Chen, C., Lv, X.: Feature fusion combined with Raman spectroscopy for early diagnosis of cervical cancer. IEEE Photonics J. 13, 1–11 (2021)

Senthilkumar, G., Ramakrishnan, J., Frnda, J., Ramachandran, M., Mohammed, M.A.: Incorporating artificial fish swarm in ensemble classification framework for recurrence prediction of cervical cancer. IEEE Access 9, 83876–83886 (2021)

Guo, C., Wang, J., Wang, Y., Qu, X., Hua, K.: Novel artificial intelligence machine learning approaches to precisely predict survival and site-specific recurrence in cervical cancer: a multi-institutional study. Transl. Oncol. 14, 101032 (2021)

Munetoshi, A., Hashimoto, K.: Artificial intelligence in gynecologic cancers: current status and future challenges – a systematic review. Artif. Intell. Med. 120, 102164 (2021)

Mai, A.G., El-Ghoneimey, S., Ibrahim, S.A.S., Mohamedhanafy, S.: Systemic lupus erythematosus as a risk factor for cervical cancer and its precursor conditions: assessment using pap smear and histopathology original research. Gynecol. Obstet. Res.–Open J. 8, 1–7 (2021)

Al, J.: Cervical cancer diagnosis system using ant-miner for managing the knowledge in medical database. Turkish J. Comput. Math. Educ. (TURCOMAT). 12, 1728–1737 (2021)

Yang, H., Chen, H., Ni, R., Zhang, G., Huang, Y., Ye, X., Fan, X., Kuang, Y., Zhang, J., Huang, C., Liu, H.: Circulating genetically abnormal cells combined with artificial intelligence for accurate and non-invasive early detection on nsclc. J. Clin. Oncol. 39, 3056–3056 (2021)

Gupta, S., Saulnier, G., Newll, J., Faaborg-Andersen, C., Kelley, R.S., Vargas, A.: Uterine bioimpedance combined with artificial intelligence as a means of cancer detection. J. Med. Eng. Technol. 45, 606–613 (2021)

Dan, L., Li, Z., Gu, Y., Ge, S., Mao, Y., Gu, Y., Cao, X.: A novel sers biosensor for ultrasensitive detection of hpv-e7 and opn based on a cascade signal amplification strategy of catalytic hairpin assembly and hybridization chain reaction. Mater. Chem. Front. 6, 1331–1343 (2022)

Ilyas, Q.M., Ahmad, M.: An enhanced ensemble diagnosis of cervical cancer: a pursuit of machine intelligence towards sustainable health. IEEE Access 9, 12374–12388 (2021)

Naderan, M.: Review methods for breast cancer detection using artificial intelligence and deep learning methods. Syst. Res. Inf. Technol. (2021). https://doi.org/10.20535/SRIT.2308-8893.2021.1.08

Young, M.R., Abrams, N.F., Ghosh-Janjigian, S., Rinaudo, J., Marquez, G., Koay, E., Kenner, B., Srivastava, S.: Prediagnostic images, electronic health records, and artificial intelligence: early detection of pancreatic cancer. Pancreas 50, 1111–1111 (2021)

Urushibara, A., Saida, T., Mori, K., Ishiguro, T., Masumoto, T.: Diagnosing uterine cervical cancer on a single t2-weighted image: comparison between deep learning versus radiologists. Eur. J. Radiol. 135, 109471 (2020)

Begum, V.: Detection of cervical cancer using multiple neuro fuzzy inference technique. Int. J. Multidiscip. Res. Rev. 6, 725–729 (2019)

Crowell, E.F., Bazin, C., Thurotte, V., Elie, H., Renoouf, A.: Adaptation of cytoprocessor for cervical cancer screening of challenging slides. Diagn. Cytopathol. 47, 890–897 (2019)

Dong, J.R., Jhingran, A., Kisling, K., Cardenas, C., Court, L.: Automated radiation treatment planning for cervical cancer. Semin. Radiat. Oncol. 30, 340–347 (2020)

Long, Y., Yao, D.S., Wei, Y.S., Chen, J., Ye, X.Q.: Perineural invasion in early-stage cervical cancer: detection and influence on prognosis. Eur. J. Gynaecol. Oncol. 40, 452–456 (2019)

Tang, D., Wang, L., Ling, T., Lv, Y., Zou, X.: Development and validation of a real-time artificial intelligence-assisted system for detecting early gastric cancer: a multicenter retrospective diagnostic study. EBioMedicine 62, 103146 (2020)

Bedell, S.L., Goldstein, L.S., Goldstein, A.R., Goldstein, A.T.: Cervical cancer screening: past, present, and future. Sex. Med. Rev. 8, 28–37 (2019)

Aiswarya, R.S., Kumar, V., Punitha, P.: The effect of grain size and silicon content on non-oriented grain steel anomalous loss through frequency excitation in medical healthcare by using big data analysis. Tamjeed J. Healthc. Eng. Sci. Technol. 1, 43–53 (2023)

Funding

The authors did not receive any funding.

Author information

Authors and Affiliations

Contributions

DQ, CZ, HZ, XY, is responsible for designing the framework, analyzing the performance, validating the results, and writing the article. GR, SZ, MW, ZP, is responsible for collecting the information required for the framework, provision of software, critical review, and administering the process.

Corresponding author

Ethics declarations

Conflict of Interest

Authors do not have any conflicts.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Corresponding author: Zhigang Pei

This article has been retracted. Please see the retraction notice for more detail: https://doi.org/10.1007/s44196-024-00540-6

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Qin, D., Zhang, C., Zhou, H. et al. RETRACTED ARTICLE: Meta-analysis of Artificial Intelligence-Assisted Pathology for the Detection of Early Cervical Cancer. Int J Comput Intell Syst 16, 189 (2023). https://doi.org/10.1007/s44196-023-00367-7

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s44196-023-00367-7