Abstract

This paper studies a two-agent strategic model of capital accumulation with heterogeneity in preferences and income shares. Preferences are represented by recursive utility functions that satisfy decreasing marginal impatience. The stationary equilibria of this dynamic game are analyzed under two alternative information structures: one in which agents precommit to future actions, and one in which they use Markovian strategies. In both cases, we develop sufficient conditions for the existence of these equilibria and characterize their stability properties. Under certain regularity conditions, a precommitment equilibrium exhibits monotone convergence of aggregate variables, whereas Markovian equilibria may exhibit nonmonotonic paths, even in the long run.

Notes

Other significant early contributions include Mantel [36], Uzawa [55], Lucas and Stokey [35], and Epstein [25], and, more recently, Mantel [37,38,39], Das [19], and Stern [52]. The key difference among these approaches lies in how the rate of time preference is modeled as a function of consumption or capital.

These properties of optimal growth models with recursive preferences were actively investigated by the late Rolf Mantel. Although Mantel’s work along these lines is far less known than his celebrated results on the Sonnenschein–Mantel–Debreu Theorem in General Equilibrium Theory, it follows from the same foundational concerns. For a detailed treatment, see Tohmé [54].

For further insightful discussion of applications of strategic dynamic programming methods, see the Preface to the Special Issue on Dynamic Games in Macroeconomics by Prescott and Reffett [44] and the references therein.

For consistency, the following conventions are adopted: \(\prod \nolimits _{s=0}^{-1}\alpha _i(c_s^i)=1\) and \(\prod \nolimits _{s=0}^{0}\alpha _i(c_s^i)=\alpha _i(c_0^i)\).

For instance, in a game of capital accumulation with a non-concave production function, Dockner and Nishimura [23] use a continuity property and the monotonicity of the optimal path. Erol et al. [26] apply tools of nonsmooth analysis to show that the value function is differentiable almost everywhere, which leads to uniqueness of the optimal path. Camacho et al. [10] obtain similar results in a strategic environment, for the case of open-loop strategies, relying on the theory of monotone comparative statics and supermodularity.

For an in-depth treatment of the saddle-point property and minimax problems in finite dimensions, see the classic work by Rockafellar [45]. A recent discussion of the optimization of concave–convex functions in Banach spaces can be found in Barbu and Precupanu [4], although many important results are restricted to reflexive Banach spaces.

The assumption that the sequence \(\mathbf {l}^i\) begins at \(t=0\) and \(\mathbf {m}^i\) at \(t=1\) is innocuous, but turns out to be quite important for the economic interpretation of these values.

A similar approach is followed in Lucas and Stokey [35].

This is a slight abuse of terminology, since \(\delta _i\), \(\omega _i\) and \(\eta _i\), \(i=1,2\), are not exactly parameters, but expressions derived from the model’s primitives evaluated at a stationary point \((\overline{c}^i,\overline{c}^j,\overline{k})\). If we were to assume specific functional forms for the fundamentals, they would ultimately depend on certain parameters.

A related condition, albeit presented in statistical terms, called no-upward-crossing, has been recently introduced by Chade and Swinkels [15] in a study of the first-order approach to the classical moral hazard problem.

Details of this analysis are given in “Appendix B”.

The analysis of non-hyperbolic fixed points is based on the center manifold. See Galor [28, Ch. 4] and references therein.

For a review of the theory of infinite and related finite matrices, as sections or truncations, in the light of modern operator theory, see Shivakumar and Sivakumar [48]. Diagonally dominant infinite matrices occur in many applications, including partial differential equations and dynamical systems.

In this expression we adopt the conventions that \(\sum \nolimits _{s=0}^{-1}\delta _s =0\) and \(\sum \nolimits _{s=0}^{0}\delta _s =\delta _0\).

With this functional form for periodic utility, \(w_t\) can take negative values. But, since \(0< c_t < f(k_m)\), \(u(c_t)\) is bounded, and all previous results remain valid as long as \(U_t >0\) and \(W_t < 0\) hold.

Young’s inequality states that if a and b are nonnegative real numbers, and p and q positive real numbers such that \(1/p+1/q=1\), then \(ab \le a^p/p+b^q/q\).

The theorem states that every \(n \times n\) matrix A over the real field, invertible or not, satisfies its own characteristic polynomial.

Allowing for complex roots does not alter the results in any significant way, so that case is omitted.

References

Arrow KJ, Debreu G (1954) Existence of an equilibrium for a competitive economy. Econometrica 22(3):265–290

Başar T, Olsder GJ (1999) Dynamic noncooperative game theory, classics in applied mathematics, vol 23, 2nd edn. SIAM, Philadelphia

Banks J, Duggan J (2004) Existence of Nash equilibria on convex sets. Working paper, W. Allen Wallis Institute of Political Economy, University of Rochester

Barbu V, Precupanu T (2012) Convexity and optimization in Banach spaces. Springer Monographs in Mathematics, 4th edn. Springer, Dordrecht

Beals R, Koopmans T (1969) Maximizing stationary utility in a constant technology. SIAM J Appl Math 17(5):1001–1015

Becker R (2006) Equilibrium dynamics with many agents. In: Dana RA, Le Van C, Mitra T, Nishimura K (eds) Handbook on optimal growth 1. Discrete time. Springer, Berlin, pp 385–442

Becker R, Foias C (1998) Implicit programming and the invariant manifold for Ramsey equilibria. In: Abramovich Y, Avgerinos E, Yannelis N (eds) Functional analysis and economic theory. Springer, Berlin, pp 119–144

Becker R, Foias C (2007) Strategic Ramsey equilibrium dynamics. J Math Econ 43(3):318–346

Becker R, Dubey R, Mitra T (2014) On Ramsey equilibrium: capital ownership pattern and inefficiency. Econ Theory 55(3):565–600

Camacho C, Saglam C, Turan A (2013) Strategic interaction and dynamics under endogenous time preference. J Math Econ 49(4):291–301

Carlson D, Haurie A (1995) A turnpike theory for infinite horizon open-loop differential games with decoupled controls. In: Olsder GJ (ed) New trends in dynamic games and applications. Birkhäuser, Boston, pp 353–376

Carlson D, Haurie A (1996) A turnpike theory for infinite-horizon open-loop competitive processes. SIAM J Control Optim 34(4):1405–1419

Carlson D, Haurie A, Zaccour G (2016) Infinite horizon concave games with coupled constraints. In: Başar T, Zaccour G (eds) Handbook of dynamic game theory. Springer, Basel, pp 1–44

Carlson DA, Haurie AB (2000) Infinite horizon dynamic games with coupled state constraints. In: Filar JA, Gaitsgory V, Mizukami K (eds) Advances in dynamic games and applications. Birkhäuser, Boston, pp 195–212

Chade H, Swinkels J (2017) The no-upward-crossing condition and the moral hazard problem. Working paper, Department of Economics, Arizona State University

Coleman WJ (1991) Equilibrium in a production economy with an income tax. Econometrica 59(4):1091–1104

Coleman WJ (1997) Equilibria in distorted infinite-horizon economies with capital and labor. J Econ Theory 72(2):446–461

Coleman WJ (2000) Uniqueness of an equilibrium in infinite-horizon economies subject to taxes and externalities. J Econ Theory 95(1):71–78

Das M (2003) Optimal growth with decreasing marginal impatience. J Econ Dyn Control 27(10):1881–1898

Datta M, Mirman LJ, Reffett K (2002) Existence and uniqueness of equilibrium in distorted dynamic economies with capital and labor. J Econ Theory 103(2):377–410

Dechert WD (1982) Lagrange multipliers in infinite horizon discrete time optimal control models. J Math Econ 9(3):285–302

Dockner E, Nishimura K (2004) Strategic growth. J Differ Equ Appl 10(5):515–527

Dockner E, Nishimura K (2005) Capital accumulation games with a non-concave production function. J Econ Behav Organ 57(4):408–420

Drugeon JP, Wigniolle B (2017) On impatience, temptation and Ramsey’s conjecture. Econ Theory 63(1):73–98

Epstein LG (1987) A simple dynamic general equilibrium model. J Econ Theory 41(1):68–95

Erol S, Le Van C, Saglam C (2011) Existence, optimality and dynamics of equilibria with endogenous time preference. J Math Econ 47(2):170–179

Fesselmeyer E, Mirman LJ, Santugini M (2016) Strategic interactions in a one-sector growth model. Dyn Games Appl 6(2):209–224

Galor O (2007) Discrete dynamical systems. Springer, Berlin

Geoffard PY (1996) Discounting and optimizing: capital accumulation problems as variational minmax problems. J Econ Theory 69(1):53–70

Greenwood J, Huffman G (1995) On the existence of nonoptimal equilibria in dynamic stochastic economies. J Econ Theory 65(2):611–623

Horn R, Johnson C (1990) Matrix analysis. Cambridge University Press, Cambridge

Houba H, Sneek K, Vardy F (2000) Can negotiations prevent fish wars? J Econ Dyn Control 24(8):1265–1280

Iwai K (1972) Optimal economic growth and stationary ordinal utility: a Fischerian approach. J Econ Theory 5(1):121–151

Le Van C, Saglam C (2004) Optimal growth models and the Lagrange multiplier. J Math Econ 40(3–4):393–410

Lucas RE, Stokey N (1984) Optimal growth with many consumers. J Econ Theory 32(1):139–171

Mantel RR (1967) Maximization of utility over time with a variable rate of time preference. Discussion paper, Cowles Foundation for Research in Economics, CF-70525(2), Yale University

Mantel RR (1993) Grandma’s dress, or what’s new for optimal growth. Rev Anal Econ 8(1):61–81

Mantel RR (1995) Why the rich get richer and the poor get poorer. Estud Econ 22(2):177–205

Mantel RR (1999) Optimal economic growth with recursive preferences: decreasing rate of time preference. Económica 45(2):331–348

McKenzie L (1959) On the existence of general equilibrium for a competitive market. Econometrica 27(1):54–71

Mirman LJ, Morand O, Reffett K (2008) A qualitative approach to Markovian equilibrium in infinite horizon economies with capital. J Econ Theory 139(1):75–98

Pichler P, Sorger G (2009) Wealth distribution and aggregate time-preference. J Econ Dyn Control 33(1):1–14

Ponstein J (1981) On the use of purely finitely additive multipliers in mathematical programming. J Optim Theory Appl 33(1):37–55

Prescott EC, Reffett K (2016) Preface: special issue on dynamic games in macroeconomics. Dyn Games Appl 6(2):157–160

Rockafellar RT (1970) Convex analysis. Princeton University Press, Princeton

Rockafellar RT, Wets RJB (1976) Stochastic convex programming: relatively complete recourse and induced feasibility. SIAM J Control Optim 14(3):574–589

Rosen JB (1965) Existence and uniqueness of equilibrium points for concave \(N\)-person games. Econometrica 33(3):520–534

Shivakumar P, Sivakumar K (2009) A review of infinite matrices and their applications. Linear Algebra Appl 430(4):976–998

Sorger G (2002) On the long-run distribution of capital in the Ramsey model. J Econ Theory 105(1):226–243

Sorger G (2006) Recursive Nash bargaining over a productive asset. J Econ Dyn Control 30(12):2637–2659

Sorger G (2008) Strategic saving decisions in the infinite-horizon model. Econ Theory 36(3):353–377

Stern M (2006) Endogenous time preference and optimal growth. Econ Theory 29(1):49–70

Stokey N, Lucas RE, Prescott EC (1989) Recursive methods in economic dynamics. Harvard University Press, Cambridge

Tohmé F (2006) Rolf Mantel and the computability of general equilibria: on the origins of the Sonnenschein–Mantel–Debreu theorem. Hist Polit Econ 38(Suppl. 1):213–227

Uzawa H (1968) Time preference, the consumption function and optimum assets holdings. In: Wolfe J (ed) Value, capital, and growth: papers in honour of Sir John Hicks. Aldine, Chicago, pp 485–504

Acknowledgements

Luis Alcalá acknowledges financial support from the Universidad Nacional de San Luis, through Grant PROICO 319502, and from the Consejo Nacional de Investigaciones Científicas y Técnicas (CONICET), through Grant PIP 112-200801-00655. We thank an associate editor and an anonymous referee whose insightful comments and detailed suggestions led to significant improvements of the paper.


Appendices

Appendix

Some Fundamental Issues: Details and Proofs

1.1 Joint Concavity

In this section, we develop sufficient conditions for the joint concavity of each player’s utility function. We base our analysis on the following results from Horn and Johnson [31].

Definition 4

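Let \(M_n\) be the space of \(n \times n\) real symmetric matrices, and let \(A=[a_{ij}] \in M_n\). The matrix A is said to be diagonally dominant if

\[ |a_{ii}| \ge \sum _{j \ne i}|a_{ij}|, \quad \text {for all } i=1,2,\ldots ,n. \]

It is said to be strictly diagonally dominant if

\[ |a_{ii}| > \sum _{j \ne i}|a_{ij}|, \quad \text {for all } i=1,2,\ldots ,n. \]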

Theorem 3

If \(A=[a_{ij}] \in M_n\) is strictly diagonally dominant and if \(a_{ii} < 0\) for all \(i=1,2,\ldots ,n\), then A is negative definite.
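Theorem 3 is easy to check numerically. The sketch below, using NumPy and hypothetical matrices (not taken from the model), tests row-wise strict diagonal dominance and confirms negative definiteness through the eigenvalues. The second matrix illustrates that the converse fails: diagonal dominance is only sufficient.

```python
import numpy as np

def is_strictly_diagonally_dominant(A) -> bool:
    """Row-wise test: |a_ii| > sum_{j != i} |a_ij| for every row i."""
    A = np.asarray(A, dtype=float)
    diag = np.abs(np.diag(A))
    off_diag = np.abs(A).sum(axis=1) - diag
    return bool(np.all(diag > off_diag))

def is_negative_definite(A) -> bool:
    """A symmetric matrix is negative definite iff all its eigenvalues are negative."""
    return bool(np.all(np.linalg.eigvalsh(np.asarray(A, dtype=float)) < 0))

# Hypothetical symmetric matrix: negative diagonal, strictly dominant rows.
H = np.array([[-3.0, 1.0, 0.5],
              [1.0, -2.5, 1.0],
              [0.5, 1.0, -2.0]])
if is_strictly_diagonally_dominant(H) and np.all(np.diag(H) < 0):
    # Theorem 3 guarantees negative definiteness; the eigenvalues confirm it.
    print(np.linalg.eigvalsh(H), is_negative_definite(H))

# The converse fails: M is negative definite but not strictly diagonally dominant.
M = np.array([[-1.0, 1.5], [1.5, -3.0]])
print(is_negative_definite(M), is_strictly_diagonally_dominant(M))
```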

1.1.1 Strict Diagonal Dominance and Strict Concavity

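To simplify the exposition, the superscripts \(i,j=1,2\) will be omitted. First, we consider a finite-horizon version of the intertemporal utility function (1), which is simply

\[ w_0^T(\mathbf {c}_T)=\sum _{t=0}^{T}\left( \prod \nolimits _{s=0}^{t-1}\alpha (c_s)\right) u(c_t), \]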

where \(1 \le T < \infty \) and \(\mathbf {c}_T:=(c_0,c_1,\ldots ,c_T)\) denotes a finite consumption sequence, and develop some conditions to determine whether \(w_0^T\) is concave. Second, we analyze the limiting behavior of this utility function as T tends to infinity.Footnote 15

Some preliminaries are needed for the analysis. Let \(\mathbf {c}_{t,T}:=(c_t,c_{t+1},\ldots ,c_{T-1},c_{T})\), with \(\mathbf {c}_{0,T}:= \mathbf {c}_T\), denote a consumption subsequence of \(\mathbf {c}_T\) starting at some \(0 \le t < T\). The continuation utility associated to \(\mathbf {c}_{t,T}\) will be defined as

We also adopt the convention that \(w_T^T = u(c_T)\).

To simplify notation, let \(u_t:=u(c_t)\) and \(\alpha _t:=\alpha (c_t)\), for each \(c_t \ge 0\). Hence, the objective function can be written more succinctly as

\[ w_0^T=\sum _{t=0}^{T}\beta _t u_t, \]

where \(\beta _t:= \prod _{s=0}^{t-1}\alpha _s\) is the discount factor at period \(t=0,1,\ldots ,T\). Then, we compute the first and second partial derivatives of \(w_0^T\), which are given by

and

We also introduce the following notation, which will be used throughout the paper:

for all \(t=0,1,\ldots ,T\).
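As a sanity check on this notation, the following sketch evaluates the finite-horizon utility in two ways: as the \(\beta _t\)-weighted sum and by the backward recursion \(w_t^T=u(c_t)+\alpha (c_t)w_{t+1}^T\) with \(w_T^T=u(c_T)\). The recursive form and the functions `alpha` and `u` are hypothetical choices for illustration only (an increasing discount function bounded below 1 and a concave periodic utility), not the paper's specification.

```python
import numpy as np

def alpha(c):
    # Hypothetical increasing discount function, taking values in [0.6, 0.9).
    return 0.9 - 0.3 * np.exp(-c)

def u(c):
    # Hypothetical periodic utility.
    return np.sqrt(c)

def w0_by_sum(c):
    """w_0^T = sum_t beta_t u(c_t), with beta_0 = 1 and beta_t = prod_{s<t} alpha(c_s)."""
    c = np.asarray(c, dtype=float)
    beta = np.concatenate(([1.0], np.cumprod(alpha(c[:-1]))))
    return float(np.sum(beta * u(c)))

def w0_by_recursion(c):
    """Backward recursion w_t^T = u(c_t) + alpha(c_t) w_{t+1}^T, starting from w_T^T = u(c_T)."""
    w = u(c[-1])
    for ct in reversed(c[:-1]):
        w = u(ct) + alpha(ct) * w
    return float(w)

c_T = [1.0, 1.2, 0.8, 1.5, 1.1]  # a finite consumption path c_0, ..., c_T with T = 4
print(w0_by_sum(c_T), w0_by_recursion(c_T))  # the two evaluations coincide up to rounding
```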

As mentioned earlier, by Theorem 3, to show that (62) is strictly concave at \(\mathbf {c}_T\) it suffices to prove that the Hessian matrix of \(w_0^T\) has negative diagonal entries and is strictly diagonally dominant at \(\mathbf {c}_T\). The Hessian matrix of \(w_0^T\) at \(\mathbf {c}_T\) is given by:

Note that all the diagonal entries of this matrix are strictly negative. Hence, by Theorem 3, it suffices that \({\mathscr {H}}\) be strictly diagonally dominant, which requires the following \((T+1)\) inequalities:

where \(D_t : = \sum _{s=0}^{t-1}\delta _s\), for all t.Footnote 16
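For reference, the Hessian entries and the row conditions can be reconstructed as follows. This is a sketch, assuming the recursion \(w_t^T=u_t+\alpha _t w_{t+1}^T\) (with \(w_{T+1}^T:=0\)) and the abbreviations \(U_t:=u'(c_t)+\alpha '(c_t)w_{t+1}^T\), \(W_t:=u''(c_t)+\alpha ''(c_t)w_{t+1}^T\), and \(\delta _t:=\alpha '(c_t)/\alpha (c_t)\). These definitions are our assumption, chosen to agree with how \(U_t\), \(W_t\), \(\delta _t\), and \(D_t\) are used in the text; they may differ in detail from the paper's own displays. The key step is \(\partial \beta _\tau /\partial c_t=\delta _t\beta _\tau \) for \(\tau >t\); we also assume \(U_s>0\) and \(\delta _s \ge 0\).

```latex
\[
\frac{\partial w_0^T}{\partial c_t}=\beta_t U_t,\qquad
\frac{\partial^2 w_0^T}{\partial c_t^2}=\beta_t W_t,\qquad
\frac{\partial^2 w_0^T}{\partial c_t\,\partial c_s}=\delta_t\,\beta_s U_s \quad (t<s),
\]
\[
-\beta_t W_t \;>\; \beta_t U_t D_t \;+\; \delta_t \sum_{s=t+1}^{T}\beta_s U_s,
\qquad t=0,1,\ldots,T.
\]
```

The first term on the right collects the entries to the left of the diagonal in row \(t\), and the second collects those to the right; the sum is empty for \(t=T\). Letting \(T \rightarrow \infty \) under the assumptions below replaces this sum with \(\delta _t{\mathscr {U}}_{t+1}\).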

Certain conditions must be imposed for (66)–(67) to be well defined in the limit as \(T \rightarrow \infty \). Since most of our results are developed for neighborhoods of interior stationary points, it is reasonable to restrict the analysis to convergent positive consumption sequences. Then, we assume that \(\mathbf {c}_T\) converges to some feasible value, say \(c >0\). Let \(w_t\) be the infinite-horizon continuation value at t, given by \(w_t:=\lim _{T \rightarrow \infty } w_t^T\). This limit is well defined if the consumption sequence is convergent. In turn, this allows us to define

Further suppose that \({\mathscr {U}}_t: = \lim _{T \rightarrow \infty }\ \sum _{\tau =t}^T \beta _\tau U_\tau \) exists in \({\mathbb {R}}_+\), for all t. Note that these definitions are consistent with the notation introduced in Sect. 4 and used throughout the paper. We can conclude that the conditions for strict diagonal dominance for the Hessian matrix \({\mathscr {H}}\) in the infinite-horizon case are

which are obtained by taking limits in (66)–(67).

1.1.2 An Example

We consider functional forms similar to those in Stern [52] and Erol et al. [26], adapted to our framework. The discount function is assumed to have the exponential form

with \(0< {\bar{\alpha }} < 1\), \(1< \rho < +\infty \), and \(0< \gamma < 1\), and the periodic utility function belongs to the standard CRRA class,Footnote 17

Then, for some positive consumption sequence \(\mathbf {c}=\{c_t\}_{t=0}^\infty \) that converges to some \(c >0\), we have that

where

Observe that \(\lim _{t \rightarrow \infty } \beta _t =0\) and \(0< \delta _t < \gamma \), for all t. Moreover, if \(w_{t+1} > 0\), then \(W_t\) is negative, as \(c_t+ \rho > (\gamma /(1+\gamma ))^{\frac{1}{\gamma }}\).
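One discount function consistent with both claims above is \(\alpha (c)={\bar{\alpha }}\exp (-(c+\rho )^{-\gamma })\). This form is our reconstruction, not a quotation: it gives \(\delta =\alpha '/\alpha =\gamma (c+\rho )^{-\gamma -1}<\gamma \) because \(\rho >1\), and \(\alpha ''<0\) exactly when \(c+\rho >(\gamma /(1+\gamma ))^{1/\gamma }\). The sketch checks both properties on a grid for hypothetical parameter values.

```python
import numpy as np

# Hypothetical parameters with 0 < abar < 1, rho > 1, 0 < gamma < 1.
abar, rho, gamma = 0.95, 1.5, 0.5

def alpha(c):
    # Assumed exponential discount function (a reconstruction, not the paper's display).
    return abar * np.exp(-(c + rho) ** (-gamma))

def delta(c):
    # Analytic alpha'/alpha = gamma (c + rho)^(-gamma - 1).
    return gamma * (c + rho) ** (-gamma - 1)

def alpha_second(c):
    # alpha'' = alpha * gamma x^(-gamma-2) * [gamma x^(-gamma) - (1 + gamma)], with x = c + rho.
    x = c + rho
    return alpha(c) * gamma * x ** (-gamma - 2) * (gamma * x ** (-gamma) - (1 + gamma))

c_grid = np.linspace(0.0, 10.0, 201)

# Central finite differences confirm the analytic expression for delta.
h = 1e-6
fd_delta = (alpha(c_grid + h) - alpha(c_grid - h)) / (2 * h) / alpha(c_grid)
print(np.max(np.abs(fd_delta - delta(c_grid))))

# 0 < delta < gamma, since rho > 1 makes (c + rho)^(-gamma - 1) < 1.
print(np.all((delta(c_grid) > 0) & (delta(c_grid) < gamma)))

# The threshold (gamma/(1+gamma))^(1/gamma) is below 1 < rho, so alpha'' < 0 on the whole grid.
threshold = (gamma / (1 + gamma)) ** (1 / gamma)
print(threshold, np.all(alpha_second(c_grid) < 0))
```

Because \(\gamma /(1+\gamma )<1\), the threshold is always below 1, so under \(\rho >1\) the sign condition on \(\alpha ''\) holds for every \(c_t \ge 0\).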

In order to determine the conditions for strict diagonal dominance given in (68), we need the following

Finally, (69) holds provided that

is nonnegative as \(t \rightarrow \infty \).

1.2 Existence

Proof of Proposition 1

Existence follows from standard arguments. The remainder of the proof is divided into two steps.

Step 1. The following “auxiliary problem” is directly related to the definition of variational utility

The assumption that \(\mathbf {c}^i \in \ell _\infty \) is justified by the fact that \(c_t^i \in [0,\theta ^i f(k_m)]\), for all t, in the original saddle-point problem (\(\text {SVP}^i\)). The first inequality restriction above could also be stated as an equality restriction. However, the inequality formulation preserves a certain symmetry between the inf and the sup problems, and rules out an uninteresting solution with \(\mathbf {B}^i=(1,0,0,\ldots ,0,\ldots )\) for any \(\mathbf {c}^i \in \ell _\infty \), which could arise if the reverse inequality held.

Clearly, the problem (VP\(^i\)) fits into the framework of Dechert [21], who solves an optimization problem of the form \(\inf _{\mathbf {x} \in \ell _\infty }\ \left\{ F(\mathbf {x}):\varPhi (\mathbf {x}) \le 0\right\} \), where \(F:\ell _\infty \rightarrow {\mathbb {R}}\) and \(\varPhi :\ell _\infty \rightarrow \ell _\infty \). Simply set \(\mathbf {x}:=\mathbf {B}^i\), define \(F(\mathbf {x}):=\sum _{t=0}^\infty G_t(\mathbf {x})\), where \(G_t(\mathbf {x})=\beta _t^i u_i(c_t^i)\) for each t, and define \(\varPhi (\mathbf {x})\) componentwise by \(\varPhi _t(\mathbf {x}):=(\varPhi _t^1(\mathbf {x}),\varPhi _t^2(\mathbf {x}))\), for each t, where \(\varPhi _t^1(\mathbf {x}):=\alpha _i(c_t^i)\beta _t^i-\beta _{t+1}^i\) and \(\varPhi _t^2(\mathbf {x}):=-\beta _{t+1}^i\).

To characterize a solution, it suffices to show that (VP\(^i\)) satisfies the hypotheses of Theorems 2 and 3 in Dechert [21]. These are guaranteed by the following set of conditions:

- (A1) each \(G_t\), \(\varPhi _t^1\), and \(\varPhi _t^2\) is convex and continuous for all t;
- (A2) for all \(\mathbf {x} \in \ell _\infty \), \(\{G_t\} \in \ell _1\) and \(\{\varPhi _t\} \in \ell _\infty \);
- (A3) there exists \(\mathbf {x}^0 \in \ell _\infty \) such that \(\sup _t \varPhi _t(\mathbf {x}^0) < 0\) (Slater condition).

Conditions (A1) and (A2) are straightforward. To verify (A3), note that under assumptions (U1)–(U4) on \(\alpha _i\) and \(u_i\), for any \({\mathbf {c}}^i \in \ell _\infty \), it follows that \(\alpha _i(0) \le \alpha _i(c_t^i) \le {\overline{\alpha }}_i < 1\), for all t and for each \(i=1,2\). Hence the sequence \(\mathbf {x}^0 = (1,1,\ldots ,1,\ldots )\) yields \(\varPhi _t^1(\mathbf {x}^0) = -\left( 1-\alpha _i(c_t^i)\right) \le -(1-{\overline{\alpha }}_i) < 0\) and \(\varPhi _t^2(\mathbf {x}^0) = -1 < 0\), for all t, so \(\sup _t\varPhi _t(\mathbf {x}^0) < 0\) holds. Therefore, there exist \(\mathbf {B}^i \in \ell _\infty \) and sequences of Lagrange multipliers, \(\mathbf {m}^i:=\{\mu _{t+1}^i\}_{t=0}^\infty \) in \(\ell _1\) and \(\mathbf {n}^i :=\{\nu _{t+1}^i\}_{t=0}^\infty \) in \(\ell _1\), such that

and the complementary slackness conditions

are satisfied.
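The Slater argument and the decay of the discount factors can be illustrated numerically. The sketch below uses a hypothetical discount function `alpha` whose supremum is \({\overline{\alpha }}_i=0.9\) and an arbitrary bounded consumption path. It evaluates \(\varPhi _t^1\) and \(\varPhi _t^2\) at \(\mathbf {x}^0=(1,1,\ldots )\) and checks that \(\beta _t \le {\overline{\alpha }}_i^{\,t}\), which drives \(\beta _t \rightarrow 0\).

```python
import numpy as np

abar = 0.9

def alpha(c):
    # Hypothetical increasing discount function with supremum abar < 1.
    return abar - 0.3 * np.exp(-c)

rng = np.random.default_rng(0)
c = rng.uniform(0.0, 5.0, size=200)  # an arbitrary bounded consumption path

# At the Slater point x^0 = (1, 1, ...): Phi^1_t = alpha(c_t) - 1 and Phi^2_t = -1.
phi1 = alpha(c) - 1.0
phi2 = -np.ones_like(c)
print(phi1.max() <= -(1 - abar), phi2.max() < 0)

# beta_0 = 1 and beta_{t+1} = alpha(c_t) beta_t, so beta_t <= abar**t for every t.
beta = np.concatenate(([1.0], np.cumprod(alpha(c))))
print(np.all(beta <= abar ** np.arange(beta.size) + 1e-15))
```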

In Dechert’s formulation, transversality conditions are implicit in (70)–(71). However, the transversality condition \(\lim _{t \rightarrow \infty }\ \mu _{t+1}^i\beta _{t+1}^i = 0\) in (5) has a more meaningful economic interpretation and can be obtained in the usual way. For a finite horizon \(T \ge 1\), optimality implies that \(\mu _{T+1}^i=-\nu _{T+1}^i\). Since (71b) must also hold at infinity, taking \(T \rightarrow \infty \) yields the desired result. Note that \(\beta _{t+1}^i = 0\) can never be optimal for any finite \(t \ge 0\), so (71) implies \(\nu _{t+1}^i =0\) and \(\mu _{t+1}^i > 0\) along any optimal path. Since \(\beta _{t+1}^i = \alpha _i(c_t^i)\beta _t^i\) and \(\alpha _i(c_t^i) \le {\overline{\alpha }}_i < 1\) for all t, \(\beta _{t+1}^i \rightarrow 0\) as \(t \rightarrow \infty \), which implies that the transversality condition is satisfied. It also follows that \(\mathbf {m}^i \in (\ell _1)_+ \backslash \{0\}\).

Now, evaluate (70) at \(t=0\) and multiply by \(\beta _1^i\) on both sides of the equality to obtain

Continuing this iterative procedure, at the Tth step we have that

As \(T \rightarrow \infty \), the transversality condition \(\lim _{T \rightarrow \infty } \mu _{T+1}^i\beta _{T+1}^i = 0\) implies that

hence \(\mu _0^i\) is the optimal value of (\(\text {SVP}^i\)) for player i. In other words, the dual problem

yields the same value \(\mu _0^i\) as (VP\(^i\)), for any \(\mathbf {c}^i \in \ell _\infty \).
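The telescoping step can be mimicked numerically. This sketch assumes, for illustration only, that (70) has the form \(\mu _{t+1}^i=u_i(c_{t+1}^i)+\alpha _i(c_{t+1}^i)\mu _{t+2}^i\), i.e., the first-order condition with respect to \(\beta _{t+1}^i\) once \(\nu _{t+1}^i=0\); this is the form for which multiplying by \(\beta _{t+1}^i\) and iterating reproduces the argument above. The functions `alpha` and `u` and the path `c` are hypothetical. Solving the recursion backward from a truncation \(\mu _{T+1}=0\) gives exactly the \(\beta \)-weighted sum of utilities, and for large T the omitted term \(\beta _{T+1}\mu _{T+1}\) is negligible, which is the role played by the transversality condition.

```python
import numpy as np

def alpha(c):
    return 0.9 - 0.3 * np.exp(-c)   # hypothetical discount function

def u(c):
    return np.log(1.0 + c)          # hypothetical periodic utility

T = 400
c = 1.0 + 0.5 * np.cos(0.1 * np.arange(T + 1))  # bounded illustrative path c_0, ..., c_T

# Assumed recursion mu_t = u(c_t) + alpha(c_t) mu_{t+1}, solved backward from mu_{T+1} = 0.
mu = 0.0
for ct in c[::-1]:
    mu = u(ct) + alpha(ct) * mu
mu0 = mu

# Direct evaluation of sum_t beta_t u(c_t), with beta_0 = 1 and beta_{t+1} = alpha(c_t) beta_t.
beta = np.concatenate(([1.0], np.cumprod(alpha(c[:-1]))))
value = np.sum(beta * u(c))
print(mu0, value)  # the telescoped multiplier equals the discounted sum up to rounding
```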

Step 2. Next, using (dVP\(^i\)), the original problem (\(\text {SVP}^i\)) can be reformulated in terms of \((\mathbf {c}^i,\mathbf {k}^i)\) and the dual variable \(\mathbf {m}^i\) as follows

The analysis is similar to Step 1, applying additional results from Le Van and Saglam [34], since the objective and the restrictions now satisfy Inada conditions and can take values in the extended real line. The main difference is that the solution must be interior, which explains the fact that each decision variable is restricted to \((\ell _\infty )_+\) and the multipliers \(\lambda _t^i\) and \(\mu _{t+1}^i\) are strictly positive. Obviously, conditions (A1)–(A3) need to be appropriately reformulated. To verify these conditions, the arguments of Examples 1 and 2 in Le Van and Saglam [34] (pages 399–407) can be easily adapted to the current setup, so the details are left to the reader.

As the nonnegativity constraints can be safely ignored, the Lagrangian associated to (dSVP\(^i\)) is

Then, from Theorem 2 in Le Van and Saglam [34], there exists an optimal solution \((\mathbf {c}^i,\mathbf {k}^i,\mathbf {m}^i)\) in the space \((\ell _\infty )_+ \times (\ell _\infty )_+ \times (\ell _\infty )_+\) and sequences of Lagrange multipliers \(\mathbf {l}^i:=\{\lambda _t^i\}_{t=0}^\infty \) and \(\mathbf {e}^i:=\{\varepsilon _{t+1}^i\}_{t=0}^\infty \) in \((\ell _1)_+ \times (\ell _1)_+\) satisfying

and the complementary slackness conditions

Given that the solution is interior, (72a)–(72c) imply that \(\lambda _t^i >0\), for all t. Hence \(\mathbf {l}^i \in (\ell _1)_+ \backslash \{0\}\). It is also clear from (72d) and (72e) that \(\varepsilon _{t+1}^i > 0\), for all t. Thus the first two inequality restrictions in (dSVP\(^i\)) must hold with equality. By duality, \(\varepsilon _{t+1}^i\) can be replaced with \(\beta _{t+1}^i\) in the above conditions. As shown before, a combination of (72) and (73) yields the transversality condition \(\lim _{t \rightarrow \infty } \lambda _t^i k_{t+1}^i =0\) in (5). Finally, conditions (3) and (4) in the statement of the proposition can be obtained by making the appropriate substitutions. This completes the proof. \(\square \)

1.2.1 Linearized Dynamical System

The optimality conditions for agent i form a discrete dynamical system in the variables \((\beta _t^i,c_t^i,k_t^i,\mu _t^i)\), taking the path of \(k_t^j\) as given,

A stationary point \((c^i,c^j,\beta ^i,\beta ^j,k^i,k^j,\mu ^i,\mu ^j)\) with \(c^i,c^j,k^i,k^j > 0\) for the dynamical system formed by (3a)–(3c) and (4a)–(4b) can be characterized as follows

In fact, the system can be reduced by one variable, since the dynamics of \(\beta _t^i\) depend entirely on \(c_t^i\). Taking a first-order approximation in a neighborhood of a stationary point and defining the deviation of any variable from its stationary value as \({\hat{x}}_t^i:=x_t^i-x^i\), we have that

for \(j \ne i=1,2\), and for all t. Thus, the coefficient matrices in (9) are given by

where all functions are evaluated at the stationary point and

This notation is consistent with the rest of the paper, so these results are directly comparable with those developed in Sects. 4 and 5.
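When the coefficient matrices are hard to derive by hand, the first-order approximation can be cross-checked numerically. The sketch below computes a central finite-difference Jacobian at a stationary point. The map `phi` is a hypothetical two-variable example with a fixed point at \((1,1)\), not the model's system (3a)–(4b); for it, the analytic Jacobian at the fixed point is \(\left[ \begin{smallmatrix} 0.5 & 0.5\\ 2 & -1\end{smallmatrix}\right] \).

```python
import numpy as np

def jacobian_fd(phi, x_bar, h=1e-6):
    """Central finite-difference Jacobian of phi: R^n -> R^n at x_bar."""
    x_bar = np.asarray(x_bar, dtype=float)
    n = x_bar.size
    J = np.empty((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = h
        J[:, j] = (phi(x_bar + e) - phi(x_bar - e)) / (2 * h)
    return J

def phi(x):
    # Hypothetical map with a stationary point at (1, 1).
    return np.array([np.sqrt(x[0] * x[1]), x[0] ** 2 / x[1]])

x_bar = np.array([1.0, 1.0])
A = jacobian_fd(phi, x_bar)
print(np.allclose(phi(x_bar), x_bar), A)  # A is approximately [[0.5, 0.5], [2.0, -1.0]]

# Linearized dynamics for deviations: x_hat_{t+1} = A x_hat_t.
x_hat = np.array([0.01, -0.02])
print(A @ x_hat)
```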

1.3 The Strategy Space

Proof of Lemma 1

For simplicity, assume that \(\alpha _i(c) \ge \alpha _j(c)\) holds for all \(c \in [0,k_m]\), since the argument does not hinge on this particular assumption. By (T2), we have that \(\alpha _i(0)f'(0^+) > 1\) (it could be infinity). From (T1)–(T2), the maximum sustainable level satisfies \(f'(k_m) < 1\). This, together with (U1) and the fact that \(f(k_m)-k_m > 0\), implies \(\alpha _i(k_m)f'(k_m) < 1\). Hence the existence of \(k_a^i \in (0,k_m)\) follows from the continuity of \(\alpha _i\) and \(f'\). The proof for \(k_a^j \in (0,k_m)\) is analogous. \(\square \)

It follows from the condition for a stationary equilibrium (12) that

where

Assume that \(k_a^i < k_a^j\). Given that \(f'(k_a^i)> f'(k_a^j) >1\), the above condition implies \(\alpha _i(c_a^i)<\alpha _j(c_a^j)\). For this inequality to hold, \(c_a^i\) must be sufficiently lower than \(c_a^j\). In particular, this implies that

or, equivalently,

By the mean value theorem, there is an x with \(k_a^i< x < k_a^j\) such that \(f'(x) < 1\). But the concavity of f implies \(f'(x) \ge f'(k_a^j) > 1\), a contradiction. Hence, \(k_a^j \le k_a^i\).

Precommitment Equilibria: Details and Proofs

Proof of Proposition 2

Since (23a) and (23b) hold for any interior stationary point, we have

hence \(\alpha _i(\overline{c}^i) < 1\) and \(\alpha _j(\overline{c}^j) < 1\) by (U1). This in turn implies \(f'(\overline{k}) > 1\), \(f'(k_a^i) > 1\), and \(f'(k_a^j) > 1\). Next, divide (74a) by (74b) to obtain

Given that \(\alpha _i(\cdot ) \ge \alpha _j(\cdot )\), for the first equality to hold it must be the case that \(\overline{c}^i \le \overline{c}^j\), which proves (i).

For part (ii), note that from (74a)–(74b) and the resource constraint, it follows that

From the second equality above, the nonnegativity condition \(\overline{c}^i \ge 0\) is equivalent to

Hence, \(\overline{k} \le k_a^j\). The remaining inequality \(\overline{k} \le k_a^i\) follows from Lemma 1.

To show part (iii), rearrange (74a) and apply the result from part (ii) of this proposition to obtain

hence the monotonicity of the discount factor implies \(\overline{c}^i \le c_a^i\). The remaining inequality can be obtained applying a similar argument to (74b). This completes the proof. \(\square \)

Proof of Proposition 3

Given that the stationary equilibrium satisfies (P1), it follows that

on \(I^i \times I^j \times I^k\). Multiplying the first inequality above by \(\alpha _i'/\alpha _i\) and the second inequality by \(\alpha _j'/\alpha _j\), and adding up the result, we have

Given that \(\alpha _i'/\alpha _i,\,\alpha _j'/\alpha _j \ge 0\), Young’s inequality implies that

Hence, it follows that

which is (31), the desired result.Footnote 18\(\square \)

Proof of Theorem 1

Note that \(\det (A) \ne 0\), so the coefficient matrix A is invertible. Since all elements of \(D\varPhi (x)\) exist and are continuous in some neighborhood \({\mathscr {U}}\) of \(\overline{x}\), the inverse function theorem implies that \(\varPhi \) is a local diffeomorphism around \(\overline{x}\).

It remains to prove that the eigenvalues of A satisfy (33). Note that its trace and determinant are

The coefficients of the characteristic polynomial can be expressed in terms of the traces of powers of A (Newton's identities), the constant term being, up to sign, the determinant of A; by the Cayley–Hamilton theorem, A satisfies this polynomial.Footnote 19 In particular, for \(n=3\), the characteristic polynomial is given by

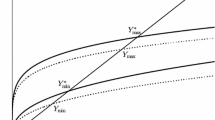

and has the eigenvalues of A as roots. A graphical approach is useful for characterizing \(p(\lambda )\): the graph of a third-degree polynomial is characterized by its roots, its intersection with the y-axis, its local maximum and minimum, and its point of inflection.

The remainder of the proof is carried out in several steps, which we formulate as separate lemmas.

Lemma 2

\(p(-1)< p(0)< 0 < p(1)\).

Proof

Reordering terms in (75) yields

By (P1) and the fact that \(\omega _i-\delta _i >0\) and \(\omega _j-\delta _j > 0\), we have \({{\mathrm{tr}}}(A) > 0\). It is clear from (76) that \(\det (A) > 0\). The linear coefficient of p is given by

which is positive when \(\eta _i,\eta _j < 0\). These values completely determine the coefficients of the polynomial p. We now evaluate \(p(\cdot )\) at certain reference points, which helps to locate the stable and unstable local manifolds. Choosing 0, 1, and \(-1\), we obtain

Since \(p(-1)-p(0)\) equals \(-1-{{\mathrm{tr}}}(A)\) minus the linear coefficient of p, and both \({{\mathrm{tr}}}(A)\) and that coefficient are positive, we have \(p(-1)<p(0)\). It immediately follows that \(p(-1)< p(0)< 0 < p(1)\) holds and the proof is complete. \(\square \)
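The trace formula for the cubic and the sign pattern of Lemma 2 can be checked on an example. The sketch below builds a hypothetical matrix A with eigenvalues 0.5, 2, and 3 (one stable and two unstable roots; this is not the model's Jacobian), compares the trace-based coefficients with NumPy's `np.poly`, verifies the Cayley–Hamilton identity \(p(A)=0\), and evaluates \(p(-1)\), \(p(0)\), and \(p(1)\).

```python
import numpy as np

def char_poly_from_traces(A):
    """Coefficients of p(lambda) = det(lambda I - A) for a 3x3 matrix:
    lambda^3 - tr(A) lambda^2 + (tr(A)^2 - tr(A^2))/2 lambda - det(A)."""
    A = np.asarray(A, dtype=float)
    t1 = np.trace(A)
    t2 = np.trace(A @ A)
    return np.array([1.0, -t1, 0.5 * (t1 ** 2 - t2), -np.linalg.det(A)])

# Hypothetical A with eigenvalues 0.5, 2, 3, written in a non-diagonal basis.
P = np.array([[1.0, 1.0, 0.0], [0.0, 1.0, 1.0], [1.0, 0.0, 1.0]])  # invertible (det = 2)
A = P @ np.diag([0.5, 2.0, 3.0]) @ np.linalg.inv(P)

coeffs = char_poly_from_traces(A)
print(np.allclose(coeffs, np.poly(A)))  # agrees with NumPy's characteristic polynomial

# Cayley-Hamilton: A^3 - tr(A) A^2 + c1 A - det(A) I = 0.
pA = A @ A @ A + coeffs[1] * (A @ A) + coeffs[2] * A + coeffs[3] * np.eye(3)
print(np.allclose(pA, 0.0))

p = np.poly1d(coeffs)
print(p(-1) < p(0) < 0 < p(1))  # the ordering of Lemma 2 holds in this example
```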

The next result is useful to characterize the critical points of p.

Lemma 3

If \(\eta _i < 0\) and \(\eta _j < 0\), then \(\frac{1}{3}{{\mathrm{tr}}}(A) > 1\).

Proof

We have already established that \(f'({\bar{k}}) > 1\) for any given stationary point. Since the remaining entries of the main diagonal of A are positive, it suffices to show that their sum is greater than two, or equivalently, that \(F_1^i + F_1^j -2 > 0\). From (26), we have that

and the desired result follows. \(\square \)

Lemma 4

Suppose that \(\eta _i < 0\), \(\eta _j < 0\) and \({{\mathrm{tr}}}(A^2)-\tfrac{1}{3}{{\mathrm{tr}}}^2(A)>0\). Then, \(p(\lambda )\) has a local maximum at \(r_1 > 0\), a local minimum at \(r_2\), and a point of inflection at \(r_3\) with \(r_1< r_3 < r_2\) and \(r_3 > 1\).

Proof

Differentiating p, we find two critical points, which are the real zeros of \(p'(\lambda )\), i.e., the solutions of the quadratic equation

Since \({{\mathrm{tr}}}(A^2)-\tfrac{1}{3}{{\mathrm{tr}}}^2(A)>0\), the critical points \(r_1\) and \(r_2\), given by

are well defined.Footnote 20 In particular, taking into account the result of Lemma 3, it is easy to verify that \(0< r_1 < r_2\). Differentiating \(p'\) once more, we have that

thus \(p''\) vanishes at \(r_3 = \frac{1}{3}{{\mathrm{tr}}}(A)\) and \(p''(\lambda )< (>)\ 0 \iff \lambda < (>)\ r_3\), respectively, so \(r_3\) is a point of inflection. By simple inspection of (77), it is clear that \(r_1< r_3 < r_2\), which immediately implies that \(r_1\) is a local maximum and \(r_2\) a local minimum of p in \({\mathbb {R}}\). Finally, applying Lemma 3 again, it follows that \(r_3 > 1\). This completes the proof. \(\square \)
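The location of the critical points and of the inflection point can be computed directly. Assuming \(p(\lambda )=\det (\lambda I-A)\) for a \(3 \times 3\) matrix, the roots of \(p'\) are \(r_{1,2}=\tfrac{1}{3}{{\mathrm{tr}}}(A) \mp \sqrt{({{\mathrm{tr}}}(A^2)-\tfrac{1}{3}{{\mathrm{tr}}}^2(A))/6}\), and \(r_3=\tfrac{1}{3}{{\mathrm{tr}}}(A)\) lies halfway between them. The sketch evaluates these formulas for the hypothetical matrix \(\mathrm {diag}(0.5,2,3)\) and cross-checks them against the roots of \(p'\) computed by NumPy.

```python
import numpy as np

def critical_points(A):
    """Critical points r1 < r2 and inflection point r3 of p(lambda) = det(lambda I - A), 3x3 case.
    Real critical points exist iff tr(A^2) - tr(A)^2 / 3 > 0."""
    A = np.asarray(A, dtype=float)
    t1 = np.trace(A)
    t2 = np.trace(A @ A)
    disc = t2 - t1 ** 2 / 3.0
    if disc <= 0:
        raise ValueError("p has no distinct real critical points")
    r3 = t1 / 3.0
    half_width = np.sqrt(disc / 6.0)
    return r3 - half_width, r3 + half_width, r3

A = np.diag([0.5, 2.0, 3.0])  # hypothetical example: one stable and two unstable roots
r1, r2, r3 = critical_points(A)
print(r1, r3, r2)  # roughly 1.107, 1.833, 2.560

# Cross-check against the roots of p' computed by NumPy.
dp = np.polyder(np.poly(A))
print(np.allclose(sorted(np.roots(dp)), [r1, r2]))
```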

1.1 Analysis of the Stable Manifold

Let \(\varPhi :X \rightarrow X\) be a map describing the nonlinear discrete dynamical system

and let \(\overline{x} \in X\) be a point such that \(\overline{x} = \varPhi (\overline{x})\), i.e., a stationary point. By the stable manifold theorem, if \(\varPhi \) is continuously differentiable in a neighborhood \({\mathscr {N}}\) of \(\overline{x}\), \(A=D\varPhi (\overline{x})\) is the Jacobian matrix of \(\varPhi \) at \(\overline{x}\), and A has one eigenvalue inside the unit circle and two outside it, then there exist a neighborhood \({\mathscr {U}} \subset {\mathscr {N}}\) and a continuously differentiable function \(\phi : {\mathscr {U}} \rightarrow {\mathbb {R}}^2\) for which the matrix \(D\phi (\overline{x})\) has full rank. Moreover, if \(\{x_t\}\) is a solution to (78) with \(x_0 \in {\mathscr {U}}\) and \(\phi (x_0)=0\), then \(\lim _{t \rightarrow \infty } x_t=\overline{x}\). The set of x values satisfying \(\phi (x)=0\) is called the stable manifold of the nonlinear dynamical system.

Assuming that the eigenvalues of A are real and distinct, A is diagonalizable, and the Jacobian of (78) at \(\overline{x}\) can be written as \(A=B^{-1} \varLambda B\), where B is nonsingular and \(\varLambda \) is a diagonal matrix containing the eigenvalues of A. Hence, a solution of the linearized system can be expressed recursively in terms of these matrices

It is clear that \(x_t \rightarrow \overline{x}\) if and only if \(B(x_0-\overline{x})=w_0\) with \(w_0^i=w_0^j=0\), that is, if and only if the components of \(w_0\) associated with the two unstable eigenvalues vanish. Let \({\hat{x}}_t:=x_t-\overline{x}\) denote deviations from stationary values for all t. This condition is equivalent to

where \(b_{31}{\hat{c}}_0^i + b_{32}{\hat{c}}_0^j + b_{33}{\hat{k}}_0=w\) is a constant to be determined.

Then, (79) implies

where \(M_{ij}\) denotes the (i, j) cofactor of B, and \(\det (B) \ne 0\). Assuming that the stable root is \(\overline{\lambda }_3\), solving the system above yields

which represents a stable trajectory for all variables, since \(0< \overline{\lambda }_3 < 1\). Now, (80) can be expressed as

Moreover, assuming \(b_{11}b_{22}-b_{12}b_{21} \ne 0\), it follows that

which yields \({\hat{c}}_0^i =(M_{31}/M_{33}){\hat{k}}_0\) and \({\hat{c}}_0^j =(M_{32}/M_{33}){\hat{k}}_0\). Solving (80) again for w, it follows that

To characterize the stable manifold, we use the fact that \(BA=\varLambda B\), which gives

which can be solved for arbitrary nonzero values of \(b_{11}\), \(b_{22}\), and \(b_{33}\), since each row of B is a left eigenvector of A and is therefore determined only up to scale; therefore

The stable manifold is the set of values \((c^i,c^j,k)\in {\mathscr {U}}\) such that \(\phi (c^i,c^j,k)=0\) holds. By the implicit function theorem, there exist functions \(\pi _i\) and \(\pi _j\) such that

Differentiating around \(\overline{k}\), we obtain

where \(\overline{\phi }_l^i\), \(l=1,2,3\), denotes the partial derivative of \(\phi ^i\) with respect to the lth argument, evaluated at the stationary point, and similarly for \(\overline{\phi }_l^j\). In fact, the derivatives of the stable manifold at the stationary point are related to the coefficients of B as follows,

This allows us to solve for the derivatives of the policy functions from (81), yielding

Equivalently,

where

For all the previous expressions to be well defined, appropriate conditions must be imposed on the model's parameters; in particular, \(f' - \overline{\lambda }_1 \ne 0\), \(f' - \overline{\lambda }_2 \ne 0\), \(\overline{F}_1^j-\overline{F}_2^j-\overline{\lambda }_1 \ne 0\), \(\overline{F}_1^i-\overline{F}_2^i-\overline{\lambda }_2 \ne 0\), and \((\overline{F}_1^j-\overline{F}_2^j-\overline{\lambda }_1)(\overline{F}_1^i-\overline{F}_2^i-\overline{\lambda }_2)-(\overline{F}_1^i-\overline{F}_2^i-\overline{\lambda }_1)(\overline{F}_1^j-\overline{F}_2^j-\overline{\lambda }_2) \ne 0\).
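A minimal numerical sketch of the linear construction above, under stated assumptions: the eigenvalues and the matrix B below are hypothetical (not calibrated to the model), A is built as \(B^{-1}\varLambda B\), and \(M_{ij}\) is read as the signed (i, j) cofactor of B. Under that reading, the choice \({\hat{c}}_0^i =(M_{31}/M_{33}){\hat{k}}_0\), \({\hat{c}}_0^j =(M_{32}/M_{33}){\hat{k}}_0\) is Cramer's rule for setting the two unstable coordinates of \(B{\hat{x}}_0\) to zero, and it requires \(M_{33}=b_{11}b_{22}-b_{12}b_{21} \ne 0\). Exact rational arithmetic (Python's `fractions.Fraction`) is used because the unstable roots would otherwise amplify floating-point rounding along the iteration.

```python
from fractions import Fraction as F


def det2(a, b, c, d):
    """Determinant of the 2x2 matrix [[a, b], [c, d]]."""
    return a * d - b * c


def cofactor(B, i, j):
    """Signed (i, j) cofactor of a 3x3 matrix B (0-based indices)."""
    rows = [r for r in range(3) if r != i]
    cols = [c for c in range(3) if c != j]
    minor = det2(B[rows[0]][cols[0]], B[rows[0]][cols[1]],
                 B[rows[1]][cols[0]], B[rows[1]][cols[1]])
    return (-1) ** (i + j) * minor


def inverse3(B):
    """Inverse via the adjugate: (B^{-1})_{ij} = C_{ji} / det(B)."""
    d = sum(B[0][j] * cofactor(B, 0, j) for j in range(3))
    if d == 0:
        raise ValueError("B must be nonsingular")
    return [[cofactor(B, j, i) / d for j in range(3)] for i in range(3)]


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def matvec(X, v):
    return [sum(X[i][k] * v[k] for k in range(3)) for i in range(3)]


def stable_initial_condition(B, k_dev):
    """Deviations (c^i, c^j, k) at t = 0 whose two unstable B-coordinates vanish."""
    c33 = cofactor(B, 2, 2)  # equals b11*b22 - b12*b21
    if c33 == 0:
        raise ValueError("need b11*b22 - b12*b21 != 0")
    return [cofactor(B, 2, 0) / c33 * k_dev,   # (M31 / M33) * k_dev
            cofactor(B, 2, 1) / c33 * k_dev,   # (M32 / M33) * k_dev
            k_dev]


# Hypothetical data: two unstable roots and the stable root lambda_3 = 3/5.
lam = [F(3, 2), F(2), F(3, 5)]
B = [[F(1), F(2), F(0)],
     [F(0), F(1), F(1)],
     [F(1), F(0), F(1)]]
Lam = [[lam[i] if i == j else F(0) for j in range(3)] for i in range(3)]
A = matmul(inverse3(B), matmul(Lam, B))  # A = B^{-1} Lambda B

x = stable_initial_condition(B, F(1, 10))
print(matvec(B, x)[:2])  # the two unstable coordinates: both zero
for t in range(20):
    x_next = matvec(A, x)
    # On the stable manifold every step contracts by exactly lambda_3.
    assert x_next == [lam[2] * xi for xi in x]
    x = x_next
print([float(v) for v in x])

# A small perturbation of c^i leaves the manifold; the unstable roots take over.
y = stable_initial_condition(B, F(1, 10))
y[0] += F(1, 1000)
for t in range(30):
    y = matvec(A, y)
print(float(max(abs(v) for v in y)))
```

Starting on the stable manifold, each step multiplies the deviations by exactly \(\overline{\lambda }_3\); the perturbed path instead grows at the rate of the dominant unstable root it excites.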

Markov Equilibria: Details and Proofs

Proof of Proposition 4

Let g be a continuous function satisfying (44). If g(k) has a stationary point \(k^* > 0\), then

From (42) and (43), this implies \(k^*=g(k^*)=f(k^*)-G^i(k^*,k^*)-G^j(k^*,k^*)\). Given that the strategies \(G^i\), \(G^j\) solve the first-order conditions (39), and taking into account that \(U^i,U^j > 0\) for all \(k \in (0,k_m]\), it follows that

where \(f'(k^*)-G_1^i(k^*,k^*)>0\) and \(f'(k^*)-G_1^j(k^*,k^*)>0\) follow from the optimality conditions. \(\square \)

1.1 Analysis of the Stable Manifold

The stable manifold theorem implies the existence of a neighborhood \({\mathscr {N}}\) of \((k^*,k^*)\) and a continuously differentiable function \(\psi : {\mathscr {N}} \rightarrow {\mathbb {R}}\) such that for \(k_0\) sufficiently close to \(k^*\), there exists \(k_1\) with \((k_1,k_0) \in {\mathscr {N}}\) and \(\psi (k_1,k_0)=0\). This is true if the Jacobian matrix \([D\psi (k^*,k^*)]\) has full rank.

The linearized system \(\varPsi \) can be represented in terms of the coefficients of the characteristic polynomial \(P(\lambda )\) as \(\varPsi _1^*(k_t-k^*) + \varPsi _2^*(k_{t+1}-k^*) + \varPsi _3^*(k_{t+2}-k^*)=0\), so that the behavior of \(k_t\) near \(k^*\) can be characterized by a \(2 \times 2\) matrix

which is nonsingular. By Jordan decomposition, A can be written as \(A = B^{-1}\varLambda B\), where B is a nonsingular matrix and \(\varLambda \) is a diagonal matrix with the eigenvalues \(\lambda _1^*\) and \(\lambda _2^*\) on the main diagonal. Using the fact that \(BA=\varLambda B\), the stable manifold can be characterized from the following system

and for any nonzero values of \(b_{11}\) and \(b_{22}\),

Note that the saving function must satisfy \(\psi [g(k),k]=0\), hence its derivative is given by

The derivatives of the stable manifold at the stationary point are

which in turn implies

If the system is governed by \(\lambda _2^*\) instead, then simply replace \(\lambda _1^*\) with \(\lambda _2^*\) in all previous calculations.
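The scalar case can be illustrated in the same spirit. The sketch below uses hypothetical coefficients \(\varPsi _1^*=-1.5\), \(\varPsi _2^*=-2.5\), \(\varPsi _3^*=1\), for which the roots of \(\varPsi _1^* + \varPsi _2^*\lambda + \varPsi _3^*\lambda ^2=0\) (the characteristic equation of the second-order difference equation, in our own normalization) are \(-1/2\) and 3, and it assumes the state ordering \((k_{t+1}-k^*, k_t-k^*)\) for the companion matrix. On the linearized stable manifold, \(k_{t+1}-k^* = \lambda _s (k_t-k^*)\), where \(\lambda _s\) is the root inside the unit circle, so in this linear sketch the slope of the saving function at \(k^*\) equals \(\lambda _s\). Because \(\lambda _s<0\) here, the path converges to \(k^*\) while oscillating around it, a simple instance of a nonmonotone trajectory.

```python
import math


def characteristic_roots(psi1, psi2, psi3):
    """Real roots of psi3*l**2 + psi2*l + psi1 = 0, ordered by modulus."""
    disc = psi2 ** 2 - 4.0 * psi3 * psi1
    if disc < 0:
        raise ValueError("complex roots: not handled in this sketch")
    s = math.sqrt(disc)
    roots = [(-psi2 - s) / (2.0 * psi3), (-psi2 + s) / (2.0 * psi3)]
    return sorted(roots, key=abs)


def saddle_path(psi1, psi2, psi3, k_dev0, periods):
    """Deviations k_t - k* along the linear stable manifold of
    psi1*(k_t - k*) + psi2*(k_{t+1} - k*) + psi3*(k_{t+2} - k*) = 0."""
    lam_s, lam_u = characteristic_roots(psi1, psi2, psi3)
    if not abs(lam_s) < 1.0 < abs(lam_u):
        raise ValueError("not a saddle: need exactly one root inside the unit circle")
    # Companion matrix acting on the state (k_{t+1} - k*, k_t - k*).
    A = [[-psi2 / psi3, -psi1 / psi3],
         [1.0, 0.0]]
    # Start on the stable eigenline: k_1 - k* = lam_s * (k_0 - k*).
    state = [lam_s * k_dev0, k_dev0]
    path = [k_dev0]
    for _ in range(periods):
        path.append(state[0])
        state = [A[0][0] * state[0] + A[0][1] * state[1],
                 A[1][0] * state[0] + A[1][1] * state[1]]
    return lam_s, path


# Hypothetical coefficients: l**2 - 2.5*l - 1.5 = (l + 0.5)(l - 3).
lam_s, path = saddle_path(-1.5, -2.5, 1.0, 1.0, 6)
print(lam_s)  # -0.5
print(path)   # [1.0, -0.5, 0.25, -0.125, 0.0625, -0.03125, 0.015625]
```

Starting anywhere else on the line \(k_1 - k^* \ne \lambda _s (k_0-k^*)\) would load the unstable root and the iteration would diverge, which is why the initial condition is pinned to the stable eigenline.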

Proof of Theorem 2

Two technical lemmas complete the proof. In both cases, it is assumed that \(\lambda \) and \(I_\varepsilon \) are defined as in Sect. 5.3. \(\square \)

Lemma 5

Let \(\{h_n\}_{n \in {\mathbb {N}}}\) be a sequence of functions in \(D_{|\lambda |}(I_\varepsilon )\) which converges to h. Then, the sequence \(h^2_n(k):=h_n(h_n(k))\) converges uniformly to \(h^2(k):=h(h(k))\) for all \(k \in I_\varepsilon \).

Proof

Since the family of functions in \(D_{|\lambda |}(I_\varepsilon )\) is uniformly bounded and equicontinuous, by the Arzelà–Ascoli theorem \(h_n\) converges to h uniformly on \(I_\varepsilon \). Given that h is continuous, hence uniformly continuous, for every \(\upsilon > 0\) there is a \(\delta > 0\) such that \(k,k' \in I_\varepsilon \) with \(|k-k'|< \delta \) implies \(|h(k)-h(k')|< \upsilon /2\). On the other hand, there is a positive integer N such that \(|h_n(k) - h(k)|<\min \{\delta ,\upsilon /2\}\) for all \(n>N\) and all \(k \in I_\varepsilon \). Since \(h_n(k), h(k) \in I_\varepsilon \) (as required for the compositions to be defined), the triangle inequality gives, for all \(n > N\) and all \(k \in I_\varepsilon \),

\[|h_n(h_n(k))-h(h(k))| \le |h_n(h_n(k))-h(h_n(k))| + |h(h_n(k))-h(h(k))| < \upsilon /2 + \upsilon /2 = \upsilon .\]

Hence \(h^2_n\) converges uniformly on \(I_\varepsilon \) to \(h^2\). \(\square \)
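A small numerical illustration of Lemma 5, using hypothetical affine maps that are not taken from the model: \(h(k)=0.5+0.4(k-0.5)\) on the stand-in interval \([0,1]\) and \(h_n = h + 0.1/n\), all of which map \([0,1]\) into itself. For an L-Lipschitz limit h, \(\Vert h_n\circ h_n - h\circ h\Vert \le \Vert h_n - h\Vert + L\Vert h_n - h\Vert \), so the compositions converge at the same rate as \(h_n\); for these affine maps the bound holds with equality. The sup norm is approximated on a grid.

```python
def h(k):
    """Hypothetical limit map on [0, 1] (slope 0.4, so L = 0.4)."""
    return 0.5 + 0.4 * (k - 0.5)


def h_n(k, n):
    """Hypothetical sequence h_n = h + 0.1 / n, converging uniformly to h."""
    return h(k) + 0.1 / n


def sup_distance(f, g, grid):
    """Grid approximation of the sup norm of f - g."""
    return max(abs(f(k) - g(k)) for k in grid)


grid = [i / 1000 for i in range(1001)]  # discretization of [0, 1]
for n in (1, 10, 100, 1000):
    d1 = sup_distance(lambda k: h_n(k, n), h, grid)
    d2 = sup_distance(lambda k: h_n(h_n(k, n), n), lambda k: h(h(k)), grid)
    # Both distances shrink like 1/n; here d2 = (1 + 0.4) * d1 up to rounding.
    print(n, d1, d2)
```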

Lemma 6

The operator \(T:D_{|\lambda |}(I_\varepsilon ) \rightarrow D_{|\lambda |}(I_\varepsilon )\) is continuous in the sup norm.

Proof

Let \(h_n\) be a sequence in \(D_{|\lambda |}(I_\varepsilon )\) that converges to h and fix \(\upsilon >0\). By an argument similar to the one given in the main body of the proof, there exists a real number \(m_2 \ne 0\) such that

for all n and for all \(k \in I_\varepsilon \). Then, for some \(0< \delta < \upsilon /(|m_2\lambda |)\), if \(\Vert h_n-h\Vert < \delta \), we have

which proves that T is continuous. \(\square \)

Cite this article

Alcalá, L., Tohmé, F. & Dabús, C. Strategic Growth with Recursive Preferences: Decreasing Marginal Impatience. Dyn Games Appl 9, 314–365 (2019). https://doi.org/10.1007/s13235-018-0269-3
