Abstract

The current work builds on research demonstrating the effectiveness of Productive Failure (PF) for learning. While the effectiveness of PF has been demonstrated for STEM learning, it has not yet been investigated whether PF is also beneficial for learning in non-STEM domains. Given this need to test PF in domains other than mathematics or science, and the assumption that the features of a PF design are domain-independent, we investigated the effect of PF on learning social science research methods. We conducted two quasi-experimental studies with 212 and 152 10th graders, respectively. Following the paradigm of typical PF studies, we implemented two conditions: PF, in which students try to solve a complex problem prior to instruction, and Direct Instruction (DI), in which students first receive instruction and then solve problems. In PF, students are expected to learn from their failure: failing to solve a complex problem is assumed to prepare them for deeper learning from subsequent instruction. In DI, students are expected to learn through practice: practicing and applying a given problem-solving procedure is assumed to help them learn from the preceding instruction. In contrast to several studies demonstrating beneficial effects of PF on learning mathematics and science, PF students in the present two studies did not outperform DI students in learning social science research methods. Thus, the PF effect was not replicated for learning in a non-STEM domain. The results are discussed in light of the mechanisms assumed to underlie the benefits of PF.


Introduction

In the last decade there has been increased interest in investigating the effectiveness of approaches with problem solving prior to instruction, such as Productive Failure (PF), for students’ knowledge acquisition. Several recent reviews of studies testing for the PF effect (cf., Kapur 2015; Loibl et al. 2017; Darabi et al. 2018) have demonstrated beneficial effects of PF (or problem-solving prior to instruction approaches more generally) on students’ acquisition of conceptual knowledge. This increased interest in the effectiveness of PF might be partly due to the expectation that PF holds the potential to resolve the assistance dilemma (Kapur and Rummel 2009). The assistance dilemma (Koedinger and Aleven 2007) describes a critical and hitherto unanswered question in instructional science: How can the giving and withholding of instructional support be balanced in order to promote students’ learning most effectively?

The PF approach suggests temporarily withholding instructional support in order to foster students’ development of a deep conceptual understanding. Specifically, PF combines two successive learning phases: an initial problem-solving phase and a subsequent instruction phase. During the former, students collaboratively generate solution ideas for a complex and novel problem without instructional support (Kapur and Bielaczyc 2012). During the latter, the instructor builds upon erroneous student solutions and compares and contrasts their features with the components of the canonical solution (Kapur and Bielaczyc 2012). Most students fail to solve the complex problem canonically during the initial problem-solving phase; that is, they generate incomplete or erroneous solution ideas because they lack the specific knowledge. However, this initial failure is assumed to productively prepare students for learning during subsequent instruction (e.g. Kapur 2016). When students fail to solve the problem (typically evident in erroneous solution ideas), or at the latest when they are confronted with their erroneous solution ideas during instruction, they should experience the limitations of their existing understanding (e.g. Loibl and Rummel 2014a). These experiences of failure are assumed to challenge students’ current understanding and thus prepare them to revise it (cf., Tawfik et al. 2015).

The effectiveness of PF for students’ acquisition of conceptual knowledge has predominantly been examined and replicated for learning in STEM domains, especially mathematics. Thus, there is a lack of empirical evidence regarding whether the PF effect depends on the structuredness of the domain or whether it also occurs for learning in less structured domains (Kapur 2015; Loibl et al. 2017). Accordingly, Kapur (2015) and Loibl et al. (2017) called for research investigating the effectiveness of PF for student learning in domains other than mathematics or science. To address this research gap, the present work attempts to probe the effectiveness of PF for learning beyond STEM domains. Specifically, we examine the effectiveness of PF for students’ acquisition of knowledge about social science research methods in two quasi-experimental studies.

In the following sections, we first describe the features of the PF design and the reasons why these features are hypothesized to be important for the effectiveness of PF. Next, we discuss potential particularities of STEM versus non-STEM domains and how these may be linked to the effectiveness of PF. Finally, we give an overview of previous research on PF, focusing on the design features implemented in these studies and the domains that they investigated. The description of the PF design features, the discussion of potential requirements that should be met by the domain in a PF setting, and the literature overview lead to several criteria that guided the design of our two studies.

Theoretical background

PF stresses that failure can benefit students’ learning when it is caused by temporarily withholding certain support structures (i.e., the structure of a problem-solving task and instructional support from a teacher) during a problem-solving activity (Kapur 2008). The idea of PF builds strongly on VanLehn’s (1988) theory of impasse-driven learning, which postulates that learning occurs only when learners’ knowledge is incomplete, such that they reach an impasse during problem solving. According to research on PF, whether these impasses or failures are effective for learning is determined by particular features of the instructional design and specific learning-related mechanisms, which are described in the following section.

Productive Failure: design features and learning mechanisms

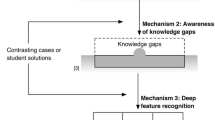

Kapur (2015) claims that the PF effect depends on particular features of the instructional design. The review by Loibl et al. (2017) also suggests that the effectiveness of PF (or problem solving prior to instruction more generally) relates to specific features of the instructional design. These features are expected to trigger the following three learning-related mechanisms which are hypothesized to underlie the effectiveness of PF (Loibl et al. 2017): (1) activation of prior knowledge, (2) awareness of knowledge limitations, and (3) recognition of the deep features of the targeted learning concepts. The instructional design as well as the instructor have to promote these processes in particular ways (for an overview, see Table 1). Specifically, asking students to collaboratively invent solution ideas to a complex and novel problem prior to instruction is expected to trigger mechanisms (1) and (2). For this purpose, the selected problem has to meet a certain level of complexity. Comparing and contrasting features of erroneous solution ideas with components of the canonical solution during subsequent instruction is assumed to promote mechanisms (2) and (3). For this purpose, the instruction has to use students’ erroneous solution ideas.

These design features and underlying mechanisms of PF demonstrate that PF is both a learning activity that triggers certain learning mechanisms in learners and an instructional approach, as the instructor and the instructional design must foster these mechanisms in particular ways. In the following paragraphs, we describe the three mechanisms in more detail.

Activation of prior knowledge

The first mechanism (i.e., activation of prior knowledge) is expected to emerge during the initial problem-solving phase of PF. When students generate intuitive solution ideas to a complex and novel problem prior to instruction, PF is hypothesized to help them activate relevant prior knowledge (Kapur 2016; Loibl et al. 2017; Newman and DeCaro 2018). In turn, this should prepare students for “future learning” (Schwartz and Martin 2004), that is, for learning the targeted concepts during subsequent instruction. According to schema theory, the activation of preexisting schemas (i.e., prior knowledge) is a crucial requirement for the integration of new knowledge and thus for the adaptation of one’s preexisting schemas (Sweller 1988; Sweller et al. 1998).

In a PF setting, the process of prior knowledge activation is expected to depend on the design of the problem that students are asked to solve. According to Kapur and Bielaczyc (2012), the problem should address students’ prior knowledge by meeting a sweet spot of complexity or, as Jacobson et al. (2015) conjecture, by meeting a zone of proximal failure. That is, students should not be able to develop the canonical solution during the initial problem-solving phase. At the same time, they should be able to invent intuitive ideas without becoming frustrated with the difficulty. Previous PF studies have used students’ invented solution ideas as a measure for PF students’ activation of prior knowledge (e.g. Kapur and Bielaczyc 2012; Loibl and Rummel 2014b). As PF students usually generate their intuitive solution ideas in small groups and without any instructional support during the initial problem-solving phase, they can build only on their prior knowledge in order to invent solution ideas (cf., Kapur 2016). These invented solution ideas therefore reflect PF students’ prior knowledge (Kapur 2012; Loibl and Rummel 2014b).

Awareness of knowledge limitations

The second mechanism (i.e., awareness of knowledge limitations) is expected to emerge during the delayed instruction phase. By comparing and discussing erroneous student solutions during the delayed instruction phase, PF is hypothesized to help students become aware of the limitations and gaps in their prior knowledge (Loibl et al. 2017). The process of helping learners to become aware of their knowledge limitations or failures is assumed to be a crucial process in failure-based learning approaches (for a review, see Tawfik et al. 2015). The experience of failures is expected to challenge learners’ existing mental models, thus preparing them to reflect on and resolve their failures (Tawfik et al. 2015). Hence, it is likely that an awareness of knowledge limitations prepares students for the construction of new knowledge during instruction, as it may help learners to pay attention to the concepts that they need in order to adapt their existing incomplete or erroneous understandings.

Students in a PF setting may already notice their knowledge limitations during the initial problem-solving phase when they struggle or even fail to solve a complex and novel problem canonically (Loibl and Rummel 2014a; Kapur 2016; Newman and DeCaro 2018). The initial problem-solving phase may foster a rather global awareness or feeling of failure in students. However, as PF problems are usually complex and ill-structured, they do not explicitly offer students the opportunity to evaluate their solutions with respect to specific features that are met or not met and to recognize the concrete reasons why their solutions do not work (Loibl et al. 2017). Thus, the initial problem-solving phase does not usually foster students’ awareness of their specific knowledge gaps. To achieve this, the delayed instruction phase should build on erroneous student solutions (Loibl and Rummel 2014a; Loibl et al. 2017).

Previous PF studies assessed students’ awareness of knowledge limitations by using different self-report measures, such as students’ perceived competence (Loibl and Rummel 2015) or their perceived knowledge gaps (e.g. Newman and DeCaro 2018).

Recognition of deep features

The third mechanism (i.e., recognition of deep features) is also expected to emerge during the delayed instruction phase in a PF setting. By contrasting the features of typical erroneous student solutions with each component of the canonical solution during instruction, PF is hypothesized to enable learners to attend to the critical conceptual features of the targeted learning concepts (Loibl et al. 2017; Kapur and Bielaczyc 2012). Moreover, the instructor-led comparing and contrasting activity helps students recognize how these critical features relate to one another and to the canonical solution (Kapur and Bielaczyc 2012). In this way, the third PF mechanism probably fosters students’ deep understanding of the targeted learning concept.

In previous PF studies, evidence for students’ recognition of deep features was derived from PF students’ performance on posttest items assessing conceptual knowledge. Conceptual knowledge is defined as understanding of the deep features of a domain and of the interrelations between these features and principles in a domain (Rittle-Johnson and Alibali 1999).

In summary, according to the PF literature, for PF to be effective it appears important that students work on a complex problem before receiving instruction, and that the instruction uses erroneous student solutions and compares and contrasts their features with the components of the canonical solution. This fosters students’ activation of prior knowledge, awareness of knowledge limitations, and recognition of deep features (see Table 1). While these three PF mechanisms were derived from research focusing on STEM domains (see the review by Loibl et al. 2017), the PF design features expected to foster them are considered to be domain-independent (Kapur 2015). Presumably, therefore, the PF effect and its potential underlying mechanisms should also occur for learning in an appropriately designed non-STEM setting. Nevertheless, given the features of the PF design and the mechanisms hypothesized to underlie the PF effect, one could also argue that not every domain is equally appropriate for learning in a PF setting: If the instruction in a PF setting has to compare and contrast the components of the canonical solution with features of erroneous student solutions (Loibl and Rummel 2014a; Loibl et al. 2017; Kapur 2016), then the targeted learning concept needs to have canonical solutions with clearly definable components that can be compared and contrasted with features of erroneous solution ideas. This does not imply that the PF problem allows for only one correct solution. On the contrary, as mentioned above, the PF problem should be complex and allow students to generate different solution ideas. There should, however, be a canonical or established solution to the problem whose crucial components can be presented and explained to students during instruction. Otherwise, PF students would probably have difficulty detecting their specific knowledge gaps and recognizing the deep features of the targeted learning concept during instruction. Therefore, Loibl et al. (2017) hypothesize that the effectiveness of PF may only emerge for learning in structured domains, which “allow for clear identification of deep features and evaluation of solution attempts” (p. 712). The following section discusses these domain features in more detail.

Productive failure: domain features

Kapur (2015) and Loibl et al. (2017) emphasize the lack of evidence on whether PF is also effective for learning in domains that are less structured than mathematics and science. The characteristics of such structured domains can be illustrated through a mathematics topic that has been investigated in several previous PF studies, namely standard deviation. In these studies (e.g. Kapur 2014; Loibl and Rummel 2014a), secondary school students received data sets of fictitious athletes and their scores per year. Students were asked to determine which athlete had performed most consistently across the years. During the subsequent instruction, the standard deviation formula was presented as the canonical solution to the preceding problem-solving task. The standard deviation formula includes crucial components that are clearly definable and evaluable, such as squaring the distance from each data point to the mean so that positive and negative deviations do not cancel each other out.
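Why squaring is a clearly evaluable component can be shown with a short Python sketch. It assumes the population formula (the squared deviations are averaged over all n scores before taking the square root) and uses two hypothetical athletes with invented yearly scores, not data from the cited studies; the helper names `deviations_from_mean` and `population_sd` are illustrative. The signed deviations sum to zero for both athletes, so they cannot separate a constant performer from a fluctuating one, whereas the squared deviations can.

```python
import math

def deviations_from_mean(scores):
    """Signed distance of each score from the mean of all scores."""
    mean = sum(scores) / len(scores)
    return [x - mean for x in scores]

def population_sd(scores):
    """Population standard deviation: square root of the mean squared deviation."""
    devs = deviations_from_mean(scores)
    return math.sqrt(sum(d * d for d in devs) / len(devs))

# Hypothetical yearly scores for two fictitious athletes (invented for illustration).
athletes = {
    "A": [10, 10, 10, 10],
    "B": [4, 16, 7, 13],
}

for name, scores in athletes.items():
    # Signed deviations cancel out: their sum is zero (up to floating-point rounding).
    print(name, "sum of deviations:", sum(deviations_from_mean(scores)))
    # Squared deviations do not cancel, so the spread becomes visible.
    print(name, "standard deviation:", round(population_sd(scores), 2))

# The athlete with the smallest standard deviation performed most consistently.
most_consistent = min(athletes, key=lambda name: population_sd(athletes[name]))
print("Most consistent:", most_consistent)  # prints "Most consistent: A"
```

Dividing by n - 1 instead of n would give the sample standard deviation; the point illustrated here holds for either version, because it is the squaring that prevents positive and negative deviations from cancelling.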

In contrast to such STEM-related learning topics, several non-STEM learning topics are likely less structured, given the differing nature of knowledge in STEM and non-STEM disciplines. Kagan (2009), for instance, describes how the circumstances under which knowledge can be gained differ between the natural sciences, the social sciences, and the humanities. While researchers in the natural sciences can often observe phenomena under controlled conditions, researchers in the social sciences and humanities cannot (Kagan 2009). The degree of control over the conditions under which knowledge is gathered within a discipline may correspond to the degree of structuredness of the knowledge produced within that discipline. Specifically, the less control there is over the conditions under which certain phenomena can be observed, the more room there is for multiple and partly contradictory explanations and interpretations of these phenomena, and consequently the more difficult it becomes to define the deep features, rules, and patterns underlying these phenomena and concepts.

The claim that several learning topics in non-STEM domains are not as structured as the STEM learning topic described above (i.e., standard deviation) can be illustrated with learning topics from the social and educational sciences. The curricula for social and educational science classes in the German state of North Rhine-Westphalia (where the present studies took place), for instance, prescribe learning topics such as social inequality (for social science classes) or learning and development (for educational science classes). In this context, secondary school students should become familiar with different perspectives, theories, and models, but canonical explanations with clearly evaluable components for the development of social inequality or for the processes underlying learning and development cannot be taught (although some teachers may try to do so in classroom practice). Hence, these or similar non-STEM learning topics appear to have little structure and may therefore be inappropriate for learning in a PF setting. However, there are also more structured non-STEM learning topics. For instance, the social and educational science curricula also include interpreting empirical evidence and analyzing experiments with reference to different quality criteria. These learning topics related to social science research methods can be characterized as rather structured, as they allow for a clear identification of crucial principles and criteria relevant for evaluating empirical evidence and experimental study designs, such as the systematic variation of variables, the random assignment of participants, or sample size. Nevertheless, depending on the traditions or culture within a social science discipline, the principles and criteria for an experimental study design appropriate for investigating a certain research question may differ. Thus, one could argue that topics related to social science research methods, such as principles of experimental design, are not as structured as several STEM topics (e.g. standard deviation in mathematics), but are more structured than non-STEM topics relating, for instance, to theoretical, political, or other debates. On a continuum ranging from structured to unstructured, topics related to social science research methods may be positioned towards the structured end and may thus be termed rather structured. We assume that these or similarly structured non-STEM topics may be appropriate for learning in a PF setting. As previously mentioned, however, research has not yet focused on the effectiveness of PF for learning in non-STEM domains, which are usually not as highly structured as several STEM-related learning topics. Nevertheless, some scholars have tested the PF effect in domains other than mathematics and science. These studies are described in more detail in the following section.

Design features and domains in previous studies: an overview

Although some studies have tested the effect of problem solving prior to instruction on learning in non-STEM domains, it can be noted that the PF effect has not been tested in what one would call a classical PF study in these domains. Classical PF studies, such as those by Kapur (2010, 2011, 2012), Kapur and Bielaczyc (2011, 2012), Kapur and Lee (2009), Loibl and Rummel (2014a, b), and Westermann and Rummel (2012), focus on students’ learning in a STEM domain (i.e., mathematics) and additionally share the following three characteristics:

(a) The implemented PF or problem-solving prior to instruction condition fulfilled crucial design features described by Kapur and Bielaczyc (2012): (1) students collaboratively invented intuitive solution ideas to a complex problem during the initial problem-solving phase, and (2) erroneous student solutions were compared and contrasted with the canonical solution during the subsequent instruction phase.

(b) The effectiveness of PF was tested for secondary school students’ (or undergraduate students’) knowledge acquisition.

(c) The effectiveness of PF for students’ knowledge acquisition was examined by comparing PF to a particular control condition; specifically, by comparing PF, a problem solving prior to instruction approach, to an instruction prior to problem-solving approach.

Note that the control condition in classical PF studies is sometimes termed “instruction prior to problem solving” (Loibl and Rummel 2014a, b) or “lecture and practice” (Kapur 2010, 2011; Kapur and Lee 2009), although most studies call this condition “direct instruction (DI)” (Kapur 2012; Kapur and Bielaczyc 2011, 2012; Westermann and Rummel 2012). The main difference between the PF condition and the DI condition is the timing of instruction: While DI students receive instruction prior to problem solving, PF students receive instruction after problem solving. The DI approach implemented in classical PF studies differs from DI approaches implemented in studies conducted by, for instance, Stevens et al. (1991) or Klahr and Nigam (2004), in which the term direct does not relate to the timing of instruction (as in classical PF studies) but rather to the fact that detailed information and explanations are directly or explicitly provided by the instructor instead of learners having to discover this information on their own. In these studies, direct instruction (in terms of explicit instruction) was given between two learning activities (cf., Klahr and Nigam 2004) or prior to a learning activity, and in addition adaptively during a learning activity (cf., Stevens et al. 1991). In the classical PF studies described here, both DI students and PF students receive explicit instructional explanations, either prior to problem solving in the DI condition or after problem solving in the PF condition. Students in both conditions do not usually receive any instructional support during the problem-solving activity.

Although most previous PF research has focused on the effect of problem solving prior to instruction on learning in STEM domains (for a review, see Sinha and Kapur 2019), eight studies that examined the effect of problem solving prior to instruction on learning in non-STEM domains or on learning domain-general skills deserve mention (see Table 2). These studies differ from classical PF studies with regard to (a) particular design features of the implemented problem-solving prior to instruction condition, (b) participants’ age, or (c) the implemented control condition. Hence, we describe these studies as PF-similar studies. The eight PF-similar studies focused on learning in psychology (i.e., schema and encoding concepts), educational psychology (i.e., learning strategies), medical domains (i.e., dental hygiene and dental surgery), and on learning the Control of Variables Strategy (CVS). The CVS is often framed in a STEM-related context (e.g. in physics by Kant et al. 2017), but can also be described as a domain-general skill of scientific inquiry and reasoning (Chase and Klahr 2017). Of all eight studies, only the two by Schwartz and Bransford (1998) found a positive effect of problem solving prior to instruction on student learning (see Table 2). In the following paragraphs, we briefly describe how the PF-similar studies differ from classical PF studies.

Seven studies (all except Tam 2017) differ from classical PF studies regarding the design of the problem-solving prior to instruction condition. In these studies, the instruction phase did not compare and contrast erroneous student solutions with each other and with the canonical solution (as classical PF studies do). As described above, using erroneous student solutions and comparing their features with the components of the canonical solution during the instruction phase is hypothesized to trigger crucial mechanisms (i.e., awareness of knowledge limitations and recognition of deep features) that may underlie the PF effect (see Table 1). Thus, it remains unclear whether the lack of effects of problem solving prior to instruction in the majority of these PF-similar studies is attributable to the design of the respective condition, which differed from that of classical PF studies. Moreover, in six studies (all except Tam 2017 and Chase and Klahr 2017), students worked individually during the initial problem-solving phase rather than in small groups or pairs, as in classical PF studies. However, evidence on the role of collaboration for the effectiveness of problem solving prior to instruction is so far rare and inconclusive (e.g. Weaver et al. 2018), although Kapur and Bielaczyc (2012) describe collaboration as an important feature of the PF design.

The studies conducted by Chase and Klahr (2017) and Matlen and Klahr (2013) differ from classical PF studies regarding the age of the participants, who were younger than in classical PF studies. Other studies comparing problem solving prior to instruction with instruction prior to problem solving among rather young school students (i.e., 2nd to 5th graders) also found no effect of the former on learning mathematics (cf., Loehr et al. 2014; Fyfe et al. 2014; Mazziotti et al. 2019). Hence, PF may not be effective for young students, and the age of the participants may explain why Chase and Klahr (2017) and Matlen and Klahr (2013) did not find a positive effect of problem solving prior to instruction on students’ learning of the CVS.

The studies by Schwartz and Bransford (1998), Glogger-Frey et al. (2015), and Tam (2017) differ from classical PF studies regarding the control condition. Schwartz and Bransford (1998) and Glogger-Frey et al. (2015) compared the problem-solving prior to instruction condition not with an instruction prior to problem-solving condition but rather with another learning activity (e.g. studying worked examples or reading a text) prior to instruction. Thus, regardless of whether students in the problem-solving prior to instruction condition outperformed (Schwartz and Bransford 1998) or did not outperform (Glogger-Frey et al. 2015) their counterparts in the control condition, it remains unclear whether these students would have outperformed students from an instruction prior to problem-solving condition as in classical PF studies. In the study by Tam (2017), students in the control condition worked on well-structured problems after instruction and received instructional guidance during problem solving. Hence, in contrast to classical PF studies, Tam (2017) varied not only the timing of instruction but also the type of problem (ill-structured versus well-structured) and the provision of instructional guidance during problem solving (without versus with guidance). Due to these confounding factors, the study findings are difficult to interpret.

In summary, the eight PF-similar studies can be seen as first attempts to transfer the effect of problem solving prior to instruction from learning in STEM domains to learning in non-STEM domains. As these studies revealed inconsistent findings and differ from classical PF studies, it remains unclear whether the effectiveness of PF depends on the learning domain or rather on particular design features of the problem-solving prior to instruction approach.

Productive failure in learning non-STEM domains

As argued above, the effectiveness of PF is hypothesized to depend on certain design features and learning-related mechanisms (see Table 1). To date, these mechanisms and the effectiveness of PF have only been examined for learning in STEM domains. Only a few studies have tested the effect of problem solving prior to instruction on learning in non-STEM domains (see Table 2), and they mostly found no effect or a negative effect of problem solving prior to instruction on learning in a domain other than mathematics or science. However, these studies differ from the design of classical PF studies, so it remains unclear whether the effectiveness of PF depends on the learning domain or rather on certain design features, as hypothesized by Kapur (2015). Hence, there is a need to test the effectiveness of PF for learning in a non-STEM domain or, as called for by Kapur (2015) and Loibl et al. (2017), in domains that are less structured than mathematics and science. At the same time, as mentioned above, it is hypothesized that the PF effect may depend on the structuredness of the learning domain (Loibl et al. 2017). Consequently, probing the effectiveness of PF for learning beyond STEM domains entails three challenges and requirements: (1) the design of the PF setting should follow certain principles such that the mechanisms hypothesized to underlie the PF effect can emerge, (2) the study design should emulate the features of classical PF studies, and (3) the learning domain should be less structured than mathematics and science but at the same time meet a certain level of structuredness, such that students’ solution ideas can be evaluated as well as compared and contrasted with clearly defined features of the canonical solution during instruction. We aimed to address these challenges in the present studies and extended PF from learning in mathematics and science to learning social science research methods. As argued above, topics related to these methods (e.g. analysis of experiments and evaluation of empirical evidence) can be described as rather structured: they are less structured than the majority of STEM-related learning topics but still allow for a clear definition and evaluation of canonical components. To investigate the PF effect on learning social science research methods, we conducted two quasi-experimental studies that emulated the design of classical PF studies. That is, (a) our PF condition implemented the features that are expected to trigger the PF mechanisms (see Table 1), (b) we conducted our studies with secondary school students, and (c) we compared two conditions: PF with problem solving prior to instruction and DI with instruction prior to problem solving.

Research context of the present work

In the state of North Rhine-Westphalia, where the present studies took place, students can usually choose a social or educational science class in 10th grade. According to the German curricula for the respective classes, students should acquire not only content knowledge of different theories and concepts, but also knowledge related to the research methods and scientific practices of the social and educational sciences. Visits to out-of-school labs are considered a promising way to foster students’ knowledge of research methods and scientific practices (e.g. Pauly 2012). Out-of-school labs were increasingly established after the first PISA study in the year 2000, which demonstrated that 15-year-old students’ scientific literacy scores in Germany were significantly lower than the overall average of the 43 investigated countries (OECD 2001). To increase students’ scientific literacy following this “PISA shock” (Waldow 2009), numerous out-of-school labs for the natural sciences and, more recently, also for the social sciences were founded in Germany. Out-of-school labs are non-formal learning settings that are assumed to be highly authentic environments for learning scientific ways of thinking and working due to their location (often on a university campus), the instructors (often real scientists or prospective scientists), and the scientifically authentic materials, methods, and contents that students work with during their visit (Garner and Eilks 2015; Scharfenberg and Bogner 2014; Glowinski and Bayrhuber 2011). Usually, projects in such labs run during regular school days; students take part as a whole class and arrive at the lab together with their teachers. As the visits are typically organized by the teachers, they are a compulsory school activity for every student in the class. To enable such a formal activity during regular school hours, the lab projects often match the respective curriculum. The present studies took place in an out-of-school lab for social sciences at a large German university.

Investigating the effectiveness of PF for learning social science research methods in an out-of-school lab, rather than in schools, offers three advantages: (1) As the classes visit the out-of-school lab together with their teachers, the location / environment (lab on a university campus) and the instructor (often a scientist) are the same in all classes. (2) As a non-formal learning setting, the lab may offer a better and more appropriate space for learning by PF than the usual formal school context, because “school too often allows much less space for risk, exploration, and failure” (Gee 2005a, p. 35). In many cases, experiencing failure has negative connotations in school and is closely linked to unsatisfactory performance and bad grades (Gee 2005b). (3) Out-of-school labs appear to be ideal settings for implementing PF. More specifically, out-of-school labs aim at implementing authentic experiential learning activities that emulate processes of scientific inquiry in order to situate learners in the role of a scientist and to foster their knowledge about scientific ways of thinking and working (e.g. Euler 2004; Glowinski and Bayrhuber 2011). PF can also situate learners in the role of a scientist by emulating features of authentic scientific practices (Cho et al. 2015; Kapur and Toh 2015). That is, students are asked to explore and to generate solution attempts to a complex problem during an initial problem-solving phase. When taught the canonical solution in the subsequent instruction phase, students are then required to falsify their initial assumptions about potential solutions. This process of posing hypotheses, discovering that these conjectures are limited or even false, and finally refuting the initially generated hypotheses is characteristic of scientific inquiry processes (cf., Chalmers 2013).
Accordingly, it appears that the combination of PF and the out-of-school lab could be particularly beneficial for learning social science research methods.

Research questions

So far, we have argued that problem solving prior to instruction approaches, such as PF, have a greater effect on students’ knowledge acquisition than approaches in which instruction is followed by problem solving, such as DI. While this effect has been demonstrated for learning in STEM domains (especially mathematics), the effectiveness of PF has not been tested for learning in non-STEM domains. Previous research suggests that the PF effect does not depend on the domain (cf., Kapur 2015), but rather on particular features of the PF design (see Table 1), which are expected to foster certain learning-related mechanisms (cf., Loibl et al. 2017) and thus the effectiveness of PF for learning. Therefore, if these design features are adhered to, the PF effect should transfer from learning in STEM domains to learning in non-STEM domains. Building on this argument, the present studies address the question of whether PF is also effective for learning social science research methods. To investigate this question, we conducted two quasi-experimental studies in an interdisciplinary out-of-school lab at a large German university and compared PF to DI.

As the present studies are a first attempt to transfer the design of classical PF studies from learning in a STEM domain to learning in a non-STEM domain, we first examine whether our study design fulfilled the features of the classical PF design that are expected to foster the PF mechanisms and thus the effectiveness of PF. Subsequently, we explore whether the mechanisms hypothesized to underlie the PF effect, which so far have also only been demonstrated for learning in STEM domains, emerge when learning social science research methods. Finally, we investigate our main research question, namely whether the PF effect occurs for learning social science research methods. In summary, we investigate the following two design-related questions, three mechanism-related questions, and one main research question:

-

Design-related question 1: Does the problem-solving task meet the sweet spot of complexity (cf., Table 1), insofar as PF students are able to invent solution ideas without solving the problem canonically? This design feature is assumed to be important for promoting PF students’ activation of relevant prior knowledge during the initial problem-solving phase (cf., Kapur and Bielaczyc 2012; Loibl et al. 2017).

-

Design-related question 2: Do PF students’ solution ideas indeed contain the errors that are focused on during instruction? In the present studies, the instruction lesson was the same in both conditions, and thus standardized by using erroneous student solutions identified in pilot tests as typical for the materials. Using students’ erroneous solutions during instruction is expected to foster students’ awareness of knowledge limitations and their recognition of the deep features of the targeted learning concept (cf., Loibl and Rummel 2014a; Loibl et al. 2017).

-

Mechanism-related question 1: Do PF students activate relevant prior knowledge during problem solving? According to previous PF studies, this mechanism should be reflected in a positive association between PF students’ performance during problem solving (e.g. the quality of their invented solution ideas) and their performance on a posttest assessing their learning outcome (cf., Kapur and Bielaczyc 2012; Loibl and Rummel 2014b). This positive association would indicate that PF students were able to activate relevant prior knowledge during the problem-solving phase, which subsequently helped them to learn the targeted concept.

-

Mechanism-related question 2: Do PF students develop an awareness of their knowledge limitations? Given the findings of Loibl and Rummel (2015), this mechanism should be indicated by PF students’ competence perceptions after both learning phases: PF students should report lower perceived competence than DI students after both learning phases. Moreover, PF students’ perceived competence after instruction should correlate positively with their learning outcome. Such a positive correlation would indicate that the instruction helped students to evaluate their competence accurately.

-

Mechanism-related question 3: Do PF students recognize the deep features of the targeted learning concept during instruction? So far, evidence for this mechanism stems from students’ performance on posttest items assessing their deep understanding (cf., Loibl et al. 2017). That is, PF students should perform better than DI students on items testing their ability to apply and transfer their knowledge for solving novel and unfamiliar tasks. This question also addresses our main research question, but focuses on certain items of the posttest assessing deep understanding, while the main research question relates to the total learning outcome.

-

Main research question: Do students in the PF condition outperform students in the DI condition when learning social science research methods? As ample research has demonstrated the effectiveness of PF for learning in structured domains, we hypothesize that PF students will outperform DI students in learning social science research methods.

Method study 1

Participants and learning domain

Participants were 10th graders from eight secondary schools in the German state of North Rhine-Westphalia. In total, 213 students from eleven social or educational science classes agreed to participate and provided written parental consent. Of these, 212 students (age: M = 16.43, SD = 0.78; 62% girls) who were present during all learning phases were included in the analysis. Our sample is sufficient to detect effects of η² ≥ 0.04 or d ≥ 0.4 (f = 0.20, 1-β = 0.83; G*Power analysis; Faul et al. 2007).
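The sensitivity statement above rests on standard conversions between effect-size metrics (η² = f²/(1 + f²) and, for two equally sized groups, d = 2f). The following Python sketch illustrates these conversions together with a large-sample approximation of the power of a two-group comparison. It is a hedged illustration, not a reproduction of the G*Power computation: G*Power uses the exact noncentral F distribution, whereas this sketch approximates the test by a normal distribution shifted by the square root of the noncentrality parameter λ = f²·N, which is only reasonable because the error degrees of freedom (about 210) are large. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def eta_sq_from_f(f):
    """Convert Cohen's f to (partial) eta squared: eta^2 = f^2 / (1 + f^2)."""
    return f * f / (1 + f * f)


def d_from_f(f):
    """Convert Cohen's f to Cohen's d for two equally sized groups: d = 2f."""
    return 2 * f


def approx_power_two_groups(f, n_total, alpha=0.05):
    """Large-sample approximation of the power of a two-group F test (df1 = 1).

    With df1 = 1, F equals t squared, and the noncentrality parameter is
    lambda = f^2 * N. For large error df, the noncentral t is approximated by a
    unit-variance normal distribution shifted by sqrt(lambda).
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = math.sqrt(f * f * n_total)
    # Probability of landing in either rejection region of the two-sided test.
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)


f = 0.20
print(round(eta_sq_from_f(f), 3))  # 0.038
print(d_from_f(f))                 # 0.4
print(round(approx_power_two_groups(f, n_total=212), 3))
```

With f = 0.20 and N = 212, the approximation gives a power of roughly 0.83, close to the value reported above; f = 0.20 corresponds to η² ≈ 0.038, which the text rounds to 0.04.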

Participants had no or limited instructional experience with the targeted learning topic prior to the study. The topic for study 1 was principles of experimental design, which is from the 11th grade syllabus. According to the respective curriculum, at the end of the 11th grade, students should be able to analyze experiments with respect to different quality criteria. Thus, we expected that the 10th graders in our study had no instructional experience with the topic of principles of experimental design in the social sciences. Selecting a learning topic from the next year’s syllabus is a common strategy employed in previous PF studies to ensure that the study participants have no instructional experience with the given topic (e.g. Kapur 2010).

Experimental design

Each of the eleven participating classes was randomly assigned as a whole to one of the two experimental conditions. Assigning whole classes was necessitated by the out-of-school lab setting of our study. Our study took place in an interdisciplinary out-of-school lab at a large German university, which offers projects in the natural sciences, social sciences, and humanities for secondary school students (5th to 13th grade).

As out-of-school labs are visited by whole classes with their teachers during regular school hours, we used a quasi-experimental design and assigned six whole classes (n = 121) to the PF condition and five whole classes (n = 91) to the DI condition. Thus, we implemented a between-subjects design. In both conditions, students experienced two successive learning phases: a problem-solving phase and an instruction phase. PF students experienced the instruction phase after the problem-solving phase and DI students experienced the instruction phase prior to the problem-solving phase.
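Because whole classes rather than individual students were the unit of assignment, the procedure amounts to cluster randomization. The Python sketch below illustrates this under two assumptions that the text does not state: that the 6/5 split between PF and DI was fixed in advance, and that a simple shuffle of the class list was used. Class labels, student records, and the function name are hypothetical.

```python
import random


def assign_classes(class_ids, n_pf, seed=None):
    """Randomly assign whole classes (clusters) to PF or DI.

    Every student inherits the condition of his or her class, so the unit of
    randomization is the class, not the individual student.
    """
    rng = random.Random(seed)
    shuffled = list(class_ids)
    rng.shuffle(shuffled)
    # The first n_pf shuffled classes go to PF, the remainder to DI.
    return {cid: ("PF" if i < n_pf else "DI") for i, cid in enumerate(shuffled)}


# Eleven hypothetical class labels; six PF and five DI classes as in study 1.
classes = [f"class_{k:02d}" for k in range(1, 12)]
assignment = assign_classes(classes, n_pf=6, seed=2024)

# Hypothetical student records: (student_id, class_id).
students = [("s001", "class_01"), ("s002", "class_01"), ("s003", "class_07")]
student_condition = {sid: assignment[cid] for sid, cid in students}
```

Students within a class inherit its condition, which is why the design is described above as quasi-experimental rather than as individually randomized.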

Besides the two learning phases, we implemented four test phases, comprising three questionnaires and a posttest. Prior to the first learning phase, a pre-questionnaire assessed students’ grades in three subjects: social sciences, German language, and mathematics. After each of the two learning phases, a questionnaire assessed students’ perceived competence. At the end of the study, all students completed a posttest.

Learning materials

Problem-solving tasks and instruction lesson

To teach students principles of experimental design, we created an instruction lesson and two isomorphic problem-solving tasks (Problem 1: a teacher conference scenario, and Problem 2: a parent-teacher conference scenario). Problem 1 was embedded in a cover story about a teacher conference (see Online Resource 1) and depicted three different suggestions and opinions of three fictitious math teachers about how to improve math teaching and learning in 10th grade. Problem 2 was embedded in a cover story about a parent-teacher conference and depicted three different suggestions and opinions of fictitious parents and history teachers about how to improve history education in 9th grade. Both problems asked students to imagine themselves as educational researchers and to design a study which would allow them to investigate all opinions and suggestions mentioned by the fictitious teachers (and parents). The instruction lesson built upon Problem 1 and comprised the following three parts:

-

Part 1: Recapitulation (in PF) or introduction (in DI) of Problem 1 as depicted in Fig. 1.

-

Part 2: Comparing and contrasting four features of typical erroneous student solutions with the components of the canonical solution (see Table 3), whereby the canonical solution was explained in a step-by-step procedure. The contents of the second part of the instruction were based on the results of pilot tests of our learning materials, which are described in the section below.

-

Part 3: Presentation of the canonical solution, namely a 2 × 2 factorial design with pre- and posttest and a questionnaire to measure control variables (see Online Resource 2). The contents of the third part of the instruction phase were based on solutions to Problem 1 developed by experts in our research group. The instructor also presented an alternative canonical solution to Problem 1: a 3 × 3 factorial design with pre- and posttest in which the control variable (i.e., students’ language skills) was implemented as a third factor. However, the instructor explained that this design would be difficult to implement in real-world learning settings, such as schools, for several reasons. Thus, the 2 × 2 design with pre- and posttest and questionnaire was presented as more appropriate.

Pilot tests of the learning materials

In two rounds of pilot tests, we aimed to ensure that the teacher conference problem (Problem 1) had an appropriate level of complexity, allowing students to produce (non-canonical) solution ideas (cf., Kapur and Bielaczyc 2012), and that the instructional explanation used typical erroneous student solutions (cf., Loibl and Rummel 2014a).

In the first pilot round, we tested four triads from two 10th grade classes, which were participating in different out-of-school lab projects at the time of our pilot tests. We investigated whether the students were able to generate and explore different solution ideas to Problem 1. According to Kapur and Bielaczyc (2012), the problem-solving task meets a sweet spot of complexity when students are able to generate different solution ideas without solving the problem canonically. On average, the students generated 6.5 solution ideas in their groups and these solution ideas typically included at least one of the four errors, which are listed in Table 3 (see left column). Building on the findings of this first round of pilot tests, we created the instruction lesson.

In the second pilot round, we tested both learning phases of our PF design with one whole social science class in the out-of-school lab. The results again revealed that 10th graders were able to generate various solution ideas without solving the problem canonically. Specifically, eight student groups developed an average of 4.6 ideas, which again included at least one of the four errors listed in Table 3.

In summary, the results of our pilot tests suggested that the teacher conference problem (Problem 1) met a sweet spot of complexity, as students were able to produce solution ideas without solving the problem canonically. Additionally, these results enabled us to use typical erroneous solution ideas for the instruction lesson.

Measures

To investigate our research questions, we collected and coded students’ solutions generated during the problem-solving phase, assessed their perceived competence with a questionnaire after both learning phases, and measured students’ learning outcome in a posttest. See Table 4 for an overview of the research questions and the measures used for investigating these questions. Additionally, we administered a pre-questionnaire to assess certain control variables. In the following paragraphs, we describe all measures in more detail.

Pre-questionnaire

Findings from previous PF studies (e.g. Kapur 2014; Loibl and Rummel 2014b) showed that students’ (math) grades influence their learning outcome. Therefore, we measured students’ grades as a control variable by asking them to indicate, in the pre-questionnaire administered prior to the first learning phase, their grades in three subjects: social sciences, German language, and mathematics. Students’ grades were used as an indicator of their general achievement in these three school subjects. We assumed that students’ achievement in these subjects could be related to their ability to work on the problem-solving task and to develop an understanding of the targeted learning concept: the learning topic is strongly related to the social sciences, the task that students were asked to solve was a complex word problem, and prior knowledge of mathematical concepts could be helpful for understanding principles of experimental design, such as the systematic variation of variables.

Student solutions

To analyze whether PF students were able to generate different solution ideas without solving the problem canonically (design-related question 1) and whether the solution ideas contained typical errors that were discussed during instruction (design-related question 2), we coded the solution ideas that students generated during the problem-solving phase, measuring the quality and quantity of PF student solutions. This also allows us to investigate whether PF students activated relevant prior knowledge (mechanism-related question 1).

The invented solution ideas were collected after students completed the problem-solving phase. Beforehand, all groups had received blank sheets of paper for their group work, and students in the PF condition were asked to number their different solution ideas. To measure the quality of the PF solution ideas, we counted the number of canonical principles within each solution idea and, similarly to previous studies on PF (e.g. Loibl and Rummel 2014b), used the best idea (highest number of canonical principles) for further analyses. The canonical principles comprised two sets (see Table 5), based on both the features of typical student solutions and the components of the canonical solution, which, as mentioned above, had been generated for the teacher conference problem by experts of our research group. The first set comprises four core principles that build on typical student misconceptions and were therefore explained in detail during instruction (e.g. inclusion of a control condition). The second set encompasses four further, implicit principles of the canonical solution that were not explicitly explained during instruction, as they did not represent typical student misconceptions (e.g. comparison of different groups). Therefore, the quality score ranged from 0 (solution without canonical principles) to 8 (canonical solution). See Online Resource 3 for a coding example of a typical PF student solution. Two raters scored around 30% of the student solutions, i.e., n = 46 (ICC (absolute agreement) = 0.71; 95% CI [0.48, 0.84]). According to Cicchetti (1994), this ICC value can be interpreted as good.
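The quality coding can be expressed as a small scoring routine. The Python sketch below is illustrative only: the principle labels are hypothetical shorthand for the eight principles named in the results of study 1 (the authoritative coding scheme is in Table 5), and it assumes that raters have already coded each numbered idea as the set of principles it contains. The quality of an idea is the number of canonical principles it contains, and a group's score is the quality of its best idea.

```python
# Hypothetical shorthand labels for the eight canonical principles.
CORE = frozenset({"pretest", "systematic_variation", "control_variable", "control_group"})
IMPLICIT = frozenset({"experimental_test", "between_subjects", "posttest", "four_learning_methods"})
ALL_PRINCIPLES = CORE | IMPLICIT


def quality(idea):
    """Number of canonical principles contained in one coded solution idea (0-8)."""
    unknown = set(idea) - ALL_PRINCIPLES
    if unknown:
        raise ValueError(f"unknown principle codes: {sorted(unknown)}")
    return len(set(idea))


def best_quality(ideas):
    """Quality of a group's best idea; 0 if the group produced no idea."""
    return max((quality(idea) for idea in ideas), default=0)


def missing_core(idea):
    """Core principles (the ones addressed during instruction) absent from an idea."""
    return sorted(CORE - set(idea))


# Hypothetical coding of one PF group's three numbered solution ideas.
group_ideas = [
    {"posttest", "four_learning_methods"},
    {"posttest", "between_subjects", "experimental_test", "four_learning_methods", "control_group"},
    {"posttest", "between_subjects"},
]
print(best_quality(group_ideas))     # 5
print(missing_core(group_ideas[1]))  # ['control_variable', 'pretest', 'systematic_variation']
```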

Questionnaires 1 and 2

To analyze whether PF students became aware of their knowledge limitations (mechanism-related question 2), students’ perceived competence was assessed after the first and the second learning phase using the short scale of intrinsic motivation developed by Wilde et al. (2009). The questionnaire includes four subscales (interest/enjoyment, perceived competence, perceived choice, and pressure/tension) with a total of 12 items rated from 1 (strongly disagree) to 5 (strongly agree). To form the perceived competence index, students’ ratings on the following three items were averaged: (1) I am satisfied with my performance during this learning phase; (2) I was skilled in the activities during this learning phase; (3) I think I was pretty good at the activities during this learning phase. The internal consistency of the subscale for perceived competence was satisfactory (after learning phase 1: Cronbach’s α = 0.84, after learning phase 2: Cronbach’s α = 0.91).
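The perceived competence index and its internal consistency can be illustrated with a short Python sketch. It assumes that ratings are stored as one row per student with the three competence items as columns; the ratings and function names are hypothetical. Cronbach's α is computed with the standard formula α = k/(k - 1) · (1 - Σ s²_item / s²_total), using sample variances.

```python
from statistics import mean, variance


def competence_index(item_ratings):
    """Average of the three perceived-competence items (each rated 1-5)."""
    return mean(item_ratings)


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix (list of rows).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    items = list(zip(*responses))  # transpose: one tuple per item
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(row) for row in responses]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical ratings of five students on the three competence items.
ratings = [
    [4, 4, 5],
    [2, 3, 2],
    [5, 5, 5],
    [3, 3, 4],
    [1, 2, 1],
]
indices = [competence_index(r) for r in ratings]
alpha = cronbach_alpha(ratings)
```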

Posttest

To investigate whether PF students recognized the deep features of the targeted learning concept (mechanism-related question 3) and whether they outperformed DI students on acquiring knowledge about principles of experimental design (main research question), a posttest assessed the learning outcome as our dependent variable (i.e., knowledge of the four principles of experimental design conveyed in the instruction phase) after the second learning phase.

Students had 30 min to work on the posttest items, and all completed them on time. Building on Bloom’s revised taxonomy (e.g. Krathwohl 2002), four items tested remembering and understanding, three items tested application, and two items tested analysis and evaluation. The distinction between procedural and conceptual knowledge, as used, for instance, by Loibl and Rummel (2014a) in classical PF studies, builds on a classification developed by Rittle-Johnson and Alibali (1999) in their work on mathematical domains. As we needed a more domain-general classification, we decided to use Bloom’s taxonomy to construct our posttest items. Nevertheless, our posttest also allows for testing procedural and conceptual knowledge. As Rittle-Johnson, Siegler, and Alibali (2001) describe, procedural knowledge is often tested by asking learners to solve routine problems that require the application of previously learned solution methods. In contrast, conceptual knowledge is often tested by using novel problems that require learners to invent new solution methods based on their knowledge (Rittle-Johnson et al. 2001). Correspondingly, our posttest includes both items that ask students to reproduce the concepts and solution methods from the previous instruction phase and items that require students to transfer the knowledge acquired during instruction to solving novel problems.

Two raters scored around 20% of the posttests, i.e., n = 37 (ICC (absolute agreement) = 0.99; 95% CI [0.98, 0.996]). This ICC value can be interpreted as excellent (cf., Cicchetti 1994). After an analysis of the posttest items, we excluded one item from further analyses and created a weighted scale of the remaining eight posttest items with a total score from 0 to 25. A more detailed description of the posttest items and of the item analysis can be found in the supplementary material (see Online Resource 4).
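The inter-rater reliability figures reported for the solution coding and the posttest rest on an intraclass correlation for two raters. The Python sketch below assumes a two-way model with absolute agreement for single ratings (ICC(A,1) in McGraw and Wong's notation, equivalent to Shrout and Fleiss's ICC(2,1)); the text reports absolute agreement but does not state whether single or average measures were used, so this choice is an assumption. The labeling helper follows Cicchetti's (1994) guidelines: below 0.40 poor, 0.40 to 0.59 fair, 0.60 to 0.74 good, and 0.75 or higher excellent. The rating data and function names are hypothetical.

```python
from statistics import mean


def icc_absolute_single(ratings):
    """Two-way ICC, absolute agreement, single rater (ICC(A,1) / ICC(2,1)).

    ratings: one row per rated object (e.g. a solution or a posttest),
    one column per rater.
    """
    n = len(ratings)
    k = len(ratings[0])
    if n < 2 or k < 2:
        raise ValueError("need at least two objects and two raters")
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]
    # Sums of squares of a two-way ANOVA without replication.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def cicchetti_label(icc):
    """Verbal interpretation of an ICC following Cicchetti (1994)."""
    if icc < 0.40:
        return "poor"
    if icc < 0.60:
        return "fair"
    if icc < 0.75:
        return "good"
    return "excellent"


# Hypothetical scores of two raters on six double-coded solutions (0-8 scale).
double_coded = [[5, 5], [4, 5], [6, 6], [3, 3], [7, 6], [5, 4]]
icc = icc_absolute_single(double_coded)
print(f"ICC(A,1) = {icc:.2f} ({cicchetti_label(icc)})")
```

Adding a constant offset to one rater's scores lowers the absolute-agreement ICC even though the rank order of the rated objects is unchanged; this is what distinguishes absolute agreement from consistency.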

Experimental procedure

On the day of the experiment, students underwent four test phases (i.e., pre-questionnaire, questionnaires 1 and 2, posttest) and two successive learning phases: a problem-solving phase and an instruction phase. While the sequence of these two learning phases differed between the two conditions, the problem-solving tasks, the instruction lesson, and the social surroundings during both phases were the same in both conditions.

PF students’ first learning phase was the problem-solving phase, in which they were asked to collaboratively generate different solution ideas to an unfamiliar problem (Problem 1) without any instructional support on relevant or correct problem-solving steps. In the second learning phase, they received instruction: First, the task was recapitulated with the whole class (see Fig. 1). Next, the experimenter compared and contrasted typical erroneous student solutions with the components of the canonical solution (see Table 3). Subsequently, the experimenter gave instruction on the canonical solution and explained relevant components thereof. At the end of the second learning phase, the experimenter presented a second isomorphic problem (Problem 2) and the whole class generated and discussed its canonical solution with the experimenter.

DI students’ first learning phase was the instruction phase, in which the unfamiliar problem (Problem 1) was introduced (see Fig. 1) before they received the same instruction as PF students. That is, the experimenter compared and contrasted typical erroneous student solutions with the canonical solution (see Table 3) and then explained the relevant components of the canonical solution. The second learning phase was the problem-solving phase: Initially, students collaboratively repeated and practiced the canonical problem-solving procedure of the problem that had been presented and discussed during the instruction (Problem 1). Subsequently, the same small student groups practiced the solution procedure on the second isomorphic problem (Problem 2).

Our experimental procedure ensured that the introductory unfamiliar problem (i.e., Problem 1, introduced at the beginning of the first learning phase) and the final familiar problem (i.e., Problem 2, practiced at the end of the second learning phase) were the same in both conditions. By using typical student solutions during the instruction phase, similarly to the procedure of previous PF studies (e.g. Loibl and Rummel 2014a, b), we were able to apply the same instruction in both conditions. Furthermore, to keep instruction comparable across classes and conditions, the same experimenter gave the instruction in both conditions. The instruction was experimenter-led and included whole-class discussions. Thus, the social surroundings during instruction were the same in both conditions. The social surroundings were also the same in both conditions during the problem-solving phase, as students were asked to work in small groups without any instructional support. The small groups were formed by the students themselves, often with seat neighbors. As requested by the experimenter, most of the groups were triads, with a few exceptions (two or four group members) due to class size constraints.

Table 6 provides an overview of the experimental procedure including the administration of questionnaires and tests, the breaks, and the respective duration of each learning phase, test phase, and break.

Results study 1

For all analyses, the significance level was set at 0.05. For (co)variance analyses, we used partial η² (ηp²) as a measure of effect size. According to Cohen (1988), values < 0.01 represent no effect, between 0.01 and 0.06 a small effect, between 0.06 and 0.14 a medium effect, and values > 0.14 a large effect. For correlation analyses, we used Pearson’s r as a measure of effect size. According to Cohen (1988), values < 0.10 represent no effect, between 0.10 and 0.30 a small effect, between 0.30 and 0.50 a medium effect, and values > 0.50 a large effect.
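The verbal labels above can be encoded as simple lookup functions. In this Python sketch the function names are hypothetical, and values falling exactly on a boundary (e.g. ηp² = 0.06) are assigned to the higher category, which is an assumption because the text only says “between”.

```python
def label_eta_sq(value):
    """Cohen (1988) benchmarks for (partial) eta squared, as used in the text."""
    if value < 0.01:
        return "no effect"
    if value < 0.06:
        return "small"
    if value < 0.14:
        return "medium"
    return "large"


def label_r(r):
    """Cohen (1988) benchmarks for Pearson's r (sign ignored)."""
    size = abs(r)
    if size < 0.10:
        return "no effect"
    if size < 0.30:
        return "small"
    if size < 0.50:
        return "medium"
    return "large"


# Correlations reported in the results of study 1.
for r in (0.33, 0.55, 0.41):
    print(r, label_r(r))
```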

Prior analyses

Before investigating the study questions, we ensured that PF and DI students did not differ in their reported grades. For this purpose, we conducted a MANOVA with condition as factor and the three grades as dependent variables. There were no significant differences between the two conditions in any of the three reported grades (social sciences: PF M = 2.36, SD = 0.80, DI M = 2.53, SD = 0.98, F(1, 209) = 1.91, p = 0.17, ηp² = 0.009, 90% CI [0.00; 0.04]; German language: PF M = 2.61, SD = 0.84, DI M = 2.63, SD = 0.87, F(1, 209) = 0.02, p = 0.88, ηp² = 0.000, 90% CI [0.000; 0.004]; mathematics: PF M = 2.83, SD = 1.04, DI M = 2.74, SD = 1.11, F(1, 209) = 0.42, p = 0.52, ηp² = 0.002, 90% CI [0.00; 0.02]).
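For an effect with one degree of freedom, partial η² can be recovered from a reported F value as ηp² = F·df_effect / (F·df_effect + df_error). The Python sketch below applies this identity to the F values reported above (the function name is hypothetical); it reproduces the reported point estimates of 0.009, 0.000, and 0.002 up to rounding. The 90% confidence intervals require inverting the noncentral F distribution and are not reproduced here.

```python
def partial_eta_sq(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return f_value * df_effect / (f_value * df_effect + df_error)


# F(1, 209) values for the grade comparisons reported above.
reported_f = {"social sciences": 1.91, "German language": 0.02, "mathematics": 0.42}
for subject, f_value in reported_f.items():
    print(f"{subject}: partial eta^2 = {partial_eta_sq(f_value, 1, 209):.3f}")
```

The same identity applied to the perceived-competence comparisons, F(1, 209) = 18.93 and F(1, 209) = 62.83, yields about 0.08 and 0.23.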

Design features

To investigate design-related question 1, we analyzed whether PF students were able to invent intuitive solution ideas without solving the problem canonically. This indicates whether the problem-solving task met the sweet spot of complexity and addressed PF students’ prior knowledge. The analyses of PF students’ solution ideas (N = 173) reveal that PF groups (n = 44) developed an average of 3.93 (SD = 1.43) solution ideas, and their best solution ideas contained on average 5.07 (SD = 1.29) canonical principles. None of the groups solved the problem canonically, as none of their invented solution attempts contained all eight canonical principles. The highest number of canonical principles was seven, reached by only two PF groups in their best solution idea. Thus, similar to our pilot tests, participants in the PF condition were able to independently generate different solution ideas in small groups without solving the problem canonically. These results suggest that our problem-solving task had an appropriate level of complexity, which is expected to be important for fostering students’ activation of prior knowledge during problem solving.

To investigate whether our instruction fulfilled the prerequisite hypothesized to foster students’ awareness of knowledge limitations and their recognition of the deep features of the targeted learning concept, we analyzed whether PF students’ solution ideas included the typical mistakes that were focused on during instruction (design-related question 2). Descriptive statistics show that the majority of PF solution ideas lacked the four core principles: a pretest was missing in 93% of the generated solution ideas; the principle of systematically varying variables was violated in 82% of all PF student solutions; naming and controlling a particular control variable was absent in 64% of the invented solutions; and again 64% of the ideas did not include a control group. Each of the other four principles (experimental test of learning methods, between-subjects design, posttest, and naming of four learning methods) was included in 50% or more of the generated solutions. The frequencies of these four most frequently missing principles demonstrate that PF students’ solution ideas contained the typical mistakes that formed the basis of the instructional material and that were addressed by the compare-and-contrast method (see Table 3 in the Method section). Therefore, our PF design fulfilled a relevant prerequisite for fostering students’ awareness of knowledge limitations and their recognition of the deep features of the targeted learning concept during instruction.

Activation of prior knowledge and awareness of knowledge limitations

Regarding mechanism-related question 1 (i.e., PF students’ activation of prior knowledge), as in previous PF studies (e.g. Loibl and Rummel 2014b), we calculated correlations between solution quality and learning outcome as well as between solution quantity and learning outcome for the PF condition. The quality of the PF student solutions (number of canonical principles in the best idea) correlated significantly with individual posttest performance (n = 121), r = 0.33 (medium effect), p < 0.001. For this individual-level correlation, each group member was assigned the solution-quality score of his or her group. As the quality of the solutions was assessed on a group level, we also analyzed the correlation between solution quality and learning outcome on a group level, using the mean posttest score of each group (n = 44). The correlation on the group level was also significant, r = 0.55 (large effect), p < 0.001. Regarding the quantity of invented solutions, we found no significant correlation with the learning outcome, either on the individual (r = 0.11, p = 0.24) or on the group level (r = 0.14, p = 0.36). Analyzing the association between students’ learning outcome and the quality or quantity of their generated solutions on both an individual and a group level is in line with methods used in previous PF studies (e.g. Kapur 2012; Loibl and Rummel 2014b). With respect to mechanism-related question 1, these results indicate that PF students were able to activate relevant prior knowledge, as reflected in the correlation of the quality, but not the quantity, of their solution ideas with their posttest performance.

To investigate mechanism-related question 2 (i.e., PF students’ awareness of knowledge limitations), we compared students’ perceived competence between the two conditions by calculating a MANCOVA with the factor condition, an averaged index of the three subject grades as covariate (due to correlations with students’ perceptions), and both measures of perceived competence as dependent variables. The descriptive statistics for students’ perceived competence can be found in Table 7.

For students’ perceived competence after the first learning phase (assessed after problem solving for PF students and after instruction for DI students), the MANCOVA reveals that students in the PF condition reported significantly higher perceived competence than students in the DI condition, F(1, 209) = 18.93, p < 0.001, ηp² = 0.08, 90% CI [0.03; 0.15]. For the second learning phase (assessed after instruction for PF students and after problem solving for DI students), the MANCOVA reveals that students in the PF condition reported significantly lower perceived competence than students in the DI condition, F(1, 209) = 62.83, p < 0.001, ηp² = 0.23, 90% CI [0.15; 0.31]. Correlation analyses show that the learning outcome of PF students correlated significantly and positively with their perceived competence after the second learning phase (r = 0.41, p < 0.001), but not after the first learning phase (r = 0.03, p = 0.76). With respect to mechanism-related question 2, these results indicate that PF students developed an awareness of their competence limitations during the second learning phase, as they reported substantially lower perceived competence than DI students (large effect). This awareness of limited competence after the second learning phase (and prior to the posttest) was apparently realistic and accurate, as indicated by the positive, medium-sized correlation with PF students’ learning outcome. However, PF students did not develop an accurate awareness of their competence limitations during the first learning phase, as they reported higher perceived competence than DI students.

In addition to the previous analyses, we conducted a multiple linear regression analysis to investigate whether PF students’ prior knowledge activation (i.e., the quality and quantity of solution ideas) and their awareness of knowledge limitations (i.e., perceived competence after both learning phases) not only correlated with their learning outcome, but also predicted it. The results of the regression analysis, which are presented in Table 8, are in line with the results of our correlation analyses.

The results demonstrate that while PF students’ solution quality and their perceived competence after the second learning phase (i.e., after instruction) significantly predicted their learning outcome, the quantity of their solution ideas and their perceived competence after the first learning phase (i.e., after problem solving) did not. With respect to students in the DI condition, the results show that only their perceived competence after the first learning phase (i.e., after instruction) significantly predicted their learning outcome. As DI students developed only one solution (namely the canonical solution) during the problem-solving phase after instruction, the quantity of solution ideas was excluded from the analysis.
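The regression summarized in Table 8 predicts the learning outcome from solution quality, solution quantity, and perceived competence after each learning phase. As a minimal sketch of the underlying computation, assuming ordinary least squares with an intercept, the coefficients can be obtained from the normal equations using only the Python standard library. The function name and data layout are hypothetical, and standard errors and significance tests for the coefficients are omitted.

```python
from typing import List


def ols(X: List[List[float]], y: List[float]) -> List[float]:
    """OLS coefficients (intercept first) via the normal equations (X'X) b = X'y."""
    # Prepend a column of ones so the first coefficient is the intercept
    rows = [[1.0] + list(r) for r in X]
    p = len(rows[0])
    # Build X'X and X'y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    # Augmented matrix [X'X | X'y], solved by Gauss-Jordan elimination with partial pivoting
    a = [row[:] + [v] for row, v in zip(xtx, xty)]
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(a[k][col]))
        a[col], a[pivot] = a[pivot], a[col]
        if abs(a[col][col]) < 1e-12:
            raise ValueError("predictors are collinear")
        for k in range(p):
            if k != col:
                factor = a[k][col] / a[col][col]
                a[k] = [x - factor * c for x, c in zip(a[k], a[col])]
    # The left block is now diagonal, so each coefficient is a simple ratio
    return [a[i][p] / a[i][i] for i in range(p)]
```

For the PF condition, each row of `X` would hold one student's predictors (for example solution quality, solution quantity, and the two competence ratings) and `y` the learning outcome; for the DI condition, the quantity column would simply be dropped, mirroring the exclusion described above.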

Deep feature recognition and learning outcome

To investigate mechanism-related question 3 (i.e., PF students’ recognition of deep features), we compared students’ performance on posttest items testing application and transfer abilities between the two conditions. We conducted an ANCOVA with condition as factor, an averaged index of the three subject grades as covariate (because this index correlated with students’ performance on the posttest items assessing application and transfer), and students’ scores on a subset of posttest items as dependent variable. For this purpose, we created a transfer scale from the four tasks (i.e., two unfamiliar application tasks and two analysis/evaluation tasks) that required students to apply the contents of the instruction in novel contexts. The total score of this transfer scale ranged from 0 to 16. Descriptive statistics for students’ performance on posttest items assessing application and transfer, as well as for their total learning outcome, are presented in Table 9.
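An ANCOVA with one covariate and a two-level factor can be expressed as a comparison of two regression models: one containing only the covariate (here, the averaged subject grades) and one that additionally contains the condition. The following standard-library Python sketch illustrates this model comparison under stated assumptions: condition coded 0/1, a single covariate, and homogeneous regression slopes across conditions. It returns only the F statistic and its error degrees of freedom (no p-value), and all function names are illustrative.

```python
from statistics import fmean
from typing import List, Sequence, Tuple


def _centred(values: Sequence[float]) -> List[float]:
    m = fmean(values)
    return [v - m for v in values]


def _sse_one_predictor(x: Sequence[float], y: Sequence[float]) -> float:
    # Residual sum of squares when y is regressed on x alone (intercept included)
    dx, dy = _centred(x), _centred(y)
    sxx = sum(a * a for a in dx)
    sxy = sum(a * b for a, b in zip(dx, dy))
    syy = sum(b * b for b in dy)
    return syy - sxy ** 2 / sxx


def _sse_two_predictors(x1: Sequence[float], x2: Sequence[float], y: Sequence[float]) -> float:
    # Residual sum of squares when y is regressed on x1 and x2 (intercept included);
    # the 2x2 normal equations on centred data are solved with Cramer's rule
    d1, d2, dy = _centred(x1), _centred(x2), _centred(y)
    s11 = sum(a * a for a in d1)
    s22 = sum(b * b for b in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * c for a, c in zip(d1, dy))
    s2y = sum(b * c for b, c in zip(d2, dy))
    syy = sum(c * c for c in dy)
    det = s11 * s22 - s12 ** 2
    if abs(det) < 1e-12:
        raise ValueError("condition and covariate are collinear")
    b1 = (s1y * s22 - s2y * s12) / det
    b2 = (s2y * s11 - s1y * s12) / det
    return syy - (b1 * s1y + b2 * s2y)


def ancova_condition_f(condition, covariate, outcome) -> Tuple[float, int]:
    """F statistic (numerator df = 1) and error df for a 0/1 condition adjusted for one covariate."""
    sse_reduced = _sse_one_predictor(covariate, outcome)            # covariate only
    sse_full = _sse_two_predictors(condition, covariate, outcome)   # covariate + condition
    df_error = len(outcome) - 3  # intercept, condition slope, covariate slope
    f_value = (sse_reduced - sse_full) / (sse_full / df_error)
    return f_value, df_error
```

With this parameterization, a sample of N students yields an error df of N − 3; for N = 212, that gives the 209 error degrees of freedom reported for the other ANCOVA and MANCOVA tests in this section.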

The results of the ANCOVA show a medium-sized main effect of condition on the transfer scale, F(1, 210) = 31.11, p < 0.001, ηp² = 0.13, 90% CI [0.07; 0.20]. Contrary to our expectations, PF students achieved a significantly lower score than DI students (see Table 9). With respect to mechanism-related question 3, these results indicate that PF students did not recognize the deep features of the targeted learning concept during instruction, as they performed significantly worse than DI students on posttest items testing their application and transfer abilities.

To assess differences in the effect of the experimental condition on students’ total learning outcome (main research question), we conducted an ANCOVA with condition as factor and an averaged index of the three subject grades as covariate (because this index correlated with students’ learning outcome). For this analysis, we used the weighted posttest scale (as described in our method section and Online Resource 4) as dependent variable. The ANCOVA yielded a medium-sized main effect of condition on the total learning outcome, F(1, 209) = 27.46, p < 0.001, ηp² = 0.12, 90% CI [0.06; 0.19]. Contrary to our hypothesis, PF students did not outperform DI students; instead, DI students achieved a higher total learning outcome (see Table 9).

Discussion study 1 and post-hoc analyses

Our results suggest that, contrary to our expectations, PF did not have a beneficial effect on students’ learning of social science research methods, namely on learning principles of experimental design (main research question). Contrary to our hypothesis, DI had a greater impact on learning principles of experimental design than PF. This raises the question of how this result can be explained. The analysis of the student-generated solutions within the PF condition indicates that the two major design requirements of the learning environment, which are assumed to facilitate the mechanisms underlying the effectiveness of PF (cf., Loibl et al. 2017), were indeed fulfilled: 1. PF students were able to independently invent different solution ideas to a complex problem that addressed their prior knowledge (design-related question 1). 2. Typical mistakes within the student solutions matched the four principles that were explained during the instruction phase by comparing and contrasting typical student solutions with the canonical solution (design-related question 2). Asking students to invent different solution ideas to a complex problem fostered the PF mechanism of activating relevant prior knowledge, which is reflected in the significant correlation between the quality of PF students’ solution ideas and their learning outcome (mechanism-related question 1). Additionally, confronting students with incorrect solution ideas during the instruction phase facilitated the PF mechanism of developing awareness of knowledge limitations (mechanism-related question 2). This is reflected especially in PF students’ lower perceived competence (i.e., greater awareness of their competence limitations) compared with DI students after the second learning phase, and additionally in the significant positive correlation between this awareness and PF students’ learning outcome (mechanism-related question 2).
Nevertheless, PF students apparently did not recognize the deep features of the targeted learning concept during instruction, as they did not outperform DI students on either the items testing transfer abilities (mechanism-related question 3) or the total learning outcome (main research question). Given that our study implemented all features of the PF design, it is surprising that PF students did not recognize the deep features of the targeted learning concept or, more generally, that we were unable to replicate the PF effect for learning social science research methods (more specifically, principles of experimental design).

A possible explanation for our findings may relate to PF students’ perceived competence prior to instruction. Contrary to our expectation, PF students’ perceived competence after the first learning phase was higher than that of DI students (mechanism-related question 2). Moreover, PF students’ perceived competence decreased between the two learning phases (after the first learning phase: M = 3.53, SD = 0.63; after the second learning phase: M = 3.02, SD = 0.90). This contrasts with the data reported in Loibl and Rummel’s (2015) study in the context of mathematics, in which PF students’ perceived competence was not only lower than that of DI students after both learning phases, but also increased between the two learning phases. It must be noted that the analyses conducted by Loibl and Rummel (2015) did not focus on the development of students’ competence perceptions; thus, our interpretations relate only to their reported descriptive statistics. In our post-hoc analyses, we tested the decrease for significance with a repeated measures ANOVA, which yielded a significant, large effect of time of measurement, F(1, 120) = 23.12, p < 0.001, ηp² = 0.21. As perceived competence after the first learning phase did not correlate with PF students’ learning outcome in either Loibl and Rummel’s (2015) study or our study, we conducted further post-hoc analyses to explore whether the decrease in PF students’ competence perceptions between the two learning phases was related to their learning outcome. To this end, we correlated PF students’ learning outcome with the difference score of their perceived competence between the first and the second learning phase. A positive difference score indicates a decrease (after learning phase 1 > after learning phase 2), and a negative score an increase (after learning phase 1 < after learning phase 2). For PF students, the results revealed a negative, medium-sized association (r = −0.36, p < 0.001).
Accordingly, the more strongly PF students’ perceived competence decreased between the two learning phases (i.e., the larger the positive difference score), the lower their learning outcome. In summary, the results regarding PF students’ perceived competence indicate that PF students did not develop an appropriate awareness of their competence limitations prior to instruction and may have believed that they had already mastered the task. Consequently, PF students might have experienced a frustrating and overwhelming contrast between their self-assessment prior to instruction (regarding the quality of their solutions and their competence) and their experiences during the subsequent instruction phase, in which they recognized their competence limitations.
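The post-hoc analyses above rest on two simple computations: the difference score (phase 1 rating minus phase 2 rating, so that positive values indicate a decrease), and, for a within-subject factor with only two levels, a repeated measures ANOVA whose F(1, n − 1) equals the square of the paired t statistic. A minimal standard-library Python sketch of both, plus the Pearson correlation used to relate the difference score to the learning outcome, is shown below; the function names are illustrative and p-values are omitted.

```python
from math import sqrt
from statistics import fmean, stdev
from typing import List, Sequence, Tuple


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson product-moment correlation of two equally long samples."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def competence_change(phase1: Sequence[float], phase2: Sequence[float]) -> List[float]:
    """Difference scores; positive values mean perceived competence decreased."""
    return [a - b for a, b in zip(phase1, phase2)]


def rm_anova_two_levels_f(phase1: Sequence[float], phase2: Sequence[float]) -> Tuple[float, int]:
    """F(1, n - 1) for a two-level within-subject factor, computed as the squared paired t."""
    d = competence_change(phase1, phase2)
    n = len(d)
    t = fmean(d) / (stdev(d) / sqrt(n))  # paired t on the difference scores
    return t * t, n - 1
```

With per-student ratings for both phases and the posttest scores, `pearson_r(competence_change(p1, p2), outcome)` would correspond to the difference-score correlation described above; a negative value means that larger decreases go along with lower learning outcomes.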

To examine whether these findings, and especially the lack of a PF effect on learning social science research methods, replicate for topics related to social science research methods other than principles of experimental design, our second study investigated the effectiveness of PF for learning to evaluate causal versus correlational evidence. In the following sections, we describe the method and results of the second study before discussing the results of both studies in greater detail.

Method study 2

Participants and learning domain

A total of 152 students participated in all learning and testing phases with written parental consent. The students were 10th graders (age: M = 16.11, SD = 0.90; 65% girls) from seven social science classes at six secondary schools in Germany. The sample size is sufficient to detect small-to-medium effects of η² ≥ 0.05 (f = 0.23, 1 − β = 0.80; G*Power analysis).
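The reported effect size f = 0.23 follows from η² = 0.05 via Cohen's conversion f = √(η² / (1 − η²)), which is the effect-size metric G*Power uses for F tests. The short Python sketch below shows only this conversion (the function name is ours); the power computation itself, which relies on the noncentral F distribution and a significance level not restated here, is not reproduced.

```python
from math import sqrt


def cohens_f_from_eta_squared(eta_sq: float) -> float:
    """Cohen's f = sqrt(eta^2 / (1 - eta^2))."""
    if not 0 <= eta_sq < 1:
        raise ValueError("eta squared must lie in [0, 1)")
    return sqrt(eta_sq / (1 - eta_sq))


print(round(cohens_f_from_eta_squared(0.05), 2))  # 0.23
```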

As in study 1, participants had no or only limited instructional experience with the targeted learning topic prior to the study. In study 2, we selected the following topic related to social science research methods: evaluating causal versus correlational evidence. According to the curriculum, students are expected to be able to interpret empirical data independently, taking certain quality criteria into account, by the end of 11th grade. Thus, as in study 1 and in previous PF studies (e.g., Kapur 2010), the topic had not yet been formally taught to the 10th graders in our study.

Experimental design

As in study 1, we implemented a quasi-experimental between-subjects design and randomly assigned the seven classes to the PF condition (three classes: n = 80) or the DI condition (four classes: n = 72). Whole classes were assigned because of the study setting: as in study 1, the study took place in an interdisciplinary out-of-school lab at a large German university.
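Because the unit of assignment is the class rather than the individual student, the allocation amounts to cluster randomization: whole classes are shuffled and split into the two conditions, and every student inherits the condition of their class. The Python sketch below illustrates this idea under assumptions of our own (hypothetical class labels and a fixed seed for reproducibility); the text does not describe the exact randomization procedure used in the study.

```python
import random
from typing import Dict, List


def assign_classes(class_ids: List[str], n_pf: int, seed: int) -> Dict[str, str]:
    """Randomly assign whole classes to PF or DI; all students in a class share its condition."""
    if not 0 <= n_pf <= len(class_ids):
        raise ValueError("n_pf must be between 0 and the number of classes")
    rng = random.Random(seed)  # seeded so the allocation can be reproduced
    shuffled = class_ids[:]
    rng.shuffle(shuffled)
    # The first n_pf shuffled classes go to PF, the remainder to DI
    return {cid: ("PF" if i < n_pf else "DI") for i, cid in enumerate(shuffled)}


# Hypothetical labels for the seven classes; three go to PF and four to DI
allocation = assign_classes([f"class_{k}" for k in range(1, 8)], n_pf=3, seed=2024)
```

Since students within a class share teachers, peers, and schedules, their outcomes are not fully independent, which is one reason designs that assign intact classes are described as quasi-experimental.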

The design of study 2 closely followed that of study 1: students underwent two learning phases and four test phases. In contrast to study 1, the second learning phase additionally incorporated a practice phase after instruction in the PF condition and after problem solving in the DI condition. The inclusion of a practice phase is in line with the PF design in the classic PF studies by Kapur (2010, 2011, 2012, 2014) and Kapur and Bielaczyc (2011, 2012). See Table 10 for an overview of the experimental design.

Learning materials

To teach students the differences between correlational and causal evidence, we designed two isomorphic problem-solving tasks (i.e., one for the collaborative problem-solving phase and one for the individual practice phase) and an instruction lesson.