Abstract

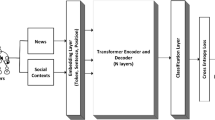

Cybersecurity event detection aims to detect and classify the occurrence of cybersecurity events in large amounts of data. Previous event detection approaches have used trigger word detection as the entry point for the task. However, constructing triggers requires annotators to select, from a large number of event sentences, the words that indicate which type of event occurred, which makes annotating a training corpus time-consuming and expensive. To address this issue, and building on recent advances in contrastive learning for sentence embedding, we propose a novel method called Trigger-free Cybersecurity Event Detection Based on Contrastive Learning (TCEDCL), which incorporates semantics into the representation model to detect events without triggers. To demonstrate the feasibility of TCEDCL, we collected and constructed a dataset from numerous cybersecurity news articles and blog posts. Extensive experiments show that the proposed method performs exceptionally well in detecting cybersecurity events and can be extended to detect new event types.
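TCEDCL builds on contrastive learning for sentence embedding, as in SimCSE (Gao et al. 2021). The exact TCEDCL objective is not given in this section, so the following is only a minimal sketch of the generic InfoNCE-style loss with in-batch negatives that this family of methods uses: each anchor embedding should be more similar to its own positive than to the other positives in the batch. The function names, the use of cosine similarity, and the default temperature of 0.05 (the value reported for SimCSE) are assumptions for illustration, and plain Python lists stand in for encoder outputs.

```python
import math
from typing import List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(anchors: List[Vector], positives: List[Vector],
                  temperature: float = 0.05) -> float:
    """Mean InfoNCE loss with in-batch negatives (illustrative sketch only).

    anchors[i] and positives[i] form a positive pair; every positives[j]
    with j != i acts as a negative for anchors[i].
    """
    if len(anchors) != len(positives):
        raise ValueError("anchors and positives must have the same batch size")
    total = 0.0
    for i, h in enumerate(anchors):
        # Temperature-scaled similarity of anchor i to every candidate.
        logits = [cosine(h, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over the whole batch.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(x - m) for x in logits))
        # Negative log-probability assigned to the true positive.
        total += -(logits[i] - log_denominator)
    return total / len(anchors)
```

When each anchor is paired with a matching positive, the loss is close to zero; pairing each anchor with the wrong positive makes it large, which is the signal that pulls semantically equivalent sentences together in the embedding space.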


References

Liu J, Chen Y, Liu K, Zhao J (2019) Neural cross-lingual event detection with minimal parallel resources. In: Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China. Association for Computational Linguistics, pp. 738–748.

Tong M, Xu B, Wang S, Cao Y, Hou L, Li J, Xie J (2020) Improving event detection via open-domain trigger knowledge. In: Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online. Association for Computational Linguistics, pp. 5887–5897.

Huang L, Ji H (2020) Semi-supervised new event type induction and event detection. In: Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online. Association for Computational Linguistics, pp. 718–724.

Walker C, Strassel S, Medero J, Maeda K (2006) ACE 2005 multilingual training corpus. Linguistic Data Consortium, Philadelphia, 57.

Satyapanich T, Ferraro F, Finin T (2020) CASIE: Extracting cybersecurity event information from text. In: Proceedings of the AAAI Conference on Artificial Intelligence, pp. 8749–8757.

Yagcioglu S, Seyfioglu MS, Citamak B, Bardak B, Guldamlasioglu S, Yuksel A, Tatli EI (2019) Detecting cybersecurity events from noisy short text. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, Minnesota. Association for Computational Linguistics, pp. 1366–1372.

Liu S, Li Y, Zhang F, Yang T, Zhou X (2019) Event detection without triggers. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, Minnesota. Association for Computational Linguistics, pp. 735–744.

Gao T, Yao X, Chen D (2021) SimCSE: Simple contrastive learning of sentence embeddings. In: Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics, pp. 6894–6910.

Wang D, Ding N, Li P, Zheng H (2021) CLINE: Contrastive learning with semantic negative examples for natural language understanding. In: Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online. Association for Computational Linguistics, pp. 2332–2342.

Zhou H, Yin H, Zheng H et al (2020) A survey on multi-modal social event detection. Knowl-Based Syst 195(3):105695

Sakaki T, Okazaki M, Matsuo Y (2010) Earthquake shakes Twitter users: real-time event detection by social sensors. In: Proceedings of the 19th International Conference on World Wide Web (WWW '10).

Signorini A, Segre AM, Polgreen PM (2011) The use of Twitter to track levels of disease activity and public concern in the US during the influenza A H1N1 pandemic. PLoS ONE 6(5):e19467

Lamb A, Paul MJ, Dredze M (2013) Separating fact from fear: Tracking flu infections on Twitter. In: Proceedings of NAACL-HLT, pp. 789–795.

Yagcioglu S, Seyfioglu MS, Citamak B, Bardak B, Guldamlasioglu S, Yuksel A, Tatli EI (2019) Detecting cybersecurity events from noisy short text. In: Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, Minnesota. Association for Computational Linguistics, pp. 1366–1372.

Qiu X, Lin X, Qiu L (2016) Feature representation models for cyber attack event extraction. In: 2016 IEEE/WIC/ACM International Conference on Web Intelligence Workshops (WIW), pp. 29–32. https://doi.org/10.1109/WIW.2016.020.

Ritter A et al (2015) Weakly supervised extraction of computer security events from Twitter. In: Proceedings of the 24th International Conference on World Wide Web. International World Wide Web Conferences Steering Committee, pp. 896–905.

Khandpur RP et al (2017) Crowdsourcing cybersecurity: cyber attack detection using social media. In: Proceedings of the 2017 ACM Conference on Information and Knowledge Management (CIKM), pp. 1049–1057.

Luo N et al (2021) A framework for document-level cybersecurity event extraction from open source data. In: 2021 IEEE 24th International Conference on Computer Supported Cooperative Work in Design (CSCWD), pp. 422–427. https://doi.org/10.1109/CSCWD49262.2021.9437745.

Chandrasekaran D, Mago V (2021) Evolution of semantic similarity—a survey. ACM Comput Surv 54(2): Article 41, 37 pages. https://doi.org/10.1145/3440755.

Kumar P, Rawat P, Chauhan S (2022) Contrastive self-supervised learning: review, progress, challenges and future research directions. International Journal of Multimedia Information Retrieval 11(4):461–488

Gunel B et al (2021) Supervised contrastive learning for pre-trained language model fine-tuning. In: International Conference on Learning Representations (ICLR 2021).

Zeng H, Cui X (2022) SimCLRT: a simple framework for contrastive learning of rumor tracking. Eng Appl Artif Intell 110:104757.

Wang D, Ding N, Li P, Zheng H (2021) CLINE: Contrastive learning with semantic negative examples for natural language understanding. In: Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online. Association for Computational Linguistics, pp. 2332–2342.

Ke Z, Liu B, Xu H, Shu L (2021) CLASSIC: Continual and contrastive learning of aspect sentiment classification tasks. In: Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Online and Punta Cana, Dominican Republic. Association for Computational Linguistics, pp. 6871–6883.

Kim T, Yoo KM, Lee S (2021) Self-guided contrastive learning for BERT sentence representations. In: Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Online. Association for Computational Linguistics, pp. 2528–2540.

Li T, Chen X, Zhang S, Dong Z, Keutzer K (2021) Cross-domain sentiment classification with contrastive learning and mutual information maximization. In: ICASSP 2021 - 2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 8203–8207. https://doi.org/10.1109/ICASSP39728.2021.9414930.

Lan J et al (2021) CLLD: contrastive learning with label distance for text classification. arXiv preprint arXiv:2110.13656.

Wu H, Ma T, Wu L, Manyumwa T, Ji S (2020) Unsupervised reference-free summary quality evaluation via contrastive learning. In: Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online. Association for Computational Linguistics, pp. 3612–3621.

Devlin J, Chang M-W, Lee K, Toutanova K (2019) BERT: Pre-training of deep bidirectional transformers for language understanding. In: Proceedings of NAACL-HLT, pp. 4171–4186.

Srivastava N et al (2014) Dropout: a simple way to prevent neural networks from overfitting. J Mach Learn Res 15(1):1929–1958

Hochreiter S, Schmidhuber J (1997) Long short-term memory. Neural Comput 9(8):1735–1780

Lafferty JD, McCallum A, Pereira FCN (2001) Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In: Proceedings of ICML, pp. 282–289.

Chen Y, Xu L, Liu K, Zeng D, Zhao J (2015) Event extraction via dynamic multi-pooling convolutional neural networks. In: Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), Beijing, China. Association for Computational Linguistics, pp. 167–176.

Wang X, Wang Z, Han X, Jiang W, Han R, Liu Z, Li J, Li P, Lin Y, Zhou J (2020) MAVEN: A massive general domain event detection dataset. In: Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online. Association for Computational Linguistics, pp. 1652–1671.

Wolf T, Debut L, Sanh V, Chaumond J, Delangue C, Moi A, Cistac P, Rault T, Louf R, Funtowicz M, Davison J, Shleifer S, von Platen P, Ma C, Jernite Y, Plu J, Xu C, Le Scao T, Gugger S et al (2020) Transformers: State-of-the-art natural language processing. In: Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations, Online. Association for Computational Linguistics, pp. 38–45.

Cong X, Cui S, Yu B, Liu T, Wang Y, Wang B (2021) Few-shot event detection with prototypical amortized conditional random field. In: Findings of the Association for Computational Linguistics: ACL-IJCNLP 2021, Online. Association for Computational Linguistics, pp. 28–40.

Aghaei E, Niu X, Shadid W et al (2022) SecureBERT: A domain-specific language model for cybersecurity. arXiv preprint arXiv:2204.02685.

Acknowledgements

We greatly appreciate the anonymous reviewers and the associate editor for their valuable, high-quality comments, which helped considerably to improve this article. This research is funded by the National Natural Science Foundation of China (62276091), the Major Public Welfare Project of Henan Province (201300311200), the Shanghai Science and Technology Innovation Action Plan (No. 23ZR1441800 and No. 23YF1426100), the Open Research Fund of the NPPA Key Laboratory of Publishing Integration Development, ECNUP, and the Shanghai Open University project (No. 2022QN001).

Author information


Contributions

Mengmeng Tang performed the experiments and the data analyses and wrote the manuscript; Yuanbo Guo and Han Zhang contributed significantly to the analysis and manuscript preparation; Qingchun Bai contributed to the analysis through constructive discussions. All authors reviewed the manuscript.


Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix


1.1 Cybersecurity event types in TCEDCL

The event types defined in this paper are listed in Table 5. To enrich the set of event types studied, we extend the event types defined in existing research [5]. During data processing, we selected nine types of cybersecurity events with high incidence and significant impact: DataBreach, Phishing, Ransom, DDoSAttack, Malware, SupplyChain, VulnerabilityImpact, VulnerabilityDiscover, and VulnerabilityPatch.
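In a trigger-free setting, a sentence can be annotated with its event types alone, with no trigger span. As a minimal sketch of how the nine types above might be encoded as classification targets, the code below assigns them a fixed order and turns a sentence's annotated types into a multi-hot vector. Whether a sentence may carry more than one type is an assumption here (this section does not say), and the `EventType` member names and the `to_multi_hot` helper are hypothetical, not part of TCEDCL.

```python
from enum import Enum
from typing import Iterable, List


class EventType(Enum):
    """The nine cybersecurity event types listed in the appendix."""
    DATA_BREACH = "DataBreach"
    PHISHING = "Phishing"
    RANSOM = "Ransom"
    DDOS_ATTACK = "DDoSAttack"
    MALWARE = "Malware"
    SUPPLY_CHAIN = "SupplyChain"
    VULNERABILITY_IMPACT = "VulnerabilityImpact"
    VULNERABILITY_DISCOVER = "VulnerabilityDiscover"
    VULNERABILITY_PATCH = "VulnerabilityPatch"


# Enum iteration follows definition order, so index i always maps to the same type.
LABELS: List[EventType] = list(EventType)


def to_multi_hot(names: Iterable[str]) -> List[int]:
    """Encode the event types annotated for one sentence as a 0/1 vector.

    EventType(name) raises ValueError for a name outside the nine types.
    """
    present = {EventType(name) for name in names}
    return [1 if label in present else 0 for label in LABELS]
```

For example, `to_multi_hot(["Phishing", "Malware"])` returns `[0, 1, 0, 0, 1, 0, 0, 0, 0]`, and a sentence with no event yields an all-zero vector. Rejecting unknown names early keeps a typo in the annotations from silently becoming a negative label.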

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Tang, M., Guo, Y., Bai, Q. et al. Trigger-free cybersecurity event detection based on contrastive learning. J Supercomput 79, 20984–21007 (2023). https://doi.org/10.1007/s11227-023-05454-2


DOI: https://doi.org/10.1007/s11227-023-05454-2