Abstract

The COVID-19 pandemic not only affected our health and social life in many respects, but also changed classical classroom training and society's education preferences. As a solution, various e-learning platforms were developed and adopted by many educational institutions, giving individuals the opportunity to experience the advantages of e-learning. Since the COVID-19 pandemic is neither the first nor the last epidemic, e-learning attracts more attention than ever before, and the need for e-learning platforms is expected to grow in the near future. Thus, it is necessary to define all critical success factors that determine the efficiency of e-learning systems. E-learning platforms have disadvantages as well as advantages, and comparing them involves uncertainty and qualitative assessments. A systematic approach should be used to determine the platforms' dimensions, features and the weights of critical criteria. The motivation of our study is to determine the weights of all critical success criteria and to offer a reliable method for evaluating e-learning platforms. In this study, the interval type-2 fuzzy Analytic Hierarchy Process (AHP) was used to compare the critical success factors of e-learning platforms. This is the most comprehensive study to treat all critical success factors of e-learning platforms as a Multi-Criteria Decision-Making (MCDM) problem: 11 criteria and 106 sub-criteria were defined, evaluated and prioritized. This study provides an acceptable rationale for evaluating e-learning platforms, and its results can be used in real-world performance evaluations.


1 Introduction

E-learning is a teaching model that supports access to information via digital platforms or media, mainly based on internet technologies, for sharing academic content. E-learning systems allow users not only to create and share content but also to control other aspects of teaching (tuition fees, grades) and channels of exchange between students (chats, forums, etc.) (Muilenburg & Berge, 2005). E-learning platforms are defined as systems in which students access online resources and curricula, communicate with instructors and receive the instructors' assessments (Bhuasiri et al., 2012). Today, e-learning platforms are preferred because they allow students to continue their education while fulfilling their other responsibilities. However, e-learning platforms have disadvantages as well as advantages: the flexibility they provide in terms of time and space can also become a barrier between teacher and student (Lara et al., 2014).

The market share of e-learning platforms is increasing steadily. Many factors affect the success of a platform and customer satisfaction; among them, the instructor, courses, technology, design and environment affect satisfaction (Sun et al., 2008). The COVID-19 pandemic, which affected social life in many respects, also profoundly changed society's education preferences, and higher education institutions needed to reevaluate their teaching techniques. In this context, the assessment of e-learning portals has become a necessity (Ouajdouni et al., 2021). Evaluating e-learning systems is multidisciplinary and involves its own difficulties (Roffe, 2002). A systematic approach should be used to determine the platforms' dimensions, features and critical criteria (Ouadoud et al., 2016). Selecting or comparing e-learning portals is a complex process and, at the same time, a multi-criteria decision-making (MCDM) problem (Gong et al., 2021).

The features of e-learning platforms or learning management systems (LMS) have been studied only superficially in the literature, and it has been reported that interfaces and models should be improved for comparisons (Buendia & Hervas, 2006). COVID-19 is neither the first nor the last pandemic, and interest in e-learning platforms is expected to increase in the coming years, driven by the possible risk of disease, the growing advantages of distance education and the prospect that the quality of the education offered can equal that of face-to-face education. The motivation of our study is to determine the weights of all critical success criteria and to offer a reliable method for evaluating e-learning platforms. This study consists of five sections: the literature review is given in the second section; the evaluation criteria, type-2 fuzzy sets and F-AHP in the third; the findings and obtained weights in the fourth; and the results and future work in the fifth. This is the most comprehensive study to treat all critical success factors of e-learning platforms as an MCDM problem: the 11 criteria and 106 sub-criteria in Table 1 were defined, evaluated and prioritized. All of these criteria and sub-criteria were determined and defined through an in-depth literature survey. The originality and value of this paper lie in defining all critical factors for e-learning platforms, ranking these factors, revealing the most important ones and, for the first time, using MCDM methods to evaluate these effectiveness factors.

2 Literature review

Today, e-learning platforms attract more attention than ever before. The quality of education on these platforms is determined by their technical features and the pedagogical method used; for this reason, the educational strategies of the platforms should be considered (Begičević et al., 2007). E-learning should be based on pedagogy, and the behaviourist, cognitive and constructivist approaches have been used to compare some e-learning platforms (Moedritscher, 2006). The increase in the usage rate of e-learning platforms depends on the richness of the portal's content. The creation and reusability of learning content are ensured by effective content libraries (Yigit et al., 2014). Increased multimedia use, high media update speed and short waiting times increase students' attention (Lin et al., 2011). The framework (portal) also increases learning effectiveness across all learning branches (Sahasrabudhe & Kanungo, 2014). Different multimedia content is used to support learning on e-learning platforms. A quality model has been proposed to determine the importance of factors that affect learning, such as media loss, video stream quality, download time, file size, user control, timeliness and user-friendliness (Jeong & Yeo, 2014). Developing an e-learning platform according to students' needs with a student-oriented approach was shown to increase efficiency (Dominici & Palumbo, 2013).

Three important features of e-learning platforms have been defined: structure, media and communication capabilities. The factors affecting the design of e-learning platforms are theoretical orientation, learning objectives, content, student characteristics and technological ability (Susan & Kenneth, 2000). Furthermore, there is a significant relationship between individual differences, academic achievement and the usability of an e-learning portal (Karahoca & Karahoca, 2009). The purpose of educational platforms is to ensure that students acquire the skills they need and increase their level of knowledge. However, each student's capabilities and needs are different. Thus, e-learning systems should adapt to various student needs and present information in different formats accordingly (Leka et al., 2016).

Multi-Criteria Decision-Making (MCDM) methods have been used in the literature to compare and evaluate e-learning platforms, with the main components of the platforms used as comparison criteria. These components are digital libraries of educational resources and services, learning objects and virtual learning environments (Kurilovas & Dagiene, 2009). The availability of online discussions is one of the essential factors for benchmarking e-learning platforms (Ng & Murphy, 2005). Furthermore, learning effectiveness evaluation is vital in comparing learning platforms. A hybrid MCDM evaluation method combining the Analytic Hierarchy Process (AHP) and the fuzzy integral method has been employed to consider simultaneously the interactive relationships between the criteria and the vagueness of subjective perception. The relationships between the criteria were examined by factor analysis and the Decision Making Trial and Evaluation Laboratory (DEMATEL) method (Tzeng et al., 2007).

Hwang et al. employed an integrated group decision approach combining fuzzy theory with grey system theory to evaluate e-learning platforms (Hwang et al., 2004). In addition, e-learning platforms were compared using consistent fuzzy preference relations (Chao & Chen, 2009). The Proximity Indexed Value (PIV) method, a multi-criteria decision-making method, has been developed to compare these platforms. The Vise Kriterijumska Optimizacija I Kompromisno Resenje (VIKOR) and Complex Proportional ASsessment (COPRAS) methods were also employed for platform evaluations (Khan et al., 2019). Moreover, a combination of AHP and Quality Function Deployment (QFD) was used to evaluate e-learning systems (Xu et al., 2009). A combination of the fuzzy-logic-based Kirkpatrick model and the layered evaluation framework PeRSIVA has been proposed to evaluate e-learning methods (Chrysafiadi & Virvou, 2013). Other parameters used to compare e-learning platforms include adaptability, customization and extensibility, where adaptation is defined as the student's adaptation to the flow of the course (Graf & List, 2005). In addition, self-learning evaluation using AHP has been recommended for comparing e-learning platforms, with learning behavior, cooperation and communication, resource use and learning effect as the AHP criteria (Chen & Yang, 2010; Mingli & Yihui, 2010). Comparisons of educational platforms should focus on educational as well as technical issues (Martin et al., 2008). AHP and artificial neural networks were used to compare the quality and learning efficiency of e-learning platforms (Chen & Fu, 2010). A primitive cognitive network process, which considers multiple criteria and alternatives, has been proposed for selecting an e-learning platform and was compared with AHP (Yuen, 2012).

The implementation of e-learning platforms has also been examined to compare their performance. Success factors at the implementation stage were investigated using AHP with group decision-making and F-AHP (Naveed et al., 2020). AHP diagrams were used to evaluate the learning effectiveness of web-based e-learning platforms (Murakoshi et al., 2001). Key factors affecting e-learning were examined with AHP (Qin & Zhang, 2008). AHP was used to study students' adoption of e-learning platforms, with 33 different factors examined; factors such as cost, quality, agility, timing control, degree certification and personal demands have been reported to have a significant impact on individuals' adoption of e-learning platforms (Zhang et al., 2010). A methodology has been developed to determine the difficulty levels of an e-learning platform, in which linear programming (LP) was used to evaluate the difficulty level of the questions (Matsatsinis & Fortsas, 2005). Association rules with F-AHP were used to evaluate the application score and the interactive learning process on e-learning platforms (Wang & Lin, 2012). Quality function deployment (QFD) and fuzzy linear regression were used for e-learning platform selection (Alptekin & Karsak, 2011). MCDM was used to determine the quality of learning material on e-learning platforms (Kurilovas & Dagienė, 2009). Factors affecting the successful implementation of e-learning platforms were identified by AHP (Lo et al., 2011). Fuzzy mathematics was used to determine the factors affecting the effectiveness of e-learning platforms (Bo et al., 2009). AHP and F-AHP have been widely used to set criteria priorities when comparing e-learning platforms (Alptekin & Karsak, 2011; Bo et al., 2009; Chen & Fu, 2010; Lo et al., 2011; Martin et al., 2008; Murakoshi et al., 2001; Naveed et al., 2020; Qin & Zhang, 2008; Wang & Lin, 2012; Yuen, 2012). Other methods used for prioritizing e-learning criteria include DEMATEL, PeRSIVA, LP, QFD, PIV and VIKOR (Chrysafiadi & Virvou, 2013; Kurilovas & Dagienė, 2009; Matsatsinis & Fortsas, 2005). Previous studies examined the student's adaptation to the platform, the success factors for implementing e-learning platforms and the weights of education-related criteria (Graf & List, 2005; Naveed et al., 2020; Zhang et al., 2010).

In this study, 11 criteria (Adaptation, Framework, Function properties, Security, Content, Collaboration & Communication, Quality, Learning, Assessment and evaluation, Technical Specifications, Support) and 106 sub-criteria were defined to evaluate e-learning platforms. The weights of the main criteria and sub-criteria were determined using interval type-2 fuzzy AHP. This is the most comprehensive study on this issue, with 11 criteria and 106 sub-criteria for evaluating e-learning platforms.

3 Material and method

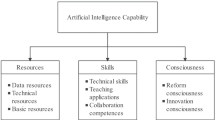

The motivation of our study is to determine the weights of all critical success criteria and to offer a reliable method for evaluating e-learning platforms. The decision model was structured hierarchically, as shown in Fig. 1, to prioritize all critical success factors for e-learning platforms. At the first level, the goal of this study is defined as determining the priorities of the critical success factors in e-learning systems. The criteria handled in this study are C1-adaptation, C2-framework, C3-function, C4-security, C5-content, C6-collaboration & communication, C7-quality, C8-learning, C9-assessment and evaluation, C10-technical specifications and C11-management support. The vagueness and subjectivity of trainees and trainers are taken into account through linguistic variables represented by interval-valued trapezoidal fuzzy numbers.

3.1 Evaluation Criteria

In this study, the 11 criteria and 106 sub-criteria in Table 1 for comparing e-learning platforms were defined, evaluated and prioritized. All of these criteria and sub-criteria were determined and defined through an in-depth literature survey. The criteria are adaptation (C1), framework (C2), function (C3), security (C4), content (C5), collaboration and communication (C6), quality (C7), learning (C8), assessment and evaluation (C9), technical specifications (C10) and support (C11).
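As a quick consistency check on the hierarchy, the sketch below encodes the 11 criteria together with the number of sub-criteria listed for each in the definitions that follow, and confirms that they add up to the 106 sub-criteria stated here. The dictionary layout and the helper name `sub_criterion_codes` are our own illustrative choices (Python 3.9+ assumed). The concatenated codes simply mirror the labels used in the text, which is why a sub-criterion such as C11-compatibility shares its code with criterion C11-support.

```python
# Decision hierarchy of Section 3.1: criterion code -> (name, number of sub-criteria).
# The counts are read off the sub-criterion lists given in the text.
CRITERIA: dict[str, tuple[str, int]] = {
    "C1": ("adaptation", 4),
    "C2": ("framework", 15),
    "C3": ("function", 9),
    "C4": ("security", 4),
    "C5": ("content", 18),
    "C6": ("collaboration and communication", 11),
    "C7": ("quality", 9),
    "C8": ("learning", 8),
    "C9": ("assessment and evaluation", 10),
    "C10": ("technical specifications", 11),
    "C11": ("support", 7),
}


def sub_criterion_codes(criterion: str) -> list[str]:
    """Return codes such as C21 ... C215, following the paper's concatenated numbering."""
    _, count = CRITERIA[criterion]
    return [f"{criterion}{k}" for k in range(1, count + 1)]


total = sum(count for _, count in CRITERIA.values())
print(len(CRITERIA), total)  # 11 106
print(sub_criterion_codes("C2")[-1])  # C215
```

Keeping the counts in one table makes it easy to see that the largest pairwise comparison matrices belong to C5-content (18 sub-criteria) and C2-framework (15).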

The C1-adaptation criterion is based on 4 sub-criteria, namely C11-compatibility, C12-extendibility, C13-customization and C14-adaptability. C11-compatibility means a high correlation between user needs and system features in terms of learning material and framework. C12-extendibility means that the architecture and content are designed to accommodate subsequent needs. C13-customization means that users can customize the platform according to their needs. C14-adaptability means that the platform can be adapted to user needs.

The C2-framework criterion is based on 15 sub-criteria: C21-warning/message system, C22-ease of understanding, C23-clear navigation, C24-attractive interface, C25-graphics layout, C26-easy participation, C27-offline resource, C28-interface customization, C29-computer knowledge, C210-user friendly, C211-learning objectives, C212-usability, C213-easy data access, C214-color match and C215-ergonomic. C21-warning/message system means that warning and error messages guide the user with clear and understandable instructions. C22-ease of understanding means that the labels and routing in the interface are clear and understandable. C23-clear navigation means that the menus and subpage hierarchy within the portal are clear and understandable. C24-attractive interface is a platform design that is engaging, simple and focused on the learning material. C25-graphics layout refers to the placement of figures, tables and videos on the screen. C26-easy participation denotes that joining a training is straightforward and involves no complicated links. C27-offline resource means that students have offline access to pre-downloaded resources. C28-interface customization means that the interface can be customized according to the user's needs. C29-computer knowledge is the level of computer literacy the user needs to use the portal. C210-user friendly means that the portal has an easy-to-understand, easy-to-use and attractive interface that provides a good user experience. C211-learning objectives is the ability of users to achieve the intended learning goals of the course and training. C212-usability means that the portal has no overlooked design-related errors or omissions. C213-easy data access means that educators can share additional data and documents along with the course content, and students can easily access the content provided. C214-color match means that the portal design uses harmonious, well-combined colors. C215-ergonomic means that the portal is designed according to ergonomic criteria.

The C3-function criterion is based on 9 sub-criteria: C31-evaluation architecture, C32-user control, C33-search, C34-learning history, C35-progress control, C36-application architecture, C37-counsellor, C38-discussion and C39-additive content. C31-evaluation architecture means that a new evaluation process can be designed for a training. C32-user control is the student's ability to organize learning activities. C33-search is the ability to search for trainings/content on the portal with different parameters. C34-learning history means that the student can see completed trainings and share the system certificates. C35-progress control denotes that the student can see his or her progress in the trainings received. C36-application architecture is the student's/trainer's ability to define predecessor or successor relationships between trainings specifically for the student. C37-counsellor means that the student can access an advisor online 24/7. C38-discussion indicates that technical tools allow students to open a new topic within the portal and share their ideas. C39-additive content means that trainers can add teaching materials (media, pictures, documents) to the portal.

The C4-security criterion is based on 4 sub-criteria: C41-logging, C42-authorization based access, C43-password and C44-assessment & evaluation. C41-logging is the registration of user access information. C42-authorization based access means that users have different menu groups and data authorizations according to the authority they hold. C43-password means that the portal has strong password management. C44-assessment & evaluation refers to ensuring the security of assessment and evaluation (exams, tests).

The C5-content criterion is based on 18 sub-criteria: C51-material, C52-content, C53-presentation, C54-engaging content, C55-practice/test, C56-interactive mode, C57-functional content, C58-current content, C59-right content, C510-applications, C511-guide material, C512-ease of use, C513-perspective, C514-instructional design, C515-pedagogical content, C516-transnational curriculum, C517-approved curriculum and C518-material quantity. C51-material means that the portal has active and lively multimedia training material. C52-content means controlling the information on the portal and assuring its quality through standards. C53-presentation refers to supervising the presentation of information so that it meets certain standard requirements. C54-engaging content means attracting the student's attention with rich multimedia content and contributing positively to learning. C55-practice/test means that the portal has good practice and test material. C56-interactive mode denotes that the portal has a learning-based interactive mode. C57-functional content means that the same educational content can be used by different educational programs. C58-current content refers to keeping the training material up to date. C59-right content means checking the accuracy of the training material. C510-applications refers to including practical educational applications. C511-guide material means that guide materials are available on the platform. C512-ease of use means that the learning material is fit for use. C513-perspective means that learning activities follow a systematic perspective. C514-instructional design means that instructional management follows a particular methodology and design. C515-pedagogical content means that pedagogical factors are taken into account in the teaching method. C516-transnational curriculum means including transnational curriculum topics. C517-approved curriculum means that the platform has an assessment tool and a curriculum approved by the country's education authority. C518-material quantity means that the platform has an acceptable amount of content.

The C6-collaboration & communication criterion is based on 11 sub-criteria: C61-information sharing, C62-interoperability, C63-discussion, C64-announcement, C65-dialogue, C66-forum, C67-attendance, C68-collaboration, C69-mail/messages, C610-conversation and C611-question sharing. C61-information sharing means that the platform provides opportunities for mutual communication. C62-interoperability means offering the possibility of working together through remote access. C63-discussion is the ability to open a discussion topic and share ideas within the platform. C64-announcement is the ability to provide one-way communication from trainers and managers. C65-dialogue is the opportunity to have a conversation with the trainer during the training. C66-forum is the ability of trainees to express their views on a topic that concerns the learning community. C67-attendance is the ability to list meeting participants. C68-collaboration means providing opportunities for students to work together on a particular learning topic. C69-mail/messages is the possibility of receiving personal mail and messages within the platform. C610-conversation is the possibility of text chat during training. C611-question sharing is the opportunity to share questions between trainers and trainees.

The C7-quality criterion is based on 9 sub-criteria: C71-integrity, C72-education quality, C73-instructor quality, C74-satisfaction measurement, C75-material and content, C76-reliability, C77-download resources, C78-documentation and C79-standard. C71-integrity means that the training materials complement each other. C72-education quality means that the platform has a quality internal control system. C73-instructor quality means that trainers receive standardization training at certain intervals. C74-satisfaction measurement is the measurement of student/learning satisfaction. C75-material and content is the control of content quality. C76-reliability means that the number of unplanned system failures is at an acceptable level. C77-download resources means that the amount of downloadable documentation and content and the streaming speed are sufficient. C78-documentation is the platform's system usage documentation. C79-standard means that the platform complies with national and international education standards.

The C8-learning criterion is based on 8 sub-criteria: C81-organization, C82-access, C83-source, C84-threshing, C85-re-access, C86-process control, C87-feedback and C88-encourage. C81-organization means that the course objects are organized. C82-access means that the training content is accessible at different times and places. C83-source means that the platform provides access to libraries related to the subject. C84-threshing means that the training method is enriched with online training materials. C85-re-access means that there is no possibility of regaining access to training material already studied/used. C86-process control is the portal's ability to track the student's development. C87-feedback is a timely response and feedback from the learning counsellor. C88-encourage means that students are encouraged by the trainer to take part in discussion and give feedback.

The C9-assessment and evaluation criterion is based on 10 sub-criteria: C91-different exam mode, C92-result record, C93-rating, C94-progress follow-up, C95-experience observation, C96-exam level, C97-progress control, C98-recording, C99-process control and C910-transfer. C91-different exam mode means that measurement can be made with different techniques and methods. C92-result record is the recording of assessment scores. C93-rating is the ability to rate trainees. C94-progress follow-up is the student's ability to follow his or her development. C95-experience observation means that the trainer cannot observe the learner's experiences. C96-exam level refers to exams that assess the learning level. C97-progress control is the trainee's ability to control learning progress. C98-recording is the portal's ability to record learning performance. C99-process control is the learner's ability to control the learning process. C910-transfer is the transfer of knowledge gained during the process between the student and the teacher.

The C10-technical specifications criterion is based on 11 sub-criteria: C101-payment, C102-style, C103-connection, C104-update, C105-hierarchical structure, C106-language support, C107-adaptation, C108-access speed, C109-compatibility, C1010-mobile support and C1011-verification. C101-payment means that fees can be paid by alternative methods. C102-style means that the interface style is selectable. C103-connection means that the platform's internet connection is stable. C104-update means that the platform is maintained and updated regularly. C105-hierarchical structure means that the sections and subsections of the tutorials and pages are clearly defined. C106-language support means that the platform has multi-language support. C107-adaptation means that feedback and adaptation are possible during training. C108-access speed means that the speed of accessing the content is sufficient. C109-compatibility is the matching of metadata to content. C1010-mobile support means that the platform supports mobile systems. C1011-verification means that the platform verifies data transmission.

The C11-support criterion is based on 7 sub-criteria: C111-budget support, C112-profile, C113-institutionalism, C114-reward system, C115-planning, C116-admin and C117-equipment support. C111-budget support is the provision of necessary financial support by senior management, or access to financial resources. C112-profile is personal user profile and account management. C113-institutionalism means that the unit organizing the training is institutionalized. C114-reward system means that there is a reward mechanism (e.g., certificates) for learning activities. C115-planning is the alignment of platform plans and activities. C116-admin means that the system has an application manager responsible for operation and maintenance. C117-equipment support means that the information technology equipment is sufficient.

3.2 Interval type-2 fuzzy sets

In this section, interval type-2 fuzzy sets are briefly explained and some definitions are given (Çalık & Paksoy, 2017; Kahraman et al., 2014).

Definition 1

A type-2 fuzzy set \(\tilde{\tilde{A}}\) in the universe of discourse \(X\) can be represented by a type-2 membership function \(\mu_{\tilde{\tilde{A}}}\) as follows:

$$\tilde{\tilde{A}}=\left\{\left((x,u),\mu_{\tilde{\tilde{A}}}(x,u)\right)\ \middle|\ \forall x\in X,\ \forall u\in J_x\subseteq[0,1],\ 0\le\mu_{\tilde{\tilde{A}}}(x,u)\le 1\right\}$$

where \(J_x\) denotes an interval in \([0,1]\). The type-2 fuzzy set \(\tilde{\tilde{A}}\) may also be expressed as:

$$\tilde{\tilde{A}}=\int_{x\in X}\int_{u\in J_x}\mu_{\tilde{\tilde{A}}}(x,u)\big/(x,u)$$

where \(J_x\subseteq[0,1]\) and \(\int\int\) denotes the union over all admissible values of \(x\) and \(u\).

Definition 2

If all \(\mu_{\tilde{\tilde{A}}}(x,u)=1\), then \(\tilde{\tilde{A}}\) is called an interval type-2 fuzzy set. An interval type-2 fuzzy set is thus a special case of a type-2 fuzzy set and can be described as:

$$\tilde{\tilde{A}}=\int_{x\in X}\int_{u\in J_x}1\big/(x,u)$$

where \(J_x\subseteq[0,1]\).

Definition 3

The upper and lower membership functions of an interval type-2 fuzzy set are both type-1 membership functions. Chen and Lee (2010) presented an approach for using interval type-2 fuzzy sets to solve fuzzy multi-criteria group decision-making problems. In this technique, an interval type-2 fuzzy set is described by the reference points and the heights of its upper and lower membership functions. A trapezoidal interval type-2 fuzzy set is represented as:

$$\tilde{\tilde{A}}_i=\left(\tilde{A}_i^{U},\tilde{A}_i^{L}\right)=\left(\left(a_{i1}^{U},a_{i2}^{U},a_{i3}^{U},a_{i4}^{U};H_1(\tilde{A}_i^{U}),H_2(\tilde{A}_i^{U})\right),\left(a_{i1}^{L},a_{i2}^{L},a_{i3}^{L},a_{i4}^{L};H_1(\tilde{A}_i^{L}),H_2(\tilde{A}_i^{L})\right)\right)$$

where \(\tilde{A}_i^{U}\) and \(\tilde{A}_i^{L}\) are type-1 fuzzy sets; \(a_{i1}^{U},a_{i2}^{U},a_{i3}^{U},a_{i4}^{U}\) and \(a_{i1}^{L},a_{i2}^{L},a_{i3}^{L},a_{i4}^{L}\) are the reference points of the interval type-2 fuzzy set \(\tilde{\tilde{A}}_i\); \(H_j(\tilde{A}_i^{U})\) denotes the membership value of the element \(a_{i(j+1)}^{U}\) in the upper trapezoidal membership function \(\tilde{A}_i^{U}\), \(1\le j\le 2\); \(H_j(\tilde{A}_i^{L})\) denotes the membership value of the element \(a_{i(j+1)}^{L}\) in the lower trapezoidal membership function \(\tilde{A}_i^{L}\), \(1\le j\le 2\); \(H_1(\tilde{A}_i^{U}),H_2(\tilde{A}_i^{U}),H_1(\tilde{A}_i^{L}),H_2(\tilde{A}_i^{L})\in[0,1]\); and \(1\le i\le n\).
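A minimal Python representation of the trapezoidal interval type-2 fuzzy set defined above might look as follows. The class names `Trapezoid` and `IT2FS` and the checks in `is_well_formed` are illustrative assumptions: the definition itself only requires the heights to lie in [0, 1], while the ordering of the reference points and the nesting of the lower function inside the upper one are conventional sanity conditions added here. The numbers are illustrative and are not the scale in Table 2.

```python
from typing import NamedTuple


class Trapezoid(NamedTuple):
    """Type-1 trapezoidal membership function (a1, a2, a3, a4; H1, H2)."""
    a1: float
    a2: float
    a3: float
    a4: float
    h1: float  # membership value of a2, i.e. H1
    h2: float  # membership value of a3, i.e. H2


class IT2FS(NamedTuple):
    """Trapezoidal interval type-2 fuzzy set stored as an (upper, lower) pair."""
    upper: Trapezoid
    lower: Trapezoid


def is_well_formed(a: IT2FS) -> bool:
    """Assumed sanity checks: ordered reference points, heights in [0, 1],
    and a lower function whose support and heights sit inside the upper one."""
    for t in (a.upper, a.lower):
        if not t.a1 <= t.a2 <= t.a3 <= t.a4:
            return False
        if not (0.0 <= t.h1 <= 1.0 and 0.0 <= t.h2 <= 1.0):
            return False
    u, lo = a.upper, a.lower
    return u.a1 <= lo.a1 and lo.a4 <= u.a4 and lo.h1 <= u.h1 and lo.h2 <= u.h2


# Illustrative values only (not the paper's Table 2 scale).
example = IT2FS(Trapezoid(3, 4, 6, 7, 1.0, 1.0), Trapezoid(3.5, 4.5, 5.5, 6.5, 0.8, 0.8))
print(is_well_formed(example))  # True
```

Using an immutable `NamedTuple` keeps each fuzzy judgement hashable and prevents accidental in-place edits while matrices of judgements are being assembled.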

Definition 4

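For two trapezoidal interval type-2 fuzzy sets \(\tilde{\tilde{A}}_1\) and \(\tilde{\tilde{A}}_2\) written as in Definition 3, the addition operator is defined as:

$$\begin{aligned}\tilde{\tilde{A}}_1\oplus\tilde{\tilde{A}}_2=\Big(&\big(a_{11}^{U}+a_{21}^{U},\,a_{12}^{U}+a_{22}^{U},\,a_{13}^{U}+a_{23}^{U},\,a_{14}^{U}+a_{24}^{U};\,\min(H_1(\tilde{A}_1^{U}),H_1(\tilde{A}_2^{U})),\,\min(H_2(\tilde{A}_1^{U}),H_2(\tilde{A}_2^{U}))\big),\\&\big(a_{11}^{L}+a_{21}^{L},\,a_{12}^{L}+a_{22}^{L},\,a_{13}^{L}+a_{23}^{L},\,a_{14}^{L}+a_{24}^{L};\,\min(H_1(\tilde{A}_1^{L}),H_1(\tilde{A}_2^{L})),\,\min(H_2(\tilde{A}_1^{L}),H_2(\tilde{A}_2^{L}))\big)\Big)\end{aligned}$$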
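The addition rule can be sketched in a few lines of Python, assuming each trapezoid is stored as a plain tuple `(a1, a2, a3, a4, H1, H2)` and an interval type-2 set as an `(upper, lower)` pair. Following the rule used by Kahraman et al. (2014), reference points are added position by position and each height is the minimum of the two corresponding heights. The function names and operands are hypothetical.

```python
# A trapezoid is (a1, a2, a3, a4, H1, H2); an interval type-2 set is (upper, lower).
Trap = tuple[float, float, float, float, float, float]
IT2 = tuple[Trap, Trap]


def _add_trap(x: Trap, y: Trap) -> Trap:
    # Reference points are added pointwise; each height is the minimum of the two.
    return (x[0] + y[0], x[1] + y[1], x[2] + y[2], x[3] + y[3],
            min(x[4], y[4]), min(x[5], y[5]))


def it2_add(a: IT2, b: IT2) -> IT2:
    """A1 (+) A2: the trapezoid rule applied to the upper and lower functions separately."""
    return (_add_trap(a[0], b[0]), _add_trap(a[1], b[1]))


# Illustrative operands, not values from the paper.
a1 = ((1, 2, 4, 5, 1.0, 1.0), (1.5, 2.5, 3.5, 4.5, 0.8, 0.8))
a2 = ((3, 4, 6, 7, 1.0, 1.0), (3.5, 4.5, 5.5, 6.5, 0.9, 0.9))
print(it2_add(a1, a2))
# ((4, 6, 10, 12, 1.0, 1.0), (5.0, 7.0, 9.0, 11.0, 0.8, 0.8))
```

Taking the minimum height is what keeps the sum from claiming more certainty than the less certain of its two operands.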

Definition 5

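Subtraction of two trapezoidal interval type-2 fuzzy sets is defined as:

$$\begin{aligned}\tilde{\tilde{A}}_1\ominus\tilde{\tilde{A}}_2=\Big(&\big(a_{11}^{U}-a_{24}^{U},\,a_{12}^{U}-a_{23}^{U},\,a_{13}^{U}-a_{22}^{U},\,a_{14}^{U}-a_{21}^{U};\,\min(H_1(\tilde{A}_1^{U}),H_1(\tilde{A}_2^{U})),\,\min(H_2(\tilde{A}_1^{U}),H_2(\tilde{A}_2^{U}))\big),\\&\big(a_{11}^{L}-a_{24}^{L},\,a_{12}^{L}-a_{23}^{L},\,a_{13}^{L}-a_{22}^{L},\,a_{14}^{L}-a_{21}^{L};\,\min(H_1(\tilde{A}_1^{L}),H_1(\tilde{A}_2^{L})),\,\min(H_2(\tilde{A}_1^{L}),H_2(\tilde{A}_2^{L}))\big)\Big)\end{aligned}$$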
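Subtraction pairs each reference point of the first set with the opposite reference point of the second set (a1 with b4, a2 with b3, and so on), so the result stays ordered. The sketch below assumes the same hypothetical tuple layout `(a1, a2, a3, a4, H1, H2)` for each trapezoid and an `(upper, lower)` pair per set; the operands are illustrative.

```python
# A trapezoid is (a1, a2, a3, a4, H1, H2); an interval type-2 set is (upper, lower).
Trap = tuple[float, float, float, float, float, float]
IT2 = tuple[Trap, Trap]


def _sub_trap(x: Trap, y: Trap) -> Trap:
    # Cross-wise differences (a1 - b4, a2 - b3, a3 - b2, a4 - b1) keep the result ordered;
    # each height is the minimum of the two corresponding heights.
    return (x[0] - y[3], x[1] - y[2], x[2] - y[1], x[3] - y[0],
            min(x[4], y[4]), min(x[5], y[5]))


def it2_sub(a: IT2, b: IT2) -> IT2:
    """A1 (-) A2 for trapezoidal interval type-2 fuzzy sets."""
    return (_sub_trap(a[0], b[0]), _sub_trap(a[1], b[1]))


# Illustrative operands, not values from the paper.
a1 = ((3, 4, 6, 7, 1.0, 1.0), (3.5, 4.5, 5.5, 6.5, 0.8, 0.8))
a2 = ((1, 2, 4, 5, 1.0, 1.0), (1.5, 2.5, 3.5, 4.5, 0.9, 0.9))
print(it2_sub(a1, a2))
# ((-2, 0, 4, 6, 1.0, 1.0), (-1.0, 1.0, 3.0, 5.0, 0.8, 0.8))
```

As in ordinary fuzzy arithmetic, subtracting a set from itself does not give a crisp zero: for `a1` above, `it2_sub(a1, a1)` has the upper function (-4, -2, 2, 4), a set spread around zero.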

Definition 6

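The multiplication of two trapezoidal interval type-2 fuzzy sets is defined as:

$$\begin{aligned}\tilde{\tilde{A}}_1\otimes\tilde{\tilde{A}}_2=\Big(&\big(a_{11}^{U}\times a_{21}^{U},\,a_{12}^{U}\times a_{22}^{U},\,a_{13}^{U}\times a_{23}^{U},\,a_{14}^{U}\times a_{24}^{U};\,\min(H_1(\tilde{A}_1^{U}),H_1(\tilde{A}_2^{U})),\,\min(H_2(\tilde{A}_1^{U}),H_2(\tilde{A}_2^{U}))\big),\\&\big(a_{11}^{L}\times a_{21}^{L},\,a_{12}^{L}\times a_{22}^{L},\,a_{13}^{L}\times a_{23}^{L},\,a_{14}^{L}\times a_{24}^{L};\,\min(H_1(\tilde{A}_1^{L}),H_1(\tilde{A}_2^{L})),\,\min(H_2(\tilde{A}_1^{L}),H_2(\tilde{A}_2^{L}))\big)\Big)\end{aligned}$$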
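Multiplication multiplies reference points position by position and again takes the minimum of the heights. The pointwise rule keeps the result ordered only when all reference points are positive, which holds for AHP judgement scales; the sketch below therefore rejects non-positive inputs, a guard that is our own assumption. Tuple layout, names and operands are hypothetical.

```python
# A trapezoid is (a1, a2, a3, a4, H1, H2); an interval type-2 set is (upper, lower).
Trap = tuple[float, float, float, float, float, float]
IT2 = tuple[Trap, Trap]


def _mul_trap(x: Trap, y: Trap) -> Trap:
    # Pointwise products are only order-preserving for positive reference points.
    if min(x[:4]) <= 0 or min(y[:4]) <= 0:
        raise ValueError("reference points must be positive")
    return (x[0] * y[0], x[1] * y[1], x[2] * y[2], x[3] * y[3],
            min(x[4], y[4]), min(x[5], y[5]))


def it2_mul(a: IT2, b: IT2) -> IT2:
    """A1 (x) A2 for trapezoidal interval type-2 fuzzy sets with positive reference points."""
    return (_mul_trap(a[0], b[0]), _mul_trap(a[1], b[1]))


# Illustrative operands, not values from the paper.
a1 = ((1, 2, 4, 5, 1.0, 1.0), (1.5, 2.5, 3.5, 4.5, 0.8, 0.8))
a2 = ((3, 4, 6, 7, 1.0, 1.0), (3.5, 4.5, 5.5, 6.5, 0.9, 0.9))
print(it2_mul(a1, a2))
# ((3, 8, 24, 35, 1.0, 1.0), (5.25, 11.25, 19.25, 29.25, 0.8, 0.8))
```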

Definition 7

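The arithmetic operations between a trapezoidal interval type-2 fuzzy set and a scalar \(k\) are defined as:

$$\begin{aligned}k\tilde{\tilde{A}}_1=\Big(&\big(k\times a_{11}^{U},\,k\times a_{12}^{U},\,k\times a_{13}^{U},\,k\times a_{14}^{U};\,H_1(\tilde{A}_1^{U}),\,H_2(\tilde{A}_1^{U})\big),\\&\big(k\times a_{11}^{L},\,k\times a_{12}^{L},\,k\times a_{13}^{L},\,k\times a_{14}^{L};\,H_1(\tilde{A}_1^{L}),\,H_2(\tilde{A}_1^{L})\big)\Big)\end{aligned}$$

$$\begin{aligned}\frac{\tilde{\tilde{A}}_1}{k}=\Big(&\big(\tfrac{1}{k}\times a_{11}^{U},\,\tfrac{1}{k}\times a_{12}^{U},\,\tfrac{1}{k}\times a_{13}^{U},\,\tfrac{1}{k}\times a_{14}^{U};\,H_1(\tilde{A}_1^{U}),\,H_2(\tilde{A}_1^{U})\big),\\&\big(\tfrac{1}{k}\times a_{11}^{L},\,\tfrac{1}{k}\times a_{12}^{L},\,\tfrac{1}{k}\times a_{13}^{L},\,\tfrac{1}{k}\times a_{14}^{L};\,H_1(\tilde{A}_1^{L}),\,H_2(\tilde{A}_1^{L})\big)\Big)\end{aligned}$$

where \(k>0\).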
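Scalar multiplication and division only rescale the reference points and leave both heights unchanged, which the sketch below expresses with one hypothetical helper, `_map_refs`. The tuple layout and the example set are illustrative assumptions, and the `k > 0` check mirrors the condition on \(k\) in the definition.

```python
from typing import Callable

# A trapezoid is (a1, a2, a3, a4, H1, H2); an interval type-2 set is (upper, lower).
Trap = tuple[float, float, float, float, float, float]
IT2 = tuple[Trap, Trap]


def _map_refs(a: IT2, f: Callable[[float], float]) -> IT2:
    # Apply f to the four reference points of both functions; heights are unchanged.
    upper, lower = a
    return (
        (f(upper[0]), f(upper[1]), f(upper[2]), f(upper[3]), upper[4], upper[5]),
        (f(lower[0]), f(lower[1]), f(lower[2]), f(lower[3]), lower[4], lower[5]),
    )


def scalar_mul(a: IT2, k: float) -> IT2:
    """k * A for k > 0."""
    if k <= 0:
        raise ValueError("k must be positive")
    return _map_refs(a, lambda x: k * x)


def scalar_div(a: IT2, k: float) -> IT2:
    """A / k for k > 0, i.e. every reference point multiplied by 1/k."""
    if k <= 0:
        raise ValueError("k must be positive")
    return _map_refs(a, lambda x: x / k)


# Illustrative set, not a value from the paper.
a1 = ((1, 2, 4, 5, 1.0, 1.0), (1.5, 2.5, 3.5, 4.5, 0.8, 0.8))
print(scalar_mul(a1, 2))  # ((2, 4, 8, 10, 1.0, 1.0), (3.0, 5.0, 7.0, 9.0, 0.8, 0.8))
print(scalar_div(a1, 2))  # ((0.5, 1.0, 2.0, 2.5, 1.0, 1.0), (0.75, 1.25, 1.75, 2.25, 0.8, 0.8))
```

Dividing directly by `k` rather than multiplying by a precomputed `1 / k` avoids one extra rounding step in floating point.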

3.3 Interval Type-2 Fuzzy AHP

We employed interval type-2 fuzzy AHP to evaluate our criteria. Kahraman et al. (2014) extended Buckley's (1985) fuzzy AHP approach from type-1 fuzzy sets to interval type-2 fuzzy sets (Buckley, 1985; Çalık & Paksoy, 2017; Kahraman et al., 2014). The AHP technique was developed by Saaty (1980), and since then it has been used to solve, or help solve, decision-making problems in various fields, such as risk assessment (Adem et al., 2018), green ergonomics (Adem et al., 2021), occupational health and safety (Adem et al., 2020), machine selection (Özceylan et al., 2016), site selection (Paul et al., 2021), vendor selection (Gernowo & Surarso, 2021), data intelligence implementation (Merhi, 2021) and green energy (Asadi & Pourhossein, 2021).

The process for evaluating e-learning platform factors with interval type-2 fuzzy sets is presented in Fig. 2. The steps of the method are outlined as follows (Kahraman et al., 2014; Çalık & Paksoy, 2017):


Step 1: Determine the criteria, sub-criteria and alternatives of the decision-making problem. (A decision-making problem may contain all or only some of these elements.)


Step 2: Table 2 lists the linguistic variables and their associated interval type-2 fuzzy scales. Fuzzy pairwise comparison matrices are generated from the linguistic judgements, as shown in Eq. (1):

$$\tilde{\tilde{A}}=\begin{bmatrix}1 & \tilde{\tilde{a}}_{12} & \cdots & \tilde{\tilde{a}}_{1n}\\ \tilde{\tilde{a}}_{21} & 1 & \cdots & \tilde{\tilde{a}}_{2n}\\ \vdots & \vdots & \ddots & \vdots\\ \tilde{\tilde{a}}_{n1} & \tilde{\tilde{a}}_{n2} & \cdots & 1\end{bmatrix}=\begin{bmatrix}1 & \tilde{\tilde{a}}_{12} & \cdots & \tilde{\tilde{a}}_{1n}\\ 1/\tilde{\tilde{a}}_{12} & 1 & \cdots & \tilde{\tilde{a}}_{2n}\\ \vdots & \vdots & \ddots & \vdots\\ 1/\tilde{\tilde{a}}_{1n} & 1/\tilde{\tilde{a}}_{2n} & \cdots & 1\end{bmatrix}\tag{1}$$

where the reciprocal of a judgement is

$$1/\tilde{\tilde{a}}=\left(\left(\frac{1}{a_{14}^{U}},\frac{1}{a_{13}^{U}},\frac{1}{a_{12}^{U}},\frac{1}{a_{11}^{U}};H_1(a_{12}^{U}),H_2(a_{13}^{U})\right),\left(\frac{1}{a_{24}^{L}},\frac{1}{a_{23}^{L}},\frac{1}{a_{22}^{L}},\frac{1}{a_{21}^{L}};H_1(a_{22}^{L}),H_2(a_{23}^{L})\right)\right)$$
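Only the judgements above the diagonal of Eq. (1) need to be elicited; the rest of the matrix follows from reciprocity. The sketch below fills an n x n matrix from such judgements, assuming a `(upper, lower)` pair of `(a1, a2, a3, a4, H1, H2)` tuples per entry, 0-based indices, and a crisp "equal importance" diagonal with full heights. These choices, the names `reciprocal` and `pairwise_matrix`, and the example values are illustrative, not taken from the paper. The reciprocal follows the formula under Eq. (1), which inverts and reverses the reference points while keeping H1 and H2.

```python
# A trapezoid is (a1, a2, a3, a4, H1, H2); an interval type-2 set is (upper, lower).
Trap = tuple[float, float, float, float, float, float]
IT2 = tuple[Trap, Trap]

# Assumed diagonal entry: crisp "equal importance" with full heights.
ONE: IT2 = ((1.0, 1.0, 1.0, 1.0, 1.0, 1.0), (1.0, 1.0, 1.0, 1.0, 1.0, 1.0))


def reciprocal(a: IT2) -> IT2:
    """1/a: invert and reverse the reference points, keep the heights as given."""
    def inv(t: Trap) -> Trap:
        return (1 / t[3], 1 / t[2], 1 / t[1], 1 / t[0], t[4], t[5])
    return (inv(a[0]), inv(a[1]))


def pairwise_matrix(n: int, judgements: dict[tuple[int, int], IT2]) -> list[list[IT2]]:
    """Fill the reciprocal matrix of Eq. (1) from judgements a_ij with i < j (0-based)."""
    if len(judgements) != n * (n - 1) // 2:
        raise ValueError("expected one judgement for every pair i < j")
    m = [[ONE] * n for _ in range(n)]
    for (i, j), a in judgements.items():
        if not 0 <= i < j < n:
            raise ValueError(f"invalid index pair {(i, j)}")
        m[i][j] = a
        m[j][i] = reciprocal(a)
    return m


# Illustrative 2 x 2 case (values not taken from the paper).
a01 = ((1.0, 2.0, 4.0, 8.0, 1.0, 1.0), (2.0, 2.0, 4.0, 4.0, 0.8, 0.8))
m = pairwise_matrix(2, {(0, 1): a01})
print(m[1][0])  # ((0.125, 0.25, 0.5, 1.0, 1.0, 1.0), (0.25, 0.25, 0.5, 0.5, 0.8, 0.8))
```

Requiring exactly n(n - 1)/2 judgements with valid index pairs guarantees that every off-diagonal cell is filled, so no comparison silently defaults to equal importance.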


Step 3: If more than one expert takes part in the decision-making process, the experts' judgements are aggregated using the geometric mean, which is calculated as follows:

$$\tilde{\tilde{r}}_{i}=\left[\tilde{\tilde{a}}_{i1}\otimes\tilde{\tilde{a}}_{i2}\otimes\cdots\otimes\tilde{\tilde{a}}_{in}\right]^{1/n}\tag{2}$$

where

$$\sqrt[n]{\tilde{\tilde{a}}_{ij}}=\left(\left(\sqrt[n]{a_{ij1}^{U}},\sqrt[n]{a_{ij2}^{U}},\sqrt[n]{a_{ij3}^{U}},\sqrt[n]{a_{ij4}^{U}};H_{1}^{U}(a_{ij}),H_{2}^{U}(a_{ij})\right),\left(\sqrt[n]{a_{ij1}^{L}},\sqrt[n]{a_{ij2}^{L}},\sqrt[n]{a_{ij3}^{L}},\sqrt[n]{a_{ij4}^{L}};H_{1}^{L}(a_{ij}),H_{2}^{L}(a_{ij})\right)\right)$$
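The geometric mean in Eq. (2) can be sketched in Python as below. The sketch assumes the usual interval type-2 trapezoidal arithmetic (e.g. Chen & Lee, 2010), in which multiplication multiplies reference points component-wise and takes the minimum of the heights. The same function can serve both for aggregating several experts' judgements on one comparison (n = number of experts) and for the row geometric mean of Step 4 (n = number of criteria). The class and function names and the two judgements are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Trap:
    a: tuple    # reference points (a1, a2, a3, a4)
    h1: float   # height H1 at a2
    h2: float   # height H2 at a3


@dataclass(frozen=True)
class IT2:
    upper: Trap  # upper membership function
    lower: Trap  # lower membership function


def _mul(s: Trap, t: Trap) -> Trap:
    # Component-wise product of reference points; heights take the minimum
    return Trap(tuple(x * y for x, y in zip(s.a, t.a)), min(s.h1, t.h1), min(s.h2, t.h2))


def _root(t: Trap, n: int) -> Trap:
    # n-th root of every reference point; heights are unchanged
    return Trap(tuple(x ** (1.0 / n) for x in t.a), t.h1, t.h2)


def geometric_mean(values: list) -> IT2:
    """[a_1 (x) a_2 (x) ... (x) a_n]^(1/n) for interval type-2 trapezoidal numbers."""
    n = len(values)
    up, lo = values[0].upper, values[0].lower
    for v in values[1:]:
        up, lo = _mul(up, v.upper), _mul(lo, v.lower)
    return IT2(_root(up, n), _root(lo, n))


# Two hypothetical expert judgements on the same pairwise comparison
e1 = IT2(Trap((1, 2, 4, 5), 1, 1), Trap((1.2, 2.2, 3.8, 4.8), 0.8, 0.8))
e2 = IT2(Trap((4, 5, 7, 8), 1, 1), Trap((4.2, 5.2, 6.8, 7.8), 0.9, 0.9))
g = geometric_mean([e1, e2])
print(g.upper.a[0], g.lower.h1)  # 2.0 0.8
```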

Step 4: The fuzzy weight of each criterion is calculated. First, the geometric mean of each row, \(\tilde{\tilde{r}}_{i}\), is computed with Eq. (2). The fuzzy weight of criterion \(i\), \(\tilde{\tilde{p}}_{i}\), is then calculated as follows:

$$\tilde{\tilde{p}}_{i}=\tilde{\tilde{r}}_{i}\otimes\left[\tilde{\tilde{r}}_{1}\oplus\tilde{\tilde{r}}_{2}\oplus\cdots\oplus\tilde{\tilde{r}}_{n}\right]^{-1}\tag{3}$$

where

$$\begin{aligned}\frac{\tilde{\tilde{a}}}{\tilde{\tilde{b}}}=\Big(&\Big(\frac{a_{1}^{U}}{b_{4}^{U}},\frac{a_{2}^{U}}{b_{3}^{U}},\frac{a_{3}^{U}}{b_{2}^{U}},\frac{a_{4}^{U}}{b_{1}^{U}};\min\big(H_{1}^{U}(a),H_{1}^{U}(b)\big),\min\big(H_{2}^{U}(a),H_{2}^{U}(b)\big)\Big),\\&\Big(\frac{a_{1}^{L}}{b_{4}^{L}},\frac{a_{2}^{L}}{b_{3}^{L}},\frac{a_{3}^{L}}{b_{2}^{L}},\frac{a_{4}^{L}}{b_{1}^{L}};\min\big(H_{1}^{L}(a),H_{1}^{L}(b)\big),\min\big(H_{2}^{L}(a),H_{2}^{L}(b)\big)\Big)\Big)\end{aligned}$$
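Step 4 can be sketched as follows. Rather than forming the inverse explicitly, the code divides each row mean by the sum of all row means using the division rule stated under Eq. (3). Addition is assumed to be component-wise with minimum heights, as in the interval type-2 trapezoidal arithmetic of Chen & Lee (2010). All names and the two row means are hypothetical.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass(frozen=True)
class Trap:
    a: tuple    # reference points (a1, a2, a3, a4)
    h1: float   # height H1 at a2
    h2: float   # height H2 at a3


@dataclass(frozen=True)
class IT2:
    upper: Trap
    lower: Trap


def _add(s: Trap, t: Trap) -> Trap:
    # Component-wise sum of reference points; heights take the minimum (assumed rule)
    return Trap(tuple(x + y for x, y in zip(s.a, t.a)), min(s.h1, t.h1), min(s.h2, t.h2))


def _div(s: Trap, t: Trap) -> Trap:
    # (a1/b4, a2/b3, a3/b2, a4/b1; min heights), the division rule under Eq. (3)
    return Trap(tuple(x / y for x, y in zip(s.a, reversed(t.a))), min(s.h1, t.h1), min(s.h2, t.h2))


def fuzzy_weights(row_means: list) -> list:
    """p_i = r_i (x) [r_1 (+) ... (+) r_n]^-1, computed as r_i / (r_1 + ... + r_n)."""
    total_u = reduce(_add, (r.upper for r in row_means))
    total_l = reduce(_add, (r.lower for r in row_means))
    return [IT2(_div(r.upper, total_u), _div(r.lower, total_l)) for r in row_means]


# Two hypothetical row geometric means
r1 = IT2(Trap((1, 2, 3, 4), 1, 1), Trap((1.5, 2, 3, 3.5), 0.9, 0.9))
r2 = IT2(Trap((1, 1, 1, 1), 1, 1), Trap((1, 1, 1, 1), 1, 1))
p = fuzzy_weights([r1, r2])
print(p[0].upper.a)  # (0.2, 0.5, 1.0, 2.0)
```

Pairing a_1 with b_4 (and so on) gives the widest possible quotient, which is why the upper trapezoid of a fuzzy weight can span well beyond its crisp counterpart before defuzzification.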

Step 5: Because the computed weights are interval type-2 fuzzy numbers, they must be defuzzified. The defuzzification is based on the following formula:

$$DTraT=\frac{\left[\dfrac{(u_{U}-l_{U})+(\beta_{U}\cdot m_{1U}-l_{U})+(\alpha_{U}\cdot m_{2U}-l_{U})}{4}+l_{U}\right]+\left[\dfrac{(u_{L}-l_{L})+(\beta_{L}\cdot m_{1L}-l_{L})+(\alpha_{L}\cdot m_{2L}-l_{L})}{4}+l_{L}\right]}{2}\tag{4}$$
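Eq. (4) translates directly into a small Python function. Each trapezoid is passed as (l, m1, m2, u, beta, alpha). Since β and α are not defined here, the sketch assumes, following the pairing in the formula, that β is the height at m1 (H_1) and α the height at m2 (H_2). When both heights equal 1, each bracket reduces to (l + m1 + m2 + u)/4, the mean of the four reference points, which gives a quick sanity check. The function name `dtrat` and the example numbers are hypothetical.

```python
def dtrat(upper: tuple, lower: tuple) -> float:
    """Defuzzify an interval type-2 trapezoidal number with Eq. (4).

    upper, lower: (l, m1, m2, u, beta, alpha). Assumption: beta is the height at m1
    and alpha the height at m2 of the respective trapezoid.
    """
    def bracket(l, m1, m2, u, beta, alpha):
        return ((u - l) + (beta * m1 - l) + (alpha * m2 - l)) / 4 + l

    # Average of the upper and lower bracket terms
    return (bracket(*upper) + bracket(*lower)) / 2


# Hypothetical fuzzy weight: upper (1, 2, 3, 4; 1, 1), lower (1.5, 2, 3, 3.5; 0.9, 0.9)
print(dtrat((1, 2, 3, 4, 1, 1), (1.5, 2, 3, 3.5, 0.9, 0.9)))  # approximately 2.4375
```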

4 Results and discussion

In this section, the criteria that affect the success of e-learning systems were prioritized using the interval type-2 fuzzy AHP technique. Eleven criteria and 106 sub-criteria were determined through an in-depth literature survey; they are listed in Table 1. The joint fuzzy evaluations of a team of experts and the calculation details of the 11 criteria weights are shown in Tables 3, 4, 5 and 6.

Equation (2) was used to compute the geometric mean of each criterion. To illustrate, the calculation of the geometric mean of the first criterion is shown as follows:

Once the geometric means of all main criteria have been obtained, the fuzzy weight of each criterion can be computed. Table 4 shows the geometric mean of each criterion.

Equation (3) was used to compute the fuzzy weights of the criteria. To illustrate, the calculation of the fuzzy weight of the first criterion is shown as follows:

Table 5 shows the fuzzy weights of the criteria. Because these weights are interval type-2 fuzzy numbers, they must be defuzzified.

The fuzzy weights were defuzzified using Eq. (4). Table 6 shows the defuzzified and normalized weights of the main criteria.
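The text does not state how the defuzzified weights were normalized. A common choice, assumed in the sketch below, is to divide each crisp weight by the sum of all crisp weights so that the weights add up to 1. The function name, criterion labels and crisp values are hypothetical.

```python
def normalize(crisp: dict) -> dict:
    """Scale defuzzified weights so that they sum to 1 (assumed sum-normalization)."""
    total = sum(crisp.values())
    return {name: value / total for name, value in crisp.items()}


# Hypothetical defuzzified weights for three criteria
weights = normalize({"C1": 2.0, "C2": 1.0, "C3": 1.0})
print(weights)  # {'C1': 0.5, 'C2': 0.25, 'C3': 0.25}
```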

The same steps were repeated for all sub-criteria to obtain their local weights. Table 7 shows the global weights of the sub-criteria, obtained by multiplying each sub-criterion's local weight by the weight of its main criterion.
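The global-weight calculation combines two levels of the hierarchy. The sketch below multiplies each sub-criterion's local weight by its main criterion's weight and ranks the results, in the manner of Table 9. The function name, labels and numbers are hypothetical and are not the values reported in Tables 6 and 7.

```python
def global_weights(main: dict, local: dict) -> list:
    """Return (sub-criterion, main weight x local weight) pairs, highest first."""
    pairs = [
        (sub, main[crit] * w)
        for crit, subs in local.items()
        for sub, w in subs.items()
    ]
    return sorted(pairs, key=lambda p: p[1], reverse=True)


# Hypothetical two-level hierarchy: main-criterion weights and local sub-criterion weights
main = {"C1": 0.6, "C2": 0.4}
local = {"C1": {"C11": 0.7, "C12": 0.3}, "C2": {"C21": 0.5, "C22": 0.5}}
ranking = global_weights(main, local)
for sub, w in ranking:
    print(sub, round(w, 3))
```

If the main weights and each group of local weights sum to 1, the global weights also sum to 1, so sub-criteria from different groups can be ranked on one scale.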

According to the weights, the three most important main criteria are C9-Assessment and Evaluation, C1-Adaptation and C4-Security, with weights of 0.214, 0.138 and 0.129, respectively. The ranked weights of the 11 criteria are presented in Table 8. According to the global weights, the ten most important sub-criteria are C44-assessment & evaluation security, C910-transfer, C11-compatibility, C91-different exam mode, C14-adaptability, C92-result record, C84-threshing, C41-logging, C12-extendibility and C13-customization. The ranked global weights of the 106 sub-criteria are presented in Table 9.

The most important criterion, “C9-assessment and evaluation”, reflects the fact that students' levels of knowledge and ability vary, so their achievements in e-learning should be measured with sufficiently accurate techniques at different stages of the learning process. The second most important criterion, “C1-adaptation”, implies that users' needs, the architecture and the framework should be considered together. The third, “C4-security”, indicates that user information, authorization-based access and the security of the assessment and evaluation system must be taken into account.

The most important sub-criterion according to global weight, “C44-assessment & evaluation security”, emphasizes performing complete and inclusive assessment and evaluation processes and taking anti-cheating precautions. The second, “C910-transfer”, refers to the transfer of knowledge gained during the process between students and teachers. The third, “C11-compatibility”, reflects the fit between user needs and system features in terms of learning material and framework. The fourth, “C91-different exam mode”, means that the level of learning can be measured with different techniques and methods. The fifth, “C14-adaptability”, means that platforms should be customizable to user needs.

5 Conclusion

E-learning is a teaching model that supports access to information via digital platforms or media. Distance education comprises learning and knowledge-management activities carried out through internet technologies. E-learning platforms now attract more attention because of the COVID-19 pandemic, during which many individuals experienced the advantages of these platforms for themselves. Demand for e-learning platforms from users and educational institutions has increased, and many different e-learning systems have been developed. The main problem in this area is that evaluating these platforms involves uncertainty and qualitative judgements. A further problem is the need to re-examine the evaluation factors as platforms change and develop.

In this study, the criteria for evaluating e-learning platforms were determined through a comprehensive literature review, and the interval type-2 fuzzy analytic hierarchy process was used to compare and weight them. Eleven criteria and 106 sub-criteria were determined to evaluate e-learning platforms. According to the weights, the three most important main criteria are C9-Assessment and Evaluation, C1-Adaptation and C4-Security, with weights of 0.214, 0.138 and 0.129, respectively. According to the global weights, the ten most important sub-criteria are C44-assessment & evaluation security, C910-transfer, C11-compatibility, C91-different exam mode, C14-adaptability, C92-result record, C84-threshing, C41-logging, C12-extendibility and C13-customization.

This study provides an acceptable rationale for evaluating e-learning platforms. Its results can be used in real-world performance evaluations of various e-learning platforms, and the effectiveness of different platforms can be compared using the factor weights obtained here.

References

Adem, A., Çakıt, E., & Dağdeviren, M. (2020). Occupational health and safety risk assessment in the domain of Industry 4.0. SN Applied Sciences, 2(5), 1–6.

Adem, A., Çakıt, E., & Dağdeviren, M. (2021). A fuzzy decision-making approach to analyze the design principles for green ergonomics. Neural Computing and Applications, 1–12.

Adem, A., Çolak, A., & Dağdeviren, M. (2018). An integrated model using SWOT analysis and Hesitant fuzzy linguistic term set for evaluation occupational safety risks in life cycle of wind turbine. Safety Science, 106, 184–190.

Alptekin, S. E., & Karsak, E. E. (2011). An integrated decision framework for evaluating and selecting e-learning products. Applied Soft Computing, 11(3), 2990–2998. https://doi.org/10.1016/j.asoc.2010.11.023

Asadi, M., & Pourhossein, K. (2021). Wind farm site selection considering turbulence intensity. Energy, 236, 121480.

Begičević, N., Divjak, B., & Hunjak, T. (2007). Prioritization of e-learning forms: a multicriteria methodology. Central European Journal of Operations Research, 15(4), 405–419. https://doi.org/10.1007/s10100-007-0039-6

Bhuasiri, W., Xaymoungkhoun, O., Zo, H., Rho, J. J., & Ciganek, A. P. (2012). Critical success factors for e-learning in developing countries: A comparative analysis between ICT experts and faculty. Computers & Education, 58(2), 843–855. https://doi.org/10.1016/j.compedu.2011.10.010

Bo, L., Xuning, P., & Bingquan, B. (2009, 30-31 May 2009). Modeling of network education effectiveness evaluation in fuzzy analytic hierarchy process. 2009 International Conference on Networking and Digital Society.

Buckley, J. J. (1985). Fuzzy hierarchical analysis. Fuzzy Sets and Systems, 17(3), 233–247. https://doi.org/10.1016/0165-0114(85)90090-9

Buendia, F., & Hervas, A. (2006, 5-7 July 2006). An evaluation framework for e-learning platforms based on educational standard specifications. Sixth IEEE International Conference on Advanced Learning Technologies (ICALT'06).

Çalık, A., & Paksoy, T. (2017). Third-Party Reverse Logistics (3PTL) Company Selection with Interval Type-2 Fuzzy AHP. Selçuk Üniversitesi Sosyal Bilimler Meslek Yüksekokulu Dergisi, 20(1), 52–67.

Chao, R.-J., & Chen, Y.-H. (2009). Evaluation of the criteria and effectiveness of distance e-learning with consistent fuzzy preference relations. Expert Systems with Applications, 36(7), 10657–10662. https://doi.org/10.1016/j.eswa.2009.02.047

Chen, M., & Fu, Y. (2010, 16-18 April 2010). Comprehensive evaluation of teaching websites based on intelligence methods. 2010 2nd IEEE International Conference on Information Management and Engineering.

Chen, S. M., & Lee, L. W. (2010). Fuzzy multiple attributes group decision-making based on the interval type-2 TOPSIS method. Expert Systems with Applications, 37, 2790–2798.

Chen, Y., & Yang, M. (2010, 17-19 Sept. 2010). Study and construct online self-learning evaluation system model based on AHP method. 2010 2nd IEEE International Conference on Information and Financial Engineering.

Chrysafiadi, K., & Virvou, M. (2013). PeRSIVA: An empirical evaluation method of a student model of an intelligent e-learning environment for computer programming. Computers & Education, 68, 322–333. https://doi.org/10.1016/j.compedu.2013.05.020

Dominici, G., & Palumbo, F. (2013). How to build an e-learning product: Factors for student/customer satisfaction. Business Horizons, 56(1), 87–96. https://doi.org/10.1016/j.bushor.2012.09.011

Gernowo, R., & Surarso, B. (2021). Fuzzy-AHP MOORA approach for vendor selection applications. Register: Jurnal Ilmiah Teknologi Sistem Informasi, 8(1), 24–37.

Gong, J.-W., Liu, H.-C., You, X.-Y., & Yin, L. (2021). An integrated multi-criteria decision making approach with linguistic hesitant fuzzy sets for E-learning website evaluation and selection. Applied Soft Computing, 102, 107118. https://doi.org/10.1016/j.asoc.2021.107118

Graf, S., & List, B. (2005, 5-8 July 2005). An evaluation of open source e-learning platforms stressing adaptation issues. Fifth IEEE International Conference on Advanced Learning Technologies (ICALT'05).

Hwang, G.-J., Huang, T. C. K., & Tseng, J. C. R. (2004). A group-decision approach for evaluating educational web sites. Computers & Education, 42(1), 65–86. https://doi.org/10.1016/S0360-1315(03)00065-4

Jeong, H.-Y., & Yeo, S.-S. (2014). The quality model for e-learning system with multimedia contents: a pairwise comparison approach. Multimedia Tools and Applications, 73(2), 887–900. https://doi.org/10.1007/s11042-013-1445-5

Kahraman, C., Öztayşi, B., Uçal Sarı, İ, & Turanoğlu, E. (2014). Fuzzy analytic hierarchy process with interval type-2 fuzzy sets. Knowledge-Based Systems, 59, 48–57. https://doi.org/10.1016/j.knosys.2014.02.001

Karahoca, D., & Karahoca, A. (2009). Assessing effectiveness of the cognitive abilities and individual differences on e-learning portal usability evaluation. Procedia - Social and Behavioral Sciences, 1(1), 368–380. https://doi.org/10.1016/j.sbspro.2009.01.068

Khan, N. Z., Ansari, T. S. A., Siddiquee, A. N., & Khan, Z. A. (2019). Selection of E-learning websites using a novel Proximity Indexed Value (PIV) MCDM method. Journal of Computers in Education, 6(2), 241–256. https://doi.org/10.1007/s40692-019-00135-7

Kurilovas, E., & Dagiene, V. (2009). Learning objects and virtual learning environments technical evaluation criteria. Electronic Journal of e-Learning, 7(2), 127–136.

Kurilovas, E., & Dagienė, V. (2009). Multiple criteria comparative evaluation of E-learning systems and components. Informatica, 20, 499–518.

Lara, J. A., Lizcano, D., Martínez, M. A., Pazos, J., & Riera, T. (2014). A system for knowledge discovery in e-learning environments within the European Higher Education Area – Application to student data from Open University of Madrid, UDIMA. Computers & Education, 72, 23–36. https://doi.org/10.1016/j.compedu.2013.10.009

Leka, L., Kika, A., & Greca, S. (2016). Adaptivity In E-learning Systems. RTA-CSIT-2016.

Lin, R. J., Chen, H. P., & Tseng, M. L. (2011). Evaluating the effectiveness of e-learning system in uncertainty. Industrial Management & Data Systems, 111(6), 869–889. https://doi.org/10.1108/02635571111144955

Lo, T.-S., Chang, T.-H., Shieh, L.-F., & Chung, Y.-C. (2011). Key factors for efficiently implementing customized e-learning system in the service industry. Journal of Systems Science and Systems Engineering, 20(3), 346. https://doi.org/10.1007/s11518-011-5173-y

Martin, L., Martínez, D., Revilla, O., Aguilar, M., Santos, O. C., & Boticario, J. G. (2008). Usability in e-learning platforms: heuristics comparison between Moodle, Sakai and dotLRN.

Matsatsinis, N. F., & Fortsas, V. C. (2005). A multicriteria methodology for the assessment of distance education trainees. Operational Research, 5(3), 419–433. https://doi.org/10.1007/BF02941129

Merhi, M. I. (2021). Evaluating the critical success factors of data intelligence implementation in the public sector using analytical hierarchy process. Technological Forecasting and Social Change, 173, 121180.

Mingli, Y., & Yihui, C. (2010, 22-24 Oct. 2010). The research of evaluation system model of web self-learning based on AHP method and the system implement. 2010 International Conference on Computer Application and System Modeling (ICCASM 2010).

Moedritscher, F. (2006). e-Learning theories in practice: A comparison of three methods. Journal of Universal Science and Technology of Learning (JUSTL), 10, 3–18.

Muilenburg, L. Y., & Berge, Z. L. (2005). Student barriers to online learning: A factor analytic study. Distance Education, 26(1), 29–48. https://doi.org/10.1080/01587910500081269

Murakoshi, H., Kawarasaki, T., & Ochimizu, K. (2001, 8-12 Jan. 2001). Comparison using AHP Web-based learning with classroom learning. Proceedings 2001 Symposium on Applications and the Internet Workshops (Cat. No.01PR0945).

Naveed, Q. N., Qureshi, M. R. N., Tairan, N., Mohammad, A., & Shaikh, A. (2020). Evaluating critical success factors in implementing E-learning system using multi-criteria decision-making. PLoS ONE, 15(5), e0231465. https://doi.org/10.1371/journal.pone.0231465

Ng, K. C., & Murphy, D. (2005). Evaluating interactivity and learning in computer conferencing using content analysis techniques. Distance Education, 26(1), 89–109. https://doi.org/10.1080/01587910500081327

Ouadoud, M., Chkouri, M. Y., Nejjari, A., & Kadiri, K. E. E. (2016, 24-26 Oct. 2016). Studying and comparing the free e-learning platforms. 2016 4th IEEE International Colloquium on Information Science and Technology (CiSt).

Ouajdouni, A., Chafik, K., & Boubker, O. (2021). Measuring e-learning systems success: Data from students of higher education institutions in Morocco. Data in Brief, 35, 106807. https://doi.org/10.1016/j.dib.2021.106807

Özceylan, E., Kabak, M., & Dağdeviren, M. (2016). A fuzzy-based decision making procedure for machine selection problem. Journal of Intelligent & Fuzzy Systems, 30(3), 1841–1856.

Paul, A., Deshamukhya, T., & Pal, J. (2021). Investigation and Utilization of Indian Peat in the Energy Industry with Optimal Site-Selection Using Analytic Hierarchy Process: A Case Study in North-Eastern India. Energy, 122169.

Qin, Y., & Zhang, Q. (2008, 12-14 Dec. 2008). The Research on Affecting Factors of E-learning Training Effect. 2008 International Conference on Computer Science and Software Engineering.

Roffe, I. (2002). E-learning: engagement, enhancement and execution. Quality Assurance in Education, 10(1), 40–50. https://doi.org/10.1108/09684880210416102

Saaty, T. L. (1980). The analytic hierarchy process. McGraw-Hill.

Sahasrabudhe, V., & Kanungo, S. (2014). Appropriate media choice for e-learning effectiveness: Role of learning domain and learning style. Computers & Education, 76, 237–249. https://doi.org/10.1016/j.compedu.2014.04.006

Sun, P.-C., Tsai, R. J., Finger, G., Chen, Y.-Y., & Yeh, D. (2008). What drives a successful e-Learning? An empirical investigation of the critical factors influencing learner satisfaction. Computers & Education, 50(4), 1183–1202. https://doi.org/10.1016/j.compedu.2006.11.007

Susan, M. M., & Kenneth, L. M. (2000). Theoretical and practical considerations in the design of web-based instruction. In A. Beverly (Ed.), Instructional and cognitive impacts of web-based education (pp. 156–177). IGI Global. https://doi.org/10.4018/978-1-878289-59-9.ch010

Tzeng, G.-H., Chiang, C.-H., & Li, C.-W. (2007). Evaluating intertwined effects in e-learning programs: A novel hybrid MCDM model based on factor analysis and DEMATEL. Expert Systems with Applications, 32(4), 1028–1044. https://doi.org/10.1016/j.eswa.2006.02.004

Wang, C., & Lin, S. (2012, 25-28 Aug. 2012). Combining fuzzy AHP and association rule to evaluate the activity processes of e-learning system. 2012 Sixth International Conference on Genetic and Evolutionary Computing.

Xu, X., Dey, P. K., Ho, W., Bahsoon, R., & Higson, H. E. (2009). Measuring performance of virtual learning environment system in higher education. Quality Assurance in Education, 17(1), 6–29. https://doi.org/10.1108/09684880910929908

Yigit, T., Isik, A. H., & Ince, M. (2014). Web-based learning object selection software using analytical hierarchy process. IET Software, 8(4), 174–183. https://doi.org/10.1049/iet-sen.2013.0116

Yuen, K. K. F. (2012, 11-13 Jan. 2012). A multiple criteria decision making approach for e-learning platform selection: The primitive cognitive network process. 2012 Computing, Communications and Applications Conference.

Zhang, L., Wen, H., Li, D., Fu, Z., & Cui, S. (2010). E-learning adoption intention and its key influence factors based on innovation adoption theory. Mathematical and Computer Modelling, 51(11), 1428–1432. https://doi.org/10.1016/j.mcm.2009.11.013


Ethics declarations

Conflict of interests/Competing interests

The authors declare that they have no conflict of interest/competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article

Atıcı, U., Adem, A., Şenol, M.B. et al. A comprehensive decision framework with interval valued type-2 fuzzy AHP for evaluating all critical success factors of e-learning platforms. Educ Inf Technol 27, 5989–6014 (2022). https://doi.org/10.1007/s10639-021-10834-3
