Abstract

Deep neural networks can achieve great successes when presented with large data sets and sufficient computational resources. However, their ability to learn new concepts quickly is limited. Meta-learning is one approach to address this issue, by enabling the network to learn how to learn. The field of Deep Meta-Learning advances at great speed, but lacks a unified, in-depth overview of current techniques. With this work, we aim to bridge this gap. After providing the reader with a theoretical foundation, we investigate and summarize key methods, which are categorized into (i) metric-, (ii) model-, and (iii) optimization-based techniques. In addition, we identify the main open challenges, such as performance evaluations on heterogeneous benchmarks, and reduction of the computational costs of meta-learning.


1 Introduction

In recent years, deep learning techniques have achieved remarkable successes on various tasks, including game-playing (Mnih et al. 2013; Silver et al. 2016), image recognition (Krizhevsky et al. 2012; He et al. 2015), machine translation (Wu et al. 2016), and automatic classification in biomedical domains (Goceri 2019a; Goceri and Karakas 2020; Iqbal et al. 2019a, b, 2020). Despite these advances and recent solutions (Goceri 2019b, 2020), ample challenges remain to be solved, such as the large amounts of data and training that are needed to achieve good performance. These requirements severely constrain the ability of deep neural networks to learn new concepts quickly, one of the defining aspects of human intelligence (Jankowski et al. 2011; Lake et al. 2017).

Meta-learning has been suggested as one strategy to overcome this challenge (Naik and Mammone 1992; Schmidhuber 1987; Thrun 1998). The key idea is that meta-learning agents improve their learning ability over time, or equivalently, learn to learn. The learning process is primarily concerned with tasks (sets of observations) and takes place at two different levels: an inner-level and an outer-level. At the inner-level, a new task is presented, and the agent tries to quickly learn the associated concepts from the training observations. This quick adaptation is facilitated by knowledge that it has accumulated across earlier tasks at the outer-level. Thus, whereas the inner-level concerns a single task, the outer-level concerns a multitude of tasks.

Historically, the term meta-learning has been used with various scopes. In its broadest sense, it encapsulates all systems that leverage prior learning experience in order to learn new tasks more quickly (Vanschoren 2018). This broad notion includes more traditional algorithm selection and hyperparameter optimization techniques for Machine Learning (Brazdil et al. 2008). In this work, however, we focus on a subset of the meta-learning field which develops meta-learning procedures to learn a good inductive bias for (deep) neural networks. Henceforth, we use the term Deep Meta-Learning to refer to this subfield of meta-learning.

The field of Deep Meta-Learning is advancing at a quick pace, while it lacks a coherent, unifying overview, providing detailed insights into the key techniques. Vanschoren (2018) has surveyed meta-learning techniques, where meta-learning was used in the broad sense, limiting its account of Deep Meta-Learning techniques. Also, many exciting developments in deep meta-learning have happened after the survey was published. A more recent survey by Hospedales et al. (2020) adopts the same notion of deep meta-learning as we do, but aims for a broad overview, omitting technical details of the various techniques.

We attempt to fill this gap by providing detailed explications of contemporary Deep Meta-Learning techniques, using a unified notation. More specifically, we cover modern techniques in the field for supervised and reinforcement learning that have achieved state-of-the-art performance, gained popularity in the field, and presented novel ideas. Extra attention is paid to MAML (Finn et al. 2017) and related techniques, because of their impact on the field. We show how the techniques relate to each other, detail their strengths and weaknesses, identify current challenges, and provide an overview of promising future research directions. One of our observations is that network complexity is strongly related to few-shot classification performance (see Fig. 1). One might expect that in a few-shot setting, where only a few examples are available to learn from, the number of network parameters should be kept small to prevent overfitting. Fig. 1 shows that this does not hold, as techniques that use larger backbones tend to achieve better performance. One possible explanation is that, because the network is exposed to a large number of tasks during meta-training, it effectively processes a correspondingly large number of observations. This result suggests that the size of the network should be taken into account when comparing algorithms.

This work can serve as an educational introduction to the field of Deep Meta-Learning, and as reference material for experienced researchers in the field. Throughout, we will adopt the taxonomy used by Vinyals (2017), which identifies three categories of Deep Meta-Learning approaches: (i) metric-, (ii) model-, and (iii) optimization-based meta-learning techniques.

The remainder of this work is structured as follows. Sect. 2 builds a common foundation on which we will base our overview of Deep Meta-Learning techniques. Sections 3, 4, and 5 cover the main metric-, model-, and optimization-based meta-learning techniques, respectively. Section 6 provides a helicopter view of the field and summarizes the key challenges and open questions. Table 1 gives an overview of the notation that we will use throughout this paper.

2 Foundation

In this section, we build the necessary foundation for investigating Deep Meta-Learning techniques in a consistent manner. To begin with, we contrast regular learning and meta-learning. Afterwards, we briefly discuss how Deep Meta-Learning relates to different fields, what the usual training and evaluation procedure looks like, and which benchmarks are often used for this purpose. We finish this section by describing the context and some applications of the meta-learning field.

2.1 The meta abstraction

In this subsection, we contrast base-level (regular) learning and meta-learning for two different paradigms, i.e., supervised and reinforcement learning.

2.1.1 Regular supervised learning

In supervised learning, we wish to learn a function \(f_{\varvec{\theta }}: X \rightarrow Y\) that maps inputs \({\varvec{x}}_{i} \in X\) to their corresponding outputs \(y_{i} \in Y\). Here, \(\varvec{\theta }\) are model parameters (e.g. weights in a neural network) that determine the function’s behavior. To learn these parameters, we are given a data set of m observations: \({\mathcal {D}} = \{({\varvec{x}}_{i}, y_{i})\}_{i=1}^{m}\). Thus, given a data set \({\mathcal {D}}\), learning boils down to finding the setting of \(\varvec{\theta }\) that minimizes an empirical loss function \({\mathcal {L}}_{D}\), which must capture how well the model is performing, such that appropriate adjustments to its parameters can be made. In short, we wish to find

\[\varvec{\theta }_{SL} := {{\,\mathrm{arg\,min}\,}}_{\varvec{\theta }} \, {\mathcal {L}}_{D}(\varvec{\theta }), \tag{1}\]

where SL stands for “supervised learning”. Note that this objective is specific to the data set \({\mathcal {D}}\), meaning that our model \(f_{\varvec{\theta }}\) may not generalize to examples outside of \({\mathcal {D}}\). To measure generalization, one could evaluate the performance on a separate test data set, which contains unseen examples. A popular way to do this is through cross-validation, where one repeatedly creates train and test splits \(D^{tr}, D^{test} \subset D\) and uses these to train and evaluate a model respectively (Hastie et al. 2009).

Finding globally optimal parameters \(\varvec{\theta }_{SL}\) is often computationally infeasible. We can, however, approximate them, guided by pre-defined meta-knowledge \(\omega\) (Hospedales et al. 2020), which includes, e.g., the initial model parameters \(\varvec{\theta }\), choice of optimizer, and learning rate schedule. As such, we approximate

\[\varvec{\theta }_{SL} \approx g_{\omega }({\mathcal {D}}, {\mathcal {L}}_{D}), \tag{2}\]

where \(g_{\omega }\) is an optimization procedure that uses pre-defined meta-knowledge \(\omega\), data set \({\mathcal {D}}\), and loss function \({\mathcal {L}}_{D}\), to produce updated weights \(g_{\omega }(D, {\mathcal {L}}_{D})\) that (presumably) perform well on \({\mathcal {D}}\).
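To make Eq. 2 concrete, the sketch below treats \(g_{\omega }\) as plain gradient descent and lets the pre-defined meta-knowledge \(\omega\) consist of an initial parameter, a learning rate, and a number of steps. The one-parameter model \(f_{\theta }(x) = \theta x\), the mean squared error loss, the toy data, and all helper names are illustrative assumptions, not part of the formalism above.

```python
from dataclasses import dataclass


@dataclass
class MetaKnowledge:
    """Hypothetical pre-defined meta-knowledge omega."""
    theta_init: float     # initial model parameter
    learning_rate: float  # step size of the optimizer
    num_steps: int        # number of gradient steps


def mse_loss_and_grad(theta, data):
    """Empirical loss L_D of f_theta(x) = theta * x, and its derivative w.r.t. theta."""
    m = len(data)
    loss = sum((theta * x - y) ** 2 for x, y in data) / m
    grad = sum(2 * (theta * x - y) * x for x, y in data) / m
    return loss, grad


def g_omega(omega, data, loss_and_grad):
    """Approximate argmin_theta L_D(theta) with gradient descent guided by omega."""
    theta = omega.theta_init
    for _ in range(omega.num_steps):
        _, grad = loss_and_grad(theta, data)
        theta -= omega.learning_rate * grad
    return theta


D = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]  # toy data generated by y = 2x
omega = MetaKnowledge(theta_init=0.0, learning_rate=0.05, num_steps=100)
theta_hat = g_omega(omega, D, mse_loss_and_grad)
print(round(theta_hat, 3))  # 2.0
```

Changing \(\omega\) (for example, using a single step instead of 100) changes how close \(g_{\omega }\) gets to \(\varvec{\theta }_{SL}\), which is exactly the dependence that meta-learning tries to exploit.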

2.1.2 Supervised meta-learning

In contrast, supervised meta-learning does not assume that any meta-knowledge \(\omega\) is given or pre-defined. Instead, the goal of meta-learning is to find the best \(\omega\), such that our (regular) base-learner can learn new tasks (data sets) as quickly as possible. Thus, whereas supervised regular learning involves one data set, supervised meta-learning involves a group of data sets. The goal is to learn meta-knowledge \(\omega\) such that our model can learn many different tasks well. In other words, our model is learning to learn.

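More formally, we have a probability distribution of tasks \(p({\mathcal {T}})\), where each task \({\mathcal {T}}_{j}\) is a data set with its own loss function \({\mathcal {L}}_{{\mathcal {T}}_{j}}\), and wish to find optimal meta-knowledge

\[\omega ^{*} := {{\,\mathrm{arg\,min}\,}}_{\omega } \, \underbrace{{\mathbb {E}}_{{\mathcal {T}}_{j} \sim p({\mathcal {T}})}}_{\text{outer-level}} \left[ {\mathcal {L}}_{{\mathcal {T}}_{j}}\big (\underbrace{g_{\omega }({\mathcal {T}}_{j}, {\mathcal {L}}_{{\mathcal {T}}_{j}})}_{\text{inner-level}}\big ) \right]. \tag{3}\]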

Here, the inner-level concerns task-specific learning, while the outer-level concerns multiple tasks. One can now easily see why this is meta-learning: we learn \(\omega\), which allows for quick learning of tasks \({\mathcal {T}}_{j}\) at the inner-level. Hence, we are learning to learn.
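The two levels of Eq. 3 can be illustrated with a small sketch. We assume a hypothetical task distribution of one-dimensional regression problems \(y = a x\) with a random slope \(a\), a task-specific model \(f_{\theta }(x) = \theta x\), and meta-knowledge \(\omega\) that consists only of the inner-level learning rate. The inner level runs a few gradient steps on a task's training set; the outer level estimates the expectation over \(p({\mathcal {T}})\) by averaging the loss on each task's test set. Choosing \(\omega\) from a small grid is our own illustrative outer optimizer, not a method from this survey; the techniques in later sections meta-learn richer \(\omega\) by other means.

```python
import random


def loss_and_grad(theta, data):
    """Mean squared error of f_theta(x) = theta * x and its derivative w.r.t. theta."""
    m = len(data)
    loss = sum((theta * x - y) ** 2 for x, y in data) / m
    grad = sum(2 * (theta * x - y) * x for x, y in data) / m
    return loss, grad


def inner_learn(omega, train_set, steps=3):
    """Inner level: g_omega adapts theta to one task, with learning rate omega."""
    theta = 0.0
    for _ in range(steps):
        _, grad = loss_and_grad(theta, train_set)
        theta -= omega * grad
    return theta


def sample_task(rng):
    """Hypothetical p(T): a regression task y = a * x with a random slope a."""
    a = rng.uniform(-3.0, 3.0)
    data = [(x, a * x) for x in (rng.uniform(-1.0, 1.0) for _ in range(10))]
    return data[:5], data[5:]  # (D^tr, D^test)


def meta_objective(omega, tasks):
    """Outer level: Monte-Carlo estimate of E_{T ~ p(T)}[L_T(g_omega(T))]."""
    total = 0.0
    for train_set, test_set in tasks:
        theta = inner_learn(omega, train_set)
        total += loss_and_grad(theta, test_set)[0]
    return total / len(tasks)


rng = random.Random(0)
meta_train_tasks = [sample_task(rng) for _ in range(200)]
candidates = [0.01, 0.1, 0.5, 1.0, 2.0]
omega_star = min(candidates, key=lambda w: meta_objective(w, meta_train_tasks))
print(omega_star)
```

A learning rate that is too small barely adapts within three steps, so its average test loss stays high; the selected \(\omega^{*}\) is the one that makes the inner level learn fastest across the sampled tasks.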

2.1.3 Regular reinforcement learning

In reinforcement learning, we have an agent that learns from experience. That is, it interacts with an environment, modeled by a Markov Decision Process (MDP) \(M = (S, A, P, r, p_{0}, \gamma , T)\). Here, S is the set of states, A the set of actions, P the transition probability distribution defining \(P(s_{t+1}| s_{t}, a_{t})\), \(r: S \times A \rightarrow {\mathbb {R}}\) the reward function, \(p_{0}\) the probability distribution over initial states, \(\gamma \in [0,1]\) the discount factor, and T the time horizon (maximum number of time steps) (Sutton and Barto 2018; Duan et al. 2016).

At every time step t, the agent finds itself in the state \(s_{t}\), in which the agent performs an action \(a_{t}\), computed by a policy function \(\pi _{\varvec{\theta }}\) (i.e., \(a_{t} = \pi _{\varvec{\theta }}(s_{t})\)), which is parameterized by weights \(\varvec{\theta }\). In turn, it receives a reward \(r_{t} = r(s_{t}, \pi _{\varvec{\theta }}(s_{t})) \in {\mathbb {R}}\) and a new state \(s_{t+1}\). This process of interactions continues until a termination criterion is met (e.g. fixed time horizon T reached). The goal of the agent is to learn how to act in order to maximize its expected (discounted) cumulative reward. Formally, the reinforcement learning (RL) goal is to find

\[\varvec{\theta }_{RL} := {{\,\mathrm{arg\,max}\,}}_{\varvec{\theta }} \, {\mathbb {E}}_{\text{traj}} \left[ \sum _{t=0}^{T} \gamma ^{t} r(s_{t}, \pi _{\varvec{\theta }}(s_{t})) \right], \tag{4}\]

where we take the expectation over the possible trajectories \(\text{ traj } = (s_{0}, \pi _{\varvec{\theta }}(s_{0}), ...s_{T}, \pi _{\varvec{\theta }}(s_{T}))\) due to the random nature of MDPs (Duan et al. 2016). Note that \(\gamma\) is a hyperparameter that can prioritize short- or long-term rewards by decreasing or increasing it, respectively.
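The quantity inside the expectation of Eq. 4 is the discounted return of a single trajectory, and the expectation can be estimated by averaging over sampled trajectories. The following minimal sketch shows both, using a hypothetical reward sequence to illustrate how \(\gamma\) shifts the balance between short- and long-term rewards; the function names are our own.

```python
def discounted_return(rewards, gamma):
    """Sum_{t=0}^{T} gamma^t * r_t for the reward sequence of one trajectory."""
    total = 0.0
    for t, r in enumerate(rewards):
        total += (gamma ** t) * r
    return total


def estimate_expected_return(trajectories, gamma):
    """Monte-Carlo estimate of E_traj[...] from sampled reward sequences."""
    return sum(discounted_return(rs, gamma) for rs in trajectories) / len(trajectories)


# Hypothetical trajectory: a small reward now, a large reward ten steps later.
rewards = [1.0] + [0.0] * 9 + [10.0]
print(discounted_return(rewards, gamma=0.5))   # 1.009765625 (late reward nearly ignored)
print(discounted_return(rewards, gamma=0.99))  # about 10.044 (late reward dominates)
```

With a small \(\gamma\), the delayed reward of 10 contributes less than 0.01; with \(\gamma\) close to 1, it dominates the return.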

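Also in the case of reinforcement learning, it is often infeasible to find the global optimum \(\varvec{\theta }_{RL}\), and thus we settle for approximations. In short, given meta-knowledge \(\omega\), we approximate

\[\varvec{\theta }_{RL} \approx g_{\omega }({\mathcal {T}}_{j}, {\mathcal {L}}_{{\mathcal {T}}_{j}}), \tag{5}\]

where again \({\mathcal {T}}_{j}\) is the given MDP, \({\mathcal {L}}_{{\mathcal {T}}_{j}}\) is a loss on this MDP (e.g., the negative expected discounted reward), and \(g_{\omega }\) is the optimization algorithm, guided by pre-defined meta-knowledge \(\omega\).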

Note that in a Markov Decision Process (MDP), the agent knows the state at any given time step t. When this is not the case, it becomes a Partially Observable Markov Decision Process (POMDP), where the agent receives only observations O and uses these to update its belief with regard to the state it is in (Sutton and Barto 2018).

2.1.4 Meta reinforcement learning

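The meta abstraction has as its object a group of tasks, or Markov Decision Processes (MDPs) in the case of reinforcement learning. Thus, instead of maximizing the expected reward on a single MDP, the meta reinforcement learning objective is to maximize the expected reward over various MDPs, by learning meta-knowledge \(\omega\). Here, the MDPs are sampled from some distribution \(p({\mathcal {T}})\). So, we wish to find a set of parameters

\[\omega ^{*} := {{\,\mathrm{arg\,min}\,}}_{\omega } \, {\mathbb {E}}_{{\mathcal {T}}_{j} \sim p({\mathcal {T}})} \left[ {\mathcal {L}}_{{\mathcal {T}}_{j}}\big (g_{\omega }({\mathcal {T}}_{j}, {\mathcal {L}}_{{\mathcal {T}}_{j}})\big ) \right], \tag{6}\]

where \({\mathcal {L}}_{{\mathcal {T}}_{j}}\) denotes the negative expected discounted reward on MDP \({\mathcal {T}}_{j}\), so that minimizing it corresponds to maximizing the expected reward.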

2.1.5 Contrast with other fields

Now that we have provided a formal basis for our discussion for both supervised and reinforcement meta-learning, it is time to contrast meta-learning briefly with two related areas of machine learning that also have the goal to improve the speed of learning. We will start with transfer learning.

Transfer Learning In Transfer Learning, one tries to transfer knowledge of previous tasks to new, unseen tasks (Pan and Yang 2009; Taylor and Stone 2009), which can be challenging when a new task comes from a different distribution than the one used for training (Iqbal et al. 2018). The distinction between Transfer Learning and Meta-Learning has become more opaque over time. A key property of meta-learning techniques, however, is their meta-objective, which explicitly aims to optimize performance across a distribution over tasks (as seen in previous sections by taking the expected loss over a distribution of tasks). This objective need not always be present in Transfer Learning techniques, e.g., when one pre-trains a model on a large data set and fine-tunes the learned weights on a smaller data set.

Multi-task learning Another closely related field is multi-task learning. In multi-task learning, a model is jointly trained to perform well on multiple fixed tasks (Hospedales et al. 2020). Meta-learning, in contrast, aims to find a model that can learn new (previously unseen) tasks quickly. This difference is illustrated in Fig. 2.

Fig. 2 The difference between multi-task learning and meta-learning. Adapted from https://meta-world.github.io/.

2.2 The meta-setup

In the previous section, we have described the learning objectives for (meta) supervised and reinforcement learning. We will now describe the general setting that can be used to achieve these objectives. In general, one optimizes a meta-objective by using various tasks, which are data sets in the context of supervised learning, and (Partially Observable) Markov Decision Processes in the case of reinforcement learning. This is done in three stages: the (i) meta-train stage, (ii) meta-validation stage, and (iii) meta-test stage, each of which is associated with a set of tasks.

First, in the meta-train stage, the meta-learning algorithm is applied to the meta-train tasks. Second, the meta-validation tasks can then be used to evaluate the performance on unseen tasks, which were not used for training. Effectively, this measures the meta-generalization ability of the trained network, which serves as feedback to tune, e.g., hyper-parameters of the meta-learning algorithm. Third, the meta-test tasks are used to give a final performance estimate of the meta-learning technique.

Fig. 3 Illustration of N-way, k-shot classification, where \(N = 5\) and \(k = 1\). Meta-validation tasks are not displayed. Adapted from Ravi and Larochelle (2017).

2.2.1 N-way, k-shot Learning

A frequently used instantiation of this general meta-setup is called N-way, k-shot classification (see Fig. 3). This setup is also divided into the three stages (meta-train, meta-validation, and meta-test), which are used for meta-learning, meta-learner hyperparameter optimization, and evaluation, respectively. Each stage has a corresponding set of disjoint labels, i.e., \(L^{tr}, L^{val}, L^{test} \subset Y\), such that \(L^{tr} \cap L^{val} = \emptyset , L^{tr} \cap L^{test} = \emptyset\), and \(L^{val} \cap L^{test} = \emptyset\). In a given stage s, tasks/episodes \({\mathcal {T}}_{j} = (D^{tr}_{{\mathcal {T}}_{j}}, D^{test}_{{\mathcal {T}}_{j}})\) are obtained by sampling examples \(({\varvec{x}}_{i}, y_{i})\) from the full data set \({\mathcal {D}}\), such that every \(y_{i} \in L^{s}\). Note that this requires access to a data set \({\mathcal {D}}\). The sampling process is guided by the N-way, k-shot principle, which states that every training data set \(D^{tr}_{{\mathcal {T}}_{j}}\) should contain exactly N classes and k examples per class, implying that \(|D^{tr}_{{\mathcal {T}}_{j}}| = N \cdot k\). Furthermore, the true labels of examples in the test set \(D_{{\mathcal {T}}_{j}}^{test}\) must be present in the train set \(D^{tr}_{{\mathcal {T}}_{j}}\) of a given task \({\mathcal {T}}_{j}\). \(D^{tr}_{{\mathcal {T}}_{j}}\) acts as a support set, literally supporting classification decisions on the query set \(D^{test}_{{\mathcal {T}}_{j}}\). Importantly, note that with this terminology, the query set (or test set) of a task is used during the meta-training phase. Furthermore, the fact that the labels across stages are disjoint ensures that we test the ability of a model to learn new concepts.
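The sampling procedure can be sketched as follows, assuming the full data set \({\mathcal {D}}\) is given as a list of (input, label) pairs. The helper names split_labels and sample_episode, the placeholder inputs, and the number of query examples per class (n_query) are our own assumptions; the N-way, k-shot principle itself only fixes the size of the support set.

```python
import random
from collections import defaultdict


def split_labels(labels, n_train, n_val, rng):
    """Partition the label set into disjoint L^tr, L^val and L^test."""
    labels = sorted(set(labels))
    rng.shuffle(labels)
    return (labels[:n_train],
            labels[n_train:n_train + n_val],
            labels[n_train + n_val:])


def sample_episode(dataset, stage_labels, n_way, k_shot, n_query, rng):
    """Sample T_j = (D^tr, D^test): N classes from L^s, k support examples per class."""
    by_label = defaultdict(list)
    for x, y in dataset:
        if y in stage_labels:
            by_label[y].append(x)
    classes = rng.sample(sorted(by_label), n_way)
    support, query = [], []
    for y in classes:
        xs = rng.sample(by_label[y], k_shot + n_query)  # no example is used twice
        support += [(x, y) for x in xs[:k_shot]]
        query += [(x, y) for x in xs[k_shot:]]
    return support, query


rng = random.Random(42)
dataset = [((c, i), c) for c in range(20) for i in range(10)]  # placeholder inputs
L_tr, L_val, L_test = split_labels([y for _, y in dataset], 12, 4, rng)
support, query = sample_episode(dataset, set(L_tr), n_way=5, k_shot=1, n_query=3, rng=rng)
print(len(support), len(query))  # 5 15
```

Every query label also occurs in the support set, and episodes for the meta-validation or meta-test stage are drawn in the same way from L_val or L_test, whose labels never appear during meta-training.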

The meta-learning objective in the training phase is to minimize the loss function of the model predictions on the query sets, conditioned on the support sets. As such, for a given task \({\mathcal {T}}_j\), the model ‘sees’ the support set, and extracts information from the support set to guide its predictions on the query set. By applying this procedure to different episodes/tasks \({\mathcal {T}}_j\), the model will slowly accumulate meta-knowledge \(\omega\), which can ultimately speed up learning on new tasks.

The simplest way to achieve this is to use regular neural networks, but, as various authors have pointed out (see, e.g., Finn et al. 2017), more sophisticated architectures vastly outperform such networks. In the remainder of this work, we will review such architectures.

At the meta-validation and meta-test stages, or evaluation phases, the learned meta-information in \(\omega\) is fixed. The model is, however, still allowed to make task-specific updates to its parameters \(\varvec{\theta }\) (which implies that it is learning). After task-specific updates, we can evaluate the performance on the test sets. In this way, we test how well a technique performs at meta-learning.

N-way, k-shot classification is often performed for small values of k (since we want our models to learn new concepts quickly, i.e., from few examples). In that case, one can refer to it as few-shot learning.

2.2.2 Common benchmarks

Here, we briefly describe some benchmarks that can be used to evaluate meta-learning algorithms.

- Omniglot (Lake et al. 2011): This data set presents an image recognition task. Each image corresponds to one of 1 623 characters from 50 different alphabets. Every character was drawn by 20 different people. Note that in this case, the characters are the classes/labels.

- ImageNet (Deng et al. 2009): This is one of the largest image classification data sets, containing more than 20K classes and over 14 million colored images. miniImageNet is a small variant of ImageNet for image classification, proposed by Vinyals et al. (2016) to reduce the engineering effort required to run experiments. It contains 60 000 colored images of size \(84 \times 84\), spread over 100 classes with 600 examples each. tieredImageNet (Ren et al. 2018) is another variant of ImageNet. It is similar to miniImageNet, but has a hierarchical structure: its classes are grouped into 34 high-level categories, each with its own sub-classes.

- CIFAR-10 and CIFAR-100 (Krizhevsky 2009): Two other image recognition data sets. Each one contains 60K RGB images of size \(32 \times 32\). CIFAR-10 and CIFAR-100 contain 10 and 100 classes respectively, with a uniform number of examples per class (6 000 and 600 respectively). Every class in CIFAR-100 also has a super-class, of which there are 20 in the full data set. Many variants of the CIFAR data sets can be sampled, giving rise to e.g. CIFAR-FS (Bertinetto et al. 2019) and FC-100 (Oreshkin et al. 2018).

- CUB-200-2011 (Wah et al. 2011): The CUB-200-2011 data set contains roughly 12K RGB images of birds from 200 species. Every image has some labeled attributes (e.g. crown color, tail shape).

- MNIST (LeCun et al. 2010): MNIST presents a hand-written digit recognition task with ten classes (for digits 0 through 9). It contains 70K gray-scale images of hand-written digits, split into 60K training and 10K test images.

- Meta-Dataset (Triantafillou et al. 2020): This data set comprises several other data sets, such as Omniglot (Lake et al. 2011), CUB-200 (Wah et al. 2011), ImageNet (Deng et al. 2009), and more. An episode is constructed by first sampling one of these data sets (e.g. Omniglot) and then selecting a subset of its labels to create train and test splits as before. In this way, broader generalization is enforced, since the tasks are more distant from each other.

- Meta-world (Yu et al. 2019): A meta reinforcement learning data set, containing 50 robotic manipulation tasks (control a robot arm to achieve some pre-defined goal, e.g. unlocking a door, or playing soccer). It was specifically designed to cover a broad range of tasks, such that meaningful generalization can be measured (Yu et al. 2019).

Fig. 4 Learning continuous robotic control tasks is an important application of Deep Meta-Learning techniques. Image taken from Yu et al. (2019).

2.2.3 Some applications of meta-learning

Deep neural networks have achieved remarkable results on various tasks from image recognition, text processing, game playing to robotics (Silver et al. 2016; Mnih et al. 2013; Wu et al. 2016), but their success depends on the amount of available data (Sun et al. 2017) and computing resources. Deep meta-learning reduces this dependency by allowing deep neural networks to learn new concepts quickly. As a result, meta-learning widens the applicability of deep learning techniques to many application domains. Such areas include few-shot image classification (Finn et al. 2017; Snell et al. 2017; Ravi and Larochelle 2017), robotic control policy learning (Gupta et al. 2018; Nagabandi et al. 2019) (see Fig. 4), hyperparameter optimization (Antoniou et al. 2019; Schmidhuber et al. 1997), meta-learning learning rules (Bengio et al. 1991, 1997; Miconi et al. 2018, 2019), abstract reasoning (Barrett et al. 2018), and many more. For a larger overview of applications, we refer interested readers to Hospedales et al. (2020).

2.3 The meta-learning field

As mentioned in the introduction, meta-learning is a broad area of research, as it encapsulates all techniques that leverage prior learning experience to learn new tasks more quickly (Vanschoren 2018). We can distinguish two communities in the field, each with a different focus: (i) algorithm selection and hyperparameter optimization for machine learning techniques, and (ii) search for inductive bias in deep neural networks. We will refer to these communities as group (i) and group (ii) respectively. Now, we will give a brief description of the first field, and a historical overview of the second.

Group (i) uses a more traditional approach, to select a suitable machine learning algorithm and hyperparameters for a new data set \({\mathcal {D}}\) (Peng et al. 2002). This selection can for example be made by leveraging prior model evaluations on various data sets \(D'\), and by using the model which achieved the best performance on the most similar data set (Vanschoren 2018). Such traditional approaches require (large) databases of prior model evaluations, for many different algorithms. This has led to initiatives such as OpenML (Vanschoren et al. 2014), where researchers can share such information. Relying on such systems would limit the freedom in choosing the neural network architecture, as one would be constrained to architectures that have been evaluated beforehand.

Driven by advances in neural networks another approach, taken by group (ii), is to adopt the view of a self-improving agent, which improves its learning ability over time by finding a good inductive bias (a set of assumptions that guide predictions). We now present a brief historical overview of developments in this field of Deep Meta-Learning, based on Hospedales et al. (2020).

Pioneering work was done by Schmidhuber (1987) and Hinton and Plaut (1987). Schmidhuber developed a theory of self-referential learning, where the weights of a neural network can serve as input to the model itself, which then predicts updates (Schmidhuber 1987, 1993). In that same year, Hinton and Plaut (1987) proposed to use two weights per neural network connection, i.e., slow and fast weights, which serve as long- and short-term memory respectively. Later came the idea of meta-learning learning rules (Bengio et al. 1991, 1997). Meta-learning techniques that use gradient-descent and backpropagation were proposed by Hochreiter et al. (2001) and Younger et al. (2001). These two works have been pivotal to the current field of Deep Meta-Learning, as the majority of techniques rely on backpropagation, as we will see on our journey of contemporary Deep Meta-Learning techniques. We will now cover the three categories metric-, model-, and optimization-based techniques, respectively.

2.4 Overview of the rest of this work

In the remainder of this work, we will look in more detail at individual meta-learning methods. As indicated before, the techniques can be grouped into three main categories (Vinyals 2017), namely (i) metric-, (ii) model-, and (iii) optimization-based methods. We will discuss them in sequence.

To help give an overview of the methods, we draw your attention to the following figure and tables. Table 2 summarizes the three categories and provides key ideas, strengths, and weaknesses of the approaches. The terms and technical details are explained more fully in the remainder of this paper. Table 3 contains an overview of all techniques that are discussed further on.

3 Metric-based meta-learning

At a high level, the goal of metric-based techniques is to acquire—among others—meta-knowledge \(\omega\) in the form of a good feature space that can be used for various new tasks. In the context of neural networks, this feature space coincides with the weights \(\varvec{\theta }\) of the networks. Then, new tasks can be learned by comparing new inputs to example inputs (of which we know the labels) in the meta-learned feature space. The higher the similarity between a new input and an example, the more likely it is that the new input will have the same label as the example input.

Metric-based techniques are a form of meta-learning as they leverage their prior learning experience (meta-learned feature space) to ‘learn’ new tasks more quickly. Here, ‘learn’ is used in a non-standard way since metric-based techniques do not make any network changes when presented with new tasks, as they rely solely on input comparisons in the already meta-learned feature space. These input comparisons are a form of non-parametric learning, i.e., new task information is not absorbed into the network parameters.

More formally, metric-based learning techniques aim to learn a similarity kernel, or equivalently, attention mechanism \(k_{\varvec{\theta }}\) (parameterized by \(\varvec{\theta }\)), that takes two inputs \({\varvec{x}}_{1}\) and \({\varvec{x}}_{2}\), and outputs their similarity score. Larger scores indicate larger similarities. Class predictions for new inputs \({\varvec{x}}\) can then be made by comparing \({\varvec{x}}\) to example inputs \({\varvec{x}}_{i}\), of which we know the true labels \(y_{i}\). The underlying idea is that the larger the similarity between \({\varvec{x}}\) and \({\varvec{x}}_{i}\), the more likely it becomes that \({\varvec{x}}\) also has label \(y_{i}\).

Given a task \({\mathcal {T}}_{j} = (D^{tr}_{{\mathcal {T}}_{j}}, D^{test}_{{\mathcal {T}}_{j}})\) and an unseen input vector \({\varvec{x}} \in D^{test}_{{\mathcal {T}}_{j}}\), a probability distribution over classes Y is computed/predicted as a weighted combination of labels from the support set \(D^{tr}_{{\mathcal {T}}_{j}}\), using similarity kernel \(k_{\varvec{\theta }}\), i.e.,

\[p_{\varvec{\theta }}(Y|{\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}}) = \sum _{({\varvec{x}}_{i}, y_{i}) \in D^{tr}_{{\mathcal {T}}_{j}}} k_{\varvec{\theta }}({\varvec{x}}, {\varvec{x}}_{i}) \, y_{i}. \tag{7}\]

Importantly, the labels \(y_{i}\) are assumed to be one-hot encoded, meaning that they are represented by zero vectors with a ‘1’ on the position of the true class. For example, suppose there are five classes in total and our example \({\varvec{x}}_{1}\) has true class 4. Then, the one-hot encoded label is \(y_{1} = [0,0,0,1,0]\). Note that the probability distribution \(p_{\varvec{\theta }}(Y|{\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}})\) over classes is a vector of size |Y|, in which the i-th entry corresponds to the probability that input \({\varvec{x}}\) has class \(Y_{i}\) (given the support set). The predicted class is thus \({\hat{y}} = {{\,\mathrm{arg\,max}\,}}_{i=1,2,\ldots ,|Y|} p_{\varvec{\theta }}(Y|{\varvec{x}},D^{tr}_{{\mathcal {T}}_{j}})_{i}\), where \(p_{\varvec{\theta }}(Y|{\varvec{x}},D^{tr}_{{\mathcal {T}}_{j}})_{i}\) is the computed probability that input \({\varvec{x}}\) has class \(Y_{i}\).
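Eq. 7 and the arg max rule translate almost directly into code. In the sketch below, the kernel is passed in as a function; the Gaussian (RBF) kernel on raw inputs is a hypothetical stand-in for a meta-learned \(k_{\varvec{\theta }}\), and the toy support set is our own. As in the worked example of Sect. 3.1, the class scores are returned unnormalized; only their arg max matters for the prediction.

```python
import math


def one_hot(label, classes):
    """Encode a label as a one-hot vector over the ordered list of classes."""
    return [1.0 if c == label else 0.0 for c in classes]


def metric_predict(x, support, kernel, classes):
    """Eq. 7: scores = sum_i k(x, x_i) * y_i over the support set; predict the arg max."""
    scores = [0.0] * len(classes)
    for x_i, y_i in support:
        weight = kernel(x, x_i)
        for c, indicator in enumerate(one_hot(y_i, classes)):
            scores[c] += weight * indicator
    best = max(range(len(classes)), key=lambda c: scores[c])
    return scores, classes[best]


def rbf_kernel(u, v):
    """Hypothetical stand-in for k_theta: exp(-||u - v||^2) on raw inputs."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(u, v)))


support = [([0.0, 0.0], "a"), ([0.0, 1.0], "a"), ([5.0, 5.0], "b")]
scores, label = metric_predict([0.2, 0.4], support, rbf_kernel, classes=["a", "b"])
print(label)  # a
```

Because every support example adds its kernel weight to the entry of its own class, classes with several similar support examples accumulate higher scores.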

3.1 Example

Suppose that we are given a task \({\mathcal {T}}_{j} = (D^{tr}_{{\mathcal {T}}_{j}}, D^{test}_{{\mathcal {T}}_{j}})\). Furthermore, suppose that \(D^{tr}_{{\mathcal {T}}_{j}} = \{ ([0,-4], 1), ([-2,-4],2), ([-2,4],3), ([6,0], 4) \}\), where a tuple denotes a pair \(({\varvec{x}}_{i},y_{i})\). For simplicity, the example will not use an embedding function, which maps example inputs onto a (more informative) embedding space. Our query set only contains one example \(D^{test}_{{\mathcal {T}}_{j}} = \{ ([4, 0.5], y) \}\). Then, the goal is to predict the correct label for new input [4, 0.5] using only examples in \(D^{tr}_{{\mathcal {T}}_{j}}\). The problem is visualized in Fig. 5, where red vectors correspond to example inputs from our support set. The blue vector is the new input that needs to be classified. Intuitively, this new input is most similar to the vector [6, 0], which means that we expect the label for the new input to be the same as that for [6, 0], i.e., 4.

Suppose we use a fixed similarity kernel, namely the cosine similarity, i.e., \(k({\varvec{x}}, {\varvec{x}}_{i}) = \frac{{\varvec{x}} \cdot {\varvec{x}}_{i}^{T}}{||{\varvec{x}}|| \cdot ||{\varvec{x}}_{i}||}\), where \(||{\varvec{v}}||\) denotes the length of vector \({\varvec{v}}\), i.e., \(||{\varvec{v}}|| = \sqrt{(\sum _{n}v_{n}^{2})}\). Here, \(v_{n}\) denotes the n-th element of placeholder vector \({\varvec{v}}\) (substitute \({\varvec{v}}\) by \({\varvec{x}}\) or \({\varvec{x}}_{i}\)). We can now compute the cosine similarity between the new input [4, 0.5] and every example input \({\varvec{x}}_{i}\), as done in Table 4, where we used the facts that \(||{\varvec{x}}|| = ||\, [4,0.5] \, || = \sqrt{4^{2}+0.5^{2}} \approx 4.03\), and \(\frac{{\varvec{x}}}{||{\varvec{x}}||} \approx \frac{[4,0.5]}{4.03} = [0.99,0.12]\).

From this table and Eq. 7, it follows that the predicted probability distribution \(p_{\varvec{\theta }}(Y|{\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}}) = -0.12y_{1} -0.55y_{2} - 0.33y_{3} + 0.99y_{4} = -0.12 [1,0,0,0] - 0.55 [0,1,0,0] -0.33[0,0,1,0] + 0.99[0,0,0,1] =[-0.12,-0.55,-0.33,0.99]\). Note that this is not really a probability distribution. That would require normalization such that every element is at least 0 and the sum of all elements is 1. For the sake of this example, we do not perform this normalization, as it is clear that class 4 (the class of the most similar example input [6, 0]) will be predicted.
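The arithmetic of this example can be checked with a few lines of code. The sketch recomputes the cosine similarity between the query [4, 0.5] and each support input, rounds the results to two decimals, and then forms the unnormalized weighted sum of one-hot labels from Eq. 7. The variable names are our own.

```python
import math


def cosine(u, v):
    """Cosine similarity: (u . v) / (||u|| * ||v||)."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


support = [([0, -4], 1), ([-2, -4], 2), ([-2, 4], 3), ([6, 0], 4)]
x = [4, 0.5]
classes = [1, 2, 3, 4]

sims = [cosine(x, x_i) for x_i, _ in support]
print([round(s, 2) for s in sims])  # [-0.12, -0.55, -0.33, 0.99]

# Eq. 7 without normalization: each class collects the similarity of its support example.
p = [sum(s for s, (_, y) in zip(sims, support) if y == c) for c in classes]
print(classes[p.index(max(p))])  # 4
```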

One may wonder why such techniques are meta-learners, for we could take any single data set \({\mathcal {D}}\) and use pair-wise comparisons to compute predictions. At the outer-level, metric-based meta-learners are trained on a distribution of different tasks, in order to learn (among others) a good input embedding function. This embedding function facilitates inner-level learning, which is achieved through pair-wise comparisons. As such, one learns an embedding function across tasks to facilitate task-specific learning, which is equivalent to “learning to learn”, or meta-learning.

After this introduction to metric-based methods, we will now cover some key metric-based techniques.

3.2 Siamese neural networks

A Siamese neural network (Koch et al. 2015) consists of two neural networks \(f_{\varvec{\theta }}\) that share the same weights \(\varvec{\theta }\). Siamese neural networks take two inputs \({\varvec{x}}_{1}, {\varvec{x}}_{2}\), and compute two hidden states \(f_{\varvec{\theta }}({\varvec{x}}_{1}), f_{\varvec{\theta }}({\varvec{x}}_{2})\), corresponding to the activation patterns in the final hidden layers. These hidden states are fed into a distance layer, which computes a distance vector \({\varvec{d}} = |f_{\varvec{\theta }}({\varvec{x}}_{1}) - f_{\varvec{\theta }}({\varvec{x}}_{2})|\), where \(d_{i}\) is the absolute distance between the i-th elements of \(f_{\varvec{\theta }}({\varvec{x}}_{1})\) and \(f_{\varvec{\theta }}({\varvec{x}}_{2})\). From this distance vector, the similarity between \({\varvec{x}}_{1}, {\varvec{x}}_{2}\) is computed as \(\sigma (\varvec{\alpha }^{T} {\varvec{d}})\), where \(\sigma\) is the sigmoid function (with output range [0,1]), and \(\varvec{\alpha }\) is a vector of free weighting parameters, determining the importance of each \(d_{i}\). This network structure can be seen in Fig. 6.

Source: Koch et al. (2015)

Example of a Siamese neural network.

Koch et al. (2015) applied this technique to few-shot image recognition in two stages. In the first stage, they train the twin network on an image verification task, where the goal is to output whether two input images \({\varvec{x}}_{1}\) and \({\varvec{x}}_{2}\) belong to the same class. The network is thus stimulated to learn discriminative features. In the second stage, where the model is confronted with a new task, the network leverages its prior learning experience. That is, given a task \({\mathcal {T}}_{j} = (D^{tr}_{{\mathcal {T}}_{j}}, D^{test}_{{\mathcal {T}}_{j}})\), and previously unseen input \({\varvec{x}} \in D^{test}_{{\mathcal {T}}_{j}}\), the predicted class \({\hat{y}}\) is equal to the label \(y_{i}\) of the example \(({\varvec{x}}_{i},y_{i}) \in D^{tr}_{{\mathcal {T}}_{j}}\) which yields the highest similarity score to \({\varvec{x}}\). In contrast to other techniques discussed later in this section, Siamese neural networks do not directly optimize for good performance across tasks (consisting of support and query sets). However, they do leverage learned knowledge from the verification task to learn new tasks quickly.
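The similarity computation \(\sigma (\varvec{\alpha }^{T} {\varvec{d}})\) and the second-stage prediction rule can be sketched as follows. This is a minimal, untrained illustration, not the architecture of Koch et al. (2015): the one-layer tanh embedding `f_theta`, its weights `W`, and the choice to fix \(\varvec{\alpha }\) to \(-1\) (so that larger distances give lower similarity) are all assumptions; in the original, the embedding is a convolutional network and \(\varvec{\alpha }\) is learned.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, untrained shared embedding f_theta: R^2 -> R^8 (one tanh layer).
# Both "twins" call this same function, i.e., they share the weights W.
W = rng.normal(scale=0.3, size=(8, 2))

def f_theta(x):
    return np.tanh(W @ x)

# Weighting vector alpha. Learned in Koch et al. (2015); fixed to -1 here (assumption).
alpha = -np.ones(8)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def siamese_similarity(x1, x2):
    d = np.abs(f_theta(x1) - f_theta(x2))   # component-wise distance vector
    return float(sigmoid(alpha @ d))          # similarity in [0, 1]

def predict(x, support):
    """Return the label of the support example most similar to x."""
    scores = [siamese_similarity(x, x_i) for x_i, _ in support]
    return support[int(np.argmax(scores))][1]

support = [(np.array([0.0, -4.0]), 1), (np.array([-2.0, -4.0]), 2),
           (np.array([-2.0, 4.0]), 3), (np.array([6.0, 0.0]), 4)]
print(predict(np.array([4.0, 0.5]), support))
```

Because the weights are random, the printed label is not meaningful; the point is the data flow. With a trained embedding and \(\varvec{\alpha }\), the verification task shapes \(f_{\varvec{\theta }}\) so that this nearest-by-similarity rule works on new classes.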

In summary, Siamese neural networks are a simple and elegant approach to perform few-shot learning. However, they are not readily applicable outside the supervised learning setting.

3.3 Matching networks

Matching networks (Vinyals et al. 2016) build upon the idea that underlies Siamese neural networks (Koch et al. 2015). That is, they leverage pair-wise comparisons between the given support set \(D^{tr}_{{\mathcal {T}}_{j}} = \{ ({\varvec{x}}_{i}, y_{i}) \}_{i=1}^{m}\) (for a task \({\mathcal {T}}_{j}\)), and new inputs \({\varvec{x}} \in D^{test}_{{\mathcal {T}}_{j}}\) from the query set which we want to classify. However, instead of assigning the class \(y_{i}\) of the most similar example input \({\varvec{x}}_{i}\), matching networks use a weighted combination of all example labels \(y_{i}\) in the support set, based on the similarity of inputs \({\varvec{x}}_{i}\) to new input \({\varvec{x}}\). More specifically, predictions are computed as follows: \({\hat{y}} = \sum _{i=1}^{m} a({\varvec{x}}, {\varvec{x}}_{i})y_{i}\), where a is a non-parametric (non-trainable) attention mechanism, or similarity kernel. This classification process is shown in Fig. 7. In this figure, the input to \(f_{\varvec{\theta }}\) has to be classified, using the support set \(D^{tr}_{{\mathcal {T}}_{j}}\) (input to \(g_{\varvec{\theta }}\)).

Source: Vinyals et al. (2016)

The architecture of matching networks.

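The attention that is used consists of a softmax over the cosine similarity c between the input representations, i.e.,

\[ a({\varvec{x}}, {\varvec{x}}_{i}) = \frac{e^{c(f_{\varvec{\phi }}({\varvec{x}}), g_{\varvec{\varphi }}({\varvec{x}}_{i}))}}{\sum _{j=1}^{m} e^{c(f_{\varvec{\phi }}({\varvec{x}}), g_{\varvec{\varphi }}({\varvec{x}}_{j}))}}, \]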

where \(f_{\varvec{\phi }}\) and \(g_{\varvec{\varphi }}\) are neural networks, parameterized by \(\varvec{\phi }\) and \(\varvec{\varphi }\), that map raw inputs to a (lower-dimensional) latent vector, which corresponds to the output of the final hidden layer of a neural network. As such, neural networks act as embedding functions. The larger the cosine similarity between the embeddings of \({\varvec{x}}\) and \({\varvec{x}}_{i}\), the larger \(a({\varvec{x}}, {\varvec{x}}_{i})\), and thus the influence of label \(y_{i}\) on the predicted label \({\hat{y}}\) for input \({\varvec{x}}\).
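The prediction rule \({\hat{y}} = \sum _{i=1}^{m} a({\varvec{x}}, {\varvec{x}}_{i})y_{i}\) with this softmax attention can be sketched in a few lines. As a simplifying assumption, `f` and `g` default to the identity function, whereas in matching networks they are learned embedding networks; the function name `matching_predict` is our own. Unlike the unnormalized computation in the metric-based example, the output here is a proper distribution over classes.

```python
import numpy as np

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def softmax(z):
    e = np.exp(z - np.max(z))   # shift by the max for numerical stability
    return e / e.sum()

def matching_predict(x, support_x, support_y, num_classes, f=lambda v: v, g=lambda v: v):
    """y_hat = sum_i a(x, x_i) y_i, where a is a softmax over cosine similarities
    between f(x) and g(x_i). f and g default to the identity (an assumption)."""
    fx = f(x)
    c = np.array([cosine(fx, g(x_i)) for x_i in support_x])
    a = softmax(c)                               # attention weights, sum to 1
    one_hot = np.eye(num_classes)[support_y]     # labels y_i as one-hot vectors
    return a @ one_hot                           # predicted label distribution

# Running example with 0-indexed class labels 0..3.
support_x = np.array([[0.0, -4.0], [-2.0, -4.0], [-2.0, 4.0], [6.0, 0.0]])
support_y = np.array([0, 1, 2, 3])
y_hat = matching_predict(np.array([4.0, 0.5]), support_x, support_y, num_classes=4)
print(y_hat.round(3), int(np.argmax(y_hat)))
```

When a class has several support examples, their attention weights add up through the one-hot sum, so the whole support set contributes to the prediction.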

Vinyals et al. (2016) propose two main choices for the embedding functions. The first is to use a single neural network, so that \(\varvec{\theta } = \varvec{\phi } = \varvec{\varphi }\) and thus \(f_{\varvec{\phi }} = g_{\varvec{\varphi }}\). This setup is the default form of matching networks, as shown in Fig. 7. The second choice is to make \(f_{\varvec{\phi }}\) and \(g_{\varvec{\varphi }}\) dependent on the support set \(D^{tr}_{{\mathcal {T}}_{j}}\) using Long Short-Term Memory networks (LSTMs). In that case, \(f_{\varvec{\phi }}\) is represented by an attention LSTM, and \(g_{\varvec{\varphi }}\) by a bidirectional one. This choice for embedding functions is called Full Context Embeddings (FCE), and yielded an accuracy improvement of roughly 2% on miniImageNet compared to the regular matching networks, indicating that task-specific embeddings can aid the classification of new data points from the same distribution.

Matching networks learn a good feature space across tasks for making pair-wise comparisons between inputs. In contrast to Siamese neural networks (Koch et al. 2015), this feature space (given by weights \(\varvec{\theta }\)) is learned across tasks, instead of on a distinct verification task.

In summary, matching networks are an elegant and simple approach to metric-based meta-learning. However, these networks are not readily applicable outside of supervised learning settings, and suffer from performance degradation when label distributions are biased (Vinyals et al. 2016).

3.4 Prototypical networks

Just like matching networks (Vinyals et al. 2016), prototypical networks (Snell et al. 2017) base their class predictions on the entire support set \(D^{tr}_{{\mathcal {T}}_{j}}\). However, instead of computing the similarity between new inputs and examples in the support set, prototypical networks only compare new inputs to class prototypes (centroids), which are single vector representations of classes in some embedding space. Since the number of class prototypes is at most the number of examples in the support set, the number of required pair-wise comparisons decreases, saving computational costs.

The underlying idea of class prototypes is that for a task \({\mathcal {T}}_{j}\), there exists an embedding function that maps the support set onto a space where class instances cluster nicely around the corresponding class prototypes (Snell et al. 2017). Then, for a new input \({\varvec{x}}\), the class of the prototype nearest to that input will be predicted. As such, prototypical networks perform nearest centroid/prototype classification in a meta-learned embedding space. This is visualized in Fig. 8.

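More formally, given a distance function \(d: X \times X \rightarrow [0, +\infty )\) (e.g. Euclidean distance) and embedding function \(f_{\varvec{\theta }}\), parameterized by \(\varvec{\theta }\), prototypical networks compute class probabilities \(p_{\varvec{\theta }}(Y | {\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}})\) as follows

\[ p_{\varvec{\theta }}(Y = k | {\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}}) = \frac{\exp (-d(f_{\varvec{\theta }}({\varvec{x}}), {\varvec{c}}_{k}))}{\sum _{y_{i}} \exp (-d(f_{\varvec{\theta }}({\varvec{x}}), {\varvec{c}}_{y_{i}}))}, \]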

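where \({\varvec{c}}_{k}\) is the prototype/centroid for class k and \(y_{i}\) are the classes in the support set \(D^{tr}_{{\mathcal {T}}_{j}}\). Here, the prototype for class k is defined as the average of the embeddings \(f_{\varvec{\theta }}({\varvec{x}}_{i})\) of all examples in the support set with \(y_{i} = k\), i.e., \({\varvec{c}}_{k} = \frac{1}{|S_{k}|}\sum _{({\varvec{x}}_{i}, y_{i}) \in S_{k}} f_{\varvec{\theta }}({\varvec{x}}_{i})\), where \(S_{k}\) denotes the set of support examples of class k. Thus, classes with prototypes that are nearer to the embedding of the new input \({\varvec{x}}\) obtain larger probability scores.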

Snell et al. (2017) found that using the squared Euclidean distance as d gave rise to the best performance. With that distance function, prototypical networks can be seen as linear models. To see this, note that \(-d(f_{\theta }({\varvec{x}}), {\varvec{c}}_{k}) = -|| f_{\theta }({\varvec{x}}) - {\varvec{c}}_{k}||^{2} = - f_{\theta }({\varvec{x}})^{T}f_{\theta }({\varvec{x}}) + 2{\varvec{c}}_{k}^{T}f_{\theta }({\varvec{x}}) - {\varvec{c}}_{k}^{T}{\varvec{c}}_{k}\). The first term does not depend on the class k, and thus does not affect the classification decision. The remainder can be written as \({\varvec{w}}_{k}^{T}f_{\theta }({\varvec{x}}) + b_{k}\), where \({\varvec{w}}_{k} = 2{\varvec{c}}_{k}\) and the scalar bias is \(b_{k} = -{\varvec{c}}_{k}^{T}{\varvec{c}}_{k}\). Note that this is linear in the output of network \(f_\theta\), not linear in the input of the network \({\varvec{x}}\). Also, Snell et al. (2017) show that prototypical networks (coupled with Euclidean distance) are equivalent to matching networks in one-shot learning settings, as every example in the support set will be its own prototype.
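Both the prototype-based class probabilities and the linear-model view can be checked numerically. This sketch assumes the embeddings \(f_{\varvec{\theta }}({\varvec{x}}_{i})\) are already given (a hypothetical 2-way, 2-shot task with 2-D embeddings); the function names are our own.

```python
import numpy as np

def prototypes(emb, labels, num_classes):
    """c_k: mean embedding of the support examples with label k."""
    return np.stack([emb[labels == k].mean(axis=0) for k in range(num_classes)])

def softmax(z):
    e = np.exp(z - np.max(z))
    return e / e.sum()

def proto_probs(query_emb, protos):
    """p(y = k | x) = softmax_k(-||f(x) - c_k||^2)."""
    sq_dist = ((protos - query_emb) ** 2).sum(axis=1)
    return softmax(-sq_dist)

def linear_logits(query_emb, protos):
    """Linear form w_k^T f(x) + b_k with w_k = 2 c_k and b_k = -c_k^T c_k."""
    w = 2.0 * protos
    b = -(protos ** 2).sum(axis=1)
    return w @ query_emb + b

# Hypothetical 2-way, 2-shot task; embeddings f(x_i) are given directly.
emb = np.array([[0.0, 0.0], [0.0, 2.0], [4.0, 0.0], [4.0, 2.0]])
labels = np.array([0, 0, 1, 1])
c = prototypes(emb, labels, num_classes=2)      # [[0, 1], [4, 1]]
q = np.array([3.0, 1.0])
p = proto_probs(q, c)
# Both forms differ only by the class-independent term -f(x)^T f(x),
# which cancels in the softmax.
assert np.allclose(p, softmax(linear_logits(q, c)))
print(p.round(4))
```

The query is closer to the second prototype, so class 1 receives almost all probability mass, and the linear logits give exactly the same distribution.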

Source: Snell et al. (2017). (Color figure online)

Prototypical networks for the case of few-shot learning. The \({\varvec{c}}_{k}\) are class prototypes for class k which are computed by averaging the representations of inputs (colored circles) in the support set. Note that the representation space is partitioned into three disjoint areas, where each area corresponds to one class. The class with the closest prototype to the new input \({\varvec{x}}\) in the query set is then given as prediction.

In short, by adopting the concept of class prototypes, prototypical networks reduce the number of required pair-wise comparisons between new inputs and the support set, which saves computational costs. Additionally, prototypical networks were found to outperform matching networks (Vinyals et al. 2016) in 5-way, k-shot learning for \(k=1,5\) on Omniglot (Lake et al. 2011) and miniImageNet (Vinyals et al. 2016), even though they do not use complex task-specific embedding functions. Despite these advantages, prototypical networks are not readily applicable outside of supervised learning settings.

3.5 Relation networks

Source: Sung et al. (2018)

Relation network architecture. First, the embedding network \(f_{\varvec{\varphi }}\) embeds all inputs from the support set \(D^{tr}_{{\mathcal {T}}_{j}}\) (the five example inputs on the left), and the query input (below the \(f_{\varvec{\varphi }}\) block). All support set embeddings \(f_{\varvec{\varphi }}({\varvec{x}}_{i})\) are then concatenated to the query embedding \(f_{\varvec{\varphi }}({\varvec{x}})\). These concatenated embeddings are passed into a relation network \(g_{\varvec{\phi }}\), which computes a relation score for every pair \(({\varvec{x}}_{i}, {\varvec{x}})\). The class of the input \({\varvec{x}}_{i}\) that yields the largest relation score \(g_{\varvec{\phi }}([f_{\varvec{\varphi }}({\varvec{x}}), f_{\varvec{\varphi }}({\varvec{x}}_{i})])\) is then predicted.

In contrast to previously discussed metric-based techniques, Relation networks (Sung et al. 2018) employ a trainable similarity metric, instead of a pre-defined one (e.g. cosine similarity as used in matching networks (Vinyals et al. 2016)). More specifically, Relation networks consist of two chained neural network modules: the embedding network/module \(f_{\varvec{\varphi }}\) which is responsible for embedding inputs, and the relation network \(g_{\varvec{\phi }}\) which computes similarity scores between new inputs \({\varvec{x}}\) and example inputs \({\varvec{x}}_{i}\) of which we know the labels. A classification decision is then made by picking the class of the example input which yields the largest relation score (or similarity). Note that Relation networks thus do not use the idea of class prototypes, and simply compare new inputs \({\varvec{x}}\) to all example inputs \({\varvec{x}}_{i}\) in the support set, as done by, e.g., matching networks (Vinyals et al. 2016).

More formally, we are given a support set \(D^{tr}_{{\mathcal {T}}_{j}}\) with some examples \(({\varvec{x}}_{i}, y_{i})\), and a new (previously unseen) input \({\varvec{x}}\). Then, for every combination \(({\varvec{x}}, {\varvec{x}}_{i})\), the Relation network produces a concatenated embedding \([f_{\varvec{\varphi }}({\varvec{x}}), f_{\varvec{\varphi }}({\varvec{x}}_{i})]\), which is the vector obtained by concatenating the respective embeddings of \({\varvec{x}}\) and \({\varvec{x}}_{i}\). This concatenated embedding is then fed into the relation module \(g_{\varvec{\phi }}\). Finally, \(g_{\varvec{\phi }}\) computes the relation score between \({\varvec{x}}\) and \({\varvec{x}}_{i}\) as

\[ r_{i} = g_{\varvec{\phi }}([f_{\varvec{\varphi }}({\varvec{x}}), f_{\varvec{\varphi }}({\varvec{x}}_{i})]). \]

The predicted class is then \({\hat{y}} = y_{{{\,\mathrm{arg\,max}\,}}_{i} r_{i}}\). This entire process is shown in Fig. 9. Remarkably, Relation networks are trained with the Mean-Squared Error (MSE) between the relation scores and their targets (1 for pairs of the same class, 0 otherwise), rather than with the more standard cross-entropy loss. The MSE is then propagated backwards through the entire architecture (Fig. 9).
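The forward pass and the MSE objective can be sketched as below. All weights are hypothetical and untrained, the embedding and relation modules are tiny dense layers (Sung et al. (2018) use trained convolutional modules), and the loss is averaged over the support examples of a single query; these are simplifying assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical, untrained modules.
W_f = rng.normal(scale=0.5, size=(4, 2))   # embedding module f_varphi: R^2 -> R^4
W_1 = rng.normal(scale=0.5, size=(8, 8))   # relation module g_phi: hidden layer
w_2 = rng.normal(scale=0.5, size=8)        # relation module g_phi: output layer

def f_embed(x):
    return np.tanh(W_f @ x)

def g_relation(pair):
    h = np.maximum(W_1 @ pair, 0.0)                 # ReLU hidden layer
    return float(1.0 / (1.0 + np.exp(-(w_2 @ h))))  # sigmoid -> score in (0, 1)

def relation_scores(x, support_x):
    """r_i = g_phi([f(x), f(x_i)]) for every support input x_i."""
    fx = f_embed(x)
    return np.array([g_relation(np.concatenate([fx, f_embed(x_i)])) for x_i in support_x])

def mse_loss(scores, query_label, support_y):
    """MSE against targets 1 (same class as the query) and 0 (different class)."""
    targets = (support_y == query_label).astype(float)
    return float(np.mean((scores - targets) ** 2))

support_x = np.array([[0.0, -4.0], [-2.0, -4.0], [-2.0, 4.0], [6.0, 0.0]])
support_y = np.array([1, 2, 3, 4])
r = relation_scores(np.array([4.0, 0.5]), support_x)
y_hat = support_y[np.argmax(r)]      # y_hat = y_{argmax_i r_i}
loss = mse_loss(r, query_label=4, support_y=support_y)
```

During meta-training, the gradient of this loss would flow through both `g_relation` and `f_embed`, which is what lets the similarity function itself be learned.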

The key advantage of Relation networks is their expressive power, induced by the usage of a trainable similarity function. As a result, Relation networks yield better performance than the previously discussed techniques that use a fixed similarity metric.

3.6 Graph neural networks

Graph neural networks (Garcia and Bruna 2017) use a more general and flexible approach than previously discussed techniques for N-way, k-shot classification. As such, graph neural networks subsume Siamese (Koch et al. 2015) and prototypical networks (Snell et al. 2017). The graph neural network approach represents each task \({\mathcal {T}}_{j}\) as a fully-connected graph \(G = (V,E)\), where V is a set of nodes/vertices and E a set of edges connecting nodes. In this graph, nodes \({\varvec{v}}_{i}\) correspond to input embeddings \(f_{\varvec{\theta }}({\varvec{x}}_{i})\), concatenated with their one-hot encoded labels \(y_{i}\), i.e., \({\varvec{v}}_{i} = [f_{\varvec{\theta }}({\varvec{x}}_{i}), y_{i}]\). For inputs \({\varvec{x}}\) from the query set (for which we do not have the labels), a uniform prior over all N possible labels is used: \(y = [\frac{1}{N},\ldots ,\frac{1}{N}]\). Thus, each node contains an input and label section. Edges are weighted links that connect these nodes.

The graph neural network then propagates information in the graph using a number of local operators. The underlying idea is that label information can be transmitted from nodes for which we have the labels to nodes for which we have to predict labels. The choice of local operators is beyond the scope of this paper, and the reader is referred to Garcia and Bruna (2017) for details.

By exposing the graph neural network to various tasks \({\mathcal {T}}_{j}\), the propagation mechanism can be altered to improve the flow of label information in such a way that predictions become more accurate. As such, in addition to learning a good input representation function \(f_{\varvec{\theta }}\), graph neural networks also learn to propagate label information from labeled examples to unlabeled inputs.
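The node construction \({\varvec{v}}_{i} = [f_{\varvec{\theta }}({\varvec{x}}_{i}), y_{i}]\), with a uniform label prior for query nodes, and one round of propagation on the fully-connected graph can be sketched as follows. The propagation operator here is a loudly labeled stand-in, not the learned operator of Garcia and Bruna (2017): edge weights are a row-wise softmax over negative squared distances between node features, and `W` is a hypothetical random weight matrix. Raw inputs act as embeddings for simplicity.

```python
import numpy as np

def build_nodes(support_emb, support_y, query_emb, num_classes):
    """Node features v_i = [f(x_i), one-hot(y_i)]; query nodes use a uniform label prior."""
    support_nodes = np.concatenate([support_emb, np.eye(num_classes)[support_y]], axis=1)
    prior = np.full((len(query_emb), num_classes), 1.0 / num_classes)
    query_nodes = np.concatenate([query_emb, prior], axis=1)
    return np.concatenate([support_nodes, query_nodes], axis=0)

def propagate(V, W):
    """One illustrative propagation step (stand-in operator, see lead-in).

    Each node aggregates the features of all nodes, weighted by a row-wise softmax
    over negative squared distances, then applies a linear map W and a ReLU.
    """
    sq_dist = ((V[:, None, :] - V[None, :, :]) ** 2).sum(axis=-1)
    logits = -sq_dist
    A = np.exp(logits - logits.max(axis=1, keepdims=True))
    A /= A.sum(axis=1, keepdims=True)        # each row sums to 1
    return np.maximum(A @ V @ W, 0.0)

# Running example: raw inputs as embeddings, 4 classes (0-indexed), 1 query.
support_emb = np.array([[0.0, -4.0], [-2.0, -4.0], [-2.0, 4.0], [6.0, 0.0]])
support_y = np.array([0, 1, 2, 3])
query_emb = np.array([[4.0, 0.5]])
V = build_nodes(support_emb, support_y, query_emb, num_classes=4)   # shape (5, 6)
W = np.random.default_rng(0).normal(scale=0.5, size=(6, 6))        # hypothetical weights
V_next = propagate(V, W)
```

Because the label section is part of every node's features, each propagation step mixes label information from labeled nodes into the query node; meta-training would adjust the operator so that this mixing produces accurate predictions.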

Graph neural networks achieve good performance in few-shot settings (Garcia and Bruna 2017) and are also applicable in semi-supervised and active learning settings.

3.7 Attentive recurrent comparators

Source: Shyam et al. (2017)

Processing in an attentive recurrent comparator. At every time step, the model takes a glimpse of a part of an image and incorporates this information into the hidden state \(h_t\). The final hidden state after taking various glimpses of a pair of images is then used to compute a class similarity score.

Attentive recurrent comparators (Shyam et al. 2017) differ from previously discussed techniques as they do not compare inputs as a whole, but by parts. This approach is inspired by how humans would make a decision concerning the similarity of objects. That is, we shift our attention from one object to the other, and move back and forth to take glimpses of different parts of both objects. In this way, information of two objects is fused from the beginning, whereas other techniques (e.g., matching networks (Vinyals et al. 2016) and graph neural networks (Garcia and Bruna 2017)) only combine information at the end (after embedding both images) (Shyam et al. 2017).

Given two inputs \({\varvec{x}}_{i}\) and \({\varvec{x}}\), we repeatedly feed them in interleaved fashion into a recurrent neural network (controller): \({\varvec{x}}_{i}, {\varvec{x}},\ldots ,{\varvec{x}}_{i},{\varvec{x}}\). The entire sequence consists of g glimpses per image, i.e., 2g time steps. Thus, for \(t = 1, \ldots , 2g\), the image at time step t is given by \(I_{t} = {\varvec{x}}_{i}\) if t is odd, and \(I_{t} = {\varvec{x}}\) otherwise. Then, at each time step t, the attention mechanism focuses on a square region of the current image: \(G_{t} = attend(I_{t}, \varOmega _{t})\), where \(\varOmega _{t} = W_{g}h_{t-1}\) are attention parameters, which are computed from the previous hidden state \(h_{t-1}\). The next hidden state \(h_{t} = \text{ RNN }(G_{t}, h_{t-1})\) is computed from the glimpse at time t, i.e., \(G_{t}\), and the previous hidden state \(h_{t-1}\). After this sequence is fed into the recurrent neural network (indicated by RNN(\(\circ\))), the final hidden state \(h_{2g}\) is used as a combined representation of \({\varvec{x}}_{i}\) relative to \({\varvec{x}}\). This process is summarized in Fig. 10. Classification decisions can then be made by feeding the combined representations into a classifier. Optionally, the combined representations can be processed by bi-directional LSTMs before passing them to the classifier.
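The glimpse loop can be sketched as follows, with several simplifying assumptions: `attend` is a hard square crop whose position is derived from \(\varOmega _{t}\) (the original uses a differentiable attention window), the controller is a plain tanh RNN cell standing in for the recurrent controller, and all weights, the glimpse size `P`, and the hidden size `H` are hypothetical and untrained.

```python
import numpy as np

rng = np.random.default_rng(0)
H, P = 16, 4   # hidden size and glimpse side length in pixels (hypothetical)

# Hypothetical, untrained parameters.
W_g = rng.normal(scale=0.1, size=(2, H))       # Omega_t = W_g h_{t-1}
W_x = rng.normal(scale=0.1, size=(H, P * P))   # input weights of a simple tanh RNN
W_h = rng.normal(scale=0.1, size=(H, H))       # recurrent weights

def attend(image, omega):
    """Hard P x P crop; omega (2 numbers) is squashed to a valid top-left corner."""
    n = image.shape[0]
    row, col = np.round((np.tanh(omega) + 1.0) / 2.0 * (n - P)).astype(int)
    return image[row:row + P, col:col + P]

def rnn(glimpse, h_prev):
    """h_t = tanh(W_x G_t + W_h h_{t-1}), a stand-in for the recurrent controller."""
    return np.tanh(W_x @ glimpse.ravel() + W_h @ h_prev)

def arc(x_i, x, g):
    """Run 2g interleaved glimpses (x_i, x, ..., x_i, x) and return h_{2g}."""
    h = np.zeros(H)                          # h_0
    for t in range(1, 2 * g + 1):
        image = x_i if t % 2 == 1 else x     # I_t
        omega = W_g @ h                      # attention parameters from h_{t-1}
        glimpse = attend(image, omega)       # G_t = attend(I_t, Omega_t)
        h = rnn(glimpse, h)                  # h_t = RNN(G_t, h_{t-1})
    return h

img_a, img_b = rng.random((8, 8)), rng.random((8, 8))
h_final = arc(img_a, img_b, g=3)   # combined representation, fed to a classifier
```

Since the attention parameters at each step depend on everything seen so far, where the model looks in one image is influenced by what it saw in the other, which is how information from both inputs is fused from the start.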

The attention approach is biologically inspired, and biologically plausible. A downside of attentive recurrent comparators is the higher computational cost, while the performance is often not better than less biologically plausible techniques, such as graph neural networks (Garcia and Bruna 2017).

3.8 Metric-based techniques, in conclusion

In this section, we have seen various metric-based techniques. The metric-based techniques meta-learn an informative feature space that can be used to compute class predictions based on input similarity scores. Figure 11 shows the relationships between the various metric-based techniques that we have covered.

As we can see, Siamese networks (Koch et al. 2015) mark the beginning of metric-based, deep meta-learning techniques in few-shot learning settings. They are the first to use the idea of predicting classes by comparing inputs from the support and query sets. This idea was generalized in graph neural networks (GNNs) (Hamilton et al. 2017; Garcia and Bruna 2017) where the information flow between support and query inputs is parametric and thus more flexible. Matching networks (Vinyals et al. 2016) are directly inspired by Siamese networks as they use the same core idea (comparing inputs for making predictions), but directly train in the few-shot setting and use cosine similarity as a similarity function. Thus, the auxiliary, binary classification task used by Siamese networks is left out, and matching networks directly train on tasks. Prototypical networks (Snell et al. 2017) increase the robustness of input comparisons by comparing every query set input with a class prototype instead of individual support set examples. This reduces the number of required input comparisons for a single query input to N instead of \(k \cdot N\). Relation networks (Sung et al. 2018) replace the fixed, pre-defined similarity metrics used in matching and prototypical networks with a neural network, which allows for learning a domain-specific similarity function. Lastly, attentive recurrent comparators (ARCs) (Shyam et al. 2017) take a more biologically plausible approach by not comparing entire inputs but by taking multiple interleaved glimpses at various parts of the inputs that are being compared.

Key advantages of these metric-based techniques are that (i) the underlying idea of similarity-based predictions is conceptually simple, and (ii) they can be fast at test-time when tasks are small, as the networks do not need to make task-specific adjustments. However, when tasks at meta-test time become more distant from the tasks that were used at meta-train time, metric-learning techniques are unable to absorb new task information into the network weights. Consequently, performance may suffer.

Furthermore, when tasks become larger, pair-wise comparisons may become computationally expensive. Lastly, most metric-based techniques rely on the presence of labeled examples, which makes them inapplicable outside of supervised learning settings.

4 Model-based meta-learning

A different approach to Deep Meta-Learning is the model-based approach. On a high level, model-based techniques rely upon an adaptive, internal state, in contrast to metric-based techniques, which generally use a fixed neural network at test-time.

More specifically, model-based techniques maintain a stateful, internal representation of a task. When presented with a task, a model-based neural network processes the support set in a sequential fashion. At every time step, an input enters and alters the internal state of the model. Thus, the internal state can capture relevant task-specific information, which can be used to make predictions for new inputs.

Because the predictions are based on internal dynamics that are hidden from the outside, model-based techniques are also called black-boxes. Information from previous inputs must be remembered, which is why model-based techniques have a memory component, which can be either internal or external.

Recall that the mechanics of metric-based techniques were limited to pair-wise input comparisons. This is not the case for model-based techniques, where the human designer has the freedom to choose the internal dynamics of the algorithm. As a result, model-based techniques are not restricted to meta-learning good feature spaces, as they can also learn internal dynamics, used to process and predict input data of tasks.

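More formally, given a support set \(D^{tr}_{{\mathcal {T}}_{j}}\) corresponding to a task \({\mathcal {T}}_{j}\), model-based techniques compute a class probability distribution for a new input \({\varvec{x}}\) as

\[ p_{\varvec{\theta }}(Y | {\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}}) = f_{\varvec{\theta }}({\varvec{x}}, D^{tr}_{{\mathcal {T}}_{j}}), \]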

where f represents the black-box neural network model, and \(\varvec{\theta }\) its parameters.

4.1 Example

Using the same example as in Sect. 3, suppose we are given a task support set \(D^{tr}_{{\mathcal {T}}_{j}} = \{ ([0,-4], 1), ([-2,-4],2), ([-2,4],3), ([6,0], 4) \}\), where a tuple denotes a pair \(({\varvec{x}}_{i},y_{i})\). Furthermore, suppose our query set only contains one example \(D^{test}_{{\mathcal {T}}_{j}} = \{ ([4, 0.5], 4) \}\). This problem has been visualized in Fig. 5 (in Sect. 3). For the sake of the example, we do not use an input embedding function: our model will operate on the raw inputs of \(D^{tr}_{{\mathcal {T}}_{j}}\) and \(D^{test}_{{\mathcal {T}}_{j}}\). As an internal state, our model uses an external memory matrix \(M \in {\mathbb {R}}^{4 \times (2+1)}\), with four rows (one for each example in our support set), and three columns (the dimensionality of input vectors, plus one dimension for the correct label). Our model proceeds to process the support set in a sequential fashion, reading the examples from \(D^{tr}_{{\mathcal {T}}_{j}}\) one by one and storing the i-th example in the i-th row of the memory module. After processing the support set, the memory matrix contains all examples, and as such, serves as the internal task representation.

Given the new input [4, 0.5], our model could use many different techniques to make a prediction based on this representation. For simplicity, assume that it computes the dot product between \({\varvec{x}}\) and every memory M(i) (the 2-D vector in the i-th row of M, ignoring the correct label), and predicts the class of the input which yields the largest dot product. This would produce scores \(-2, -10, -6,\) and 24 for the examples in \(D^{tr}_{{\mathcal {T}}_{j}}\) respectively. Since the last example [6, 0] yields the largest dot product, we predict that class, i.e., 4.
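This toy model-based example can be written out directly: the support set is read sequentially into the memory matrix, and the prediction uses dot products with the stored inputs. It is a minimal sketch of the illustrative model in the text, not of any published technique.

```python
import numpy as np

support = [([0.0, -4.0], 1), ([-2.0, -4.0], 2), ([-2.0, 4.0], 3), ([6.0, 0.0], 4)]

# Memory matrix M in R^{4 x (2+1)}: row i stores the i-th input and its label.
M = np.zeros((4, 3))
for i, (x_i, y_i) in enumerate(support):   # read the support set one example at a time
    M[i, :2] = x_i
    M[i, 2] = y_i

x = np.array([4.0, 0.5])
scores = M[:, :2] @ x                      # dot product with each stored input (label ignored)
prediction = int(M[np.argmax(scores), 2])  # label column of the best-matching row
print(prediction)  # 4
```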

Note that this example could be seen as a metric-based technique where the dot product is used as a similarity function. However, the reason that this technique is model-based is that it stores the entire task inside a memory module. This example was deliberately easy for illustrative purposes. More advanced and successful techniques have been proposed, which we will now cover.

4.2 Recurrent meta-learners

Recurrent meta-learners (Duan et al. 2016; Wang et al. 2016) are, as the name suggests, meta-learners based on recurrent neural networks. The recurrent network serves as dynamic task embedding storage. These recurrent meta-learners were specifically proposed for reinforcement learning problems, hence we will explain them in that setting.

The recurrence is implemented by e.g. an LSTM (Wang et al. 2016) or a GRU (Duan et al. 2016). The internal dynamics of the chosen Recurrent Neural Network (RNN) allow for fast adaptation to new tasks, while the algorithm used to train the recurrent network gradually accumulates knowledge about the task structure, where each task is modelled as an episode (or set of episodes).

The idea of recurrent meta-learners is quite simple. That is, given a task \({\mathcal {T}}_{j}\), we feed the (potentially processed) environment variables \([s_{t+1},a_{t},r_{t},d_{t}]\) (see Sect. 2.1.3) into an RNN at every time step t. Recall that s, a, r, d denote the state, action, reward, and termination flag, respectively. At every time step t, the RNN updates its hidden state \(h_{t}\) and, conditioned on this hidden state, outputs the next action \(a_{t+1}\). The goal is to maximize the expected reward in each trial. See Fig. 12 for a visual depiction. From this figure, it also becomes clear why these techniques are model-based. That is, they embed information from previously seen inputs in the hidden state.
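The interaction loop of a recurrent meta-learner within one trial can be sketched on a toy Bernoulli multi-armed bandit. Everything here is a hypothetical illustration: the weights are random and untrained, a tanh cell stands in for the LSTM or GRU, and meta-training across many tasks with a reinforcement learning algorithm is omitted. The key point is that the hidden state is never reset within a trial, so it can accumulate task-specific information.

```python
import numpy as np

rng = np.random.default_rng(0)
K, H = 2, 8               # number of bandit arms and hidden size (hypothetical)
IN = 1 + K + 1 + 1        # input [s, one-hot a, r, d]

# Hypothetical, untrained weights.
W_in = rng.normal(scale=0.3, size=(H, IN))
W_hh = rng.normal(scale=0.3, size=(H, H))
W_pi = rng.normal(scale=0.3, size=(K, H))

def rnn_step(inp, h):
    return np.tanh(W_in @ inp + W_hh @ h)    # stand-in for an LSTM/GRU cell

def policy(h):
    logits = W_pi @ h
    p = np.exp(logits - logits.max())
    return p / p.sum()

def run_trial(arm_probs, num_pulls):
    """One trial on one task (a Bernoulli bandit); h is never reset inside the trial."""
    h = np.zeros(H)
    a, r, d = np.zeros(K), 0.0, 0.0     # no previous action/reward at the start
    total = 0.0
    for _ in range(num_pulls):
        s = 0.0                                       # bandits have a single dummy state
        h = rnn_step(np.concatenate([[s], a, [r], [d]]), h)
        action = rng.choice(K, p=policy(h))           # next action, conditioned on h
        r = float(rng.random() < arm_probs[action])   # Bernoulli reward
        a, d = np.eye(K)[action], 1.0                 # each pull is a one-step episode
        total += r
    return total

task = rng.random(K)          # a new task: unknown arm success probabilities
reward = run_trial(task, num_pulls=20)
```

After meta-training, the same loop would let the RNN infer which arm is better from the rewards it has observed so far, without any weight updates inside the trial.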

Recurrent meta-learners have been shown to perform almost as well as asymptotically optimal algorithms on simple reinforcement learning tasks (Wang et al. 2016; Duan et al. 2016). However, their performance degrades in more complex settings, where temporal dependencies can span a longer horizon. Making recurrent meta-learners better at such complex tasks is a direction for future research.

4.3 Memory-augmented neural networks (MANNs)

The key idea of memory-augmented neural networks (MANNs) (Santoro et al. 2016) is to enable neural networks to learn quickly with the help of an external memory. The main controller (the recurrent neural network interacting with the memory) then gradually accumulates knowledge across tasks, while the external memory allows for quick task-specific adaptation. For this, Santoro et al. (2016) used Neural Turing Machines (Graves et al. 2014). Here, the controller is parameterized by \(\varvec{\theta }\) and acts as the long-term memory of the memory-augmented neural network, while the external memory module is the short-term memory.

The workflow of memory-augmented neural networks is displayed in Fig. 13. Note that the data from a task is processed as a sequence, i.e., data are fed into the network one by one. The support set is fed into the memory-augmented neural network first. Afterwards, the query set is processed. During the meta-train phase, training tasks can be fed into the network in arbitrary order. At time step t, the model receives input \({\varvec{x}}_{t}\) with the label of the previous input, i.e., \(y_{t-1}\). This was done to prevent the network from mapping class labels directly to the output (Santoro et al. 2016).

Source: Santoro et al. (2016)

Workflow of memory-augmented neural networks. Here, an episode corresponds to a given task \({\mathcal {T}}_j\). After every episode, the order of labels, classes, and samples should be shuffled to minimize dependence on arbitrarily assigned orders.

Source: Santoro et al. (2016)

Controller-memory interaction in memory-augmented neural networks.

The interaction between the controller and memory is visualized in Fig. 14. The idea is that the external memory module, containing representations of previously seen inputs, can be used to make predictions for new inputs. In short, previously obtained knowledge is leveraged to aid the classification of new inputs. Note that conventional neural networks also attempt to do this; however, their prior knowledge is slowly accumulated into the network weights, while an external memory module can directly store such information.

Given an input \({\varvec{x}}_{t}\) at time t, the controller generates a key \({\varvec{k}}_{t}\), which can be stored in memory matrix M and can be used to retrieve previous representations from memory matrix M. When reading from memory, the aim is to produce a linear combination of stored keys in memory matrix M, giving greater weight to those which have a larger cosine similarity with the current key \({\varvec{k}}_{t}\). More specifically, a read vector \({\varvec{w}}^{r}_{t}\) is created by applying a softmax over the cosine similarities between key \({\varvec{k}}_{t}\) and the memories (from previous inputs) stored in the rows \(M_{t}(i)\), so that entry i is larger when row i is more similar to the key. Then, the representation \({\varvec{r}}_{t} = \sum _{i}w_{t}^{r}(i)M_{t}(i)\) is retrieved, which is simply a linear combination of all keys (i.e., rows) in memory matrix M.
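The content-based read can be sketched as below: a softmax over cosine similarities between the key and each memory row gives \({\varvec{w}}^{r}_{t}\), and the read representation is the weighted sum of rows. The small memory matrix and key are hypothetical values chosen so the result is easy to inspect; `eps` only guards against division by zero.

```python
import numpy as np

def read(k, M, eps=1e-8):
    """Content-based read: softmax over cosine similarities between key k and rows of M."""
    cos = (M @ k) / (np.linalg.norm(M, axis=1) * np.linalg.norm(k) + eps)
    w_r = np.exp(cos - cos.max())
    w_r /= w_r.sum()            # read weights w^r_t, sum to 1
    r = w_r @ M                 # r_t = sum_i w^r_t(i) M_t(i)
    return w_r, r

M = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0], [1.0, 1.0, 0.0]])
w_r, r = read(np.array([0.0, 0.0, 2.0]), M)
```

The key points in the same direction as row 2, so that row receives the largest read weight, although the other rows still contribute because cosine similarities lie in [-1, 1].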

Predictions are made as follows. Given an input \({\varvec{x}}_{t}\), memory-augmented neural networks use the external memory to compute the corresponding representation \({\varvec{r}}_{t}\), which could be fed into a softmax layer, resulting in class probabilities. Across tasks, memory-augmented neural networks learn a good input embedding function \(f_{\varvec{\theta }}\) and classifier weights, which can be exploited when presented with new tasks.

To write input representations to memory, Santoro et al. (2016) propose a new mechanism called Least Recently Used Access (LRUA). LRUA writes either to the least recently used or to the most recently used memory location. In the former case, it preserves recent memories, and in the latter, it updates recently obtained information. The writing mechanism works by keeping track of how often every memory location is accessed in a usage vector \({\varvec{w}}_{t}^{u}\), which is updated at every time step according to the following update rule: \({\varvec{w}}_{t}^{u} := \gamma {\varvec{w}}^{u}_{t-1} + {\varvec{w}}_{t}^{r} + {\varvec{w}}_{t}^{w}\), where superscripts u, w and r refer to usage, write and read vectors, respectively. In words, the previous usage vector is decayed (using parameter \(\gamma\)), while current reads (\({\varvec{w}}_{t}^{r}\)) and writes (\({\varvec{w}}_{t}^{w}\)) are added to the usage. Let n be the total number of reads to memory, and \(\ell u(n)\) (\(\ell u\) for ‘least used’) be the n-th smallest value in the usage vector \({\varvec{w}}^{u}_{t}\). Then, the least-used weights are defined as follows:

\[ w^{\ell u}_{t}(i) = \begin{cases} 0 & \text{if } w^{u}_{t}(i) > \ell u(n), \\ 1 & \text{otherwise}. \end{cases} \]

Then, the write vector \({\varvec{w}}_{t}^{w}\) is computed as \({\varvec{w}}^{w}_{t} = \sigma (\alpha ) {\varvec{w}}^{r}_{t-1} + (1 - \sigma (\alpha )){\varvec{w}}^{\ell u}_{t-1}\), where \(\alpha\) is a parameter that interpolates between the two weight vectors. As such, if \(\sigma (\alpha ) = 1\), we write to the most recently used memory, whereas when \(\sigma (\alpha ) = 0\), we write to the least recently used memory locations. Finally, writing is performed as follows: \(M_{t}(i) := M_{t-1}(i) + w_{t}^{w}(i){\varvec{k}}_{t}\), for all i.
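The LRUA bookkeeping (usage update, least-used weights, write weights, and the additive write) can be sketched with NumPy. This is a minimal illustration of the formulas above under hypothetical values; it omits any further details of the original implementation. With a strongly negative \(\alpha\) the gate \(\sigma (\alpha )\) is close to 0, so the key is written almost entirely to the least-used slot.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def update_usage(w_u_prev, w_r, w_w, gamma=0.95):
    """w^u_t = gamma * w^u_{t-1} + w^r_t + w^w_t."""
    return gamma * w_u_prev + w_r + w_w

def least_used_weights(w_u, n=1):
    """w^lu(i) = 1 if w_u(i) <= lu(n), the n-th smallest usage, else 0 (ties all get 1)."""
    lu_n = np.sort(w_u)[n - 1]
    return (w_u <= lu_n).astype(float)

def lrua_write(M, k, w_r_prev, w_lu_prev, alpha):
    """w^w_t = sigmoid(alpha) w^r_{t-1} + (1 - sigmoid(alpha)) w^lu_{t-1};
    then M_t(i) = M_{t-1}(i) + w^w_t(i) k_t for every row i."""
    gate = sigmoid(alpha)
    w_w = gate * w_r_prev + (1.0 - gate) * w_lu_prev
    return M + np.outer(w_w, k), w_w

M = np.zeros((4, 3))
k = np.array([0.2, -0.5, 1.0])
w_u_prev = np.array([0.5, 0.1, 0.9, 0.3])     # slot 1 is least used
w_r_prev = np.array([1.0, 0.0, 0.0, 0.0])     # slot 0 was read last
w_lu_prev = least_used_weights(w_u_prev)       # [0, 1, 0, 0]
M_new, w_w = lrua_write(M, k, w_r_prev, w_lu_prev, alpha=-20.0)  # gate ~ 0
```

Setting `alpha=20.0` instead would route the write to slot 0, the most recently read location, which corresponds to updating recently obtained information.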

In summary, memory-augmented neural networks (Santoro et al. 2016) combine external memory and a neural network to achieve meta-learning. The interaction between a controller, with long-term memory parameters \(\varvec{\theta }\), and memory M, may also be interesting for studying human meta-learning (Santoro et al. 2016). In contrast to many metric-based techniques, this model-based technique is applicable to both classification and regression problems. A downside of this approach is the architectural complexity.

4.4 Meta networks

Fig. 15 The architecture of a Meta Network. Source: Munkhdalai and Yu (2017)

Meta networks are divided into two distinct subsystems, each consisting of neural networks: the base-learner and the meta-learner (in memory-augmented neural networks, by contrast, the base- and meta-components are intertwined). The base-learner is responsible for performing tasks and for providing the meta-learner with meta-information, such as loss gradients. The meta-learner then computes fast task-specific weights for itself and for the base-learner, so that together they perform better on the given task \({\mathcal {T}}_{j} = (D^{tr}_{{\mathcal {T}}_{j}}, D^{test}_{{\mathcal {T}}_{j}})\). This workflow is depicted in Fig. 15.

The meta-learner consists of neural networks \(u_{\varvec{\phi }}, m_{\varvec{\varphi }}\), and \(d_{\varvec{\psi }}\). Network \(u_{\varvec{\phi }}\) is used as an input representation function. Networks \(d_{\varvec{\psi }}\) and \(m_{\varvec{\varphi }}\) compute task-specific weights \(\varvec{\phi }^{*}\) and example-level fast weights \(\varvec{\theta }^{*}\), respectively. Lastly, \(b_{\varvec{\theta }}\) is the base-learner, which makes predictions for inputs. Throughout, the term fast weights refers to task- or input-specific versions of slow (initial) weights.

In a similar fashion to memory-augmented neural networks (Santoro et al. 2016), meta networks (Munkhdalai and Yu 2017) also leverage an external memory module. However, meta networks use the memory for a different purpose. For each observation \({\varvec{x}}_i\) in the support set, the memory stores two components: its representation \({\varvec{r}}_i\) and its fast weights \(\varvec{\theta }_i^*\). For a new input, the stored representations are used to compute attention over the support set, and this attention is then used to combine the stored fast weights into fast weights for that input.

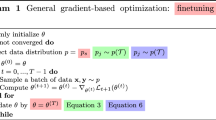

The pseudocode for meta networks is displayed in Algorithm 1. First, a sample of the support set is created (line 1), which is used to compute task-specific weights \(\varvec{\phi }^{*}\) for the representation network \(u_{\varvec{\phi }}\) (lines 2-5). Note that \(u_{\varvec{\phi }}\) has two roles: (i) it computes a representation for inputs \({\varvec{x}}_{i}\) (lines 10 and 15), and (ii) it makes predictions for inputs \({\varvec{x}}_{i}\) in order to compute a loss (line 3). To achieve both goals, a conventional neural network that makes class predictions can be used, with the states of its final hidden layer serving as representations. Typically, the cross-entropy is calculated over the predictions of representation network \(u_{\varvec{\phi }}\). When there are multiple examples per class in the support set, an alternative is to use a contrastive loss function (Munkhdalai and Yu 2017).

Then, meta networks iterate over every example \(({\varvec{x}}_{i}, y_{i})\) in the support set \(D^{tr}_{{\mathcal {T}}_{j}}\). The base-learner \(b_{\varvec{\theta }}\) attempts to make class predictions for these examples, resulting in loss values \({\mathcal {L}}_{i}\) (line 7). The gradients of these losses are used to compute fast weights \(\varvec{\theta }_{i}^{*}\) for example i (line 8), which are then stored in the i-th row of memory matrix M (line 9). Additionally, input representations \({\varvec{r}}_{i}\) are computed and stored in memory matrix R (lines 10-11).

Now, meta networks are ready to address the query set \(D^{test}_{{\mathcal {T}}_{j}}\). They iterate over every example \(({\varvec{x}}, y)\) and compute a representation \({\varvec{r}}\) of it (line 15). This representation is matched against the representations of the support set, which are stored in memory matrix R. This matching yields a similarity vector \({\varvec{a}}\), where every entry k denotes the similarity between input representation \({\varvec{r}}\) and the k-th row of memory matrix R, i.e., R(k) (line 16). A softmax over this similarity vector normalizes the entries. The resulting vector is used to compute a linear combination of the fast weights that were generated for inputs in the support set, i.e., the rows of M (line 17). These weights \(\varvec{\theta }^{*}\) are specific to input \({\varvec{x}}\) in the query set and can be used by the base-learner b to make predictions for that input (line 18). The observed error is added to the task loss. After the entire query set is processed, all involved parameters can be updated using backpropagation (line 20).
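The query-side retrieval (lines 16 and 17 of Algorithm 1) can be sketched as follows. This is an illustrative Python sketch, not the authors' implementation: it assumes cosine similarity as the similarity measure and treats each row of M as a flattened fast-weight vector; `query_fast_weights` is a hypothetical name.

```python
import math
from typing import List

Vector = List[float]


def cosine_similarity(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def softmax(scores: Vector) -> Vector:
    top = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def query_fast_weights(r: Vector, R: List[Vector], M: List[Vector]) -> Vector:
    """Fast weights theta* for a query with representation r.

    R[k] is the stored representation of support example k and M[k] its
    (flattened) fast weights theta*_k.
    """
    a = [cosine_similarity(r, r_k) for r_k in R]  # similarity vector (line 16)
    p = softmax(a)  # normalized attention over the support set
    # Linear combination of the stored fast weights (line 17).
    return [sum(p_k * M[k][j] for k, p_k in enumerate(p)) for j in range(len(M[0]))]
```

Because the softmax weights sum to one, the result is a convex combination of the stored fast weights, so a query that closely resembles a single support example mostly inherits that example's fast weights.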

Fig. 16 The layer augmentation setup used to combine slow and fast weights. Source: Munkhdalai and Yu (2017)

Note that some neural networks use both slow- and fast-weights at the same time. Munkhdalai and Yu (2017) use a so-called augmentation setup for this, as depicted in Fig. 16.
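A minimal sketch of such an augmented layer, under our reading of Munkhdalai and Yu (2017): the input is transformed separately by the slow weights and by the fast weights, each result passes through a ReLU, and the two activation vectors are summed element-wise. Biases are omitted, and the function names are hypothetical.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def relu(v: Vector) -> Vector:
    return [max(0.0, a) for a in v]


def augmented_layer(x: Vector, W_slow: Matrix, W_fast: Matrix) -> Vector:
    """Slow and fast paths each get their own ReLU; outputs are summed element-wise."""
    slow_out = relu(matvec(W_slow, x))
    fast_out = relu(matvec(W_fast, x))
    return [s + f for s, f in zip(slow_out, fast_out)]
```

Setting all fast weights to zero recovers the plain slow-weight layer, which is one way to view the fast weights as a task- or input-specific correction on top of the slow weights.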

In short, meta networks rely on a reparameterization of the meta- and base-learner for every task. Despite the flexibility and applicability to both supervised and reinforcement learning settings, the approach is quite complex. It consists of many components, each with its own set of parameters, which can be a burden on memory usage and computation time. Additionally, finding the correct architecture for all the involved components can be time-consuming.

4.5 Simple neural attentive meta-learner (SNAIL)

Instead of an external memory matrix, SNAIL (Mishra et al. 2018) relies on a special model architecture to serve as memory. Mishra et al. (2018) argue that Recurrent Neural Networks are unsuited for this purpose, as they have limited memory capacity and cannot pinpoint specific prior experiences. Hence, SNAIL uses a different architecture, consisting of 1D temporal convolutions (Oord et al. 2016) and a soft attention mechanism (Vaswani et al. 2017). The temporal convolutions allow for ‘high bandwidth’ memory access, and the attention mechanism allows the model to pinpoint specific experiences. Figure 17 visualizes the architecture and workflow of SNAIL for supervised learning problems. The figure also shows why this technique is model-based: model outputs are based upon the internal state, which is computed from earlier inputs.

Fig. 17 Architecture and workflow of SNAIL for supervised and reinforcement learning settings. The input layer is red, temporal convolution blocks are orange, and attention blocks are green. Source: Mishra et al. (2018). (Color figure online)

SNAIL consists of three building blocks. The first is the DenseBlock, which applies a single 1D convolution to the input and concatenates the result with the input along the feature (horizontal) dimension. The second is the TCBlock, which is simply a series of DenseBlocks with an exponentially increasing dilation rate of the temporal convolutions (Mishra et al. 2018). The dilation is the temporal distance between the nodes that a convolution connects. For example, with a dilation of 2, a node at position p in layer L receives the activation of node \(p-2\) in layer \(L-1\). The third block is the AttentionBlock, which learns to focus on the important parts of prior experience.
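The DenseBlock and TCBlock can be sketched in plain Python to show how causality and dilation interact. This is a simplified illustration rather than the SNAIL implementation: it uses causal convolutions with kernel size 2, a tanh nonlinearity, a dilation schedule of 1, 2, 4, and so on, and caller-supplied filters; all function names and the `make_filters` helper are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Filter = Tuple[Vector, Vector]  # (weights for x[p - dilation], weights for x[p])


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def causal_conv(seq: List[Vector], filters: List[Filter], dilation: int) -> List[Vector]:
    """Kernel-size-2 dilated causal convolution: output p sees only inputs p and p - dilation."""
    zero = [0.0] * len(seq[0])
    out = []
    for p, now in enumerate(seq):
        past = seq[p - dilation] if p >= dilation else zero  # zero-pad before the start
        out.append([dot(w_past, past) + dot(w_now, now) for w_past, w_now in filters])
    return out


def dense_block(seq: List[Vector], filters: List[Filter], dilation: int) -> List[Vector]:
    """Convolve, apply a nonlinearity, and concatenate the result to the input features."""
    conv = causal_conv(seq, filters, dilation)
    return [x + [math.tanh(c) for c in row] for x, row in zip(seq, conv)]


def tc_block(seq: List[Vector], n_filters: int,
             make_filters: Callable[[int, int], List[Filter]]) -> List[Vector]:
    """Stack DenseBlocks with dilations 1, 2, 4, ... until they span the whole sequence."""
    for i in range(math.ceil(math.log2(len(seq)))):
        filters = make_filters(len(seq[0]), n_filters)  # input width grows after every block
        seq = dense_block(seq, filters, dilation=2 ** i)
    return seq
```

Each DenseBlock widens the feature dimension by the number of filters, while the output at position p never depends on inputs after p, so the model can be run over a task sequence without seeing future examples.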

In a similar fashion to memory-augmented neural networks (Santoro et al. 2016) (Sect. 4.3), SNAIL also processes task data in sequence, as shown in Fig. 17. However, the input at time t is accompanied by the label at time t, instead of \(t-1\) (as was the case for memory-augmented neural networks). SNAIL learns internal dynamics from seeing various tasks so that it can make good predictions on the query set, conditioned upon the support set.
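The difference in how the two models pair inputs with labels can be illustrated with a small sketch that builds both input sequences from one-hot labels. The zero label vector for the query and the function names are assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def one_hot(label: int, n_classes: int) -> Vector:
    v = [0.0] * n_classes
    v[label] = 1.0
    return v


def snail_sequence(support: Sequence[Tuple[Vector, int]], query_x: Vector,
                   n_classes: int) -> List[Vector]:
    """SNAIL-style: each support input is paired with its own label (time t)."""
    seq = [x + one_hot(y, n_classes) for x, y in support]
    seq.append(query_x + [0.0] * n_classes)  # the query's label is unknown
    return seq


def mann_sequence(examples: Sequence[Tuple[Vector, int]], n_classes: int) -> List[Vector]:
    """Memory-augmented style: input x_t is paired with the previous label y_{t-1}."""
    seq, prev = [], [0.0] * n_classes
    for x, y in examples:
        seq.append(x + prev)
        prev = one_hot(y, n_classes)
    return seq
```

In the SNAIL-style sequence, every support input carries its own label and only the final query position lacks one; in the memory-augmented variant, each label arrives one step after its input.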

A key advantage of SNAIL is that it can be applied to both supervised and reinforcement learning tasks. In addition, it achieves good performance compared to previously discussed techniques. A downside of SNAIL is that finding the correct architecture of TCBlocks and DenseBlocks can be time-consuming.

4.6 Conditional neural processes (CNPs)

Fig. 18 A schematic view of how conditional neural processes work. Here, h denotes a network outputting a representation for an observation, a denotes an aggregation function for these representations, and g denotes a neural network that makes predictions for unlabelled observations, based on the aggregated representation. Source: Garnelo et al. (2018)

In contrast to previous techniques, a conditional neural process (CNP) (Garnelo et al. 2018) does not rely on an external memory module. Instead, it aggregates the support set into a single latent representation. The general architecture is shown in Fig. 18. As we can see, the conditional neural process operates in three phases on task \({\mathcal {T}}_{j}\). First, it observes the support set \(D^{tr}_{{\mathcal {T}}_{j}}\), including the ground-truth outputs \(y_{i}\). Examples \(({\varvec{x}}_{i},y_{i}) \in D^{tr}_{{\mathcal {T}}_{j}}\) are embedded using a neural network \(h_{\varvec{\theta }}\) into representations \({\varvec{r}}_{i}\). Second, these representations are aggregated using operator a to produce a single representation \({\varvec{r}}\) of \(D^{tr}_{{\mathcal {T}}_{j}}\) (hence it is model-based). Third, a neural network \(g_{\varvec{\phi }}\) takes this single representation \({\varvec{r}}\) together with new inputs \({\varvec{x}}\) and produces predictions \({\hat{y}}\).
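The three phases can be sketched as follows. The networks \(h_{\varvec{\theta }}\) and \(g_{\varvec{\phi }}\) are replaced by hypothetical hand-written functions `h` and `g`, and the aggregator a is taken to be the mean, one order-invariant choice; in practice h and g are neural networks trained end to end.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]


def cnp_predict(
    support: Sequence[Tuple[Vector, Vector]],
    queries: Sequence[Vector],
    h: Callable[[Vector, Vector], Vector],
    g: Callable[[Vector, Vector], Vector],
) -> List[Vector]:
    # Phase 1: embed each labelled support example (x_i, y_i) into r_i.
    reps = [h(x, y) for x, y in support]
    # Phase 2: aggregate with the mean, which ignores the order of the examples.
    r = [sum(col) / len(reps) for col in zip(*reps)]
    # Phase 3: predict for each new input, conditioned on the single representation r.
    return [g(r, x) for x in queries]


# Hypothetical hand-written stand-ins for the networks h and g.
def h(x: Vector, y: Vector) -> Vector:
    return [y[0] - x[0]]  # encodes the offset between output and input


def g(r: Vector, x: Vector) -> Vector:
    return [x[0] + r[0]]
```

Because the mean ignores the order of the support examples, shuffling the support set leaves the prediction unchanged; for the toy task y = x + 3 with support inputs 1 and 2, a query at x = 10 is predicted as 13 in either order.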

Let the entire conditional neural process model be denoted by \(Q_{\varvec{\varTheta }}\), where \(\varTheta\) is the set of all involved parameters \(\{ \varvec{\theta }, \varvec{\phi } \}\). The training process differs from that of other techniques. Let \({\varvec{x}}_{{\mathcal {T}}_{j}}\) and \({\varvec{y}}_{{\mathcal {T}}_{j}}\) denote all inputs and corresponding outputs in \(D_{{\mathcal {T}}_{j}}^{tr}\). Then, the first \(\ell \backsim U(0,\ldots , k \cdot N -1)\) examples in \(D^{tr}_{{\mathcal {T}}_{j}}\) are used as a conditioning set \(D^{c}_{{\mathcal {T}}_{j}}\) (effectively splitting the support set into a true training set and a validation set). Given a value of \(\ell\), the goal is to maximize the log-likelihood of the labels \({\varvec{y}}_{{\mathcal {T}}_{j}}\) in the entire support set \(D^{tr}_{{\mathcal {T}}_{j}}\), or equivalently, to minimize the negative log-likelihood:

$${\mathcal {L}}(\varvec{\varTheta }) = -{\mathbb {E}}_{{\mathcal {T}}_{j} \backsim p({\mathcal {T}})}\left[ {\mathbb {E}}_{\ell \backsim U(0,\ldots , k \cdot N -1)} \left( \log Q_{\varvec{\varTheta }}\left( {\varvec{y}}_{{\mathcal {T}}_{j}} \mid D^{c}_{{\mathcal {T}}_{j}}, {\varvec{x}}_{{\mathcal {T}}_{j}}\right) \right) \right]$$

Conditional neural processes are trained by repeatedly sampling various tasks and values of \(\ell\), and propagating the observed loss backwards.
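The loss for a single sampled task can be sketched for a scalar regression problem in which the model outputs the mean and variance of a Gaussian over y. The Gaussian likelihood, the toy model `toy_model`, and the handling of an empty conditioning set (\(\ell = 0\)) are assumptions of this sketch rather than details from the source; all function names are hypothetical.

```python
import math
import random
from typing import Callable, List, Tuple

Pair = Tuple[float, float]
Model = Callable[[List[Pair], float], Tuple[float, float]]  # (context, x) -> (mean, variance)


def gaussian_nll(y: float, mean: float, var: float) -> float:
    return 0.5 * (math.log(2 * math.pi * var) + (y - mean) ** 2 / var)


def conditioned_nll(support: List[Pair], model: Model, ell: int) -> float:
    """Condition on the first ell examples; score the labels of the whole support set."""
    context = support[:ell]
    return sum(gaussian_nll(y, *model(context, x)) for x, y in support)


def episode_loss(support: List[Pair], model: Model, rng: random.Random) -> float:
    ell = rng.randrange(len(support))  # ell ~ U(0, ..., k*N - 1), with k*N = len(support)
    return conditioned_nll(support, model, ell)


def toy_model(context: List[Pair], x: float) -> Tuple[float, float]:
    """Hypothetical stand-in for Q_Theta: predicts x plus the mean offset seen in the context."""
    offset = sum(y - cx for cx, y in context) / len(context) if context else 0.0
    return x + offset, 1.0
```

A full training loop would average `episode_loss` over many sampled tasks and values of \(\ell\) and backpropagate through \(Q_{\varvec{\varTheta }}\); the toy model has no trainable parameters, so it only illustrates how the loss is formed.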

In summary, conditional neural processes use compact representations of previously seen inputs to aid the classification of new observations. Despite their simplicity and elegance, conditional neural processes are often outperformed in few-shot settings by other techniques, such as matching networks (Vinyals et al. 2016) (see Sect. 3.3).

4.7 Neural statistician

A neural statistician (Edwards and Storkey 2017) differs from earlier approaches in that it learns to compute summary statistics, or meta-features, of data sets in an unsupervised manner. These latent embeddings (which make the approach model-based) can later be used for making predictions. Although the model is broadly applicable, we discuss it in the context of Deep Meta-Learning.

A neural statistician performs both learning and inference. In the learning phase, the model attempts to produce generative models \({\hat{P}}_{i}\) for every data set \(D_{i}\). The key assumption made by Edwards and Storkey (2017) is that there exists a generative process \(P_{i}\) which, conditioned on a latent context vector \({\varvec{c}}_{i}\), can produce a data set \(D_{i}\). At inference time, the goal is to infer a (posterior) probability distribution over the context \(q({\varvec{c}}|D)\).

The model uses a variational autoencoder, which consists of an encoder and a decoder. The encoder is responsible for producing a distribution over latent vectors \({\varvec{z}}\): \(q({\varvec{z}}|{\varvec{x}}; \varvec{\phi })\), where \({\varvec{x}}\) is an input vector, and \(\varvec{\phi }\) are the encoder parameters. The encoded input \({\varvec{z}}\), which is often of lower dimensionality than the original input \({\varvec{x}}\), can then be decoded by the decoder \(p({\varvec{x}}|{\varvec{z}};\varvec{\theta })\). Here, \(\varvec{\theta }\) are the parameters of the decoder. To capture more complex patterns in data sets, the model uses multiple latent layers \({\varvec{z}}_{1},\ldots ,{\varvec{z}}_{L}\), as shown in Fig. 19. Given this architecture, the (approximate) posterior over \({\varvec{c}}\) and \({\varvec{z}}_{1},\ldots ,{\varvec{z}}_{L}\) (shorthand \({\varvec{z}}_{1:L}\)) is given by

$$q({\varvec{c}}, {\varvec{z}}_{1:L} | D; \varvec{\phi }) = q({\varvec{c}}|D;\varvec{\phi }) \prod _{{\varvec{x}} \in D} q({\varvec{z}}_{L}|{\varvec{x}}, {\varvec{c}};\varvec{\phi }) \prod _{i=1}^{L-1} q({\varvec{z}}_{i}|{\varvec{z}}_{i+1}, {\varvec{x}}, {\varvec{c}};\varvec{\phi })$$

The neural statistician is trained to minimize a three-component loss function, consisting of the reconstruction loss (how well it models the data), the context loss (how well the inferred context \(q({\varvec{c}}|D;\varvec{\phi })\) corresponds to the prior \(P({\varvec{c}})\)), and the latent loss (how well the inferred latent variables \({\varvec{z}}_{i}\) are modelled).

This model can be applied to N-way, few-shot learning as follows. Construct N data sets, one for each of the N classes, such that each data set contains only examples of a single class. The neural statistician is then provided with a new input \({\varvec{x}}\) and has to predict its class. It computes a context posterior \(N_{{\varvec{x}}} = q({\varvec{c}}|{\varvec{x}};\varvec{\phi })\) for the new input \({\varvec{x}}\). In a similar fashion, context posteriors \(N_{i} = q({\varvec{c}}|D_{i};\varvec{\phi })\) are computed for all of the data sets. Lastly, it assigns the label i for which the difference between \(N_{i}\) and \(N_{{\varvec{x}}}\) is minimal.
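The final classification step can be sketched as follows, assuming diagonal Gaussian context posteriors and using the Kullback-Leibler divergence as the measure of difference; to our understanding this matches Edwards and Storkey (2017), but the choice should be treated as an assumption. In the full model, the posteriors come from the trained encoder; here they are supplied directly, and the function names are hypothetical.

```python
import math
from typing import List, Tuple

Gaussian = Tuple[List[float], List[float]]  # (means, variances) of a diagonal Gaussian


def kl_diag_gaussians(p: Gaussian, q: Gaussian) -> float:
    """KL(p || q) between two diagonal Gaussians, summed over dimensions."""
    (mu_p, var_p), (mu_q, var_q) = p, q
    return sum(
        0.5 * math.log(vq / vp) + (vp + (mp - mq) ** 2) / (2.0 * vq) - 0.5
        for mp, vp, mq, vq in zip(mu_p, var_p, mu_q, var_q)
    )


def classify(query_posterior: Gaussian, class_posteriors: List[Gaussian]) -> int:
    """Return the index i whose context posterior N_i is closest to N_x."""
    divergences = [kl_diag_gaussians(query_posterior, n_i) for n_i in class_posteriors]
    return min(range(len(divergences)), key=divergences.__getitem__)
```

The KL divergence is asymmetric; this sketch computes \(\mathrm{KL}(N_{{\varvec{x}}} \,\|\, N_{i})\), measuring how well each class posterior covers the posterior of the new input.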

In summary, the neural statistician (Edwards and Storkey 2017) allows for quick learning on new tasks through data set modeling. Additionally, it is applicable to both supervised and unsupervised settings. A downside is that the approach requires many data sets to achieve good performance (Edwards and Storkey 2017).

4.8 Model-based techniques, in conclusion

In this section, we have discussed various model-based techniques. Despite apparent differences, they all build on the notion of task internalization. That is, tasks are processed and represented in the state of the model-based system. This state can then be used to make predictions. Figure 20 displays the relationships between the covered model-based techniques.