Abstract

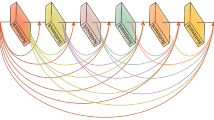

Residual networks (ResNets) have been used in a wide range of computer vision and image processing applications. The residual connection improves gradient flow and thereby eases training. However, a residual block consists of several convolutional layers with trainable parameters, which can lead to overfitting. Moreover, existing residual networks do not make suitable use of high- and low-frequency information, which further limits their generalization capability. In this paper, a frequency-disentangled residual network (FDResNet) is proposed to tackle these issues. Specifically, FDResNet includes separate connections in the residual block for the low- and high-frequency components. Disentangling these components is intended to improve generalization, and computing them with fixed filters, rather than trainable ones, further helps to avoid overfitting. The proposed model is tested on the benchmark CIFAR-10/100, Caltech, and TinyImageNet datasets for image classification, and its performance is also evaluated in an image retrieval framework. The proposed model outperforms its counterpart residual model. The effect of the filter kernel size and standard deviation is also evaluated, and the impact of frequency disentangling is analyzed using saliency maps.
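To make the idea of splitting a feature map with fixed filters concrete, the following minimal sketch separates a small 2-D array into high- and low-frequency parts. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not specify the filters, so the sketch assumes a Gaussian blur as the low-pass filter and "input minus Gaussian blur" as the high-pass filter. The names `gaussian_kernel`, `filter2d`, and `disentangle`, the edge-replicating padding, and the default `sigma` are all hypothetical choices made for this example.

```python
import math


def gaussian_kernel(size, sigma):
    """Return a normalized size x size Gaussian kernel as a list of rows."""
    if size % 2 == 0:
        raise ValueError("kernel size must be odd")
    half = size // 2
    weights = [
        [math.exp(-(dx * dx + dy * dy) / (2.0 * sigma * sigma))
         for dx in range(-half, half + 1)]
        for dy in range(-half, half + 1)
    ]
    total = sum(map(sum, weights))
    return [[w / total for w in row] for row in weights]


def filter2d(image, kernel):
    """Filter a 2-D list with a square kernel, replicating edge pixels."""
    rows, cols = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i, kernel_row in enumerate(kernel):
                rr = min(max(r + i - half, 0), rows - 1)  # clamp row index
                for j, w in enumerate(kernel_row):
                    cc = min(max(c + j - half, 0), cols - 1)  # clamp column index
                    acc += w * image[rr][cc]
            out[r][c] = acc
    return out


def disentangle(image, k_high, k_low, sigma=1.0):
    """Split image into (high, low) parts using fixed, non-trainable filters.

    Assumption: low-pass = Gaussian blur, high-pass = input minus Gaussian blur.
    k_high and k_low are the kernel sizes of the two filters.
    """
    low = filter2d(image, gaussian_kernel(k_low, sigma))
    blurred = filter2d(image, gaussian_kernel(k_high, sigma))
    high = [[p - b for p, b in zip(image_row, blurred_row)]
            for image_row, blurred_row in zip(image, blurred)]
    return high, low


# Toy input: a vertical step edge, dark on the left and bright on the right.
image = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
high, low = disentangle(image, k_high=3, k_low=3)
```

With equal kernel sizes the two parts sum back to the input, and on a constant region the high-frequency part is zero, so only the edge columns of the toy input carry high-frequency energy. Because neither filter has trainable weights, this split adds no parameters to a block that uses it.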

Notes

In this notation, here and elsewhere, the first value in the brackets is the kernel size for high-pass filtering and the second value is the kernel size for low-pass filtering.

Acknowledgements

This research is funded by the Global Innovation and Technology Alliance (GITA) on behalf of the Department of Science and Technology (DST), Govt. of India, under the India–Taiwan joint project with Project Code GITA/DST/TWN/P-83/2019. We thank NVIDIA for supporting this work with NVIDIA GPUs and the Google Colab service for providing free computational resources, which were used for the experiments in this research.

Author information

Contributions

SRS and RRY contributed equally in conducting the experiments and computing the results. SRD conceptualized the idea, wrote the paper, and supervised the work. RKS and W-TC edited the paper and provided supervision.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Communicated by B. Bao.

About this article

Cite this article

Singh, S.R., Yedla, R.R., Dubey, S.R. et al. Frequency disentangled residual network. Multimedia Systems 30, 9 (2024). https://doi.org/10.1007/s00530-023-01232-5

DOI: https://doi.org/10.1007/s00530-023-01232-5