Abstract

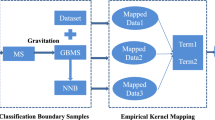

Broad learning system (BLS) is an emerging neural network with fast learning capability that has achieved good performance in various applications. However, conventional BLS does not effectively address class imbalance, and tuning its parameters requires considerable effort. To address these challenges, we propose a double-kernelized weighted broad learning system (DKWBLS) for imbalanced data classification. A double-kernel mapping strategy replaces the random mapping mechanism of BLS, yielding more robust features and eliminating the need to tune the number of nodes. Furthermore, DKWBLS accounts for class imbalance and achieves more explicit decision boundaries. Extensive experimental results demonstrate the superiority of DKWBLS over other imbalance learning approaches in tackling imbalance problems.
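
The abstract does not give the DKWBLS equations. The following minimal Python sketch illustrates two ingredients the abstract names: a kernel mapping in place of randomly generated nodes, and per-sample class weighting for imbalance. It is a class-weighted kernel ridge classifier in the style of the weighted extreme learning machine. **It is not the DKWBLS algorithm.** Several choices below are assumptions made for illustration only:

- The "double" kernel is a hypothetical sum of two Gaussian kernels with widths `gammas=(0.5, 5.0)`.
- Each sample is weighted by 1/(size of its class).
- `C` is an assumed regularization constant.
- All function names are hypothetical.

The fitted coefficients solve (I/C + W K) alpha = W t, where K is the kernel matrix, W is the diagonal weight matrix, and t holds the ±1 targets.

```python
import math

def rbf(x, z, gamma):
    """Gaussian (RBF) kernel exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def double_kernel(x, z, gammas=(0.5, 5.0)):
    """Hypothetical 'double' kernel: sum of two RBF kernels of different widths."""
    return sum(rbf(x, z, g) for g in gammas)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_weighted_kernel(X, y, C=10.0):
    """Class-weighted kernel ridge fit: alpha = (I/C + W K)^-1 W t.

    y holds labels in {0, 1}; targets t are -1/+1. Each sample is weighted by
    1 / (size of its class) so the minority class is not swamped.
    """
    n = len(X)
    counts = {c: y.count(c) for c in set(y)}
    w = [1.0 / counts[c] for c in y]
    t = [1.0 if c == 1 else -1.0 for c in y]
    K = [[double_kernel(X[i], X[j]) for j in range(n)] for i in range(n)]
    A = [[(1.0 / C if i == j else 0.0) + w[i] * K[i][j] for j in range(n)]
         for i in range(n)]
    rhs = [w[i] * t[i] for i in range(n)]
    return solve(A, rhs)

def predict(X_train, alpha, x):
    """Return 1 if sum_i alpha_i * k(x, x_i) is positive, else 0."""
    score = sum(a * double_kernel(x, xi) for a, xi in zip(alpha, X_train))
    return 1 if score > 0 else 0

# Toy imbalanced data: six majority points near the origin, two minority points near (3, 3).
X = [[0, 0], [0.5, 0], [0, 0.5], [-0.5, 0], [0, -0.5], [0.3, 0.3], [3, 3], [3.2, 2.8]]
y = [0, 0, 0, 0, 0, 0, 1, 1]
alpha = fit_weighted_kernel(X, y)
```

Because the model is expressed entirely through the n-by-n kernel matrix, there is no node count to choose. This appears to be the kind of tuning step the abstract says the kernel mapping avoids. The cost is that memory and solve time grow with the number of training samples rather than with a chosen number of nodes.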

Funding

This work was funded by the Key-Area Research and Development Program of Guangdong Province under Grant No. 2019B010153002, the National Natural Science Foundation of China under Grant No. 62106224, and the Marine Economy Development (Six Marine Industries) Special Foundation of the Department of Natural Resources of Guangdong Province under Grant GDNRC [2020]056.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Chen, W., Yang, K., Zhang, W. et al. Double-kernelized weighted broad learning system for imbalanced data. Neural Comput & Applic 34, 19923–19936 (2022). https://doi.org/10.1007/s00521-022-07534-5

DOI: https://doi.org/10.1007/s00521-022-07534-5