Abstract

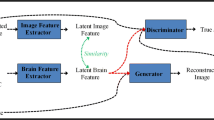

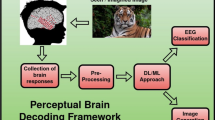

Understanding how the human brain responds to visual stimuli has been an active research area over the past few years because of its wide application in brain-computer interaction. The brain signals evoked by visual stimuli carry rich information about the perceived scenes and can therefore be used to regenerate the perceived images. Regenerating high-quality perceived images from brain signals, however, remains a major challenge. With advances in generative adversarial networks (GANs) in deep learning, seen images can now be regenerated in high quality using visual cues from brain signals. Electroencephalography (EEG) is a cost-effective way to record brain signals. This paper proposes an EEG classifier followed by a conditional Progressive Growing of GANs (cProGAN) to regenerate perceived images from EEG. After training and testing on a publicly available dataset, the EEG classifier achieved 98.8% test accuracy, and cProGAN achieved an inception score (IS) of 5.15, surpassing the previous best IS of 5.07.
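For readers unfamiliar with the inception score reported above, the following is a minimal sketch of how the metric is commonly defined: the exponential of the average KL divergence between each generated image's class distribution p(y|x) and the marginal class distribution p(y). The function name `inception_score`, the `eps` smoothing term, and the toy probability tables are assumptions made for illustration; in practice the probabilities come from a pretrained image classifier, and this sketch is not the authors' evaluation code.

```python
import math

def inception_score(probs, eps=1e-12):
    """Inception score from per-image class probabilities.

    probs: list of rows, one per generated image, each row a list of
           class probabilities p(y|x) that sums to 1.
    eps:   small constant (an assumption here) to avoid log(0).
    """
    n = len(probs)
    num_classes = len(probs[0])
    # Marginal class distribution p(y): the mean of p(y|x) over all images.
    marginal = [sum(row[c] for row in probs) / n for c in range(num_classes)]
    # Sum the KL divergence KL(p(y|x) || p(y)) over all images.
    total_kl = 0.0
    for row in probs:
        total_kl += sum(
            p * (math.log(p + eps) - math.log(q + eps))
            for p, q in zip(row, marginal)
        )
    # The score is the exponential of the average KL divergence.
    return math.exp(total_kl / n)

# Toy inputs (hypothetical): confident, diverse predictions vs. uninformative ones.
confident = [[1.0, 0.0, 0.0, 0.0],
             [0.0, 1.0, 0.0, 0.0],
             [0.0, 0.0, 1.0, 0.0],
             [0.0, 0.0, 0.0, 1.0]]
uniform = [[0.25, 0.25, 0.25, 0.25] for _ in range(4)]
```

Under this definition the score ranges from 1 (every image yields the same class distribution) up to the number of classes (each image is classified confidently and the classes are evenly covered), so higher values indicate sharper and more varied generated images.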

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Khare, S., Choubey, R.N., Amar, L. et al. NeuroVision: perceived image regeneration using cProGAN. Neural Comput & Applic 34, 5979–5991 (2022). https://doi.org/10.1007/s00521-021-06774-1

DOI: https://doi.org/10.1007/s00521-021-06774-1