Abstract

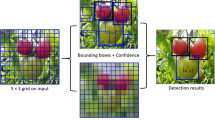

Fruit recognition and localization are prerequisites for automatic robotic picking. YOLOv3 has been used to detect various fruits in complex environments. However, for objects with distinctive features, a complex network structure increases computation time and may cause overfitting. This paper therefore presents a lightweight design based on YOLOv3 and proposes an improved network, T-Net, to detect tomatoes in images. First, T-Net reduces the number of residual network layers: the number of cycles in each group of residual units is changed to 1, 2, 2, 1, and 1. Second, two feature layers with different scales are selected according to the features of tomatoes, and the neck is reduced by two convolutional layers. Finally, the location and approximate diameter of ripe tomatoes are obtained by combining node information from an Intel D435i camera and T-Net in the Robot Operating System (ROS). T-Net achieves a mean average precision (mAP) of 99.2%, an F1-score of 98.9%, a precision of 99.0%, and a recall of 98.8% at a detection rate of 104.2 FPS. Compared with YOLOv3, T-Net improves precision, mAP, and F1-score by 0.4%, 0.1%, and 0.2%, respectively, and its detection speed is 1.8 times that of YOLOv3. The mean errors of the tomato center coordinates and diameter are 8.5 mm and 2.5 mm, respectively. This model provides an efficient method for real-time detection and localization of tomatoes.
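Two of the figures above can be checked with simple arithmetic. The sketch below assumes that the F1-score is the usual harmonic mean of precision and recall, and that T-Net keeps the Darknet-53 layout (one stem convolution, one stride-2 downsampling convolution per stage, two convolutions per residual unit) while only changing the per-stage cycle counts from Darknet-53's 1, 2, 8, 8, 4 to the reported 1, 2, 2, 1, 1. The layer accounting for T-Net is a hypothetical reconstruction, not the authors' published layer table.

```python
"""Arithmetic checks on figures reported in the abstract (illustrative sketch).

Assumptions: F1 = 2PR / (P + R); T-Net keeps Darknet-53's stem conv and its
five downsampling convs, and each residual unit has two convs (1x1 then 3x3).
"""

DARKNET53_CYCLES = (1, 2, 8, 8, 4)  # residual units per stage in YOLOv3's backbone
TNET_CYCLES = (1, 2, 2, 1, 1)       # residual units per stage reported for T-Net


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def backbone_conv_layers(cycles: tuple[int, ...]) -> int:
    """Count convolutional layers in a Darknet-style backbone (hypothetical accounting)."""
    stem = 1                    # initial 3x3 convolution
    downsampling = len(cycles)  # one stride-2 convolution before each stage
    residual = 2 * sum(cycles)  # two convolutions per residual unit
    return stem + downsampling + residual


if __name__ == "__main__":
    print(f"F1 from P=99.0%, R=98.8%: {f1_score(0.990, 0.988):.3f}")
    print("Darknet-53 conv layers:", backbone_conv_layers(DARKNET53_CYCLES))
    print("T-Net backbone conv layers:", backbone_conv_layers(TNET_CYCLES))
```

Under these assumptions the reported precision and recall give an F1-score of 0.989, matching the abstract, and the backbone shrinks from 52 convolutional layers (Darknet-53 without its classification layer) to 20.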

Data availability

The data sets used or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- T-Net: Tomato network
- ROS: Robot Operating System
- AP: Average precision
- mAP: Mean average precision
- P: Precision
- R: Recall
- FPS: Frames per second
- CF: Confidence
- IOU: Intersection over union
- NMS: Non-maximum suppression
- BN: Batch normalization
- DCBL: Darknet_Conv2D_BN_LeakyRelu (convolution, batch normalization, and Leaky ReLU)
- RB: Residual block
- $Z_c$: Depth in the camera coordinate system
- $u, v$: Pixel coordinates of the target point
- $dx, dy$: Number of pixels per unit length
- $F$: Focal length of the camera
- $p_{rgb}$: Pixel coordinates of the target point in the color image
- $p_d$: Pixel coordinates of the target point in the depth image
- $Z_{rgb}$: Depth value in the color camera coordinate system
- $Z_d$: Depth value in the depth camera coordinate system
- $K_{rgb}$: Intrinsic parameter matrix of the color camera
- $K_d$: Intrinsic parameter matrix of the depth camera
- $R, T$: Rotation matrix and translation vector relating the color and depth camera coordinate systems
- $R_{rgb}, T_{rgb}$: Extrinsic parameters of the color camera
- $R_d, T_d$: Extrinsic parameters of the depth camera
- $X_{max}$: Maximum x-coordinate of the predicted bounding box
- $X_{min}$: Minimum x-coordinate of the predicted bounding box
- $Z_{X_{max}}$: Depth value at the $X_{max}$ point
- $Z_{X_{min}}$: Depth value at the $X_{min}$ point
- $u_{X_{max}}$: u-coordinate of the $X_{max}$ point in the pixel coordinate system
- $u_{X_{min}}$: u-coordinate of the $X_{min}$ point in the pixel coordinate system
- $Err_p$: Coordinate error
- $Err_D$: Diameter error
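The symbols above outline the localization pipeline: pinhole back-projection ($Z_c$, $u$, $v$, $dx$, $dy$, $F$), registration of depth pixels into the color image ($K_d$, $K_{rgb}$, $R$, $T$), and diameter estimation from the bounding-box edge points ($u_{X_{max}}$, $u_{X_{min}}$, $Z_{X_{max}}$, $Z_{X_{min}}$). The sketch below shows one standard way these quantities fit together; it does not reproduce the paper's own equations. Assumptions not stated in the list: a principal point $(u_0, v_0)$, focal lengths in pixels $f_x = F \cdot dx$ and $f_y = F \cdot dy$, $R, T$ mapping depth-camera coordinates into color-camera coordinates (so $R = R_{rgb} R_d^{-1}$ and $T = T_{rgb} - R\,T_d$), no lens distortion, the diameter taken as the horizontal distance between the back-projected edge points, and $Err_p$ and $Err_D$ taken as Euclidean and absolute errors. All function names are hypothetical.

```python
"""Sketch: pinhole back-projection, depth-to-color registration, and tomato
diameter estimation (hypothetical helpers; assumptions listed in comments)."""
import math

Vec3 = tuple[float, float, float]
Mat3 = tuple[Vec3, Vec3, Vec3]


def intrinsics(F: float, dx: float, dy: float, u0: float, v0: float) -> Mat3:
    """Intrinsic matrix K, assuming fx = F*dx, fy = F*dy (dx, dy in pixels per unit length)."""
    return ((F * dx, 0.0, u0), (0.0, F * dy, v0), (0.0, 0.0, 1.0))


def back_project(K: Mat3, u: float, v: float, z: float) -> Vec3:
    """Pixel (u, v) with depth z -> 3D point z * K^-1 [u, v, 1]^T in that camera's frame."""
    fx, fy, u0, v0 = K[0][0], K[1][1], K[0][2], K[1][2]
    return ((u - u0) * z / fx, (v - v0) * z / fy, z)


def project(K: Mat3, p: Vec3) -> Vec3:
    """3D camera-frame point -> (u, v, depth), from Z [u, v, 1]^T = K p."""
    x, y, z = p
    return (K[0][0] * x / z + K[0][2], K[1][1] * y / z + K[1][2], z)


def mat_vec(M: Mat3, p: Vec3) -> Vec3:
    """3x3 matrix times 3-vector."""
    return tuple(sum(M[i][j] * p[j] for j in range(3)) for i in range(3))


def register_depth_pixel(K_d: Mat3, K_rgb: Mat3, R: Mat3, T: Vec3,
                         u_d: float, v_d: float, z_d: float) -> Vec3:
    """Map depth pixel p_d with depth Z_d to (u_rgb, v_rgb, Z_rgb) in the color image."""
    p_depth_cam = back_project(K_d, u_d, v_d, z_d)
    p_color_cam = tuple(a + b for a, b in zip(mat_vec(R, p_depth_cam), T))
    return project(K_rgb, p_color_cam)


def estimate_diameter(K: Mat3, u_xmax: float, z_xmax: float,
                      u_xmin: float, z_xmin: float) -> float:
    """Horizontal distance between back-projected right and left box edges (assumed method)."""
    v0 = K[1][2]  # the row does not affect the x-coordinate
    x_right = back_project(K, u_xmax, v0, z_xmax)[0]
    x_left = back_project(K, u_xmin, v0, z_xmin)[0]
    return abs(x_right - x_left)


def err_p(measured: Vec3, truth: Vec3) -> float:
    """Center-coordinate error as Euclidean distance (assumed definition)."""
    return math.dist(measured, truth)


def err_d(measured: float, truth: float) -> float:
    """Diameter error as absolute difference (assumed definition)."""
    return abs(measured - truth)


if __name__ == "__main__":
    # Hypothetical calibration values, not the D435i's actual parameters.
    K = intrinsics(F=2.0, dx=300.0, dy=300.0, u0=320.0, v0=240.0)
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    print(register_depth_pixel(K, K, identity, (10.0, 0.0, 0.0), 380.0, 240.0, 500.0))
    print(estimate_diameter(K, 380.0, 500.0, 260.0, 500.0))
```

With these made-up values, a tomato whose box edges lie 60 px either side of the principal point at a depth of 500 mm gets an estimated diameter of 100 mm. Using the depth measured at each edge point, rather than a single center depth, is what lets the estimate follow the surface of a tilted or partially occluded fruit.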

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 52075312).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

He, B., Qian, S. & Niu, Y. Visual recognition and location algorithm based on optimized YOLOv3 detector and RGB depth camera. Vis Comput 40, 1965–1981 (2024). https://doi.org/10.1007/s00371-023-02895-x

DOI: https://doi.org/10.1007/s00371-023-02895-x