Abstract

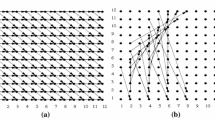

Parallel loops account for the greatest amount of parallelism in numerical programs, so executing nested loops in parallel with low run-time overhead is very important for achieving high performance in parallel processing systems. However, in parallel processing systems whose memory hierarchies include caches or local memories, a “thrashing problem” may arise when data move back and forth frequently between the caches or local memories of different processors. Parallel-compiler techniques for solving this problem are not yet fully developed. In this paper, we present two restructuring techniques, loop staggering and combined loop staggering and compacting, which not only largely eliminate cache or local-memory thrashing but also exploit the potential parallelism in the outer serial loop. Loop staggering benefits dynamic loop scheduling strategies, whereas combined loop staggering and compacting suits static loop scheduling strategies. Our method especially benefits parallel programs in which a parallel loop is enclosed by a serial loop and array elements are reused across different iterations of the parallel loop.
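To make the thrashing pattern concrete, the Python sketch below simulates the program shape described above: a serial outer loop over `t` enclosing a parallel inner loop over `i`, where array elements are reused by different inner iterations in successive outer steps. It counts how often an element is touched by a different processor than the one that touched it last, as a rough proxy for data moving between caches. The access pattern `A[i + t]`, the `cyclic` schedule, and the `staggered` re-mapping are illustrative assumptions only; they are not the transformations defined in the paper.

```python
def count_migrations(n_outer, n_inner, n_procs, assign):
    """Count how often an array element moves between processors.

    Assumed (hypothetical) access pattern: iteration (t, i) uses A[i + t].
    assign(t, i, n_procs) returns the processor that runs iteration i at step t.
    """
    last_owner = {}  # element index -> processor that last touched it
    migrations = 0
    for t in range(n_outer):        # serial outer loop
        for i in range(n_inner):    # parallel inner loop, simulated serially
            elem = i + t            # element reused across outer steps
            p = assign(t, i, n_procs)
            if elem in last_owner and last_owner[elem] != p:
                migrations += 1     # element must move to another cache
            last_owner[elem] = p
    return migrations


def cyclic(t, i, n_procs):
    """Naive static schedule: iteration i always runs on processor i mod P."""
    return i % n_procs


def staggered(t, i, n_procs):
    """Hypothetical staggered schedule: shift the assignment by t so that
    element i + t is always handled by processor (i + t) mod P. Each outer
    step still gives every processor an equal share of the iterations."""
    return (i + t) % n_procs


if __name__ == "__main__":
    print(count_migrations(8, 16, 4, cyclic))     # 105
    print(count_migrations(8, 16, 4, staggered))  # 0
```

With the naive schedule, every reuse of an element lands on a different processor, because shifting `t` by one moves the element to the neighbouring inner iteration and hence to the next processor. Shifting the iteration-to-processor mapping by `t` keeps each element on a single processor while leaving the per-step load balanced.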



Cite this article

Jin, G., Yang, X. & Chen, F. Loop staggering, loop compacting: Restructuring techniques for thrashing problem. J. of Comput. Sci. & Technol. 8, 49–57 (1993). https://doi.org/10.1007/BF02946585
