Abstract

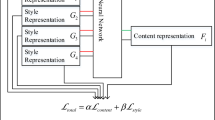

Image background subtraction is the technique of eliminating the background of an image or video in order to extract the foreground object for further processing, such as object recognition in surveillance applications or object editing in film production. The task becomes difficult when the background is cluttered, and it therefore remains an open problem in computer vision. Conventional background extraction models have long relied on image-level information alone to model the background. Recently, however, deep learning based methods have succeeded in extracting style representations from images. Building on these deep neural styles, we propose an image background subtraction technique based on style comparison. We further show that the style comparison can be performed pixel-wise, and that it can be applied to spatially adaptive style transfer. As an example, we show that automatic background elimination and background style transformation can be achieved by pixel-wise style comparison with minimal human interaction, i.e., by selecting only a small patch from each of the target and style images.
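The idea of comparing styles pixel by pixel against a user-selected patch can be illustrated with a minimal sketch. It is not the chapter's implementation: the abstract does not specify the network, the layers, or the distance measure, so the sketch assumes the Gram-matrix style representation commonly used in neural style transfer, a Frobenius-norm distance, and random toy arrays standing in for CNN feature maps. The function names `gram` and `pixelwise_style_distance`, the window `radius`, and the threshold rule are all hypothetical.

```python
import numpy as np

def gram(features):
    """Gram matrix of an (H, W, C) feature block, normalised by the pixel count."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    return flat.T @ flat / (h * w)

def pixelwise_style_distance(features, ref_gram, radius=2):
    """Frobenius distance between ref_gram and the Gram matrix of the window
    centred on each pixel (border windows use edge padding)."""
    h, w, _ = features.shape
    padded = np.pad(features, ((radius, radius), (radius, radius), (0, 0)), mode="edge")
    size = 2 * radius + 1
    dist = np.empty((h, w))
    for y in range(h):
        for x in range(w):
            window = padded[y:y + size, x:x + size, :]
            dist[y, x] = np.linalg.norm(gram(window) - ref_gram)
    return dist

# Toy stand-in for CNN features (assumption): the left half plays the
# background, the right half a foreground with different channel statistics.
rng = np.random.default_rng(0)
features = rng.normal(0.0, 1.0, size=(16, 16, 4))
features[:, 8:, :] = rng.normal(3.0, 1.0, size=(16, 8, 4))

# Small background patch, as a user might select it (rows 2..6, columns 1..5).
ref_gram = gram(features[2:7, 1:6, :])
dist = pixelwise_style_distance(features, ref_gram)

# Toy threshold (assumption): midpoint of the mean distances in the two regions.
threshold = 0.5 * (dist[:, :4].mean() + dist[:, 12:].mean())
background_mask = dist < threshold
```

Pixels whose local style lies close to the selected patch are marked as background. The same mask could in principle gate where a style transfer is applied, which is the spatially adaptive use the abstract mentions.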

Acknowledgements

This work was supported by the Basic Science Research Program through the National Research Foundation of Korea under Grant NRF-2019R1I1A3A01060150 and by an Electronics and Telecommunications Research Institute (ETRI) grant funded by the Korean government [19AH1200, Development of programmable interactive media creation service platform based on open scenario].

Copyright information

© 2021 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Kurniawan, E., Song, BD., Choi, YJ., Lee, SH. (2021). Image Background Subtraction and Partial Stylization Based on Style Representation of Convolutional Neural Networks. In: Kim, H., Kim, K.J., Park, S. (eds) Information Science and Applications. Lecture Notes in Electrical Engineering, vol 739. Springer, Singapore. https://doi.org/10.1007/978-981-33-6385-4_3

DOI: https://doi.org/10.1007/978-981-33-6385-4_3

Publisher Name: Springer, Singapore

Print ISBN: 978-981-33-6384-7

Online ISBN: 978-981-33-6385-4

eBook Packages: Computer Science, Computer Science (R0)