Abstract

Video analytics of real-life scenario deals with the multimedia data statistics that may be characterized by multimodal features of the video components. Large varieties of low-scale multimodal features of the objects creates many challenging issues for discrimination and analysis. On the other hand occlusion, varied illuminations, and complex environmental conditions highlight the video parsing, a challenging research problem. For the experimental purpose, the vital components of the videos include scenes, shots, keyframes, objects, and background. In this work, we focus on keyframes and shot boundaries for scene segmentation of the sample videos taken from YouTube. Structure Similarity index (SSIM) of the shots is computed from the histograms of LBP and HSV color similarities. Motion similarity and inverse time proximity are added to generate Shot Similarity Graph. Sliding window methods are used for grouping similar shots. The proposed work for scene segmentation is validated on six videos of various semantics characterized by human being and animals. The play of the video ranges from 0.5 to 15 min and total no. of scenes in the videos range from 06 to 33.

Similar content being viewed by others

Keywords

- Keyframe

- Shot boundary detection (SBD)

- Local binary pattern (LBP)

- Scene segmentation

- Video understanding

1 Introduction

Video scene and shot segmentation on the basis of their semantic information is an important research problem in the field of computer vision and video processing [1]. More than last 20 years, the researchers are involved in solving this problem due to many aspects of real-life applications like video annotation. Common process of everyday life includes image and video understanding, which is termed as scene parsing in computer vision community [2]. The complexity of scene parsing is realized in labeling of every pixel in an image due to the overhead in exact detection, segmentation, and commonly labeled objects classification. The problem of scene labeling is resolved in [3] by using multiscale CNN. The research work can be entitled as a temporal video scene segmentation [4,5,6]. Generally, scene can be defined as the grouping of the related or similar action part of the video, these parts may be taken anywhere from the video along timeline.

1.1 Video Frames and Shot Boundary

The visual data consists of a rich and complex set of information. Dynamic summarization of video is preferably more important due to closely related scope in real-time analytics problems. For this shot, boundary detection [7,8,9,10,11,12] and scene segmentation are the key for video summarization. Multidimensional video signal is considered as 3D tensor of frames and the tensors of similar contents are termed as shot [13]. The shots in a video predict the cuts, fade, and dissolve. A fast CNN is proposed in [14] to detect the shot boundary. In this work, we compromised with the missing long dissolve, partial changes in motion blur and fast scene changes. In [9], the problem is resolved by exploiting big data thorough spatiotemporal CNN. For this, the lowest variance of the curve by morphology is chosen as gradual localization points. A shot boundary detection scheme based on Fuzzy Color Distribution Chart (FCDC) is developed, which is invariant of noise and small illuminations [15]. Based on SIFT, FCDC algorithm can detect the gradual changes in the video scenes. A fast Shot Boundary Detection (SBD) scheme is presented using Singular Value Decomposition (SVD) [16]. Video cuts detection scheme based on dissimilarity measure is developed by using Bayesian estimation and linear regression [17]. The challenging issues are realized in SBD during gradual scene changes.

The shots in a video have multiple similar frames, it means intrasimilarity of the shot is maximize and the intershot similarity is minimized that is why movies can be divided into shots. Any shot \( S_{i} \), of movie can be represented as set of n frames as given in Eq. (1.1).

1.2 Keyframe

The frames or images are the elementary module of the video. The computation overhead can be minimized by extracting some of the frames as keyframes from each shot [18]. Hierarchical keyframe selection technique is proposed [7]. We select only those frames which have maximum information about that particular shot and redundancy of similar frame is minimized up to some decided threshold limit. Simplex Hybrid Genetic Algorithm (SHGA) is used for extracting the keyframes for human body animation. In [19], keyframe extraction is performed by exploiting the image epitome and Min-Max algorithm. Keyframe is used for SBD process and the segmentation is performed by spatiotemporal clustering and GMM. The innovative and fast key frame extraction algorithm is proposed, which extracts the keyframes on basis of consecutive frame difference. Curvature points determine the keyframes from the cumulative frame difference [20]. To preserve a rich set of previous events in the video, KNN-matting-based keyframe extraction method is proposed [21]. In this method, shot segmentation is performed by improved Mutual Information (MI) retrieval. The semantic hierarchy of video sequence can be shown in Fig. 1.

1.3 Motivation and Applications

Indoor and outdoor scene understanding is quite common in everyday life. Motivated by the problems of discrimination among the objects and exact labeling, indoor and outdoor scene segmentation scheme is developed based fully connected CNN and pixel-wise segmentation [22,23,24]. The scheme works in encoding and decoding fashion. Max pooling layer in decoding phase performs nonlinear up-sampling which create densely sampled maps. This motivates the deep network for achieving high performance in terms of memory and computational time for scene segmentation. Video scene segmentation is the first step toward automatic video annotation of video sequences, but complete scene understanding adds classification in the process [1, 4, 6, 25, 26]. The theme of major applications includes automatic video segmentation, semantic understanding of video indexing and browsing or reusability of indexed video segments.

2 Related Work

2.1 Rule-Based Video Scene Segmentation

Video Scene Segmentation is a very well-known problem of the video processing, because of some higher level tasks require video scenes as the fundamental unit [4]. In rule-based approach, different authors have first selected the specific videos and video format, which has the predefined structure of the scene in the video making. In these videos, the scene is structured in professional movie production. Zhu and Liu et al. [20] proposed a visual-based probabilistic framework that works on MPEG format videos, and present an approach for the segmentation of continuously recorded TV broadcasts videos. Real life scenario pertains to the large-scale complex objects, which are not easier for parametric semantic segmentation [4, 27]. Motivated by traditional Convolutional Neural Network (CNN), Deep Parsing Network (DPN) is introduced semantic image segmentation to outperform the state of art established by MRF/CRF [28]. The work presented in [29] alleviates from the large network training for indoor scene understating. The overall process is completed by Randomize Multilayer Perceptron (rMLP) and l1-norm regularization. Semantic scene segmentation by fully connected CNN adapts the several deep nets like Alexnet, VGGNet, and GoogLeNet. The network is helpful to predict densely complex tasks by drawing connections to the previous models [26]. A huge amount of work is reported in semantic scene segmentation [30, 31] to resolve the several problems of densely common features and overloaded parameters to train the network. All the recent work reports that deep network with unsupervised learning is paid more attention instead of hand-crafted feature engineering.

Kolmogorov-Smirnov is applied for testing temporal similarity [32]. Scene parsing by five semantic features and random forest classifier from the dense depth maps of CamVid dataset achieved higher accuracy than that of 3D sparse and appearance-based features [33]. This approach is invariant of both view and lightning conditions. Single-shot object segmentation is motivated by deep network training and outperformed the state of the art on DAVIS dataset [34]. The segmentation of single-annotated object in one shot disproved the requirement of large network training for deep network. For this purpose, detecting contour and performing hierarchical segmentation is performed [35]. Various types of videos are segmented to represent their semantic meaning [36]. Scene segmentation and classification accuracy are improved by removing redundant frames with the help of template matching. Video summarization is to represent semantics meaning of the complete content of the video.

2.2 Graph-Based Video Scene Segmentation

In graph-based approach first video is divided into keyframes or shots using some key features like color histograms. Shots are arranged in a graph representation and then clustered by partitioning the graph [6, 37]. The Shot Transition Graph (STG), used in [22], is one of the most used models in this category: here each node represents a shot and the edges between the shots are weighted by shot similarity. Then split this STG into subgraphs by applying the normalized cuts for graph partitioning. This model is exploited by two-phase graph cut methods for object segmentation. In [24] this, the author extracted the keyframes using color histograms comparison of each successive frame and then using k-means clustering he found the shot-representative keyframes. Shot boundary detection is validated on TRECVid 2001 and 2007 datasets by Gist and local descriptor [14]. The processing complexity overhead is relaxed in this scheme. In [25], scene parsing is introduced as a challenging problem of labeling the objects in the images along with parallel segmentation and classification process. The future directions of multiscale feature learning for scene parsing include the search over multiple and complex graph segmentation.

3 Proposed Work

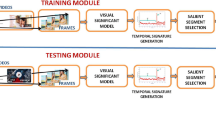

All video processing tasks are started with frames extraction of the video. The frames are considered to process still images for extracting some low- and high-level features. On the basis of those features, we can achieve our objective. The proposed framework of temporal video scene segmentation is divided into 6 main parts as follows.

-

1.

Features detection and extraction of the video frames.

-

2.

Defining of shot boundaries on the basis of extracted features.

-

3.

Keyframes extraction from each shot.

-

4.

Extract some features of those extracted key frames.

-

5.

Find the similarity between all shots pairs.

-

6.

Defining the boundaries of scene on the basis of similarity of shots.

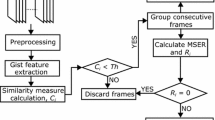

The block diagram of algorithmic steps to proceed the proposed work is shown in Fig. 2. The architecture of working flow is divided into 3 blocks A, B, and C. Initially, the block A performs HSV color processing of the RGB color sequence. HSV color space is separated by Phase 1 and Phase 2. The main objective of block A is the computing LBP features and color similarity histogram for shot boundary detection. Block B creates Shot Similarity Graph (SSG) by motion similarity and shot similarity profile. Finally, Block C develops the scene boundary by grouping similar shots. Slide window method is used to find the scene boundary of the shots profile.

3.1 Shot Boundary Computation

The extracted frames from the videos is in RGB format. The experiments are performed in HSV color space. The number of the histogram of HSV images are taken 256 (H = 8, S = 8, V = 4). The similarity between the histogram of consecutive frames is computed by using Eq. (3.1). The belonging of next frame to the particular shot is decided on the base of threshold chosen as given in Eq. (3.2).

where Hi and Hj are the HSV color histogram with 256 bins each and jth frame is the next to ith frame, i.e., j = i + 1. If the similarity between two consecutive frames is above and equal to that threshold then that particular pair of frame shares the previous shot and if this measure is less than the threshold then a new shot is started. We have chosen a variable threshold value for the experimental view that is in range of [0.8, 0.9]. This process virtually cuts whole the video into some finite number of shots.

3.1.1 Permanent Shot Construction Method

In this phase, permanent shots are constructed from the grouping of several temporary shots. Figure 3 shows that shot5 is similar to any of the shot2 or shot3 or shot4, so shot5 is considered as part of the previous permanent shot. The window is slid to check for next shot6. In this experiments, the window size is kept fixed to four. Last temporary shot of the window compared with rest of the window shots, if it found any of the shot which has higher similarity than the threshold then the last shot is merged to the previous permanent shot and the window is moved to next shot.

If any temporary shot is not similar to the rest of the window shot then window slides and the starting shot of the window is previously compared shot and window length is to next 3 shots. This process defines a new boundary for the permanent shots. But if there are multiple consecutive shots which have very less frames, means the video is split into shots which have a few frames or of very short duration then we can merge that shot to previous or next shot based on the SSIM index. As shown in Fig. 4, shot5 is less similar than the threshold value so from shot5 a new permanent shot begins.

3.1.2 Shot Similarity Graph (SSG)

Grap-based scene detection has been given higher priority due to easier modeling [16, 40] for video summarization. Shot similarity graph (SSG) is constructed based on the shots and the weighted similarity between them. A graph G (V, E) is consists of two sets one is set of nodes and other is set of edges between the nodes. The edge between any pairs of shot has some weight. In our graph shots, the node of the graph and the weighted similarity is edges between these shots. Shot similarity graph is defined in Eq. (3.3)

where in this equation W (i, j) is the inverse time proximity weight and the ShotSim is defined in Eq. (3.5).

where in above equation α and β are weights given to each similarity features. This weight is given such that ShotSim index is in range of [0, 1]. In our experiment α + β = 1, where α = 0.5 and β = 0.5.

3.1.3 Scene Boundaries Using Sliding Window Approach

In our method, we have chosen a window of 4 shots. let’s say for shot A, B, C and D, if shot A, B, and C are part of the same scene and now we are checking for shot D. First D is compared with shot A, if similarity between these two shot is greater than decided threshold then Shot D is also part of the previous running scene and window moves to next shot E and checks for their similarity against previously selected shots in that scene.

4 Experiments and Result Analysis

For the experiment purpose, we have taken 6 videos pertaining the different issues of different social conditions. The videos are sequentially number as V1, to V6. Data statistics of all the videos is described in Table 1. Different videos have a different character. Some of the videos are having the animal and other are having human as an actor. These videos are taken from YouTube. Most of the videos are concerned to focus on the activities rather than the background of the videos.

The visualization of this results is given by Fig. 5, for ground truth and total detected and correct scenes. The performance measure of the proposed method is justified by recall and precession in Fig. 6. The results show that the precession and recall for wildlife video V1 is 100%.

4.1 Classification Statistics

The results are computed using precision and recall in Eqs. (4.1) and (4.2).

5 Conclusion and Future Directions

In this research work, the authors proposed an approach for the scene and shot boundary segmentation of real-life videos. All the experimental work is performed on the videos taken from YouTube. All the videos pertain various modality of actions and sentiments. We proposed two methods for the experimental work. The texture based local binary patterns and shot similarity feature extraction techniques are chosen from HSV color histogram of the video sequences. Keyframes are extracted by proposed color similarity features of HSV histogram of the videos. Mid-frame of the set of keyframes is considered to initialize the shot. Next keyframe is determined to include in the shot of keyframe by color similarly Algorithm 1. SSG approach is used by applying motion similarity and shot similarity to resolve keyframe issues in the multiple shots. Scene segmentation is performed by sliding window approach and grouping similar shots. Thus, scene boundary is determined based on shots similarities. We look for the large performance of our approach to challenging and revise input of the extended datasets.

Densely sampled super-pixel based segmentation of the scenes and deep neural network will be considered to develop a suitable training model for large-scale complex network [22]. For this, super-voxels point cloud library can provide a supportive step to deal with the issues of unconstraint videos.

References

Li, L.J., Socher, R., Fei-Fei, L.: Towards total scene understanding: Classification, annotation and segmentation in an automatic framework. In: Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on pp. 2036–2043. IEEE, (2009)

Tighe, J., Lazebnik, S.: Superparsing. IJCV 101(2), 329–349 (2013)

Farabet, C., Couprie, C., Najman, L., LeCun, Y.: Learning hierarchical features for scene labeling. IEEE Trans. Pattern Anal. Mach. Intell. 35(8), 1915–1929 (2013)

Myeong, H., Mu Lee, K.: Tensor-based high-order semantic relation transfer for semantic scene segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3073–3080, (2013)

Hong, S., Noh, H., Han, B.: Decoupled deep neural network for semisupervised semantic segmentation, in NIPS, pp. 1495–1503, (2015)

Shi, J., Malik, J.: Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 22(8), 888–905 (2000)

Ioannidis, A., Chasanis, V., Likas, A.: Weighted multi-view key-frame extraction. Pattern Recogn. Lett. 72, 52–61 (2016)

Gygli, M.: Ridiculously fast shot boundary detection with fully convolutional neural networks. arXiv preprint arXiv:1705.08214, (2017)

Hassanien, A., Elgharib, M., Selim, A., Hefeeda, M., Matusik, W.: Large-scale, fast and accurate shot boundary detection through spatio-temporal convolutional neural networks. arXiv preprint arXiv:1705.03281, (2017)

Lee, H., Yu, J., Im, Y., Gil, J.M., Park, D.: A unified scheme of shot boundary detection and anchor shot detection in news video story parsing. Multimedia Tools Appl. 51(3), 1127–1145 (2011)

Mondal, J., Kundu, M.K., Das, S., Chowdhury, M.: Video shot boundary detection using multiscale geometric analysis of nsct and least squares support vector machine. Multimedia Tools Appl. 1–23 (2017)

Mohanta, P.P., Saha, S.K., Chanda, B.: A model-based shot boundary detection technique using frame transition parameters. IEEE Trans. Multimedia 14(1), 223–233 (2012)

Cyganek, B., Woźniak, M.: Tensor-based shot boundary detection in video streams. New Generation Computing 35(4), 311–340 (2017)

Thounaojam, D.M., Bhadouria, V.S., Roy, S., Singh, K.M.: Shot boundary detection using perceptual and semantic information. Int. J. Multimedia Inf. Retrieval 6(2), 167–174 (2017)

Fan, J., Zhou, S., Siddique, M.A.: Fuzzy color distribution chart-based shot boundary detection. Multimedia Tools Appl. 76(7), 10169–10190 (2017)

Lu, Z.M., Shi, Y.: Fast video shot boundary detection based on SVD and pattern matching. IEEE Trans. Image Process. 22(12), 5136–5145 (2013)

Bae, G., Cho, S.I., Kang, S.J., Kim, Y.H.: Dual-dissimilarity measure-based statistical video cut detection. J. Real-Time Image Process. 1–11 (2017)

Yong, S.P., Deng, J.D., Purvis, M.K.: Wildlife video key-frame extraction based on novelty detection in semantic context. Multimedia Tools and Appl. 62(2), 359–376 (2013)

Dang, C.T., Kumar, M., Radha, H.: Key frame extraction from consumer videos using epitome. In: Image Processing (ICIP), 2012 19th IEEE International Conference on, pp. 93–96. IEEE, (2012)

Gianluigi, C., Raimondo, S.: An innovative algorithm for key frame extraction in video summarization. J. Real-Time Image Process. 1(1), 69–88 (2006)

Fei, M., Jiang, W., & Mao, W.: A novel compact yet rich key frame creation method for compressed video summarization. Multimedia Tools Appl. 1–21 (2017)

Badrinarayanan, V., Kendall, A., Cipolla, R.: Segnet: A deep convolutional encoderdecoder architecture for scene segmentation. IEEE Trans. Pattern Anal. Machine Intell. (2017)

Neverova, N., Luc, P., Couprie, C., Verbeek, J., LeCun, Y.: Predicting deeper into the future of semantic segmentation. arXiv preprint arXiv:1703.07684, (2017)

Li, L., Qian, B., Lian, J., Zheng, W., Zhou, Y.: Traffic scene segmentation based on RGB-D image and deep learning. IEEE Trans. Intell. Transport. Syst. (2017)

Farabet, C., Couprie, C., Najman, L., LeCun, Y.: Scene parsing with multiscale feature learning, purity trees, and optimal covers. arXiv preprint arXiv:1202.2160, (2012)

J. Long, E. Shelhamer, T. Darrell: Fully convolutional networks for semantic segmentation. In: CVPR, pp. 3431–3440 (2015)

Galleguillos, C., Belongie, S.: Context based object categorization: A critical survey. Comput. Vis. Image Underst. 114(6), 712–722 (2010)

Liu, Z., Li, X. Luo, P. Loy, C.-C., Tang, X.: Semantic image segmentation via deep parsing network. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 1377–1385, (2015)

Handa, A., Patraucean, V., Badrinarayanan, V. Stent, S., Cipolla, R.: Scenenet: Understanding real world indoor scenes with synthetic data. In: CVPR, (2016)

Song, S., Lichtenberg, S.P., Xiao, J.: Sun rgb-d: a rgb-d scene understanding benchmark suite,: In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 567–576, (2015)

H. Noh, S. Hong, B. Han: Learning deconvolution network for semantic segmentation. In: ICCV, pp. 1520–1528 (2015)

Moscheni, F., Bhattacharjee, S., Kunt, M.: Spatiotemporal segmentation based on region merging. IEEE Trans. Pattern Anal. Mach. Intell. 20(9), 897–915 (1998)

C. Zhang, L. Wang, and R. Yang: Semantic segmentation of urban scenes using dense depth maps. In: ECCV, pp. 708–721, Springer, (2010)

Caelles, S., Maninis, K.K., Pont-Tuset, J., Leal-Taixé, L., Cremers, D., Van Gool, L.: One-shot video object segmentation. In: CVPR, IEEE, (2017)

Arbelaez, P., Maire, M., Fowlkes, C., Malik, J.: Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33(5), 898–916 (2011)

Zhu, S., Liu, Y.: Video scene segmentation and semantic representation using a novel scheme. Multimedia Tools and Appl. 42(2), 183–205 (2009)

Hariharan, B., Arbelaez, P., Girshick, R., Malik, J.: Hypercolumns for object segmentation and fine-grained localization. In CVPR, pp. 447–456, (2015)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Kumar, N., Sukavanam, N. (2019). Keyframes and Shot Boundaries: The Attributes of Scene Segmentation and Classification. In: Yadav, N., Yadav, A., Bansal, J., Deep, K., Kim, J. (eds) Harmony Search and Nature Inspired Optimization Algorithms. Advances in Intelligent Systems and Computing, vol 741. Springer, Singapore. https://doi.org/10.1007/978-981-13-0761-4_74

Download citation

DOI: https://doi.org/10.1007/978-981-13-0761-4_74

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-13-0760-7

Online ISBN: 978-981-13-0761-4

eBook Packages: Intelligent Technologies and RoboticsIntelligent Technologies and Robotics (R0)