Abstract

The goal of unsupervised domain adaptation (UDA) is to eliminate the cross-domain discrepancy in probability distributions when no labeled target samples are available during training. Recent studies have revealed the benefit of deep convolutional features trained on a large dataset (e.g., ImageNet) in alleviating domain discrepancy. However, the transferability of features decreases as (i) the difference between the source and target domains increases, or (ii) the layers approach the top of the network. Therefore, even with deep features, domain adaptation remains necessary. In this paper, we treat UDA as a special case of semi-supervised learning, where the source samples are labeled while the target samples are unlabeled. Conventional semi-supervised learning methods, however, usually perform poorly for UDA. Due to domain discrepancy, label noise is generally inevitable when classifiers trained on source data are used to predict target samples. We therefore deploy a robust deep logistic regression loss on the target samples, resulting in our RDLR model. In this way, pseudo-labels are gradually assigned to unlabeled target samples according to their maximum classification scores during training. Extensive experiments show that our method yields state-of-the-art results, demonstrating the effectiveness of robust logistic regression classifiers in UDA.


1 Introduction

In many computer vision and pattern recognition applications, it is generally assumed that the training and test samples are independent and identically distributed (i.i.d.). This assumption, however, seldom holds in real-world scenarios, where the training and test data are collected with different sensors and in dissimilar settings [1,2,3], i.e., from different domains. Without loss of generality, we suppose that the training data come from the source domain and the test data from the target domain. Domain adaptation is thus introduced to learn a domain-invariant classifier from both target data and labeled source data [4, 5].

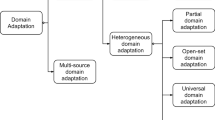

Domain adaptation is a special kind of transfer learning that aims to eliminate the distribution difference between two different but related domains [6, 7]. According to the availability of labeled samples in the target domain, domain adaptation methods fall into three types: supervised, semi-supervised, and unsupervised. In this work, we focus on unsupervised domain adaptation (UDA), where all the target samples are unlabeled during training.

In UDA, since the target samples are unlabeled, the classifier is generally trained by (1) minimizing the classification loss on source data, and (2) reducing the discrepancy in distributions between the source and target domains. So far, various classifiers, e.g., support vector machines (SVM) and logistic regression, have been deployed. A number of domain discrepancy metrics, such as maximum mean discrepancy (MMD) [5], distribution-matching embedding (DME) [8], and domain confusion [9], have also been presented.

With the progress in deep convolutional neural networks (CNNs), recent studies have shown that deep convolutional features trained on a large dataset (e.g., ImageNet) are beneficial in alleviating domain discrepancy [10]. Even so, as pointed out by Yosinski et al. [11], the transferability of features decreases as the domain difference increases and as the layers approach the top of the network. As a result, deep UDA methods have received considerable research interest in the last few years. In this paper, we present a semi-supervised learning (SSL) perspective on deep UDA. We note that most existing UDA methods [14, 16,17,18] only consider the domain discrepancy and do not make full use of the unlabeled target samples in an SSL manner. In fact, UDA can be treated as a special case of SSL, where the source samples are labeled while the target samples are unlabeled. Conventional SSL methods assume that the labeled and unlabeled samples are i.i.d., which generally does not hold for UDA due to domain discrepancy. Extending SSL to UDA therefore remains a challenging problem.

To extend SSL to UDA, we propose a robust deep logistic regression (RDLR) model. Analogous to the SSL methods in [12, 13], our RDLR alternates between (i) assigning pseudo-labels to target samples, and (ii) updating classifiers. Denote \(\mathbf{D }_{s} = \{(\mathbf{{x}}_{s,i}, y_{s,i})\}_{i=1}^{N_s}\) and \({\mathbf{D}}_{t} = \{{\mathbf{x}}_{t,i}\}_{i=1}^{N_t}\) as the labeled source data and unlabeled target data in training.

Even though the labels of the source samples are known, label noise is generally inevitable in the pseudo-labels \(\{\hat{y}_{t,i}\}_{i=1}^{N_t}\) obtained when the classifiers are used to predict target samples. We therefore simply adopt the logistic loss \({\mathcal {L}_s}(\mathbf{{W}}; \mathbf D _s)\) on the labeled source data, and use robust logistic regression losses on the target data to alleviate the adverse effect of labeling errors. Specifically, the confidence of a pseudo-label is assessed from two aspects: (i) the classification score, and (ii) the ratio between the two largest output values. Based on this confidence, we divide the target samples into three groups, i.e., samples with high, medium, and low confidence. For each group, a specific robust logistic regression loss is designed to improve robustness against label noise.

Furthermore, we stack the robust logistic regression model upon the CNN architecture to exploit the discriminative ability and transferability of deep representations. As illustrated in Fig. 1, in forward propagation we assign a pseudo-label and a confidence level to each target sample. According to the confidence level, a specific robust logistic regression loss (\(\ell _{TH}\), \(\ell _{TM}\), or \(\ell _{TL}\) in Fig. 1) is computed. In backward propagation, the model parameters are updated by minimizing the logistic loss on source samples together with the confidence-specific robust logistic loss on target samples. Extensive experiments have been conducted to evaluate the proposed RDLR model on the Office-Caltech dataset and the Office-31 dataset [20]. The results show that RDLR is effective in handling label errors and reducing domain discrepancy, and performs favorably in comparison with state-of-the-art UDA methods.

Fig. 1. The architecture of our proposed RDLR method based on AlexNet [19]. During training, the first two layers are frozen to avoid overfitting, and the other layers are optimized with both source and target data simultaneously. In forward propagation, pseudo-labels are assigned to target samples, which are divided into three groups: targets with high, medium, and low confidence (TH, TM, TL). In backward propagation, the model parameters are updated by minimizing the logistic loss (\({\mathcal {L}_s}\)) on source data and the confidence-specific robust logistic loss (\(\ell _{TH}\), \(\ell _{TM}\), or \(\ell _{TL}\)) on target data.

To sum up, the contribution of this paper is three-fold:

1. We present an SSL perspective for UDA. To remedy the label errors caused by domain discrepancy, we assess the confidence level of pseudo-labels for target samples, and propose three specific robust logistic regression losses for samples with high, medium, and low confidence, respectively.

2. By stacking robust logistic losses upon a CNN, we propose a robust deep logistic regression (RDLR) model for UDA in an SSL manner. The back-propagation algorithm is then deployed to learn the model parameters.

3. Experimental results on the Office-Caltech dataset and the Office-31 dataset validate the effectiveness of the proposed RDLR model in handling label errors and reducing domain discrepancy, and show that RDLR achieves state-of-the-art results for UDA.

The remainder of this paper is organized as follows. Section 2 presents the model and learning algorithm of RDLR. Section 3 evaluates our RDLR method on standard UDA benchmark datasets, i.e., the Office-Caltech dataset and the Office-31 dataset. Section 4 analyzes the parameter sensitivity and feature visualization of the proposed RDLR method. Section 5 concludes the paper.

2 Proposed Method

The architecture of our unsupervised deep domain adaptation method is shown in Fig. 1. It is based on AlexNet [19], which is composed of an input layer, 5 convolutional layers from conv1 to conv5 (including pooling layers), 3 fully connected layers from fc6 to fc8, and an output layer. In our model, the first two convolutional layers (conv1–conv2) are frozen to retain general features, and the other layers are fine-tuned or trained to learn task-specific features for classification. During training, source and target samples are fed into the network simultaneously, which helps fuse their knowledge in the deep architecture and reduce the distribution divergence across the two domains.

2.1 Assigning Pseudo Labels to Target Samples

For a training dataset \(\mathbf{D} = \{(\mathbf{x}_{n}, y_{n})\}_{n=1}^{N}\), the softmax logistic loss of a deep network, which is minimized to optimize the parameters \(\mathbf W\), is defined in Eq. (1).

where N is the number of training samples, K is the number of categories, \(z_{n,k} = f(\mathbf{x}_n, y_n; \mathbf{W})\) denotes the k-th output of the eighth fully connected layer (fc8) of AlexNet, and \(\delta(\cdot)\) is the Kronecker delta function, i.e., \(\delta(k, y_n) = 1\) if \(k = y_n\) and \(\delta(k, y_n) = 0\) otherwise.

The derivative with respect to \(z_{n,k}\) is given by Eq. (3).
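A minimal NumPy sketch of the standard softmax logistic loss and its gradient with respect to \(z_{n,k}\), written from the definitions above. It assumes the usual form averaged over the N samples; the function names are illustrative and this is not the authors' implementation.

```python
import numpy as np


def log_softmax(z):
    """Row-wise log-softmax of an (N, K) matrix, shifted for numerical stability."""
    zs = z - z.max(axis=1, keepdims=True)
    return zs - np.log(np.exp(zs).sum(axis=1, keepdims=True))


def softmax_logistic_loss(z, y):
    """Softmax logistic loss averaged over N samples.

    z: (N, K) fc8 outputs z_{n,k}; y: (N,) integer labels y_n.
    The 1/N normalization is an assumption.
    """
    n, k = z.shape
    delta = np.eye(k)[y]  # delta[n, k] = 1 iff k == y_n (Kronecker delta)
    return -np.sum(delta * log_softmax(z)) / n


def softmax_logistic_grad(z, y):
    """Gradient of the loss above: dL/dz_{n,k} = (p_{n,k} - delta(k, y_n)) / N."""
    n, k = z.shape
    p = np.exp(log_softmax(z))  # softmax probabilities p_{n,k}
    return (p - np.eye(k)[y]) / n
```

The gradient \(p_{n,k} - \delta(k, y_n)\) is negative for the labeled class and positive for every other class, and each row of it sums to zero.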

During training, we use stochastic gradient descent (SGD) to optimize the parameters \(\mathbf W\) of the deep network. The update of \(z_{n,k}\) can be described as follows.

where \(\eta\) denotes the learning rate and p denotes the training epoch of the deep network.
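To illustrate this update, the sketch below treats one sample's fc8 outputs as free variables and applies plain gradient steps \(z \leftarrow z - \eta\,\partial L/\partial z\), assuming the standard softmax gradient. In the actual network the update acts on \(\mathbf W\), so this is only a simplified picture; the toy values are arbitrary.

```python
import numpy as np


def logit_gradient_step(z, y, lr):
    """One step z <- z - lr * dL/dz for a single sample's logits z (shape (K,))."""
    e = np.exp(z - z.max())
    p = e / e.sum()                    # softmax probabilities
    grad = p - np.eye(z.size)[y]       # p_k - delta(k, y)
    return z - lr * grad


z0 = np.array([0.5, 1.0, -0.2])        # toy fc8 outputs; true class y = 0
z = z0.copy()
for _ in range(50):
    z = logit_gradient_step(z, y=0, lr=0.5)
# z[0] has grown while z[1] and z[2] have shrunk, so class 0 now has the top score.
```

Because \(p_y - 1 < 0\) and \(p_k > 0\) for \(k \ne y\), every step raises the labeled output and lowers all others, which is the widening gap described next.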

According to Eq. (4), for a training sample \((\mathbf{x}_{n}, y_{n})\), the fc8 output \(z_{n,k}\) with \(k = y_n\) increases as training proceeds, while the other outputs \(z_{n,k}\) \((k \ne y_n)\) decrease. In other words, the gap between \(z_{n,y_n}\) and the other outputs \(z_{n,k}\) \((k \ne y_n)\) of a sample is enlarged during optimization. Therefore, the values \(\{z_{n,1}, \cdots, z_{n,K}\}\) can be taken as the classification scores \(\{s_{n,1}, \cdots, s_{n,K}\}\), and the pseudo-label of a target sample is set to the class with the maximum classification score among \(\{s_{T,n,1}, \cdots, s_{T,n,K}\}\).

Let \(s_{T,n,max}\) and \(s_{T,n,max2}\) be the two largest values among \(\{s_{T,n,1}, \cdots, s_{T,n,K}\}\) of a target sample. From the definition of the pseudo-labels, we draw the following two inferences.

(1) Among target samples with the same pseudo-label, a sample with a larger maximum classification score \(s_{T,n,max}\) is more likely to be classified into its actual category.

(2) For a target sample, the ratio \(\mu_{T,n} = s_{T,n,max} / s_{T,n,max2}\) between its two largest classification scores indicates its noise level, which reflects how likely the prediction is to be correct. Hence, a target sample with a larger ratio \(\mu_{T,n}\) tends to have higher classification confidence.
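Both quantities above can be computed directly from a score matrix. A minimal NumPy sketch, assuming positive scores such as softmax probabilities (for raw fc8 outputs, which may be negative, the ratio \(\mu_{T,n}\) is not well defined); the function name is hypothetical.

```python
import numpy as np


def pseudo_labels_and_ratios(scores):
    """Pseudo-label, maximum score and top-2 ratio for each target sample.

    scores: (N, K) positive classification scores s_{T,n,k}.
    Returns (labels, s_max, mu) with mu = s_max / s_max2 >= 1.
    """
    order = np.argsort(scores, axis=1)       # class indices sorted ascending per row
    rows = np.arange(scores.shape[0])
    labels = order[:, -1]                    # argmax gives the pseudo-label
    s_max = scores[rows, order[:, -1]]       # largest score
    s_max2 = scores[rows, order[:, -2]]      # second-largest score
    return labels, s_max, s_max / s_max2
```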

2.2 Robust Deep Logistic Regression for Target Samples

Although the pseudo-labels \(\hat{\mathbf{Y}}_{T}\) of target samples are assigned in Sect. 2.1 according to their classification scores, they cannot be guaranteed to be correct. Target samples whose pseudo-labels match their actual categories \(\mathbf{Y}_{T}\) benefit the training of the domain adaptation model, whereas mislabeled ones harm it. To learn a robust domain adaptation model, the target samples should therefore be used according to their classification confidence when learning the parameters \(\mathbf W\) with the pseudo-labels \(\hat{\mathbf{Y}}_{T}\) fixed. We divide the target samples into three groups (samples with high, medium, and low classification confidence) and construct a robust logistic loss function for each group.
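The grouping step can be sketched as follows. The thresholds `score_thresh` and `ratio_thresh`, and the rule itself (high-confidence samples selected by maximum score, medium ones by top-2 ratio among the rest, everything else treated as low confidence), are hypothetical choices for illustration only.

```python
import numpy as np


def split_by_confidence(s_max, mu, score_thresh=0.9, ratio_thresh=2.0):
    """Split target samples into high / medium / low confidence groups.

    Hypothetical rule and thresholds:
      high   : s_max >= score_thresh
      medium : s_max <  score_thresh and mu >= ratio_thresh
      low    : all remaining samples
    Returns boolean masks for the TH, TM and TL groups.
    """
    high = s_max >= score_thresh
    medium = ~high & (mu >= ratio_thresh)
    low = ~high & ~medium
    return high, medium, low
```

Because the scores change as the network trains, such a split would be recomputed every epoch.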

Target samples with high classification confidence

The pseudo-labels of this group are the most likely to be correct. To reduce interference from other classes, we only take the pseudo-labels of these target samples into consideration, and the logistic loss function is given by

where \(N_{TH}\) is the number of target samples with high classification confidence, and \(\alpha\) is an adaptive constant used to weight these target samples.
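Assuming \(\ell_{TH}\) is an \(\alpha\)-weighted softmax logistic loss evaluated only at each sample's pseudo-label and averaged over \(N_{TH}\), it can be sketched as below. This is an illustrative reading, not the exact published equation; the function names are hypothetical.

```python
import numpy as np


def log_softmax(z):
    """Row-wise log-softmax, shifted for numerical stability."""
    zs = z - z.max(axis=1, keepdims=True)
    return zs - np.log(np.exp(zs).sum(axis=1, keepdims=True))


def loss_high(z, pseudo, alpha=0.8):
    """Assumed TH loss: -(alpha / N_TH) * sum_n log p_{n, pseudo_n}.

    z: (N_TH, K) fc8 outputs; pseudo: (N_TH,) pseudo-labels.
    """
    n = z.shape[0]
    return -alpha * log_softmax(z)[np.arange(n), pseudo].sum() / n
```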

Target samples with medium classification confidence

Although the classification scores of these target samples are smaller than those of the high-confidence group, their classification confidence is still relatively high. Besides the pseudo-labels, the ratio between the two largest classification scores is taken into consideration. Their logistic loss function is

where \(N_{TM}\) is the number of target samples with medium classification confidence, and \(\gamma_n \le \alpha\) is an adaptive weight of the n-th target sample that depends on the ratio \(\mu_{TM,n}\) between its two largest classification scores.
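Similarly, assuming \(\ell_{TM}\) replaces the constant \(\alpha\) with a per-sample weight \(\gamma_n \le \alpha\) and averages over \(N_{TM}\), a sketch looks like this. How \(\gamma_n\) is computed from \(\mu_{TM,n}\) (Eq. (10)) is not reproduced, so the weights are passed in directly; the function names are hypothetical.

```python
import numpy as np


def log_softmax(z):
    """Row-wise log-softmax, shifted for numerical stability."""
    zs = z - z.max(axis=1, keepdims=True)
    return zs - np.log(np.exp(zs).sum(axis=1, keepdims=True))


def loss_medium(z, pseudo, gamma, alpha=0.8):
    """Assumed TM loss: -(1 / N_TM) * sum_n gamma_n * log p_{n, pseudo_n}.

    z: (N_TM, K) fc8 outputs; pseudo: (N_TM,) pseudo-labels;
    gamma: (N_TM,) per-sample weights, required to satisfy gamma_n <= alpha.
    """
    if np.any(gamma > alpha):
        raise ValueError("expected gamma_n <= alpha")
    n = z.shape[0]
    return -(gamma * log_softmax(z)[np.arange(n), pseudo]).sum() / n
```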

Target samples with low classification confidence

The classification confidence of these target samples is lower than that of the other two groups. To learn a robust domain adaptation model, we take other classes into consideration besides the pseudo-labels. Their logistic loss function is given in Eq. (7).

where \(\beta_1 \ge \beta_2\), and \(N_{TL}\) is the number of target samples with low classification confidence.
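Eq. (7) is not spelled out here, so the sketch below uses one hypothetical reading for illustration only: the log-probability of the pseudo-label class is weighted by \(\beta_1\) and that of the runner-up class by \(\beta_2\), with \(\beta_1 \ge \beta_2\). The defaults follow the values reported in Sect. 3.2; the function names are hypothetical.

```python
import numpy as np


def log_softmax(z):
    """Row-wise log-softmax, shifted for numerical stability."""
    zs = z - z.max(axis=1, keepdims=True)
    return zs - np.log(np.exp(zs).sum(axis=1, keepdims=True))


def loss_low(z, beta1=0.06, beta2=0.04):
    """HYPOTHETICAL TL loss: beta1 on the top-1 class, beta2 on the runner-up.

    z: (N_TL, K) fc8 outputs. Not the paper's exact Eq. (7).
    """
    if beta1 < beta2:
        raise ValueError("expected beta1 >= beta2")
    n = z.shape[0]
    rows = np.arange(n)
    order = np.argsort(z, axis=1)            # ascending class order per row
    lp = log_softmax(z)
    top1 = lp[rows, order[:, -1]]            # pseudo-label class
    top2 = lp[rows, order[:, -2]]            # second most likely class
    return -(beta1 * top1 + beta2 * top2).sum() / n
```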

The global objective function

Combining the objective functions above yields the global objective function for optimizing the parameters \(\mathbf{W}\), given in Eq. (8).

where \(L_S(\mathbf{W})\) is the objective function for labeled source data, which is the same as Eq. (1). The goal is to minimize the objective function \(L_G(\mathbf{W})\) with respect to the parameters \(\mathbf W\) of the deep network. The parameters updated via SGD in epoch p + 1 are given by

where \(\mathbf Z \) stands for the representation of target samples in the fc8 layer and \(\eta \) is the learning rate.
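A compact sketch of how source and target terms combine into one objective and drive a shared SGD update. As assumptions for illustration, a linear softmax classifier \(z = \mathbf{x}\mathbf{W}\) stands in for the network, all target terms are folded into one per-sample-weighted pseudo-label loss (weights such as \(\alpha\) or \(\gamma_n\)), and the exact Eq. (8), including the role of \(\lambda\), is not reproduced. The function names are hypothetical.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax, shifted for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def weighted_logistic(W, X, y, w):
    """Weighted logistic loss of a linear softmax model z = X @ W and its gradient w.r.t. W.

    w: per-sample weights (1 for source samples; e.g. alpha or gamma_n for target samples).
    """
    n, k = X.shape[0], W.shape[1]
    p = softmax(X @ W)
    loss = -(w * np.log(p[np.arange(n), y])).sum() / n
    grad = X.T @ (w[:, None] * (p - np.eye(k)[y])) / n
    return loss, grad


def global_sgd_step(W, Xs, ys, Xt, yt_hat, wt, lr):
    """One step on (source loss) + (weighted pseudo-label loss on target data).

    Returns the updated W and the objective value before the update.
    """
    ls, gs = weighted_logistic(W, Xs, ys, np.ones(len(ys)))
    lt, gt = weighted_logistic(W, Xt, yt_hat, wt)
    return W - lr * (gs + gt), ls + lt
```

Both gradients flow into the same \(\mathbf W\), which is how the target pseudo-labels shape the shared classifier.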

3 Experiments

To analyze the effectiveness of the proposed RDLR, we conducted experiments on unsupervised adaptation problems over the Office-31 dataset [20] and the Office-Caltech dataset [14], two standard domain adaptation benchmarks. We then compared RDLR with other state-of-the-art domain adaptation methods and found that our method was more effective on unlabeled target domains.

3.1 Setup

The Office-31 dataset used in our experiments is composed of three distinct domains: Amazon (A), Webcam (W) and DSLR (D). The Amazon images were collected from www.amazon.com, and the Webcam and DSLR images were taken with a web camera and a digital SLR camera, respectively. Each domain contains 31 categories of common objects, such as tables, bottles, mugs and bags, found in a typical office/home environment. There are 4110 images in total, with an average of 16 images per category for DSLR, 90 for Amazon, and 26 for Webcam. The Office-Caltech dataset consists of the 10 categories shared by the Office-31 dataset [20] (Amazon, Webcam and DSLR) and the Caltech-256 (C) dataset [21]. Its Amazon, Webcam, DSLR and Caltech domains contain 958, 295, 157 and 1123 images, respectively, for a total of 2,533 images. As these images were acquired in unconstrained settings with inconsistent lighting and backgrounds, they highlight the challenges of object recognition and domain adaptation. Some images from the Office-Caltech dataset are shown in Fig. 2.

In our experiments, we minimally pre-processed the images to fit AlexNet [19]: (1) all images were rescaled to \(270\times 270\) pixels; (2) the corresponding mean pixel over all source images was subtracted from each pixel of an image, and the results were divided by the maximum resulting value over all source images.
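The two normalization steps can be sketched in NumPy as follows. The sketch assumes that images are already resized to \(270\times 270\) and stacked as float arrays, that "corresponding mean pixel" means the per-position mean over all source images, and that target images are normalized with the same source statistics. The function name is hypothetical.

```python
import numpy as np


def preprocess(source, target):
    """Normalize source and target images with source statistics.

    source, target: float arrays of shape (N, H, W, C), already resized.
    Step 1: subtract the per-position mean image of all source images.
    Step 2: divide by the maximum mean-subtracted value over all source images.
    """
    mean_img = source.mean(axis=0)           # mean pixel at each position
    src_centered = source - mean_img
    scale = src_centered.max()               # maximum result over the source set
    return src_centered / scale, (target - mean_img) / scale
```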

For all experiments, we initialized the deep CNN parameters from conv1 to fc7 using the pre-trained AlexNet [19] parameters downloaded from the MatConvNet website [23]. As the lower layers of deep networks learn general features while the higher layers extract task-specific features that contribute to classification [11], we froze the first two convolutional layers, fine-tuned the conv4–conv5 layers and the fully connected layers fc6–fc7, and trained the last layer fc8. SGD in our model used a momentum of 0.9.
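This schedule amounts to SGD with momentum 0.9 in which the frozen layers receive no update. A minimal sketch, assuming the classic heavy-ball form (the exact update rule of the authors' MatConvNet setup is not stated) and using layer names purely as dictionary keys; the function name is hypothetical.

```python
import numpy as np


def sgd_momentum_step(params, grads, velocity, lr, momentum=0.9, frozen=()):
    """Heavy-ball SGD: v <- momentum * v - lr * g, then theta <- theta + v.

    params, grads, velocity: dicts mapping a layer name to a NumPy array.
    Layers named in `frozen` (e.g. "conv1", "conv2") are skipped entirely.
    """
    for name in params:
        if name in frozen:
            continue  # frozen layer: keep pre-trained weights unchanged
        velocity[name] = momentum * velocity[name] - lr * grads[name]
        params[name] = params[name] + velocity[name]
    return params, velocity
```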

3.2 Evaluation

In the domain adaptation experiments, we used the complete labeled source data as training data and the complete unlabeled target data as test data for the unsupervised problems. We made this choice primarily to have more training data per domain adaptation task, and also for the convenience of training the domain adaptation model [14]. Before training our proposed models, we first fine-tuned the initialized AlexNet using only the labeled source data until its classification accuracy no longer improved, for every domain of the Office-31 and Office-Caltech datasets. The learning rate for fine-tuning was set to 0.001 and the batch size to 128. The classification results of the fine-tuned AlexNet on the two datasets are shown in Tables 1 and 2.

Evaluation on Office-Caltech Dataset

When evaluating our models on the Office-Caltech dataset, the learning rate was initially set to 0.0001 and was adjusted if training became unstable; the other parameters were \(\lambda = 2\), \(\alpha = 0.8\), \(\beta _1 = 0.06\) and \(\beta _2 = 0.04\). The value of the parameter \(\gamma _n\) is given by Eq. (10). These parameters are analyzed in Sect. 4.1.

where \(\mu_{TM,n}\) denotes the ratio between the two largest classification scores \(s_{TM,n,max}\) and \(s_{TM,n,max2}\) of the n-th target sample with medium classification confidence.

In Table 1, RDLR0 denotes the unsupervised deep domain adaptation model trained only with the target samples whose pseudo-labels have high or medium classification confidence, and RDLR1 denotes the proposed method optimized with all the target samples. Geodesic flow kernel (GFK) [14], transfer component analysis (TCA) [5], subspace alignment (SA) [15], and JCSL [3] are shallow transfer learning methods, while the others, namely deep transfer network (DTN) [22], DASH-N [18], AlexNet [19], and our RDLR0 and RDLR1, are based on deep learning.

The results of the compared methods are taken from their original papers. The deep learning methods clearly transfer much better than the shallow models. In particular, our methods perform well on all 12 cross-domain train/test splits in the unsupervised setting: compared with AlexNet trained only on source data, the average accuracy improves by about 11.57% for RDLR0 and 13.41% for RDLR1, and by more than 20% in some cases. Moreover, RDLR1 outperforms RDLR0 in most domain adaptation tasks.

Evaluation on Office-31 Dataset

In this group of experiments, the adaptive parameters of our model are the same as those used on the Office-Caltech dataset. Table 2 shows the classification results of several algorithms on the Office-31 dataset, where TCA [5], GFK [14] and domain adaptive neural networks (DaNN) [25] are based on shallow transfer learning, while the others, namely DDC [16], DLID [17], DASH-N [18], AlexNet [19], and our RDLR0 and RDLR1, are based on deep learning. Our methods clearly outperform the compared methods; in particular, the average classification accuracy of RDLR1 on the cross-domain tasks is about 7% higher than that of the AlexNet baseline.

4 Analysis

Here we first analyze the sensitivity of the parameters in our method. Then, through feature visualization, we show that our method can reduce the distribution discrepancy between two different domains.

4.1 Parameter Sensitivity

During optimization of the proposed model, the maximum classification scores of the target samples and the ratios between their two largest scores keep changing, so the three groups \(\mathbf{X}_{TH}\), \(\mathbf{X}_{TM}\), \(\mathbf{X}_{TL}\) have to be reselected in each training epoch. The adaptive parameters \(\alpha\), \(\gamma\), \(\beta_1\) and \(\beta_2\) weight the different groups of target samples. Since \(\gamma\) depends on \(\alpha\), whose effect is reflected jointly by the parameters \(\lambda\), \(\beta_1\) and \(\beta_2\), we only investigate the effects of \(\lambda\), \(\beta_1\) and \(\beta_2\) on the cross-domain tasks.

First, the sensitivity to \(\lambda\) was evaluated with \(\beta _1\) and \(\beta _2\) set to 0 and \(\alpha = 0.8\). The resulting cross-domain classification performance, shown in Fig. 3, indicates that all three domain adaptation tasks achieved their highest accuracy at \(\lambda = 2\). Then, with \(\lambda = 2\), we tested the sensitivity to \(\beta _1\) and \(\beta _2\), fixing one while varying the other. As shown in Fig. 4(a) and (b), the model performed best with \(\beta _1 = 0.06\) and \(\beta _2 = 0.04\). Therefore, in our domain adaptation experiments, the parameters were set to \(\lambda = 2\), \(\beta _1 = 0.06\), \(\beta _2 = 0.04\), and \(\alpha = 0.8\).

4.2 Feature Visualization

The proposed logistic loss functions use both source and target data simultaneously to train the domain adaptation model, so that their distribution discrepancy is reduced by fusing their knowledge. To verify this, we used t-Distributed Stochastic Neighbor Embedding (t-SNE) [24] to visualize the features for the cross-domain task D\(\rightarrow \)W before and after domain adaptation (DA). The 4096-dimensional features were extracted from the fc7 layer of AlexNet.

The feature visualization for the cross-domain task is shown in Fig. 5, where dots represent source samples and ‘\(\times \)’ denotes target samples; different colors denote different categories. Figure 5(a) and (b) show the features before and after domain adaptation, respectively. Compared with the features obtained without domain adaptation, the source and target features extracted from our model are much more intermixed, which indicates that the distribution of the target data becomes much more consistent with that of the source data in our domain adaptation model.

5 Conclusion

In this paper, we proposed a novel method called RDLR, which uses both source and target data to train an unsupervised deep domain adaptation model. During optimization, unlabeled target samples are assigned pseudo-labels based on their maximum classification scores, and robust logistic loss functions are built and weighted according to their classification confidence. In this way, the knowledge of the source and target domains is learned simultaneously and fused, which decreases the distribution discrepancy and improves the domain adaptation ability.

In experimental evaluations, our method achieved state-of-the-art results on two benchmark datasets. When all the unlabeled target samples were used to train the proposed model, the average classification accuracy on cross-domain tasks improved by more than 13% on the Office-Caltech dataset and by nearly 7% on the Office-31 dataset compared with the AlexNet baseline.

References

Torralba, A., Efros, A.A.: Unbiased look at dataset bias. In: IEEE Computer Vision and Pattern Recognition (CVPR), pp. 1521–1528. IEEE (2011)

Shimodaira, H.: Improving predictive inference under covariate shift by weighting the log-likelihood function. J. Stat. Plann. Infer. 90(2), 227–244 (2000)

Fernando, B., Tommasi, T., Tuytelaars, T.: Joint cross-domain classification and subspace learning for unsupervised adaptation. Pattern Recogn. Lett. 65, 60–66 (2015)

Daumé III, H., Kumar, A., Saha, A.: Frustratingly easy semi-supervised domain adaptation. In: Proceedings of the 2010 Workshop on Domain Adaptation for Natural Language Processing, pp. 53–59. Association for Computational Linguistics (2010)

Pan, S.J., Tsang, I.W., Kwok, J.T., Yang, Q.: Domain adaptation via transfer component analysis. IEEE Trans. Neural Netw. 22(2), 199–210 (2011)

Pan, S.J., Yang, Q.: A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 22(10), 1345–1359 (2010)

Ghosn, J., Bengio, Y.: Bias learning, knowledge sharing. IEEE Trans. Neural Netw. 14(4), 748–765 (2003)

Baktashmotlagh, M., Harandi, M., Salzmann, M.: Distribution-matching embedding for visual domain adaptation. J. Mach. Learn. Res. 17(1), 3760–3789 (2016)

Tzeng, E., Hoffman, J., Darrell, T., Saenko, K.: Simultaneous deep transfer across domains and tasks. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp. 4068–4076. IEEE (2015)

Donahue, J., Jia, Y., Vinyals, O., Hoffman, J., Zhang, N., Tzeng, E., Darrell, T.: DeCAF: a deep convolutional activation feature for generic visual recognition. In: International Conference on Machine Learning (ICML), pp. 647–655 (2014)

Yosinski, J., Clune, J., Bengio, Y., Lipson, H.: How transferable are features in deep neural networks? In: Advances in Neural Information Processing Systems, pp. 3320–3328 (2014)

Amini, M.-R., Gallinari, P.: The use of unlabeled data to improve supervised learning for text summarization. In: Proceedings of the 25th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval (ACM), pp. 105–112 (2002)

Vapnik, V., Sterin, A.: On structural risk minimization or overall risk in a problem of pattern recognition. Autom. Remote Control 10(3), 1495–1503 (1977)

Gong, B., Shi, Y., Sha, F., Grauman, K.: Geodesic flow kernel for unsupervised domain adaptation. In: IEEE Computer Vision and Pattern Recognition (CVPR), pp. 2066–2073. IEEE (2012)

Fernando, B., Habrard, A., Sebban, M., Tuytelaars, T.: Unsupervised visual domain adaptation using subspace alignment. In: IEEE Computer Vision and Pattern Recognition (CVPR), pp. 2960–2967. IEEE (2013)

Tzeng, E., Hoffman, J., Zhang, N., Saenko, K., Darrell, T.: Deep domain confusion: maximizing for domain invariance. arXiv preprint arXiv:1412.3474 (2014)

Chopra, S., Balakrishnan, S., Gopalan, R.: DLID: deep learning for domain adaptation by interpolating between domains. In: ICML Workshop on Challenges in Representation Learning, vol. 2, no. 6 (2013)

Nguyen, H.V., Ho, H.T., Patel, V.M., Chellappa, R.: DASH-N: joint hierarchical domain adaptation and feature learning. IEEE Trans. Image Process. 24(12), 5479–5491 (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Advances in Neural Information Processing Systems, pp. 1097–1105 (2012)

Saenko, K., Kulis, B., Fritz, M., Darrell, T.: Adapting visual category models to new domains. In: Daniilidis, K., Maragos, P., Paragios, N. (eds.) ECCV 2010. LNCS, vol. 6314, pp. 213–226. Springer, Heidelberg (2010). https://doi.org/10.1007/978-3-642-15561-1_16

Griffin, G., Holub, A., Perona, P.: Caltech-256 object category dataset. California Institute of Technology (2007)

Zhang, X., Yu, F.X., Chang, S.-F., Wang, S.: Deep transfer network: unsupervised domain adaptation. arXiv preprint arXiv:1503.00591 (2015)

van der Maaten, L.: Accelerating t-SNE using tree-based algorithms. J. Mach. Learn. Res. 15(1), 3221–3245 (2014)

Ghifary, M., Kleijn, W.B., Zhang, M.: Domain adaptive neural networks for object recognition. In: Pham, D.-N., Park, S.-B. (eds.) PRICAI 2014. LNCS (LNAI), vol. 8862, pp. 898–904. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-13560-1_76

Acknowledgements

The work is partially supported by the GRF fund from the HKSAR Government, the central fund from Hong Kong Polytechnic University, the NSFC fund (61332011, 61671182, 50905040) and Shenzhen Fundamental Research fund (JCYJ20150403161923528, JCYJ20140508160910917). Besides, we gratefully acknowledge NVIDIA corporation providing the Tesla K40c GPU for our research.


© 2018 Springer International Publishing AG, part of Springer Nature

About this paper

Cite this paper

Wu, G., Chen, W., Zuo, W., Zhang, D. (2018). Unsupervised Domain Adaptation with Robust Deep Logistic Regression. In: Paul, M., Hitoshi, C., Huang, Q. (eds) Image and Video Technology. PSIVT 2017. Lecture Notes in Computer Science(), vol 10749. Springer, Cham. https://doi.org/10.1007/978-3-319-75786-5_17


DOI: https://doi.org/10.1007/978-3-319-75786-5_17


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-75785-8

Online ISBN: 978-3-319-75786-5

eBook Packages: Computer Science (R0)