Abstract

Two important control strategies for Rough Set based reduct computation are Sequential Forward Selection (SFS) and Sequential Backward Elimination (SBE). SBE methods have the inherent advantage of yielding a reduct, whereas SFS approaches usually yield a superset of a reduct. Fuzzy rough sets are an extension of rough sets used for reduct computation in Hybrid Decision Systems. SBE-based fuzzy rough reduct computation has not been attempted by researchers to date, because the fuzzy similarity relation of a set of attributes does not, in general, yield the fuzzy similarity relation of its subsets. This paper proposes a novel SBE approach for reduct computation in Gaussian Kernel-based fuzzy rough sets. The time complexity of the proposed approach is of third order, whereas that of existing approaches is of fourth order. Empirical experiments conducted on standard benchmark datasets establish the relevance of the proposed approach.


1 Introduction

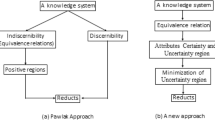

Feature selection is an important dimensionality reduction technique, widely used in Data Mining and Machine Learning, and a crucial preprocessing stage in Knowledge Discovery in Databases (KDD). Feature selection, or feature subset selection, is the process of selecting a subset of features by removing redundant features without information loss. Pawlak [13] developed Rough Set Theory (RST), which has become a popular soft computing methodology for knowledge discovery under uncertainty. A reduct is a subset of features selected using RST.

Classical RST is used for reduct computation in complete symbolic decision systems, but it is inappropriate for reduct computation in real-valued or hybrid decision systems (HDS). The fuzzy rough set model was introduced by Dubois and Prade [3] in 1990 to deal with hybrid decision systems. Several extensions of the Dubois and Prade fuzzy-rough set model have been introduced in the literature, such as Radzikowska and Kerre's model [14], Hu's model [5] and the Gaussian Kernel-based Fuzzy Rough Set model [6]. In parallel, reduct computation approaches have been developed for these models, primarily Sequential Forward Selection (SFS) based algorithms [4, 6, 7, 9, 16,17,18,19,20].

Computing all possible reducts of a given decision system, or computing a minimum-length (optimal) reduct, has been proved to be NP-hard [14]. Hence researchers develop heuristic algorithms for near-optimal reduct computation. Two important aspects of a reduct computation algorithm are the dependency measure (heuristic) used to assess the quality of the reduct and the control strategy used for attribute selection. The two main control strategies in the literature are SFS and SBE. In SFS, the reduct is initialized to the empty set, and in every iteration the attribute with the optimal heuristic measure is added to the reduct until the end condition is reached. In SBE, the reduct is initialized to the set of all attributes, and each attribute is tested to check whether its omission leads to information loss; if it does not, the attribute is redundant and is removed from the reduct. SBE approaches always result in a reduct without redundancy, whereas SFS approaches cannot guarantee a redundancy-free reduct.
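To make the difference between the two control strategies concrete, the following Python sketch runs both on a hypothetical four-object symbolic decision table, using the classical rough-set dependency \(\gamma_B(d) = |POS_B(d)|/|U|\) as the heuristic. The table, the tie-breaking rule (the first maximum wins) and the function names are illustrative assumptions, not taken from this paper, and the sketch uses crisp rather than fuzzy rough sets.

```python
from collections import defaultdict


def dependency(table, decision, attrs):
    """Classical rough-set dependency gamma_B(d) = |POS_B(d)| / |U|."""
    blocks = defaultdict(list)  # equivalence classes of IND(B)
    for i, row in enumerate(table):
        blocks[tuple(row[a] for a in attrs)].append(i)
    # an equivalence class belongs to the positive region if it is decision-pure
    pos = sum(len(b) for b in blocks.values()
              if len({decision[i] for i in b}) == 1)
    return pos / len(table)


def sfs(table, decision):
    """Sequential Forward Selection: greedily add the best attribute."""
    attrs = range(len(table[0]))
    full = dependency(table, decision, list(attrs))
    red = []
    while dependency(table, decision, red) < full:
        best = max((a for a in attrs if a not in red),
                   key=lambda a: dependency(table, decision, red + [a]))
        red.append(best)
    return sorted(red)


def sbe(table, decision):
    """Sequential Backward Elimination: drop attributes that lose no information."""
    red = list(range(len(table[0])))
    full = dependency(table, decision, red)
    for a in list(red):
        rest = [b for b in red if b != a]
        if dependency(table, decision, rest) == full:
            red = rest
    return red


# Hypothetical table: columns are attributes 0, 1, 2; d is the decision.
table = [[0, 0, 0], [0, 0, 1], [0, 1, 0], [1, 1, 1]]
d = [0, 1, 1, 0]
print(sfs(table, d), sbe(table, d))  # [0, 1, 2] [1, 2]
```

On this table SFS first picks attribute 0 (the only attribute with non-zero dependency on its own) and ends with {0, 1, 2}, while SBE finds that attribute 0 is redundant once attributes 1 and 2 are present and returns {1, 2}.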

The complexity of reduct computation is much higher in fuzzy rough sets than in classical rough sets. While many SFS and SBE approaches are available for classical rough sets, our exploration of the literature found no SBE approach for fuzzy rough sets.

This paper presents an efficient and effective SBE-based reduct computation algorithm for Gaussian Kernel-based fuzzy rough sets (GK-FRS). The proposed method is significant because its theoretical time complexity is of third order, \(O(|C||U|^2)\), which is considerably better than the fourth-order complexity, \(O(|C|^2|U|^2)\), of existing fuzzy rough set reduct algorithms. Here |C| and |U| denote the number of conditional attributes and the number of objects in the universe, respectively.

The rest of this paper is organized as follows. Section 2 discusses the basic concepts of rough sets and fuzzy rough sets. Section 3 describes the fundamentals of GK-FRS. Section 4 details the proposed SBE approach for reduct computation in GK-FRS. Section 5 presents the experiments and results, and Sect. 6 concludes the paper.

2 Theoretical Background

2.1 Rough Sets and Fuzzy-Rough Sets

Rough Set Theory is a useful tool for discovering data dependencies and reducing the dimensionality of data using the data alone. Fuzzy-rough sets are a hybrid of rough sets and fuzzy sets that can deal with quantitative data. Basic rough set theory and fuzzy-rough set theory are described in [4, 18]. The concepts of GK-FRS are described below using Hybrid Decision Systems (HDS).

An HDS is represented as \((U, C \cup \{d\}, V, f)\), where U is the set of objects; C is a set of heterogeneous conditional attributes (quantitative, qualitative, logical, set-valued, interval-based, etc.); d is the qualitative decision attribute; V is the set of attribute values; and f is the information function that assigns to each object its value on each attribute.

2.2 Gaussian Kernel Function

The Gaussian function is a popular kernel, extensively used in SVMs and RBF neural networks. The similarity between two objects \(u_i, u_j \in U\) is computed using the Gaussian kernel function \(k(u_i, u_j)\) given in Eq. (1):

\(k(u_i, u_j) = \exp\left(-\frac{||u_i - u_j||^2}{2\delta^2}\right)\)   (1)

Here \(||u_i - u_j||\) is the distance between objects \(u_i\) and \(u_j\), and \(\delta\) is a user-controlled parameter that influences the quality of the resulting approximation. The distance \(||u_i - u_j||\) is computed as [20]:

where \(a\in C\) is a quantitative conditional attribute and \(\delta _a\) represents the standard deviation of a.
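A minimal Python sketch of the Gaussian kernel similarity matrix \(R_G^B\) for a set B of quantitative attributes follows. Because the distance formula of [20] is not reproduced above, the sketch assumes a standardized squared Euclidean distance, \(||u_i-u_j||^2 = \sum_{a\in B} ((f(u_i,a)-f(u_j,a))/\delta_a)^2\) with \(\delta_a\) the standard deviation of a, and the kernel of Eq. (1); the function name `gaussian_similarity` and the default \(\delta = 0.5\) are hypothetical.

```python
import math


def gaussian_similarity(data, attrs, delta=0.5):
    """Return R_G^B, the |U| x |U| similarity matrix for B = attrs.

    Hypothetical helper. Assumes the squared distance is the sum over a in B of
    ((f(u_i, a) - f(u_j, a)) / std_a)^2 and the kernel exp(-dist^2 / (2 delta^2)).
    """
    n = len(data)
    stds = {}
    for a in attrs:
        col = [row[a] for row in data]
        mean = sum(col) / n
        stds[a] = math.sqrt(sum((v - mean) ** 2 for v in col) / n)
    matrix = [[1.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            # attributes with zero spread cannot separate objects, so skip them
            dist2 = sum(((data[i][a] - data[j][a]) / stds[a]) ** 2
                        for a in attrs if stds[a] > 0)
            matrix[i][j] = math.exp(-dist2 / (2 * delta ** 2))
    return matrix


# Hypothetical objects described by two quantitative attributes.
data = [[0.0, 1.5], [0.2, 1.0], [1.0, 0.2]]
r = gaussian_similarity(data, [0, 1])
print([round(v, 3) for v in r[0]])
```

The resulting matrix has ones on the diagonal, is symmetric, and object 0 is more similar to the nearby object 1 than to object 2.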

3 Gaussian Kernel Based Fuzzy Rough Sets

Kernel methods and rough set theory are two important tools in pattern recognition. A kernel function maps the data into a high-dimensional space, whereas rough sets approximate the space. Qinghua Hu et al. [6] introduced Gaussian kernel-based fuzzy rough sets (GK-FRS) by incorporating the Gaussian kernel function into fuzzy-rough sets. The Gaussian kernel-based fuzzy lower and upper approximations [6, 20] of a decision system are calculated as:

where \(d_i \in U/\{d\}\) and \(x\in U\). For a given \(B \subseteq C\), \(R_G^B\) denotes the Gaussian Kernel-based fuzzy similarity relation, expressed as a matrix of order |U| \(\times \) |U|. For any \(x,y \in U\), \(R_G^B(x,y)\) is the fuzzy similarity between objects x and y based on the attributes in B. By Proposition 3 in [20], \(R_G^{\{a\}\cup \{b\}}\) can be calculated from \(R_G^{\{a\}}\) and \(R_G^{\{b\}}\) by element-wise matrix multiplication, as given in Eq. (5):

\(R_G^{\{a\}\cup \{b\}} = R_G^{\{a\}} * R_G^{\{b\}}\)   (5)
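Eq. (5) holds whenever the squared distance is a sum of per-attribute terms, since \(e^{-(x+y)} = e^{-x}e^{-y}\). The Python check below, a sketch assuming the standardized squared Euclidean distance (the formula of [20] is not reproduced in this text), builds the single-attribute matrices and the two-attribute matrix independently and compares them entry by entry. The helper names and data are hypothetical.

```python
import math


def gaussian_similarity(data, attrs, delta=0.5):
    """R_G^B under an assumed standardized squared Euclidean distance.

    Hypothetical helper; assumes every attribute in attrs has non-zero spread.
    """
    n = len(data)
    stds = {}
    for a in attrs:
        col = [row[a] for row in data]
        mean = sum(col) / n
        stds[a] = math.sqrt(sum((v - mean) ** 2 for v in col) / n)
    return [[math.exp(-sum(((data[i][a] - data[j][a]) / stds[a]) ** 2
                           for a in attrs) / (2 * delta ** 2))
             for j in range(n)] for i in range(n)]


def elementwise_product(m1, m2):
    """The '*' operator of Eq. (5): entry-by-entry multiplication."""
    return [[x * y for x, y in zip(r1, r2)] for r1, r2 in zip(m1, m2)]


data = [[0.0, 1.5], [0.2, 1.0], [1.0, 0.2], [0.7, 0.9]]
joint = gaussian_similarity(data, [0, 1])
product = elementwise_product(gaussian_similarity(data, [0]),
                              gaussian_similarity(data, [1]))
same = all(math.isclose(x, y, rel_tol=1e-12)
           for rj, rp in zip(joint, product) for x, y in zip(rj, rp))
print(same)  # True
```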

The decision attribute partitions U into the equivalence classes \(U/\{d\} = \{d_1, d_2, \ldots, d_l\}\). The fuzzy positive region is computed as:

\(POS_B(d) = \bigcup_{i=1}^{l} \underline{R_G^B} d_i\)   (6)

The degree of dependency of d on \(B\subseteq C\) is given by

\(\gamma_B(d) = \frac{|\bigcup_{i=1}^{l} \underline{R_G^B} d_i|}{|U|}\)   (7)

where \(|\bigcup _{i=1}^l \underline{R_G^B} d_i| = \sum _i \sum _{x\in d_i} \underline{R_G^B} d_i(x)\).
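The dependency measure can be sketched in Python as follows. The lower-approximation formula is not reproduced in the text above, so the sketch assumes the form used for GK-FRS by Hu et al. [6], \(\underline{R_G^B} d_i(x) = \inf_{y \notin d_i} \sqrt{1 - R_G^B(x,y)^2}\); under this form each object contributes only through the lower approximation of its own decision class, which matches the double sum above. The function name and matrices are hypothetical.

```python
import math


def gk_dependency(r, labels):
    """gamma_B(d) from a similarity matrix r = R_G^B and decision labels.

    For each x, the lower approximation of its own decision class is taken as
    inf over objects y of other classes of sqrt(1 - R(x, y)^2) (assumed form).
    """
    n = len(labels)
    total = 0.0
    for i in range(n):
        others = [math.sqrt(max(0.0, 1.0 - r[i][j] ** 2))
                  for j in range(n) if labels[j] != labels[i]]
        # if every object shares x's class, x lies fully in the positive region
        total += min(others) if others else 1.0
    return total / n


labels = [0, 0, 1]
crisp = [[1.0, 1.0, 0.0], [1.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(gk_dependency(crisp, labels))  # 1.0
```

A crisp relation that never confuses objects of different classes gives dependency 1, while a relation under which all objects are fully similar gives 0.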

In 2010, Qinghua Hu et al. [6] proposed the feature selection algorithm FS-GKA, which is based on computing the Dependency with Gaussian Kernel Approximation (DGKA). Later, Zeng et al. [20] used DGKA to propose the FRSA-NFS-HIS algorithm for feature selection in HDS. In 2016, Ghosh et al. [4] proposed an improved DGKA algorithm (IDGKA) for dependency computation and used it to develop the algorithm MFRSA-NFS-HIS.

4 Proposed Backward Elimination Approach for Feature Selection

By its nature, SBE-based reduct computation requires |C| iterations irrespective of the size of the reduct Red, whereas SFS-based reduct computation benefits from the number of iterations being limited by the size of the reduct |Red|. Beyond this, the primary source of the computational cost of SBE is that the relational representation (the indiscernibility relation in classical RST, the fuzzy similarity relation in fuzzy rough sets) of \(C-\{a\}\) (\(\forall a \in \) C) cannot, in general, be obtained from the relational representation of C. For example, in fuzzy rough sets the fuzzy similarity matrix \(R^{C-\{a\}}\) usually cannot be derived directly from \(R^C\). Hence \(R^{C-\{a\}}\) must be recomputed from the similarity matrices of the attributes in \(C-\{a\}\).
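The traditional recomputation can be sketched in Python: for a candidate attribute a, \(R^{Red-\{a\}}\) is rebuilt by multiplying the atomic matrices of all other attributes in Red element-wise, which costs |Red| − 2 matrix products per candidate and \(O(|C|^2)\) products over a full backward pass. The helper name, the returned product counter and the 2 × 2 matrices are illustrative assumptions.

```python
def rebuild_without(atomic, red, a):
    """R^{Red-{a}} from atomic matrices R^{b}, b in Red-{a} (traditional SBE step).

    Returns the matrix and the number of element-wise matrix products used.
    Assumes Red-{a} is non-empty.
    """
    others = [b for b in red if b != a]
    result = [row[:] for row in atomic[others[0]]]
    products = 0
    for b in others[1:]:
        result = [[x * y for x, y in zip(r1, r2)]
                  for r1, r2 in zip(result, atomic[b])]
        products += 1
    return result, products


# Hypothetical atomic 2 x 2 similarity matrices for attributes 0..3.
atomic = {b: [[1.0, 0.5 + 0.1 * b], [0.5 + 0.1 * b, 1.0]] for b in range(4)}
m, k = rebuild_without(atomic, [0, 1, 2, 3], 2)
print(k)  # 2
```

Checking all four attributes once without removing any costs 4 × 2 = 8 products here, which grows quadratically with |C|.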

The proposed SBE reduct computation algorithm for GK-FRS, BEA-GK-FRFS, stems from the observation that \(R_G^{C-\{a\}}\) can be derived directly from \(R_G^C\) and \(R_G^{\{a\}}\), as described below:

Eq. (5), taken from [20], can be recast as Eq. (8):

\(R_G^C = R_G^{C-\{a\}} * R_G^{\{a\}}\)   (8)

where the operator * denotes element-wise matrix multiplication. Hence, from Eq. (8), the required \(R_G^{C-\{a\}}\) is obtained by element-wise division:

\(R_G^{C-\{a\}} = R_G^C \,/\, R_G^{\{a\}}\)   (9)

Eq. (9) is well defined only when the atomic component \(R_G^{\{a\}}\) contains no zeros. Mathematically, \(R_G^{\{a\}}\) is free of zeros because the Gaussian kernel is strictly positive. However, zeros may still occur owing to the limited numerical precision of the system; these are handled by thresholding them to a very small positive number \(\epsilon \). Experiments verified that the computed reduct is insensitive to this modification.
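Eq. (9) with the \(\epsilon\)-thresholding can be sketched in Python as below; the value `eps = 1e-300`, the function name and the matrices are assumptions for illustration. The second usage case shows the failure mode that motivates the logarithmic transformation: once an entry of \(R_G^C\) has underflowed to 0.0, division returns 0.0 instead of the true entry of \(R_G^{C-\{a\}}\).

```python
def remove_attribute_by_division(r_c, r_a, eps=1e-300):
    """Element-wise R_G^C / R_G^{a}, with R_G^{a} entries thresholded to eps."""
    return [[c / max(x, eps) for c, x in zip(row_c, row_a)]
            for row_c, row_a in zip(r_c, r_a)]


r_rest = [[1.0, 0.25], [0.25, 1.0]]   # true R_G^{C-{a}} (hypothetical)
r_a = [[1.0, 0.5], [0.5, 1.0]]        # R_G^{a} (hypothetical)
r_c = [[x * y for x, y in zip(p, q)] for p, q in zip(r_rest, r_a)]
print(remove_attribute_by_division(r_c, r_a))  # [[1.0, 0.25], [0.25, 1.0]]

# Underflow: 1e-200 * 1e-200 is below the smallest double and becomes 0.0,
# so the division can no longer recover the true entry 1e-200.
tiny_c = [[1.0, 1e-200 * 1e-200], [1e-200 * 1e-200, 1.0]]
tiny_a = [[1.0, 1e-200], [1e-200, 1.0]]
print(remove_attribute_by_division(tiny_c, tiny_a)[0][1])  # 0.0
```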

The composite component \(R_G^C\), being a product of matrices, is expected to contain very small values \((>0)\), more so as |C| grows. Because of the limited numerical precision of the system, such values are represented as exact zeros, which is detrimental to the computation in Eq. (9). A logarithmic transformation is engineered to overcome this ill-conditioning, as shown in Eqs. (10–13).

Here log, exp and \(\sum \) denote element-wise matrix operations. The required \(R_G^{C-\{a\}}\) is computed as:

Eq. (13) follows from Eq. (11).
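The logarithmic transformation can be sketched in Python by keeping \(\log R_G^C\) as the element-wise sum of the matrices \(\log R_G^{\{b\}}\), rather than forming the product \(R_G^C\) itself; removing a then costs one subtraction and one exponentiation. Since Eqs. (10–13) are not reproduced in this text, the sketch is a reading of the transformation under that assumption rather than a transcription; the helper names, `eps` and the matrices are hypothetical.

```python
import math


def log_matrix(r, eps=1e-300):
    """Element-wise log, with zeros thresholded to eps."""
    return [[math.log(max(x, eps)) for x in row] for row in r]


def log_sum(log_mats):
    """log R_G^C as the element-wise sum of the atomic log matrices."""
    n = len(log_mats[0])
    return [[sum(m[i][j] for m in log_mats) for j in range(n)] for i in range(n)]


def remove_attribute_log(log_r_c, log_r_a):
    """R_G^{C-{a}} = exp(log R_G^C - log R_G^{a}), all element-wise."""
    return [[math.exp(c - x) for c, x in zip(row_c, row_a)]
            for row_c, row_a in zip(log_r_c, log_r_a)]


# Hypothetical setting: the direct product 1e-200 * 1e-200 underflows to 0.0,
# but the sum of the logarithms stays finite.
r_a = [[1.0, 1e-200], [1e-200, 1.0]]
r_b = [[1.0, 1e-200], [1e-200, 1.0]]
log_a, log_b = log_matrix(r_a), log_matrix(r_b)
log_c = log_sum([log_a, log_b])
result = remove_attribute_log(log_c, log_a)
print(result[0][1])  # approximately 1e-200
```

Working in the log domain, the off-diagonal entry of \(R_G^{\{b\}}\) is recovered even though the corresponding entry of the direct product \(R_G^C\) would be exactly zero.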

The proposed algorithm BEA-GK-FRFS is given in Algorithm 1. The order in which attributes are checked for redundancy in SBE reduct algorithms influences the resulting reduct [8]; BEA-GK-FRFS (line 4) checks the attributes in ascending order of their gamma (dependency) measure. The time complexity of SFS-based reduct computation algorithms is \(O(|C|^2|U|^2)\) [20]. The time complexity of traditional SBE-based algorithms is also \(O(|C|^2|U|^2)\), because in each of the |C| iterations computing \(R_G^{Red-\{a\}}\) requires O(|C|) matrix operations, each costing \(O(|U|^2)\). In BEA-GK-FRFS, using Eqs. (12) and (13), \(R_G^{Red-\{a\}}\) requires only two matrix operations per iteration. Hence, the time complexity of BEA-GK-FRFS is \(O(|C||U|^2)\).
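Since the steps of Algorithm 1 are not listed here, the following Python sketch only approximates a backward elimination in the spirit of BEA-GK-FRFS, under stated assumptions: (i) a Gaussian kernel on a standardized squared Euclidean distance, whose logarithm is taken analytically (\(\log e^{-z} = -z\)), which sidesteps the \(\epsilon\)-thresholding; (ii) the lower approximation \(\inf_{y\notin d_i}\sqrt{1-R(x,y)^2}\) of [6]; (iii) an attribute is removed when the dependency of \(Red-\{a\}\) does not fall below \(\gamma_C(d)\) by more than a tolerance. Each candidate costs one matrix subtraction plus one \(O(|U|^2)\) dependency evaluation (which applies exp element-wise), so the loop is \(O(|C||U|^2)\). All names, the data and `tol` are hypothetical.

```python
import math


def atomic_log_kernels(data, delta=0.5):
    """log R_G^{a} for each attribute a, computed analytically as -z."""
    n, m = len(data), len(data[0])
    logs = []
    for a in range(m):
        col = [row[a] for row in data]
        mean = sum(col) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in col) / n)
        scale = 1.0 / std if std > 0 else 0.0  # constant attribute: R = 1
        logs.append([[-((col[i] - col[j]) * scale) ** 2 / (2 * delta ** 2)
                      for j in range(n)] for i in range(n)])
    return logs


def add(m1, m2, sign=1.0):
    """Element-wise m1 + sign * m2."""
    return [[x + sign * y for x, y in zip(r1, r2)] for r1, r2 in zip(m1, m2)]


def dependency(log_r, labels):
    """gamma(d), lower approximation inf over other-class y of sqrt(1 - R^2)."""
    n = len(labels)
    total = 0.0
    for i in range(n):
        vals = [math.sqrt(max(0.0, 1.0 - math.exp(2.0 * log_r[i][j])))
                for j in range(n) if labels[j] != labels[i]]
        total += min(vals) if vals else 1.0
    return total / n


def backward_elimination(data, labels, delta=0.5, tol=1e-9):
    logs = atomic_log_kernels(data, delta)
    n = len(data)
    log_red = [[0.0] * n for _ in range(n)]
    for log_a in logs:                      # log R_G^C = sum_a log R_G^{a}
        log_red = add(log_red, log_a)
    gamma_c = dependency(log_red, labels)
    # check attributes in ascending order of their own dependency
    order = sorted(range(len(logs)), key=lambda a: dependency(logs[a], labels))
    red = set(range(len(logs)))
    for a in order:
        candidate = add(log_red, logs[a], -1.0)  # log R_G^{Red-{a}}
        if dependency(candidate, labels) >= gamma_c - tol:
            red.discard(a)                       # a is redundant
            log_red = candidate
    return sorted(red), gamma_c


# Hypothetical hybrid-free toy data: attribute 1 is constant.
data = [[0.0, 3.0, 0.2], [0.2, 3.0, 0.9], [1.0, 3.0, 0.1], [1.2, 3.0, 0.8]]
labels = [0, 0, 1, 1]
red, gamma_c = backward_elimination(data, labels)
print(red)  # [0, 2]
```

On this toy table the constant attribute 1 is eliminated first (its own dependency is 0), attribute 2 is kept because removing it lowers the dependency slightly, and attribute 0 is kept.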

5 Experiments, Results and Analysis

The experiments were run on a system with an Intel(R) Core i5 CPU (2.66 GHz), 4 GB RAM and Ubuntu 16.04 LTS (64-bit), using RStudio Version 1.0.136. Nine benchmark quantitative decision systems from the UCI Machine Learning Repository [10] were used. Four of these datasets (6–9 in Table 1) were so large that the memory needed to represent the fuzzy similarity matrices exceeded the system limit. Hence, for these datasets, sub-datasets obtained by stratified random sampling were used in our experiments; the original size of each such dataset is indicated in brackets.
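Stratified random sampling keeps the class proportions of the original decision system in the sub-dataset. A minimal Python sketch, assuming a fixed sampling fraction per class; the sampling fraction and procedure are not stated here, so `fraction`, `seed` and the function name are hypothetical.

```python
import random
from collections import defaultdict


def stratified_sample(labels, fraction, seed=0):
    """Return sorted row indices, keeping about `fraction` of each class."""
    rng = random.Random(seed)  # fixed seed for a reproducible sub-dataset
    by_class = defaultdict(list)
    for idx, lab in enumerate(labels):
        by_class[lab].append(idx)
    chosen = []
    for idxs in by_class.values():
        k = max(1, round(fraction * len(idxs)))  # keep at least one object
        chosen.extend(rng.sample(idxs, k))
    return sorted(chosen)


labels = [0] * 80 + [1] * 20
s = stratified_sample(labels, 0.25)
print(len(s))  # 25
```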

5.1 Comparative Experiments with MFRSA-NFS-HIS and FRSA-SBE Algorithms

The proposed BEA-GK-FRFS is implemented in R. Its performance is compared with the R implementation of MFRSA-NFS-HIS by Ghosh et al. [4], which was established to be an efficient fuzzy-rough set based reduct computation algorithm using the SFS strategy. To illustrate the relevance of the proposed SBE algorithm, FRSA-SBE was also implemented in R following the traditional SBE approach. FRSA-SBE is identical to BEA-GK-FRFS except for step 6 of Algorithm 1, where \(R_G^{Red-\{a\}}\) is computed from the atomic components \(R_G^{\{b\}}, \forall b \in (Red-\{a\})\). Table 1 summarizes the results, reporting the reduct length and the computation time in seconds, together with the percentage computational gain obtained by BEA-GK-FRFS over MFRSA-NFS-HIS and FRSA-SBE.

Analysis of Results

Based on the results in Table 1, BEA-GK-FRFS is, in general, computationally more efficient than the other algorithms. MFRSA-NFS-HIS performed better than FRSA-SBE, which explains why SFS approaches have so far been preferred over SBE approaches. On all decision systems, and especially on large-scale datasets such as Web, DNA, batch1cifar and Spambase, BEA-GK-FRFS obtained a significant computational gain (greater than 34%) over MFRSA-NFS-HIS, empirically validating the improvement in its theoretical time complexity. On small-scale datasets such as German, Image Segmentation (small scale because |C| is small) and Sonar_Mines_Rocks, no significant gain was obtained with respect to FRSA-SBE. This is because, when |C| is small, direct matrix multiplication performs comparably to the logarithmic and exponential operations.

5.2 Comparative Experiments with L-FRFS and B-FRFS Algorithms

The R package RoughSets [15] is a collaborative effort by several researchers to bring established rough set and fuzzy rough set algorithms together in a unified framework. L-FRFS and B-FRFS, which are SFS-based fuzzy rough reduct algorithms [9], are available in the RoughSets package. Reduct computation with B-FRFS and L-FRFS was performed using the package implementations, on the same system used for the proposed algorithm. Table 2 shows that BEA-GK-FRFS achieved a highly significant computational gain (greater than 95%) over L-FRFS and B-FRFS.

We also analysed the performance of the computed reducts in constructing classifier models (Note 1), using 10-fold cross-validation. No significant differences in classification performance were observed between BEA-GK-FRFS and MFRSA-NFS-HIS.

6 Conclusion

Researchers of fuzzy rough sets have preferred SFS-based reduct computation over SBE-based reduct computation owing to the higher computational requirements of SBE. This work presented BEA-GK-FRFS, a novel SBE-based reduct computation algorithm for GK-FRS. The time complexity of BEA-GK-FRFS is of third order \((O(|C||U|^2))\), compared with the fourth-order time complexity \((O(|C|^2|U|^2))\) of existing fuzzy rough set reduct algorithms. Experiments conducted on benchmark datasets validated the computational efficiency of BEA-GK-FRFS in comparison with existing fuzzy rough set reduct algorithms.

Notes

1. The details of the classification experiments are not reported due to space constraints.

References

Chouchoulas, A., Shen, Q.: Rough set-aided keyword reduction for text categorization. Appl. Artif. Intell. 15(9), 843–873 (2001)

Cornelis, C., Jensen, R., Hurtado, G., Ślęzak, D.: Attribute selection with fuzzy decision reducts. Inf. Sci. 180(2), 209–224 (2010)

Dubois, D., Prade, H.: Rough fuzzy sets and fuzzy rough sets. Int. J. Gen. Syst. 17(2–3), 191–209 (1990)

Ghosh, S., Sai Prasad, P.S.V.S., Raghavendra Rao, C.: An efficient Gaussian kernel based fuzzy-rough set approach for feature selection. In: Sombattheera, C., Stolzenburg, F., Lin, F., Nayak, A. (eds.) MIWAI 2016. LNCS (LNAI), vol. 10053, pp. 38–49. Springer, Cham (2016). doi:10.1007/978-3-319-49397-8_4

Hu, Q., Xie, Z., Yu, D.: Hybrid attribute reduction based on a novel fuzzy-rough model and information granulation. Pattern Recogn. 40(12), 3509–3521 (2007)

Hu, Q., Zhang, L., Chen, D., Pedrycz, W., Yu, D.: Gaussian kernel based fuzzy rough sets: model, uncertainty measures and applications. Int. J. Approximate Reasoning 51(4), 453–471 (2010)

Jensen, R., Shen, Q.: Fuzzy-rough attribute reduction with application to web categorization. Fuzzy Sets Syst. 141(3), 469–485 (2004)

Jensen, R., Shen, Q.: Rough set based feature selection: a review. In: Rough Computing: Theories, Technologies and Applications, pp. 70–107 (2007)

Jensen, R., Shen, Q.: New approaches to fuzzy-rough feature selection. IEEE Trans. Fuzzy Syst. 17(4), 824–838 (2009)

Lichman, M.: UCI machine learning repository (2013)

Mi, J.-S., Zhang, W.-X.: An axiomatic characterization of a fuzzy generalization of rough sets. Inf. Sci. 160(1), 235–249 (2004)

Moser, B.: On representing and generating kernels by fuzzy equivalence relations. J. Mach. Learn. Res. 7, 2603–2620 (2006)

Pawlak, Z.: Rough sets. Int. J. Comput. Inf. Sci. 11(5), 341–356 (1982)

Radzikowska, A.M., Kerre, E.E.: A comparative study of fuzzy rough sets. Fuzzy Sets Syst. 126(2), 137–155 (2002)

Riza, L.S., Janusz, A., Slezak, D., Cornelis, C., Herrera, F., Benitez, J.M., Bergmeir, C., Stawicki, S.: Package RoughSets, 5 September 2015

Sai Prasad, P.S.V.S., Raghavendra Rao, C.: IQuickReduct: an improvement to quick reduct algorithm. In: Sakai, H., Chakraborty, M.K., Hassanien, A.E., Ślęzak, D., Zhu, W. (eds.) RSFDGrC 2009. LNCS (LNAI), vol. 5908, pp. 152–159. Springer, Heidelberg (2009). doi:10.1007/978-3-642-10646-0_18

Sai Prasad, P.S.V.S., Raghavendra Rao, C.: Extensions to IQuickReduct. In: Sombattheera, C., Agarwal, A., Udgata, S.K., Lavangnananda, K. (eds.) MIWAI 2011. LNCS (LNAI), vol. 7080, pp. 351–362. Springer, Heidelberg (2011). doi:10.1007/978-3-642-25725-4_31

Sai Prasad, P.S.V.S., Raghavendra Rao, C.: An efficient approach for fuzzy decision reduct computation. In: Peters, J.F., Skowron, A. (eds.) Transactions on Rough Sets XVII. LNCS, vol. 8375, pp. 82–108. Springer, Heidelberg (2014). doi:10.1007/978-3-642-54756-0_5

Wei, W., Liang, J., Qian, Y.: A comparative study of rough sets for hybrid data. Inf. Sci. 190, 1–16 (2012)

Zeng, A., Li, T., Liu, D., Zhang, J., Chen, H.: A fuzzy rough set approach for incremental feature selection on hybrid information systems. Fuzzy Sets Syst. 258, 39–60 (2015)

Acknowledgments

This work was supported by the Universities with Potential for Excellence (UPE) Phase-II, University of Hyderabad, Hyderabad, India.


Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Ghosh, S., Sai Prasad, P.S.V.S., Rao, C.R. (2017). Third Order Backward Elimination Approach for Fuzzy-Rough Set Based Feature Selection. In: Shankar, B., Ghosh, K., Mandal, D., Ray, S., Zhang, D., Pal, S. (eds) Pattern Recognition and Machine Intelligence. PReMI 2017. Lecture Notes in Computer Science(), vol 10597. Springer, Cham. https://doi.org/10.1007/978-3-319-69900-4_32


DOI: https://doi.org/10.1007/978-3-319-69900-4_32


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-69899-1

Online ISBN: 978-3-319-69900-4

eBook Packages: Computer Science, Computer Science (R0)