Abstract

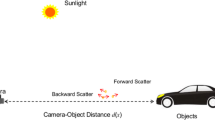

Autonomous vehicles generally perform well under normal lighting conditions. Their performance degrades significantly under poor visibility, however, because the captured images lack detail and information, and this degradation can lead to collisions and other dangerous accidents. Many previous efforts have focused on improving images in only a single condition, such as low light, fog, or dust. As a result, self-driving systems must embed several image restoration models, which increases computation time and fails to meet real-time processing requirements. A single enhancement algorithm that restores input image quality across conditions is therefore needed. This paper proposes a method to enhance images captured under poor visibility conditions such as fog and low light. First, we introduce a defogging algorithm based on contrast energy, entropy, and sharpness characteristics. Second, we observe that the inverse of an image captured in a dark environment is equivalent to a daytime image captured in a foggy atmosphere. Inspired by this observation, we apply the proposed defogging model to enhance images in low-light conditions. Experimental results demonstrate that the method is feasible for pre-processing input images for autonomous vehicles driving in poor visibility.
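The inversion idea in the abstract (invert the dark image, defog it, invert back) can be illustrated with a minimal sketch. The abstract does not specify the authors' defogging algorithm in detail, so the code below swaps in a simplified dark-channel-prior style defogger as a stand-in. The function names (`dark_channel`, `simple_defog`, `enhance_low_light`) and the parameters `omega`, `t_min`, and `patch` are assumptions made for illustration, not values from the paper.

```python
import numpy as np

def dark_channel(img, patch=7):
    """Minimum over color channels, followed by a local minimum filter."""
    min_rgb = img.min(axis=2)
    pad = patch // 2
    padded = np.pad(min_rgb, pad, mode="edge")
    h, w = min_rgb.shape
    out = np.full((h, w), np.inf)
    # Slide the patch by taking the running minimum of shifted views.
    for dy in range(patch):
        for dx in range(patch):
            out = np.minimum(out, padded[dy:dy + h, dx:dx + w])
    return out

def simple_defog(img, omega=0.9, t_min=0.1, patch=7):
    """Stand-in defogger in the dark-channel-prior style.

    NOT the paper's contrast-energy/entropy/sharpness method.
    img: float array in [0, 1] with shape (H, W, 3).
    """
    dark = dark_channel(img, patch)
    # Atmospheric light: mean color of the brightest 0.1% dark-channel pixels.
    n = max(1, dark.size // 1000)
    idx = np.argsort(dark.ravel())[-n:]
    A = np.maximum(img.reshape(-1, 3)[idx].mean(axis=0), 1e-3)
    # Transmission estimate, clipped to avoid division by tiny values.
    t = 1.0 - omega * dark_channel(img / A, patch)
    t = np.clip(t, t_min, 1.0)[..., None]
    # Invert the haze model I = J * t + A * (1 - t) for the scene radiance J.
    return np.clip((img - A) / t + A, 0.0, 1.0)

def enhance_low_light(img, defog=simple_defog):
    """Invert the dark image, defog the fog-like result, invert back."""
    inverted = 1.0 - img
    restored = defog(inverted)
    return 1.0 - restored
```

Because `enhance_low_light` takes the defogger as an argument, any defogging routine that maps a float image in [0, 1] to another image of the same shape could be substituted for `simple_defog`, so one model serves both the fog and low-light cases.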

Change history

26 March 2023

The following corrections have been made to the original version of the book:

Chapter 2

In Table 2, the down arrow symbol has been replaced with an up arrow symbol.

Chapter 5

The chapter title has been updated from “A Comparison of Feature Construction Methods in the Context of Supervised Feature Election for Classification” to “A Comparison of Feature Construction Methods in the Context of Supervised Feature Selection for Classification”.

The corrected chapters and the book have been updated with these changes.

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this paper

Cite this paper

Duong, MT. et al. (2023). An Image Enhancement Method for Autonomous Vehicles Driving in Poor Visibility Circumstances. In: Huang, YP., Wang, WJ., Quoc, H.A., Le, HG., Quach, HN. (eds) Computational Intelligence Methods for Green Technology and Sustainable Development. GTSD 2022. Lecture Notes in Networks and Systems, vol 567. Springer, Cham. https://doi.org/10.1007/978-3-031-19694-2_2

DOI: https://doi.org/10.1007/978-3-031-19694-2_2

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-19693-5

Online ISBN: 978-3-031-19694-2

eBook Packages: Intelligent Technologies and Robotics (R0)