Abstract

This chapter aims to critically engage with the performative nature of bibliometric indicators and explores how they influence scholarly practice at the macro, meso, and individual levels. It begins with a comparison between two national performance-based funding systems in Sweden and Norway at the macro level, within universities at the meso level, down to the micro level where individual researchers must relate these incentives to knowledge building within their specialty. I argue that the common-sense “representational model of bibliometric indicators” is questionable in practice, since it cannot capture the qualities of research in any unambiguous way. Furthermore, a performative notion on scientometric indicators needs to be developed that takes into account the variability and uncertainty of the aspects of research that is to be evaluated.

You have full access to this open access chapter, Download chapter PDF

Similar content being viewed by others

Keywords

Introduction

Performance-based research evaluation using quantitative indicators has many purposes. But regardless of their use for ranking purposes, quality evaluation, or the distribution of funding, from a research policy perspective, quantitative indicators are thought of as tools to evaluate research without steering it directly. Often, these metrics (such as citation counts) are interpreted as indicators of “quality.” In bibliometric research, the sociological basis of their use is founded on the Mertonian so-called CUDOS norms. This acronym stands for “Commun[al]ism, Universalism, Disinterestedness, and Organized Skepticism” and is often described as a role model for how research should be conducted. But norms do not determine actual practice, and even though quantitative indicators are often claimed represent notions of quality, it could be argued that several prerequisites have to be met for the fulfillment of such claims. For example, it is expected that the indicators chosen should be distinct objective measures, and that data is unobtrusively collected so that those who are evaluated are not influenced or affected directly by the measurement. In this view, the use of quantitative indicators makes it (relatively) easy to operationalize performance goals based on bibliometric indicators.

In this chapter the following lines of thought are pursued: Firstly, it is argued that the so-called representational model of bibliometric indicators as described above is questionable in practice because goal displacement over time will alter which representation should be chosen, but also that in the light of future developments, representations tend to lose their stability and become contingent on external factors. And secondly, that the uncertainty in relevant choices is not merely a technical problem that is solved by larger samples, better accuracy, or more sophisticated statistics, but that it is inherent in the kind of linear model that is used as the basis for measurement. It is therefore argued that a performative notion on scientometric indicators needs to be developed that takes account of the variability and uncertainty of the aspects of research that is to be evaluated.

This performativity will be investigated using empirical examples at three levels of scale from the perspective of the Swedish research policy. At the macro level, the Swedish performance-based funding system (PRFS) for reallocating parts of the national funding to universities using citation-based bibliometric indicators will be discussed against the background of other available PRFS at the time of its inception in 2009, predominantly, the Norwegian point-based system.

While controversial already at its inception, with suggestions that it should be evaluated after an initial period of use posed both by government officials themselves and by actors across the university sector (Nelhans, 2013), the Swedish system has never been subjected to a formal review (Kesselberg, 2015). Instead, this PRFS has been used relatively untroubled as an established part of research policy (except for the years it has not been used; see below). At the meso level, there are both self-initialized evaluations within universities as well as their internal funding systems, where higher education institutions (HEIs) risk losing self-government due to the establishment of standardized performance-based indicators, leading to a “hands-tied” situation for vice-chancellors when steering has to be negotiated in light of results. Finally, there is the micro level of the individual researcher, who in daily practice must navigate between different sets of norms and directives coming from the other levels as well as discipline-related notions and specific knowledge demands coming from the actual research field at hand.

Algorithmic Historiography and the Birth of Scientometrics

Evaluation of academic research has been with us for a long time. For a century-based timeline, it is enough to go back to the early notions of evaluating American Men of Science (first printed in 1906, later renamed American Men and Women of Science) (Cattell, 1921). This volume, containing biographical sketches for thousands of American scientists, also had a ranking system, whereby an asterisk was affixed to about a thousand entries of scientists that were “supposed to be most important,” “by order of merit” (ibid.). The exact method of calculation was not disclosed, but it is stated that it involved the ranking of subjects within 1 of 12 different natural or exact sciences, ranging from chemistry physics and astronomy, past the biological and earth sciences, mathematics, psychology, and medical sciences, to anthropology. The ranking was performed by ten “leading students of the science” (ibid.). Thereafter, statistical methods of ordering the names were used to finalize the ranking. The darker background for this exercise was to determine a group of leading “American men of science” for scientific study “[t]o secure data for a statistical study of the conditions, performance, traits, etc., of a large group of men of science.” While not clearly stated, it is could be implied that there was a nationalistic air to the endeavor that built on studies by one of his intellectual forefathers, Francis Galton, who published English Men of Science, with the subtitle “Their Nature and Nurture” (Galton, 1876).

Another work prompting for evaluation and distinction of scientific work was made by the first science policy adviser to President Franklin D. Roosevelt, Vannevar Bush, who famously argued for increased support for basic research in public and private colleges in his recommendation for the instigation of a government agency called the National Research Foundation (NRF). Here, Bush argued for a balance between the Foundation’s adherence to the “complete independence and freedom for the nature, scope, and methodology of research carried on in the institutions receiving public funds”; at the same time he argued for the Foundation “retaining discretion in the allocation of funds among such institutions” (Bush, 1945, p. 27). Consequently, Bush suggested the creation of a permanent Science Advisory board composed of disinterested scientists without the intervention of either the legislative or the executive branch of the government. To a large extent, these views still permeate the academic research system and are at heart in the argument for “academic freedom.”

In a parallel historical setting, 60 years ago, the citation index was founded as a means of analyzing the history of science by quantitative methods—for algorithmic historiography (Garfield, 1955). Its use was intended for information retrieval, although some form of evaluation was implied even from the start: ”[A] bibliographic system for science literature that can eliminate the uncritical citation of fraudulent, incomplete, or obsolete data by making it possible for the conscientious scholar to be aware of criticisms of earlier papers” (Garfield, 1955).

Although the incentive to measure scientific publications quantitatively was at least 30 years older (Lotka, 1926), it was not until a citation theory of sorts was formed that interest in the citation as a measure of scientific quality or the merits of research was introduced. Compare, for example, Urquhart’s ranking lists of loans of scientific periodicals (Urquhart, 1959) and Derek de Solla Price’s first notions of “Quantitative Measures of the Development of Science,” first published as early as in 1951 but more generally known from his monographs Science Since Babylon and Little Science, Big Science (Price, 1951, 1961, 1963). In the sixties, together with the development of Sociology of Science and the studies of the institutional structure of science, with Cole and Cole, Zuckerman, and others as members (Cole & Cole, 1967; Zuckerman, 1967), building on the works of Merton (1973a), a new view of the scientific publication and the reference was formed that paved the way for equating quantitative measures of references indexed as citations in the citation index (Kaplan, 1965; Price, 1963, pp. 78–79).

Soon the citation and the metrics that were derived from the practices of publishing within journals had come to use in very different settings. On the one hand, a new field, bibliometrics (Pritchard, 1969), or scientometrics (from Naukometrija; Nalimov & Mul’chenko, 1969), had evolved from the older notion of “statistical bibliography” (Hulme, 1923). To a large degree, this followed the notions from the earlier use of quantitative measures in the library field, both for classification purposes and for journal selection criteria.

Early bibliometrics focused on the network model of the structure of publications (Price, 1965) and the development of bibliographic coupling and co-citation analysis at the article level for identifying “scientific specialties” (Griffith et al., 1974; Kessler, 1963; Small & Griffith, 1974). Co-citation analysis was subsequently developed into focusing on co-citation at the authorship level (McCain, 1986; White & Griffith, 1981), and later at the journal level (McCain, 1991a, 1991b). Notions of co-citations as a means of illustrating the intellectual base of a research area and bibliographic coupling for identifying research fronts have been suggested (Persson, 1994).

On the other hand, the sociological interest in the bibliometric tools led to a closer relationship between bibliometrics and research policy studies. As noted above, the view of the citation as an indicator of quality stems very much from the Mertonian norm system of science. It is commonly owned; universal in terms of being valid everywhere and for anyone; that scientists should be disinterested, meaning that they should not have personal bonds toward the research that they pursue; and they should strive for originality in their research, while at the same time they should hold a skeptical attitude toward all claims, both their own and those of fellow scientists (Merton, 1973b [1942]).

All this lays the ground for the peer review system, by which research is refereed before getting published, but it stands also as a guarantee for us, referring to previous research in a true and timely manner—“standing on the shoulders of giants,” meaning that science is a cumulative knowledge-making process and that we should always acknowledge our predecessors.

Against this, there is another line of argument, stemming from the more critical stance of Science and Technology Studies, stating that, although the norms of doing research are important as the goal, for various reasons they are impossible to follow to the letter in practice.

If we take the Mertonian norms for “how science should be done,” one could talk about a set of counter-norms (Mitroff, 1974), which substitute actual practices of research that many would attribute to problematic issues which limit academic freedom such as external influence or steering of research or more broadly: poor practice. It is not implied that this is a binary distinction, but rather that there is a continuum between the two end-points. These “counter-norms of scientific practice” have been spelled out by the British theorist of science John Ziman as PLACE: Proprietary, Local, Authoritarian, Commissioned, and Expert, as opposites to CUDOS. Ziman showed that in practice, for every virtue of the Mertonian “ideal scientist,” there is a contextual counterforce intertwined, which is hardly possible to break free from. Here, the elevated norms of science meet practice and we get to the first clash between how science “ought to be done” and the practical implication of research being performed in practice.

Bibliometrics for the Evaluation of Research

The Journal Impact Factor (JIF) has become a testament to this duality. It was developed with a certain set of journals in focus, empirically tuned to the publication patterns of the coverage of Science Citation Index (SCI) in the latter half of the 1960s, where the bulk of citations for a paper were found to have been received within a two-year window from its publication (Garfield, 1972). Even though it was not created as a tool for evaluating research(ers), but for calculating the inclusion in SCI, it was almost immediately used in that way upon the publication of the first citation index. This led Eugene Garfield to write an early commentary about the sociological use of his invention, stating: “One purpose of this communication is to record my forewarning concerning the possible promiscuous and careless use of quantitative citation data for sociological evaluations, including personnel and fellowship selection.” Furthermore, he stated quite unequivocally that “[i]mpact is not the same as importance or significance” (Garfield, 1963).

Other bibliometricians even suggested that every bibliometric study should be accompanied by a warning:

The warning reads: “CAUTION! Any attempt to equate high frequency of citation with worth or excellence will end in disaster; nor can we say that low frequency of citation indicates lack of worth.” (Kessler & Heart, 1962)

But while there was stark criticism against this use, by the mid-1970s, there was already an established textbook on “the use of publication and citation analysis in the evaluation of scientific activity” (Narin, 1976).

The Citation as Mediator: The Performativity of “Being Cited”

Here, I would like to briefly discuss the key arguments for and against using citations in evaluation by mentioning the two positions in the controversy regarding indicator use in evaluation. On the one hand, there is the notion that citations indicate the actual use and influence of previous research, and that citation could be seen as a reward (Cole & Cole, 1967) or currency in a scholarly “quasi-economy.” On the other hand, there is the view that researchers cite persuasively, and that citation could be viewed as a rhetorical device (Cozzens, 1989; Gilbert, 1977; Gilbert & Woolgar, 1974). The implications of this perspective in the citation system are important. On the one hand, it could be described as, although researchers cite the sources that have influenced them, giving credit where credit is due, on the other, there are other motivations for citing a reference. It could be done to note that the cited author is wrong (negative citations) or to cite authorities, for example.

Borrowing a notion from the Actor-Network Theory, a bibliometric indicator such as the citation can be described as either an intermediary or a mediator. In the first case, according to Michel Callon (Callon, 1986; Callon et al., 1991), an (1) intermediary only transmits the information from one point to the other without transforming it, while Bruno Latour (2005) has noticed the role of (2) mediators as entities that actually transform the meaning and thus need to be explained in terms of “other activities” (such as the social realm), since these entities not only transfer meaning but translate it. We will not dig deeper into this, but to note that when Derek de Solla Price noted that citations were a viable way of measuring impact in the 1960s, he regarded citations as “unobtrusive” indicators (intermediaries) of scholarly activity, something that could be studied without exercising an influence on those who were to be measured. In this view, then, to theorize about the citation and its role as a mediator of scientific work would be not to view it as a representation of the research that is studied, but rather as a performative agent. The citation as mediator implies the notion of the citation being performative rather than representative in practice (let us leave nature out here).

So, to what consequences does this lead? Well, for one thing, it renders the citation into an object of sociological study and opens up an interesting venue to act upon. Of course, it also brings into question that the quantity of citations implies quality. If we return to the classic debate, as noted above, traditionally, citations are given to research as a reward. This means that citation performance that is observed in the citation index could be viewed as a true representation of “true impact” or quality. From the perspective sketched here, it is more relevant to talk about the performativity of “being cited,” and that we as researchers act both in accordance to this dynamic and in a reflexive mode. In this view the different ways that research is published or the different practices of publishing and citing one’s results are not static, but instead are co-produced by both internal demands from research and the social and, in this internet-based world we live in, technical demands or affordances that are provided by such systems as the citation databases in Web of Science (WoS) or Google Scholar (GS).

Consequences for Research Policy

Moving the discussion to research policy, we can make a similar trajectory from a traditional “linear perspective” of the relationship between science and politics to one which includes multiple feedback loops. For this, we need to ask ourselves: Why would we evaluate research and allocate funding resources based on indicator models?

First of all, research policy needs tools to allocate funds without steering research directly. Secondly, there is also the idea that indicators would mean that evaluation would be based on notions of “quality.” According to the position that was sketched above, these would be the Mertonian CUDOS norms, which would ensure that this is the fact. Of course, this would build on the prerequisites that citation indicators are objective measures and that they are unobtrusive in their actions on researchers (Price, 1963). Lastly, quantitative models are (quite) easy to operationalize, meaning that they can easily be separated from the object under study. Still, they are not easy to interpret and play different roles in different contexts.

In the following, I will make a historical account of research policy and paraphrase an argument made by science policy scholar and Emeritus Professor of Theory of Science Aant Elzinga (personal communication). After World War II three research policy “regimes” can be identified (Table 8.1). There is a move from a so-called linear model where, given enough funding and not disturbing researchers too much, the resulting knowledge could be directly implemented in technology. Next, by the 1960s–1970s there was a notion that by focusing on funding in a specific strain, according to the needs of society, it would be possible to increase the output of useful knowledge. Then, at present, we are in a more heterogeneous constellation where science, technology, and society are much more interlinked and driven by the economic promises of future application of research.

At the same time, there is a general sense in academia that its relation to society has shifted from a social contract of trust to mistrust with regard to control mechanisms being instigated. This is not least driven by governing phenomena such as New Public Management (NPM)—better called “outcome-oriented public management,” empowered by new bureaucratic layers, such as branding and high-ranking list scores—as well as digitalized audit society (Power, 1999), seeking to foster cultures of bibliometric compliance in academia.

The concept of “epistemic drift” (Elzinga, 1984, 1997, 2010), first noted in connection with sectionalization policy, is relevant here. It states that politically driven agendas can crowd out internal quality control criteria in favor of external relevance. With “economization” pressures nowadays, we find the same risk. With regard to scientometric indicators, one could add the notion of “bibliometric creep,” where, in practice, bibliometric measures are constantly tuned to external needs not linked to internal research values or needs.

This situation could be explicated with the notion of the “co-production” of science and society. Scientometric indicators play an increasing role in research, both by its design as a reflection of the act of citing scientific references and as a result of being constructed—and used—in valuation practices of perceived scientific quality. Therefore, it is important to study what is here labeled “the performative nature of citations,” either critically (1) from the outside, or as a more (2) reflexive endeavor within the metric community, taking into account that practitioners in the scientometric community create or employ indicators in different ways that have an impact on those who are measured. Among other things, it is often argued that single indicators of research (such as the citation) could not be regarded as the actual representation of how research “is done.” Instead, it is important to establish their origin and subsequent development to get an understanding of how they work in practice.

Co-production of Science and Society

From a constructivist position within science and technology studies (STS)—which generally has been highly critical to the use of quantitative indicators to represent research—what scientific research is and what it should be are empirical questions that are context dependent. This means that cognitive and social factors cannot be separated from each other. In this chapter, this critique is taken at face value in that the technical conditions, society’s demands to measure research performance and researchers’ pursuit of knowledge, are treated as expressions of what has been described as a co-production (Jasanoff, 2004) between science, technology, and society, wherein there is mutual interdependency between science/technology and society (Felt et al., 2017).

But there is yet another level that co-production works on that is relevant to the topic of this chapter: At the policy level, governments and other policy-setting organizations introduce new indicators to evaluate research based on how it performs in the societal realm as “societal impact” or as “collaboration with society.” Today, it is even stated that “[s]ocietal impact should increase” (Prop. 2016/17: 1, 2016, p. 20). In later budgets, economic incentives for collaboration with societal actors have been introduced in the national funding of higher education institutions in Sweden (Prop. 2019/20: 1, 2019).

Performance-Based Research Allocation Models at Three Levels

In the following, I will argue that the way indicators are implemented in different contexts means that the actors in the academic system are torn between different ways of evaluating the academic impact of research, which risks making it problematic for the individual researcher to navigate the evaluational landscape. By taking the Swedish PRFS as an example, we will pick three different levels of bibliometric evaluation for the allocation of funding: the macro level, the national renegotional model for funding HEIs; the meso level, within universities; and lastly, at the micro level, using the individual researcher as the object of study. It should also be noted that “evaluation” will be used as a term to describe the respective performance-based funding systems, since, in their presentation, they are described as quality-based models (Prop. 2008/09: 50, 2008, p. 55) (Universitets- og høgskolerådet, 2004, p. 35).

To contextualize the development of the Swedish PRFS, this section outlines the immediate research policy background against which it was developed. In this new era of epistemic drift, as proposed above, at the turn of the century, one could identify three means of using publication-based evaluation indicators apart from a pure peer-review-based model (Hicks, 2012). Such a system had been used in the United Kingdom since 1986, using a purely qualitative evaluation system with peer review panels to evaluate the research within universities. The Research Assessment Exercise (RAE), generally performed every six years (since 2014, redeveloped into the Research Evaluation Framework) has, in review, been found to be resource-intensive and expensive (RAE, 2009). In the mid-1990s, Australia developed a publication-based indicator that evaluated HEIs based on the count of Web of Science (WoS)-indexed publications and award funding accordingly. It was not met favorably by experts. For example, one influential study showed that while the numbers of publications rose in the Australian higher education system during the time, researchers seemed to publish their studies in lesser ranked journals, as measured with JIF (Butler, 2003). Notions such as salami slice publishing and Least Publishable Unit (LPU) became household terms during this time.

When it was time for the Nordic countries to select a model for evaluating research, both the British RAE model and the Australian straight counting were dismissed. As will be shown below, different choices were made. On the one hand, in Norway, an evaluation model using the perceived quality of the publication channel was introduced, while in Sweden, the perceived quality of the actual specimen using citation counts as an indicator was introduced. Arguably, it is only here that we can speak of bibliometric systems that involve calculations which attempt to compare the results between research fields. The next section presents the main features of the Norwegian and Swedish PRFS before we compare some of their main characteristics.

PRFS at the Macro Level: A Comparison Between the Norwegian and Swedish Systems

As noted above, in Norway, the funding model was developed in light of the Australian model and its perceived shortcomings regarding concerns of mass publishing of journal articles (Universitets- og høgskolerådet, 2004). Therefore, it was found necessary to introduce a quality-based notion into the evaluation indicator. In its simplest form, it can be described as measuring impact based on publication “channel” and ”quality level,” where normal publication channels are evaluated at the basic level (1), and high-quality publication channels are evaluated at level 2. For journals, in disciplines with a tradition of publishing in (double-blind) peer-reviewed journals found in citation indices such as WoS, these levels were based on JIF, and for other disciplines and publication channels such as book publishers, they were based on the degree of internationalization. Panels representing scholars from different fields were then in charge of evaluating the publication channels and suggest substitutions of sources at different levels. In Sweden, then, the performance-based allocation model for the bibliometric indicator used field normalized citations and Waring distributions of publications were used to evaluate the performance of universities. This model was inspired by a system used in the Flanders region at the same time. Here, only research publications indexed in WoS were included (Sandström & Sandström, 2009; Vetenskapsrådet, 2009). Additional features are that a four-year moving average is used, together with author fractionalization, and lastly, an additional (arbitrary) weighting that awards different research areas differently so that Medicine and Technology are multiplied with 1.0, the Sciences with 1.5, the Social Sciences and the Humanities with 2.0, and other areas with 1.1. The source of this weighting scheme has not been recovered, but seems to be old-established in Swedish research policy (Nelhans, 2013; Prop. 2008/09: 50, 2008; Prop. 2012/13: 30, 2012)

If we compare the Swedish and Norwegian models, we find several contrasting features (Table 8.2).

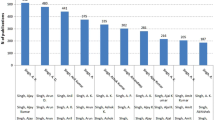

First of all, the Norwegian model distributes 2% of the total funding (Hicks, 2012, p. 257), while the Swedish model first used 5% and later 10% of the renegotiated funding. While both models are designed to measure the performance of the unit under study, the Swedish model evaluates performance based on received citations for each specimen in four years, the Norwegian uses an impact-factor-styled model, which evaluates the judged channel of the publication, rather than the actual impact in itself. These features result in differences concerning the selection, sources of data, measures of quality, and transparency of the evaluation exercises. First of all, the underlying coverage of the Norwegian model consists of all publication channels, regardless the form of publications that are found to have a scientific, peer-reviewed status. Journals, monographs, and edited books can be eligible. An authoritative list of all channels is maintained and all publications that are reported by Norwegian researchers are matched against this list. The Swedish model, in turn, uses an external source, the WoS journal indices, which means that only publications in journals indexed by this database are covered. Concerning the actual quality indicator, the Swedish model uses field-normalized citations to the (likewise) field-normalized publications from researchers at the respective HEIs. In Norway, as noted above, the evaluated level of the publication impact, instead of the actual impact, is noted. Lastly, with regard to the transparency of each model, the Norwegian uses a pre-determined system of points (0.7, 1, 5 at level 1 and 1, 3, 8 points respectively at level 2 for chapters in edited books, journal articles, and monographs). In the Swedish model, the indicators are not transparent but relate not only to the number of citations but also to the calculated weight factors for both citations and publications, as well as the total number of publications and citations in the whole model. Instead, the actual “worth” of one point in the Norwegian model could be established quite easily and was calculated to the sum of about 40,030 NOK in the year 2007 (SOU, 2007, p. 81, 2007, p. 385). By dividing the total funding each year in Norway with the accrued number of points performed in the Norwegian system, it can be found that there is a significant devaluation of the performance of Norwegian researchers, as valued in funding per point. As shown in Fig. 8.1, for each year, a point is worth less, and in 2019, it was calculated to 23,572 NOK, based on data from Kunnskapsdepartementet (Kunnskapsdepartementet, 2018). If consumer price index is taken into account, a Norwegian publishing point is worth roughly 45% of the buying power of a Norwegian point in 2007.

On the other hand, while the Swedish model seems to be straightforward to calculate, in practice, there have been several issues that were reported at the outset, and that have never been corrected. Most importantly, the Swedish Research Council, which has been tasked with performing the calculations, has reported every year that they are not able to calculate the Waring distribution for field-normalizing the publication counts (Swedish Research Council, 2009; Vetenskapsrådet, 2011). Instead, pre-set values as described already in 2007 have been used (SOU, 2007, p. 81, 2007). Any new publications that have been introduced have been given the same reference value as the field that is most prominent in the new sources’ reference lists (e.g. Vetenskapsrådet, 2012). While not properly documented, by inspecting each yearly budget, it can be found that the model has not been used for reallocating the basic funding in the Swedish budget since the year 2017 (Prop. 2016/17: 1, 2016, p. 208).

The outcome of the Swedish PRFS analysis shows some unexpected systemic features that are relevant to note. Below, the renegotiated funding for four HEI types that are found in Sweden was calculated. For administrative uses, the Swedish HEI system is divided into comprehensive universities (e.g. Uppsala and Lund), special universities, (e.g. Karolinska Institutet, KI, and Chalmers University of Technology), newly formed (~2005) universities (e.g. Karlstad and Linnaeus Universities), and university colleges, roughly correlating with polytechnics in the United Kingdom, which only have the right to award doctorates in select subjects (Hansson et al., 2019).

As noted in Fig. 8.2, for the years 2010–2016, for which the renegotiation model was used, an interesting feature could be noted. Only special universities and university colleges have a net positive performance in bibliometric performance as calculated in SEK, based on government data. These results are somewhat unexpected, given that basic funding is heavily weighted toward universities, with very small parts distributed to university colleges. That these latter perform so much better than expected is an open question that would need further attention. For instance, a 1 MSEK in extra funding based on performance is a quite large sum for a university college with basic funding amounting to less than 100 MSEK on average, while it is a rather small sum for one of the comprehensive universities like Uppsala University with basic funding for research at about 2000 MSEK (e.g. Prop. 2016/17: 1, 2016).

A last feature of the Swedish performance-based renegotiation model needs to be described. By way of how it is presented in the annual budgets, this model has never been recorded to render any significant negative economic impact for universities, regardless of their performance, a seemingly magical feat. This is due to how the results are presented, combined with new funding added to the university sector each year. In all the years the model has been used for renegotiation, the combined funds have shown a negative result just once, as shown in Table 8.3, where the university college in Kristianstad received net negative funding of 55,000 SEK, about €5000 (Nelhans, 2015; Prop. 2011/12: 1, 2011, p 169).

At the Meso Level, Within Universities

In two studies, we set out to map and describe different bibliometric models and indicators that are used in the allocation of funds within Swedish HEIs and the collective aim was to invite a critical discussion about the advantages and disadvantages and the relative value of using such indicators for allocation of funds within academia (Hammarfelt et al., 2016; Nelhans & Eklund, 2015).

We found that at the time, all HEIs in Sweden—except Stockholm School of Economics—used (or were in the process of start using, in the case of Chalmers University of Technology) bibliometric measures to some extent for resource allocation at one or several levels. On the other hand, it was found that the types of measures and models used differ considerably, but two types stood out:

-

“Actual impact” calculated as the share of publications and citations (as used in the Swedish national allocation model

-

“Point-based evaluation” of the number of publications, combined with an appraisal of the average impact of the publication channel (similar to the Norwegian/Danish/Finnish model).

Two specialized universities, Karolinska Institutet (KI) and Royal Institute of Technology in Stockholm (KTH), used state-of-the-art models, including field-normalization of citations at the aggregate level, for the performance-based model at department or school level. Additionally, comprehensive universities with a broad range of disciplines often use a range of measurements depending on faculty type.

At the Micro Level, Individual Researchers

In our studies, we also found that, sometimes, performance-based funding models were used also at the micro level. Here we found several distinct ways in which bibliometric indicators were used for funding individual researchers. Three of these are mentioned here.

Even before the Swedish national PRFS was introduced, a performance-based model including a bibliometric component has been used since 2008 at the humanities faculty at Umeå University. Here, researchers and teachers apply for funds in competition, and publication measures are an important part of the application process. In an evaluation performed already in 2011, it was found that “[m]any believe that the system has a negative impact on the work climate,” and that “many cite that they experience individual stress and press.” (Sjögren, 2011).

At Linnaeus University, 2.5% of the allotted research funding was distributed at the individual level using a “field-normalized publication point model” where publication points were translated to actual currency. Between 8000 and 150,000 SEK were distributed directly to the researcher based on publication performance. At the same time, publication points <8000 SEK were distributed to the department instead, probably so that the model would not stigmatize researchers who do not perform well. Additionally, an excellence share was distributed as a “bonus”; 20% of researchers with the highest share of publication points receive an additional 15,000 SEK per individual.

At Luleå Technical University, an “economic publishing support” was awarded at the departmental level, but directly based on a price tag per publication with an ISSN. At the Norwegian level 1, 35,000 SEK was awarded and double that amount for a publication at level 2 or indexed in WoS. An interesting feature of the model was that since there were set price tags, in 2016, researchers “broke the bank” when they performed better than the amount of funding allowed. The result was that for conference papers with an ISSN at level 2, the lower amount was awarded, while at level 1 the support was removed (Luleå Technical University, 2016).

Conclusions: The Performativity of Performance-Based Research Funding on Different Levels

Here I would like to reflect on how researchers are affected by the above-mentioned examples and how the culture of bibliometric compliance is performed in practice, and what role does peer review play in this equation.

As noted above, peer review is at the center of the publication tradition mentioned here. In general, only peer-reviewed publications are used in the bibliometric evaluation and as a basis for PRFS. But that is not to say that peer review in itself has issues that may affect how it impacts bibliometric compliance. While peer review in general is seen as a guarantee against unsubstantiated claims and subjective arguments, in practice, reviewers are also actors in the performative setting that involves the publication of research. Bibliometric data is seldom used without an evaluator that is using them, and, as discussed at several instances here, decisions are partly based on the data, but often in light of expected outcomes and normative views about how it “should be.” For example, it is expected that bibliometric models are constructed so that all disciplines can perform “on par”; otherwise, there would be claims that the model is biased, even though we know that researchers in different disciplines and subspecialties publish in different ways. Some may output loads of short papers in collaboration, while others would call these working papers or even small studies for a manuscript in a book-length format that is published by a respected publication house. Still, as in the Norwegian model, there is an inherent claim that you can compare between different kinds of research. And in Sweden, the citation-based system normalizes both the production and citation impacts with European averages at the discipline level to make the numbers comparable. And when the numbers cannot be calculated, or when the government decides to add a weighting factor based on established ratings, an arbitrary factor is included, thus yielding the bibliometric system less effective.

While it is hard to directly pinpoint the effect of a single incentive on researchers’ publication practice, there is an extensive literature that discusses the issues (e.g. de Rijcke et al., 2016; Hammarfelt & de Rijcke, 2015; Hicks et al., 2015; Wilsdon et al., 2015). These authors argue that there are visible effects on researchers’ publishing practice and that these could be related to the introduction of PRFS at different levels. Rather than reiterating their arguments, I will exemplify these issues with several practices that I have documented at different levels in academia.

First, at the individual level, let us start by making a Google search on the terms “Curriculum vitae” AND “H-index.” H-index is a measure of the rate of articles having a certain number of citations, which has become an increasingly used shorthand for scholarly excellence (Bar-Ilan, 2008). We find that researchers seemingly have gotten the incentive to add the H-index value to their CVs, sometimes even at the title level of the document, such that it states: “Curriculum vitae for [name], [title], H-index = [h].” Of course, this does not show a direct correspondence between the funding model and scholarly practice, but it does show that researchers are catching on when new indicators are constructed.

Many other activities are used to “game the system.” With regard to citation data, there is self- (or colleague-) citing of references to one’s own work or editor coercion, where editors or reviewers suggest references to articles published in the same journal or to themselves, for example, “Manuscript should refer to at least one article published in ‘*** Journal of *** Sciences’ [the title of the same journal]” (Nelhans, 2013). According to the ethics guidance for peer reviewers published by the Committee on Publication Ethics (COPE Council, 2017), reviewers should “refrain from suggesting that authors include citations to your (or an associate’s) work merely to increase citation counts or to enhance the visibility of your or your associate’s work; suggestions must be based on valid academic or technological reasons.”

Citation cartels, where editors join up to cite each other’s journals to artificially raise each journal’s JIF, have been identified (Davis, 2012) and, in some cases, this has led to the removal of the journals from citation indices.

At the meso level, the will to perform well in university rankings could lead to the following suggestions by administrators. ”[A]nother way of advancing on the list would be to appoint highly cited researchers since they ’bring with them’ their earlier citations”(Gunnarsson, 2013). This claim is not even true, since citations are linked to the affiliation at the time of publication and will not follow the researcher to a new institution. Other suggestions have been to publish review articles or methods papers, especially if these are cited extensively outside the specialty.

Finally, I would like to reflect on the consequences of using bibliometric indicators for evaluating and funding research. As already noted above, using indicators for evaluating research builds on the notion that (aspects of) quality can be measured. The goal of this study has not been to show that indicators themselves are bad, and should be abolished altogether, but instead to critically engage with their performative nature and to explore ways in which they could influence scholarly practice at many levels.

For instance, at the research policy level, the occurrence of bibliometric models has been regarded as a supposedly objective and unobtrusive tool to “tap” the research system for information about its intrinsic qualities, but without influencing the research analyzed. But here, unobtrusiveness has been questioned, at the individual level, not least in how new practices of searching for one’s publications in online search indices to find out how many references one’s works have received or to calculate one’s H-index.

For research in itself, it can be seen that different bibliometric models, such as publication-point-based models or citation-based models, suit some disciplines better, while others fare worse. Additionally, the introduction of performance-based models creates incentives for researchers to publish according to the yardstick used, rather than to further knowledge as such. This creates false competition.

For researchers, performance-based evaluation incentives are forced on them in a top-down direction, as performance-based models have trickled down at all levels in the research practice. To conclude, the impact on individual researchers is discussed as they grapple with adapting their performance to different and sometimes contradictory quantitative benchmarks.

Indicators have been shown to work not only as intermediaries between research and research policy but, at the same time, as mediators, which add performative aspects in terms of incentives, shifted policy goals, and evaluation practices that act to change how research gets done.

To end on a somewhat more positive note, rather than arguing for the abolishment of metric indicators in evaluation and funding allocation altogether, I would like to argue for some sort of constructive pragmatism (echoing a call for methodological pragmatism by (Sivertsen, 2016)) in their use. The proposed program will build on what I label “meta-metric evaluation,” which adapts its use of indicators for evaluation in a specific situation or for a specific subject area from within a pool of possible measures that are combinable in creative ways. This would mean that the universalistic claim of having an indicator to evaluate them all would be lost in favor of lining up indicator-based measurements with skilled expertise that is trained to evaluate research using both qualitative and quantitative data. Instead, transparency and openness would be the guiding principles, while at the same time, a communal agreement of what works best for the discipline or subspecialty at hand would be needed. While the latter is a setback for the prognosis of future metric performance, at the same time, it counteracts the use of gaming metrics or adapting to the scale, and instead helps researchers to focus on the “best possible research” regardless of whether its metric footprint will be the largest according to some external measure of past performance.

Still, since at the aggregate level, there is a quite good correlation between many scientometric indicators and peers’ evaluation of the underlying research, it would be counterintuitive to not use them at all. The mission instead is to curb the scientometric indicators to tailor them into measuring what we intend to measure instead of what we can measure.

References

Bar-Ilan, J. (2008). Informetrics at the beginning of the 21st century‚ A review. Journal of Informetrics, 2(1), 1–52. https://doi.org/10.1016/j.joi.2007.11.001

Bush, V. (1945). Science: The Endless Frontier a Report to the President by Vannevar Bush, Director of the Office of Scientific Research and Development, July 1945 (Web version). United States Government Printing Office. http://www.nsf.gov/od/lpa/nsf50/vbush1945.htm

Butler, L. (2003). Explaining Australia’s increased share of ISI publications—the effects of a funding formula based on publication counts. Research Policy, 32(1), 143–155. https://doi.org/10.1016/S0048-7333(02)00007-0. http://www.sciencedirect.com/science/article/B6V77-455VNDT-1/2/ebf2bd1294bd7f0aef6e2631791d2bec

Callon, M. (1986). Some elements of a sociology of translation: Domestication of the scallops and the fishermen of St Brieuc Bay. In J. Law (Ed.), Power, action and belief: A new sociology of knowledge? (pp. 196–233). Routledge & Kegan Paul.

Callon, M., Courtial, J. P., & Laville, F. (1991). Co-word analysis as a tool for describing the network of interactions between basic and technological research: The case of polymer chemistry. Scientometrics, 22(1), 155–205. https://doi.org/10.1007/BF02019280

Cattell, J. M. (Ed.). (1921). American men of science (3rd ed.). Jacques Cattell Press, The Science Press.

Cole, S., & Cole, J. R. (1967). Scientific output and recognition: A study in the operation of the reward system in science. American Sociological Review, 32(3), 377–390. http://www.jstor.org/stable/2091085

Consumer Price Index. (2020). Statistics Norway. https://www.ssb.no/en/kpi

COPE Council. (2017). Ethical guidelines for peer reviewers.

Cozzens, S. E. (1989). What do citations count? The rhetoric-first model. Scientometrics, 15(5), 437–447. https://doi.org/10.1007/BF02017064

Davis, P. M. (2012). The emergence of a citation cartel. Scholarly Kitchen. https://scholarlykitchen.sspnet.org/2012/04/10/emergence-of-a-citation-cartel/

de Rijcke, S., Wouters, P. F., Rushforth, A. D., Franssen, T. P., & Hammarfelt, B. (2016). Evaluation practices and effects of indicator use—a literature review. Research Evaluation, 25(2), 161–169.

Elzinga, A. (1984). Research, bureaucracy and the drift of epistemic criteria. In B. Wittrock & A. Elzinga (Eds.), The University research system. The public policies of the home of scientist (pp. 191–220). Almqvist & Wiksell International.

Elzinga, A. (1997). The science-society contract in historical transformation: With special reference to “epistemic drift”. Social Science Information, 36(3), 411–445. https://doi.org/10.1177/053901897036003002

Elzinga, A. (2010). Globalisation, new public management and traditional university values. 1st Workshop of the Nordic Network for International Research Policy Analysis (NIRPA) 7–8 April 2010, Swedish Royal Academy of Engineering Sciences (IVA), Stockholm.

Felt, U., Fouché, R., Miller, C. A., & Smith-Doerr, L. (Eds.). (2017). The handbook of science and technology studies (4th ed.). MIT Press.

Galton, F. (1876). English men of science: Their nature and nurture. Macmillan & Co..

Garfield, E. (1955, July 15). Citation indexes for science: A New dimension in documentation through association of ideas. Science, 122(3159), 108–111.

Garfield, E. (1963). Citation Indexes in Sociological and Historical Research. American Documentation, 14, 289–291.

Garfield, E. (1972). Citation analysis as a tool in journal evaluation. Science, 178, 471–479. (Essays of an Information Scientist, Vol1, pp. 527–544, 1962–73)

Gilbert, G. N. (1977). Referencing as persuasion. Social Studies of Science, 7(1), 113–122. http://www.jstor.org/stable/284636

Gilbert, G. N., & Woolgar, S. (1974). The quantitative study of science: An examination of the literature. Science Studies, 4(3), 279–294.

Griffith, B. C., Small, H. G., Stonehill, J. A., & Dey, S. (1974). The structure of scientific literatures II: Toward a macrostructure and microstructure for science [Article]. Science Studies, 4(4), 339–365. https://doi.org/10.1177/030631277400400402

Gunnarsson, M. (2013). SHANGHAIRANKINGEN 2013: En analys av resultatet för Göteborgs universitet. PM 2013: 07 (Dnr: V 2013/621).

Hammarfelt, B., & de Rijcke, S. (2015). Accountability in context: Effects of research evaluation systems on publication practices, disciplinary norms, and individual working routines in the faculty of Arts at Uppsala University [Article]. Research Evaluation, 24(1), 63–77. https://doi.org/10.1093/reseval/rvu029

Hammarfelt, B., Nelhans, G., Eklund, P., & Åström, F. (2016). The heterogeneous landscape of bibliometric indicators: Evaluating models for allocating resources at Swedish universities. Research Evaluation, 25(3), 292–305. https://doi.org/10.1093/reseval/rvv040

Hansson, G., Barriere, S. G., Gurell, J., Lindholm, M., Lundin, P., & Wikgren, M. (2019). The Swedish research barometer 2019: The Swedish research system in international comparison. Swedish Research Council.

Hicks, D. (2012). Performance-based university research funding systems. Research Policy, 41(2), 251–261. https://doi.org/10.1016/j.respol.2011.09.007

Hicks, D., Wouters, P., Waltman, L., Rijcke, S. d., & Rafols, I. (2015). The Leiden Manifesto for research metrics. Nature, 520, 429–431. https://doi.org/10.1038/520429a

Hulme, E. W. (1923). Statistical bibliography. Butler & Tanner; Grafton & Co.

Jasanoff, S. (2004). States of knowledge: The co-production of science and the social order. Routledge.

Kaplan, N. (1965). The norms of citation behavior: Prolegomena to the footnote. American Documentation, 16(3), 179–184. https://doi.org/10.1002/asi.5090160305

Kesselberg, M. (2015). Forskningsresurser baserade på prestation. Universitetskanslersämbetet.

Kessler, M. M. (1963). Bibliographic coupling between scientific papers [Article]. American Documentation, 14(1), 10–25. https://doi.org/10.1002/asi.5090140103

Kessler, M. M., & Heart, F. E. (1962). Concerning the probability that a given paper will be cited (pp. 19). Cambridge: Massachusetts Institute of Technology.

Kunnskapsdepartementet. (2018). Orientering om forslag til statsbudsjettet 2019 for universitet og høgskolar. https://www.regjeringen.no/contentassets/31af8e2c3a224ac2829e48cc91d89083/orientering-om-statsbudsjettet-2019-for-universiteter-og-hogskolar-100119.pdf

Latour, B. (2005). Reassembling the social: An introduction to actor-network-theory. Oxford University Press.

Lotka, A. J. (1926). The frequency distribution of scientific productivity. Journal of the Washington Academy of Sciences, 16, 317–324.

Luleå Technical University. (2016). Ekonomiskt publiceringsstöd 2015–2016. https://www.ltu.se/cms_fs/1.79817!/file/Ekonomiskt%20publiceringsst%C3%B6d%202015-2016.docx

McCain, K. W. (1986). Cocited author mapping as a valid representation of intellectual structure. Journal of the American Society for Information Science, 37(3), 111–122. https://doi.org/10.1002/(sici)1097-4571(198605)37:3<111::aid-asi2>3.0.co;2-d

McCain, K. W. (1991a). Core journal networks and cocitation maps—new bibliometric tools for serials research and management [Article]. Library Quarterly, 61(3), 311–336.

McCain, K. W. (1991b). Mapping economics through the journal literature—an experiment in journal cocitation analysis [Article]. Journal of the American Society for Information Science, 42(4), 290–296. https://doi.org/10.1002/(sici)1097-4571(199105)42:4<290::aid-asi5>3.0.co;2-9

Merton, R. K. (1973a). The sociology of science: Theoretical and empirical investigations. The University of Chicago Press.

Merton, R. K. (1973b [1942]). The normative structure of science. In The sociology of science: Theoretical and empirical investigations (pp. 267–278). The University of Chicago Press.

Mitroff, I. I. (1974). Norms and counter-norms in a select group of the Apollo moon scientists: A case study of the ambivalence of scientists. American Sociological Review, 39(4), 579–595. http://www.jstor.org/stable/2094423

Nalimov, V. V., & Mul’chenko, Z. M. (1969). Naukometriya. lzucheniye Razvitiya Nauki kak Informatsionnogo Protsessa [Translated title: Measurement of Science. Study of the Development of Science as an Information Process. Washington, DC: Foreign Technology Division, U.S. Air Force Systems Command, 13 October 1971. 196 p.]. http://www.garfield.library.upenn.edu/nalimov/nalimovmeasurementofscience/book.pdf

Narin, F. (1976). Evaluative bibliometrics: The use of publication and citation analysis in the evaluation of scientific activity. Report. (pp. 459): Cherry Hill, New Jersey: Computer Horizons, Inc.

Nelhans, G. (2013). Citeringens praktiker. Det vetenskapliga publicerandet som teori, metod och forskningspolitik. (The practices of the citation: Scientific publication as theory, method and research policy) University of Gothenburg]. Gothenburg. http://hdl.handle.net/2077/33516

Nelhans, G. (2015). Meaningful citation analysis? In 20th Nordic Workshop on Bibliometric and Research Policy, Oslo.

Nelhans, G., & Eklund, P. (2015). Resursfördelningsmodeller på bibliometrisk grund vid ett urval svenska lärosäten. http://urn.kb.se/resolve?urn=urn:nbn:se:hb:diva-21

Persson, O. (1994). The intellectual base and research fronts of JASIS 1986–1990. Journal of the American Society for Information Science, 45(1), 31–38. https://doi.org/10.1002/(SICI)1097-4571(199401)45:1<31::AID-ASI4>3.0.CO;2-G

Power, M. (1999). The audit society: Rituals of verification. Oxford University Press.

Price, D. J. d. S. (1951). Quantitative measures of the development of science VI Congrès International des Histoire des Sciences, août 1950, Amsterdam.

Price, D. J. d. S. (1961). Science since Babylon ([1975] ed.). Yale University Press.

Price, D. J. d. S. (1963). Little science, big science. Columbia University Press.

Price, D. J. d. S. (1965). Networks of scientific papers. Science, 149(3683), 510–515.

Pritchard, A. (1969). Statistical bibliography or bibliometrics? Journal of Documentation, 25(4), 348–349.

Prop. 2008/09:50. (2008). Ett lyft för forskning och innovation [Proposition]. Ministry of Education.

Prop. 2011/12: 1. (2011). Förslag till statsbudget för 2012: Utgiftsområde 16 (Vol. 2011/12: 1) [Proposition]. Ministry of Education.

Prop. 2012/13: 30. (2012). Forskning och innovation. Ministry of Education.

Prop. 2016/17: 1. (2016). Utgiftsområde 16, Government budget. Ministry of Finance.

Prop. 2019/20: 1. (2019). Utgiftsområde 16, Government budget.

RAE. (2009). RAE 2008 accountability review. PA consulting services limited. http://www.hefce.ac.uk/pubs/rdreports/2009/rd08_09/rd08_09.pdf

Sandström, U., & Sandström, E. (2009). The field factor: Towards a metric for academic institutions. Research Evaluation, 18(3), 243–250.

Sivertsen, G. (2016). A welcome to methodological pragmatism. Journal of Informetrics, 10(2), 664–666.

Sjögren, D. (2011). Utvärdering av kvalitetsbaserat resurstilldelningssystem på humanistiska fakulteten. Rapport till fakultetsnämnden 16/9 2011. Umeå: Institutionen för idé- och samhällsstudier, Umeå universitet. https://www.aurora.umu.se/globalassets/dokument/enheter/humfak/for-vara-anstallda/forskningsfragor/utvarderingav-kvalitetsbaserad-resurstilldelning-.docx

Small, H. G., & Griffith, B. C. (1974). The structure of scientific literatures I: Identifying and graphing specialties. Science Studies, 4(1), 17–40.

SOU. (2007). Resurser för kvalitet: Slutbetänkande av Resursutredningen. SOU 2007:81. Utbildningsdepartementet. Stockholm: Fritzes.

Swedish Research Council. (2009). Bibliometrisk indikator som underlag för medelsfördelning. Svar på uppdrag enligt regeringsbeslut U2009/322/F (2009-01-29) till Vetenskapsrådet.

Universitets- og høgskolerådet. (2004). Vekt på forskning: Nytt system for dokumentasjon av vitenskapelig publisering. http://www.uhr.no/documents/Vekt_p__forskning__sluttrapport.pdf

Urquhart, D. J. (1959). Use of scientific periodicals. In Proceedings of the International Conference on Scientific Information, Nov.16-21 1958, Washington.

Vetenskapsrådet. (2009). Bibliometrisk indikator som underlag för medelsfördelning. Svar på uppdrag enligt regeringsbeslut U2009/322/F (2009-01-29) till Vetenskapsrådet.

Vetenskapsrådet. (2011). Svar på regeringsuppdrag U2011/1203/F: Uppdrag till Vetenskapsrådet att redovisa underlag för indikatorn vetenskaplig produktion och citering. http://www.bibl.liu.se/bibliometri/litteratur/1.275135/VR-svar_110607_Regeringsuppdrag_U2011-1203-F.pdf

Vetenskapsrådet. (2012). Svar på regeringsuppdrag U2011/1203/F: Uppdrag till Vetenskapsrådet att redovisa underlag för indikatorn vetenskaplig produktion och citering. http://www.bibl.liu.se/bibliometri/litteratur/1.354375/VR-svarpregeringsuppdragU2011-1203-F_Underlagfrindikatornvetenskapligproduktionocitering.pdf

White, H. D., & Griffith, B. C. (1981). Author cocitation—a literature measure of intellectual structure [Article]. Journal of the American Society for Information Science, 32(3), 163–171. https://doi.org/10.1002/asi.4630320302

Wilsdon, J., Allen, L., Belfiore, E., Campbell, P., Curry, S., Hill, S. A., Jones, R., Kain, R., Kerridge, S., Thelwall, M., Tinkler, J., Viney, I., Wouters, P., Hill, J., & Johnson, B. (2015). The metric tide: Report of the independent review of the role of metrics in research assessment and management. https://doi.org/10.13140/RG.2.1.4929.1363

Zuckerman, H. (1967). Nobel laureates in science: Patterns of productivity, collaboration, and authorship. American Sociological Review, 32, 391–403.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.

Copyright information

© 2022 The Author(s)

About this chapter

Cite this chapter

Nelhans, G. (2022). Performance-Based Evaluation Metrics: Influence at the Macro, Meso, and Micro Level. In: Forsberg, E., Geschwind, L., Levander, S., Wermke, W. (eds) Peer review in an Era of Evaluation. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-75263-7_8

Download citation

DOI: https://doi.org/10.1007/978-3-030-75263-7_8

Published:

Publisher Name: Palgrave Macmillan, Cham

Print ISBN: 978-3-030-75262-0

Online ISBN: 978-3-030-75263-7

eBook Packages: EducationEducation (R0)