Abstract

Assigning a label to each pixel in an image, namely semantic segmentation, has been an important task in computer vision, and has applications in autonomous driving, robotic navigation, localization, and scene understanding. Fully convolutional neural networks have proved to be a successful solution for the task over the years but most of the work being done focuses primarily on accuracy. In this paper, we present a computationally efficient approach to semantic segmentation, while achieving a high mean intersection over union (mIOU), \(70.33\%\) on Cityscapes challenge. The network proposed is capable of running real-time on mobile devices. In addition, we make our code and model weights publicly available.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Semantic segmentation is a major challenge in computer vision that aims to assign a label to every pixel in an image. [6, 16] Fully convolutional networks are shown to be the state-of-art approach in semantic segmentation tasks over the recent years and offer simplicity and speed during learning and inference [17]. Such networks have a broad range of applications such as autonomous driving, robotic navigation, localization, and scene understanding.

These architectures are trained by supervised learning over numerous images and detailed annotations for each pixel. Data sets that offer semantic segmentation annotations on a rich variety of objects and stuff categories have emerged such as COCO [16], ADE20K [25], Cityscapes [8], PASCAL VOC [10], thus opening new windows in the field.

Computationally efficient convolutional networks have been gaining momentum over the recent years but the segmentation task is still an open problem. Proposed networks for the semantic segmentation task are deep and resource hungry because of their purpose of achieving the highest accuracy such as [4, 6, 20, 24]. These approaches have high complexity and may contain custom operations which are not suitable to be run on current implementations of neural network interpreters offered for mobile devices. Such devices lack the computation power of specialised GPUs, resulting in very poor inference speed. Mobile capable approaches in the feature extraction task such as Mobilenet V2 [21], ShuffleNet V2 [18] have motivated us to explore the performance of such architectures to be used in this context.

In this paper, we explore ShuffleNet V2 [18], as the feature extractor with simplified DeepLabV3+ [6] heads and recently proposed DPC [4] architecture, and report our findings of both model on scene understanding using Cityscapes [8] data set. Furthermore, we present the number of floating point operations(FLOPs) and on-device inference performance of each approach. Our contributions to the field can be listed in three points:

-

1.

We achieve state-of-art computation efficiency in the semantic segmentation task while achieving \(70.33\%\) mean intersection over union (mIOU) on Cityscapes test set using ShuffleNet V2 along with DPC [4] encoder and a naive decoder module.

-

2.

Our proposed model and implementation is fully compatible with TensorFlow Lite and runs real-time on Android and iOS-based mobile phones.

-

3.

We make our Tensorflow implementation of the network, and trained models publicly available at https://github.com/sercant/mobile-segmentationFootnote 1.

2 Related Work

In this section, we talk about the current state-of-art in the task of semantic segmentation, especially mobile capable approaches and performance metrics to measure the efficiency of networks.

CNNs have shown to be the state-of-art method for the task of semantic segmentation over the recent years. Especially fully convolutional neural networks (FCNNs) have demonstrated great performance on feature generation task and end-to-end training and hence is widely used in semantic segmentation as encoders. Moreover, memory friendly and computationally light designs such as [13, 18, 21, 23], have shown to perform well in speed-accuracy trade-off by taking advantage of approaches such as depthwise separable convolution, bottleneck design and batch normalization [14]. These efficient designs are promising for usage on mobile CPUs and GPUs, hence motivated us to use such networks as encoders for the challenging task of semantic segmentation.

FCNN models for semantic segmentation proposed in the field have been the top-performing approach in many benchmarks such as [8, 10, 16, 25]. But these approaches use deep feature generators and complex reconstruction methods for the task, thus making them unsuitable for mobile use, especially for the application of autonomous cars where resources are scarce and computation delays are undesired [22].

In this sense, one of the recent proposals in feature generation, ShuffleNet V2 [18], demonstrates significant efficiency boost over the others while performing accurately. According to [18], there are four main guidelines to follow for achieving a highly efficient network design.

-

1.

When the channel widths are not equal, there is an increase in the memory access cost (MAC) and thus, channel widths should be kept equal.

-

2.

Excessive use of group convolutions should be avoided as they raise the MAC.

-

3.

Fragmentation in the network should be avoided to keep the degree of parallelism high.

-

4.

Element-wise operations such as ReLU, Add, AddBias are non-negligible and should be reduced.

To achieve such accuracy at low computation latency, they point out two main reasons. First, their guidelines for efficiency allow each building block to use more feature channels and have a bigger network capacity. Second, they achieve a kind of “feature reuse” by their approach of keeping half of the feature channels pass through the block to join the next block.

Another important issue is the metric of performance for convolutional neural networks. The efficiency of CNNs is commonly reported by the total number of floating point operations (FLOPs). It is pointed out in [18] that, despite their similar number of FLOPs, networks may have different inference speeds, emphasizing that this metric alone can be misleading and may lead to poor designs. They argue that discrepancy can be due to memory access cost (MAC), parallelism capability of the design and platform dependent optimizations on specific operations such as cuDNN’s \( 3 \times 3 \) Conv. Furthermore, they offer to use a direct metric (e.g., speed) instead of an indirect metric such as FLOPs.

Next, we shall examine the state-of-art on the semantic image segmentation task by focusing on the computationally efficient approaches.

Atrous convolutions, or dilated convolutions, are shown to be a powerful tool in the semantic segmentation task [5]. By using atrous convolutions it is possible to use pretrained ImageNet networks such as [18, 21] to extract denser feature maps by replacing downscaling at the last layers with atrous rates, thus allowing us to control the dimensions of the features. Furthermore, they can be used to enlarge the field of view of the filters to embody multi-scale context. Examples of atrous convolutions at different rates are shown in Fig. 1.

DeepLabV3+ DPC [4] achieves state-of-art accuracy when it is combined with their modified version of Xception [7] backbone. In their work, Mobilenet V2 has shown to have a correlation of accuracy with Xception [7] while having a shorter training time, and thus it is used in the random search [2] of a dense prediction cell (DPC). Our work is inspired by the accuracy that they have achieved with the Mobilenet V2 backbone on Cityscapes set in [4], and their approach of combining atrous separable convolutions with spatial pyramid pooling in [5]. To be more specific, we use lightweight prediction cell (denoted as basic) and DPC which were used on the Mobilenet V2 features, and the atrous separable convolutions on the bottom layers of feature extractor in order to keep higher resolution features.

Semantic segmentation as a real-time task has gained momentum on popularity recently. ENet [19] is an efficient and lightweight network offering low number of FLOPs in the design and ability to run real-time on NVIDIA TX1 by taking advantage of bottleneck module. Recently, ENet was further fine-tuned by [3], increasing the Cityscapes mean intersection over union from 58.29% to 63.06% by using a new loss function called Lovasz-Softmax [3]. Furthermore, SHUFFLESEG [11], demonstrates different decoders that can be used for Shufflenet, prior work to ShuffleNet V2, comparing their efficiency mainly with ENet [19] and SegNet [1] by FLOPs and mIOU metrics but they do not mention any direct speed metric that is suggested by ShuffleNet V2 [18]. The most comprehensive work on the search for an efficient real-time network was done in [22] and they report that SkipNet-Shufflenet combination runs 15 frames per second (fps) with an image resolution of \( 640 \times 360 \) on Jetson TX2. This work again, like SHUFFLESEG [11], is based on the prior design of the channel shuffle based approach.

Our literature review showed us that the ShuffleNet V2 architecture is yet to be used in semantic segmentation task as a feature generator. Both [22] and SHUFFLESEG [11] point out the low FLOP achievable by using ShuffleNet and show comparable accuracy and fast inference speeds. In this work, we exploit improved ShuffleNet V2 as an encoder module modified by atrous convolutions and well-proven encoder heads of DeepLabV3 and DPC in conjunction. Then, we evaluate the network on Cityscapes, a challenging task in the field of scene parsing.

3 Methodology

This section describes our network architecture, and the training procedures, and evaluation methods. The network architecture is based upon the state-of-art efficient encoder, ShuffleNet V2 [18], and DeepLabV3 [5] and DPC [4] heads built on top to perform segmentation. Training procedure includes restoring from an Imagenet [9] checkpoint, pre-training on MS COCO 2017 [16] and Cityscapes [8] coarse annotations, and fine-tuning on fine annotations of Cityscapes [8]. We then evaluate the trained network on Cityscapes validation set according to their evaluation procedure in [8].

3.1 Network Architecture

In this section, we will give a detailed explanation of each step of the proposed network. Figure 2 shows the different stages of the network and how the data flows from the start to the end. We start with Shufflenet V2 feature extractor, then add the encoder head (DPC or basic DeepLabV3), and finally use resize bilinear as a naive decoder to produce the segmentation mask. The final downsampling factor of the feature extractor is denoted as \(output\_stride\).

For the task of feature extraction, we choose Shufflenet V2 because of its success for speed versus accuracy trade-off. Our selection for the \(depth\_multiplier\) of ShuffleNet V2 is \(\times 1\) as it can be seen in the output channels column of Table 1. Our decision was purely made by accuracy and speed results of this variation on the ImageNet data set and we have not run experiments on different variations of the \(depth\_multiplier\). Although, one might choose lower values for this hyperparameter to achieve faster inference time by compromising on accuracy and conversely higher values might result in a gain of accuracy in favour of inference speed.

Figure 3 shows the building blocks of the feature extractor architecture in detail. Each stage consists of one spatial downsampling unit and several basic units. In the original implementation of Shufflenet V2 [18], \(output\_stride\) goes as low as 32. In our approach, entry flow, Stage2 and Stage3 of the proposed feature extractor is implemented as in [18]. In the case of \(output\_stride=16\), the last stage, namely Stage4, has been modified by setting \(stride=1\) instead of 2 on the downsampling layer and the atrous rate of the preceding depth-wise convolutions are set to \(network\_stride\) divided by \(output\_stride\) as described in [5] to adjust the final downsampling factor of the feature extractor. However, in the case of \(output\_stride=8\), stride and atrous rate modification starts from Stage3. We choose to focus on \(output\_stride=16\) due to its faster computation speed.

After ShuffleNet V2 features are extracted we employ the DPC encoder. Figure 4 shows the design of the basic encoder head [5] and the DPC head [4]. The basic encoder head does not contain any atrous convolutions in its design for lower complexity. On the other hand, DPC is using five different depthwise convolutions at different rates to understand features better. After the encoder heads, as seen in Fig. 5, features are reduced down to \(depth=256\), then to the number of classes. Afterwards, a drop out layer with 0.9 probability of keeping is applied.

For decoding, we are using the same naive approach as [5], where a simple bilinear resizing is applied to upscale back to the original image dimensions. In the case of \(output\_stride=16\), the upscaling factor is 16. In their later work, DeepLabV3+[6], they propose an approach where features from earlier stages (e.g. \(decoder\_stride\) of 4) are concatenated with the upsampled features of the last layer where the upscaling factor is \(output\_stride\) divided by \(decoder\_stride\) to preserve the finer details in the segmentation result. We have not included this part as it adds more complexity and slowing down the inference time.

3.2 Training

Proposed feature extractor weights are initially restored from an ImageNet [9] checkpoint. After that, we pre-train the network end-to-end on MS COCO [16]. Further pre-training was done on Cityscapes coarse annotations before we finalize our training on Cityscapes fine annotations. During the training at each step data augmentation was performed on the images by randomly scaling between 0.5 and 2.0 with steps of 0.25, randomly cropping by \( 769 \times 769 \) and randomly flipping left and right.

Preprocessing. For preprocessing the input images, we standardize each pixel to \([-1, 1]\) range according to Eq. 1.

MS COCO 2017. We pre-train the network on MS COCO as suggested in [4,5,6]. Only the relevant classes to the Cityscapes task, which are person, car, truck, bus, train, motorcycle, bicycle, stop sign and parking meter, were chosen and other classes were marked as background. Also, we further filter the samples by criteria of having a non-background class area of over 1000 pixels. This yields us 69795 training and 2956 validation samples. Training was done end-to-end by using a batch size of 16, \(output\_stride\) of 16, weight decay set to \(4e-5\) to prevent over-fitting, and a “poly” learning rate policy as shown in Eq. 2 where \(lr^{(k)}\) is the learning rate at step k, \(lr_{initial}\) set to 0.001 and power set to 0.9 [5] for 60K steps using Adam optimizer [15](\(beta1=0.9\), \(a2=0.999\), \(epsilon=10^{-8}\)).

Cityscapes. First, we pre-train the network further using 20K coarsely annotated samples for 60K steps, following the same parameters that we used in MS COCO training. Then, as the final step, we fine-tune the network for 120K steps on finely annotated set (2975 train, 500 validation samples) with an initial learning rate of 0.0001, a slow start at learning rate \(1e\) \(-\) \(5\) for the first 186 steps. We found out that lowering the learning rate at the fine-tuning stage is crucial to achieving good accuracy. Other parameters are kept the same as the previous steps.

4 Experimental Results on Cityscapes

Cityscapes [8] is a well-studied data set in the field of scene parsing task. It contains roughly 20K coarsely annotated samples, and 5000 finely annotated samples which are split into 2975, 500 and 1525 for training, validation, and testing respectively.

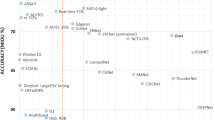

Our approach of combining ShuffleNet V2 with DeepLabV3 basic and DPC head achieves both state-of-art mIOU and inference speed with the respective GFLOPs counts. Table 2 shows the comparison of GFLOPs to mIOU performance on the validation set. ShuffleNet V2 with basic DeepLabV3 encoder head, having 2.18 GFLOPs, surpasses the SkipNet-ShuffleNet [22] which has similar floating operations count by \(12.2\%\) gain on mIOU. On the other hand, DPC variation performs \(+0.6\%\) more accurate than the Mobilenet V2 with basic heads, which has 1.54 times more GFLOPs.

ShuffleNet V2 with DPC heads outperforms state-of-art efficient networks on the Cityscapes test set. Table 3 shows that we make a gain of 7.27% mIOU over the best performing ENet architecture. Furthermore, on each of the classes highlighted here, ShuffleNet V2\(+\)DPC shows a great improvement. Also, as seen in the Table 4, our approach outperforms the previous methods on the category mIOU and instance level metrics.

We visualize the segmentation masks generated both by the basic and DPC approach in Fig. 6. From the visuals, we can see that the DPC head variant is able to segment thinner objects such as poles better than the basic encoder head. The figure is best viewed in colour.

4.1 Inference Speed on Mobile Phone

In this section, we provide inference speed results and our procedure for the measurements. As discussed in ShuffleNet V2 [18], we believe that the computational efficiency of the network should be measured on the target devices rather than purely comparing by the FLOPs. Thus, we convert the competing networks to Tensorflow Lite, a binary model representation for inferencing on mobile phones, for the speed evaluation. Tensorflow Lite has a limited number of available operations in its current implementation, thus complex architectures such as [12] cannot be converted without implementing the missing operations on the Tensorflow Lite. But, both Mobilenet V2 and ShuffleNet V2 are compatible and require no additional custom operations.

The measurements were done on OnePlus A6003, Snapdragon 845 CPU, 6 GB RAM, 16 + 20 MP Dual Camera, Android version 9, OxygenOS version 9.0.3. The device is stabilized in place, put to airplane mode, background services are disabled, the battery is fully charged and kept plugged to the power cable. The rear camera output image is scaled so that the smaller dimension is 224, then the middle of the image is cropped to get an input image of \(224 \times 224\). The downscaling and cropping is not included in the inference time shown in Table 5 however the preprocessing of the input image, according to Eq. 1, and the final ArgMax is included in the inference time. Inference speed measurements, presented in Table 5, are averaged over 300 frames after 30 s of an initial warm-up period.

Table 5 shows that our approach of using ShuffleNet V2 as a backbone is capable of performing real-time, close to 20 Hz, semantic segmentation on a mobile phone. Even with the complex DPC architecture, ShuffleNet V2 backbone ends up with 1.54 times fewer GFLOPs over the Mobilenet V2 with basic encoder head. Furthermore, model size is significantly lower which is desired for embedded devices that might have limited memory or storage size. One surprising finding is that the Mobilenet V2 variants show much higher variance in the inference time compared to the ShuffleNet V2 backbone, thus our approach provides a more stable fps.

5 Conclusion

In this paper, we have described an efficient solution for semantic segmentation by achieving state-of-art inference speed without compromising on the accuracy. Our approach shows that ShuffleNet V2 is a powerful and efficient backbone for the task of semantic segmentation. It achieves 70.33% mIOU when combined with the DPC head, and 67.7% mIOUFootnote 2 combined with the basic encoder head on Cityscapes challenge. Furthermore, we showed that our network is capable of running real-time, the DPC head at 15.41 fps and the basic encoder head at 19.65 fps, on a mobile phone with an input image size of \(224 \times 224\). Future work is to implement an efficient decoder architecture to get more refined edges along the borders of the objects on the segmentation mask.

Notes

- 1.

- 2.

The validation set result.

References

Badrinarayanan, V., Kendall, A., Cipolla, R.: SegNet: a deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2481–2495 (2017)

Bergstra, J., Bengio, Y.: Random search for hyper-parameter optimization. J. Mach. Learn. Res. 13, 281–305 (2012)

Berman, M., Rannen Triki, A., Blaschko, M.B.: The lovász-softmax loss: a tractable surrogate for the optimization of the intersection-over-union measure in neural networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4413–4421 (2018)

Chen, L.C., et al.: Searching for efficient multi-scale architectures for dense image prediction. In: Advances in Neural Information Processing Systems, pp. 8713–8724 (2018)

Chen, L.C., Papandreou, G., Schroff, F., Adam, H.: Rethinking atrous convolution for semantic image segmentation. arXiv preprint arXiv:1706.05587 (2017)

Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., Adam, H.: Encoder-decoder with atrous separable convolution for semantic image segmentation. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) ECCV 2018. LNCS, vol. 11211, pp. 833–851. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01234-2_49

Chollet, F.: Xception: Deep learning with depthwise separable convolutions. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017

Cordts, M., et al.: The cityscapes dataset for semantic urban scene understanding. In: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2016

Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: ImageNet: a large-scale hierarchical image database. In: CVPR 2009 (2009)

Everingham, M., Eslami, S.M.A., Van Gool, L., Williams, C.K.I., Winn, J., Zisserman, A.: The pascal visual object classes challenge: a retrospective. Int. J. Comput. Vis. 111(1), 98–136 (2015)

Gamal, M., Siam, M., Abdel-Razek, M.: ShuffleSeg: real-time semantic segmentation network. arXiv preprint arXiv:1803.03816 (2018)

He, K., Gkioxari, G., Dollar, P., Girshick, R.: Mask R-CNN. In: 2017 IEEE International Conference on Computer Vision (ICCV), October 2017

Howard, A.G., et al.: MobileNets: efficient convolutional neural networks for mobile vision applications. arXiv preprint arXiv:1704.04861 (2017)

Ioffe, S., Szegedy, C.: Batch normalization: accelerating deep network training by reducing internal covariate shift. In: Proceedings of the 32nd International Conference on International Conference on Machine Learning, ICML 2015, vol. 37, pp. 448–456. JMLR.org (2015)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Lin, T.-Y., et al.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

Ma, N., Zhang, X., Zheng, H.-T., Sun, J.: ShuffleNet V2: practical guidelines for efficient CNN architecture design. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds.) Computer Vision – ECCV 2018. LNCS, vol. 11218, pp. 122–138. Springer, Cham (2018). https://doi.org/10.1007/978-3-030-01264-9_8

Paszke, A., Chaurasia, A., Kim, S., Culurciello, E.: ENet: a deep neural network architecture for real-time semantic segmentation. arXiv preprint arXiv:1606.02147 (2016)

Ronneberger, O., Fischer, P., Brox, T.: U-Net: convolutional networks for biomedical image segmentation. In: Navab, N., Hornegger, J., Wells, W.M., Frangi, A.F. (eds.) MICCAI 2015. LNCS, vol. 9351, pp. 234–241. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24574-4_28

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A., Chen, L.C.: MobileNetV2: inverted residuals and linear bottlenecks. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 2018

Siam, M., Gamal, M., Abdel-Razek, M., Yogamani, S., Jagersand, M., Zhang, H.: A comparative study of real-time semantic segmentation for autonomous driving. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp. 700–70010. IEEE (2018)

Zhang, X., Zhou, X., Lin, M., Sun, J.: ShuffleNet: an extremely efficient convolutional neural network for mobile devices. In: 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, June 2018

Zhao, H., Shi, J., Qi, X., Wang, X., Jia, J.: Pyramid scene parsing network. In: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017

Zhou, B., Zhao, H., Puig, X., Fidler, S., Barriuso, A., Torralba, A.: Scene parsing through ADE20K dataset. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2017)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Türkmen, S., Heikkilä, J. (2019). An Efficient Solution for Semantic Segmentation: ShuffleNet V2 with Atrous Separable Convolutions. In: Felsberg, M., Forssén, PE., Sintorn, IM., Unger, J. (eds) Image Analysis. SCIA 2019. Lecture Notes in Computer Science(), vol 11482. Springer, Cham. https://doi.org/10.1007/978-3-030-20205-7_4

Download citation

DOI: https://doi.org/10.1007/978-3-030-20205-7_4

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20204-0

Online ISBN: 978-3-030-20205-7

eBook Packages: Computer ScienceComputer Science (R0)