Abstract

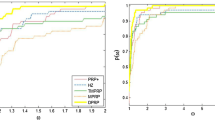

This paper is concerned with conjugate gradient methods for solving unconstrained optimization problems. It is well known that the direction generated by a conjugate gradient method may not be a descent direction of the objective function. We make a slight modification to the Fletcher–Reeves (FR) method so that the direction generated by the modified method is always a descent direction of the objective function. This property depends neither on the line search used nor on the convexity of the objective function. Moreover, the modified method reduces to the standard FR method if the line search is exact. Under mild conditions, we prove that the modified method with an Armijo-type line search is globally convergent even if the objective function is nonconvex. We also present numerical results that show the efficiency of the proposed method.
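The abstract does not state the modified update, so the following is only a minimal sketch of one direction formula that has the three properties it describes, not necessarily the exact scheme analysed in the paper. It assumes the form d_k = -θ_k g_k + β_k^FR d_{k-1} with θ_k = 1 + g_k^T d_{k-1} / ||g_{k-1}||², which gives g_k^T d_k = -||g_k||² for any step length and any objective, and gives θ_k = 1 (plain FR) when the line search is exact, since then g_k^T d_{k-1} = 0. The backtracking rule is the textbook Armijo condition, used here as a stand-in for the paper's Armijo-type search. All function names and the parameters `delta` and `rho` are illustrative.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def modified_fr_direction(g, g_prev, d_prev):
    """Assumed update d = -theta * g + beta_FR * d_prev.

    theta is chosen so that dot(g, d) == -dot(g, g) holds identically,
    whatever step length produced g.
    """
    gp2 = dot(g_prev, g_prev)
    beta = dot(g, g) / gp2                # Fletcher-Reeves parameter
    theta = 1.0 + dot(g, d_prev) / gp2    # equals 1 under exact line search
    return [-theta * gi + beta * di for gi, di in zip(g, d_prev)]


def armijo_step(f, x, d, slope, delta=1e-4, rho=0.5, max_backtracks=60):
    """Standard backtracking Armijo rule (an assumption, not the paper's rule).

    Returns a step alpha with f(x + alpha*d) <= f(x) + delta*alpha*slope,
    or None if no such step is found within max_backtracks reductions.
    """
    fx = f(x)
    alpha = 1.0
    for _ in range(max_backtracks):
        trial = [xi + alpha * di for xi, di in zip(x, d)]
        if f(trial) <= fx + delta * alpha * slope:
            return alpha
        alpha *= rho
    return None


def minimize(f, grad, x0, tol=1e-8, max_iter=5000):
    """Run the sketched method from x0; returns the last iterate."""
    x = list(x0)
    g = grad(x)
    d = [-gi for gi in g]                 # first direction: steepest descent
    for _ in range(max_iter):
        gg = dot(g, g)
        if gg ** 0.5 <= tol:
            break
        # By construction the directional derivative g.d equals -||g||^2.
        alpha = armijo_step(f, x, d, -gg)
        if alpha is None:
            break
        x = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = grad(x)
        if dot(g_new, g_new) ** 0.5 <= tol:
            break
        d = modified_fr_direction(g_new, g, d)
        g = g_new
    return x
```

For example, `minimize(lambda x: x[0]**2 + 10*x[1]**2, lambda x: [2*x[0], 20*x[1]], [3.0, 1.0])` runs the sketch on a small convex quadratic. Because every accepted step satisfies the Armijo condition along a descent direction, the objective value decreases monotonically; nothing stronger is claimed for this simplified line search.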

Additional information

Supported by the 973 project (2004CB719402) and the NSF foundation (10471036) of China.

About this article

Cite this article

Zhang, L., Zhou, W. & Li, D. Global convergence of a modified Fletcher–Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104, 561–572 (2006). https://doi.org/10.1007/s00211-006-0028-z

DOI: https://doi.org/10.1007/s00211-006-0028-z