Abstract

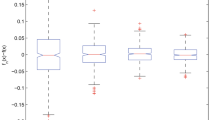

In this paper, single-index and double-index weighted versions of the Marcinkiewicz–Zygmund type strong law of large numbers are investigated in turn for a class of random variables, extending the classical results for independent and identically distributed random variables. As applications, we further study the strong consistency of the weighted estimator in the nonparametric regression model and of the least squares estimators in the simple linear errors-in-variables model. Moreover, we present a numerical study to verify the validity of our results.

Acknowledgements

The authors are most grateful to the Editor-in-Chief, Associate Editor and two anonymous referees for careful reading of the manuscript and valuable suggestions which helped in improving an earlier version of this paper.

Additional information

This work was supported by the National Natural Science Foundation of China (11671012, 11501004, 11501005), the Natural Science Foundation of Anhui Province (1508085J06) and The Key Projects for Academic Talent of Anhui Province (gxbjZD2016005).

Appendix

Proof of Lemma 2.4

Without loss of generality, we may assume that \(a_{ni}\ge 0\) for all \(1\le i\le n,n\ge 1\) and \(\sum _{i=1}^{n}a_{ni}^{\alpha }\le n\). Note that \(\sum _{i=1}^{n}a_{ni}^{s}\le n\) for any \(0<s<\alpha \) according to Hölder’s inequality. First let us consider the case that \(0<p<1\).

For fixed \(n\ge 1\), denote for \(1\le i\le n\) that

Consequently, to prove (3), it suffices to prove

and

For (44), taking \(\zeta =\min (1,\alpha )\), according to Markov’s inequality, \(C_{r}\)-inequality and Lemma 2.3, we can derive that

Noting that \(\sum _{n=1}^{\infty }n^{\gamma p-1}P(|X|>n^{\gamma })<\infty \) is equivalent to \(E|X|^{p}<\infty \), we need only to prove that \(\sum _{n=1}^{\infty }n^{\gamma p-1-\gamma \zeta }E|X|^{\zeta }I(|X|\le n^{\gamma })<\infty .\) Actually,

Therefore, (44) holds. It remains to prove (45). According to Markov’s inequality, \(C_{r}\)-inequality and Lemma 2.3, we can also obtain that

which implies (45).
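The equivalence between \(\sum _{n=1}^{\infty }n^{\gamma p-1}P(|X|>n^{\gamma })<\infty \) and \(E|X|^{p}<\infty \), which is invoked in this proof, can be sketched as follows. This is a standard argument included for the reader's convenience and is not taken from the paper; the symbols \(Y=|X|^{1/\gamma }\) and \(q=\gamma p>0\) are introduced here only for illustration. Since \(P(|X|>n^{\gamma })=P(Y>n)\), the tail formula for moments gives

\[
E|X|^{p}=EY^{q}=\int _{0}^{\infty }qt^{q-1}P(Y>t)\,dt,\qquad \int _{0}^{1}qt^{q-1}P(Y>t)\,dt\le 1.
\]

For \(n\ge 1\) and \(t\in [n,n+1]\), we have \(2^{-|q-1|}n^{q-1}\le t^{q-1}\le 2^{|q-1|}n^{q-1}\) and \(P(Y>n+1)\le P(Y>t)\le P(Y>n)\), so that

\[
q\,2^{-|q-1|}\,n^{q-1}P(Y>n+1)\le \int _{n}^{n+1}qt^{q-1}P(Y>t)\,dt\le q\,2^{|q-1|}\,n^{q-1}P(Y>n).
\]

Summing over \(n\ge 1\), and noting that \(n^{q-1}\) and \((n+1)^{q-1}\) differ by at most the factor \(2^{|q-1|}\), the integral is finite if and only if \(\sum _{n=1}^{\infty }n^{q-1}P(Y>n)<\infty \), which is the stated equivalence.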

For \(p\ge 1\), we decompose the coefficients \(a_{ni}\) into \(a_{ni}^{(1)}=a_{ni}I(0\le a_{ni}\le 1)\) and \(a_{ni}^{(2)}=a_{ni}I(a_{ni}>1)\) for any \(n\ge 1\) and \(1\le i\le n\). Thus to prove (3), we need to prove

and

For fixed \(n\ge 1\), denote for \(1\le i\le n\) that

Noting that \(E|X|^{p}<\infty \) and \(EX_{ni}=0\), we derive from Lemma 2.3 (further from the Dominated Convergence Theorem if \(\gamma p=1\)) that

Similarly, noting that \(\max _{1\le i\le n}a_{ni}^{(2)}\le n^{1/\alpha }\) and \(\gamma \ge 1/p>1/\alpha \), we obtain that

Therefore, \(\max _{1\le k\le n}\left| \sum _{i=1}^{k}EY_{ni}^{(j)}\right| \le \varepsilon n^{\gamma }/2\) for all n large enough and \(j=1,2\). We first prove (47). Recall that \(\sum _{n=1}^{\infty }n^{\gamma p-1}P(|X|>n^{\gamma })<\infty \) is equivalent to \(E|X|^{p}<\infty \). If \(p\ge 2\), it follows from (1.2), Lemma 2.3, \(C_{r}\)-inequality and Jensen’s inequality that for any \(r>\max \{p,(\gamma p-1)/(\gamma -1/2)\}\),

For \(I_{1}\), since \(\sum _{n=1}^{\infty }n^{\gamma p-1}P(|X|>n^{\gamma })<\infty \) is equivalent to \(E|X|^{p}<\infty \), we derive from the proof of (46) that

For \(I_{2}\), noting that \(r>(\gamma p-1)/(\gamma -1/2)\), \(|Y_{ni}^{(1)}|\le |a_{ni}^{(1)}X_{ni}|\) and that \(E|X|^{p}<\infty \) implies \(EX^{2}<\infty \) according to Hölder’s inequality, we have that

If \(1\le p<2\), the proof of the desired result (47) is similar to that of (49) and \(I_{1}\) with \(r=2\); hence the details are omitted. It remains to prove (48). We again deal with the case \(p\ge 2\) first. It follows from (1.2), Lemma 2.1, \(C_{r}\)-inequality and Jensen’s inequality that for \(r>\max \{\alpha ,(\gamma p-1)/(\gamma -1/2)\}\),

It follows from Lemmas 2.1 and 2.2 that

On the other hand, noting that \(|Y_{ni}^{(2)}|\le |a_{ni}^{(2)}X_{ni}|\), we have

Thus, (48) follows from (50)–(52) immediately.

If \(1\le p<2\), we can assume without loss of generality that \(p<\alpha <2\) and choose \(r=2\), so that \(\alpha <r\). The proof of (48) is then similar to that of (50) and \(J_{1}\) with \(r=2\). This completes the proof of the lemma. \(\square \)

Proof of Lemma 2.5

Assume without loss of generality that \(a_{ni}\ge 0\) for all \(1\le i\le n\) and \(n\ge 1\) and \(\sum _{i=1}^{n}|a_{ni}|^{\alpha }\le n\). It follows from Hölder’s inequality that \(\sum _{i=1}^{n}|a_{ni}|^{s}\le n\) and \(\sum _{i=1}^{n}|a_{ni}|^{r}\le n^{r/\alpha }\) for any \(0<s<\alpha <r\). To prove (4), we consider the following three cases.
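As a side check before the case analysis, the two coefficient bounds stated above can be verified directly from the normalization \(\sum _{i=1}^{n}|a_{ni}|^{\alpha }\le n\). The following is a sketch rather than the authors' own derivation: the first line is Hölder's inequality with exponents \(\alpha /s\) and \(\alpha /(\alpha -s)\), while the second uses the elementary inequality \(\sum _{i}x_{i}^{t}\le (\sum _{i}x_{i})^{t}\) for \(x_{i}\ge 0\) and \(t\ge 1\), applied with \(x_{i}=|a_{ni}|^{\alpha }\) and \(t=r/\alpha \).

\[
\sum _{i=1}^{n}|a_{ni}|^{s}\le \Big (\sum _{i=1}^{n}|a_{ni}|^{\alpha }\Big )^{s/\alpha }\Big (\sum _{i=1}^{n}1\Big )^{1-s/\alpha }\le n^{s/\alpha }\,n^{1-s/\alpha }=n,\qquad 0<s<\alpha ,
\]

\[
\sum _{i=1}^{n}|a_{ni}|^{r}=\sum _{i=1}^{n}\big (|a_{ni}|^{\alpha }\big )^{r/\alpha }\le \Big (\sum _{i=1}^{n}|a_{ni}|^{\alpha }\Big )^{r/\alpha }\le n^{r/\alpha },\qquad r>\alpha .
\]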

Case 1 \(0< p<\alpha \le 2\)

Noting that \(\max _{1\le i\le n}|X_{i}|\le Cn^{\delta }\) \(\mathrm{a.s.}\), we derive from (1) that for \(r>\max \{2,1/(1/p-1/\alpha -\delta )\}\),

Case 2 \(2<\alpha \le 2p/(2-p)\)

Noting that \(\alpha p/(\alpha -p)\ge 2\) and \(\sup _{n\ge 1}E|X_{n}|^{\alpha p/(\alpha -p)}<\infty \), we can obtain that \(\sup _{n\ge 1}EX_{n}^{2}<\infty \). For \(r>\max \{\alpha ,1/(1/p-1/\alpha -\delta ),1/(1/p-1/2)\}\), we obtain that

Case 3 \(\alpha >2p/(2-p)\)

Let \(r>\max \{\alpha ,1/(1/p-1/\alpha -\delta )\}\). Noting that \(\alpha p/(\alpha -p)<2\) and \(0\le \delta <(1/p-1/\alpha )\), we obtain that

Consequently, the desired result (4) follows from the Borel–Cantelli lemma. The proof is completed. \(\square \)

Cite this article

Wu, Y., Wang, X., Hu, S. et al. Weighted version of strong law of large numbers for a class of random variables and its applications. TEST 27, 379–406 (2018). https://doi.org/10.1007/s11749-017-0550-6

DOI: https://doi.org/10.1007/s11749-017-0550-6

Keywords

- Strong law of large numbers

- Rosenthal-type inequality

- Double index weight

- Nonparametric regression model

- Simple linear errors-in-variables model

- Strong consistency