Abstract

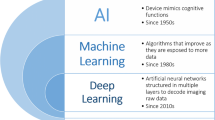

Artificial intelligence (AI) is a branch of informatics that uses algorithms to tirelessly process data, understand its meaning and provide the desired outcome, continuously redefining its logic. AI was mainly introduced via artificial neural networks, developed in the early 1950s, and their evolution into "computational learning models." Machine Learning (ML) analyzes large datasets and extracts features from them after exposure to training examples; Deep Learning (DL) uses multilayered neural networks to extract meaningful patterns from imaging data, even deciphering patterns that would otherwise be beyond human perception. Thus, AI has the potential to revolutionize healthcare systems and the clinical practice of doctors all over the world. This is especially true for radiologists, who are integral to diagnostic medicine and help to customize treatments and triage resources with maximum effectiveness. Related in spirit to AI are Augmented Reality, mixed reality, and Virtual Reality, which can enhance the accuracy of minimally invasive, image-guided treatments performed by interventional radiologists. The potential applications of AI in Interventional Radiology (IR) go beyond computer vision and diagnosis to include screening and modeling for patient selection, predictive tools for treatment planning and navigation, and training tools. Although no new technology is universally embraced, AI may provide opportunities to enhance radiology services and improve patient care, if studied, validated, and applied appropriately.

Introduction

Artificial intelligence (AI) is a methodology of computer engineering that uses algorithms to tirelessly process data, understand its meaning and provide the desired outcome, continuously redefining its logic and selecting the optimal pathway to address the applied question.

Although its official birth is usually dated to 1955, when John McCarthy first defined it as “the science of making intelligent machines” [1], AI may have its roots in the invention of robots, the first mention of which can be traced back to the third century in China. Over a millennium later, during the Renaissance, Leonardo da Vinci made a detailed study of human anatomy and designed a humanoid robot. His sketches, rediscovered only in the 1950s, were in fact a source of inspiration, even leading to the invention of the surgical system that bears the da Vinci name, which facilitates complex surgery with a minimally invasive approach and can be controlled by a surgeon from a remote console.

Over the years, AI development has relied on the introduction of artificial neural networks (ANNs) in the early 1950s and their subsequent evolution into "computational learning models," including Machine Learning (ML) and Deep Learning (DL).

ML is based on algorithms that can improve task performance and decision making or predict outcomes. Specifically, during a preliminary training period in which they are exposed to examples, ML algorithms learn to focus on specific or characteristic features and to extract meaningful patterns from data, even detecting features that are beyond human perception [2].

DL is based on multilayered, or “deep,” artificial neural networks: the numerous layers between input and output contribute to the plasticity of DL and offer the potential to define new patterns of intelligent classification, loosely mimicking the mechanisms of the human brain. Unlike conventional ML, DL can automatically discern the relevant features from data, even unlabeled data (often through an unknown mechanism or overall rationale), allowing it to learn new patterns and to determine more complex relationships. This is valuable compared with a human reader, who can detect and use only a fraction of the total information content of digital images [3].
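To make the idea of stacked layers more concrete, the following is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The layer sizes, weights and input features are hypothetical and chosen only for illustration; the network is untrained, and real imaging DL models use convolutional layers, millions of learned parameters and dedicated frameworks.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Layer = Tuple[Sequence[Sequence[float]], Sequence[float]]  # (weight rows, biases)


def dense(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> Vector:
    """One fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Sequence[float]) -> Vector:
    """Non-linearity applied between layers; without it, stacked layers collapse into one."""
    return [max(0.0, a) for a in v]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(x: Sequence[float], layers: Sequence[Layer]) -> float:
    """Pass the input through every hidden layer (ReLU), then one sigmoid output unit."""
    h = list(x)
    for weights, bias in layers[:-1]:
        h = relu(dense(h, weights, bias))
    out_w, out_b = layers[-1]
    return sigmoid(dense(h, out_w, out_b)[0])


# Hypothetical weights for a 3-input -> 4 -> 2 -> 1 network (illustrative only, not trained).
LAYERS: List[Layer] = [
    ([[0.2, -0.5, 0.1], [0.4, 0.3, -0.2], [-0.3, 0.8, 0.5], [0.1, 0.1, 0.1]], [0.0, 0.1, -0.1, 0.0]),
    ([[0.5, -0.4, 0.3, 0.2], [-0.2, 0.6, 0.1, -0.3]], [0.05, -0.05]),
    ([[1.2, -0.7]], [0.0]),
]

if __name__ == "__main__":
    features = [0.9, 0.2, 0.4]  # hypothetical normalized image-derived features
    print(f"output probability: {forward(features, LAYERS):.3f}")
```

Each additional hidden layer re-combines the outputs of the previous one, which is what allows deep networks to represent increasingly abstract features; in training, the weights would be learned from data rather than written by hand.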

Combining ML/DL image processing with clinical and pathology/histology data to find direct correlations between intrinsic Computed Tomography (CT) and Magnetic Resonance Imaging (MRI) patterns and specific pathology and histology subtypes is the purpose of a new and promising field of research called “Radiomics” [4,5,6].

Thus, AI is capable of globally revolutionizing healthcare and clinical practice, and it will inform, influence, and transform radiology more than most other medical disciplines. AI will augment and support radiologists rather than replace them, enhancing performance in terms of both speed and precision and improving the efficiency and accuracy of the diagnostic process. It will also help to customize treatments and use resources with maximum effectiveness, which are important priorities for interventional radiologists, who often diagnose and treat simultaneously, in real time. Moreover, novel navigational techniques that superimpose virtual pre-procedural 3D anatomic data onto real-world 2D visual images in real time, such as Augmented Reality (AR), multi-modality fusion, or Virtual Reality (VR), improve the accuracy of minimally invasive treatments and reduce risks, complications and radiation exposure, which are central goals of Interventional Radiology (IR) [7].

The purpose of this article is to emphasize how AI is relevant not only to Diagnostic Radiology but also to IR. The potential applications of AI in IR go beyond computer vision to include screening and modeling for patient selection; predictive and supportive tools for treatment planning and execution; intra-procedural registration, segmentation and navigation; and training. Their real-time use in the angiography suite, with the help of augmented/virtual reality, could improve patient selection, operator expertise, drug discovery, and the multidisciplinary delivery of tailor-made precision medicine.

Fields of application

Workflow solutions: patient scheduling, screening and counseling

AI may directly optimize the interventional radiologist’s daily practice through intelligent patient scheduling and selection, identifying high-risk patients beforehand so that steps can be taken to mitigate that risk, and reducing the likelihood of missed care [8].

In addition, AI algorithms can generate a relevant summary from the available patient records, including patient problem lists, clinical notes, laboratory data, pathology reports, vital signs, prior treatments, and prior imaging reports to give the radiologist the most pertinent contextual information during the evaluation of a patient for diagnosis or therapy [9, 10].

Intelligent algorithms have also been proposed for patient safety screening and reporting, with potential applications in radiology practice (for example, MRI safety screening or the administration of contrast material) [11].

Moreover, AR/VR can improve patient compliance by showing a simulation of the experience in the angiography suite, allowing patients to arrive calmer and more aware of what awaits them at the time of the procedure [12, 13].

Clinical and Imaging decision support tools for treatment planning

One of the hardest challenges for IR is the identification of an accurate way to predict the success rate of a specific treatment in a specific patient, and to estimate/forecast the outcomes and benefits of a treatment in advance. Similarly, big data science has attempted to investigate how a patient’s demographic and pathologic characteristics before the treatment can influence its efficacy [14].

ML- or DL-based predictive models can be trained to classify patients as responders or non-responders to specific treatments. Such models may help interventional radiologists overcome this patient selection challenge, especially in interventional oncology, with the potential to reduce unnecessary procedures and healthcare costs and to decrease the risk for the patient [15].
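As a rough illustration of how such a classifier learns from labeled examples, the sketch below fits a logistic regression (one of the simplest ML classifiers, used here as a stand-in; it is not the model of any study cited in this article) by batch gradient descent. The two features and the six "patients" are synthetic and hypothetical, and the function names are illustrative only.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def dot(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train_logistic(
    X: Sequence[Sequence[float]], y: Sequence[int], lr: float = 1.0, epochs: int = 5000
) -> Tuple[List[float], float]:
    """Fit logistic regression by batch gradient descent on the log-loss.

    Each epoch compares predictions with the known labels (the iterative
    feedback) and nudges the weights in the direction that reduces the error.
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(dot(w, xi) + b) - yi  # predicted probability minus true label
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def is_predicted_responder(w: Sequence[float], b: float, x: Sequence[float], threshold: float = 0.5) -> bool:
    return sigmoid(dot(w, x) + b) >= threshold


# Hypothetical, synthetic training set: two normalized pre-treatment features per
# patient (e.g. a lesion-size score and a liver-function score); 1 = responder.
X_TRAIN = [[0.1, 0.2], [0.2, 0.1], [0.3, 0.3], [0.8, 0.9], [0.9, 0.7], [0.7, 0.8]]
Y_TRAIN = [1, 1, 1, 0, 0, 0]

if __name__ == "__main__":
    w, b = train_logistic(X_TRAIN, Y_TRAIN)
    for patient in ([0.15, 0.25], [0.85, 0.75]):
        label = "responder" if is_predicted_responder(w, b, patient) else "non-responder"
        print(patient, label)
```

A real model would be trained on many more patients, validated on an independent cohort, and would typically report calibrated probabilities rather than a single hard threshold.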

From this perspective, both clinical and imaging decision support tools have been developed. Among clinical tools, ML has been used to evaluate the relative prognostic significance of the Child–Turcotte–Pugh score and the albumin–bilirubin grade, along with other risk factors, in a cohort of patients undergoing trans-arterial chemoembolization [16]. In addition, an ML algorithm based on serum creatinine values in electronic health records was able to predict acute kidney injury (AKI) up to 72 h before onset, potentially allowing clinicians to intervene before kidney damage manifests, for example in advance of diagnostic and interventional radiology procedures and treatments [17].

Among imaging decision support tools, image texture analysis has made it possible to extract “radiological biomarkers” that enable a prognostic pre-treatment evaluation.
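One widely used family of texture features is derived from the gray-level co-occurrence matrix (GLCM), which counts how often pairs of gray levels occur next to each other in a region of interest. The sketch below computes a GLCM for a single pixel offset and three classic Haralick-style statistics in plain Python. It assumes a region already quantized to a small number of gray levels; the region values and function names are hypothetical, and the cited studies are not claimed to use exactly these features.

```python
from typing import Dict, List, Sequence

Image = Sequence[Sequence[int]]


def glcm(image: Image, levels: int, dx: int = 1, dy: int = 0, symmetric: bool = True) -> List[List[float]]:
    """Normalized gray-level co-occurrence matrix for one pixel offset (dx, dy).

    Entry [i][j] is the probability that a pixel with gray level i has a
    neighbour at offset (dx, dy) with gray level j.
    """
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[i][j] += 1
                if symmetric:
                    counts[j][i] += 1  # count the pair in both directions
    total = sum(sum(row) for row in counts)
    if total == 0:
        raise ValueError("offset leaves no valid pixel pairs in this image")
    return [[v / total for v in row] for row in counts]


def texture_features(p: List[List[float]]) -> Dict[str, float]:
    """Three classic statistics of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    return {
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),        # local intensity variation
        "homogeneity": sum(v / (1 + abs(i - j)) for i, j, v in cells),  # mass near the diagonal
        "asm": sum(v * v for _, _, v in cells),                         # angular second moment (uniformity)
    }


if __name__ == "__main__":
    # Hypothetical 4x4 region of interest already quantized to 4 gray levels (0-3).
    roi = [[0, 0, 1, 1],
           [0, 0, 1, 1],
           [0, 2, 2, 2],
           [2, 2, 3, 3]]
    print(texture_features(glcm(roi, levels=4)))
```

In practice, features like these are computed over several offsets and directions on CT or MRI regions of interest and then passed, together with clinical variables, to an ML model.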

For instance, pre-ablation CT texture features may predict post-treatment local progression and survival in patients undergoing tumor ablation, using ML to identify specific CT texture patterns, as demonstrated by Daye et al. for adrenal metastases: during pre-procedural assessment, adding CT-derived texture features to clinical variables increased accuracy to more than 95% [18]. Similarly, using MRI texture features and the presence or absence of cirrhosis, Abajian et al. trained and evaluated an ML algorithm to predict chemoembolization outcomes in patients with hepatocellular carcinoma, obtaining a high negative predictive value (88.5%) [19].
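Metrics such as the negative predictive value (NPV) quoted above come from a confusion matrix that compares predictions with observed outcomes. The short sketch below shows the standard definitions; the labelling ("positive" means "predicted responder") and the counts are hypothetical assumptions for the example and do not reproduce the data of any cited study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionMatrix:
    """Counts from comparing a classifier's predictions with observed outcomes.

    In this example "positive" means "predicted responder"; that labelling
    is an assumption made for illustration.
    """
    tp: int  # predicted responder, actually responded
    fp: int  # predicted responder, did not respond
    tn: int  # predicted non-responder, did not respond
    fn: int  # predicted non-responder, actually responded

    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:
        """Positive predictive value: share of predicted responders who respond."""
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        """Negative predictive value: share of predicted non-responders who truly do not respond."""
        return self.tn / (self.tn + self.fn)


if __name__ == "__main__":
    cm = ConfusionMatrix(tp=30, fp=20, tn=45, fn=5)  # hypothetical counts, 100 patients
    print(f"NPV = {cm.npv():.1%}, PPV = {cm.ppv():.1%}")
```

Under this labelling, a high NPV means that when the model predicts that a patient will not respond, that prediction is usually correct, whereas the PPV describes how reliable its positive predictions are; both depend on the prevalence of responders in the studied cohort.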

Additionally, in Neuroradiology, AI algorithms have been used to assist not only the diagnosis but especially the treatment decisions in acute ischemic stroke: estimating the time of onset, one of the most relevant clinical criteria for deciding whether a patient is eligible for thrombolytic treatment [20, 21]; segmenting the ischemic lesion [22]; and identifying salvageable tissue, which is essential for decision making before endovascular treatment [23, 24]. AI has also aided the analysis of cerebral edema [25, 26]; the prediction of complications, such as hemorrhagic transformation after treatment [27,28,29], and of patient outcomes; and the identification of factors that contribute to neurological deterioration and increased morbidity, or that predict motor deficit in stroke patients after treatment [30,31,32,33].

However, AI is not able to provide an explanation of the underlying cause and pathophysiology: more complex interpretation problems like these require deductive reasoning, medical knowledge and mechanisms to integrate the findings within a clinical, personal, and societal context. This is why the holistic human intelligence and the medical judgment of interventional radiologists will be augmented and cannot be completely replaced [34].

Image guidance: pre-procedural assessment and intraprocedural supportive tools

First of all, during pre-procedural assessment in a procedure room setting such as the angiography suite, it is critical to evaluate the anatomy and its pathophysiologic changes. This is where Augmented Reality (AR) and Virtual Reality (VR) may find their best application: through advanced 3D rendering and manipulation of imaging in space, AR and VR allow operators to conceptualize difficult anatomy, increase realism in procedural planning (compared with standard 2D images), and improve procedural skills beforehand in a simulated environment, with no risk to patients [12].

Furthermore, typical AI tasks, such as semi-automatic detection of specific patterns and segmentation of organs or lesions, may be applied to preoperative imaging. This approach is already used in breast, lung, liver and prostate cancer diagnosis, but it is also valuable for interventional radiologists, for example in the biologic evaluation of an atherosclerotic plaque, which may allow the treatment technique to be adapted [35].

For intra-procedural guidance, AI finds application in a wide range of settings:

-

Automation of protocols, based on information gathered from electronic health records and tailored to optimize radiation exposure faster and automatically [36].

-

Real-time evaluation for proper catheter, sheath, or implant selection, such as stents or prostheses to treat stenotic vascular lesions or aortic aneurysms [37].

-

Prediction of ischemia-related lesions, from quantitative CT angiography, for instance in myocardial or diabetic foot treatment [38, 39].

-

Real-time estimation of fractional flow reserve, based on fluoroscopic imaging, for identifying ischemia-related stenosis in coronary artery or venous disease [40].

-

Automatic vessel analysis using intravascular ultrasound (IVUS): in complex cases of peripheral vascular disease, it aids the analysis of vessel size, lesion characteristics and potential post-treatment effects.

-

Fusion of pre-procedure 3D images onto intraoperative 2D images: synchronizing MRI, CT or Cone Beam CT (CBCT) cross-sectional preoperative imaging with intraoperative real-time fluoroscopic or ultrasound images allows more precise guidance for biopsies [41,42,43,44,45] and local image-guided therapies [46, 47] (Figs. 1, 2, 3) and improves problem solving during procedures [48,49,50]. Moreover, a virtual angiography [51] or “angioscopy” can be generated from CT images alone or in combination with MRI data, which could be a valuable procedure planning tool for interventional radiologists, for example for endovascular aortic repair (Figs. 4, 5).

Fig. 1

Fig. 2 Automatic 3D detection of arterial bleeding of the leg in a trauma patient. (a) CTA reveals arterial bleeding of the leg. (b) Initial 2D angiography does not demonstrate bleeding. (c) Target segmentation was performed on the CTA dataset after CBCT-CT fusion; the 3D CBCT datasets were then synchronized with the C-arm and overlaid on live fluoroscopy during the intervention to facilitate catheter navigation to the damaged vessel. (d) Once the culprit artery was engaged, a selective angiogram was performed to confirm correct targeting of the bleeding site.

Fig. 3

Fig. 4

-

Augmented Reality and AI-based navigation systems: by superimposing virtual 3D anatomic data, acquired using CT, CBCT or MR, onto real-world 2D visual images in real time, planning software generates a virtual device trajectory that is overlaid onto the visual surface anatomy, facilitating accurate visual navigation, theoretically without the need for fluoroscopy [7, 52,53,54]. Integrated AI matching software enables automatic landmark recognition and motion compensation using fiducial markers linked by a computer algorithm. This system is already applied in a range of minimally invasive procedures, including lesion targeting/localization, spinal/para-spinal injections, arthrograms, tumor ablation, bone biopsies and, more recently, vertebroplasty, with reduced patient radiation exposure compared with fluoroscopy alone [7, 47].

-

Automatic tools for multi-modality registration and segmentation, as an enhanced and more ergonomic approach to fusion-guided procedures such as biopsy and ablation. These tools would enhance standardization and normalization, potentially enabling less experienced interventional radiologists to perform more advanced procedures by reproducing the skills and techniques of experts.

-

Touchless interaction devices directly correlate pre-procedural, patient-specific and literature-derived information, reducing distraction, errors, and procedure time. These include eye-tracking systems, inertial sensors, cameras or webcams, and voice-driven smart assistants. A group of researchers from the University of California is even studying a previously instructed intelligent assistant that the operator can question during a specific procedure to obtain suggestions on which device is most appropriate before removing it from its sterile packaging, to check its availability in the hospital stock, or to compare its cost with that of another device. Such an application could allow the operator to choose between two devices based not only on their appropriateness for the treatment but also on outcome data or patient-specific anatomy, saving time and resources [55].
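Several of the fusion and navigation tools listed above rely on the same geometric step: once pre-procedural 3D data have been registered to the procedure room, a 3D target is projected onto the 2D image so that it can be overlaid. The sketch below shows this step under idealized assumptions: a rigid registration (rotation R, translation t) that is already known, for example from fiducial markers, and a distortion-free pinhole (perspective) projection. All function names and numbers are hypothetical, and commercial systems are not claimed to work exactly this way.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Sequence[Sequence[float]]


def rotation_about_z(angle_rad: float) -> Tuple[Vec3, Vec3, Vec3]:
    """3x3 rotation matrix about the z axis (used here only to build test cases)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def project_to_image(
    point_ct: Vec3, R: Mat3, t: Vec3, focal_px: float, cx: float, cy: float
) -> Tuple[float, float]:
    """Map a 3D point from pre-procedural image space to 2D pixel coordinates.

    1. Rigid registration: X = R p + t moves the point into the imaging frame
       (X-ray source or camera at the origin, z axis toward the detector).
    2. Perspective projection: u = f X / Z + cx, v = f Y / Z + cy.
    """
    x, y, z = (sum(R[i][k] * point_ct[k] for k in range(3)) + t[i] for i in range(3))
    if z <= 0:
        raise ValueError("point lies behind the projection source")
    return focal_px * x / z + cx, focal_px * y / z + cy


if __name__ == "__main__":
    identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
    # Hypothetical target segmented at (10, -20, 0) mm, 1000 mm from the source.
    u, v = project_to_image((10.0, -20.0, 0.0), identity, (0.0, 0.0, 1000.0), 1000.0, 512.0, 512.0)
    print(f"overlay target at pixel ({u:.1f}, {v:.1f})")
```

Real C-arm and AR systems additionally calibrate the projection geometry, correct detector or lens distortion, and update R and t continuously to compensate for patient motion.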

Other hypothetical examples could be ML models that analyze the relationships between catheter or needle position, therapeutic effect and patient outcomes; particularly in the field of ablative therapies, this would allow for the estimation of ablation margins, optimal probe placement and selection of energy settings, potentially even minimizing risk of damage to nearby structures [1, 56].
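Before a model can learn to estimate ablation margins, the margin itself has to be quantified. The sketch below is a deliberately simplified geometric version, not an ML model: it assumes that the ablation zone can be approximated by a sphere (center and radius in mm) and that the tumor is represented by points sampled on its segmented surface. The function and parameter names are hypothetical; clinical margin assessment uses the actual segmented ablation zone, usually after registration of pre- and post-ablation images.

```python
import math
from typing import Iterable, Tuple

Point3 = Tuple[float, float, float]


def minimal_margin_mm(tumor_surface: Iterable[Point3], zone_center: Point3, zone_radius_mm: float) -> float:
    """Smallest distance between the tumor surface and a spherical ablation-zone boundary.

    For a point inside a sphere, the distance to the boundary is R - |p - c|,
    so the minimal margin over all tumor points is R - max |p - c|.
    Positive: the whole tumor lies inside the zone with at least this margin.
    Negative: part of the tumor extends beyond the zone by this amount.
    """
    distances = [math.dist(p, zone_center) for p in tumor_surface]
    if not distances:
        raise ValueError("tumor surface must contain at least one point")
    return zone_radius_mm - max(distances)


if __name__ == "__main__":
    # Hypothetical tumor surface points (mm) around a lesion of about 10 mm radius.
    tumor = [(10.0, 0.0, 0.0), (-10.0, 0.0, 0.0), (0.0, 10.0, 0.0), (0.0, 0.0, -10.0)]
    print(minimal_margin_mm(tumor, zone_center=(2.0, 0.0, 0.0), zone_radius_mm=15.0))
```

In the hypothetical usage above, the probe is placed 2 mm off-center, so the margin shrinks on the opposite side of the tumor; a predictive model of the kind described would aim to anticipate such margins, and the best probe position, before the ablation is delivered.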

Training and education

Machine learning combined with augmented reality systems could give trainees a new platform for honing their procedural skills, leading to novel ways of assessing performance and improving competency [37]. In particular, AR and VR applications have been shown to improve upon current training methods in motivation, interactivity and learning of material: advanced 3D rendering and manipulation of imaging in space allow trainees to conceptualize anatomy and to improve procedural skills in a simulated environment, with no risk to patients. Moreover, VR increases realism for trainees in procedural planning compared with standard 2D images and improves multidisciplinary communication with remote colleagues [12].

Limitations

Despite continuous progress over the past decades, AI has not reached, and may never reach, the holistic intelligence of humans. AI algorithms are intensely data-hungry, and such data can be difficult to obtain; training can be time-consuming and expensive, and it requires the clinically relevant question to be posed. Training deep learning models may require large volumes of human-annotated ground truth, and data curation may be a hurdle.

Whereas humans can learn to recognize an object from a single simple observation, even without feedback, AI software may need to see the same object hundreds of thousands of times to recognize it, with constant iterative feedback indicating whether it has guessed correctly.

One of the main weaknesses of AI systems is their lack of real-life experience, which is necessary to make them reliable for realistic questions and capable of abstraction and generalization. Moreover, clinical practice is studded with atypical presentations of disease that current AI systems might misdiagnose, because no finite training set can fully represent the variety of obscure or rare cases seen in real clinical practice. Rare findings are a particular weakness, because there are too few examples of them to learn from.

In addition, more complex problems of radiological interpretation typically require deductive reasoning, knowledge of disease processes and selective integration of information from previous examinations or patient records, and currently no learning system is capable of this most complex integration of thinking [3]. In fact, some clinical problems that may seem solvable in theory are intractable for computers in practice. Beyond the pre-treatment decision-making process, interventional radiologists perform sophisticated procedures that require technical expertise and instant intra-procedural decisions; in these situations, even the fastest computer can get stuck in an infinite loop or take an unimaginably long time to produce an accurate decision or result [34].

A further limitation of AI, in particular of DL algorithms, is their relative opacity: it remains difficult to clarify their mechanisms or what the different parts of a large network do. This makes it difficult to delineate the limits of the network or to debug errors in image interpretation.

Ethical issues, privacy, and data sharing related to the use of patient data for training AI models are timely and relevant concerns, and they vary by geography. Although AI might improve the reproducibility or standardization of specific medical practices, physicians must take responsibility for the medical diagnosis and treatment provided to patients. The clinical advice provided by AI software should always be reviewed by an experienced healthcare professional, who may or may not approve the recommendation provided.

In fact, AI applications could produce significant ethical, legal and governance problems in the healthcare sector if they cause abrupt disruptions to its contextual integrity and relational dynamics.

The current regulation of AI in healthcare is fragmented across miscellaneous guidelines and subject to the discretion of the authorities; EU policy follows three Directives on medical devices: Council Directive 93/42/EEC concerning medical devices [57], Council Directive 90/385/EEC on the approximation of the laws of the Member States relating to active implantable medical devices [58], and Directive 98/79/EC of the European Parliament and of the Council on in vitro diagnostic medical devices [59]. Many different data sharing and privacy policies govern how different geographies interact. Federated learning might overcome some of these challenges, because AI model weights are exchanged instead of the actual private or identifiable patient imaging data.
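The core of federated learning can be illustrated with the federated averaging (FedAvg) aggregation rule: each hospital trains the same model architecture on its own data and shares only the resulting parameter vector, which a coordinating server averages, weighting each site by its number of training cases. The sketch below is a minimal, hypothetical version of one aggregation round; the function name and numbers are illustrative, and production frameworks add multiple training rounds, secure aggregation and further privacy protections.

```python
from typing import List, Sequence


def federated_average(client_weights: Sequence[Sequence[float]], client_sizes: Sequence[int]) -> List[float]:
    """Combine locally trained model weights into one global model (FedAvg-style).

    Each site's parameter vector is weighted by its number of local training
    cases; only these numbers leave the site, never the patient images.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one weight vector per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of training cases must be positive")
    n_params = len(client_weights[0])
    global_weights = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        if len(weights) != n_params:
            raise ValueError("all clients must share the same model architecture")
        for i, w in enumerate(weights):
            global_weights[i] += w * size / total
    return global_weights


if __name__ == "__main__":
    # Hypothetical round with three hospitals and a 3-parameter model.
    hospital_updates = [[0.2, -1.0, 0.5], [0.4, -0.8, 0.3], [0.1, -1.2, 0.6]]
    cases_per_hospital = [100, 300, 600]
    print(federated_average(hospital_updates, cases_per_hospital))
```

The averaged weights would then be sent back to every site as the starting point for the next round of local training.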

Given these limitations, today's AI systems are designed as auxiliary tools for radiologists rather than replacements; decision making in IR is likely to remain a matter of natural intelligence for radiologists, or for a multidisciplinary team supported by AI, with physicians taking full responsibility and considering the input from the AI without necessarily following it in a prescriptive manner [60].

Technology remains neutral and is only as useful as the human guidance used to annotate the correct data or to ask the appropriate questions of it. Since the data used to train ML and DL algorithms are generated by humans, their utility ultimately depends on how the algorithms are designed and implemented.

Conclusions

Although concerns have been expressed about the impact of AI on the radiology profession, AI is not actually a threat but a great opportunity. Used correctly, it could augment the conventional practice of both Diagnostic and Interventional Radiology. Although futuristic and speculative, AI combined with Augmented Reality/Virtual Reality could help interventional radiologists make faster, more accurate, efficient and cost-effective decisions on diagnosis, planning and treatment management, by allowing earlier disease identification and earlier, less invasive treatment, thus improving patient care and satisfaction.

Human and medical judgment, and even more empathy and compassion in communicating critical findings (upon which millions of patients rely in their medical care), are difficult to quantify and even more difficult, or even impossible, to simulate.

Therefore, modern radiologists need to be aware of the basic principles of AI; thanks to their ability to adapt and innovate, they will remain essential for medical practice and take a more decisive role in the process of digitization and personalization of medicine.

Essentials

-

AI can improve patient compliance by using AR/VR to show a simulation of the experience in the angiography suite, making patients aware of what awaits them at the time of the procedure.

-

Using ML and DL algorithms, AI models may predict the success rate of a specific treatment in a specific patient and estimate the outcomes, cost efficiency and benefits of a treatment before it is performed, for example by analyzing demographic and pathologic characteristics in advance.

-

During pre-procedural assessment in the IR/angiography suite, advanced 3D rendering and manipulation of imaging in space allow operators to conceptualize difficult anatomy, increase realism in procedural planning compared with standard 2D images, and improve procedural skills beforehand in a simulated environment, with no risk to patients.

-

One of the main weaknesses of AI systems is the lack of a clear mechanistic rationale and of real-life experience, together with a limited capability to abstract and generalize. Atypical presentations of disease could be misdiagnosed by AI systems, because no finite training set can fully represent the variety of cases seen in clinical practice.

-

Radiologists and interventional radiologists should embrace AI tools that augment the practicality, quality, and efficiency of their practice and reject any AI model that does not.

References

Hamet P, Tremblay J (2017) Artificial intelligence in medicine. Metabolism 69:S36–S40. https://doi.org/10.1016/j.metabol.2017.01.011

Erickson BJ, Korfiatis P, Akkus Z, Kline TL (2017) Machine learning for medical imaging. Radiographics 37:505–515. https://doi.org/10.1148/rg.2017160130

Chartrand G, Cheng PM, Vorontsov E et al (2017) Deep learning: A primer for radiologists. Radiographics 37:2113–2131. https://doi.org/10.1148/rg.2017170077

Aerts HJWL (2016) The potential of radiomic-based phenotyping in precision medicine: a review. JAMA Oncol 2:1636–1642

Kumar V, Gu Y, Basu S et al (2013) Radiomics: the process and the challenges. Magn Reson Imaging 30:1234–1248. https://doi.org/10.1016/j.mri.2012.06.010

Lambin P, Rios-Velazquez E, Leijenaar R et al (2012) Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer. https://doi.org/10.1016/j.ejca.2011.11.036

Auloge P, Cazzato RL, Ramamurthy N et al (2019) Augmented reality and artificial intelligence-based navigation during percutaneous vertebroplasty: a pilot randomised clinical trial. Eur Spine J. https://doi.org/10.1007/s00586-019-06054-6

Glover M, Daye D, Khalilzadeh O et al (2017) Socioeconomic and demographic predictors of missed opportunities to provide advanced imaging services. J Am Coll Radiol. https://doi.org/10.1016/j.jacr.2017.05.015

Iannessi A, Marcy PY, Clatz O et al (2018) A review of existing and potential computer user interfaces for modern radiology. Insights Imaging 9:599–609

Erdal BS, Prevedello LM, Qian S et al (2018) Radiology and enterprise medical imaging extensions (REMIX). J Digit Imaging. https://doi.org/10.1007/s10278-017-0010-6

Choy G, Khalilzadeh O, Michalski M et al (2018) Current applications and future impact of machine learning in radiology. Radiology 288:318–328. https://doi.org/10.1148/radiol.2018171820

Uppot RN, Laguna B, McCarthy CJ et al (2019) Implementing virtual and augmented reality tools for radiology education and training, communication, and clinical care. Radiology 291:570–580. https://doi.org/10.1148/radiol.2019182210

Ventura S, Baños RM, Botella C (2018) Virtual and augmented reality: new frontiers for clinical psychology. In: State of the art virtual reality and augmented reality knowhow

Letzen B, Wang CJ, Chapiro J (2019) The role of artificial intelligence in interventional oncology: a primer. J Vasc Interv Radiol 30:38–41.e1. https://doi.org/10.1016/j.jvir.2018.08.032

Iezzi R, Goldberg SN, Merlino B et al (2019) Artificial intelligence in interventional radiology: a literature review and future perspectives. J Oncol. https://doi.org/10.1155/2019/6153041

Zhong BY, Ni CF, Ji JS et al (2019) Nomogram and artificial neural network for prognostic performance on the albumin-bilirubin grade for hepatocellular carcinoma undergoing transarterial chemoembolization. J Vasc Interv Radiol. https://doi.org/10.1016/j.jvir.2018.08.026

Mohamadlou H, Lynn-Palevsky A, Barton C et al (2018) Prediction of acute kidney injury with a machine learning algorithm using electronic health record data. Can J Kidney Heal Dis. https://doi.org/10.1177/2054358118776326

Daye D, Staziaki PV, Furtado VF et al (2019) CT texture analysis and machine learning improve post-ablation prognostication in patients with adrenal metastases: a proof of concept. Cardiovasc Intervent Radiol 42:1771–1776. https://doi.org/10.1007/s00270-019-02336-0

Abajian A, Murali N, Savic LJ et al (2018) Predicting treatment response to intra-arterial therapies for hepatocellular carcinoma with the use of supervised machine learning: an artificial intelligence concept. J Vasc Interv Radiol. https://doi.org/10.1016/j.jvir.2018.01.769

Thomalla G, Simonsen CZ, Boutitie F et al (2018) MRI-Guided thrombolysis for stroke with unknown time of onset. N Engl J Med. https://doi.org/10.1056/NEJMoa1804355

Ho KC, Speier W, El-Saden S, Arnold CW (2017) Classifying acute ischemic stroke onset time using deep imaging features. AMIA Annu Symp Proc

Chen L, Bentley P, Rueckert D (2017) Fully automatic acute ischemic lesion segmentation in DWI using convolutional neural networks. NeuroImage Clin. https://doi.org/10.1016/j.nicl.2017.06.016

Bouts MJRJ, Tiebosch IACW, Van Der Toorn A et al (2013) Early identification of potentially salvageable tissue with MRI-based predictive algorithms after experimental ischemic stroke. J Cereb Blood Flow Metab. https://doi.org/10.1038/jcbfm.2013.51

Huang S, Shen Q, Duong TQ (2011) Quantitative prediction of acute ischemic tissue fate using support vector machine. Brain Res. https://doi.org/10.1016/j.brainres.2011.05.066

Chen Y, Dhar R, Heitsch L et al (2016) Automated quantification of cerebral edema following hemispheric infarction: application of a machine-learning algorithm to evaluate CSF shifts on serial head CTs. NeuroImage Clin. https://doi.org/10.1016/j.nicl.2016.09.018

Dhar R, Chen Y, An H, Lee JM (2018) Application of machine learning to automated analysis of cerebral edema in large cohorts of ischemic stroke patients. Front Neurol. https://doi.org/10.3389/fneur.2018.00687

Bentley P, Ganesalingam J, Carlton Jones AL et al (2014) Prediction of stroke thrombolysis outcome using CT brain machine learning. NeuroImage Clin. https://doi.org/10.1016/j.nicl.2014.02.003

Scalzo F, Alger JR, Hu X et al (2013) Multi-center prediction of hemorrhagic transformation in acute ischemic stroke using permeability imaging features. Magn Reson Imaging. https://doi.org/10.1016/j.mri.2013.03.013

Yu Y, Guo D, Lou M et al (2018) Prediction of hemorrhagic transformation severity in acute stroke from source perfusion MRI. IEEE Trans Biomed Eng. https://doi.org/10.1109/TBME.2017.2783241

Nielsen A, Hansen MB, Tietze A, Mouridsen K (2018) Prediction of tissue outcome and assessment of treatment effect in acute ischemic stroke using deep learning. Stroke. https://doi.org/10.1161/STROKEAHA.117.019740

Asadi H, Dowling R, Yan B, Mitchell P (2014) Machine learning for outcome prediction of acute ischemic stroke post intra-arterial therapy. PLoS ONE. https://doi.org/10.1371/journal.pone.0088225

Forkert ND, Verleger T, Cheng B et al (2015) Multiclass support vector machine-based lesion mapping predicts functional outcome in ischemic stroke patients. PLoS ONE. https://doi.org/10.1371/journal.pone.0129569

Rondina JM, Filippone M, Girolami M, Ward NS (2016) Decoding post-stroke motor function from structural brain imaging. NeuroImage Clin. https://doi.org/10.1016/j.nicl.2016.07.014

Pesapane F, Tantrige P, Patella F et al (2020) Myths and facts about artificial intelligence: why machine- and deep-learning will not replace interventional radiologists. Med Oncol 37:40. https://doi.org/10.1007/s12032-020-01368-8

Saba L, Sanfilippo R, Tallapally N et al (2011) Evaluation of carotid wall thickness by using computed tomography and semiautomated ultrasonographic software. J Vasc Ultrasound. https://doi.org/10.1177/154431671103500302

Lakhani P, Prater AB, Hutson RK et al (2018) Machine learning in radiology: applications beyond image interpretation. J Am Coll Radiol. https://doi.org/10.1016/j.jacr.2017.09.044

Meek RD, Lungren MP, Gichoya JW (2019) Machine learning for the interventional radiologist. Am J Roentgenol 213:782–784. https://doi.org/10.2214/AJR.19.21527

Dey D, Gaur S, Ovrehus KA et al (2018) Integrated prediction of lesion-specific ischaemia from quantitative coronary CT angiography using machine learning: a multicentre study. Eur Radiol. https://doi.org/10.1007/s00330-017-5223-z

Gurgitano M, Signorelli G, Rodà GM et al (2020) Use of perfusional CBCT imaging for intraprocedural evaluation of endovascular treatment in patients with diabetic foot: a concept paper. Acta Biomed 1:1. https://doi.org/10.23750/abm.v91i10-S.10267

Cho H, Lee JG, Kang SJ et al (2019) Angiography-based machine learning for predicting fractional flow reserve in intermediate coronary artery lesions. J Am Heart Assoc. https://doi.org/10.1161/JAHA.118.011685

Floridi C, Muollo A, Fontana F et al (2014) C-arm cone-beam computed tomography needle path overlay for percutaneous biopsy of pulmonary nodules. Radiol Med. https://doi.org/10.1007/s11547-014-0406-z

Angileri SA, Di Meglio L, Petrillo M et al (2020) Software-assisted US/MRI fusion-targeted biopsy for prostate cancer. Acta Biomed 1:1. https://doi.org/10.23750/abm.v91i10-S.10273

Rotolo N, Floridi C, Imperatori A et al (2016) Comparison of cone-beam CT-guided and CT fluoroscopy-guided transthoracic needle biopsy of lung nodules. Eur Radiol 26:381–389. https://doi.org/10.1007/s00330-015-3861-6

Fontana F, Piacentino F, Ierardi AM et al (2021) Comparison between CBCT and fusion PET/CT-CBCT guidance for lung biopsies. Cardiovasc Intervent Radiol. https://doi.org/10.1007/s00270-020-02613-3

Floridi C, Carnevale A, Fumarola EM et al (2019) Percutaneous lung tumor biopsy under CBCT guidance with PET-CT fusion imaging: preliminary experience. Cardiovasc Intervent Radiol. https://doi.org/10.1007/s00270-019-02270-1

Pandolfi M, Liguori A, Gurgitano M et al (2020) Prostatic artery embolization in patients with benign prostatic hyperplasia: perfusion cone-beam CT to evaluate planning and treatment response. Acta Biomed 1:1. https://doi.org/10.23750/abm.v91i10-S.10260

Floridi C, Radaelli A, Pesapane F et al (2017) Clinical impact of cone beam computed tomography on iterative treatment planning during ultrasound-guided percutaneous ablation of liver malignancies. Med Oncol. https://doi.org/10.1007/s12032-017-0954-x

Carrafiello G, Ierardi AM, Duka E et al (2016) Usefulness of cone-beam computed tomography and automatic vessel detection software in emergency transarterial embolization. Cardiovasc Intervent Radiol. https://doi.org/10.1007/s00270-015-1213-1

Carrafiello G, Ierardi AM, Radaelli A et al (2016) Unenhanced cone beam computed tomography and fusion imaging in direct percutaneous sac injection for treatment of type II endoleak: technical note. Cardiovasc Intervent Radiol. https://doi.org/10.1007/s00270-015-1217-x

Ierardi AM, Duka E, Radaelli A et al (2016) Fusion of CT angiography or MR angiography with unenhanced CBCT and fluoroscopy guidance in endovascular treatments of aorto-iliac steno-occlusion: technical note on a preliminary experience. Cardiovasc Intervent Radiol. https://doi.org/10.1007/s00270-015-1158-4

Han X (2017) MR-based synthetic CT generation using a deep convolutional neural network method. Med Phys. https://doi.org/10.1002/mp.12155

Abe Y, Sato S, Kato K et al (2013) A novel 3D guidance system using augmented reality for percutaneous vertebroplasty. J Neurosurg Spine. https://doi.org/10.3171/2013.7.SPINE12917

Fritz J, U-Thainual P, Ungi T et al (2012) Augmented reality visualization with image overlay for MRI-guided intervention: accuracy for lumbar spinal procedures with a 1.5-T MRI system. Am J Roentgenol 1:1. https://doi.org/10.2214/AJR.11.6918

Ierardi AM, Piacentino F, Giorlando F et al (2016) Cone beam computed tomography and its image guidance technology during percutaneous nucleoplasty procedures at L5/S1 lumbar level. Skeletal Radiol. https://doi.org/10.1007/s00256-016-2486-4

Seals K, Al-Hakim R, Mulligan P et al (2019) 03:45 PM Abstract No. 38 The development of a machine learning smart speaker application for device sizing in interventional radiology. J Vasc Interv Radiol 1:1. https://doi.org/10.1016/j.jvir.2018.12.077

Felfoul O, Mohammadi M, Taherkhani S et al (2016) Magneto-aerotactic bacteria deliver drug-containing nanoliposomes to tumour hypoxic regions. Nat Nanotechnol. https://doi.org/10.1038/nnano.2016.137

European Parliament (1993) Council Directive 93/42/EEC

EC (2000) Council Directive 90/385/EEC of 20 June 1990 on the approximation of the laws of the Member States relating to active implantable medical devices. Off J Eur Commun

European Parliament and Council of the European Union (1998) Directive 98/79/EC of the European Parliament and of the Council of 27 October 1998 on in vitro diagnostic medical devices. Off J Eur Commun

Neri E, Coppola F, Miele V et al (2020) Artificial intelligence: Who is responsible for the diagnosis? Radiol Med

Acknowledgements

Supported in part by the Intramural Research Program of the NIH and the NIAID Intramural Targeted Anti-COVID-19 Program. NIH and NVIDIA, Philips, Siemens, and Canon Medical have collaborations in this space or Cooperative Research and Development Agreements.

Funding

This study was not supported by any funding.

Author information

Contributions

Each author has contributed to conception and design, analysis and interpretation of the data, drafting of the article, critical revision and final approval.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical standards

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Gurgitano, M., Angileri, S.A., Rodà, G.M. et al. Interventional Radiology ex-machina: impact of Artificial Intelligence on practice. Radiol med 126, 998–1006 (2021). https://doi.org/10.1007/s11547-021-01351-x

DOI: https://doi.org/10.1007/s11547-021-01351-x