Abstract

We study the generalized Galois numbers which count flags of length r in N-dimensional vector spaces over finite fields. We prove that the coefficients of those polynomials are asymptotically Gaussian normally distributed as N becomes large. Furthermore, we interpret the generalized Galois numbers as weighted inversion statistics on the descent classes of the symmetric group on N elements and identify their asymptotic limit as the Mahonian inversion statistic when r approaches ∞. Finally, we apply our statements to derive further statistical aspects of generalized Rogers–Szegő polynomials, reinterpret the asymptotic behavior of linear q-ary codes and characters of the symmetric group acting on subspaces over finite fields, and discuss implications for affine Demazure modules and joint probability generating functions of descent-inversion statistics.

1 Introduction

Let \(G_{N}^{(r)} (q)\) denote the number of flags \(0 = V_{0} \subseteq \cdots \subseteq V_{r} = \mathbf{F}_{q}^{N}\) of length r in an N-dimensional vector space over a field with q elements where repetitions are allowed. Then \(G_{N}^{(r)} (q)\) is a polynomial in q, a so-called generalized Galois number [23]. In particular, when r=2, these are the Galois numbers which give the total number of subspaces of an N-dimensional vector space over a finite field [5].

The generalized Galois numbers are a biparametric family of polynomials, each with nonnegative integral coefficients, in the parameters N and r. We want to analyze their limiting properties as those parameters become large. Viewing each generalized Galois number as a discrete distribution on the real line, we determine the asymptotic behavior of this biparametric family of distributions. Our first result is the following:

Theorem 3.5

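For r≥2 and N∈N, let \(G_{N,r}\) be a random variable with probability generating function \(\mathrm {E}[q^{G_{N,r}}] = r^{-N} \cdot G_{N}^{(r)} (q)\). Then,

\[ \mathrm{E}[G_{N,r}] = \frac{r-1}{4r} \, N(N-1), \qquad \operatorname{Var}(G_{N,r}) = \frac{(r-1)(r+1)}{72 r^{2}} \, N(N-1)(2N+5). \]

For fixed r and N→∞, the distribution of the random variable

\[ \frac{G_{N,r} - \mathrm{E}[G_{N,r}]}{\sqrt{\operatorname{Var}(G_{N,r})}} \]

converges weakly to the standard normal distribution.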

Furthermore, we derive an exact formula in terms of weighted inversion statistics on the descent classes of the symmetric group and derive the asymptotic behavior with respect to the second parameter:

Theorem 4.1

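Consider the generalized Galois number \(G_{N}^{(r)}(q) \in \mathbf{N}[q]\) for r≥2 and N∈N. Let \(\mathfrak{S}_{N}\) be the symmetric group on N elements, and for a permutation \(\pi \in \mathfrak{S}_{N}\), denote by \(\operatorname {inv}(\pi)\) its number of inversions and by \(\operatorname {des}(\pi)\) the cardinality of its descent set D(π). Then,

\[ G_{N}^{(r)}(q) = \sum_{\pi \in \mathfrak{S}_{N}} \binom{N + r - 1 - \operatorname{des}(\pi)}{N} q^{\operatorname{inv}(\pi)}. \]

For fixed N and r→∞, we have

\[ G_{N}^{(r)}(q) \sim \frac{r^{N}}{N!} \sum_{\pi \in \mathfrak{S}_{N}} q^{\operatorname{inv}(\pi)} = \frac{r^{N}}{N!} \, [N]_{q}!. \]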

To state one application, our exact formula in Theorem 4.1 allows us to reinterpret the asymptotic behavior of the numbers of equivalence classes of linear q-ary codes [7, 8, 24, 25] under permutation equivalence \((\mathfrak{S})\), monomial equivalence \((\mathfrak{M})\), and semi-linear monomial equivalence (Γ) as follows:

Corollary 5.2

The number of linear q-ary codes of length n up to equivalence \((\mathfrak{S})\), \((\mathfrak{M})\), and (Γ) is given asymptotically, as q is fixed and n→∞, by

where \(a = \lvert \operatorname{Aut}(\mathbf{F}_{q}) \rvert = \log_{p}(q)\) with \(p = \operatorname {char}(\mathbf{F}_{q})\). In particular, the numerator of the asymptotic numbers of linear q-ary codes is the (weighted) inversion statistic on the permutations having at most 1 descent.

The organization of our article is as follows. Since the generalized Galois numbers are a specialization of the generalized Rogers–Szegő polynomials [23], which are generating functions of q-multinomial coefficients [17, 18, 22], we summarize the statistical behavior of the q-multinomial coefficients in Sect. 2. The determination of the mean and variance of the generalized Galois numbers and of the higher cumulants of the q-multinomial coefficients in Sect. 3 allows us to prove the asymptotic normality of the generalized Galois numbers (Theorem 3.5) through the method of moments. In Sect. 4 we analyze the combinatorial interpretation of q-multinomial coefficients in terms of inversion statistics on permutations [21]. Based on our interpretation of the generalized Galois numbers as weighted inversion statistics on the descent classes of the symmetric group, we describe their limiting behavior toward the Mahonian inversion statistic (Theorem 4.1). We conclude with applications of our results in Sect. 5. That is, we derive further statistical aspects of generalized Rogers–Szegő polynomials in Corollary 5.1, reinterpret the asymptotic behavior of the numbers of linear q-ary codes in Corollary 5.2, and discuss implications for affine Demazure modules in Corollary 5.6 and joint probability generating functions of descent-inversion statistics (5.10), (5.11).

2 Notation and preliminaries

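We denote by N the set of nonnegative integers {0,1,2,3,…}. Let q be a variable, N∈N, and \(\mathbf{k} = (k_{1}, \ldots, k_{r}) \in \mathbf{N}^{r}\). The q-multinomial coefficient is defined as

\[ \genfrac{[}{]}{0pt}{}{N}{\mathbf{k}}_{q} = \begin{cases} \dfrac{[N]_{q}!}{[k_{1}]_{q}! \cdots [k_{r}]_{q}!} & \text{if } k_{1} + \cdots + k_{r} = N, \\ 0 & \text{otherwise.} \end{cases} \tag{2.1} \]

Here, \([k]_{q} ! = \prod_{i=1}^{k} \frac{1-q^{i}}{1-q}\) denotes the q-factorial. Note that the q-multinomial coefficient is a polynomial in q and

\[ \genfrac{[}{]}{0pt}{}{N}{\mathbf{k}}_{q} \Big|_{q=1} = \binom{N}{\mathbf{k}}. \]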

For N∈N, the generalized Nth Rogers–Szegő polynomial \(H^{(r)}_{N}(\mathbf{z}, q) \in \mathbf{C}[z_{1}, \ldots , z_{r}, q]\) is the generating function of the q-multinomial coefficients:

\[ H^{(r)}_{N}(\mathbf{z}, q) = \sum_{\mathbf{k} \in \mathbf{N}^{r}} \genfrac{[}{]}{0pt}{}{N}{\mathbf{k}}_{q} \, \mathbf{z}^{\mathbf{k}}. \]

Here we use multiexponent notation \({\mathbf{z}^{\mathbf{k}}} = z_{1}^{k_{1}} \cdots z_{r}^{k_{r}}\) for \(\mathbf{k} = (k_{1}, \ldots, k_{r}) \in \mathbf{N}^{r}\). Note that by our definition of the q-multinomial coefficients it is convenient to suppress the condition \(k_{1} + \cdots + k_{r} = N\) in the summation index.

As described in [23], the q-multinomial coefficient \(\genfrac {[}{]}{0pt}{}{N}{\mathbf{k}}_{q}\) counts the number of flags \(0 = V_{0} \subseteq \cdots \subseteq V_{r} = \mathbf{F}_{q}^{N}\) subject to the conditions \(\dim(V_{i}) = k_{1} + \cdots + k_{i}\), and consequently the specialization of the generalized Rogers–Szegő polynomial \(H^{(r)}_{N} (\mathbf{z},q)\) at \(\mathbf{z} = \mathbf{1} = (1, \ldots, 1)\) counts the total number of flags of subspaces of length r in \(\mathbf{F}_{q}^{N}\). This number (a polynomial in q) is called a generalized Galois number and denoted by \(G_{N}^{(r)}(q)\). In particular, when r=2, the specializations of the Rogers–Szegő polynomials are the Galois numbers \(G_{N}(q)\) which count the number of subspaces in \(\mathbf{F}_{q}^{N}\) [5].
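As a small illustration of these definitions, the following Python sketch computes q-multinomial coefficients and generalized Galois numbers as integer coefficient lists (index = power of q). It assumes the standard q-Pascal recurrence for q-binomial coefficients and writes each q-multinomial coefficient as a product of q-binomial coefficients; the function names `q_binomial`, `q_multinomial`, `compositions`, and `galois_number` are illustrative choices and not notation from this article.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def q_binomial(n, k):
    """Coefficient tuple of the q-binomial coefficient (index = power of q)."""
    if k < 0 or k > n:
        return (0,)
    if k == 0 or k == n:
        return (1,)
    # q-Pascal recurrence: [n,k]_q = [n-1,k-1]_q + q^k * [n-1,k]_q
    a = q_binomial(n - 1, k - 1)
    b = (0,) * k + q_binomial(n - 1, k)
    a += (0,) * (len(b) - len(a))  # pad; a is never longer than b here
    return tuple(x + y for x, y in zip(a, b))


def poly_mul(a, b):
    """Product of two polynomials given as coefficient sequences."""
    out = [0] * (len(a) + len(b) - 1)
    for i, x in enumerate(a):
        for j, y in enumerate(b):
            out[i + j] += x * y
    return out


def q_multinomial(ks):
    """Coefficient list of the q-multinomial coefficient with N = sum(ks)."""
    result, remaining = [1], sum(ks)
    for k in ks:
        result = poly_mul(result, q_binomial(remaining, k))
        remaining -= k
    return result


def compositions(n, r):
    """All tuples of r nonnegative integers summing to n."""
    if r == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, r - 1):
            yield (first,) + rest


def galois_number(n, r):
    """Coefficient list of G_n^{(r)}(q): sum of all q-multinomials of length r."""
    total = [0] * (n * (n - 1) // 2 + 1)
    for ks in compositions(n, r):
        for power, c in enumerate(q_multinomial(ks)):
            total[power] += c
    while len(total) > 1 and total[-1] == 0:
        total.pop()  # drop trailing zero coefficients
    return total


print(galois_number(3, 2))  # coefficients of 4 + 2q + 2q^2
```

For r=2 and N=3 this reproduces the count of subspaces of \(\mathbf{F}_{q}^{3}\), namely 1 + (1+q+q²) + (1+q+q²) + 1, and evaluating any result at q=1 (summing the list) gives \(r^{N}\).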

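We will need notation from the context of symmetric groups. For a permutation \(\pi \in \mathfrak{S}_{N}\) and a subset T⊆{1,…,N−1}, D(π) is the descent set of π, \(\mathcal{D}_{T}\) the descent class, \(\operatorname {des}(\pi)\) the number of descents, and \(\operatorname {inv}(\pi)\) the number of inversions of π, i.e.,

\[ D(\pi) = \{ i : \pi(i) > \pi(i+1) \}, \quad \mathcal{D}_{T} = \{ \pi \in \mathfrak{S}_{N} : D(\pi) = T \}, \quad \operatorname{des}(\pi) = \lvert D(\pi) \rvert, \quad \operatorname{inv}(\pi) = \lvert \{ (i,j) : i < j, \ \pi(i) > \pi(j) \} \rvert. \]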

The sign ∼ refers to asymptotic equivalence, that is, for \(f, g : \mathbf{N} \to \mathbf{R}_{>0}\), we write f(n)∼g(n) if \(\lim_{n \to \infty} f(n)/g(n) = 1\). We write f(n)=O(g(n)) if there exists a constant C>0 such that f(n)≤Cg(n) for all sufficiently large n, and f(n)=o(g(n)) if \(\lim_{n \to \infty} f(n)/g(n) = 0\).

Let us recollect some known results about statistics of q-multinomial coefficients. Note that one has the usual differentiation method.

Proposition 2.1

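Let X be a discrete random variable with probability generating function \(\mathrm{E}[q^{X}] = f(q) \in \mathbf{R}[q]\). Then,

\[ \mathrm{E}[X] = f'(1), \qquad \operatorname{Var}(X) = f''(1) + f'(1) - f'(1)^{2}. \]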

Via Proposition 2.1 one can prove from the definition (2.1) of the q-multinomial coefficient:

Proposition 2.2

(See [1, Eqs. (1.9) and (1.10)])

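For \(\mathbf{k} = (k_{1}, \ldots, k_{r}) \in \mathbf{N}^{r}\), let \(X_{N,\mathbf{k}}\) be a random variable with probability generating function \(\mathrm {E}[q^{X_{N,\mathbf{k}}}] = \binom{N}{\mathbf{k}}^{-1} \cdot \genfrac {[}{]}{0pt}{}{N}{\mathbf{k}}_{q}\). Then,

\[ \mathrm{E}[X_{N,\mathbf{k}}] = \frac{e_{2}(\mathbf{k})}{2}, \qquad \operatorname{Var}(X_{N,\mathbf{k}}) = \frac{(e_{1}(\mathbf{k}) + 1) \, e_{2}(\mathbf{k}) - e_{3}(\mathbf{k})}{12}. \]

Here, \(e_{i}(\mathbf{k})\) denotes the ith elementary symmetric function in the variables \(\mathbf{k} = (k_{1}, \ldots, k_{r})\).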
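The mean and variance in Proposition 2.2 can be checked exactly for small cases. The sketch below assumes the formulas in the form \(\mathrm{E}[X_{N,\mathbf{k}}] = e_{2}(\mathbf{k})/2\) and \(\operatorname{Var}(X_{N,\mathbf{k}}) = ((e_{1}(\mathbf{k})+1) e_{2}(\mathbf{k}) - e_{3}(\mathbf{k}))/12\) (our reading of [1, Eqs. (1.9) and (1.10)]) and compares them with moments computed directly from the coefficients of the q-multinomial coefficient. All function names are hypothetical helpers introduced for this illustration.

```python
from fractions import Fraction
from functools import lru_cache
from itertools import combinations
from math import prod


@lru_cache(maxsize=None)
def q_binomial(n, k):
    """Coefficient tuple of the q-binomial coefficient (index = power of q)."""
    if k < 0 or k > n:
        return (0,)
    if k == 0 or k == n:
        return (1,)
    # q-Pascal recurrence: [n,k]_q = [n-1,k-1]_q + q^k * [n-1,k]_q
    a = q_binomial(n - 1, k - 1)
    b = (0,) * k + q_binomial(n - 1, k)
    a += (0,) * (len(b) - len(a))  # pad; a is never longer than b here
    return tuple(x + y for x, y in zip(a, b))


def q_multinomial(ks):
    """Coefficient list of the q-multinomial coefficient with N = sum(ks)."""
    result, remaining = [1], sum(ks)
    for k in ks:
        factor = q_binomial(remaining, k)
        product = [0] * (len(result) + len(factor) - 1)
        for i, x in enumerate(result):
            for j, y in enumerate(factor):
                product[i + j] += x * y
        result, remaining = product, remaining - k
    return result


def mean_and_variance(coeffs):
    """Exact mean and variance of the distribution proportional to coeffs."""
    total = sum(coeffs)
    mean = Fraction(sum(i * c for i, c in enumerate(coeffs)), total)
    second = Fraction(sum(i * i * c for i, c in enumerate(coeffs)), total)
    return mean, second - mean * mean


def elementary(ks, i):
    """i-th elementary symmetric function evaluated at ks."""
    return sum(prod(subset) for subset in combinations(ks, i))


def predicted_mean_and_variance(ks):
    """Assumed closed forms in terms of e_1, e_2, e_3."""
    e1, e2, e3 = (elementary(ks, i) for i in (1, 2, 3))
    return Fraction(e2, 2), Fraction((e1 + 1) * e2 - e3, 12)


for ks in [(1, 1), (2, 1), (1, 1, 1), (3, 2, 2)]:
    print(ks, mean_and_variance(q_multinomial(ks)), predicted_mean_and_variance(ks))
```

Exact rational arithmetic (`fractions.Fraction`) is used so that the comparison is an identity check rather than a floating-point approximation.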

3 Asymptotic normality of Galois numbers

Let us start by computing mean and variance of the generalized Galois numbers.

Lemma 3.1

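Let \(G_{N,r}\) be a random variable with probability generating function \(\mathrm {E}[q^{G_{N,r}}] = r^{-N} \cdot G_{N}^{(r)} (q)\). Then,

\[ \mathrm{E}[G_{N,r}] = \frac{r-1}{4r} \, N(N-1), \qquad \operatorname{Var}(G_{N,r}) = \frac{(r-1)(r+1)}{72 r^{2}} \, N(N-1)(2N+5). \]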

Proof

By Proposition 2.1 we have to compute the value of the derivatives \(\frac{d}{ dq}\) and \(\frac{d^{2}}{dq^{2}}\) of \(G_{N}^{(r)}(q) = H^{(r)}_{N}(\mathbf{1},q)\) evaluated at q=1. Since \(H^{(r)}_{N}(\mathbf{1},q) = \sum_{\mathbf{k} \in \mathbf{N}^{r}}\genfrac {[}{]}{0pt}{}{N}{\mathbf{k}}_{q}\), they can be computed from Proposition 2.2 via index manipulations in sums involving multinomial coefficients. We will need the identities

and

The last identity follows from

where \(p_{s}\) denotes the sth power sum, and

Now, \(G_{N}^{(r)}(1) = r^{N}\) and, by (3.1),

For the variance, we will also need (3.2). That is,

□
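The statement of Lemma 3.1 lends itself to an exact numerical check for small N and r. The following sketch assumes the closed forms \(\mathrm{E}[G_{N,r}] = \frac{r-1}{4r} N(N-1)\) and \(\operatorname{Var}(G_{N,r}) = \frac{(r-1)(r+1)}{72 r^{2}} N(N-1)(2N+5)\) and compares them with the mean and variance obtained directly from the coefficients of \(G_{N}^{(r)}(q)\). The helper names are hypothetical and the computation is brute force, so it is only meant for small parameters.

```python
from fractions import Fraction
from functools import lru_cache


@lru_cache(maxsize=None)
def q_binomial(n, k):
    """Coefficient tuple of the q-binomial coefficient (index = power of q)."""
    if k < 0 or k > n:
        return (0,)
    if k == 0 or k == n:
        return (1,)
    # q-Pascal recurrence: [n,k]_q = [n-1,k-1]_q + q^k * [n-1,k]_q
    a = q_binomial(n - 1, k - 1)
    b = (0,) * k + q_binomial(n - 1, k)
    a += (0,) * (len(b) - len(a))  # pad; a is never longer than b here
    return tuple(x + y for x, y in zip(a, b))


def q_multinomial(ks):
    """Coefficient list of the q-multinomial coefficient with N = sum(ks)."""
    result, remaining = [1], sum(ks)
    for k in ks:
        factor = q_binomial(remaining, k)
        product = [0] * (len(result) + len(factor) - 1)
        for i, x in enumerate(result):
            for j, y in enumerate(factor):
                product[i + j] += x * y
        result, remaining = product, remaining - k
    return result


def compositions(n, r):
    """All tuples of r nonnegative integers summing to n."""
    if r == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, r - 1):
            yield (first,) + rest


def galois_number(n, r):
    """Coefficient list of G_n^{(r)}(q), padded to degree n(n-1)/2."""
    total = [0] * (n * (n - 1) // 2 + 1)
    for ks in compositions(n, r):
        for power, c in enumerate(q_multinomial(ks)):
            total[power] += c
    return total


def mean_and_variance(coeffs):
    """Exact mean and variance of the distribution proportional to coeffs."""
    total = sum(coeffs)
    mean = Fraction(sum(i * c for i, c in enumerate(coeffs)), total)
    second = Fraction(sum(i * i * c for i, c in enumerate(coeffs)), total)
    return mean, second - mean * mean


def closed_forms(n, r):
    """Assumed closed forms for the mean and variance of G_{N,r}."""
    mean = Fraction((r - 1) * n * (n - 1), 4 * r)
    var = Fraction((r - 1) * (r + 1) * n * (n - 1) * (2 * n + 5), 72 * r * r)
    return mean, var


print(mean_and_variance(galois_number(5, 3)), closed_forms(5, 3))
```

As r grows, the assumed closed forms tend to N(N−1)/4 and N(N−1)(2N+5)/72, the mean and variance of the number of inversions of a uniformly random permutation, which matches the Mahonian limit discussed in Sect. 4.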

In order to prove asymptotic normality, we use the well-known method of moments [3, Theorem 4.5.5]. We will need some preparatory statements. First, from the description through elementary symmetric polynomials in Proposition 2.2 we can derive the asymptotic behavior of the first two central moments of the central q-multinomial coefficients and their square-root distant neighbors.

Proposition 3.2

Let \(\mathbf{k}_{\mathrm {c}}= (k_{1}^{(N)} , \ldots , k_{r}^{(N)}) \in \mathbf{N}^{r}\) be a sequence such that \(k_{1}^{(N)} + \cdots + k_{r}^{(N)} = N\) and \(\lfloor \frac{N}{r} \rfloor \leq k_{i}^{(N)} \leq \lceil \frac{N}{r} \rceil\). Let \(X_{N,\mathbf{k}_{\mathrm {c}}}\) be a random variable with probability generating function \(\mathrm {E}[q^{X_{N,\mathbf{k}_{\mathrm {c}}}}] = \binom{N}{\mathbf{k}_{\mathrm {c}}}^{-1} \cdot \genfrac {[}{]}{0pt}{}{N}{\mathbf{k}_{\mathrm {c}}}_{q}\). Then, as r is fixed and N→∞,

Furthermore, for \(\mathbf{k}_{\mathrm {c}}\) as above and any sequence \(\mathbf{s} = (s_{1}^{(N)} , \ldots , s_{r}^{(N)}) \in \mathbf{N}^{r}\) such that \(s^{(N)}_{1} + \cdots + s^{(N)}_{r} = N\) and \(\lVert \mathbf{s} - \mathbf{k}_{\mathrm {c}} \rVert = O(\sqrt{N})\), we have, as r is fixed and N→∞,

Proof

The asymptotic equivalences (3.3) and (3.4) can be computed from the definition of the elementary symmetric polynomials (recall Proposition 2.2). Recall that we are dealing with sequences of \(\mathbf{k}_{\mathrm {c}}\)'s and \(\mathbf{s}\)'s, and that all asymptotics refer to fixed r and N→∞. We will treat the first moment for illustration purposes:

Furthermore, for the \(\mathbf{s}\)'s in question one has

Therefore, the claimed asymptotic equivalences (3.5) and (3.6) follow immediately from Proposition 2.2 and the exhibited quadratic and cubic asymptotic growth (in N) of \(\mathrm {E}[X_{N,\mathbf{k}_{\mathrm {c}}}]\) and \(\operatorname {Var}(X_{N,\mathbf{k}_{\mathrm {c}}})\), respectively. □

By a method of Panny [15], we can determine the cumulants of q-multinomial coefficients explicitly. The same technique has already been applied by Prodinger [16] to obtain the cumulants of q-binomial coefficients. The exact formula will be stated as (3.9), but in the sequel we will only need the following asymptotic statement:

Lemma 3.3

Let \(\mathbf{k} = \mathbf{k}^{(N)} \in \mathbf{N}^{r}\) be any sequence such that \(k_{1}^{(N)} + \cdots + k_{r}^{(N)} = N\). For each N∈N, let \(X_{N,\mathbf{k}}\) be a random variable with probability generating function \(\mathrm {E}[q^{X_{N,\mathbf{k}}}] = \binom{N}{\mathbf{k}}^{-1} \cdot \genfrac {[}{]}{0pt}{}{N}{\mathbf{k}}_{q} \). Then, for all j≥1, the jth cumulant of \(X_{N,\mathbf{k}}\) is of order

\[ \kappa_{j}(X_{N,\mathbf{k}}) = O(N^{j+1}) \]

as N→∞. Furthermore, if \(\lVert \mathbf{k} - \mathbf{k}_{\mathrm {c}}\rVert = O(\sqrt{N})\) for \(\mathbf{k}_{\mathrm {c}}\) as above, then as r and α are fixed, and N→∞,

Proof

For k≥1, let \(Y_{k}\) be a random variable with probability generating function \(\mathrm {E}[q^{Y_{k}}] = \frac{[k]_{q}!}{k!}\). Denote the jth cumulant of \(Y_{k}\) by \(\kappa_{j,k}\). Panny [15, bottom of p. 176] shows that

where \(B_{j}(x)\) denotes the jth Bernoulli polynomial evaluated at x, and \(B_{j}\) denotes the jth Bernoulli number. Note that \(B_{j} = 0\) for odd j≥3.

Our random variable \(X_{N,\mathbf{k}}\) has the probability generating function

Hence, its cumulant generating function is

and for j≥2, its cumulants are

Since \(B_{j}(x) \sim x^{j}\) as x→∞ and each \(k_{i} = O(N)\), the first part of the lemma follows (the case j=1 can be treated similarly).

For the second part, suppose \(\lVert \mathbf{k} - \mathbf{k}_{\mathrm {c}}\rVert= O(\sqrt{N})\). Since \(k_{i}^{(N)} = \frac{N}{r} + O(\sqrt{N})\), it follows that \(B_{j}(k_{i}^{(N)} + 1) \sim ( \frac{N}{r} + O(\sqrt{N}) )^{j} \sim \frac{1}{r^{j}} N^{j} \). Hence, if we consider the nonvanishing even cumulants \(\kappa_{2\beta}(X_{N,\mathbf{k}})\), we have

i.e., \(\kappa_{2\beta}(X_{N,\mathbf{k}})\) is exactly of order 2β+1 in N.

We abbreviate \(\kappa_{j}(X_{N,\mathbf{k}})\) by \(\kappa_{j}\) for convenience. Recall that the moment generating function is the exponential of the cumulant generating function. Consequently, one has the following standard relation between higher moments and cumulants:

Since the jth Bernoulli polynomial has degree j and the higher cumulants \(\kappa_{j}\) of odd order vanish, we have (cf. [15, Sect. 3])

This leads to the asymptotic expansion

But \(\kappa_{1}(X_{N,\mathbf{k}}) = \mathrm{E}[X_{N,\mathbf{k}}]\), and since \(\| \mathbf{k} - \mathbf{k}_{\mathrm {c}}\| = O(\sqrt{N}) \), our Proposition 3.2 yields

as N→∞. This finishes the proof. □
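The cumulant computation in this proof can be illustrated numerically. The sketch below is written under an explicit assumption: it encodes Panny's result in the elementary form \(\kappa_{j,k} = \frac{B_{j}}{j} \sum_{i=1}^{k} (i^{j} - 1)\) for j≥2 (the article's own display may be phrased via Bernoulli polynomials instead), and it uses that the cumulants of \(X_{N,\mathbf{k}}\) are \(\kappa_{j,N} - \sum_{i} \kappa_{j,k_{i}}\) because the probability generating function is a quotient of normalized q-factorials. It then compares these values for j=2,3,4 with cumulants computed from the coefficients. The names `factorial_cumulant` and `predicted_cumulant` are hypothetical.

```python
from fractions import Fraction
from functools import lru_cache

# Bernoulli numbers needed for j = 2, 3, 4
BERNOULLI = {2: Fraction(1, 6), 3: Fraction(0), 4: Fraction(-1, 30)}


@lru_cache(maxsize=None)
def q_binomial(n, k):
    """Coefficient tuple of the q-binomial coefficient (index = power of q)."""
    if k < 0 or k > n:
        return (0,)
    if k == 0 or k == n:
        return (1,)
    # q-Pascal recurrence: [n,k]_q = [n-1,k-1]_q + q^k * [n-1,k]_q
    a = q_binomial(n - 1, k - 1)
    b = (0,) * k + q_binomial(n - 1, k)
    a += (0,) * (len(b) - len(a))  # pad; a is never longer than b here
    return tuple(x + y for x, y in zip(a, b))


def q_multinomial(ks):
    """Coefficient list of the q-multinomial coefficient with N = sum(ks)."""
    result, remaining = [1], sum(ks)
    for k in ks:
        factor = q_binomial(remaining, k)
        product = [0] * (len(result) + len(factor) - 1)
        for i, x in enumerate(result):
            for j, y in enumerate(factor):
                product[i + j] += x * y
        result, remaining = product, remaining - k
    return result


def cumulants(coeffs):
    """Cumulants of order 2, 3, 4 of the distribution proportional to coeffs."""
    total = sum(coeffs)
    mean = Fraction(sum(i * c for i, c in enumerate(coeffs)), total)
    mu = {p: sum(c * (i - mean) ** p for i, c in enumerate(coeffs)) / total
          for p in (2, 3, 4)}
    return {2: mu[2], 3: mu[3], 4: mu[4] - 3 * mu[2] ** 2}


def factorial_cumulant(j, k):
    """Assumed form of the j-th cumulant (j >= 2) of Y_k."""
    return BERNOULLI[j] / j * sum(i ** j - 1 for i in range(1, k + 1))


def predicted_cumulant(j, ks):
    """j-th cumulant of X_{N,k} as a difference of q-factorial cumulants."""
    return factorial_cumulant(j, sum(ks)) - sum(factorial_cumulant(j, k) for k in ks)


ks = (3, 2, 1)
print(cumulants(q_multinomial(ks)))
print({j: predicted_cumulant(j, ks) for j in (2, 3, 4)})
```

The vanishing third cumulant reflects the symmetry of the coefficients of the q-multinomial coefficient, in line with the remark that higher cumulants of odd order vanish.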

For our proof of Theorem 3.5 by the method of moments, we need to show that the moments of the standardized central q-multinomial coefficients converge to the moments of the standard normal distribution. We will show this more generally for q-multinomial coefficients in \(O(\sqrt{N})\)-distance to the center, since our arguments yield this without extra effort. Similar results have been obtained by Canfield, Janson, and Zeilberger [1] (for a history concerning this distribution see their erratum).

Proposition 3.4

Let \(\mathbf{k}_{\mathrm {c}}= (k_{1}^{(N)} , \ldots , k_{r}^{(N)}) \in \mathbf{N}^{r}\) be a sequence such that \(k_{1}^{(N)} + \cdots + k_{r}^{(N)} = N\) and \(\lfloor \frac{N}{r} \rfloor \leq k_{i}^{(N)} \leq \lceil \frac{N}{r} \rceil\). Furthermore, consider a sequence \(\mathbf{s} = (s_{1}^{(N)} , \ldots , s_{r}^{(N)}) \in \mathbf{N}^{r}\) such that \(s^{(N)}_{1} + \cdots + s^{(N)}_{r} = N\) and \(\lVert \mathbf{s} - \mathbf{k}_{\mathrm {c}} \rVert = O(\sqrt{N})\). Let \(X_{N,\mathbf{s}}\) be a random variable with probability generating function \(\mathrm {E}[q^{X_{N,\mathbf{s}}}] = \binom{N}{\mathbf{s}}^{-1} \cdot \genfrac {[}{]}{0pt}{}{N}{\mathbf{s}}_{q} \). Then the moments of the random variable

\[ \tilde{X}_{N,\mathbf{s}} = \frac{X_{N,\mathbf{s}} - \mathrm{E}[X_{N,\mathbf{s}}]}{\sqrt{\operatorname{Var}(X_{N,\mathbf{s}})}} \]

converge to the moments of the standard normal distribution. In particular, the distribution of \(\tilde{X}_{N,\mathbf{s}}\) converges weakly to the standard normal distribution.

Proof

Once we have shown the convergence of the moments, the weak convergence of the distributions follows by the method of moments. Hence we will concentrate on showing the convergence of the moments. Since the moments of a random variable depend polynomially, in particular continuously, on its cumulants, it is sufficient to show that the cumulants of \(\tilde{X}_{N,\mathbf{s}}\) converge to the cumulants of the standard normal distribution, 0,1,0,0,0,… . It follows directly from the definition of cumulants that

Hence, for j≥3,

We are ready to prove the advertised asymptotic normality.

Theorem 3.5

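For r≥2 and N∈N, let \(G_{N,r}\) be a random variable with probability generating function \(\mathrm {E}[q^{G_{N,r}}] = r^{-N} \cdot G_{N}^{(r)} (q)\). Then,

\[ \mathrm{E}[G_{N,r}] = \frac{r-1}{4r} \, N(N-1), \qquad \operatorname{Var}(G_{N,r}) = \frac{(r-1)(r+1)}{72 r^{2}} \, N(N-1)(2N+5). \]

For fixed r and N→∞, the distribution of the random variable

\[ \frac{G_{N,r} - \mathrm{E}[G_{N,r}]}{\sqrt{\operatorname{Var}(G_{N,r})}} \]

converges weakly to the standard normal distribution.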

Proof

We will deploy the method of moments (cf. [3, Theorem 4.5.5]). All asymptotics refer to N→∞. We will show that for any α,

Note that once we have shown (3.12), the theorem follows by the method of moments: We have already shown in Proposition 3.4 that the moments of the standardized \(X_{N,\mathbf{k}_{\mathrm{c}}}\) converge to the moments of the standard normal distribution; hence the same holds for the standardized \(G_{N,r}\) by linearity of the expected value and the binomial theorem.

We use the notation

In order to verify (3.12), we will show \(\mathrm {E}[G_{N,r}^{\alpha}] \gtrsim \mathrm {E}[X_{N,\mathbf{k}_{\mathrm{c}}}^{\alpha}]\) and \(\mathrm {E}[G_{N,r}^{\alpha}] \lesssim \mathrm {E}[X_{N,\mathbf{k}_{\mathrm{c}}}^{\alpha}]\) separately.

Let us start with \(\mathrm {E}[G_{N,r}^{\alpha}] \gtrsim \mathrm {E}[X_{N,\mathbf{k}_{\mathrm{c}}}^{\alpha}]\). For this, it is sufficient to prove that for all ε>0, there is an \(N_{0} \in \mathbf{N}\) such that

for all \(N \geq N_{0}\). Let ε>0. For N∈N, let \(U_{N} \subset \{ \mathbf{x} \in \mathbf{R}^{r} : x_{1} + \cdots + x_{r} = N \}\) be the ball around \((\frac{N}{r}, \ldots, \tfrac{N}{r})\) of minimal radius such that \(\sum_{\mathbf{k} \in U_{N}} \binom{N}{\mathbf{k}} \geq \sqrt{1-\varepsilon } \cdot r^{N} \). By the central limit theorem for ordinary multinomial coefficients, the radii of \(U_{N}\) are proportional to \(\sqrt{N}\) up to an error of order O(1). Choose a sequence \(\mathbf{k}_{\min}\) with \(\mathbf{k}_{\min}^{(N)} \in U_{N}\) such that \(\mathrm {E}[X_{N,\mathbf{k}_{\min}}^{\alpha}] = \min_{\mathbf{k} \in U_{N}} \mathrm {E}[X_{N,\mathbf{k}}^{\alpha}]\). By (3.8), \(\mathrm {E}[X_{N,\mathbf{k}_{\min}}^{\alpha}] \sim \mathrm {E}[X_{N,\mathbf{k}_{\mathrm {c}}}^{\alpha}]\). Hence there is an \(N_{0}\) such that

for all \(N \geq N_{0}\). Consequently,

We continue by showing \(\mathrm {E}[G_{N,r}^{\alpha}] \lesssim \mathrm {E}[X_{N,\mathbf{k}_{\mathrm{c}}}^{\alpha}]\). We claim that for all sequences \(\mathbf{k} = \mathbf{k}^{(N)}\),

In order to show this, we will treat the summands of expression (3.10) for \(\mathrm {E}[X_{N,\mathbf{k}}^{\alpha}]\) individually. Let us start with the index π=(α,0,…,0), i.e., the summand \(\kappa_{1}(X_{N,\mathbf{k}})^{\alpha}\). Note that

Here \(N\Delta^{r-1}\) denotes the dilated (r−1)-dimensional standard simplex in \(\mathbf{R}^{r}\). Hence,

for all N, so our summand is bounded from above by

Now, consider a summand of (3.10) with index π≠(α,0,…,0). By the same arguments that lead to (3.11) such a summand is \(O(N^{2\alpha-1})\). Since the number of summands (the number of partitions of α) does not depend on N, we have

This proves (3.14). We are ready to prove the final inequality \(\mathrm {E}[G_{N,r}^{\alpha}] \lesssim \mathrm {E}[X_{N,\mathbf{k}_{\mathrm{c}}}^{\alpha}] \): Choose a sequence \(\mathbf{k}_{\max} = \mathbf{k}_{\max}^{(N)}\) in \(\mathbf{N}^{r}\) such that \(\mathrm {E}[X_{N,\mathbf{k}_{\max}}^{\alpha}] = \max_{\mathbf{k}} \mathrm {E}[X_{N,\mathbf{k}}^{\alpha}]\). Then

This verifies (3.12) and finishes the proof of the theorem. □

4 Galois numbers and inversion statistics

Let \(\mathcal{P}_{N,r}\) denote the set of partitions of an arbitrary integer into up to r parts of size up to N. Let \(p_{N,r} = \lvert \mathcal{P}_{N,r} \rvert\) denote the number of such partitions. Then

\[ p_{N,r} = \binom{N+r}{N}. \tag{4.1} \]

For T⊂{1,…,N} with t:=|T|≤r, let

\[ \mathcal{P}_{N,r}^{T} = \{ \lambda \in \mathcal{P}_{N,r} : \text{each element of } T \text{ is a part of } \lambda \} \]

denote the subset of \(\mathcal{P}_{N,r}\) consisting of all the partitions such that each element of T occurs as the size of a part. Then removing one part of each size in T defines a bijection \(\mathcal{P}_{N,r}^{T} \to \mathcal{P}_{N,r-t}\). Hence,

\[ \lvert \mathcal{P}_{N,r}^{T} \rvert = p_{N,r-t} = \binom{N+r-t}{N}. \tag{4.2} \]

Note that we adhere to a more restrictive definition of binomial coefficients, namely \(\binom{a}{b} = 0\) unless 0≤b≤a.
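The two counting statements above can be verified by direct enumeration. The sketch below assumes the counts \(p_{N,r} = \binom{N+r}{N}\) and \(\lvert \mathcal{P}_{N,r}^{T} \rvert = \binom{N+r-t}{N}\) and represents a partition with at most r parts of size at most N as a weakly decreasing r-tuple padded with zeros. The function names are hypothetical.

```python
from itertools import combinations_with_replacement
from math import comb


def partitions_in_box(n, r):
    """Partitions with at most r parts of size at most n, zero-padded to length r."""
    return [tuple(sorted(c, reverse=True))
            for c in combinations_with_replacement(range(n + 1), r)]


def partitions_containing(n, r, required):
    """Partitions in the box in which every element of `required` occurs as a part."""
    required = set(required)
    return [lam for lam in partitions_in_box(n, r) if required <= set(lam)]


n, r, T = 3, 4, {1, 3}
print(len(partitions_in_box(n, r)), comb(n + r, n))
print(len(partitions_containing(n, r, T)), comb(n + r - len(T), n))
```

Removing one part of each size in T from a tuple in the second list leaves an arbitrary partition with at most r−t parts of size at most N, which is the bijection used in the text.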

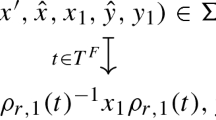

Theorem 4.1

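Consider the generalized Galois number \(G_{N}^{(r)}(q) \in \mathbf{N}[q]\) for r≥2 and N∈N. Let \(\mathfrak{S}_{N}\) be the symmetric group on N elements, and for a permutation \(\pi \in \mathfrak{S}_{N}\), denote by \(\operatorname {inv}(\pi)\) its number of inversions and by \(\operatorname {des}(\pi)\) the cardinality of its descent set D(π). Then,

\[ G_{N}^{(r)}(q) = \sum_{\pi \in \mathfrak{S}_{N}} \binom{N + r - 1 - \operatorname{des}(\pi)}{N} q^{\operatorname{inv}(\pi)}. \]

For fixed N and r→∞, we have

\[ G_{N}^{(r)}(q) \sim \frac{r^{N}}{N!} \sum_{\pi \in \mathfrak{S}_{N}} q^{\operatorname{inv}(\pi)} = \frac{r^{N}}{N!} \, [N]_{q}!. \]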

Proof

\(G_{N}^{(r)}(q) = H^{(r)}_{N}(\mathbf{1}, q)\), and by definition

Note that there is a bijection \(\mathcal{P}_{N,r-1} \to \{ \mathbf{k} \in \mathbf{N}^{r} : k_{1} + \cdots + k_{r} = N \}\) given by

Hence,

By [21, Chap. 2, (20)],

Hence,

The last equality follows from (4.2), which implies our first assertion. As for the second claim, note that as N is fixed, 0≤t≤N−1, and r→∞, we have

\[ \binom{N+r-1-t}{N} \sim \frac{r^{N}}{N!}. \]

The stated decomposition of the inversion statistic as a q-factorial is well known. □
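The exact formula can be tested by brute force for small parameters. The following sketch assumes the formula in the form \(G_{N}^{(r)}(q) = \sum_{\pi \in \mathfrak{S}_{N}} \binom{N+r-1-\operatorname{des}(\pi)}{N} q^{\operatorname{inv}(\pi)}\) and compares it with the sum of all q-multinomial coefficients of length r. Function names are hypothetical; `math.comb` returns 0 when the lower index exceeds the upper one, which agrees with the restrictive convention for binomial coefficients used above.

```python
from functools import lru_cache
from itertools import permutations
from math import comb


@lru_cache(maxsize=None)
def q_binomial(n, k):
    """Coefficient tuple of the q-binomial coefficient (index = power of q)."""
    if k < 0 or k > n:
        return (0,)
    if k == 0 or k == n:
        return (1,)
    # q-Pascal recurrence: [n,k]_q = [n-1,k-1]_q + q^k * [n-1,k]_q
    a = q_binomial(n - 1, k - 1)
    b = (0,) * k + q_binomial(n - 1, k)
    a += (0,) * (len(b) - len(a))  # pad; a is never longer than b here
    return tuple(x + y for x, y in zip(a, b))


def compositions(n, r):
    """All tuples of r nonnegative integers summing to n."""
    if r == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, r - 1):
            yield (first,) + rest


def galois_number(n, r):
    """Coefficient list of G_n^{(r)}(q), padded to degree n(n-1)/2."""
    total = [0] * (n * (n - 1) // 2 + 1)
    for ks in compositions(n, r):
        poly, remaining = [1], n
        for k in ks:  # multiply by successive q-binomials
            factor = q_binomial(remaining, k)
            new = [0] * (len(poly) + len(factor) - 1)
            for i, x in enumerate(poly):
                for j, y in enumerate(factor):
                    new[i + j] += x * y
            poly, remaining = new, remaining - k
        for power, c in enumerate(poly):
            total[power] += c
    return total


def descents(p):
    return sum(1 for i in range(len(p) - 1) if p[i] > p[i + 1])


def inversions(p):
    return sum(1 for i in range(len(p)) for j in range(i + 1, len(p)) if p[i] > p[j])


def weighted_inversion_polynomial(n, r):
    """Sum over permutations of binom(n + r - 1 - des, n) * q^inv (assumed formula)."""
    coeffs = [0] * (n * (n - 1) // 2 + 1)
    for p in permutations(range(n)):
        coeffs[inversions(p)] += comb(n + r - 1 - descents(p), n)
    return coeffs


print(galois_number(3, 2), weighted_inversion_polynomial(3, 2))
```

For r=2 only permutations with at most one descent receive a nonzero weight, so the trailing coefficients vanish; both lists are padded to the maximal number of inversions N(N−1)/2 so that they can be compared directly.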

5 Applications and discussions

5.1 Rogers–Szegő polynomials

Our Lemma 3.1 is a sufficient ingredient to determine the covariance of the overall distribution of coefficients in the generalized Nth Rogers–Szegő polynomial.

Corollary 5.1

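Given N,r>0, let \((\mathbf{X}; Y) = (X_{1}, \ldots, X_{r}; Y)\) be a random vector with probability generating function \(\mathrm {E}[\mathbf{z}^{\mathbf{X}} q^{Y}] = r^{-N}H^{(r)}_{N}(\mathbf{z},q)\). Then, the covariance of \((\mathbf{X}; Y)\) is given by

\[ \Sigma(\mathbf{X}; Y) = \begin{pmatrix} \Sigma(\mathbf{X}) & 0 \\ 0 & \operatorname{Var}(G_{N,r}) \end{pmatrix}, \]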

where Σ(X) is the covariance of the multinomial distribution.

Proof

The diagonal (block) entries are clear, since the specialization at (z,1) gives the multinomial distribution, whereas the specialization at (1,q) is exactly the generalized Galois number studied in Lemma 3.1. Therefore, we only have to prove that \(\operatorname {Cov}(X_{i},Y) = 0\) for i=1,…,r, which can be shown as follows: By symmetry, it is clear that all \(\operatorname {Cov}(X_{i},Y)\) coincide. As \(X_{1} + \cdots + X_{r} = N\) almost surely, it follows that

\[ 0 = \operatorname{Cov}(N, Y) = \sum_{i=1}^{r} \operatorname{Cov}(X_{i}, Y) = r \operatorname{Cov}(X_{1}, Y). \]

□
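The vanishing of the mixed covariances can also be observed directly. The sketch below builds the joint distribution of \((X_{1}, Y)\) from the coefficients of the q-multinomial coefficients, with the weight of \(\mathbf{z}^{\mathbf{k}} q^{y}\) divided by \(r^{N}\), and evaluates \(\operatorname{Cov}(X_{1}, Y)\) in exact arithmetic. The helper names are hypothetical and the enumeration is only practical for small N and r.

```python
from fractions import Fraction
from functools import lru_cache


@lru_cache(maxsize=None)
def q_binomial(n, k):
    """Coefficient tuple of the q-binomial coefficient (index = power of q)."""
    if k < 0 or k > n:
        return (0,)
    if k == 0 or k == n:
        return (1,)
    # q-Pascal recurrence: [n,k]_q = [n-1,k-1]_q + q^k * [n-1,k]_q
    a = q_binomial(n - 1, k - 1)
    b = (0,) * k + q_binomial(n - 1, k)
    a += (0,) * (len(b) - len(a))  # pad; a is never longer than b here
    return tuple(x + y for x, y in zip(a, b))


def q_multinomial(ks):
    """Coefficient list of the q-multinomial coefficient with N = sum(ks)."""
    result, remaining = [1], sum(ks)
    for k in ks:
        factor = q_binomial(remaining, k)
        product = [0] * (len(result) + len(factor) - 1)
        for i, x in enumerate(result):
            for j, y in enumerate(factor):
                product[i + j] += x * y
        result, remaining = product, remaining - k
    return result


def compositions(n, r):
    """All tuples of r nonnegative integers summing to n."""
    if r == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, r - 1):
            yield (first,) + rest


def covariance_x1_y(n, r):
    """Exact Cov(X_1, Y) for the distribution with pgf r^{-n} H_n^{(r)}(z, q)."""
    norm = r ** n
    e_x = e_y = e_xy = Fraction(0)
    for ks in compositions(n, r):
        for y, c in enumerate(q_multinomial(ks)):
            p = Fraction(c, norm)  # probability of (X, Y) = (ks, y)
            e_x += p * ks[0]
            e_y += p * y
            e_xy += p * ks[0] * y
    return e_xy - e_x * e_y


print(covariance_x1_y(4, 3))
```

By the symmetry argument in the proof, the same value is obtained for every coordinate \(X_{i}\), not only for \(X_{1}\).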

5.2 Linear q-ary codes

Consider the classical Galois numbers \(G_{n}(q) = G_{n}^{(2)}(q)\) that count the number of subspaces of \(\mathbf{F}_{q}^{n}\). For a general prime power q, Hou [7, 8] studies the number of equivalence classes \(N_{n,q}\) of linear q-ary codes of length n under three notions of equivalence: permutation equivalence (\(\mathfrak{S}\)), monomial equivalence (\(\mathfrak{M}\)), and semi-linear monomial equivalence (Γ). He proves that

where \(a = \lvert \operatorname{Aut}(\mathbf{F}_{q}) \rvert = \log_{p}(q)\) with \(p = \operatorname {char}(\mathbf{F}_{q})\). The case of binary codes up to monomial equivalence, \(N_{n,2}^{\mathfrak{S}} \sim \frac{G_{n}(2)}{n!}\), was previously derived by Wild [24, 25]. Now, the following corollary is immediate from our Theorem 4.1.

Corollary 5.2

The number of linear q-ary codes of length n up to equivalence \((\mathfrak{S})\), \((\mathfrak{M})\), and (Γ) is given asymptotically, as q is fixed and n→∞, by

where \(a = \lvert \operatorname{Aut}(\mathbf{F}_{q}) \rvert = \log_{p}(q)\) with \(p = \operatorname {char}(\mathbf{F}_{q})\). In particular, the numerator of the asymptotic numbers of linear q-ary codes is the (weighted) inversion statistic on the permutations having at most 1 descent.

5.3 The symmetric group acting on subspaces over finite fields

Consider the character \(\chi_{N}(\tau) = \# \{ V \subset \mathbf{F}_{q}^{N} : \tau V = V \}\) of the symmetric group \(\mathfrak{S}_{N}\) acting on \(\mathbf{F}_{q}^{N}\) by permutation of coordinates. Lax [12] shows that the normalized character \(\chi_{N}(\tau)/G_{N}(q)\) asymptotically approaches the character which takes the value 1 on the identity and 0 otherwise.

Corollary 5.3

Consider the character \(\chi_{N}(\tau)\) of the symmetric group \(\mathfrak{S}_{N}\) acting on \(\mathbf{F}_{q}^{N}\) by permutation of coordinates. Then, as N→∞, the normalized character

In particular, the character \(\chi_{N}\) approaches asymptotically the (weighted) inversion statistic on the permutations having at most 1 descent.

5.4 Affine Demazure modules

We refer the reader to [2, 4, 10] for the basic facts about the representation theory of affine Kac–Moody algebras and Demazure modules, and the notation used. Now, according to [6, Eq. (3.4)] and [19, Theorems 6 and 7], certain Demazure characters can be described via generalized Rogers–Szegő polynomials.

Lemma 5.4

Let r,N∈N, r≥2, and 0≤i<r, \(i \equiv N \bmod r\). Let \(q = e^{\delta}\), \(\mathbf{z} = (e^{\varLambda_{1} - \varLambda_{0}}, e^{\varLambda_{2} -\varLambda_{1}}, \ldots , e^{\varLambda_{r-1} - \varLambda_{r-2}}, e^{\varLambda_{0} -\varLambda_{r-1}})\), and

Then, the character of the \(\widehat{\mathfrak{sl}}_{r}\) Demazure module \(V_{-N \omega_{1}}(\varLambda_{0})\) is given by

Proof

Note that \(t_{-\omega_{1}} = s_{1} s_{2} \ldots s_{r-1} \sigma^{r-1}\) with σ being the automorphism of the Dynkin diagram of \(\widehat{\mathfrak{sl}}_{r}\) which sends 0 to 1 (see, e.g., [14, Sect. 2]). Furthermore, following [19, Sect. 2], we have \([N] = t_{-N\omega_{1}} \cdot \eta_{N}\) with the convention \(\sigma \cdot \eta_{N} = \eta_{N}\). Here, \([N] = (N, 0, \ldots, 0) \in \mathbf{N}^{r}\) denotes the one-row Young diagram, and \(\eta_{N}\) the smallest composition of degree N. That is, when N=kr+i with 0≤i<r, we have \(\eta_{N} = ((k)^{r-i}, (k+1)^{i}) \in \mathbf{N}^{r}\). Then, by [19, Theorems 6 and 7] (see the Notes) and [6, Eq. (3.4)] we have

where \(P_{[N]}(\mathbf{z}; q, 0)\) denotes the specialized symmetric Macdonald polynomial (see [13, Chap. VI]) associated to the partition [N]. □

Example 5.5

For r=2, consider the specialization of the Rogers–Szegő polynomial \(H_{4}^{(2)}(z,z^{-1},q)\) and the Demazure module \(V_{-4 \omega_{1}}(\varLambda_{0})\). Via Demazure’s character formula we obtain

Furthermore, by definition,

Hence, with \(\mathbf{z} = (e^{\varLambda_{1} - \varLambda_{0}}, e^{\varLambda_{0} - \varLambda_{1}})\), \(q = e^{\delta}\) and \(d_{2}(4) = 4\), we have the equality

as claimed.

The coefficient l in \(e^{-l\delta}\) is commonly referred to as the degree of a monomial in \(\mathrm {ch}(V_{-N \omega_{1}}(\varLambda_{0}))\). When d is a scaling element, the polynomial \(\mathrm {ch}(V_{-N \omega_{1}}(\varLambda_{0})) |_{\mathbf{C}d} \in \mathbf{N}[e^{\delta}]\) is called the basic specialization of the Demazure character (see [10, Sects. 1.5, 10.8, and 12.2] for the terminology in the context of integrable highest weight representations of affine Kac–Moody algebras). Based on the relation described in Lemma 5.4, we summarize our main results, Theorem 3.5 and Theorem 4.1, in this language.

Corollary 5.6

For r≥2 and N∈N, consider the \(\widehat{\mathfrak{sl}}_{r}\) Demazure module \(V_{-N \omega_{1}}(\varLambda_{0})\). Let \(\varGamma_{N,r}\) be a random variable with probability generating function \(\mathrm {E}[e^{\varGamma_{N,r}\delta}] = r^{-N} \cdot \mathrm {ch}(V_{-N \omega_{1}}(\varLambda_{0})) |_{\mathbf{C}d} \in \mathbf{Q}[e^{\delta}]\). Then, for 0≤i<r, \(i \equiv N \bmod r\), we have

For fixed r and N→∞, the distribution of the random variable

converges weakly to the standard normal distribution \(\mathcal{N}(0,1)\). Furthermore, let \(\mathfrak{S}_{N}\) be the symmetric group on N elements, and for a permutation \(\pi \in \mathfrak{S}_{N}\), denote by \(\operatorname {inv}(\pi)\) its number of inversions and by \(\operatorname {des}(\pi)\) the cardinality of its descent set D(π). Then, with \(a_{N,r} = \frac{N(N-1)}{2} - \frac{(N-i)(N+i-r)}{2r}\),

For fixed N and r→∞, we have

It is interesting to continue the investigation of the basic specialization of Demazure characters including Kac–Moody algebra types different from A. The starting point should be Ion’s article [9], which is a generalization of Sanderson’s work [19], and one should also consider [11]. In view of (5.9) and [9, 11], we propose the following conjecture:

Conjecture 5.7

Let X=A,B,D and r∈N. Consider the \(\widehat{X}_{r}\) Demazure module \(V_{-N \omega_{1}}(\varLambda_{0})\), and let \(d_{r}^{X}(N)\) be the maximal occurring degree. For fixed N and r→∞, it holds

Here, \(W(X_{N})\) is the Weyl group of finite type \(X_{N}\), \(l : W(X_{N}) \to \mathbf{N}\) is the length function, and \(V(\omega_{1})\) denotes the standard representation of the finite-dimensional Lie algebra of type \(X_{r}\).

Note that (5.9) proves the case X=A. It is interesting to investigate an analogue of (5.8) for the types B and D.

5.5 Descent-inversion statistics

Stanley [20] derived a generating function identity for the joint probability generating function of the descent-inversion statistic on the symmetric group \(\mathfrak{S}_{N}\):

where \(\mathrm{Exp}_{q} (x) = \sum q^{\binom{n}{2}} x^{n} / [n]_{q} ! \). Motivated by Theorem 4.1, we define a weighted joint probability generating function of the descent-inversion statistic on the symmetric group \(\mathfrak{S}_{N}\) by

Note that \(G_{N}^{(r)}(q,1) = G_{N}^{(r)}(q)\). It is interesting to investigate the generating function

possibly with refinements depending on r and t, and the t-deformed generalized Galois number \(G_{N}^{(r)}(q,t)\) itself.

Notes

There seems to be a misprint in [19, Sect. 4]. Namely, the image π(q) should equal \(q = e^{\delta}\), not \(q = e^{-\delta}\).

References

Canfield, R., Janson, S., Zeilberger, D.: The Mahonian probability distribution on words is asymptotically normal. Adv. Appl. Math. 46, 109–124 (2011). doi:10.1016/j.aam.2009.10.001, Erratum 7 February 2012: http://www2.math.uu.se/~svante/papers/sj239-erratum.pdf

Carter, R.: Lie Algebras of Finite and Affine Type. Cambridge Studies in Advanced Mathematics, vol. 96. Cambridge University Press, Cambridge (2005). doi:10.1017/CBO9780511614910

Chung, K.L.: A Course in Probability Theory, 3rd edn. Academic Press, San Diego (2001)

Fourier, G., Littelmann, P.: Tensor product structure of affine Demazure modules and limit constructions. Nagoya Math. J. 182, 171–198 (2006)

Goldman, J., Rota, G.-C.: The number of subspaces of a vector space. In: Tutte, W.T. (ed.) Recent Progress in Combinatorics: Proceedings of the Third Waterloo Conference on Combinatorics, May 1968, pp. 75–83. Academic Press, San Diego (1969)

Hikami, K.: Representation of the Yangian invariant motif and the Macdonald polynomial. J. Phys. A 30, 2447–2456 (1997). doi:10.1088/0305-4470/30/7/023

Hou, X.-D.: On the asymptotic number of non-equivalent q-ary linear codes. J. Comb. Theory, Ser. A 112, 337–346 (2005). doi:10.1016/j.jcta.2005.08.001

Hou, X.-D.: Asymptotic numbers of non-equivalent codes in three notions of equivalence. Linear Multilinear Algebra 57, 111–122 (2009). doi:10.1080/03081080701539023

Ion, B.: Nonsymmetric Macdonald polynomials and Demazure characters. Duke Math. J. 116, 299–318 (2003). doi:10.1215/S0012-7094-03-11624-5

Kac, V.: Infinite-Dimensional Lie Algebras, 3rd edn. Cambridge University Press, Cambridge (1990)

Kuniba, A., Misra, K., Okado, M., Takagi, T., Uchiyama, J.: Characters of Demazure modules and solvable lattice models. Nucl. Phys. B 510, 555–576 (1998). doi:10.1016/S0550-3213(97)00685-8

Lax, R.: On the character of S n acting on subspaces of \(\mathbf {F}^{n}_{q}\). Finite Fields Appl. 10, 315–322 (2004). doi:10.1016/j.ffa.2003.09.001

Macdonald, I.: Symmetric Functions and Hall Polynomials, 2nd edn. Oxford University Press, Oxford (1995)

Macdonald, I.: Affine Hecke Algebras and Orthogonal Polynomials. Cambridge Tracts in Mathematics, vol. 157. Cambridge University Press, Cambridge (2003). doi:10.1017/CBO9780511542824

Panny, W.: A note on the higher moments of the expected behavior of straight insertion sort. Inf. Process. Lett. 22, 175–177 (1986). doi:10.1016/0020-0190(86)90023-2

Prodinger, H.: On the moments of a distribution defined by the Gaussian polynomials. J. Stat. Plan. Inference 119, 237–239 (2004). doi:10.1016/S0378-3758(02)00422-6

Rogers, L.: On a three-fold symmetry in the elements of Heine’s series. Proc. Lond. Math. Soc. 24, 171–179 (1893). doi:10.1112/plms/s1-24.1.171

Rogers, L.: On the expansion of certain infinite products. Proc. Lond. Math. Soc. 24, 337–352 (1893)

Sanderson, Y.: On the connection between Macdonald polynomials and Demazure characters. J. Algebr. Comb. 11, 269–275 (2000). doi:10.1023/A:1008786420650

Stanley, R.: Binomial posets, Möbius inversion, and permutation enumeration. J. Comb. Theory, Ser. A 20, 336–356 (1976)

Stanley, R.: Enumerative Combinatorics, vol. 1. Cambridge Studies in Advanced Mathematics, vol. 49. Cambridge University Press, Cambridge (1997)

Szegő, G.: Ein Beitrag zur Theorie der Thetafunktionen. Sitzungsberichte Preuss. Akad. Wiss., pp. 242–252 (1926)

Vinroot, R.: Multivariate Rogers–Szegő polynomials and flags in finite vector spaces (2010). arXiv:1011.0984

Wild, M.: The asymptotic number of inequivalent binary codes and nonisomorphic binary matroids. Finite Fields Appl. 6, 192–202 (2000). doi:10.1006/ffta.1999.0273

Wild, M.: The asymptotic number of binary codes and binary matroids. SIAM J. Discrete Math. 19, 691–699 (2005)

Acknowledgements

This work was supported by the Swiss National Science Foundation (grant PP00P2-128455), the National Centre of Competence in Research “Quantum Science and Technology,” the German Science Foundation (SFB/TR12, SPP1388, and grants CH 843/1-1, CH 843/2-1), and the Excellence Initiative of the German Federal and State Governments through the Junior Research Group Program within the Institutional Strategy ZUK 43.

The second author would like to thank Matthias Christandl for his kind hospitality at the ETH Zurich and Ryan Vinroot for helpful conversations.

We are indebted to the referees for pointing out an error in the proof of Theorem 3.5 in an earlier version of this article.

Cite this article

Bliem, T., Kousidis, S. The number of flags in finite vector spaces: asymptotic normality and Mahonian statistics. J Algebr Comb 37, 361–380 (2013). https://doi.org/10.1007/s10801-012-0373-1

DOI: https://doi.org/10.1007/s10801-012-0373-1