Abstract

While several tests for serial correlation in financial markets have been proposed and applied successfully in the literature, such tests provide rather limited information to construct predictive econometric models. This manuscript addresses this gap by providing a model-free definition of signed path dependence based on how the sign of cumulative innovations for a given lookback horizon correlates with the future cumulative innovations for a given forecast horizon. Such concept is then theoretically validated on well-known time series model classes and used to build a predictive econometric model for future market returns, which is applied to empirical forecasting by means of a profit-seeking trading strategy. The empirical experiment revealed strong evidence of serial correlation of unknown form in equity markets, being statistically significant and economically significant even in the presence of trading costs. Moreover, in equity markets, given a forecast horizon of one day, the forecasting strategy detected the strongest evidence of signed path dependence; however, even for longer forecast horizons such as 1 week or 1 month the strategy still detected such evidence albeit to a lesser extent. Currency markets also presented statistically significant serial dependence across some pairs, though not economically significant under the trading formulation presented.

Similar content being viewed by others

1 Introduction

The arrival of new information into asset price formation has been subject of extensive discussion. If such information is always fully reflected and incorporated into market prices, asset returns should be largely unpredictable and in the long run driven by compensation for taking market risk. Such a perspective on the driver for returns on investment is most directly justified in settings in which there exists a competitive double auction market with informed rational market participants that will not make irrational errors on a systematic basis, and instead will exploit any known informational inefficiency until its exhaustion. This is typically formulated in financial mathematics pricing theory through concepts such as the Efficient Market Hypothesis (EMH), von Neumann Morgenstern rationality and other related financial assumptions on markets and agent behaviours as captured in, for instance, the technical discussions on such assumptions in Chapters 9 and 14 of Platen and Heath (2006).

Initial work on the EMH focussed heavily on statistical properties of stock prices (Fama 1963, 1965a, b), arguing that in strongly efficient markets asset prices fluctuate in unpredictable and completely random ways. The seminal work in Fama (1970) has subsequently provided the definition of three forms of financial market efficiency: weak, where information contained in past prices is fully reflected in current prices; semi-strong, where all public information available (including past prices) is fully reflected in current prices; and strong, where all public and private information available is fully reflected in current prices. Such school of thought has evolved considerably and several different tests have been developed; however, it is still common practice to focus on the serial dependence of asset price changes to test for market efficiency, with a notable recent example seen in Urquhart and McGroarty (2016). These tests look for violations of the weak form of financial market efficiency that, if clearly present, could be used as evidence that the EMH does not hold in any of its forms.

Results from tests on serial depedency have been mixed. Nevertheless, the fact that a specific test does not detect serial dependence in a given price series does not guarantee in itself that serial dependence is not present: it is only evidence that a particular form of dependence is not present, but there might still be another form of serial dependence that was undetected due to test misspecification. This motivates a continuous research on finding potential new models that can cope with such alleged dependence and, ideally, use the detected dependence to make informed predictions of future market behaviour.

It is a stylized fact that asset returns do not exhibit linear serial correlation (Cont 2001); however, a number of different tests have been proposed in the literature which have detected statistically significant evidence that asset returns exhibit some form of serial correlation which is not necessarily linear. Section 3 of Lim and Brooks (2011) provides an extensive review of the most well-known methods. One of the most popular tests is the variance ratio test (VR), which postulates that, under the absence of serial correlation, the variance of the k-period return should be equal to k times the variance of the one-period return; therefore, the ratio of such variances should not be statistically different from one under the null hypothesis of absence of serial correlations. Nevertheless, this has been found not to hold across several global equity markets (Griffin et al. 2010).

Other well-known tests aiming to reject the hypothesis of the absence of serial correlations in financial markets include the automatic Box–Pierce test in Lim et al. (2013), long-memory tests in Chung (1996), Hurst Exponent tests in Qian and Rasheed (2004) and tests on the frequency domain that can be found in Lee and Hong (2001). However, despite several tests demonstrating the presence of serial correlation in financial markets and the potential for predictability, there aren’t many studies in the literature developing actual predictive models and validating them in empirical financial data.

Notably, Moskowitz et al. (2012) defines a linear regression of the return of an asset scaled by its ex-ante volatility against the sign of its return lagged by some amount of time and shows empirically there is strong evidence of predictability when a lagged 1-year return is used to predict the return 1 month ahead. Similar studies of this nature can also be found in Baltas and Kosowski (2013) where the authors establish relationships between univariate trend-following strategies in assets in futures markets and commodity trading advisors in order to examine questions of capacity constraints in trend following investing. Additionally, Corte et al. (2016) found evidence of negative dependence in daily returns of international stock, equity index, interest rate, commodity and currency markets, with such negative dependence becoming even stronger when the returns are decomposed into overnight (markets closed for trading) and intraday (markets open for trading). These studies though lack a stronger theoretical basis, being instead more focussed on the empirical aspects.

This paper provides theoretical contributions to the literature by proposing in Sect. 2 a non-parametric definition of signed path dependence and demonstrating the sufficient and necessary conditions for it to be present in covariance stationary time series. Further, Sect. 3 proposes a formal inference procedure to detect serial correlation of unknown form based on a hypothesis testing formulation of signed path dependence, which is validated on experiments on synthetic data in Sect. 4. This paper provides empirical contributions to the literature in Sect. 5 by using the test previously defined to detect evidence of serial correlation in a number of equity index and foreign exchange markets. Additionally, based on the test statistic proposed, a predictive model is defined and used to feed trading strategies whose out-of-sample performance is analysed, gross and net of transaction costs. Section 6 concludes the work. The proofs for all theorems and lemmas given in this paper can be found in the Technical Appendix (other than for commonly known theorems, which are just enunciated for the sake of completeness and clarity).

It is noted that this paper uses cross-validation and bootstrap techniques to calibrate and test the predictive model proposed. A comprehensive review of these techniques can be seen in Kohavi (1995). Numerous studies have used similar techniques to extract serial dependence and predict future behaviour in market prices: Qian and Rasheed (2007), Choudhry and Garg (2008) and Huang et al. (2005) to name a few. This paper contributes to such area of research by developing its own classifiers which are bespoke to markets that have signed path dependence and hence will have better classification performance than models such as Support Vector Machines, Genetic Algorithms for trading rules and others, given that these aim to be more generic and hence less sensitive to peculiar market features. After all, it is expected that a test whose null hypothesis is more specific to a particular problem in financial time series is likely to have more power and more accurate estimation properties than a test based on wider machine learning tools that will consequently imply a wider scope for its equivalent null hypothesis.

This paper focuses solely on both how to detect serial correlation in a formal statistical manner and how to model it, paving the way to build predictive models that can be used for the purposes of general market forecasting/risk management or even for the development of trading strategies. As such, technical discussions around assumptions on the price discovery process which might or might not generate price predictability are left outside of the scope of this paper. We also remark that some studies claim that apparent trend-following profits might actually not be arising out of positive serial correlation in returns but possibly out of intermediate horizon price performance (Novy-Marx 2012) or exogenous factors such as the presence of informed trading (Chena and Huainan 2012) or imbalances in liquidity and transaction costs (Lesmonda et al. 2004). In such cases, the use of time series models will not yield significant benefits to an investor, as claimed in Banerjee and Hung (2013). Further, as with any time series, its behaviour can abruptly change due to exogenous reasons; it is a common problem that can possibly reduce the usability of time series models, even though the tuning scheme based on a rolling observation window used in the calibration of the predictive model hereby proposed can alleviate this problem by readapting the model to most recent data and leaving obsolete data out of the fitted model.

2 A Definition of Signed Path Dependence and Implications to Time Series Models

In this section we propose an extension of the concept of time series momentum introduced in Moskowitz et al. (2012). In Moskowitz et al. (2012) it has been shown that for several markets the financial return in a particular month was positively correlated with the sign of the cumulative financial return of the previous 12 months. Empirical experiments demonstrated that, when employed together with a suitable leveraging strategy based on volatility scaling, such a phenomenom could be used to build investment strategies that offered a risk-reward ratio superior to the one offered by conventional equity investments. Our statistical framework is more general so that it provides a model-free hypothesis test for the presence of significant correlation, positive or negative, between the financial return in a given forecasting horizon against the sign the cumulative financial return over any arbitrary lookback window and use such alleged presence to predict the sign of future market returns. We also provide a method to detect the optimal lag to be used for forecasting and analyse our definition from the point of view of a number of parametric econometric models.

In most of this work, we will consider a single asset whose price is observed at different time points \(T\subseteq {\mathbb {Z}}\), interpreted as unit intervals of observation. We will denote the log-price of the asset at time \(t\in T\) by p(t), the log-return by r(t), as usual defined as \(r(t) := p(t) - p(t-1)\).

We further define cumulative log-returns over longer time scales as follows:

-

(i)

\(r_{-n}\left( t\right) = p(t) - p(t-n) = \sum _{i=0}^{n-1}{r\left( t-i\right) },\)

the (n unit intervals) look-back cumulative log-return at time t.

-

(ii)

\(r_{+h}\left( t\right) = p(t+h) - p(t) = \sum _{i=1}^{h}{r\left( t+i\right) },\)

the (over a horizon of h unit intervals) look-ahead cumulative log-return at time t.

As it is common, by slight abuse of notation, we will denote by the above objects simultaneously random variables from which they may be obtained as observations/realisations, with arguments t, n, h being the only quantities considered fixed and not random. When expectations are taken, they are taken over the whole generative process. In the definitions below, we will condition on some observational knowledge at a time point t; at this state, \(r_{-n}(t)\) is constant (not random) for any n, while \(r_{+h}\) is a random variable since the future price \(p(t+h)\) is unknown. Additionally, throughout this manuscript we shall be referring to \({\overline{r}}\) as the estimated long term mean log-return of the asset being modelled and we shall refer to \(s(t+1) = {\text {sgn}}(r(t+1)-{\overline{r}})\) where \({\text {sgn}}\) is the sign function. In the context of estimation, \(\widehat{s(t+1)} \in \{-1,1\}\) is the predicted realisation of \(s(t+1)\) given the observed realisation of the log-return time series up until time t.

Definition 1 generalizes the concept of time series momentum by the use of conditional expectations of cumulative returns:

Definition 1

(Signed Path Dependence) We say the considered asset (or the associated process) has signed path dependence with memorynat forecast horizon\(h>0\)—or simply dependence(\(-n\),\(+h\))—if, for all t where \(r_{-n}\left( t\right) \ne n{\mathbb {E}}\left[ r\left( t\right) \right] \) it holds that

Definition 1 intuitively says that, in a process with signed path dependence, knowledge of the look-back cumulative innovation over n time intervals in the past allows us to guess the sign of the look-ahead cumulative innovation h intervals in the future. If the equation is positive, such sign will be the same, and if negative, the sign will be the opposite. We intentionally look only at two cumulative innovations and only at the sign of the future one as to keep the definition intentionally parsimonious; this will allow us to check whether there is serial correlation without having to specify the exact quantitative nature of such a dependence. While being less strong for forecasting than an explicit forecasting model, we intentionally abstain from a more parametric approach as we first want to answer the question whether there is any form of dependence that could be forecast instead of right away making a forecast of a specific form. However, such definition still allows one to forecast future innovations with more accuracy than making a random guess (by accurately forecasting their signs) and such forecasting exercise on its own might after all be the best forecast one can make about future innovations if they are highly dynamic and seemingly unpredictable.

Formally, we would like to point out that the definition of signed path dependence depends on the look-back and look-ahead horizons n and h, as well as p(t) considered as a random variable. n and h are parameters of either definition, and while p(t) is unobserved we will see that “having serial dependence” is a property that can be estimated from observations of p(t) via predictive strategies. Further properties and basic observations pertaining to signed path dependence of a time series are listed in Remark 1 below.

Remark 1

-

(i)

The definition could also have been made as a function of prices only at three time points, namely at times t, \(t+h\), and \(t-n\).

-

(ii)

The same asset can have both positive dependence(\(-n\),\(+h\)) and negative dependence(\(-n'\),\(+h'\)) simultaneously as long as the pair (n, h) is different from the pair \((n',h')\).

-

(iii)

As the definition requires the inequality to hold for all time points t, it is possible that it won’t hold across the entire process; however, one can also define a local version of dependence where the inequality would hold only in a localised version of the process.

-

(iv)

The definitions make no assumption on the probability distribution of the returns of the asset or the rate of decay of statistical dependence of two points with increasing time interval.

-

(v)

The definitions are independent of the time scale the estimation is done: given three time points, it does not matter for the definition whether the observations have finer resolution or not. This means parameters for high frequency data and low frequency data can be estimated using the same algorithm and even the same time series: for example, if one has a high frequency data series but sets the forecasting horizon h to a sufficiently large number, one will be in essence making low frequency forecasts.

In this manuscript, we theoretically validate our definition of signed path dependence by verifying the conditions for its presence in the class of linear processes that admit a Wold representation. A key assumption in the models subsequently defined is that the input series is covariance stationary. Such property defined as follows:

Definition 2

(Covariance Stationarity) A time series r(t) is covariance stationary if \({\mathbb {E}}\left[ r\left( i\right) r\left( i+p\right) \right] =C_{p}\) for all \(i> 0, i+p > 0\) where \(C_{p}\) is a constant number.

We now provide the necessary and sufficient conditions for positive or negative path dependence in the case of covariance stationary processes. Our characterization will build on the the explicit classification of such processes given by Wold’s representation Theorem 1 Wold (1954).

Theorem 1

(Wold Representation Theorem) Every covariance stationary time series r(t) can be written as the sum of two time series, one deterministic and one stochastic, in the following form:

where \(\varepsilon _{t}\) is an uncorrelated innovation process, \(b_j\in {\mathbb {R}}\), and \(\eta (t)\), is a pure predictable time series, in the sense that \(P\left[ \eta (t+s) | r(t-1), r(t-2), ... \right] = \eta (t+s), s \ge 0\) where \(P\left[ \eta (t+s) | x \right] \) is the orthogonal projection of \(\eta (t+s)\) on x.

Remark 2

All linear processes have a Wold representation. Furthermore, one may consider this theorem as an existence theorem for any stationary process.

The following theorem gives the conditions for negative or positive path dependence for processes admiting a Wold representation:

Theorem 2

(Signed Path Dependence in Covariance Stationary Processes) Let \(r\left( t\right) \) be a purely non-deterministic covariance stationary process with Gaussian innovations that has a Wold representation \(r\left( t\right) =\sum _{j=0}^{\infty }{b_{j}\varepsilon \left( t-j\right) }\ \) with uncorrelated innovations such that \(\varepsilon \left( t\right) \sim {\mathcal {N}}\left( 0,\sigma _{t}^{2}\right) \).

If \(\Psi (h,t):=\sum _{i=1}^{h}{\sum _{j=h}^{\infty }b_{i+j-h}\sigma _{t-i-j+h+1}^{2}}\) then the following characterization holds:

-

(i)

The process \(r\left( t\right) \) has positive dependence\((-n,+h)\) if and only if \(\Psi (h,t)>0\) for all t.

-

(ii)

The process \(r\left( t\right) \) has negative dependence\((-n,+h)\) if and only if \(\Psi (h,t)<0\) for all t.

All above statements are independent of n.

Remark 3

Notice that the assumption of Gaussian innovations in Theorem 2 was only critical in the proof to ensure that the distribution of the cumulative increments was still Gaussian. Therefore, this assumption can be relaxed to an assumption that partial sums of the innovations of the process follow a stable law, i.e. their distribution is such that a linear combination of variables with this distribution has the same distribution up to location and scale parameters, and a similar derivation of the same properties can be constructed that allows for heavy tailed and skewed innovations.

Based on the results of Theorem 2, it becomes straightforward to infer in any covariance stationary fitted time series model whether there is positive or negative (or none) serial dependence in the sense of Definition 1 by finding its equivalent Wold decomposition and verifying if the fitted parameter values satisfy the derived conditions on the characteristic polynomial of AR and MA roots.

Moreover, notice that the definition of signed path dependence concerns only deviations around the process unconditional expectation—i.e. the deterministic part of the process. Also, if the process is linear, its innovations will have the same variance. As such, we can state the following corollary:

Corollary 1

(Signed Path Dependence in Linear Covariance Stationary Processes) Let \(r\left( t\right) \) be a linear covariance stationary process whose innovations follow a distribution such that a linear combination of variables with this distribution has the same distribution up to location and scale parameters. If \(r\left( t\right) \) has signed path dependence of sign \(\zeta \in \{-1,1\}\) with lookback \(n_l\) and horizon h for some \(n_l > 0\), then \(r\left( t\right) \) will have signed path dependence of the same sign \(\zeta \) with lookback n and horizon h for any integer \(n > 0\).

Remark 4

Also notice that if these sufficient conditions in Theorem 2 are not satisfied for all values of \(n\in {\mathbb {N}}^{+}\) but only a finite subset of these, then the presence of signed path dependence needs further refinement. In this way we comment that these conditions are sufficient but not necessary.

To facilitate understanding, we conclude the section by enunciating two lemmas that link the concept of Signed Path Dependence to known parametric time series models. These lemmas demonstrate one-to-one correspondences between parameter ranges and the presence of signed path dependence. Beyond providing theoretical evidence and hence validation for our definition, these lemmas will allow us to generate data with known signed path dependence by simulating a parametric process with suitable parameter values and subsequently use in the synthetic validation experiment given in Sect. 4. The first lemma provides sufficient and necessary results for the simplest non-trivial case of time series models, the \({\text {AR}}(1)\) processes. The lemma supresses the constant term by assuming that the variable being modelled has been measured as deviations from its mean.

Lemma 1

(Signed Path Dependence in a Stationary AR(1) Process of Constant Volatility) Assume that \(r\left( t\right) \) is a stationary \({\text {AR}}(1)\) process given by

with i.i.d. innovations \(\varepsilon \left( t\right) \sim {\mathcal {N}}\left( 0,\sigma ^{2}\right) \) and \(\left| \varphi \right| <1\). Then:

-

(i)

The process \(r\left( t\right) \) has positive path dependence if and only if \(\varphi > 0\).

-

(ii)

The process \(r\left( t\right) \) has negative path dependence if and only if \(\varphi < 0\).

In particular, if \(\varphi =0\) the process has no signed path dependence, and all statements above are independent of n and h.

And the second lemma derives conditions for Autoregressive Fractionally Integrated Moving Average (ARFIMA) models. These models generalize ARMA models by introducing long-memory features considering a non-integer differencing parameter. Note that the ARFIMA (= sFARIMA) models include the ARIMA models (as \({\text {ARFIMA}}(p,d,q)\) with d integer), the ARIMA models include the ARMA models (as \({\text {ARMA}}(p,q)={\text {ARIMA}}(p,0,q)\)), and the ARMA models include the AR models (as \({\text {AR}}(p)={\text {ARMA}}(p,0)\)), including the AR(1) of the first lemma.

Lemma 2

(Signed Path Dependence in an ARFIMA(p,d,q) Process) Assume that \(r\left( t\right) \) is a purely non-deterministic \({\text {ARFIMA}}\left( p,d,q\right) \) process with i.i.d. Gaussian innovations given by \(\Phi \left( B\right) \left( 1-B\right) ^{d}r\left( t\right) =\Theta \left( B\right) \varepsilon \left( t\right) \), where \(\Phi \) and \(\Theta \) are polynomials of degree p resp. q in the backshift operator B, with i.i.d. innovations \(\varepsilon \left( t\right) \sim {\mathcal {N}}\left( 0,\sigma ^{2}\right) \). Let \(\sum _{j=0}^{\infty }{b_{j}\varepsilon \left( t-j\right) }\) be the Wold representation of the ARMA process \(\frac{\Theta \left( B\right) }{\Phi \left( B\right) }\varepsilon \left( t\right) \) and define \(\Psi (h)=\sum _{i=1}^{h}{\sum _{j=h}^{\infty }b_{i+j-h}}\). It holds that:

-

(i)

If \(d>-\,0.5\), the process \(r\left( t\right) \) has positive path dependence for all \(h\in {\mathbb {N}}^{+}\) and \(n\in {\mathbb {N}}^{+}\) if and only if \(d\Psi (h)>0\); and

-

(ii)

If \(d>-\,0.5\), the process \(r\left( t\right) \) has negative path dependence for all \(h\in {\mathbb {N}}^{+}\) and \(n\in {\mathbb {N}}^{+}\) if and only if \(d\Psi (h)<0\).

Additionally, if \(p=q=0\), it holds that:

-

(i)

The process \(r\left( t\right) \) has positive path dependence if and only if \(d > 0\).

-

(ii)

The process \(r\left( t\right) \) has negative path dependence if and only if \(-0.5<d<0\).

Notice that ARFIMA models can demonstrate signed path dependence if they are at least in fact in the sub-class of invertible processes and may or may not contain long memory. In the case of reversal properties of an ARFIMA model we learn that invertible models are also of interest and again these sub-class of models may or may not contain long memory properties. Most interestingly, we see that in the special case of only fractional differencing of the process, i.e. no AR and no MA components of the process, we can only have positive path dependence in the ARFIMA process if the process is invertible and it may or may not be stationary or contain long memory. In the case of the ARFIMA model with no AR and no MA components of the process, then negative dependence can only occur in sub-processes which have the properties that they dont have long memory, furthermore, they must be invertible and stationary.

3 Statistical Inference Under Signed Path Dependence

The models in the previous section assumed some structure for the serial correlation present in the series. We now derive a non-parametric hypothesis test for signed path dependence under certain regularity conditions. Such regularity conditions are listed in Assumption 1. The test proposed assumes these conditions to hold over the entire series, but one can allow for a localised version of the test by segmenting the series being tested and testing each segment. The inference procedure proposed aims to test the following assumption (the single sided version of the test would swap the inequality sign for a greater than or less than sign depending on whether positive or negative path dependence is being tested):

H0 (Absence of Linear Correlations between Signs and Returns) given n, for all \(k \le n\) the sign of the sum of the previous k observations has no linear correlation with the observation 1-step ahead, i.e. \({\mathbb {E}}\left[ {\text {sgn}}\left( r_{-k}\left( t\right) \right) r\left( t+1\right) \right] = 0\) for all \(k \le n\).

H1 (Presence of some Linear Correlation between Signs and Returns) \(r\left( t\right) \) are associated such that \({\mathbb {E}}\left[ {\text {sgn}}\left( r_{-k}\left( t\right) \right) r\left( t+1\right) \right] \ne 0 \) for some value of \(k \le n\).

Before formally stating the not too restrictive classical regularity conditions, a definition is needed:

Definition 3

(Lindeberg Condition) A time series r(t) is said to satisfy the Lindeberg condition if for all \(\epsilon > 0\) the following holds:

where \(f_{i}\) is the density function of r(i) and \({\mathbb {I}}\) is the indicator function.

The intuition behind the Lindeberg condition is that, in the series being tested, the individual contribution of any observation to the sum of the variances of all obervations should be arbitrarily small for a sufficiently large series.

As such, the regularity conditions are given by the following assumption:

Assumption 1

The time series being tested r(t) is comprised of deviations from the long-term mean of a stochastic process with a well-defined mean, hence having zero mean by definition, is covariance stationary in the sense of Definition 2, satisfies the Lindeberg condition and weighted partial sums of the process are assumed to follow asymptotically a Gaussian law.

The assumption that the Lindeberg condition is satisfied is very general and few processes of interest will fail to satisfy this condition so it is not overly restrictive in any sense. Also notice that, as the series being tested is assumed covariance stationary in the sense of Definition 2, Theorem 2 will guarantee that if this series has positive or negative path dependence for a given forecast horizon h, it will have the same property (i.e. it will be always positive or always negative) for all values of the memory n, and at all time points t. So, one can fix the forecast horizon \(h=1\) and test for signed path dependence at that forecast horizon by aggregating the time series over t (for example, testing with daily data if the forecast horizon h equals to one day, testing with weekly data if it equals to 1 week, and so on so forth).

Before proposing our test statistic, we will state a result that will base the derivation of the asymptotic distribution of our test statistic under the null hypothesis for the class of processes which are covariance stationary. For proof, see H Diananda (1954).

Theorem 3

(Central Limit Theorem for Covariance Stationary m-Dependent Variables) Let \(R = r\left( 1\right) ,r\left( 2\right) ,\ldots ,r\left( n\right) \) be random variables of zero mean and finite variances such that \(r\left( t\right) \) is uncorrelated with \(r\left( t+i\right) \) for all \(i > m\), m fixed. If the variables are covariance stationary and satisfy the Lindeberg condition then, as \(n \rightarrow \infty \), \(\frac{\sum _{t=1}^{n}r\left( t\right) }{\sigma \sqrt{n}} \overset{D}{\rightarrow } {\mathcal {N}}\left( 0,1\right) \) with \(\sigma ^{2}={\mathbb {E}}\left[ \left( r\left( t\right) \right) ^{2}\right] +2\sum _{k=1}^{m}{\mathbb {E}}\left[ r\left( t\right) r\left( t+k\right) \right] \).

As such, the asymptotic distribution of our test statistic is given by the following theorem:

Theorem 4

(Critical Rejection Value of the Test Statistic) Given an arbitrary positive value of n, define \(d\left( t\right) =r(t)\omega (t)\) where \(\omega (t)=\frac{\sum _{i=1}^{n}sgn\left( \sum _{k=t-i}^{t-1}r\left( k\right) \right) }{n}\) and let \({\overline{d}}=\frac{1}{s-n}\sum _{j=n+1}^{s}d\left( j\right) \). Let r(t) be a time series that satisfies Assumption 1 and define a random vector \(R:=\left( r\left( 1\right) ,r\left( 2\right) ,\ldots ,r\left( s\right) \right) \) where s is a given constant. When \(s \rightarrow \infty \), under the null hypothesis of Absence of Linear Correlations between Signs and Returns the test statistic \(H\left( R\right) \overset{D}{\rightarrow } {\mathcal {N}}\left( 0,1\right) \) with

where

Therefore, for a given significance threshold \(\alpha \), the null hypothesis will be rejected as true if \(|H|>\Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \) where \(\Phi ^{-1}\left( x\right) \) is the inverse standard normal cumulative distribution function of x.

Remark 5

There are situations where one would be interested only to test for a specific sign of the signed path dependence. For example, if the aim is to test the suitability of trend-following strategies, one would be interested to test only for positive signed dependence. Equally, if the aim is to test the presence of mean reversion in a particular series, one would be interested to test only for negative signed dependence. These situations can be accommodated by making the test proposed in Theorem 4 one-sided and rejecting the null hypothesis if \(H>\Phi ^{-1}\left( 1-\alpha \right) \) for a positive sign dependence test or if \(H<\Phi ^{-1}\left( \alpha \right) \) for a negative sign dependence test.

Remark 6

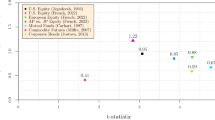

The test statistic defined in Eq. 3.2 can also be interpreted as a correlation coefficient if normalised by the sum of all absolute returns one-step ahead instead of normalised by the sample variance, i.e. if calculated as \(\rho =\frac{\sum _{j=n+1}^{s}d\left( j\right) }{\sum _{j=n+1}^{s}|r\left( j\right) |}\). Such coefficient will be bounded between − 1 and 1, with its sign indicating the sign of the path dependence being estimated and its absolute value indicating the strength of such dependency. Sect. 5 calculates this coefficient for a number of financial assets and forecast horizons and compares it against the Pearson correlation of the future return against the average return over the previous n days. We chose comparing our measure against the Pearson correlation to provide a meaningful comparison between signed path dependence and classical dependence in correlation metrics. The results show that this coefficient was able to detect a significantly positive serial correlation in situations where its Pearson counterpart was not significantly different than zero.

Remark 7

Theorem 4 can be easily extended with relaxation of the Lindeburg condition to admit covariation stationary processes which admit an analogous \(\alpha \)-stable limit theorem result, interested readers are referred to derivations of such results in Peters and Shevchenko (2015).

As the test given by Theorem 4 relies on the asymptotic distribution of the test statistic under the null hypothesis, one might want to investigate how large the sample should be to obtain convergence. In this subsection, an alternative test is proposed that has the same asymptotic distribution for the test statistic under the null hypothesis. This way, one can run both tests under the same simulation experimental conditions whilst increasing the sample size of the simulated data to assess the power of the test, also obtaining an indication of the speed of convergence of the asymptotic test given by Theorem 4. Such test is described in Algorithm 1. The test relies on an empirical percentile bootstrap to get a confidence interval for the test statistic under the null hypothesis of no signed path dependence and can therefore be used to check whether the value of test statistic calculated in the given sample is outside of this confidence interval, rejecting the null if this is the case.

The bootstrap test proposed is adequate to check for convergence of the asymptotic test statistic given by Theorem 4 because of the result given by Lemma 3:

Lemma 3

(Convergence of the Bootstrap Test Statistic Distribution under the Null Assumption) Let \(\widehat{h_{\alpha }}\) be the \(1-\frac{\alpha }{2}\) empirical percentile of the bootstrap test statistic used to reject the null hypothesis of the test procedure given by Algorithm 1 at significance threshold \(\alpha \). For large samples (\(s \rightarrow \infty \)), \(\widehat{h_{\alpha }} \rightarrow \Phi ^{-1}\left( 1-\frac{\alpha }{2}\right) \), where \(\Phi ^{-1}\) is the inverse CDF of a standard normal distribution. Therefore, for large samples, the critical rejection value of the bootstrap test statistic given by Algorithm 1 converges to the critical rejection value of the test given by Theorem 4.

It is worth noting that the rejection of the null hypothesis of the test given by Theorem 4 also implies that one can build a predictive model for the sign of return one step ahead based on the sign of the cumulative returns observed on the previous n steps. This is further explored in Sect. 5. The section compares the performance of a predictive model based on signed path dependence to make buy and sell decisions against the performance of an investment strategy known as Buy and Hold, which in essence is always buying and hence always estimating \(\widehat{s\left( t+1\right) }=1\). Theorem 5 gives the mathematical construction of the predictive model to be studied.

Theorem 5

(Mathematical Construction of the Predictive Model) Obtain \(\widehat{s\left( t+1\right) }\) by performing the following steps:

-

1.

Given a log-return sample \(r\left( 1\right) , r\left( 2\right) , \ldots , r\left( t\right) \) estimate the sample mean as \({\overline{r}}=\sum _{i=1}^{t} r\left( i\right) /t\).

-

2.

Apply the test given by Theorem 4 to the given sample to detect if there is path dependence of any sign and let \({\hat{\zeta }} \in \{-1 , 1\}\) be the sign of the path dependence detected.

-

3.

If \({\hat{\zeta }} = -1\) choose an appropriate value of n such that \({\mathbb {E}}\left[ sgn\left( r_{-n}\left( t\right) -n{\overline{r}}\right) r\left( t+1\right) -{\overline{r}}\right] < 0 \) and set \(\widehat{s\left( t+1\right) }=-sgn\left( r_{-n}\left( t\right) -n{\overline{r}}\right) \)

-

4.

Otherwise choose an appropriate value of n such that \({\mathbb {E}}\left[ sgn\left( r_{-n}\left( t\right) -n{\overline{r}}\right) r\left( t+1\right) -{\overline{r}}\right] > 0 \) and set \(\widehat{s\left( t+1\right) }=sgn\left( r_{-n}\left( t\right) -n{\overline{r}}\right) \)

Also define the prediction loss of a predictive strategy as \(L=-\widehat{s\left( t+1\right) }r\left( t+1\right) \). Note that the prediction loss of a Buy and Hold strategy is trivially given by \( L_{B \& H}=-r\left( t+1\right) \). For the aforementioned strategy we have that \( {\mathbb {E}}\left[ L_{\widehat{s\left( t+1\right) }}\right] < {\mathbb {E}}\left[ L_{B \& H}\right] \) if the null hypothesis of the test given by Theorem 4 is not true for the demeaned returns \(r\left( 1\right) -{\overline{r}}, r\left( 2\right) -{\overline{r}}, \ldots , r\left( t\right) -{\overline{r}}\).

We conclude the section by describing a predictive algorithm that attempts to forecast the sign of future asset returns using the method described in Theorem 5. Notice that the method given by Theorem 5 dynamically decides whether the prediction should be made expecting a continuation of sign (\({\hat{\zeta }}=1\)) or reversal of sign (\({\hat{\zeta }}=-1\)). Given that the particular case of trading strategies that only assume continuation in sign (trend-following) receives special attention in Finance, see CSzakmary et al. (2010). Our algorithm will cater for both cases (only continuation assumed or dynamic decision between continuation and reversals). To achieve that, we define two different predictors in Definitions 4 and 5:

Definition 4

(Moving Average (MA) Classifier) An MA(\(n, {\overline{r}}\)) predictor receives a log-return sample \(r=\left( r\left( 1\right) ,\ldots ,r\left( n\right) \right) \) and predicts that \(\widehat{s\left( n+1\right) }={\text {sgn}}\left( {r}_{-n}\left( n\right) -{\overline{r}}\right) \).

Definition 5

(Dynamic Moving Average (DMA) Classifier) A DMA(\(n, {\overline{r}}, {\hat{\zeta }}\)) predictor receives a log-return sample \(r=\left( r\left( 1\right) ,\ldots ,r\left( n\right) \right) \) and predicts that \(\widehat{s\left( n+1\right) }={\hat{\zeta }} \times {\text {sgn}}\left( {r}_{-n}\left( n\right) -{\overline{r}}\right) \) where \({\hat{\zeta }} \in \{-1,1\}\).

In order to apply these methods for prediction, parameters n, \({\overline{r}}\) and \({\hat{\zeta }}\) have to be estimated. Naturally, \({\overline{r}}\) can be estimated as the sample mean log-return. The remaining parameters can be estimated by weighted least squares of an adequately defined error function. A simple suitable error weighted function can be given as \(\varepsilon \left( t\right) = r\left( t\right) \left( \widehat{s\left( t\right) }-s\left( t\right) \right) \). Also, it is clear from Theorem 5 that predictions made by the aforementioned predictors will not be useful in a process that has no signed path dependence. Therefore, before attempting to apply any of these methods, the test defined in Theorem 4 is applied to the return series used to fit the model to ascertain the presence of signed path dependence, given that in the absence of this property no prediction should be attempted. This yields Algorithm .

Remark 8

Algorithm 2 implements the DMA predictor, but it can be easily modified to implement the MA predictor by fixing \({\hat{\zeta }}=1\) and performing a single sided test in Step 1.

As with any statistical model, there are multiple ways to estimate its parameters and weighted least squares is just one simplified possibility. The empirical tests presented in Sect. 5 have been performed using a more sophisticated machine learning algorithm, whose detailed description is outside of the scope of the main text of this manuscript. A description of the machine learning algorithm in the form of commented pseudocode is available in the Technical Appendix and the R implementation of the machine learning algorithm used in the empirical experiments of this manuscript is available in the Supplementary Material.

While the use of such machine learning method is not required to establish the main results in the paper, we have chosen it for our empirical application because the use of Train and Test sets reduces potential overfitting that could come out of a simple weighted least squares procedure, and the use of a rolling model tuning stage produces better estimates of how accurately the predictive model will perform in a practical scenario where the model gets updated as new data arrives with the passage of time.

4 Validation by Simulation

In this section we validate by simulation the properties of the test given in Sect. 3 by simulating synthetic time series where parameters are chosen so that positive or negative signed path dependence is guaranteed in the simulated series. As part of the validation exercise, the test defined in Sect. 3 is applied to a number of different synthetic series and used to detect the simulated dependence. Such results can be used to infer the robustness of the test statistic against the following factors:

-

1.

Different assumptions in the functional form of the input time series;

-

2.

Strength of the dependence present in the input time series, including the effect of long-range dependence;

-

3.

Time varying variance in the input time series when the required assumption of covariance stationarity is met; and

-

4.

Time varying variance in the input time series when the required assumption of covariance stationarity is not met.

Robustness against the aforementioned factors in a controlled environment can give assurances for the suitability of the test in empirical applications, where the actual functional form of the input time series is not known and the required assumption of covariance stationarity might not necessarily be met. Moreover, comparing different levels of Type I and Type II error probabilities across all validation scenarios can give useful insights on which properties have higher impact on the accuracy of the test and any potential biases.

We start our synthetic examples by the simplest case of the AR(1) models. As per Lemma 1, we know that the sign of the dependence for an AR(1) process depends entirely on the sign of the parameter \(\phi \), and all series \({r}_{j}\left( i\right) \) have positive path dependence when \(\varphi _{j}>0\) while all other series will not have so. As such, the first synthetic validation experiment performed by us is given by the steps below:

Experiment 1

Perform the following steps to illustrate Lemma 1:

-

1.

Generate \(P=1000\) AR(1) series of length \(z=1000\) so that \({r}_{j}\left( i\right) =\varphi _{j}{r}_{j}\left( i-1\right) +\varepsilon _{j}\left( i\right) \) with \({r}_{j}\left( 1\right) =\varepsilon _{j}\left( 1\right) \), \(0<i\le z\), \(0<j\le P\), \(\varphi _{j}=4\left( \frac{j}{5P}-\frac{P+1}{10P}\right) \) and \(\varepsilon _{j}\left( i\right) \ \sim \ {\mathcal {N}}\left( 0,1\right) \)

-

2.

Fix the significance threshold \(\alpha \) and apply the single sided version of the asymptotic test to detect positive path dependence as per Remark 5 to all the P series.

-

3.

Use the known presence of positive dependence to calculate the type I and type II error probabilities respectively by counting how many of the series where \(j \le \frac{P}{2}\) had the null hypothesis rejected and how many of the series where \(j>\frac{P}{2}\) had the test failing to reject the null hypothesis.

-

4.

Perform Steps 2 and 3 using the bootstrap test defined in Algorithm 1 to detect positive path dependence (as a control case).

-

5.

Repeat the procedure for different values of \(\alpha \).

Figure 1 shows the behaviour of type I and type II errors for the bootstrap and asymptotic tests as a function of the significance threshold for the procedure given by Experiment 1. As expected by Lemma 3, the two tests converged and the type I error probability of the asymptotic test was not statistically different than the one of the bootstrap test. Both tests had their type I error probabilities below the identity line suggesting that the test is being conservative, though not statistically incorrect. The type II error probabilities of both tests are also not statistically different than each other and always lower than 50%, suggesting that even though the test is conservative, for the AR(1) series constructed it will fail to reject the null when the alternative hypothesis truly holds only in the minority of the cases.

Performance of the tests in the simulated AR(1) series. The solid lines represent the simulated error probabilities and the dashed lines represent a 95% confidence margin of the error probability of each test, obtained via a Poisson approximation for the count of false acceptances/rejections. The chart on the left shows the simulated type I error probabilities of the asymptotic and bootstrap statistics as functions of the significance threshold when these tests are applied to the generated series. The black straight line corresponds to the identity line, where the test type I error is exactly equal to the desired significance threshold. The chart on the right shows the simulated type II error probabilities of the two tests as functions of the significance threshold with the dashed lines being the 95% confidence margin

The theoretical model has also been validated by simulating an ARFIMA(0,d,0) series. Lemma 2 gives the condition under the forecast horizon \(h = 1\) where the sign of the path dependence for this model class is known for the whole series: the sign of the parameter d. To keep the simulation procedure simple, we have reused the procedure given by Experiment 1 and in Step 1 we constructed the series based on a single parameter \(-0.1 \le d \le 0.1\), with the only modification being that the series generated in Step 1 are ARFIMA(0,\(\phi \),0) series (instead of AR(1)).

Figure 2 shows the behaviour of type I and type II errors for the bootstrap and asymptotic tests as function of the significance threshold for the simulated ARFIMA(0,d,0) series. The type I error probability of the asymptotic test is not statistically different than the one of the bootstrap test; however, both tests have their type I error probabilities well below the identity line suggesting that the test is being very conservative, though not statistically incorrect. The type II error probabilities of both tests are also not statistically different than each other and always lower than 25%, suggesting that even though the test is conservative, in the large majority of the cases it will not fail to reject the null when the alternative hypothesis truly holds. The synthetic experiment for the ARFIMA(0,d,0) model yielded conclusions similar to the ones obtained for the AR(1) model, with the difference being that both tests were considerably more powerful. This suggests the long-memory feature of the ARFIMA model introduces a stronger signed path dependence to the modelled time series than the one introduced by an AR(1).

Performance of the tests in the simulated ARFIMA(0,d,0) series. Lines have the same meaning as in Fig. 1

Finally, to ascertain some boundary conditions for the applicability of our tests, we have created a synthetic MA(n) series and analysed the behaviour of the test by modifying it so that it has a) a very weak correlation structure; b) a non-constant volatility that follows a stationary GARCH process; or c) a non-constant and non-stationary volatility that is driven by a two-state Markov-switching process.

To build the baseline MA(n) series we note that, as per Theorem 2, when the forecast horizon \(h = 1\) we have that the sign of the sum of the MA coefficients will determine the sign of the path dependence of the model. We again have reused the procedure given by Experiment 1 but in Step 1 we generated MA(5) series based on a single parameter \(-0.1 \le \phi \le 0.1\) so that the coefficients of all 5 lags were equal to \(\frac{4}{5}\phi \). The synthetic experiment for the MA(5) model yielded conclusions similar to the ones obtained for the AR(1) model, with the difference being that the test was slightly less powerful. Figure 3 shows the behaviour of type I and type II errors for the bootstrap and asymptotic tests as function of the significance threshold for the simulated MA(5) series. Like in the AR(1) case the tests converged, with both the type I and type II error probabilities of these tests not being statistically different amongst themselves. Additionally, the type II error probabilities of both tests was still lower than 50% when the significance threshold was 0.05 or higher.

Performance of the tests in the baseline simulated MA(5) series. Lines have the same meaning as in Fig. 1

After generating the baseline case, we created a stressed MA(5) simulation where series length is reduced considerably and the correlation structure is weakened. In this stressed MA(5) simulation, the procedure given by Experiment 1 was reused but in Step 1 we generated MA(5) series based on a single parameter \(-0.1 \le \phi \le 0.1\) so that the coefficients of lags 1, 3 and 5 were equal to \(\phi \) and the coefficients of lags 2 and 4 were equal to \(-\phi \). In this situation, the average sum of all MA coefficients when the alternative hypothesis holds was still positive, equal to 0.05, though the alternating signs of the lags weaken the overall positive dependence. Further, the procedure generated series of shorter length \(z=100\). Figure 4 shows the behaviour of type I and type II errors for the bootstrap and asymptotic tests as function of the significance threshold for the stressed MA(5) series. The type I error probability of the asymptotic test is always greater than the one of the bootstrap test for a given significance threshold; when the significance threshold is lower than 0.05 it is also above the identity line and for a significance threshold lower than 0.015 the identity line falls below the 95% confidence interval of the error probability for the asymptotic test though stays very close to its lower boundary. At the same time, the type II error probability of the asymptotic test is always lower than the one of the bootstrap test for a given significance threshold, though it is always higher than 90%, suggesting the test will conservatively fail to reject the null in many genuine cases that the alternative hypothesis truly holds. Of course, the reduced power is a consequence of the intentionally weak correlation structure imposed.

Despite being subject to series of very weak correlation structure, the asymptotic test still behaved reasonably, with adequate conservatism for all but very low significance thresholds (\(\alpha \le 0.02\), when the lower 95% confidence bound goes above the identity line. The bootstrap test remained adequate even for this low significance threshold, though at the cost of an even reduced power.

Performance of the tests in the stressed MA(5). Lines have the same meaning as in Fig. 1

All simulations so far assumed constant volatility. In empirical applications, volatility is known vary over time and to exhibit serial dependence (Cont 2001). To verify the effect of a non-constant volatility, we have changed the baseline MA(5) case so that the error variance follows a GARCH(1,1) process with coefficients \(\alpha =1\) (the constant term), \(\beta =0.89\) (the term that multiplies the lagged squared error) and \(\gamma =0.1\) (the term that multiplies the lagged variance). The choice of GARCH parameters ensured the series was still unconditionally covariance stationary. All other parameters of the baseline MA(5) model remained unchanged.

We note that, while the power of the test reduced slightly compared to the baseline case, comparing Figs. 4 and 5 one can see that the impact of a GARCH volatility in the power of the test was smaller than the impact of reduced correlation structure. The test remained very adequate even in the presence of GARCH volatility and Type I errors stayed either within or under the identity line for all significance thresholds, indicating adequate conservatism.

Performance of the tests in the MA(5)-GARCH(1,1) series. Lines have the same meaning as in Fig. 1

Finally, to ascertain a boundary scenario where the main assumption of covariance stationarity is not strictly satisfied, we have changed the baseline MA(5) case so that the error variance follows a Markov Switching between two equally probably GARCH(1,1) states. Such framework is widely popular in financial market research and is accepted to be a relevant deviation from time series models that has been extensively observed in empirical applications. In one of the states, the error variance follows a GARCH(1,1) process with coefficients \(\alpha =1\), \(\beta =0.89\) and \(\gamma =0.1\) and in the other state the error variance follows a GARCH(1,1) process coefficients \(\alpha =3\), \(\beta =0.95\) and \(\gamma =0.049\). All other parameters of the baseline MA(5) model remained unchanged.

We again note that, while the power of the test reduced slightly compared to the baseline case, comparing Figs. 4 and 6 one can see that the impact of a GARCH volatility in the power of the test was smaller than the impact of reduced correlation structure. The test remained very adequate even in the presence of Regime Switching GARCH volatility and Type I errors stayed either within or under the identity line for all significance thresholds, indicating adequate conservatism.

Performance of the tests in the MA(5)-RSGARCH(1,1) series. Lines have the same meaning as in Fig. 1

5 Empirical Applications

This section applies to real financial data the test given by Theorem 4 and validates the performance of trading strategies created based on Theorem 5. The dataset used comprised of the time series of the prices for the MSCI Equity Total Return Indices (in US Dollar, price returns plus dividend returns net of withholding taxes) for 21 different countries and the nominal exchange rates of 16 different currencies, all of them traded against the US Dollar. Table 1 lists the equity indices and currencies studied.

For all the 37 financial instruments the daily log-returns (defined as the logarithm of the closing price at the end of day d + 1 divided by the closing price at the end of day d) were obtained based on the price series from 31-Dec-1998 to 31-May-2017, yielding 4803 observations per instrument (and 177,711 observations in total). To conduct further analyses at different levels of observational noise, several other sampling frequencies were studied. The same log-returns were aggregated to weekly log-returns (defined as the logarithm of the closing price at the end of the Friday of week w + 1 divided by the closing price at the end of the Friday of week w), yielding 961 observations per instrument (and 35,557 observations in total); the returns were also aggregated to monthly log-returns (defined as the logarithm of the closing price at the end of the last business day of month m + 1 divided by the closing price at the end of the last business day of month m), yielding 221 observations per instrument (and 8177 observations in total). Each of the MSCI Index studied currently has at least one tradable Futures contract linked to it and many of them have also shortable ETFs linked to them, which means the any predictive model resulting out of the experiments performed in this paper can also be used to guide real equity investment strategies.

In the case of exchange rates, to accurately reflect the total returns of the investment strategies proposed, the results of the experiment include the financial returns arising out of the interest rate differential between the issuing country and the US (also known as the “rollover interest”) for each open position and also include the interest paid on the cash deposit held as margin for the currency trade.

Before testing any trading strategies, the first half of the sample described in the second paragraph of this section (corresponding to observations from 31-Dec-1999 to 31-Mar-2008) was used to calculate the test statistic defined in Equation 3.2 and detect the presence of significant serial dependence in individual assets. Tables 1 and 2 show the values of the serial correlation coefficient calculated as Remark 6 for forecast horizons of one day, 1 week and 1 month (\(n=5\) in all cases).

The statistical significances in the tables were obtained using the test procedure described in Theorem 4. For control, the tables also display the Pearson correlation coefficient between the average return of the n previous periods and the 1-step ahead return. The significances of the Pearson correlation were estimated using a Fisher transform and its limiting Gaussian approximation. The rationale for applying the test only on the first half of the sample is to ensure there is no fitting bias in the trading model, whose performance is evaluated using the second half of the sample and whose equities and currencies traded are selected based on the test results.

It can be seen that the correlation coefficient proposed in this paper and its associated test are more powerful than the Pearson estimate. Notably, only in the case of New Zealander equities that the Pearson estimate was able to detect significant correlation without our proposed method not simultaneously finding any significant correlation, whilst for many equity indices only our proposal could find a significant coefficient when the Pearson estimate was not significantly different than zero. Another interesting finding is that even though most of the equity indices had positive dependence, this was not always the case. For example, US, UK and French equities bucked the trend and exhibited significant negative dependence at a 1-day forecast horizon and Russian equities had significant negative dependence at a 1-month forecast horizon. Interestingly enough, the markets that had significant negative 1-day dependence had significant positive 1-month dependence and the market that had significant negative 1-month dependence had significant positive 1-day dependence.

It can also be seen that the procedure proposed in this paper detected dependence in a mix of emerging and developed market currencies, with significant 1-day dependence being found in the Brazilian Real, Indian Rupee, Polish Zloty, Canadian Dollar and Swiss Franc in addition 1 month-dependence being found in the Euro and British Pound. Our proposal detected dependence in more currencies than the Pearson estimate could, though the Pearson estimate detected 1-day dependence on the Japanese Yen and Mexican Peso that was undetected by our proposal. However, in most cases that the dependence found is statistically significant it is of lower magnitude than with Equities and therefore less pronounced and likely to be less exploitable for economic purposes.

To validate the power of our predictive framework, the machine learning version of Algorithm 2 (described in the “Technical Appendix”) has been applied to the data described in the beginning of this section and the predictions were translated into investment decisions: buy (when the predicted sign is positive) and sell (when the predicted sign is negative). The trading strategies were tested assuming the following: (i) there are no short sale restrictions—which is most likely the case if only futures are used to trade; and (ii) if a shortable ETF is used, its borrow cost is no more than the interest on the cash received from the short sale proceeds. Initially the markets are assumed frictionless but this assumption is relaxed at the end of this section by performing a sensitivity analysis to transaction costs.

To ascertain the out-of-sample performance of the trading models proposed, five different performance metrics were calculated for all strategies and compared against the same values of a Buy and Hold benchmark portfolio. The Buy and Hold benchmark portfolio consisted of an equal weighted long position across all 21 equity indices in the case of equity strategies and an equal weighted long position across all 16 foreign currencies in the case of currency strategies. As the rollover rates are being included in the calculation of the returns, the currencies benchmark can be interpreted as a global unhedged money market investment portfolio from a US investor’s perspective.

The performance metrics used were:

-

Average monthly out-of-sample returns of a portfolio that invested equally weighted across all equities and currencies according to the underlying classification strategy, per asset class per classifier;

-

Standard Deviation of the out-of-sample monthly returns of the said portfolio;

-

Sharpe Ratio, not adjusted by the risk-free rate: simple average of the out-of-sample monthly returns divided by their respective standard deviations; and

-

Maximum Drawdown: the maximum loss from a peak to through of the cumulative monthly portfolio returns before a new peak is attained.

-

Alpha, not adjusted by the risk-free rate: the intercept of a linear regression between the returns of the strategy and the returns of the Buy and Hold portfolio.

The returns used to calculate the Sharpe Ratio and Alpha were not adjusted by the risk-free rate to keep the implementation simple and avoid an extra choice of variable (i.e. the choice of what constitutes a risk-free rate). The lack of adjustment does not change the conclusions of the present section of the paper given that these performance metrics are being used solely to compare the performance of the different strategies against the Buy and Hold without making a more general assessment of the economic value of such strategies. All performance measures are also accompanied by their standard errors calculated using the leave-one-out Jackknife method. Additionally, the Alpha was tested for statistical significance and the Type I Error probability of the Hypothesis Test that the Alpha is greater than zero is shown on all comparative performance tables.

The trading strategy given by Theorem 5 has been empirically tested using the MA and DMA predictors to obtain out-of-sample predictions for the period corresponding to the months from April-2008 to May-2017, equivalent to the second half of the entire dataset used in this study. As our predictive method only predicts one step ahead, after a prediction is made the in-sample period gets the actual return observed one step ahead appended to it whilst the first in-sample point is discarded, so that in the next iteration the algorithm is over the new in-sample period. This is repeated until the end of the out-of-sample period. Tables 3, 4, 5, 6, 7 and 8 list the returns gross of transaction costs; however, we later incorporate transaction costs into our analysis.

The strategies proposed obtained their best performance when trading equities using a 1-day forecast horizon. As Table 3 shows, the MA strategy was able to get a monthly return that was more than three times the return of the Buy and Hold strategy, with a monthly standard deviation that was almost one third and, more importantly, a maximum drawdown that was less than one sixth, meaning that the tail behaviour was even better than the average behaviour. The resulting Sharpe Ratio, when annualised, is greater than one, a level taken as very good for the practitioners. The DMA strategy, that takes both trend-following and contrarian positions, performed even better, with an 18bps gain in expected return over the MA and a reduced maximum drawdown of just 3.7%, a fraction of the Buy and Hold maximum drawdown. The resulting Sharpe Ratio, when annualised, is very high and close to two. This can be seen as evidence that, on a 1-day trading interval, the introduction of contrarian positions in the DMA strategy can have a significant improvement over the pure trend-following MA strategy. However, when applied to foreign exchange markets, both the MA and DMA trading strategies had returns close to zero with very small standard errors. This is driven by the fact that not many currencies had displayed significant correlation (as per Table 2) and the trading model only enters into positions when the correlation detected is statistically significant.

As demonstrated on Table 5, there was a reduction in performance, gross of transaction costs, when the forecast horizon lengthened from 1 day to 1 week. However, as Table 9 shows, there was a commensurate reduction in trading activity, meaning that depending on the level of transaction costs, it might be more advantageous to trade using a 1 week forecast horizon (as opposed to a 1 day forecast horizon). Whilst the average monthly return of the strategies is no longer statistically different than the Buy and Hold, they have considerable lower risk which translates into a significanly positive Alpha. The DMA trading strategy did not have a significant difference in risk-adjusted performance compared to the MA strategy, with both obtaining similar Sharpe Ratios and Alphas. This can be seen as evidence that, in longer forecast horizons, equity markets have mostly positive dependence and trend-following trading strategies alone will capture this dependency, with the inclusion of contrarian trading not adding economic value.

In the case of weekly currency trading, Table 6 shows similar performance when compared to the daily strategy (gross of transaction costs), though the DMA strategy had a slight improvement. Like in the case of the daily frequency, the Alphas generated by currency trading are not significanly different than zero, though the maximum drawdown of the DMA strategy was much lower than the one of the Buy and Hold, indicating potential use of the model for risk management and forecasting purposes.

As shown in Table 7, the equity strategies did not provide monthly returns statistically different than the one of the Buy and Hold though they did have much reduced risk, again translating into a significanly positive Alpha. This means all equity trading strategies applied to all forecast horizons proposed produced significantly positive Alphas. As it can be seen on Table 9, there was a reduction in portfolio turnover when lengthening the forecast horizon from 1 week to 1 month (from about 75% of the portfolio per month to about 25% of the portfolio per month). This reduction is most likely not enough to justify the reduction in performance for investors with access to relatively sophisticated execution methods and low execution costs, but is likely to justify the implementation of a smart-beta/semi-passive strategy, as it will yield a similar return to Buy and Hold but with a considerable reduction in market risk and limited portfolio turnover.

We remark that the currency strategies with monthly trading (shown in Table 8) also did not produce an Alpha statistically different than zero, though the significant reduction in maximum drawdown was another feature present in this case. Therefore, we conclude that the use of the strategies proposed in currency markets is not likely to add economic value on its own, but there is potential risk management and forecasting value for the underlying correlation model.

All the results so far are gross of transaction costs. The previous steps have avoided embedding the costs as part of the model given that the actual transaction costs an investor has will depend massively on a large numbers of factors that are particular to each individual investor: whether market or limit orders are used, the order execution algorithm, the latency between the server issuing the order and the exchange receiving the order, the size of the order, the choice of broker, the level of commissions and rebates are all factors affecting the final cost.

In Anand et al. (2012) it is presented a thorough descriptive analysis of trades executed in the US equity markets by 664 institutions that traded 100 times or more per month during the time period from January 1, 1999 to December 31, 2005. The authors found that the difference between the costs of the lowest quintile and the highest quintile was as high as 69 basis points. They also found that institutions in the lowest quintile were able to get negative execution shortfalls, meaning their technology, order execution policy and broker arrangements was able to add value to the gross returns, instead of removing it. Similar findings are made by Brogaard et al. (2014) where the authors claim the lowest quartile of execution costs by institutional investors in UK equities was only 0.4 basis points whilst the top quartile had costs of 27.8 basis points. Therefore, instead of fixing a single cost number, we have chosen a set of possible costs per transaction and combined it with the out-of-sample metrics provided in Tables 3, 4 5, 6 7 and 8 to determine what would be return of a given strategy if it was subject to a given level of transaction costs. To make the numbers easier to compare between themselves and with Buy and Hold, we have rescaled the net returns for all strategies by the ratio of the strategy’s monthly standard deviation and the Buy and Hold’s monthly standard deviation—i.e. all returns, net of transaction costs, are risk adjusted so that all of them have the same market risk as the Buy and Hold strategy.

The level of transaction activity for each strategy was also calculated as this is an additional input needed to calculate the net returns. Table 9 shows how many times the portfolio was entirely rebalanced over a month. It can be seen that the Daily Equities MA strategy had the highest turnover, trading on average 11.319 times the entire portfolio in a typical month. On the other hand, the Monthly FX DMA strategy had the lowest turnover, trading only 5% of the portfolio per month. FX strategies naturally had lower turnovers as the test identified less evidence of serial correlation in currencies than in equities, so it traded them less.

Table 10 shows the returns of the strategies applied to equities. The daily strategies have the best returns for investors that can achieve low trading costs. In particular, if one can achieve trading costs of 5bps per trade (a level that can be achieved for the most sophisticated investors), the EQ DMA strategy would have obtained an annualised return of more than 20% for the same level of risk that the Buy and Hold would have obtained an annualised return of 3.87%. However, even with trading costs as high as 20bps per trade (a level that is attainable even for savvy retail investors), trading the DMA strategy on a monthly basis can achieve an annualised return in the region of 10% for the same level of risk of the Buy and Hold.

Table 11 shows the returns of the strategies applied to currency trading. Given that no strategy could have a positive Alpha, it is also seen that no strategy could have a positive annualised return net of costs, though the Buy and Hold return was also negative for the period. Therefore, these strategies when applied to currencies cannot provide absolute returns on their own. Nevertheless, it is also worth noting that the lower maximum drawdown indicates some potential use of the underlying model for Global Fixed Income portfolios, where the foreign carry interest is also a component of the return, and the strategies would be acting as currency risk mitigants.

6 Concluding Remarks

This research has proposed a novel framework to deal with unstructured serial dependence by introducing a measure of dependence that only focuses on the behaviour of the sign of changes in the process. This definition also has the power to simplify the interpretation of models with several parameters like ARFIMA by establishing boundaries in the values of the parameters that can be interpreted as simply positive or negative dependence.

The definition was used to propose a statistical inference framework to detect unstructured serial dependence, which was validated both via simulation in synthetic models and in empirical data. In the case of empirical data for equity indices, the framework was able to detect significant dependence that could not be detected using a linear correlation model and was also able to feed a quantitative trading model that provided risk adjusted returns which were significantly superior to the ones of a Buy and Hold strategy and also robust to the presence of transaction costs.

References

Anand, A., Irvine, P., Puckett, A., & Venkataraman, K. (2012). Performance of institutional trading desks: An analysis of persistence in trading cost. The Review of Financial Studies, 25, 557–598.

Baltas, A. N., & Kosowski, R. (2013). Momentum strategies in futures markets and trend-following funds. Paper presented at the 2012 finance meeting EUROFIDAI-AFFI

Banerjee, A. N., & Hung, C. H. D. (2013). Active momentum trading versus passive “naive diversification”. Quantitative Finance, 13, 655–663.

Brogaard, J., Hendershott, T., Hunt, S., & Ysusi, C. (2014). High-frequency trading and the execution costs of institutional investors. The Financial Review, 49, 345–369.

Chena, Y., & Huainan, Z. (2012). Informed trading, information uncertainty, and price momentum. Journal of Banking and Finance, 36, 2095–2109.

Choudhry, R., & Garg, K. (2008). A hybrid machine learning system for stock market forecasting. World Academy of Science, Engineering and Technology: International Journal of Computer and Information Engineering, 2, 689–692.

Chung, C. F. (1996). Estimating a generalized long memory process. Journal of Econometrics, 73, 237–259.

Cont, R. (2001). Empirical properties of asset returns: Stylized facts and statistical issues. Quantitative Finance, 1, 223–236.

Corte, P. D., Kosowski, R., & Wang, T. (2016). Market closure and short-term reversal. Paper presented at the Asian Finance Association (AsianFA) 2016 conference

CSzakmary, A., Shen, Q., & Sharmac, S. C. (2010). Trend-following trading strategies in commodity futures: A re-examination. Journal of Banking and Finance, 34, 409–426.

Efron, B., & Tibshirani, R. (1993). An introduction to the bootstrap. London: Chapman and Hall.

Fama, E. (1963). Mandelbrot and the stable paretian hypothesis. Journal of Business, 36, 420–429.

Fama, E. (1965a). The behavior of stock market prices. Journal of Business, 38, 34–105.

Fama, E. (1965b). Random walks in stock market prices. Journal of Business, 21, 55–59.

Fama, E. (1970). Efficient capital markets: A review of theory and empirical work. Journal of Finance, 25, 383–417.

Granger, C. W. J., & Joyeux, R. (1980). An introduction to long-memory time series models and fractional differencing. Journal of Time Series Analysis, 1, 15–29.

Griffin, J. M., Kelly, P. J., & Nardari, F. (2010). Do market efficiency measures yield correct inferences? A comparison of developed and emerging markets. Journal of Economic Surveys, 23, 3225–3277.

H Diananda, P. (1954). The central limit theorem for m-dependent variables asymptotically stationary to second order. Mathematical Proceedings of the Cambridge Philosophical Society, 50, 287–292.

Huang, W., Nakamori, Y., & Wang, S. Y. (2005). Forecasting stock market movement direction with support vector machine. Computers and Operations Research, 32, 2513–2522.

Kohavi, R. (1995). A study of cross-validation and bootstrap for accuracy estimation and model selection. Proceedings of the Fourteenth International Joint Conference on Artificial Intelligence, 12, 1137–1143.

Lee, J., & Hong, Y. (2001). Testing for serial correlation of unknown form using wavelet methods. Econometric Theory, 17, 386–423.

Lesmonda, D. A., Schill, M. J., & Zhou, C. (2004). The illusory nature of momentum profits. Journal of Financial Economics, 71, 349–380.

Lim, K. P., & Brooks, R. (2011). The evolution of stock market efficiency over time: A survey of the empirical literature. Journal of Economic Surveys, 25, 69–108.

Lim, K. P., Luo, W., & Kim, J. H. (2013). Are us stock index returns predictable? Evidence from automatic autocorrelation-based tests. Applied Economics, 45(8), 953–962.

Moskowitz, T. J., Ooi, Y. H., & Pedersen, L. H. (2012). Time series momentum. Journal of Financial Economics, 104, 228–250.

Novy-Marx, R. (2012). Is momentum really momentum? Journal of Financial Economics, 103, 429–453.

Peters, G. W., & Shevchenko, P. V. (2015). Advances in heavy tailed risk modeling: A handbook of operational risk. New York: Wiley.

Platen, E., & Heath, D. (2006). A benchmark approach to quantitative finance. New York: Springer.

Qian, B., & Rasheed, K. (2004). Hurst exponent and financial market predictability. Paper presented at the 2004 IASTED conference on financial engineering and applications

Qian, B., & Rasheed, K. (2007). Stock market prediction with multiple classifiers. Applied Intelligence, 26, 25–33.

Urquhart, A., & McGroarty, F. (2016). Are stock markets really efficient? Evidence of the adaptive market hypothesis. International Review of Financial Analysis, 47, 420–429.

Wold, H. (1954). A study in the analysis of stationary time series. Stockholm: Almqvist and Wiksell Book Co.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.