Abstract

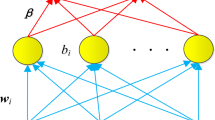

This paper presents a novel technique, the Floating Centroids Method (FCM), designed to improve the performance of conventional neural network classifiers. The partition space is the space in which a data sample is categorized after the neural network has mapped it. In the partition space, a centroid is a point that denotes the center of a class. In a conventional neural network classifier, the positions of the centroids and the relationship between centroids and classes are set manually, and the number of centroids is fixed by the number of classes. The proposed approach instead introduces many floating centroids, which are spread throughout the partition space and obtained with the K-Means algorithm. Class labels are then attached to these centroids automatically. A sample is predicted as belonging to a class if the centroid closest to its mapped point carries that class's label. Experimental results show that the proposed method performs favorably, especially in terms of training accuracy, generalization accuracy, and average F-measure.
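The decision rule described above (cluster the mapped points with K-Means, label each centroid, then classify by nearest centroid) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the neural network mapping is assumed to have been applied already (the 2-D points are hypothetical network outputs), network training is not shown, and the majority-vote labeling rule, the farthest-first K-Means initialization, and all function names are illustrative choices rather than details taken from the paper.

```python
from collections import Counter


def sq_dist(a, b):
    """Squared Euclidean distance between two points in the partition space."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(point, centroids):
    """Index of the centroid closest to `point`."""
    return min(range(len(centroids)), key=lambda i: sq_dist(point, centroids[i]))


def kmeans(points, k, iters=20):
    """Lloyd's K-Means with deterministic farthest-first initialization (assumed)."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point whose distance to its closest chosen seed is largest.
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:
            clusters[nearest(p, centroids)].append(p)
        for i, members in enumerate(clusters):
            if members:  # an empty cluster keeps its previous centroid
                centroids[i] = [sum(coord) / len(members) for coord in zip(*members)]
    return centroids


def label_centroids(points, labels, centroids):
    """Assumed labeling rule: each centroid takes the majority class of its cluster."""
    votes = [Counter() for _ in centroids]
    for p, y in zip(points, labels):
        votes[nearest(p, centroids)][y] += 1
    # Centroids that attracted no training points stay unlabeled (None).
    return [v.most_common(1)[0][0] if v else None for v in votes]


def predict(mapped_point, centroids, centroid_labels):
    """Return the label of the closest labeled centroid."""
    labeled = [i for i, lab in enumerate(centroid_labels) if lab is not None]
    best = min(labeled, key=lambda i: sq_dist(mapped_point, centroids[i]))
    return centroid_labels[best]


# Hypothetical mapped points: class "a" occupies two opposite corners and
# class "b" the other two, so the two class means nearly coincide and a
# single fixed centroid per class cannot separate them.
points = [(0, 0), (0.5, 0.2), (10, 10), (9.6, 10.3),
          (0, 10), (0.3, 9.7), (10, 0), (9.8, 0.4)]
labels = ["a", "a", "a", "a", "b", "b", "b", "b"]

centroids = kmeans(points, k=4)
centroid_labels = label_centroids(points, labels, centroids)
print(predict((1, 1), centroids, centroid_labels))  # a
print(predict((9, 1), centroids, centroid_labels))  # b
```

With four floating centroids, class "a" ends up owning two of them, one near each of its corners. This is the kind of multi-region class that a one-centroid-per-class layout handles poorly.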




Cite this article

Wang, L., Yang, B., Chen, Y. et al. Improvement of neural network classifier using floating centroids. Knowl Inf Syst 31, 433–454 (2012). https://doi.org/10.1007/s10115-011-0410-8


DOI: https://doi.org/10.1007/s10115-011-0410-8