Abstract

Variance reduction is a crucial tool for improving the slow convergence of stochastic gradient descent. Only a few variance-reduced methods, however, have yet been shown to directly benefit from Nesterov’s acceleration techniques to match the convergence rates of accelerated gradient methods. Such approaches rely on “negative momentum”, a technique for further variance reduction that is generally specific to the SVRG gradient estimator. In this work, we show for the first time that negative momentum is unnecessary for acceleration and develop a universal acceleration framework that allows all popular variance-reduced methods to achieve accelerated convergence rates. The constants appearing in these rates, including their dependence on the number of functions n, scale with the mean-squared-error and bias of the gradient estimator. In a series of numerical experiments, we demonstrate that versions of SAGA, SVRG, SARAH, and SARGE using our framework significantly outperform non-accelerated versions and compare favourably with algorithms using negative momentum.

Similar content being viewed by others

1 Introduction

We are interested in solving the following composite convex minimisation problem:

Throughout, we assume \(f_i : \mathbb {R}^m \rightarrow \mathbb {R}\) are convex and have L-Lipschitz continuous gradients for all i. We also assume \(g : \mathbb {R}^m \rightarrow \mathbb {R}\cup \{\infty \}\) is proper, lower semicontinuous, and \(\mu \)-strongly convex with \(\mu \ge 0\), but we do not require g to be differentiable. Problems of this form are ubiquitous in many fields, including machine learning, compressed sensing, and image processing (see, e.g., [11, 12, 25, 35]). Fundamental examples include LASSO [35] and matrix completion [12], where f is a least-squares loss and g is the \(\ell _1\) or nuclear norm, respectively, and sparse logistic regression, where f is the logistic loss and g is the \(\ell _1\) norm.

One well-studied algorithm that solves (1) is the forward-backward splitting algorithm [13, 29]. This method has a worst-case convergence rate of \(\mathcal {O}\left( 1/T \right) \) when F is not strongly convex, and when F is \(\mu \)-strongly convex, it converges linearly with a rate of \(\mathcal {O}\left( (1 + \kappa ^{-1})^{-T} \right) \), where \(\kappa {\mathop {=}\limits ^{{ \text{ def }}}}L / \mu \) is the condition number of F. The inertial forward-backward splitting algorithm [9] converges at an even faster rate of \(\mathcal {O}\left( 1/T^2 \right) \) without strong convexity and a linear rate of \(\mathcal {O}\left( (1+\kappa ^{-1/2})^{-T}\right) \) when F is strongly convex. The inertial forward-backward method is able to achieve these optimal convergence rates because it incorporates momentum, using information from previous iterates to adjust the current iterate.

Although the inertial forward-backward algorithm converges quickly, it requires access to the full gradient \(\nabla f\) at each iteration, which can be costly, for instance, when n is large. In many applications, common problem sizes are so large that computing \(\nabla f\) is prohibitively expensive. Stochastic gradient methods exploit the separable structure of f, using the gradient of a few of the components \(\nabla f_i\) to estimate the full gradient at the current iterate. In most cases, the complexity of computing \(\nabla f_i\) for one i is 1/n-times the complexity of computing the full gradient, so stochastic gradient methods generally have a much smaller per-iteration complexity than full-gradient methods. Moreover, it has recently been shown that the optimal convergence rates of stochastic gradient methods are \(\mathcal {O}\left( n/T^2 \right) \) without strong convexity and \(\mathcal {O}\left( \theta _S^{-T} \right) \) with \(\theta _S {\mathop {=}\limits ^{{ \text{ def }}}}1 + \sqrt{\frac{\mu }{L n}}\) when g is \(\mu \)-strongly convex, matching the optimal dependence on T and \(\kappa \) of full-gradient methods [37].Footnote 1 Stochastic gradient methods have undergone several revolutions to improve their convergence rates before achieving this lower bound. We summarise these revolutions below, beginning with traditional stochastic gradient descent.

Stochastic Gradient Descent (SGD). Stochastic gradient descent, dating back to [32], uses the gradients \(\nabla f_j, \ \forall j \in J_k \subset \{1,2,\ldots ,n\}\) to estimate the full gradient. The mini-batch \(J_k\) is an index set chosen uniformly at random from all subsets of \(\{ 1,2,\ldots ,n \}\) with cardinality \(b {\mathop {=}\limits ^{{ \text{ def }}}}|J_k|\). When \(b \ll n\), the per-iteration complexity of stochastic gradient descent is much less than full-gradient methods. However, the per-iteration savings come at the cost of a slower convergence rate, as SGD converges at a rate of \(\mathcal {O} (1/\sqrt{T})\) in the worst case. Still, SGD outperforms full-gradient methods on many problems, especially if a low-accuracy solution is acceptable.

Variance Reduction. Variance-reduced estimators use gradient information from previous iterates to construct a better estimate of the gradient at the current step, ensuring that the mean-squared error of these estimates decreases as the iterations increase. Variance-reduction improves the convergence rates of stochastic gradient methods, but either have a higher per-iteration complexity or have larger storage requirements than SGD. The two most popular variance-reduced algorithms are SVRG [21] and SAGA [15], which use the following estimators to approximate \(\nabla f(x_{k+1})\):

In SVRG, the full gradient \(\nabla f({\widetilde{x}})\) is computed every \(m \approx 2n\) iterations, and \(\nabla f({\widetilde{x}})\) is stored and used for future gradient estimators. SAGA takes a similar approach, storing n past stochastic gradients, and updating the stored gradients so that \(\nabla f_j(\varphi _{k+1}^j) = \nabla f_j(x_{k+1})\). In this work, we consider a variant of SVRG where the full gradient is computed at every iteration with probability \(1/p \in (0,1]\) rather than deterministically computing the full gradient every 2n iterations.

SVRG, SAGA, and related variance-reduced methods converge at a rate of \(\mathcal {O} \left( n/T \right) \) when no strong convexity is present. With strong convexity, these algorithms enjoy linear convergence, with a rate of \(\mathcal {O} ( \left( 1 + (n + \kappa )^{-1} \right) ^{-T} )\). Although these convergence rates are significantly faster than the rate of SGD, they do not match the asymptotic convergence rates of accelerated first-order methods, converging like \(\mathcal {O}\left( n/T^2 \right) \) without strong convexity and \(\mathcal {O}( (1+(n \kappa )^{-1/2})^{-T} )\) with strong convexity.

Variance Reduction with Bias. SAGA and SVRG are unbiased gradient estimators because they satisfy \(\mathbb {E}_k{\widetilde{\nabla }}_{k+1} = \nabla f(x_{k+1})\), where \(\mathbb {E}_k\) is the expectation conditioned on the first k iterates. There are several popular variance-reduced algorithms that use biased gradient estimators [27, 33]. In [16], the authors develop a framework for proving convergence guarantees for biased methods, suggesting that the convergence rates of biased stochastic gradient estimators depend on the sum of two terms:

These terms are the mean-squared error (MSE) of the gradient estimator and the “bias term”, respectively. The authors also show that recursive gradient estimators such as SARAH [27] and SARGE [16] minimise these terms better than other biased or unbiased estimators, leading to better convergence rates in some settings. The SARAH gradient estimator is

As with SVRG, we consider a slight variant of the SARAH estimator in this work, where we compute the full gradient at every step with probability 1/p. The SARGE gradient estimator is similar to the SAGA estimator.

where the variables \(\psi _k^i\) follow the update rule \(\psi _{k+1}^j = \nabla f_j(x_{k+1}) - \left( 1 - \frac{b}{n}\right) \) \(\nabla f_j (x_k)\) for all \(j \in J_k\), and \(\psi _{k+1}^i = \psi _k^i\) otherwise. Like SAGA, SARGE uses stored gradient information to avoid having to compute the full gradient. These estimators differ from SAGA and SVRG because they are biased (i.e., \(\mathbb {E}_k{\widetilde{\nabla }}_{k+1} \not = \nabla f(x_{k+1})\). Many works have recently shown that algorithms using the SARAH or SARGE gradient estimators achieve faster convergence rates than algorithms using other estimators in certain settings. Importantly, these recursive gradient methods produce algorithms that achieve the oracle complexity lower bound for non-convex composite optimisation [16, 17, 30, 36, 42]. They have not yet been shown to achieve optimal convergence rates for convex problems.

Variance Reduction with Negative Momentum. Starting with Katyusha [2] and followed by many others [1, 3, 4, 22, 34, 40, 41], a family of stochastic gradient algorithms have recently emerged that achieve the optimal convergence rates implied by Woodworth and Srebro [37]. There are two components to these algorithms that make this acceleration possible. First, these algorithms incorporate momentum into each iteration, either through linear coupling [6], as in the case of [1,2,3,4, 40], or in a more traditional manner reminiscent of Nesterov’s accelerated gradient descent [34, 41]. Second, these algorithms incorporate an “anchor-point” into their momentum updates that supposedly softens the negative effects of bad gradient evaluations. Almost all of these algorithms are an accelerated form of SVRG with updates of the form

using traditional acceleration or linear coupling, respectively (\(z_k\) and \(y_k\) are as defined in Algorithm 1, and \(\tau _k, \tau _1, \tau _2 \in [0,1]\)). We see that these updates “attract” the current iterate toward a “safe” point, \({\widetilde{x}}\), where we know the full gradient. Because of this “attractive” rather than “repulsive” quality, updates of this type have been termed “negative momentum”.

There are several issues with negative momentum. Most importantly, negative momentum is algorithm-specific. Unlike Nesterov’s method of momentum or linear coupling, negative momentum cannot be applied to other stochastic gradient algorithms. SAGA, for example, cannot be accelerated using negative momentum of this form, because there does not exist a point \({\widetilde{x}}\) where we compute the full gradient (however, see [40]). Also, numerical experiments show that negative momentum is often unnecessary to achieve acceleration (see the discussion in [2] or Sect. 7, for example), suggesting that acceleration is possible without it.

Other Accelerated Methods. Outside of the family of algorithms using negative-momentum, there exist many stochastic gradient methods that achieve near-optimal convergence rates, including Catalyst [24] and RPDG [23]. Catalyst’s convergence rates are a logarithmic factor worse than Katyusha’s when the objective is strongly convex or smooth. RPDG achieves optimal convergence rates in the strongly convex setting, matching Katyusha’s rate. When strong convexity is not present, RPDG achieves optimal rates up to a logarithmic factor. We include further discussion of these and other related works in Sect. 3.

Contributions. In this work, we provide a framework to accelerate many stochastic gradient algorithms that does not require negative momentum. We introduce the MSEB property, a property that implies natural bounds on the bias and MSE of a gradient estimator, and we prove accelerated convergence rates for all MSEB gradient estimators. As special cases, we show that incorporating the SAGA, SVRG, SARAH, and SARGE gradient estimators into the framework of Algorithm 1 creates a stochastic gradient method with an \(\mathcal {O}\left( 1 / T^2 \right) \) convergence rate without strong convexity, and a linear convergence rate that scales with \(\sqrt{\kappa }\) when strong convexity is present, achieving the optimal convergence rates in both cases up to a factor of n depending on the bias and MSE of the estimator.

Roadmap. We introduce our algorithm and state our main result in Sect. 2. We compare our results to existing work in Sect. 3. The next four sections are devoted to proving our main results. In Sect. 4, we review elementary results on the subdifferential relation, results on the proximal operator, and lemmas from convex analysis. We prove a general inequality for accelerated stochastic gradient methods using any stochastic gradient estimator in Sect. 5. This inequality implies that many stochastic gradient methods can be accelerated using our momentum scheme; to prove an accelerated convergence rate for a specific algorithm, we only need to apply an algorithm-specific bound on the MSE and bias of the gradient estimator. We do this for the SAGA, SVRG, SARAH, and SARGE gradient estimators in Sect. 6. Finally, in Sect. 7, we demonstrate the performance of our algorithms in numerical experiments.

2 Algorithm and main results

The algorithm we propose is outlined in Algorithm 1. Algorithm 1 takes as input any stochastic gradient estimator \({\widetilde{\nabla }}_{k+1}\), so it can be interpreted as a framework for accelerating existing stochastic gradient methods. This algorithm incorporates momentum through linear coupling [6], but is related to Nesterov’s accelerated gradient method after rewriting \(x_{k+1}\) as follows:

With \(\tau _k = 1\), there is no momentum, and the momentum becomes more aggressive for smaller \(\tau _k\). Although linear coupling provides the impetus for our acceleration framework, similar acceleration schemes appear in earlier works, including Auslender and Teboulle [8], and Ghadimi and Lan, 2016 [19].

We show that as long as the MSE and bias of a stochastic gradient estimator satisfy certain bounds and the parameters \(\gamma _k\) and \(\tau _k\) are chosen correctly, Algorithm 1 converges at an accelerated rate. There are three principles for choosing \(\gamma _k\) and \(\tau _k\) so that Algorithm 1 achieves acceleration.

-

1.

The step size \(\gamma _k\) should be small, roughly \(\mathcal {O}\left( 1 / n \right) \) with the exact dependence on n decreasing with larger MSE and bias of the gradient estimator.

-

2.

On non-strongly convex objectives, the step size should grow sufficiently slowly, so that \(\gamma _k^2 \left( 1 - \rho \right) \le \gamma _{k-1}^2 \left( 1 - \frac{\rho }{2}\right) \) with \(\rho = \mathcal {O} \left( 1 / n \right) \) decreasing with larger MSE and bias.

-

3.

The momentum should become more aggressive with smaller step sizes, with \(\tau _k = \mathcal {O}\left( \frac{1}{n \gamma _k}\right) \).

For strongly convex objectives, \(\gamma _k\) and \(\tau _k\) can be kept constant.

For Algorithm 1 to converge, the stochastic gradient estimator must have controlled bias and MSE. Specifically, we require the estimator to satisfy the MSEB property,Footnote 2 introduced below.

Definition 1

For any sequence \(\{x_{k+1}\}\), let \({\widetilde{\nabla }}_{k+1}\) be a stochastic gradient estimator generated from the points \(\{x_{\ell +1}\}_{\ell = 0}^k\). The estimator \({\widetilde{\nabla }}_{k+1}\) satisfies the MSEB\((M_1,M_2,\rho _M,\) \(\rho _B, \rho _F)\) property if there exist constants \(M_1, M_2 \ge 0\), \(\rho _M,\rho _B,\rho _F \in (0,1]\), and sequences \(\mathcal {M}_k\) and \(\mathcal {F}_k\) satisfying

and

On a high-level, the MSEB property guarantees that the bias and MSE of the gradient estimator decrease sufficiently quickly with k.

Remark 1

In [10], the authors study the convergence of unbiased stochastic gradient methods under first- and second-moment bounds on the gradient estimator. The bounds implied by the MSEB property are similar, but with the crucial difference that they are non-Markovian; we allow our bound on \(\mathcal {M}_k\) to depend on all preceding iterates, not just \(x_k\).

In this work, we show that most existing stochastic gradient estimators satisfy the MSEB property, including SAGA, SVRG, SARAH, SARGE, and the full gradient estimator. We list their associated parameters in the following propositions.

Proposition 1

The full gradient estimator \({\widetilde{\nabla }}_{k+1} = \nabla f(x_{k+1})\) satisfies the MSEB property with \(M_1 = M_2 = 0\) and \(\rho _M = \rho _B = \rho _F = 1\).

Proof

The bias and MSE of the full gradient estimator are zero, so it is clear these parameter choices satisfy the bounds in the MSEB property. \(\square \)

Although trivial, Proposition 1 allows us to show that our analysis recovers the accelerated convergence rates of the inertial forward-backward algorithm as a special case. The MSEB property applies to the SAGA and SVRG estimators non-trivially.

Proposition 2

The SAGA gradient estimator (3) satisfies the MSEB property with \(M_1 = \mathcal {O} ( n/b^2 )\), \(\rho _M = \mathcal {O} ( b/n )\), \(M_2 = 0\), and \(\rho _B = \rho _F = 1\). Setting \(p = \mathcal {O}( n / b )\), the SVRG gradient estimator (2) satisfies the MSEB property with the same parameters.

We prove Proposition 2 in “Appendix C”. We are able to choose \(\rho _B = 1\) for the SAGA and SVRG gradient estimators because they are unbiased, and we can choose \(M_2 = 0\) and \(\rho _F = 1\) for these estimators because they admit Markovian bounds on their variance. This is not true for SARAH and SARGE, but these estimators are still compatible with our framework. We prove Propositions 3 and 4 in “Appendices D and E”, respectively.

Proposition 3

Setting \(p = \mathcal {O}( n )\), the SARAH gradient estimator (4) satisfies the MSEB property with \(M_1 = \mathcal {O}(1)\), \(M_2 = 0\), \(\rho _M = \mathcal {O}( 1 / n )\), \(\rho _B = \mathcal {O}(1/n)\), and \(\rho _F = 1\).

Proposition 4

The SARGE gradient estimator (5) satisfies the MSEB property with \(M_1 = \mathcal {O}(1/n)\), \(M_2 = \mathcal {O}( 1/n^2 )\), \(\rho _M = \mathcal {O}(b/n)\), \(\rho _B = \mathcal {O}(b/n)\), and \(\rho _F = \mathcal {O} (b/n)\).

All gradient estimators satisfying the MSEB property can be accelerated using the framework of Algorithm 1, as the following two theorems guarantee.

Theorem 1

(Acceleration Without Strong Convexity) Suppose the stochastic gradient estimator \({\widetilde{\nabla }}_{k+1}\) satisfies the MSEB\((M_1,M_2,\) \(\rho _M,\rho _B,\rho _F)\) property. Define the constants

With

and \(\nu \ge \max \left\{ 0,\frac{2-6 \rho }{\rho } \right\} \), set \(\gamma _k = \frac{k+\nu +4}{2 c L}\) and \(\tau _k = \frac{1}{c L \gamma _k}\). After T iterations, Algorithm 1 produces a point \(y_T\) satisfying the following bound on its suboptimality:

where

A similar result gives an accelerated linear convergence rate when strong convexity is present.

Theorem 2

(Acceleration With Strong Convexity)

Suppose the stochastic gradient estimator \({\widetilde{\nabla }}_{k+1}\) satisfies the MSEB\((M_1,M_2,\) \(\rho _M,\rho _B,\rho _F)\) property and g is \(\mu \)-strongly convex with \(\mu > 0\). With the constants \(\varTheta _1, \varTheta _2, c\), and \(\nu \) set as in Theorem 1, set \(\gamma = \min \{ \frac{1}{\sqrt{\mu c L}}, \frac{\rho }{2 \mu } \}\) and \(\tau = \mu \gamma \). After T iterations, Algorithm 1 produces a point \(z_T\) satisfying the following bound:

where

Remark 2

Although we prove accelerated convergence rates for many popular gradient estimators, the generality of Theorems 1 and 2 allows our results to extend easily to gradient estimators not considered in this work as well. These include, for example, the gradient estimators considered in [20].

Remark 3

With some manipulation, we see that these rates with \(c = \nu = \mathcal {O}(n)\) are similar to the rates proved for Katyusha. In [2], the author shows that in the non-strongly convex case, Katyusha satisfies

Recall that Katyusha follows the algorithmic framework of SVRG; S denotes the epoch number, \({\widetilde{x}}_S\) the point where the full gradient was computed at the beginning of epoch S, and \(P = \mathcal {O}(n)\) is the epoch length. In our notation, \(S = T/P = \mathcal {O}\left( T/n \right) \). Theorem 1 with \(c = \nu = \mathcal {O}(n)\) shows that Algorithm 1 achieves a similar convergence rate of

In the strongly convex case, an appropriately adapted version of Katyusha satisfies

Similarly, with \(c = \rho = \mathcal {O}( n )\), Theorem 2 shows that the iterates of Algorithm 1 satisfy

which again matches the rate of Katyusha. Of course, not all stochastic gradient estimators satisfy the bounds necessary to set \(c = \nu = \rho = \mathcal {O}( n )\), so these optimal rates are conditional on being able to construct an “optimal estimator”. SAGA, SVRG, SARAH, and SARGE all require c to be slightly larger than \(\mathcal {O}(n)\).

The proofs of Theorems 1 and 2 use a linear coupling argument adapted from [6], but we use a different adaptation than the one in [2] used to prove convergence rates for Katyusha. To explain the differences between our approach and existing approaches, let us give a high-level description of linear coupling and the generalisation used in [2].

In [6], the authors suggest that gradient descent and mirror descent can be coupled to create an accelerated algorithm. We do not discuss gradient descent and mirror descent in detail (for this, see [6]), but the main idea of linear coupling can be understood from only two bounds arising from these algorithms. For the purpose of this argument, suppose \(g \equiv 0\), so that \(F \equiv f\). Gradient descent with step size \(\eta \) satisfies the following bound on the decrease of the objective (equation (2.1) in [6]):

This bound shows that gradient descent is indeed a descent method; it is guaranteed to make progress at each iteration. The iterates of mirror descent using step size \(\gamma \) satisfy a bound on the sub-optimality of each iterate (equation (2.2) in [6]).

While gradient descent is guaranteed to make progress proportional to \(\Vert \nabla f(x_k)\Vert ^2\) each iteration, mirror descent potentially introduces an “error” that is proportional to \(\Vert \nabla f(x_k)\Vert ^2\). Linear coupling takes advantage of this duality. Loosely speaking, by combining the sequence of iterates produced by gradient descent with the sequence produced by mirror descent, the guaranteed progress of gradient descent balances the potential error introduced by mirror descent, accelerating convergence.

This argument does not immediately hold for stochastic gradient methods. This is because in addition to the norm \(\Vert \nabla f(x_k)\Vert ^2\) arising in inequalities (6) and (7), we also get the MSE of our gradient estimator \(\Vert {\widetilde{\nabla }}_k - \nabla f(x_k)\Vert ^2\) as well as a “bias term”. In the stochastic setting, analogues of inequalities (6) and (7) read

where the last inequality is Young’s, and

If the MSE or bias term is too large, the gradient step is no longer a descent step, and the progress does not balance the “error terms” in each of these inequalities, so we cannot expect linear coupling to offer any acceleration. This problem with the MSE and bias term exists for non-accelerated algorithms as well, and all analyses of stochastic gradient methods bound the effect of these terms, but in different ways. Katyusha and other accelerated algorithms in this family incorporate negative momentum to cancel part of the MSE. In contrast, analyses of non-accelerated algorithms do not try to cancel any of the variance, but show that the variance decreases fast enough so that it does not affect convergence rates.

3 Related work

Besides Katyusha, there are many algorithms that use negative momentum for acceleration. In [34], the authors consider an accelerated version of SVRG that combines negative momentum with Nesterov’s momentum to achieve the optimal \(\sqrt{\kappa }\) dependence in the strongly convex case. This approach to acceleration is almost the same as Katyusha, but uses a traditional form of Nesterov’s momentum instead of linear coupling. MiG [41] is another variant of these algorithms, corresponding to Katyusha with a certain parameter set to zero. VARAG is another approach to accelerated SVRG using negative momentum. VARAG achieves optimal convergence rates in the non-strongly convex and strongly convex settings under the framework of a single algorithm, and it converges linearly on problems that admit a global error bound, a quality that other algorithms have not yet been shown to possess [22].

The only direct acceleration of a SAGA-like algorithm is SSNM from [40]. Using the notation of (3), SSNM chooses a point from the set \(\{\varphi _k^i\}_{i=1}^n\) uniformly at random, and uses this point as the “anchor point” for negative-momentum acceleration. Although SSNM admits fast convergence rates, there are a few undesirable qualities of this approach. SAGA has heavy storage requirements because it must store n gradients from previous iterations, and SSNM exacerbates this storage problem by storing n points from previous iterations as well. SSNM must also compute two stochastic gradients each iteration, so its per-iteration computational cost is similar to SVRG and Katyusha, and always higher than SAGA’s.

Many algorithms for non-convex optimisation also use negative momentum for acceleration. KatyushaX [3] is a version of Katyusha adapted to optimise sum-of-non-convex objectives. To achieve its acceleration, KatyushaX uses classical momentum and a “retraction step”, which is effectively an application of negative momentum (this relationship is acknowledged in [3] as well). Natasha [1] and Natasha2 [4] are accelerated algorithms for finding stationary points of non-convex objectives. Both algorithms employ a “retraction step” that is similar to negative momentum [1].

There are also many accelerated stochastic gradient algorithms that do not use negative momentum. In [28], the author applies Nesterov’s momentum to SVRG without any sort of negative momentum, proving a linear convergence rate in the strongly convex regime. However, the proven convergence rate is suboptimal, as it implies even worse performance than SVRG when the batch size is small and worse performance than accelerated full-gradient methods when the batch size is close to n. Our results show that a particular application of Nesterov’s momentum to SVRG does provide acceleration.

Point-SAGA [14] is another SAGA-like algorithm that achieves optimal convergence rates, but point-SAGA must compute the proximal operator corresponding to F rather than the proximal operator corresponding to g. This is not possible in general, even if the proximal operator corresponding to g is easy to compute, so point-SAGA applies to a different class of functions than the class we consider in this work.

There are also many algorithms that indirectly accelerate stochastic gradient methods. This class of algorithms include Catalyst [24], APPA [18], and the primal-dual methods in [39]. These algorithms call a variance-reduced stochastic gradient method as a subroutine, and provide acceleration using an inner-outer loop structure. These algorithms are often difficult to implement in practice due to the difficulty of solving their inner-loop subproblems, and they achieve a convergence rate that is only optimal up to a logarithmic factor.

4 Preliminaries

In this section, we present some basic definitions and results from optimisation and convex analysis. Much of our analysis involves Bregman divergences. The Bregman divergence associated with a function h is defined as

where \(\xi \in \partial h(x)\) and \(\partial \) is the subdifferential operator. If h is differentiable, we drop the superscript \(\xi \) as the subgradient is unique. The function h is convex if and only if \(D^\xi _h(y,x) \ge 0\) for all x and y. We say h is \(\mu \)-strongly convex with \(\mu \ge 0\) if and only if

Bregman divergences also arise in the following fundamental inequality.

Lemma 1

([26], Thm. 2.1.5) Suppose f is convex with an L-Lipschitz continuous gradient. We have for all \(x,y \in \mathbb {R}^m\),

Lemma 1 is equivalent to the following result, which is more specific to our analysis due to the finite-sum structure of the smooth term in (1).

Lemma 2

Let \(f(x) = \tfrac{1}{n} \sum _{i=1}^n f_i(x)\), where each \(f_i\) is convex with an L-Lipschitz continuous gradient. Then for every \(x, y \in \mathbb {R}^m\)

Proof

This follows from applying Lemma 1 to each component \(f_i\). \(\square \)

The proximal operator is defined as

The proximal operator is also defined implicitly as \(y - \text {prox}_g(y) \in \partial g(\text {prox}_g\) (y)). From this definition of the proximal operator, the following standard inequality is clear.

Lemma 3

Suppose g is \(\mu \)-strongly convex with \(\mu \ge 0\), and suppose \(z = \text {prox}_{\eta g} \left( x - \eta d \right) \) for some \(x, d \in \mathbb {R}^m\) and constant \(\eta \). Then, for any \(y \in \mathbb {R}^m\),

Proof

By the strong convexity of g,

From the implicit definition of the proximal operator, we know that \(\frac{1}{\eta } (z - x) + d \in \partial g(z)\). Therefore,

Multiplying by \(\eta \) and rearranging yields the assertion. \(\square \)

5 The acceleration framework

To apply the linear coupling framework, we must couple stochastic analogues of (6) and (7) to construct a lower bound on the one-iteration progress of Algorithm 1.

Lemma 4

(One-Iteration Progress) The following bound describes the progress made by one iteration of Algorithm 1.

Proof

We use a linear coupling argument. The extrapolated iterate \(x_{k+1}\) can be viewed as a convex combination of an iterate produced from mirror descent (namely, \(z_k\)) and one from gradient descent (\(y_k\)). This allows us to provide two bounds on the term \(f(x_{k+1}) - f(x^*)\): one is a regret bound inspired by the classical analysis of mirror descent, and the other is inspired by the traditional descent guarantee of gradient descent.

Inequality \(\textcircled {1}\) uses the convexity of f, \(\textcircled {2}\) follows from the fact that \(x_{k+1} = \tau _k z_k + (1 - \tau _k) y_k\), and \(\textcircled {3}\) uses \(x_{k+1} - y_{k+1} = \tau _k (z_k - z_{k+1})\). We proceed to bound the inner product \(\langle {\widetilde{\nabla }}_{k+1}, z_{k+1} - x^* \rangle \) involving the sequence \(z_{k+1}\) using a regret bound from mirror descent, and we bound the term \(\langle {\widetilde{\nabla }}_{k+1}, x_{k+1} - y_{k+1} \rangle \) using an argument similar to the descent guarantee of gradient descent.

By Lemma 3 with \(z = z_{k+1}\), \(x = z_k\), \(y = x^*\), \(d = {\widetilde{\nabla }}_{k+1}\), and \(\eta = \gamma _k\),

For the other term,

Inequality \(\textcircled {1}\) follows from the Lipschitz continuity of \(\nabla f_i\), \(\textcircled {2}\) is Young’s inequality, and \(\textcircled {3}\) uses the convexity of g and the update rule \(y_{k+1} = \tau _k z_{k+1} + (1-\tau _k) y_k\). Combining inequalities (9) and (10) with (8) and rearranging yields the assertion. \(\square \)

Lemma 4 completes the linear coupling part of our argument. If not for the MSE and bias terms, we could telescope this inequality as in [6] and prove an accelerated convergence rate. As with all analyses of stochastic gradient methods, we need a useful bound on these qualities of the estimator.

Existing analyses of unbiased stochastic gradient methods bound the variance term by a pair of terms that telescope over several iterations, showing that the variance tends to zero with the number of iterations. It is difficult to generalise these arguments to accelerated stochastic methods because one must prove that the variance decreases at an accelerated rate that is inconsistent with existing variance bounds. In the analysis of Katyusha, negative momentum cancels part of the variance term, leaving telescoping terms that decrease at an accelerated rate. Without negative momentum, we must handle the variance term differently.

In the inequality of Lemma 4, we have two non-positive terms:

This makes our strategy clear: we must bound the MSE and bias terms by terms of the form \(\Vert x_{k+1} - y_{k+1}\Vert ^2\) and \(D_f(y_k,x_{k+1})\). The following two lemmas use the MSEB property to establish bounds of this form.

Lemma 5

(Bias Term Bound) Suppose the stochastic gradient estimator \({\widetilde{\nabla }}_{k+1}\) satisfies the MSEB\((M_1\), \(M_2,\) \(\rho _M,\rho _B,\rho _F)\) property, let \(\rho = \min \{ \rho _M, \rho _B, \rho _F \}\), and let \(\{\sigma _k\}\) and \(\{s_k\}\) be any non-negative sequences satisfying \(\sigma _k s_k^2 \left( 1 - \rho \right) \le \sigma _{k-1} s^2_{k-1} \left( 1 - \frac{\rho }{2} \right) \) and \(\sigma _k \left( 1 - \rho \right) \le \sigma _{k-1} \left( 1 - \frac{\rho }{2} \right) \). The bias term can be bounded as

Proof

Because \(z_k\) depends only on the first \(k-1\) iterates, we can use the MSEB property to say

Equality \(\textcircled {1}\) is due to the MSEB property. We are able to pass the conditional expectation into the second inner product in \(\textcircled {2}\) because \(z_{k-1}\) is independent of \({\widetilde{\nabla }}_k\) conditioned on the first \(k-2\) iterates, and inequality \(\textcircled {3}\) is Young’s. We can repeat this process once more, applying the MSEB property to obtain

Inequality \(\textcircled {4}\) uses our hypotheses on the decrease of \(\sigma _k s_k^2\) and \(\sigma _k\). This is a recursive inequality, and expanding the recursion yields

The above uses the fact that \({\widetilde{\nabla }}_1 = \nabla f(x_1)\), so the inner product \(\langle \nabla f(x_{1}) - {\widetilde{\nabla }}_{1},\) \( z_0 - x^* \rangle = 0\). Taking the sum over the iterations \(k=0\) to \(k=T-1\), we apply Lemma 7 to simplify this bound.

Inequality \(\textcircled {1}\) follows from Lemma 7, and equality \(\textcircled {2}\) is the identity \(y_{k+1} - x_{k+1} = \tau _k (z_{k+1} - z_k)\). \(\square \)

This bound on the bias term includes the MSE, so to complete our bound on the bias term, we must combine Lemma 5 with the following lemma.

Lemma 6

(MSE Bound) Suppose the stochastic gradient estimator \({\widetilde{\nabla }}_{k+1}\) satisfies the MSEB\((M_1,M_2,\rho _M,\) \(\rho _B,\rho _F)\) property, let \(\rho = \min \{ \rho _M, \rho _B, \rho _F \}\), and let \(\{s_k\}\) be any non-negative sequence satisfying \(s^2_k \left( 1 - \rho \right) \le s^2_{k-1} \left( 1 - \frac{\rho }{2} \right) \). For convenience, define \(\varTheta _2 = \frac{M_1 \rho _F + 2 M_2}{\rho _M \rho _F}\). The MSE of the gradient estimator is bounded as

Proof

First, we derive a bound on the sequence \(\mathcal {F}_k\) arising in the MSEB property. Taking the sum from \(k=0\) to \(k=T-1\),

Inequality \(\textcircled {1}\) uses the fact that \(s_k^2 (1-\rho _F) \le s_{k-1}^2\left( 1-\frac{\rho _F}{2} \right) \), and \(\textcircled {2}\) uses Lemma 7. With this bound on \(\mathcal {F}_k\), we proceed to bound \(\mathcal {M}_k\) in a similar fashion.

Inequality \(\textcircled {1}\) uses \(s_k^2(1-\rho _M) \le s_{k-1}^2\left( 1-\frac{\rho _M}{2} \right) \), \(\textcircled {2}\) uses Lemma 7, \(\textcircled {3}\) uses the inequality \(\Vert a-c\Vert ^2 \le 2 \Vert a - b\Vert ^2 + 2 \Vert b - c\Vert ^2\), and \(\textcircled {4}\) uses Lemma 1 and the Lipschitz continuity of \(\nabla f_i\). \(\square \)

Lemmas 5 and 6 show that it is possible to cancel the bias term and the MSE using the non-negative terms appearing in the inequality of Lemma 4. Without these terms, we can telescope this inequality over several iterations and prove accelerated convergence rates. We are now prepared to prove Theorems 1 and 2.

Proof of Theorem 1

We set \(\mu = 0\) in the inequality of Lemma 4, apply the full expectation operator, and sum the result over the iterations \(k=0\) to \(k=T-1\).

We bound the terms in the final line, beginning with the bias term. Our choice for \(\gamma _k\) satisfies \(\gamma _k^2 \left( 1 - \rho \right) \le \gamma _{k-1}^2 \left( 1 - \frac{\rho }{2} \right) \), so with \(s_k = \gamma _k\) and \(\sigma _k = 1\), we apply Lemma 5. This gives

where we have dropped the term \(-1/2 \mathbb {E} \Vert z_T - x^*\Vert ^2\) because it is non-positive. Applying Lemma 6 to bound the MSE, we have

With the parameters set as in the theorem statement, it is clear that the final two lines of (11) are non-positive (see “Appendix B” for a proof). This allows us to drop these lines from the inequality, leaving

Rewriting \(\tau _k\) in terms of \(\gamma _k\) shows that this is equivalent to

Our choice for \(\gamma _k\) satisfies \(c L \gamma _k^2 - \gamma _k = c L \gamma _{k-1}^2 - \frac{1}{4 c L}\), allowing the \(F(y_k)\) terms to telescope. Hence, our inequality is equivalent to

Using the facts that \(c L \gamma _{T-1}^2 = \frac{(T + \nu + 3)^2}{4 c L}\), \(c L \gamma _0^2 - \gamma _0 = \frac{(\nu +2)(\nu +4)}{4 c L}\), and \(F(y_k) \le F(x^*)\), we have

Dropping the term \(1 / 2 \Vert z_T - x^*\Vert ^2\) on the left side of this inequality and rearranging, we have proved the assertion.

\(\square \)

A similar argument proves an accelerated linear convergence rate when strong convexity is present.

Proof of Theorem 2

We recall the inequality of Lemma 4.

By our choice of \(\gamma \) and \(\tau \), we have

Therefore, we can extract a factor of \((1+\mu \gamma )\) from the left.

Multiplying this inequality by \((1+\mu \gamma )^k\), summing over iterations \(k=0\) to \(k = T-1\), and applying the full expectation operator, we obtain the bound

As in the proof of Theorem 1, we bound the bias term and the MSE using Lemmas 5 and 6, respectively. To apply Lemma 5, we let \(\sigma _k = (1+\mu \gamma )^k\) and \(s_k = \gamma \). These choices are appropriate because \((1 + \mu \gamma )^k (1-\rho ) \le (1 + \mu \gamma )^{k-1} (1 - \frac{\rho }{2})\) due to the fact that \(\mu \gamma \le \rho /2\).

Combining these bounds with (12), we have

The parameter settings in the theorem statement ensure the final two lines are non-positive (see “Appendix B” for details). This gives

which is the desired result. \(\square \)

6 Convergence rates for specific estimators

In light of Theorems 1 and 2, we must only establish suitable bounds on the MSE and bias terms of a gradient estimator to prove accelerated convergence rates for Algorithm 1. We consider four variance-reduced gradient estimators: SAGA, SVRG, SARAH, and SARGE, beginning with the unbiased estimators. We defer proofs to the “Appendix”. To preserve the generality of our framework, we have not optimised the constants appearing in the presented convergence rates.

Theorem 3

(SAGA Convergence Rates) When using the SAGA gradient estimator in Algorithm 1, set \(b \le 4 \sqrt{2} n^{2/3}\), \(\gamma _k = \frac{b^3 (k+\frac{4 n}{b}+4)}{192 n^2 L}\), and \(\tau _k = \frac{b^3}{96 n^2 L \gamma _k}\). After T iterations, the suboptimalty at \(y_T\) satisfies

where

If g is \(\mu \)-strongly convex, set \(\gamma = \min \left\{ \frac{b^{3/2}}{4 n \sqrt{6 \mu L}}, \frac{b}{4 n \mu } \right\} \) and \(\tau = \mu \gamma \). After T iterations, the point \(z_T\) satisfies

where \(K_2\) is defined as in Theorem 2.

It is enlightening to compare these rates to existing convergence rates for full and stochastic gradient methods. In the non-strongly convex setting, our convergence rate is \(\mathcal {O}\left( n^2/T^2\right) \), matching that of Katyusha. As with Katyusha, this rate could be improved for SVRG using the epoch-doubling procedure in SVRG++ (see Allen-Zhu, 2018 [7] for further details). In the strongly convex case, if F is poorly conditioned so that \(L/\mu \ge \mathcal {O}( b )\), we prove linear convergence at the rate \(\mathcal {O} \left( \left( 1 + \frac{b^{3/2} \sqrt{\mu }}{n \sqrt{L}} \right) ^{-T} \right) \). With \(b = n^{2/3}\), this rate matches the convergence rate of inertial forward-backward on the same problem (i.e., the rate is independent of n), but we require only \(n^{2/3}\) stochastic gradient evaluations per iteration compared to the n evaluations that full gradient methods require. This is reminiscent of the results of [5, 31], where the authors show that SAGA and SVRG achieve the same convergence rate as full gradient methods on non-convex problems using only \(n^{2/3}\) stochastic gradient evaluations per iteration. This is slightly worse than the results proven for Katyusha, which requires \(\mathcal {O}(\sqrt{n})\) stochastic gradient evaluation per iteration to match the convergence rate of full-gradient methods.

The analogous convergence guarantees for SVRG are included in Theorem 4.

Theorem 4

(SVRG Convergence Rates) When using the SVRG gradient estimator in Algorithm 1, set \(b \le 32 p^2\), \(\gamma _k = \frac{b (k+4 p+4)}{192 p^2 L}\), and \(\tau _k = \frac{b}{ 96 p^2 L \gamma _k}\). After T iterations, the suboptimalty at \(y_T\) satisfies

where

If g is \(\mu \)-strongly convex, set \(\gamma = \min \left\{ \frac{\sqrt{b}}{4 p \sqrt{6 \mu L}}, \frac{1}{4 p \mu } \right\} \) and \(\tau = \mu \gamma \). After T iterations, the point \(z_T\) satisfies

where \(K_2\) is defined as in Theorem 2.

The convergence rates for SVRG are similar to the rates for SAGA if p and b are chosen appropriately. In the strongly convex case, setting \(b = p^2\) allows SVRG to match the convergence rate of full gradient methods, and the expected number of stochastic gradient evaluations per iteration is \(n/p + b\). To minimise the number of stochastic gradient evaluations while maintaining the convergence rate of full gradient methods, we set \(p = \mathcal {O}(n^{1/3})\), showing that Algorithm 1 using the SVRG gradient estimator achieves the same convergence rate as full gradient methods using only \(\mathcal {O}(n^{2/3})\) stochastic gradient evaluations per iteration.

Remark 4

The above discussion shows that when using the SAGA gradient estimator on a strongly convex objective with \(b = \mathcal {O}(n^{2/3})\) and \(\gamma = \mathcal {O}(1 / \sqrt{\mu L} )\), Algorithm 1 finds a point satisfying \(\mathbb {E} \Vert z_T - x^*\Vert ^2 \le \epsilon \) in \(\mathcal {O}(n^{2/3} \sqrt{\kappa } \log (1 / \epsilon ))\) iterations. This is compared to the complexity lower bound of \(\mathcal {O}(\sqrt{n \kappa } \log (1 / \epsilon ) )\) achieved by Katyusha [37]. SVRG has a similar complexity when \(b = n^{2/3}\) and \(p = n^{1/3}\).

The SARAH gradient estimator is similar to the SVRG estimator, as both estimators require the full gradient to be computed periodically. SARAH differs from SVRG by using previous estimates of the gradient to inform future estimates. The recursive nature of the estimator seems to decrease its MSE, which can be observed in experiments and in theory [16, 27]. However, this comes at the cost of introducing bias into the estimator.

Biased stochastic gradient methods are underdeveloped compared to their unbiased counterparts. The convergence proofs for biased algorithms are traditionally complex and difficult to generalize (see [33], for example), and proximal support has only recently been extended to the biased algorithms SARAH and SARGE, as well as biased versions of SAGA and SVRG in the convex setting [16]. It is difficult to determine conclusively if the negative effect of the bias outweighs the benefits of a lower MSE. We show that Algorithm 1 is able to achieve accelerated rates of convergence using biased estimators as well, beginning with the SARAH estimator.

Theorem 5

(SARAH Convergence Rates) When using the SARAH gradient estimator in Algorithm 1, set \(\gamma _k = \frac{k + 2 p + 4}{288 p^4 L}\) and \(\tau _k = \frac{1}{144 p^4 L \gamma _k}\). After T iterations, the suboptimalty at \(y_T\) satisfies

where

If g is \(\mu \)-strongly convex, set \(\gamma = \min \left\{ \sqrt{ \frac{1}{144 p^4 \mu L}}, \frac{1}{2 p \mu } \right\} \) and \(\tau = \mu \gamma \). After T iterations, the point \(z_T\) satisfies

where \(K_2\) is defined as in Theorem 2.

We provide a proof of this result in “Appendix D”. Theorem 5 shows that using the SARAH gradient estimator in Algorithm 1 achieves an optimal \(\mathcal {O}\left( 1 / T^2 \right) \) convergence rate on convex objectives, but with \(p = \mathcal {O}(n)\), the constant is a factor of \(n^2\) worse than it is for accelerated SAGA, SVRG, and Katyusha. In the strongly convex case, setting \(p = \mathcal {O} (n)\) and \(b = \mathcal {O}(1)\) guarantees a linear convergence rate of \(\mathcal {O} ( (1 + n^{-2} \sqrt{\mu /L} )^{-T} )\), achieving the optimal dependence on the condition number, but with a constant that is a factor of n worse than accelerated SAGA and SVRG, and a factor of \(n^{3/2}\) worse than Katyusha. Despite this dependence on n, experimental results, including those in Sect. 7 and [27], show that the SARAH gradient estimator exhibits competitive performance.

Finally, we provide convergence rates for the SARGE estimator. In [16], the authors introduce the SARGE gradient estimator to mimic the recursive nature of SARAH but trade larger storage costs for a lower average per-iteration complexity, similar to the relationship between SAGA and SVRG. We prove in “Appendix E” that SARGE satisfies the MSEB property with similar constants to SARAH, and achieves similar convergence rates as well.

Theorem 6

(SARGE Convergence Rates) LetFootnote 3\(c = 86016 n^4/b^4\). When using the SARGE gradient estimator in Algorithm 1, set \(\gamma _k = \frac{k+\frac{4 n}{b}+4}{2 c L}\) and \(\tau _k = \frac{1}{c L \gamma _k}\). After T iterations, the suboptimalty at \(y_T\) satisfies

where

If g is \(\mu \)-strongly convex, set \(\gamma = \min \left\{ \frac{1}{\sqrt{c \mu L}}, \frac{b}{4 n \mu } \right\} \) and \(\tau = \mu \gamma \). After T iterations, the point \(z_T\) satisfies

where \(K_2\) is defined as in Theorem 2.

The convergence rates for SARGE are of the same order as the convergence rates for SARAH, even though SARGE requires fewer stochastic gradient evaluations per iteration on average.

Although our bound on the MSE of the SARAH and SARGE estimators is a factor of n smaller than our bound on the MSE of the SAGA and SVRG estimators, the analytical difficulties due to the bias lead to a worse dependence on n. Nevertheless, SARAH and SARGE are competitive in practice, as we demonstrate in the following section.

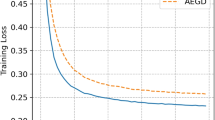

7 Numerical experiments

To test our acceleration framework, we use it to accelerate SAGA, SVRG, SARAH, and SARGE on a series of ridge regression and LASSO tasks using the binary classification data sets australian, mushrooms, phishing, and ijcnn1 from the LIBSVMFootnote 4 database. We include Katyusha and Katyushans for comparison as well. For SVRG and SARAH, we compare our accelerated variants that compute the full gradient probabilistically to the non-accelerated versions that compute the full gradient deterministically at the beginning of each epoch.

With feature vectors \(a_i\) and labels \(y_i\) for \(i \in \{1,2,\ldots ,n\}\), ridge regression and LASSO can be written as

where \(R \equiv \tfrac{1}{2} \Vert \cdot \Vert ^2\) in ridge regression and \(R \equiv \Vert \cdot \Vert _1\) for LASSO. Letting \(g \equiv \lambda R\), it is clear that g is \(\lambda \)-strongly convex in ridge regression and g is not strongly convex for LASSO. In all our experiments, we rescale the value of the data to \([-1, 1]\). For ridge regression, we set \(\lambda = 1 / n\), and for LASSO, we set \(\lambda = 1 / \sqrt{n}\).

For accurate comparisons, we automate all our parameter tuning. For our experiments using ridge regression, we select the step size and momentum parameters from the set \(\{ 1 / t : t \in \mathbb {N} \}\). For LASSO, we use the parameters suggested by Theorem 1, but we scale the step size by a constant \(s \in \mathbb {N}\), and we rescale the momentum parameter so that \(\tau _0 = 1 / 2\). We perform the same parameter-tuning procedure for Katyusha, and set the negative momentum parameter \(\tau _2 = 1 / 2\) as suggested in [2] unless otherwise stated. In our accelerated variants of SVRG and SARAH, we set \(p = \frac{1}{2 n}\), and for the non-accelerated variants and Katyusha, we set the epoch length to 2n. We use a batch size of \(b=1\) for all algorithms.

We measure performance with respect to the suboptimality \(F(x_{k+1}) - F(x^*)\), where \(x^*\) is a low-tolerance solution found using forward-backward. To fairly compare algorithms that require a different number of stochastic gradient evaluations per iteration, we report their performance with respect to the number of effective full gradient computations they perform on average each iteration. By this metric, SAGA performs 1/n full gradient computations each iteration, while SVRG performs an average of \(\frac{2}{n} + \frac{1}{2 n}\), for example.

Figures 1 and 2 display the median of 100 trials of ridge regression and LASSO, respectively. We observe the following trends:

-

Acceleration without negative momentum significantly improves the performance of SAGA, SVRG, SARAH, and SARGE in most cases. The improvement is least dramatic on the smallest data set, australian, and slightly less dramatic for the biased algorithms, SARAH and SARGE.

-

Because they require only one stochastic gradient evaluation per iteration, SAGA and Accelerated SAGA require significantly less computation to achieve the same accuracy as other methods.

-

In the strongly convex setting, Katyusha performs similarly to or better than SVRG with acceleration in most cases.

-

In the non-strongly convex setting, Katyushans performs much worse than other methods when using negative momentum. Without negative momentum, it performs much better than all algorithms except Accelerated SAGA. Because Katyusha without negative momentum is almost exactly the same algorithm as Accelerated SVRG, this improved performance is likely due to the second proximal step and additional step size \(\eta \) in Katyusha. All of the algorithms presented in this work can adopt these features without changing their convergence rates.

8 Conclusion

Although acceleration is a widely used and an extensively researched technique in first-order optimisation, its application to stochastic gradient methods is still poorly understood. The introduction of negative momentum adds another layer of complexity to this line of research. Although algorithms using negative momentum enjoy fast convergence rates and strong performance when the parameters are tuned appropriately, it is unclear if negative momentum is necessary for acceleration. In this work, we propose a universal framework for accelerating stochastic gradient methods that does not rely on negative momentum.

Because our approach does not rely on negative momentum, it applies to a much broader class of stochastic gradient estimators. As long as the estimator admits natural bounds on its bias and MSE, it can be used in our framework to produce an accelerated stochastic gradient method with an optimal \(1/T^2\) dependence on convex problems and an optimal \(\sqrt{\kappa }\) dependence in the strongly convex setting. The bias and MSE of the estimator appear only in the constants of our convergence rates. From this perspective, negative momentum is effectively a variance-reduction technique, reducing the variance in the iterates to improve the dependence on n in the convergence rates. A natural question for future research is whether there exist gradient estimators with smaller bias and MSE than SAGA, SVRG, SARAH, and SARGE that can be accelerated using our framework and admit a better dependence on n.

Notes

The results in [37] are complexity bounds, bounding the number of gradient and prox oracle calls required to achieve a given tolerance. For algorithms performing \(\mathcal {O}(1)\) oracle calls per iteration, these complexity bounds imply the stated bounds on convergence rates.

Because this property asserts bounds on the mean-squared-error and bias of a stochastic gradient estimator, the name MSEB is a natural choice. We suggest the pronunciation “M-SEB”.

Throughout this manuscript, we have sacrificed smaller constants for generality and ease of exposition, so the constant appearing in c is not optimal.

References

Allen-Zhu, Z.: Natasha: Faster non-convex stochastic optimization via strongly non-convex parameter. In: ICML (2017)

Allen-Zhu, Z.: Katyusha: the first direct acceleration of stochastic gradient methods. J. Mach. Learn. Res. 18, 1–51 (2018)

Allen-Zhu, Z.: Katyusha X: practical momentum method for stochastic sum-of-nonconvex optimization. In: ICML (2018)

Allen-Zhu, Z.: Natasha 2: Faster non-convex optimization than SGD. In: Advances in Neural Information Processing Systems (2018)

Allen-Zhu, Z., Hazan, E.: Variance reduction for faster non-convex optimization. In: Proceedings of the 33rd International Conference on Machine Learning, vol. 48 (2016)

Allen-Zhu, Z., Orecchia, L.: Linear coupling: an ultimate unification of gradient and mirror descent. In: Proceedings of the 8th Innovations in Theoretical Computer Science (ITCS) (2017)

Allen-Zhu, Z., Yuan, Y.: Improved SVRG for non-strongly-convex or sum-of-non-convex objectives. In: ICML (2018)

Auslender, A., Teboulle, M.: Interior gradient and proximal methods for convex and conic optimization. SIAM J. Optim. 16(3), 697–725 (2006)

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009)

Bottou, L., Curtis, F.E., Nocedal, J.: Optimization methods for large-scale machine learning. SIAM Rev. 60, 223–311 (2018)

Candès, E.J., Li, X., Ma, Y., Wright, J.: Robust principal component analysis? J. ACM 58(3), 1–37 (2009)

Candés, E.J., Recht, B.: Exact matrix completion via convex optimization. In: Foundations of Computational Mathematics, pp. 717–772 (2009)

Combettes, P.L., Wajs, V.R.: Signal recovery by proximal forward–backward splitting. Multiscale Model. Simul. 4(4), 1168–1200 (2005)

Defazio, A.: A simple practical accelerated method for finite sums. In: Advances In Neural Information Processing Systems, pp. 676–684 (2016)

Defazio, A., Bach, F., Lacoste-Julien, S.: SAGA: a fast incremental gradient method with support for non-strongly convex composite objectives. In: Advances in Neural Information Processing Systems, pp. 1646–1654 (2014)

Driggs, D., Liang, J., Schönlieb, C.B.: A unified analysis of biased stochastic gradient methods and one new one. Technical Report, University of Cambridge (2019)

Fang, C., Li, C.J., Lin, Z., Zhang, T.: Spider: near-optimal non-convex optimization via stochastic path integrated differential estimator. In: 32nd Conference on Neural Information Processing Systems (2018)

Frostig, R., Ge, R., Kakade, S.M., Sidford, A.: Un-regularizing: approximate proximal point and faster stochastic algorithms for empirical risk minimization. ICML 37, 1–28 (2015)

Ghadimi, S., Lan, G.: Accelerated gradient methods for nonconvex nonlinear and stochastic programming. Math. Program. Ser. A 156, 59–99 (2016)

Hofmann, T., Lucchi, A., Lacoste-Julien, S., McWilliams, B.: Variance reduced stochastic gradient descent with neighbors. In: Advances in Neural Information Processing Systems (2015)

Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Advances in Neural Information Processing Systems, pp. 315–323 (2013)

Lan, G., Li, Z., Zhou, Y.: A unified variance-reduced accelerated gradient method for convex optimization. arXiv:1905.12412 (2019)

Lan, G., Zhou, Y.: An optimal randomized incremental gradient method. Math. Program. 171(1–2), 167–215 (2018)

Lin, H., Mairal, J., Harchaoui, Z.: A universal catalyst for first-order optimization. In: Advances in Neural Information Processing Systems (2015)

Lustig, M., Donoho, D., Pauly, J.M.: SparseMRI: the application of compressed sensing for rapid MR imaging. Magn. Reson. Med. 6, 1182–1195 (2007)

Nesterov, Y.: Introductory Lectures on Convex Programming. Springer, Berlin (2004)

Nguyen, L.M., Liu, J., Scheinberg, K., Takáĉ, M.: SARAH: s novel method for machine learning problems using stochastic recursive gradient. In: Proceedings of the 34th International Conference on Machine Learning, vol. 70, pp. 2613–2621 (2017)

Nitanda, A.: Stochastic proximal gradient descent with acceleration techniques. In: Advances in Neural Information Processing Systems, pp. 1574–1582 (2014)

Passty, G.B.: Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 72(2), 383–390 (1979)

Pham, N.H., Nguyen, L.M., Phan, D.T., Tran-Dinh, Q.: ProxSARAH: an efficient algorithmic framework for stochastic composite nonconvex optimization. arXiv:1902.05679 (2019)

Reddi, S.J., Sra, S., Póczós, B., Smola, A.: Fast stochastic methods for nonsmooth nonconvex optimization. In: ICML (2016)

Robbins, H., Monro, S.: A stochastic approximation method. Ann. Math. Stat. 22(3), 400–407 (1951)

Schmidt, M., Roux, N.L., Bach, F.: Minimizing finite sums with the stochastic average gradient. Math. Program. 162, 83–112 (2017)

Shang, F., Liu, Y., Cheng, J., Zhuo, J.: Fast stochastic variance reduced gradient method with momentum acceleration for machine learning. In: Proceedings of the 34th International Conference on Machine Learning (ICML) (2017)

Tibshirani, R.: Regression shrinkage and variable selection via the lasso. J. R. Stat. Soc. Ser. B 58, 267–288 (1996)

Wang, Z., Ji, K., Zhou, Y., Liang, Y., Tarokh, V.: SpiderBoost: a class of faster variance-reduced algorithms for nonconvex optimization. arXiv:1810.10690 (2018)

Woodworth, B., Srebro, N.: Tight complexity bounds for optimizing composite objectives. In: Advances in Neural Information Processing Systems (2016)

Xiao, L., Zhang, T.: A proximal stochastic gradient method with progressive variance reduction. SIAM J. Optim. 24(4), 2057–2075 (2014)

Zhang, Y., Xiao, L.: Stochastic primal-dual coordinate method for regularized empirical risk minimization. In: ICML (2015)

Zhou, K.: Direct acceleration of saga using sampled negative momentum. arXiv:1806.11048 (2018)

Zhou, K., Shang, F., Cheng, J.: A simple stochastic variance reduced algorithm with fast convergence rates. In: ICML (2018)

Zhou, Y., Wang, Z., Ji, K., Liang, Y., Tarokh, V.: Momentum schemes with stochastic variance reduction for nonconvex composite optimization. arXiv:1902.02715 (2019)

Acknowledgements

C.-B.S. and M.J.E. acknowledge support from the EPSRC grant No. EP/S0260 45/1. C.-.B.S. acknowledges support from the Leverhulme Trust project “Breaking the nonconvexity barrier”, the Philip Leverhulme Prize, the EPSRC grant No. EP/M00483X/1, the EPSRC Centre No. EP/N014588/1, the European Union Horizon 2020 research and innovation programmes under the Marie Skodowska-Curie grant agreement No. 777826 NoMADS and No. 691070 CHiPS, the Cantab Capital Institute for the Mathematics of Information and the Alan Turing Institute.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

C.-B.S. and M.J.E. acknowledge support from the EPSRC Grant No. EP/S026045/1. C.-B.S. further acknowledges support from the Leverhulme Trust project on ‘Breaking the non-convexity barrier’, the Philip Leverhulme Prize, the EPSRC grant EP/T003553/1, the EPSRC Centre Nr. EP/N014588/1, the Wellcome Innovator Award RG98755, European Union Horizon 2020 research and innovation programmes under the Marie Skodowska-Curie Grant Agreement No. 777826 NoMADS and No. 691070 CHiPS, the Cantab Capital Institute for the Mathematics of Information and the Alan Turing Institute. D.D. acknowledges support from the Gates Cambridge Trust and the Cantab Capital Institute for the Mathematics of Information.

Appendices

One technical lemma

Lemma 7

Given a non-negative sequence \(\sigma _k\), a constant \(\rho \in [0,1]\), and an index \(T \ge 1\), the following estimate holds:

Proof

This follows from expanding the double-sum and computing a series:

\(\square \)

Proofs of non-positivity

The goal is to show that the two terms

are non-positive with the parameter choices of Theorems 1 and 2. We consider three cases.

Case 1. Let \(\gamma _k\) and \(\tau _k\) be as in the statement of Theorem 1. For the first term in (13),

The constraint

ensures that this quadratic in c is non-positive. For the second term, we require \(\tau _k \le 1/2\) for all k, which holds because \(\tau _k = \frac{2}{k + \nu + 4} \le \frac{1}{2}\). Therefore,

The constraint \(c \ge 16 \varTheta _1 \varTheta _2\) implies that this quantity is non-positive.

Case 2. Let \(\gamma \) and \(\tau \) be as in the statement of Theorem 2, and suppose \(\frac{1}{\sqrt{\mu L c}} \le \frac{\rho }{2 \mu }\). In this case, \(\tau = \sqrt{\frac{\mu }{L c}} = \frac{1}{L c \gamma }\). As in Case 1,

which is non-positive due to the constraints on c. For the second term, all we must show is that \(1 - \tau \ge 1 / 2\). We have \(\tau = \sqrt{\frac{\mu }{L c}} \le \frac{1}{\sqrt{c}}\), and c is larger than 4, so the constraint \(c \ge 16 \varTheta _1 \varTheta _2\) ensures that the second term in (13) is non-positive.

Case 3. In Theorem 2, suppose instead that \(\frac{\rho }{2 \mu } \le \frac{1}{\sqrt{\mu L c}}\), so that \(\gamma = \frac{\rho }{2 \mu }\) and \(\tau = \frac{\rho }{2}\). This assumption implies the inequality \(\frac{L}{\mu } \le \frac{4}{c \rho ^2}\), so

This is a quadratic in c with the root

Because c is larger than this quantity, this term is non-positive. For the second term in (13),

where the last inequality follows from the fact that \(c \ge 16 \varTheta _1 \varTheta _2\).

Proofs for SAGA and SVRG

Our results for SAGA and SVRG require the following lemma, which appears also as [31, Lem. 7].

Lemma 8

Suppose \(X_1, \ldots , X_t\) are independent random variables satisfying \(\mathbb {E}_kX_i = 0\) for all i. Then

Proof

Our hypotheses on these random variables imply \(\mathbb {E}_k[X_i X_j] = 0\) for \(i \not = j\). Therefore,

\(\square \)

We begin with a standard bound on the variance \(\Vert {\widetilde{\nabla }}^{\text {SAGA}}_{k+1} - \nabla f(x_{k+1})\Vert ^2\) that is an easy consequence of the variance bound in [15], but [15] and related works [2, 14, 21, 38] ultimately use a much looser bound in their convergence analysis.

Lemma 9

The variance of the SAGA gradient estimator with minibatches of size b is bounded as follows:

Proof

Let \(X_i = \nabla f_j (x_{k+1}) - \nabla f_j(\varphi _k^j)\) for \(j \in J_k\). We then have

Equality \(\textcircled {1}\) is due to Lemma 8. \(\square \)

Lemma 9 provides a variance bound that is compatible with the MSEB property, as we show in the following lemma.

Lemma 10

The SAGA gradient estimator satisfies the MSEB property with \(M_1 = \frac{3 n}{b^2}\), \(\rho _M = \frac{b}{2 n}\), \(M_2 = 0\), and \(\rho _B = \rho _F = 1\).

Proof

Lemma 9 shows that the MSE of the SAGA gradient estimator is dominated by \(\frac{1}{b n} \sum _{i=1}^n \mathbb {E} \Vert \nabla f_i(x_{k+1})\) \(- \nabla f_i(\varphi _k^i)\Vert ^2\), so we choose this sequence for \(\mathcal {M}_k\). Using the inequality \(\Vert a-c\Vert ^2 \le (1+\frac{2 n}{b}) \Vert a-b\Vert ^2 + (1+\frac{b}{2 n}) \Vert b-c\Vert ^2\),

Equality \(\textcircled {1}\) follows from computing expectations and the update rule for \(\varphi _k^i\):

and \(\textcircled {2}\) follows from the the inequalities \(\left( 1 + \frac{b}{2 n} \right) \left( 1 - \frac{b}{n} \right) \le \left( 1 - \frac{b}{2 n} \right) \) and \(1+\frac{2 n}{b} \le \frac{3 n}{b}\). This shows that we can take \(M_1 = \frac{3 n}{b^2}\), \(M_2 = 0\), and \(\rho _F = 1\). Because the SAGA gradient estimator is unbiased, we can clearly set \(\rho _B = 1\), proving the claim. \(\square \)

A similar result holds for the SVRG gradient estimator.

Corollary 1

The SVRG gradient estimator satisfies the MSEB property with \(M_1 = \frac{3 p}{b}\), \(\rho _M = \frac{1}{2 p}\), \(M_2 = 0\), and \(\rho _B = \rho _F = 1\).

Proof

Following the same argument as in the proof of Lemma 9, we have the bound

The factor \(1 - 1/p\) that appears is due to the fact that \({\widetilde{\nabla }}_{k+1} = \nabla f(x_{k+1})\) with probability 1/p. With \(\mathcal {M}_k = \frac{1 - 1/p}{b n} \sum _{i=1}^n \mathbb {E} \Vert \nabla f_i(x_{k+1}) - \nabla f_i({\widetilde{x}}) \Vert ^2\), we follow the proof of Lemma 10.

Equality \(\textcircled {1}\) follows from the fact that \({\widetilde{x}} = x_k\) with probability 1/p. \(\square \)

With the MSEB property established for the SAGA and SVRG gradient estimators, we can apply Theorems 1 and 2 to get a rate of convergence. For the SAGA estimator, Lemma 10 ensures that the choices \(c = \frac{96 n^2}{b^3}\) and \(\rho = \frac{b}{2 n}\) satisfy the hypotheses of Theorems 1 and 2 as long as \(b \le 4 \sqrt{2} n^{2/3}\). Similarly, for the SVRG estimator, the choices \(c = \frac{b}{96 p^2}\) and \(\rho = \frac{1}{2 p}\) satisfy the conditions of Theorems 1 and 2 as long as \(b \le 32 p^2\).

Proofs for SARAH

To prove the convergence rates of Theorem 5, we first show that the SARAH gradient estimator satisfies the MSEB property.

Lemma 11

The SARAH gradient estimator satisfies the MSEB property with \(M_1 = 1\), \(M_2 = 0\), \(\rho _M = 1 / p\), \(\rho _B = 1 / p\), and \(\rho _F = 1\).

Proof

The SARAH gradient estimator is equal to \(\nabla f(x_{k+1})\) with probability 1/p, so the expectation of the SARAH gradient estimator is

Therefore,

so \(\rho _B = 1 / p\). Next, we prove a bound on the MSE. Let \(\mathbb {E}_{k,p}\) denote the expectation conditioned on the first k iterations and the event that the full gradient is not computed at iteration \(k+1\). Under the condition that the full gradient is not computed, the expectation of the SARAH estimator is

The beginning of our proof is similar to the proof of the MSE bound in [27, Lem. 2].

We consider each inner product separately. The first inner product is equal to

For the next two inner products, we use the fact that

With this equality established, we see that the second inner product is equal to

The third inner product can be bounded using a similar procedure.

Altogether, we have

For the second term,

The inequality is Jensen’s. This results in the recursive inequality

This provides a bound on the MSE under the condition that the full gradient is not computed at iteration k. If the full gradient is computed, the MSE of the estimator is clearly equal to zero, so applying the full expectation operator yields

With \(\mathcal {M}_k = \mathbb {E} \Vert {\widetilde{\nabla }}^{\text {SARAH}}_{k+1} - \nabla f(x_{k+1})\Vert ^2\), it is clear that we can take \(M_1 = 1, \rho _M = 1 / p\), \(M_2 = 0\), and \(\rho _F = 1\). \(\square \)

With these MSEB constants established, convergence rates easily follow from Theorems 1 and 2 with \(c = 144 p^4\) and \(\rho = 1 / p\).

Proofs for SARGE

For the proofs in this section, we rewrite the SARGE gradient estimator in terms of the SAGA estimator to make the analysis easier to follow. Define the operator

where the variables \(\{\xi _k^i\}_{i=1}^n\) follow the update rules \(\xi _{k+1}^j = x_k\) for all \(j \in J_k\) and \(\xi _{k+1}^i = \xi _k^i\) for all \(i \not \in J_k\). The SARGE estimator is equal to

Before we begin, we require a bound on the MSE of the \(\xi \)-SAGA gradient estimator that follows immediately from Lemma 10.

Lemma 12

The MSE of the \(\xi \)-SAGA gradient estimator satisfies the following bound:

Proof

Following the proof of Lemma 9,

Equality \(\textcircled {1}\) is an application of Lemma 8. To continue, we follow the proof of Lemma 10.

Equality \(\textcircled {2}\) follows from computing expectations, and \(\textcircled {3}\) uses the estimate \(\left( 1-\frac{b}{n}\right) \left( 1+\frac{b}{2n}\right) \le \left( 1-\frac{b}{2n}\right) \). \(\square \)

Due to the recursive nature of the SARGE gradient estimator, its MSE depends on the difference between the current estimate and the estimate from the previous iteration. This is true for the recursive SARAH gradient estimate as well, but bounding the quantity \(\Vert {\widetilde{\nabla }}^{\text {SARAH}}_k - {\widetilde{\nabla }}^{\text {SARAH}}_{k-1}\Vert ^2\) is a much more straightforward task than bounding the same quantity for the SARGE estimator. The next lemma provides this bound.

Lemma 13

The SARGE gradient estimator satisfies the following bound:

Proof

To begin, we use the standard inequality \(\Vert a - c\Vert ^2 \le (1+\delta ) \Vert a - b\Vert ^2 + (1+\delta ^{-1}) \Vert b - c\Vert ^2\) for any \(\delta > 0\) twice. For simplicity, we set \(\delta = \sqrt{3/2} - 1\) and use the fact that \(1 + \frac{1}{\sqrt{3/2} - 1} \le 6\) for both applications of this inequality.

We now bound the first two of these three terms separately. Consider the first term.

Equality \(\textcircled {1}\) is the standard variance decomposition, which states that for any random variable X, \(\mathbb {E}_k\Vert X - \mathbb {E}_kX \Vert ^2 = \mathbb {E}_k\Vert X\Vert ^2 - \Vert \mathbb {E}_kX\Vert ^2\). The second term can be reduced further by computing the expectation. Let \(j_k\) be any element of \(J_k\). The probability that \(\nabla f_{j_k} (\varphi _k^{j_k}) = \nabla f_{j_{k-1}}(x_k)\) is equal to the probability that \(j_k \in J_{k-1}\), which is b/n. The probability that \(\nabla f_{j_k}(\varphi _k^{j_k}) = \nabla f_{j_{k-2}} (x_{k-1})\) is equal to the probability that \(j_k \not \in J_{k-1}\) and \(j_k \in J_{k-2}\), which is \(b / n \left( 1 - b / n \right) \). Continuing in this way,

This implies that

We include the inequality of the second line to simplify later arguments. This completes our bound for the first term of (14). For the second term, we recall Lemma 12.

Combining all of these bounds, we have shown

\(\square \)

Lemma 13 allows us to take advantage of the recursive structure of our gradient estimate. With this lemma established, we can prove a bound on the MSE.

Lemma 14

The SARGE gradient estimator satisfies the following recursive bound:

Proof

The beginning of our proof is similar to the proof of the variance bound for the SARAH gradient estimator in [27, Lem. 2].

We consider each inner product separately. The first inner product is equal to

For the next two inner products, we use the fact that

With this equality established, we see that the second inner product is equal to

The third inner product can be bounded using a similar procedure.

where the inequality is Young’s. Altogether and after applying the full expectation operator, we have

Finally, we bound the last term on the right using Lemma 13.

\(\square \)

Lemma 14 shows that the SARGE gradient estimator satisfies the MSEB property with suitably chosen parameters.

Corollary 2

The SARGE gradient estimator with \(b \le n / 3\) satisfies the MSEB property with \(M_1 = 12\), \(M_2 = (27 + 12 b) / n^2\), \(\rho _M = \frac{b}{2 n}\), \(\rho _B = b / n\), and \(\rho _F = \frac{b}{2 n}\).

Proof

It is easy to see that \(\rho _B = b / n\) by computing the expectation of the SARGE gradient estimator.

The result of Lemma 14 makes it clear that \(M_1 = 12\). To determine \(\rho _M\), we must first choose a suitable sequence \(\mathcal {M}_k\). Let \(\mathcal {M}_k = \mathbb {E} \Vert {\widetilde{\nabla }}^{\text {SARGE}}_{k+1} - \nabla f(x_{k+1})\Vert ^2\). The requirement that \(b \le n / 3\) implies \(1 - \frac{b}{n} + \frac{3 b^2}{2 n^2} \le 1 - \frac{b}{2 n}\), so Lemma 14 ensures that with \(\rho _M = \frac{b}{2 n}\), \(\mathcal {M}_k \le (1-\rho _M) \mathcal {M}_{k-1}\).

Finally, we must compute \(M_2\) and \(\rho _F\) with respect to some sequence \(\mathcal {F}_k\). Lemma 14 motivates the choice

and the choices \(M_2 = \frac{27 + 12 b}{n^2}\) and \(\rho _F = \frac{b}{2 n}\) are clear. \(\square \)

To prove the convergence rates of Theorem 6, we simply combine the MSEB constants of Corollary 2 with Theorems 1 and 2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Driggs, D., Ehrhardt, M.J. & Schönlieb, CB. Accelerating variance-reduced stochastic gradient methods. Math. Program. 191, 671–715 (2022). https://doi.org/10.1007/s10107-020-01566-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10107-020-01566-2