How to build an embodiment lab: achieving body representation illusions in virtual reality

- 1 Experimental Virtual Environments for Neuroscience and Technology Laboratory, Facultat de Psicologia, Universitat de Barcelona, Barcelona, Spain

- 2 CERMA UMR CNRS 1563, École Centrale de Nantes, Nantes, France

- 3 Renaissance Computing Institute, University of North Carolina at Chapel Hill, Chapel Hill, NC, USA

- 4 Institut d’Investigacions Biomèdiques August Pi i Sunyer (IDIBAPS), Barcelona, Spain

- 5 Institució Catalana de Recerca i Estudis Avançats (ICREA), Barcelona, Spain

Advances in computer graphics algorithms and virtual reality (VR) systems, together with the reduction in cost of associated equipment, have led scientists to consider VR as a useful tool for conducting experimental studies in fields such as neuroscience and experimental psychology. In particular, virtual body ownership, in which the participant is led to feel that a virtual body is their own, has become a useful tool in cognitive neuroscience and psychology for studying body representation, that is, how the brain represents the body. Although VR has been shown to be a useful tool for exploring body ownership illusions, integrating the various technologies necessary for such a system can be daunting. In this paper, we discuss the technical infrastructure necessary to achieve virtual embodiment. We describe a basic VR system and how it may be used for this purpose, and then extend this system with the introduction of real-time motion capture, a simple haptics system, and the integration of physiological and brain electrical activity recordings.

Introduction

Advances in computer graphics algorithms and virtual reality (VR) systems, together with the decreasing cost of associated equipment, have led scientists to consider VR as a useful tool for conducting experimental studies with high ecological validity in fields such as neuroscience and experimental psychology (Bohil et al., 2011). VR places participants in a 3D computer-generated virtual environment (VE), giving them the illusion of being inside the computer simulation and of being able to act there – a sensation typically referred to as presence (Sanchez-Vives and Slater, 2005). In VR, it is also possible to replace the participant’s body with a virtual body seen from a first-person perspective (1PP), enabling a wide variety of experiments on how the brain represents the body (Blanke, 2012) that could not be carried out without VR. In recent years, VR has been employed to systematically alter the participant’s sense of body ownership and agency (Kilteni et al., 2012a). By “body ownership” we mean the illusory perception a person might have that an artificial body or body part is their own and is the source of their sensations (Tsakiris, 2010). By “agency” we mean that the person recognizes themselves as the cause of the actions and movements of that body. We use the term “virtual embodiment” (or just “embodiment”) to describe the physical process that employs VR hardware and software to substitute a person’s body with a virtual one. Under a variety of conditions, embodiment may give rise to the subjective illusions of body ownership and agency.

A fundamental aspect of VR is to enable participants to be immersed in, explore, and interact within a computer-generated VE. Full immersion requires visual, auditory, and haptic displays together with a tracking system, so that the system can deliver to the participant both the illusion of being in a place and the sense that what is happening in this place is plausible (Slater, 2009). The participant’s movements must be tracked so that the display of the VE can be adapted to them. We distinguish between head-tracking, which follows the movements of the participant’s head in real time so that the system can update the virtual viewpoint accordingly, and body tracking, which follows the movements of the participant’s body parts (e.g., hands, arms) or, ideally, of the whole body including facial expressions.

Although there are many different types of display systems for VR, including powerwalls, CAVE systems (Cruz-Neira et al., 1992), and head-mounted displays (HMDs), here we concentrate almost exclusively on HMD-based systems, since these are the most appropriate for body ownership illusions (BOIs). HMDs display a separate image to each of the participant’s eyes, and the human visual system fuses the two into a stereoscopic image. This is achieved by placing either two screens, or a single screen with a set of lenses, directly in front of the participant’s eyes. Combined with head-tracking, this means that wherever the participant looks, he/she sees only the VE and nothing of physical reality, including his/her own body.

BOIs are used in body representation research; the best known is the rubber hand illusion (RHI) (Botvinick and Cohen, 1998). The RHI involves, for example, a left rubber hand placed in front of the participant in an anatomically plausible position, close to the participant’s hidden left hand. Using two paintbrushes, the experimenter strokes both the real and rubber hands synchronously at approximately the same anatomical location. The resulting visuo-tactile contingency between the seen and the felt strokes elicits, in the majority of participants, the sensation that the rubber hand is part of their body, as reflected in a questionnaire, proprioceptive drift toward the rubber hand, and physiological stress responses when the rubber hand is attacked (Armel and Ramachandran, 2003). The RHI is also elicited when visuo-tactile stimuli are replaced with synchronous visuo-motor correlations, i.e., when the artificial body part is seen to move synchronously with its real hidden counterpart (Tsakiris et al., 2006; Dummer et al., 2009; Kalckert and Ehrsson, 2012).

Analogously, the same principles of multimodal stimulation have been demonstrated for full body ownership illusions (FBOIs): BOIs with respect to entire artificial bodies. For example, it was shown that people could experience a manikin as their own body when seeing a 1PP live video feed from the artificial body (congruent visuo-proprioceptive information), and when synchronous visuo-tactile stimulation was applied to the artificial and real bodies (Petkova and Ehrsson, 2008; Petkova et al., 2011). However, when the fake body was placed far away from the participant’s viewpoint, thereby violating the 1PP, the FBOI was not induced (Petkova and Ehrsson, 2008; Petkova et al., 2011). In contrast, other researchers have argued for a FBOI even when the other body is in extra-personal space facing away from the participant, as long as there is synchronous visuo-tactile stimulation on the backs of the real and fake bodies (Lenggenhager et al., 2007, 2009; Aspell et al., 2009). For a review see Blanke (2012).

Body Ownership Illusions Can also be Created in Virtual Reality

One of the advantages of VR techniques is that they enable the experimenter to tune precisely the spatial and temporal properties of the multimodal stimuli provided. Therefore, it is not surprising that most of the above results have been successfully replicated within VR. For example, the virtual arm illusion (Slater et al., 2008) was shown to result in the illusion of ownership over a virtual arm and hand comparable with the results of the original RHI study. In this case, instead of a rubber hand, a virtual arm displayed in stereo on a powerwall was seen to substitute the participant’s hidden real one, with synchronous visuo-tactile stimulation on the real and virtual hand. Analogously, the virtual arm illusion was also demonstrated using synchronous visuo-motor feedback (Sanchez-Vives et al., 2010) where the participant’s fingers and the virtual counterpart moved in synchrony. When they did not move in synchrony the illusion occurred to a significantly lesser extent. Finally, VR has enabled the induction of a FBOI through the substitution in immersive VR of the participant’s body with a full virtual body seen from its own 1PP (Slater et al., 2010a).
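The temporal control mentioned above is what makes synchronous and asynchronous conditions straightforward to implement in VR. As a minimal sketch, assuming the VR module receives one tracking sample per rendered frame, an asynchronous visuo-motor condition can be produced by displaying each sample a fixed number of frames late. The class name `DelayLine` is hypothetical and not part of any toolkit discussed here.

```python
from collections import deque
from typing import Deque, Generic, TypeVar

T = TypeVar("T")


class DelayLine(Generic[T]):
    """Delay a stream of tracking samples by a fixed number of frames.

    delay_frames == 0 reproduces the synchronous condition; a positive
    delay yields an asynchronous (control) condition.
    """

    def __init__(self, delay_frames: int, initial: T) -> None:
        if delay_frames < 0:
            raise ValueError("delay_frames must be non-negative")
        # Pre-fill with the initial pose so the first frames have something to show
        self._buffer: Deque[T] = deque([initial] * delay_frames)

    def push(self, sample: T) -> T:
        """Store the newest sample and return the one to display this frame."""
        self._buffer.append(sample)
        return self._buffer.popleft()


line = DelayLine(3, 0)
print([line.push(frame) for frame in range(1, 8)])
```

At a hypothetical frame rate of 60 Hz, `DelayLine(30, initial_pose)` would delay the virtual limb by about 500 ms, whereas a delay of 0 returns every sample unchanged.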

Virtual Embodiment Systems are Becoming Very Important Tools for Psychologists and Neuroscientists

Although VR is a promising and useful tool for studying BOIs, integrating the various technologies necessary for such a system can be daunting. In this paper, we discuss the technical infrastructure necessary for virtual embodiment experiments and provide basic guidelines for scientists who wish to use VR in their studies. We intend this paper to help experimenters and application builders to set up a VR system carefully by highlighting the benefits and drawbacks of the different components that have to be integrated. Moreover, by presenting a complete multimodal VR system, we hope to suggest new ways of using VR to induce and evaluate virtual embodiment.

The remainder of this document is organized as follows: Section “Core Virtual Embodiment System” describes the core VR system responsible for generating 1PP views of a virtual body. The core VR system consists of the VR application, head-tracking and display hardware, and serves as the integration point for the other modules. Section “Multimodal Stimulation Modules” describes multimodal stimulation modules – full body tracking and haptics – used to induce the BOIs. Section “Quantitative Measurement Modules” describes quantitative measurement modules – electroencephalography (EEG) and other physiological signals – used primarily to measure the degree of embodiment of the participant. Section “Results” provides examples of experiments incorporating the various modules. The paper concludes with a discussion in Section “Discussion”.

Materials and Methods

In the following sections, we describe a modular VR system for BOI experiments. For each module, we first give a general introduction before detailing its role within the VR system and why it is important for BOIs. We then list existing technical implementations and present their characteristics. Finally, we suggest a set of important criteria that need to be considered when building one’s own VR system for virtual embodiment, before describing the solution we chose. It is outside the scope of this paper to go into full detail for all technologies that could be used to enable a virtual embodiment system. We therefore point to reviews of subsystems where possible and put an emphasis on the technology that is used in our own lab.

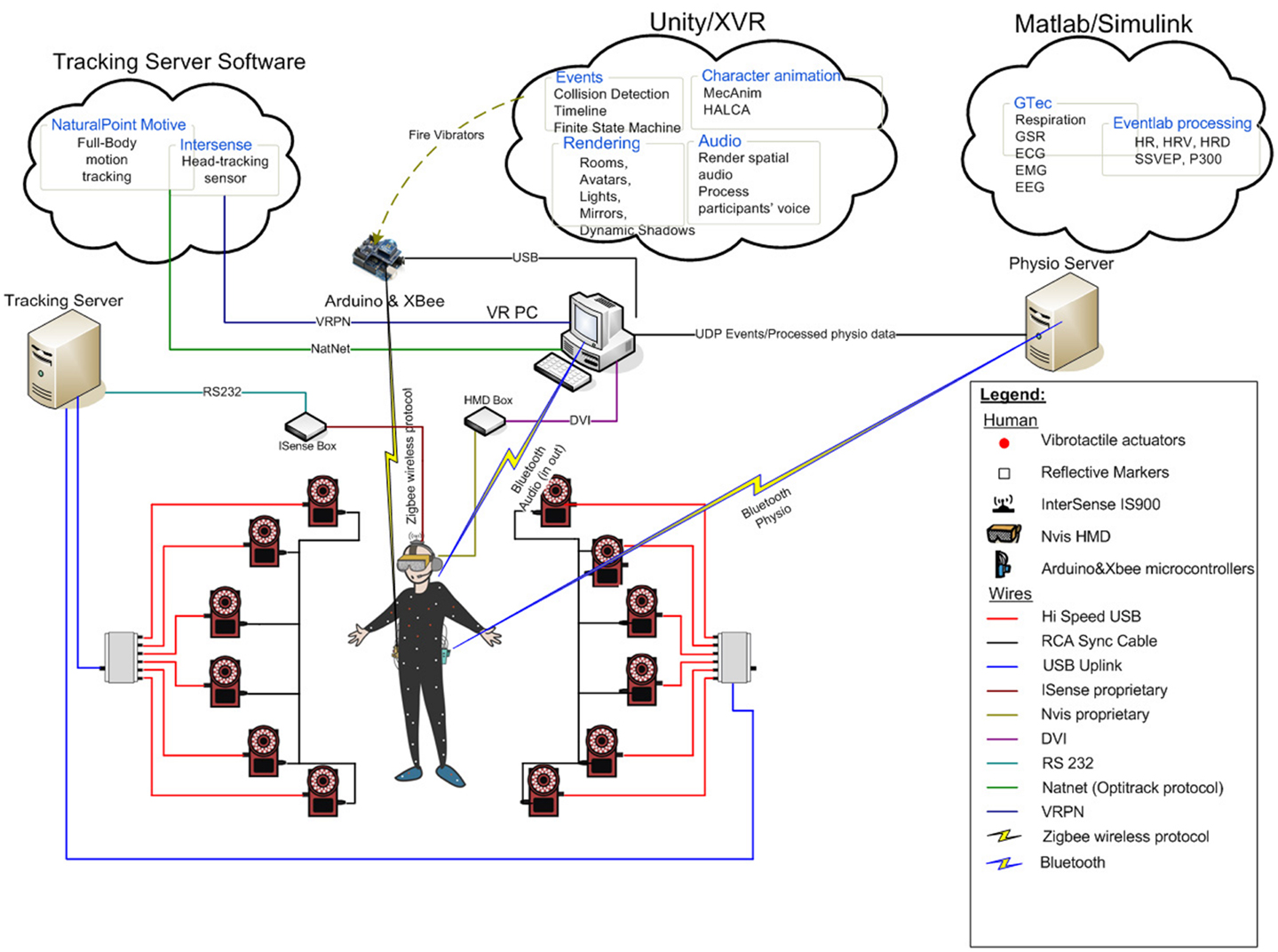

It is important to note that the technology and solutions reviewed in this paper are aimed almost exclusively at immersive VR systems that can be used to support BOIs in the sense discussed above. Hence the systems we consider (see Figure 1) require the use of: (i) HMDs (so that the real body cannot be seen), (ii) head-tracking (so that the participant can look in any direction and still see within the VE – and in particular look down toward his or her body but see a virtual body substituting the real one), (iii) real-time motion capture, so that as the participant moves his or her real body the virtual body moves accordingly (how it moves will depend on experimental conditions), (iv) tactile feedback, so that when something is seen to touch the virtual body there is a corresponding sensation on the real body, and (v) audio, so that events in the VE can be heard. Critically, we consider software solutions that bind all of these together (as illustrated in Figure 2). Additionally, we consider other necessary features of such a setup, including how to record physiological responses of participants to events within the VE, and how such recording devices and their associated software must be integrated into the overall system. We do not consider the general issue of how to build a VR system – for example, we do not concentrate on projection-based systems such as powerwalls and CAVEs, nor on surround displays such as domes, nor on IMAX-type systems. For a more comprehensive description of VR systems and associated issues such as interactive computer graphics, the reader can consult reference textbooks such as Slater et al. (2001), Sherman and Craig (2002), Burdea and Coiffet (2003), and Kalawsky (2004).

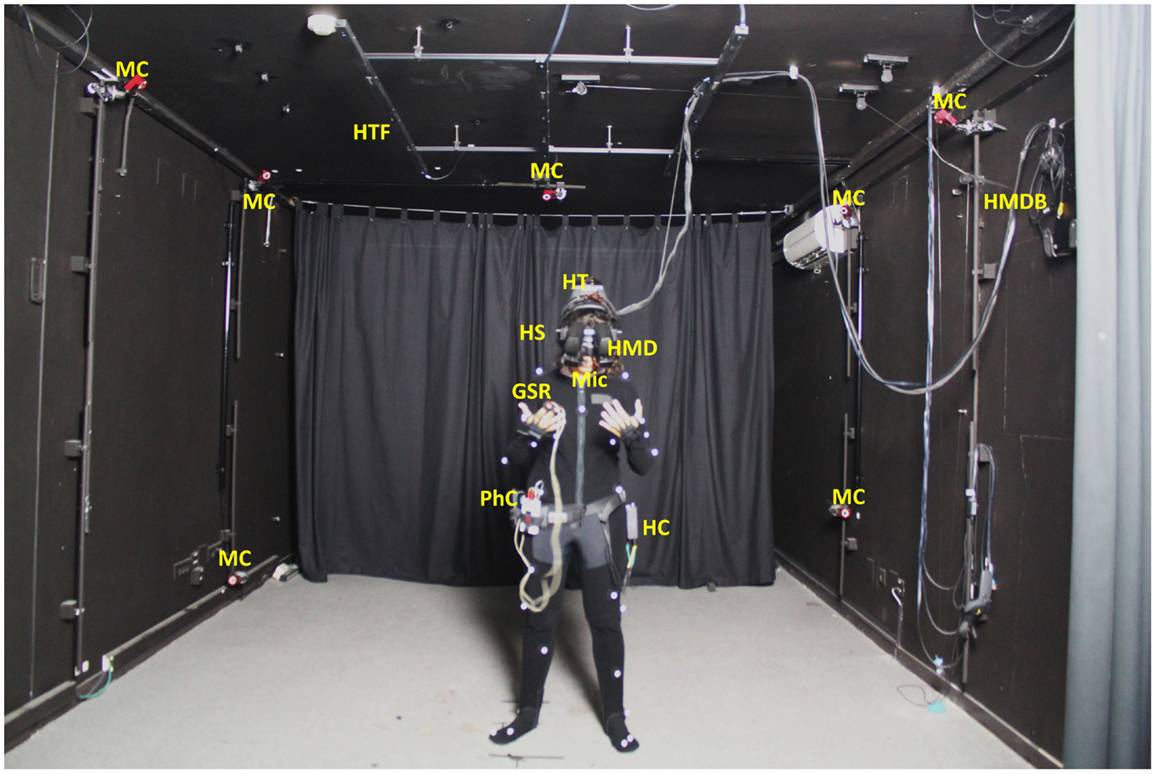

Figure 1. Hardware elements of the embodiment system. The participant wears a suit with retro-reflective markers for full body motion capture, an NVIS SX111 Head-Mounted Display (HMD), which is fed with the real-time stereo video signal from the NVIS HMD box (HMDB), an Intersense IS-900 Head-Tracker (HT), an audio headset (HS), a microphone for voice capture (Mic), a g.tec Mobilab wireless physiology measurement device (PhC) capable of measuring ECG, respiration, EMG and GSR, and an Arduino haptics controller (HC) that has vibrotactile actuators attached inside the motion capture jacket to deliver some sense of touch. ECG and EMG sensors are not visible in the image. Respiration is typically measured with an elastic belt around the chest. In the room, which is painted black to overcome possible lighting distractions, there are 12 Optitrack motion capture cameras (MC), 7 of which are visible in the image. An Intersense IS-900 head-tracking frame (HTF) installed on the ceiling sends out ultrasound signals in order for the system to capture very accurate head position and orientation.

Figure 2. An overview of the embodiment system. In the center the participant is shown with head-tracking and an optical full body tracking suit, wearing devices for physiological measurements, vibrotactile haptic actuators for haptic response, a HMD for showing the stereo imagery and a headset with microphone for delivering and recording audio.

Core Virtual Embodiment System

We distinguish between a set of core modules required for the production of 1PP views of a virtual body, and additional modules that can be added to provide multimodal stimulation and physiological measurement. The core modules necessary for creating 1PP views of a virtual body are:

• A VR module that handles the creation, management, and rendering of all virtual entities. This module also serves as the integration point for all other modules.

• A head-tracking module, which maps the head movements of the participant to the virtual camera, updating the viewpoint of the virtual world as in the real world.

• A display module, which consists of the hardware devices used to display the VE.

VR module

A VR scene consists of a set of 3D objects. Each object is typically represented by a set of 3D polygons or triangles. For example, a table, a particularly simple object, can be modeled as a set of five cuboids (table top and four legs) in a precise and fixed geometrical relationship to one another. Additionally, each object has critical associated information, such as its material properties (in particular its color and how it reflects light) and, if the application requires it, other physical properties such as mass, viscosity, auditory properties, and so on. A scene is composed of a set of such objects, typically arranged in a hierarchy. The hierarchy describes “parent”–“child” relationships, determining what happens to the child node when a parent node is transformed in some way. For example, imagine a book resting on a table. When the table is moved, the book will move with it. However, if the book is moved, the table will not move. Hence the table is the “parent” of the book. Imagine a room – the root of the whole scene might be a node representing the room itself. This will have a set of associated polygons describing the ceiling, floor, and walls. Direct children of this root might be the lights and furniture within the room.

Our example of a table and book fits here because the table would be a child of the room, and the book a child of the table. This hierarchical composition describing the scene is very useful for modeling – since only the parent–child geometric relationships need to be specified, and therefore starting at the root the entire scene can be geometrically described. In other words we do not have to think about the geometrical relationship between the room and the book, but only about the room and the table, and the table and the book.
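The parent–child composition can be illustrated with a minimal scene graph. This Python sketch stores only a translation per node (the rotations and scaling that real scene graphs also keep in each node’s transform are omitted for brevity), and the `Node` class is illustrative rather than the API of any particular toolkit.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(eq=False)
class Node:
    """A scene-graph node whose position is stored relative to its parent."""
    name: str
    local: Vec3 = (0.0, 0.0, 0.0)
    parent: Optional["Node"] = field(default=None, repr=False)
    children: List["Node"] = field(default_factory=list)

    def add(self, child: "Node") -> "Node":
        """Attach a child node and return it for chaining."""
        child.parent = self
        self.children.append(child)
        return child

    def translate(self, dx: float, dy: float, dz: float) -> None:
        """Move this node (and therefore all its descendants) relative to its parent."""
        x, y, z = self.local
        self.local = (x + dx, y + dy, z + dz)

    def world(self) -> Vec3:
        """Accumulate the offsets from the root down to this node."""
        if self.parent is None:
            return self.local
        px, py, pz = self.parent.world()
        x, y, z = self.local
        return (px + x, py + y, pz + z)


# Room -> table -> book, as in the example above
room = Node("room")
table = room.add(Node("table", (2.0, 0.0, 3.0)))
book = table.add(Node("book", (0.1, 0.75, 0.0)))

table.translate(1.0, 0.0, 0.0)   # the book is carried along with the table
book.translate(0.0, 0.0, 0.2)    # the table stays where it is
print(table.world(), book.world())
```

Only the room–table and table–book offsets are ever specified; the book’s position in the room is derived by walking up the hierarchy.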

Each object might itself be arranged as a hierarchy, and the representation of a virtual human follows these principles. A human body can be considered as a hierarchy with its root at the pelvis, the upper legs as children of the pelvis, the lower legs as children of the upper legs, the feet as children of the lower legs, and so on down to the toes. The whole body can be described hierarchically in this way. If the hand moves, the whole body does not move; but if the pelvis moves, the whole body is carried with it. Unlike the usual description of geometry as individual rigid polyhedral objects with their materials applied, virtual humans are usually described as a single vertex-weighted polyhedral mesh that deforms with the underlying bone hierarchy (represented as a hierarchy of transformations), in which the vertex weights represent the influence of each skeletal transformation on the vertex. In this way, animation makes direct use of the hierarchical description: to animate the forearm, for example, appropriate transformations have to be applied at the elbow joint only. Animating the whole body therefore amounts to applying geometrical transformations at the joints (i.e., the nodes of the hierarchy).
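The vertex-weighting scheme described above is commonly known as linear blend skinning. The following 2D sketch is a simplification (real characters use 3D transforms and many joints) of a hypothetical two-joint arm, with a shoulder at the origin and an elbow one unit along +x. Each vertex is moved by every influencing joint’s current transform relative to its bind pose, and the results are blended by the vertex weights, which are assumed to sum to 1.

```python
import math
from typing import Dict, Tuple

Vec2 = Tuple[float, float]
Xform = Tuple[float, float, float]  # (angle in radians, tx, ty): rotate, then translate


def apply(t: Xform, p: Vec2) -> Vec2:
    """Apply a rigid 2D transform to a point."""
    ang, tx, ty = t
    c, s = math.cos(ang), math.sin(ang)
    return (c * p[0] - s * p[1] + tx, s * p[0] + c * p[1] + ty)


def compose(a: Xform, b: Xform) -> Xform:
    """Return the transform that applies b first and then a."""
    tx, ty = apply(a, (b[1], b[2]))
    return (a[0] + b[0], tx, ty)


def inverse(t: Xform) -> Xform:
    """Invert a rigid 2D transform."""
    ang, tx, ty = t
    c, s = math.cos(-ang), math.sin(-ang)
    return (-ang, -(c * tx - s * ty), -(s * tx + c * ty))


# Hypothetical two-joint arm: shoulder at the origin, elbow 1 unit along +x.
PARENT = {"shoulder": None, "elbow": "shoulder"}
OFFSET = {"shoulder": (0.0, 0.0), "elbow": (1.0, 0.0)}


def global_xforms(angles: Dict[str, float]) -> Dict[str, Xform]:
    """Forward kinematics: chain each joint's local transform onto its parent's."""
    out: Dict[str, Xform] = {}
    for joint in ("shoulder", "elbow"):  # parents are listed before children
        local = (angles.get(joint, 0.0), *OFFSET[joint])
        parent = PARENT[joint]
        out[joint] = local if parent is None else compose(out[parent], local)
    return out


def skin(vertex: Vec2, weights: Dict[str, float], angles: Dict[str, float]) -> Vec2:
    """Linear blend skinning of one bind-pose vertex for the given joint angles."""
    bind = global_xforms({})      # bind pose: all joint angles zero
    pose = global_xforms(angles)
    x = y = 0.0
    for joint, w in weights.items():
        # Undo the joint's bind transform, then apply its current one
        px, py = apply(compose(pose[joint], inverse(bind[joint])), vertex)
        x += w * px
        y += w * py
    return (x, y)


# Bend the elbow by 90 degrees: a forearm vertex follows it fully,
# while a vertex shared equally by both bones ends up in between.
print(skin((1.5, 0.0), {"elbow": 1.0}, {"elbow": math.pi / 2}))
print(skin((1.2, 0.0), {"shoulder": 0.5, "elbow": 0.5}, {"elbow": math.pi / 2}))
```

Rotating only the shoulder moves every vertex of the arm, because the elbow’s global transform is built on top of the shoulder’s.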

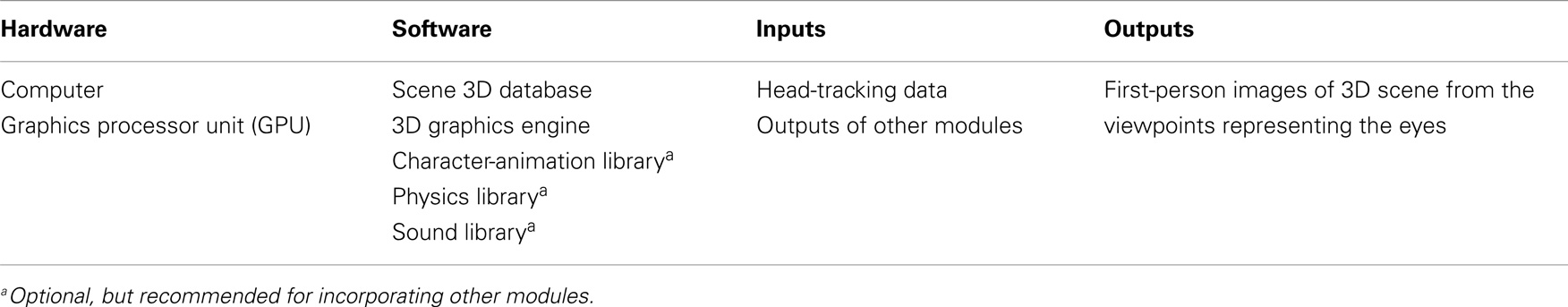

The entire scene is thus represented by a database describing the hierarchical arrangement of all the objects in the scene. The VR module amongst other things includes the management of this scene hierarchy. This includes the animation of virtual objects and other dynamic changes to the environment. Because this module is responsible for updating the internal state of the scene and rendering the result, it serves as the integration point for the other modules. Table 1 presents the main characteristics of the VR module in terms of hardware and software dependencies as well as in terms of required inputs and produced outputs.

The scene is rendered onto the displays from a pair of viewpoints (for stereo) representing the positions of the two eyes of an observer. Rendering requires resolving visibility (i.e., what can and cannot be seen from the viewpoint), and the process of traversing the scene database and producing output onto the displays should typically be repeated at least 30 times per second. The viewpoint location and gaze direction are typically determined by head-tracking, i.e., by a device that sends information about the participant’s head position and orientation at a high data rate to the computer responsible for rendering. Thus the scene is displayed from the participant’s current head position and orientation.
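As a minimal sketch of how a tracked head pose yields the pair of viewpoints, the function below offsets the tracked head position by half the interpupillary distance (IPD) to each side. It assumes a y-up coordinate system in which the head looks along -z at zero yaw, ignores pitch and roll, and uses 0.064 m only as an illustrative IPD; none of these choices are taken from our system.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def eye_positions(head: Vec3, yaw: float, ipd: float = 0.064) -> Tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) for a head at `head` turned by `yaw` radians.

    Assumed convention: y is up, the head looks along -z at yaw 0, and positive
    yaw turns the head to the left (counterclockwise seen from above).
    """
    # The head's "right" axis: +x rotated by yaw about the vertical (+y) axis
    right = (math.cos(yaw), 0.0, -math.sin(yaw))
    half = ipd / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye


# Participant standing at the origin with eyes 1.7 m above the floor
print(eye_positions((0.0, 1.7, 0.0), 0.0))
print(eye_positions((0.0, 1.7, 0.0), math.pi / 2))
```

In each frame, the VR module would render the scene once from each of these positions, using the head’s orientation for both views. HMD setups usually allow the IPD to be adjusted per participant rather than fixed as here.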

What does (visual) embodiment require? A virtual human body can form part of the scene database; it is an object just like any other. If this body is placed in the appropriate position in relation to the head of the participant, then when the participant wearing a HMD looks down toward his or her real body, they see the virtual body instead. Thus the virtual and real bodies are perceptually spatially coincident. If the VR system is such that the only visual information seen comes from the virtual world, as is the case with a HMD, then it will appear to the participant as if the virtual body has substituted their real one. Similarly, since objects have material properties that specify how they reflect light, a participant looking into a virtual mirror in the scene would see a reflection of their virtual body.
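A minimal sketch of the placement step (a hypothetical helper, not code from our system) puts the root of the virtual body on the floor beneath the tracked head, shifted backward by an assumed eye-to-neck offset so that the avatar’s eyes roughly coincide with the participant’s. The 0.1 m offset and the coordinate convention are illustrative assumptions; in practice the offset depends on the avatar’s proportions, and full body tracking would replace this heuristic.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def place_avatar_root(head: Vec3, yaw: float, eye_to_neck: float = 0.1,
                      floor_y: float = 0.0) -> Tuple[Vec3, float]:
    """Return (root_position, root_yaw) for a virtual body under a tracked head.

    Assumes y is up and the head faces -z at yaw 0. The tracked point is taken
    to lie between the eyes, which sit roughly `eye_to_neck` metres in front of
    the neck, so the body root is shifted backward along the facing direction.
    """
    forward = (-math.sin(yaw), 0.0, -math.cos(yaw))
    x = head[0] - eye_to_neck * forward[0]
    z = head[2] - eye_to_neck * forward[2]
    # Simplification: the body is turned to face the same way as the head
    return (x, floor_y, z), yaw


print(place_avatar_root((1.0, 1.7, 2.0), 0.0))
```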

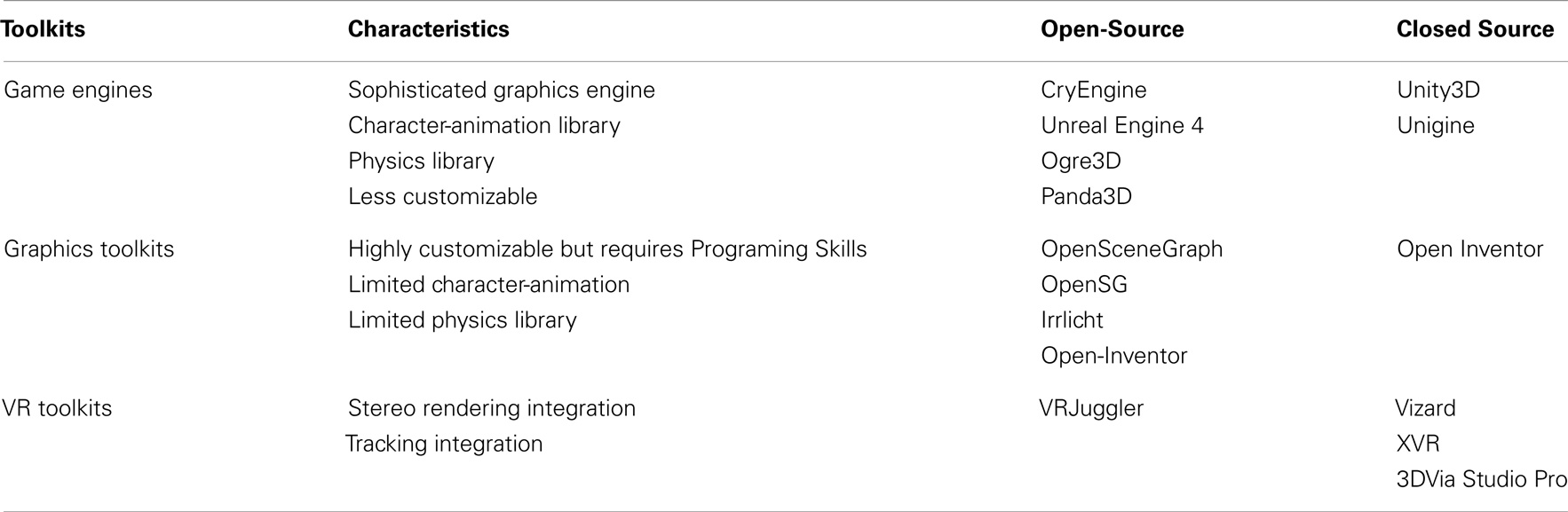

A wide range of off-the-shelf software suites are appropriate for the VR module. These toolkits can generally be grouped into three families: game engines, VR toolkits, and general graphics toolkits. A framework for selecting the right game engine is given by Petridis et al. (2012), although it does not focus on immersive VR. For VR and graphics toolkits, an overview of experiences with several systems, some of which are also discussed here, is given by Taylor et al. (2010).

Game engines. Game engines specialized for game development, such as Unity3D1, Unigine2, Unreal3, Ogre3D4, Panda3D5, etc., offer the advantage of state-of-the-art graphics, as well as many of the features listed in Table 1 (required or optional). In addition to a 3D graphics engine, game engines typically provide character-animation, network communication, sound, and physics libraries. Many game engines also offer extensibility via a plug-in architecture. Moreover, generating appropriate stereo views for the HMD systems typically used for virtual embodiment scenarios is usually possible by manipulating one or two virtual cameras. Most game engines do not offer native support for integrating tracking information, but this can often be performed via an available networking interface or plug-in system. The cost of game engines can vary widely. Companies typically charge a percentage of the profit if an application is sold successfully, which is usually not a consideration in a research laboratory setting. Unreal is available in source code at low cost. It is cheaper than Unity3D but has a smaller user community. Unigine has a small community in large part due to its high cost.

Graphics toolkits. Graphics toolkits such as OpenSceneGraph6, OpenSG7, Open Inventor8, and Irrlicht9 are used for multiple purposes (e.g., scientific visualization, games, interaction, modeling, visual simulation, etc.). Unlike game engines, these toolkits are not specialized for a single use, and thus may not offer all of the modules offered by a game engine, such as a character-animation engine. Most of these graphics toolkits are open source, so it is typically possible to extend them in order to add specific features. As in the case of game engines, graphics toolkits usually do not provide VR-specific features such as direct HMD or tracking support.

Since graphics toolkits are often open source, this enables a high degree of customizability. OpenSceneGraph, OpenSG, and Irrlicht are freely available open source while there are both open and closed source versions of Open-Inventor. The commercial version of Open-Inventor is more expensive but has better user support.

Virtual reality toolkits. Virtual reality toolkits, such as XVR10 (Tecchia et al., 2010), VR Juggler11 (Bierbaum et al., 2001), 3DVia Studio Pro12, and Vizard13 are designed specifically for the development of VR applications, and include features such as stereo rendering, and the integration of tracking data. Their other capabilities can vary widely, e.g., XVR is closer to a graphics toolkit, with the ability to program using a low level scripting language, and Vizard has many features of a game engine, such as character-animation and a graphical level editor. Support is generally obtained via community sites. Extensibility can be an issue for non-open source platforms, although plug-in architectures are often available. XVR and Vizard are closed source systems while VR Juggler is available for free in source code. XVR is available for free with a rotating logo that is deactivated after purchase. Vizard probably contains the most features but also costs considerably more than other solutions. Table 2 contains a summary of various key characteristics for the VR module across a variety of systems.

When choosing an engine for a VR module of a virtual embodiment system, one should take the following important characteristics into account:

• Trade-off between extensibility (i.e., possibility to add specific features) and built-in functionality.

• Ease of tracking system integration (see “Head-tracking module” and “Full body motion capture module”).

• Reliable workflow for loading 3D file formats.

• Support for virtual mirrors, often used to enhance BOIs.

Our solution. We have successfully used both the XVR platform and the Unity game engine for a number of virtual embodiment experiments (see “Results”). XVR is attractive due to its low cost, extensibility via plug-ins, and built-in cluster rendering support (largely used for non-embodiment VR applications). Additionally, it is straightforward to render in stereo to a HMD. XVR also provides a software interface to access tracking data via network connections. Unity is available for free with a reduced feature set, although a license is necessary for plug-in support. HMD stereo rendering can also be added quite easily in Unity. Unity is especially attractive due to its integrated development environment, which simplifies the creation of interactive VEs for individuals with limited computer programming experience. It also includes a character-animation library, which is useful for virtual embodiment.

Extending XVR and Unity is made possible by the development of external dynamically linked libraries (DLLs) in C++ that can be loaded at runtime. XVR provides direct support for OpenGL, whereas Unity has a built-in high-quality graphics engine including real-time shadows and dynamic particle systems. The flexibility of the plug-in approach enables the easy integration of our custom avatar animation mapping library (Spanlang et al., 2013), which supports mapping the movements of a tracked participant to virtual characters, discussed in Section “Full Body Motion Capture Module”. In addition, for high-quality visualization of virtual characters we have developed the HALCA hardware-accelerated library for character-animation (Gillies and Spanlang, 2010) that we have integrated with a virtual light field approach for real-time global illumination simulation in VR (Mortensen et al., 2008). This enables us to simulate light interactions so that the participant can see reflections and shadows of their virtual avatar, which is quite common in virtual embodiment scenarios (see Results). In Unity, we create virtual mirrors via virtual cameras, whereas in XVR this is achieved via the use of OpenGL stencil buffer or frame buffer object mirrors. One additional feature of the VR module is integration with modeling programs for the creation of the VE. XVR provides a 3DS Max14 exporter plug-in that makes the importing of objects created in 3DS Max quite straightforward. Unity enables the user to import from a number of modeling tools, and enables interactive editing of the VE within the Unity editor.
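One common way to build a camera-based virtual mirror, shown here as a hedged sketch rather than the exact method used in Unity or XVR, is to render the scene from the participant’s eye position reflected across the mirror plane and then show the result on the mirror surface. The helper functions below are hypothetical and assume that the plane normal has unit length.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect_point(p: Vec3, plane_point: Vec3, normal: Vec3) -> Vec3:
    """Mirror point p across the plane through plane_point with unit normal `normal`."""
    d = dot((p[0] - plane_point[0], p[1] - plane_point[1], p[2] - plane_point[2]), normal)
    return (p[0] - 2 * d * normal[0], p[1] - 2 * d * normal[1], p[2] - 2 * d * normal[2])


def reflect_direction(v: Vec3, normal: Vec3) -> Vec3:
    """Mirror a direction vector (e.g. the camera's view direction); no translation."""
    d = dot(v, normal)
    return (v[0] - 2 * d * normal[0], v[1] - 2 * d * normal[1], v[2] - 2 * d * normal[2])


# Mirror on the plane z = -2, facing +z; the participant's eye is at the origin, 1.7 m high
mirror_point, mirror_normal = (0.0, 0.0, -2.0), (0.0, 0.0, 1.0)
print(reflect_point((0.0, 1.7, 0.0), mirror_point, mirror_normal))
print(reflect_direction((0.0, 0.0, -1.0), mirror_normal))
```

Because a reflection reverses handedness, renderers that use this approach typically also flip the triangle winding (front-face) order and clip away geometry lying behind the mirror plane.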

We have used virtual characters from AXYZ Design15, Daz3D16, Mixamo17, Poser18, Rocketbox19, and the open-source software MakeHuman20. AXYZ Design, Mixamo, and Rocketbox characters consist of a relatively small number of polygons and are therefore ideal for real-time visualization in games and VR. Rocketbox and some Mixamo avatars also have a facial skeleton for facial animation. Daz3D and Poser characters usually require an extra conversion step before they can be loaded for visualization. They consist of a larger number of polygons and are therefore less suitable for real-time visualization. There are a few free Daz3D and Poser characters. MakeHuman characters are available with a choice of skeletons and can be stored in low-polygon representations, and are therefore suitable for real-time visualization.

Since the advent of low-cost range sensors such as the Microsoft Kinect for Windows, systems for creating lookalike virtual characters have been demonstrated (Tong et al., 2012; Li et al., 2013; Shapiro et al., 2014). For example, ShapifyMe21 technology, based on Li et al. (2013), provides a service that creates full body scans with a single Kinect from eight depth views of a person who rotates clockwise, stopping in a similar pose every 45°. The system can be run by a single person without the need for an operator. Such scans can be rigged automatically with systems such as the Mixamo22 autorigging service.

Much of the work involved in generating a virtual embodiment scenario will take place using the VR module, so great care should be taken to make sure that useful workflows exist from content creation to display in the VE.

Head-tracking module

The head-tracking module sends the 3D head position and orientation of the participant to the VR module, which uses them to update the virtual camera and hence the participant’s viewpoint in the VE. In other words, the participant’s head motions are converted into a real-time stream of 3D head positions and orientations.

Head-tracking is essential for providing a correct first-person perspective in immersive VEs, which is a necessary component of a virtual embodiment system. Updating the virtual viewpoint from the head movements of the participant has been proposed to be one of the fundamental requirements for presence in VR due to its contribution to the affordance of natural sensorimotor contingencies (Slater, 2009). Head-tracking information can also be used to animate the head of the participant’s virtual character, providing additional feedback to enhance the illusion of embodiment (full body tracking is discussed in Section “Full body motion capture module”).

Various technologies used for tracking include optical, magnetic, inertial, acoustic, and mechanical. Each has its benefits and drawbacks, varying in price, usability, and performance. Low latency, high precision, and low jitter are all very important for VR. In addition, many tracking systems provide 6-DOF wand-like devices that enable manipulation of virtual objects, which can be useful for various scenarios.
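The tension between jitter and latency can be made concrete with a simple smoothing filter. The sketch below is an illustration, not the filter of any particular tracking product: it estimates jitter as the standard deviation of samples recorded while the tracked object is held still, and applies an exponential moving average whose parameter `alpha` trades jitter for lag.

```python
import statistics
from typing import List, Sequence


def ema(samples: Sequence[float], alpha: float) -> List[float]:
    """Exponential moving average: y[i] = alpha * x[i] + (1 - alpha) * y[i-1].

    Smaller alpha suppresses more jitter but makes the output lag further
    behind real movements, i.e. it adds latency.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    if not samples:
        return []
    out: List[float] = []
    y = samples[0]  # start from the first sample to avoid an initial transient
    for x in samples:
        y = alpha * x + (1.0 - alpha) * y
        out.append(y)
    return out


def jitter(samples: Sequence[float]) -> float:
    """Jitter of a stationary recording, estimated as its population standard deviation."""
    return statistics.pstdev(samples)


# A stationary tracker reporting 1.0 with +/-0.01 alternating noise (synthetic data)
noisy = [1.0 + (0.01 if i % 2 else -0.01) for i in range(100)]
print(jitter(noisy[50:]), jitter(ema(noisy, 0.2)[50:]))
```

The cost is visible on a step input: `ema([0, 1, 1, 1], 0.5)` gives `[0.0, 0.5, 0.75, 0.875]`, so a sudden movement is only partly reflected in the first filtered samples.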

Although wireless solutions exist for several of the technologies described below, we have found that wireless communication can substantially increase latency. In addition, wireless connections occasionally drop, which makes them unsuitable for experiments in which uninterrupted tracking is crucial.

Optical systems. Optical tracking systems are either marker-based or markerless. Marker-based optical head-tracking uses special markers (usually retro-reflective) tracked by a set of cameras surrounding the participant. The markers are typically attached to the stereo glasses or HMD worn by the participant. In order to provide robust results, a number of cameras must be placed around the participant, and their number and placement determine the working volume of the tracking system. This kind of system requires occasional calibration, in which the software provided with the cameras computes their geometric configuration to improve the quality of the tracking. Mounting one or more cameras onto the object that needs to be tracked in an environment fitted with fiducial markers is also a possibility for head-tracking. Examples of marker-based optical head-tracking systems include Advanced Real-time Tracking (ART)23, NaturalPoint’s OptiTrack24, PhaseSpace25, and Vicon26. While markerless optical systems exist (see Full body motion capture module), they are typically used for body motion capture and are not currently stable enough to be used for immersive VR head-tracking.

Magnetic systems. Magnetic tracking systems measure magnetic fields generated by running an electric current sequentially through three coils placed perpendicular to each other. A good electromagnetic tracking system is very responsive, with low levels of latency. One disadvantage of these systems is that anything that can generate a magnetic field (e.g., mobile phones, etc.) can interfere with the signals sent to the sensors. Also, significant warping of the electromagnetic field, and therefore the tracking data, occurs further away from the center of the tracked space. Polhemus27 offers a set of electromagnetic-based tracking devices.

Acoustic systems. Acoustic trackers emit and sense ultrasonic sound waves to determine the position and orientation of a target by measuring the time it takes for the sound to reach a sensor. Usually the sensors are stationary in the environment and the participant wears the ultrasonic emitters. Acoustic tracking systems have many disadvantages. Sound travels relatively slowly, so the update rate of the participant’s position and orientation is also slow. The environment can also adversely affect the system’s efficiency because the speed of sound through air can change depending on temperature, humidity, or barometric pressure.
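The dependence on the speed of sound can be illustrated with a small time-of-flight calculation. This sketch uses the standard linear approximation c ≈ 331.3 + 0.606·T m/s for dry air (T in °C). It is not the algorithm of any commercial tracker, which would also combine several emitter–receiver distances to solve for position and orientation.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air (m/s) at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def tof_distance(time_of_flight_s: float, temp_c: float = 20.0) -> float:
    """Emitter-receiver distance (m) from a one-way time of flight."""
    return speed_of_sound(temp_c) * time_of_flight_s


# A 3 m path measured in a room at 30 degrees C, but converted assuming 20 degrees C
true_distance = 3.0
t = true_distance / speed_of_sound(30.0)
estimate = tof_distance(t, temp_c=20.0)
print(f"time of flight: {t * 1000:.1f} ms, distance error: {true_distance - estimate:.3f} m")
```

With these numbers, a single ping takes almost 9 ms to cross 3 m, which limits the update rate, and a 10 °C temperature mismatch alone produces an error of roughly 5 cm.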

Inertial systems. Inertial tracking systems use small gyroscopes to measure orientation changes. If full 6-DOF tracking is required, they must be supplemented by a position-tracking device. Inertial tracking devices are fast and accurate, and their range is limited only by the length of the cable connected to the computer. Their main disadvantage is drift: the discrepancy between actual and reported values accumulates over time and can reach 10° per minute. Inertial head-tracking is therefore sometimes combined with a slower tracking technology that can be used to correct drift errors while maintaining the high responsiveness of the inertial tracker.
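One common way to combine a fast but drifting inertial estimate with a slower absolute reference is a complementary filter. The single-axis sketch below is illustrative only and is not the algorithm of any particular product; it assumes a 180 Hz gyro, a constant bias equal to the 10°/min worst case quoted above, and an absolute yaw reading from the slower tracker every 18 samples (10 Hz).

```python
def complementary_yaw(gyro_rates, dt, references, alpha=0.98):
    """Fuse a fast gyro yaw rate with occasional absolute yaw readings.

    gyro_rates: angular rate (deg/s) for each fast sample
    dt: fast sample period (s)
    references: {sample index: absolute yaw in degrees} from the slower tracker
    alpha: weight kept on the integrated estimate whenever a reference arrives
    """
    yaw = 0.0
    estimates = []
    for i, rate in enumerate(gyro_rates):
        yaw += rate * dt                      # dead reckoning: fast, but integrates the bias
        if i in references:                   # an absolute reading is available at this sample
            yaw = alpha * yaw + (1.0 - alpha) * references[i]
        estimates.append(yaw)
    return estimates


rate_hz = 180
dt = 1.0 / rate_hz
bias = 10.0 / 60.0                            # 10 deg/min expressed in deg/s
gyro = [bias] * (60 * rate_hz)                # head held still for one minute
raw = complementary_yaw(gyro, dt, references={})
fused = complementary_yaw(gyro, dt, references={i: 0.0 for i in range(0, len(gyro), 18)})
print(f"gyro only: {raw[-1]:.2f} deg, fused: {max(abs(y) for y in fused):.2f} deg max")
```

With pure integration the error after one minute is 10°, whereas the corrected estimate stays below 1°. A larger `alpha` trusts the gyro more (smoother output, more residual drift); a smaller one follows the reference more closely but inherits its noise.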

Mechanical systems. Mechanical tracking systems measure position and orientation with a direct mechanical connection to the target. Typically a lightweight arm connects a control box to a headband, and joint angles are measured by encoders to determine the position and orientation of the end of the arm. The lag for mechanical trackers is very small (<5 ms), their update rate is fairly high (300 Hz), and they are very accurate. Their main disadvantage is that participant motion is constrained by the reach of the mechanical arm.
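Turning encoder readings into the pose of the arm's end is a forward kinematics computation. A minimal sketch for a planar arm, assuming each encoder reports the angle of a link relative to the previous one; real head-tracking arms are three-dimensional with more joints, and the link lengths below are illustrative.

```python
import math


def planar_arm_end(link_lengths, joint_angles_deg):
    """Forward kinematics of a planar serial arm.

    Returns (x, y, heading_deg) of the end of the last link, with the base at the origin.
    """
    x = y = 0.0
    heading = 0.0
    for length, angle in zip(link_lengths, joint_angles_deg):
        heading += angle                          # encoders report relative joint angles
        x += length * math.cos(math.radians(heading))
        y += length * math.sin(math.radians(heading))
    return x, y, heading


# Hypothetical two-link arm: 0.5 m link pointing up, then a 0.4 m link bent back to horizontal
print(planar_arm_end([0.5, 0.4], [90.0, -90.0]))
```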

The role of the head-tracking module is to connect to the tracking device, retrieve the position and orientation of the participant's head, and send them to the VR module. The head-tracking module must therefore have a communication interface to the VR module. Two basic approaches are to communicate via a network interface (either locally or remotely) or via a plug-in architecture in the VR module. Similarly, there are two basic approaches to software organization. One is to write software for each device that interfaces directly between the tracker control software and the VR module; the other is to use a middleware solution, such as VRPN (Taylor et al., 2001) or trackd²⁸, which presents a consistent interface to the VR module for a large number of devices. Interfacing directly enables the experimenter to implement specific operations that might be required. On the other hand, new software must be written for each new tracking system connected to the VR module, and each device may have a very different software interface.
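The middleware idea can be sketched as a single interface that every device adapter implements, plus a fixed message format for the network case. Everything here is hypothetical (class names, the millimetre-reporting device, and the 7-double wire format); it is not the VRPN or trackd API, whose own documentation should be consulted when using them.

```python
import struct
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Pose:
    position: Tuple[float, float, float]             # metres
    orientation: Tuple[float, float, float, float]   # unit quaternion (w, x, y, z)


class Tracker(ABC):
    """The one interface the VR module sees, whatever device is behind it."""

    @abstractmethod
    def read_pose(self) -> Pose: ...


class MillimetreTracker(Tracker):
    """Hypothetical device whose SDK reports millimetres; the adapter converts units."""

    def read_pose(self) -> Pose:
        x_mm, y_mm, z_mm = 120.0, 1650.0, -40.0      # stand-in for a vendor SDK call
        return Pose((x_mm / 1000, y_mm / 1000, z_mm / 1000), (1.0, 0.0, 0.0, 0.0))


class MetreTracker(Tracker):
    """Hypothetical device that already reports metres."""

    def read_pose(self) -> Pose:
        return Pose((0.1, 1.7, 0.0), (1.0, 0.0, 0.0, 0.0))


# Hypothetical wire format for the network case: 7 little-endian doubles
POSE_FORMAT = "<7d"


def pack_pose(p: Pose) -> bytes:
    return struct.pack(POSE_FORMAT, *p.position, *p.orientation)


def unpack_pose(data: bytes) -> Pose:
    values = struct.unpack(POSE_FORMAT, data)
    return Pose(values[:3], values[3:])


# The VR-module side is identical for every device
for tracker in (MillimetreTracker(), MetreTracker()):
    message = pack_pose(tracker.read_pose())   # would travel over the network or loopback
    print(unpack_pose(message))
```

Unit conversion and device quirks stay inside each adapter, which is the main benefit of the middleware approach; the cost is that device-specific features not covered by the common interface are harder to reach.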

Important characteristics of a head-tracking system include:

• Stability: the head-tracker has to be as stable as possible in order to prevent jitter, which could induce simulator sickness.

• Latency: similarly, systems with high latency can result in increased simulator sickness (Allison et al., 2001; Meehan et al., 2002).

• Precision: head-tracking is essential for immersion (Allison et al., 2001); therefore, even small head movements should be tracked in order not to break the feeling of presence within the VE.
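Stability can be checked before an experiment by recording the tracker while the HMD rests motionless on a stand and computing the RMS deviation of the reported positions. A minimal sketch, assuming samples in metres; an acceptable threshold depends on the setup and is not given here.

```python
import math


def jitter_rms(samples):
    """Root-mean-square distance of 3D samples from their mean position.

    samples: sequence of (x, y, z) tuples recorded while the tracked object is still.
    """
    n = len(samples)
    mean = [sum(s[i] for s in samples) / n for i in range(3)]
    squared = [sum((s[i] - mean[i]) ** 2 for i in range(3)) for s in samples]
    return math.sqrt(sum(squared) / n)


# Illustrative recording: two positions 2 mm apart along x
print(jitter_rms([(0.0, 1.7, 0.0), (0.002, 1.7, 0.0)]))  # about 0.001 m
```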

Our solution. We use the Intersense IS-900²⁹ tracker, which is a hybrid acoustic/inertial system. We chose it in part for its stability when the participant is not moving. This is very important for VR systems, especially when participants wear HMDs, as jittery head-tracking can cause motion sickness.

This device has low latency (around 4 ms), an update rate of 180 Hz, and a precision of 0.75 mm in position and 0.05° in orientation. We connect to the tracker with a VRPN server that reads the information from the device and sends it to a VRPN client plug-in running in the VR module. This connection can be made either over a network, when two different machines are used, or over a loopback connection when both run on the same machine (VRPN handles both cases transparently). The IS-900 also offers a tracked wand, likewise interfaced through VRPN, that can be used as an interaction device during experiments.
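The figures above translate into a simple budget. Assuming a brisk head turn of 100°/s (an illustrative value), a 4 ms tracker latency alone corresponds to 0.4° of angular lag. Samples at 180 Hz are about 5.6 ms apart, so a pose can also be up to one period old when the renderer reads it. Rendering and display add further latency that is not modeled here.

```python
def update_period_ms(rate_hz: float) -> float:
    """Time between consecutive tracker samples."""
    return 1000.0 / rate_hz


def angular_lag_deg(head_speed_deg_s: float, latency_ms: float) -> float:
    """How far the head turns during a given latency, at constant angular speed."""
    return head_speed_deg_s * latency_ms / 1000.0


def worst_case_lag_deg(head_speed_deg_s: float, latency_ms: float, rate_hz: float) -> float:
    """Tracker latency plus one full sample period (the renderer just missed a new sample)."""
    return angular_lag_deg(head_speed_deg_s, latency_ms + update_period_ms(rate_hz))


print(update_period_ms(180))            # about 5.56 ms
print(angular_lag_deg(100, 4))          # 0.4 degrees for the assumed 100 deg/s turn
print(worst_case_lag_deg(100, 4, 180))  # about 0.96 degrees
```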

Display module

The display module comprises the hardware used to present the VE to the participant. Different display types can be used to achieve different levels of immersion in conjunction with the other VR technologies employed (e.g., head-tracking), where we characterize immersion by the sensorimotor contingencies supported by the VR system (Slater, 2009). Greater immersion may increase the chance that the illusion of presence in the VE occurs.

Typical displays used for VR include standard computer screens, powerwalls, CAVEs (Cruz-Neira et al., 1992), and HMDs. Although both the CAVE and the HMD support high levels of immersion, the HMD is the only one of these devices that blocks the participant's own body from their FOV. This makes replacing the participant's real body with a virtual body much more straightforward. For the remainder of this section, we therefore concentrate on HMDs. More in-depth information on the design of HMDs can be found in Patterson et al. (2006).

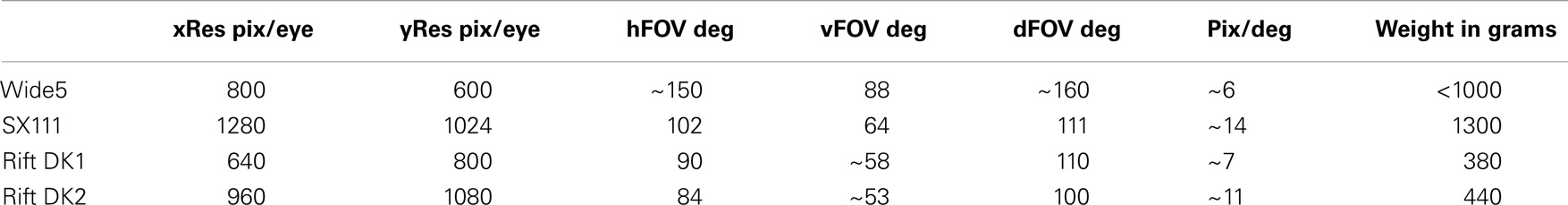

Important characteristics of an HMD for virtual embodiment experiments include resolution, FOV, and weight. Stereoscopy is standard for most HMDs.

Resolution. Resolution refers to the number and density of pixels in the HMD's displays. Attention should be paid to whether the specified resolution is per eye or in total, and to the difference between the numbers of horizontal and vertical pixels.

FOV. FOV refers to the extent of the VE visible to the participant at any given moment. FOV has been argued to be a very important factor in the perceived degree of presence in VEs (Slater et al., 2010b) and in the performance of tasks carried out in VEs (Arthur, 1996; Willemsen et al., 2009). Three types of FOV are usually distinguished: horizontal (hFOV), vertical (vFOV), and diagonal (dFOV). For virtual embodiment applications, vFOV can be especially important, as a larger vFOV lets the participant see more of the virtual body. Most HMDs do not completely block the wearer's view of the real world, which can strongly affect the level of immersion; this is an important shortcoming to which HMD designers should pay more attention. We place black cloth over our HMDs to ensure that the real world is completely occluded.
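Resolution and FOV are easiest to compare together as angular pixel density. A minimal sketch, assuming a flat per-eye image plane and ignoring lens distortion (which makes the density non-uniform in practice). The SX111 values come from the Figure 3 caption, on the assumption that the 2560-pixel width is split evenly between the two eyes.

```python
import math


def pixels_per_degree(pixels: int, fov_deg: float) -> float:
    """Average angular pixel density along one axis of one eye's display."""
    return pixels / fov_deg


def diagonal_fov(hfov_deg: float, vfov_deg: float) -> float:
    """Diagonal FOV of a flat (rectilinear) per-eye image from its horizontal and vertical FOV."""
    th = math.tan(math.radians(hfov_deg) / 2)
    tv = math.tan(math.radians(vfov_deg) / 2)
    return math.degrees(2 * math.atan(math.hypot(th, tv)))


# SX111 values from the Figure 3 caption, assuming 2560 x 1024 means 1280 x 1024 per eye
per_eye_x, per_eye_y = 2560 // 2, 1024
print(pixels_per_degree(per_eye_x, 76), pixels_per_degree(per_eye_y, 64))
print(diagonal_fov(76, 64))
```

For these numbers the average density is about 16.8 pixels per degree horizontally and 16 vertically, and the diagonal FOV is roughly 90°.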

Weight. Weight can be an important factor in the choice of HMD, as a cumbersome HMD can cause fatigue in the participant, especially over the course of a long experiment. The weight issue can be exacerbated in virtual embodiment scenarios if the participant often looks down to see their virtual body. Indeed, Knight and Baber (2004, 2007) showed that whereas participants with an unloaded head showed no sign of musculoskeletal fatigue after 10 min, a load of 0.5 kg attached to the front of the head induced fatigue after 4 min, and a 2-kg load induced fatigue after only 2 min. However, the distribution of the weight must also be taken into consideration, as a slightly heavier HMD with well-distributed weight can be more comfortable than a lighter HMD that has most of its weight at the front.

To display the VE in an HMD, the rendering software has to take into account the technical characteristics of the device used. In particular, each HMD differs in its optical configuration, so the virtual cameras used to render the stereo views must be set up correctly for each HMD. Each manufacturer should provide the technical specifications needed to set up the virtual cameras accordingly. HMDs vary widely in price, and there is often a trade-off between cost, weight, resolution, and FOV. Recently, HMDs targeting consumer use for video games have been developed. Important properties of the Oculus Rift³⁰ developer kits (DK1 and DK2) are given in Table 3.
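For an HMD whose optical axis passes through the center of each display, the per-eye frustum follows directly from the manufacturer's FOV. The sketch below computes the bounds in the argument order that OpenGL's glFrustum expects and the projection matrix glFrustum builds. It assumes on-axis optics; HMDs with off-center optics need asymmetric bounds, which `projection_matrix` also accepts.

```python
import math


def symmetric_frustum(hfov_deg, vfov_deg, near, far):
    """Frustum bounds (left, right, bottom, top, near, far) in glFrustum's argument order."""
    right = near * math.tan(math.radians(hfov_deg) / 2)
    top = near * math.tan(math.radians(vfov_deg) / 2)
    return (-right, right, -top, top, near, far)


def projection_matrix(l, r, b, t, n, f):
    """The matrix glFrustum builds, as a row-major list acting on column vectors."""
    return [
        [2 * n / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2 * n / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(f + n) / (f - n), -2 * f * n / (f - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]


# SX111-style per-eye values from the Figure 3 caption: hFOV 76, vFOV 64, near 0.01, far 1000
P = projection_matrix(*symmetric_frustum(76, 64, 0.01, 1000))
for row in P:
    print(row)
```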

Our solution. The display module is fundamental for generating BOIs in VR, and characteristics such as FOV, resolution, and weight must be carefully considered before choosing an HMD.

We have found that FOV is a crucial feature of the HMD for inducing presence in the VE (Slater et al., 2010b), although a good balance between FOV and resolution is desirable. We first used a Fakespace Labs Wide 5³¹. The Wide 5 splits the view for each eye into a high-resolution focused view and a lower-resolution peripheral view, and can therefore provide a sharper image in the center while maintaining a wide hFOV. In practice, however, users experienced pixelation artifacts due to the low resolution of the Wide 5.

We are now using the NVIS nVisor SX111³². The SX111 uses LCoS (Liquid Crystal on Silicon) displays and more complex optics to deliver much less pixelated images to the user's eyes than the Wide 5. On the other hand, because of the LCoS technology, some red and blue vertical lines may appear during rapid head movements. With a weight of 1.3 kg, the SX111 is also quite heavy, due in large part to the size of the displays. We are currently evaluating consumer HMDs in the hope of achieving a good balance between FOV, resolution, weight, and price. An overview of HMD properties is given in Table 3.

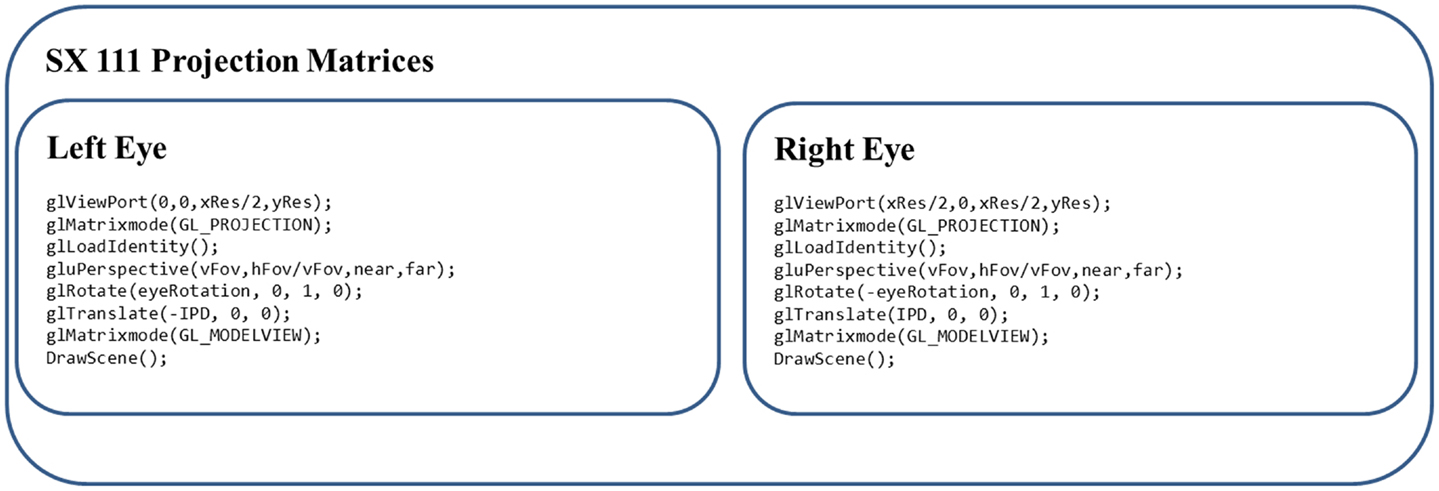

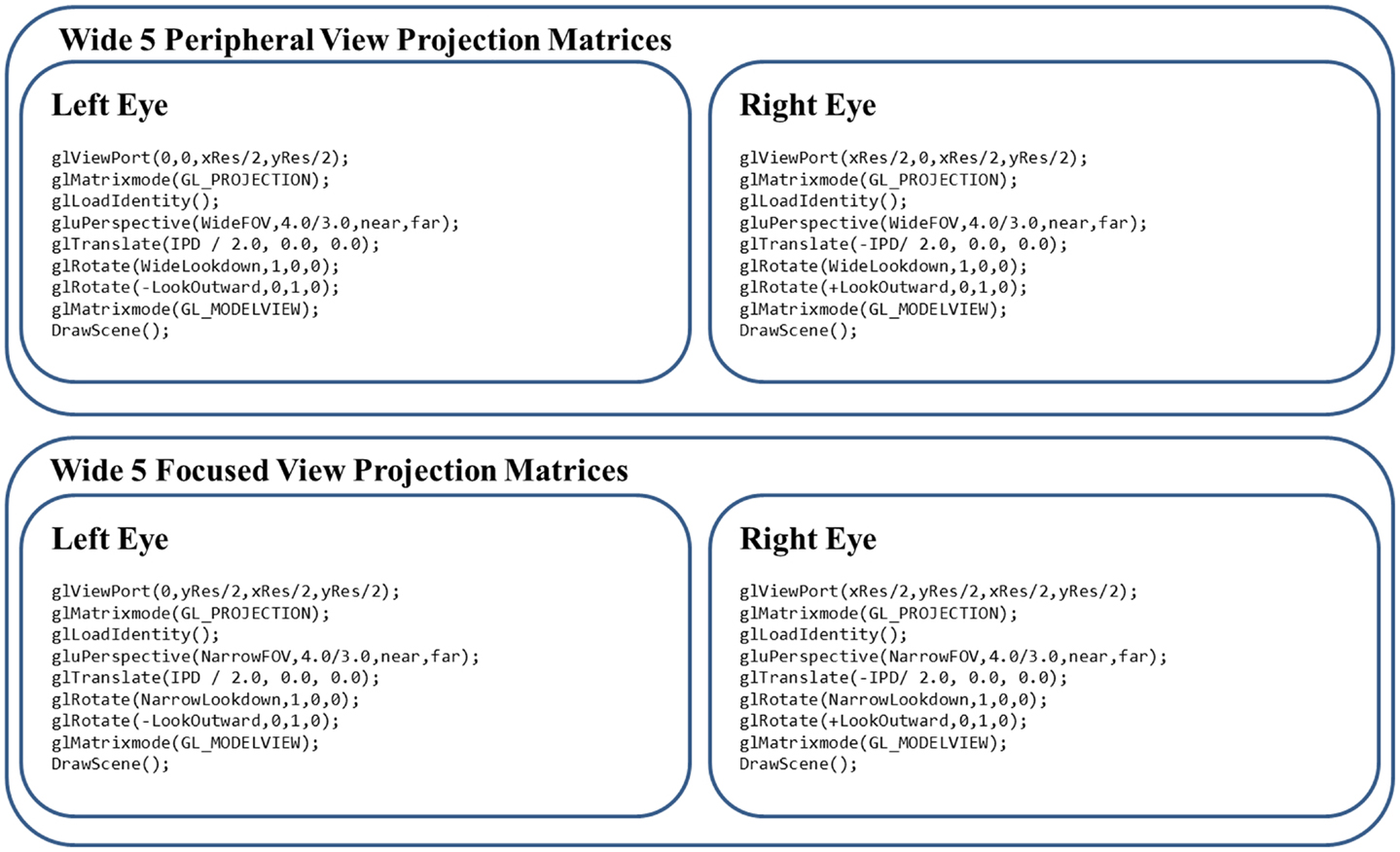

Incorporating different HMDs into both XVR and Unity has proven to be quite straightforward, but it should be noted again that each HMD has different characteristics, and the virtual cameras used to render for an HMD must be set up to match its specifications. Figures 3 and 4 show the OpenGL code for the SX111 and the Wide5 HMDs, respectively. For the Oculus Rift, Oculus provides an SDK for correct rendering in game engines such as Unity.

Figure 3. SX111 HMD OpenGL commands required to set up the matrices for the left- and right-eye displays. According to the manufacturer's specifications, xRes = 2560; yRes = 1024; hFov = 76°; vFov = 64°; eyeRotation = 13.0°; IPD = 0.064 m; we set the near and far clipping planes to 0.01 and 1000, respectively.

Figure 4. Fakespace Labs Wide5 HMD OpenGL commands required to set up the matrices for the left and right eyes in both the peripheral and the focused view. The peripheral view for the left and right displays is rendered to the top half of the screen, and the focused view to the bottom half. According to the manufacturer's specifications, xRes = 1600; yRes = 1200; WideFOV = 140°; NarrowFOV = 80°; IPD = 0.064 m; WideLookDown = 5.0°; NarrowLookDown = 10.0°; LookOutward = 11.25°; we set the near and far clipping planes to 0.01 and 1000, respectively.
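One reading of the SX111 parameters in Figure 3 is that each eye's camera is offset by half the IPD and rotated outward by `eyeRotation`. This interpretation is an assumption, but it is consistent with the numbers: two 76° views canted outward by 13° each cover 76 + 2·13 = 102° in total, with 76 − 2·13 = 50° of binocular overlap. A sketch of that camera layout in head coordinates (x right, y up, −z forward, as in OpenGL); the view matrix for each eye is the inverse of the camera pose computed here.

```python
import math
from dataclasses import dataclass


@dataclass
class EyeCamera:
    offset_x: float   # metres along the head's x axis (positive = right)
    yaw_deg: float    # rotation about the head's y axis (positive = turned left)


def canted_eyes(ipd=0.064, eye_rotation_deg=13.0):
    """Left/right eye cameras: half the IPD to each side, each rotated outward."""
    left = EyeCamera(-ipd / 2, +eye_rotation_deg)
    right = EyeCamera(+ipd / 2, -eye_rotation_deg)
    return left, right


def forward_vector(yaw_deg):
    """Viewing direction in head coordinates (OpenGL convention: -z is forward)."""
    a = math.radians(yaw_deg)
    return (-math.sin(a), 0.0, -math.cos(a))


def binocular_coverage(hfov_deg, eye_rotation_deg):
    """Total horizontal FOV and binocular overlap of two outward-canted eyes."""
    return hfov_deg + 2 * eye_rotation_deg, hfov_deg - 2 * eye_rotation_deg


left, right = canted_eyes()
print(forward_vector(left.yaw_deg), forward_vector(right.yaw_deg))
print(binocular_coverage(76, 13.0))   # (102.0, 50.0)
```

As a further consistency check, tan(38°)/tan(32°) ≈ 1.25, which matches the aspect ratio of a 1280 × 1024 per-eye image.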

Core system summary

In this section we have presented a minimal set of modules required for a system to achieve virtual embodiment. Such a system can present 1PP views of a virtual avatar, co-locating the virtual body with the participant's real body and thereby matching the visual and proprioceptive information perceived by the participant. This core system is somewhat limited: only the participant's head is tracked, so the head is the only body part whose movements can be replicated in the avatar. Nevertheless, such a simple system has been shown to be sufficient to induce FBOIs (Slater et al., 2010a; Maselli and Slater, 2013). The following sections present extensions used to enhance and measure the BOIs.

Multimodal Stimulation Modules

Multimodal stimulation, such as visuo-motor and visuo-tactile correlations, can strengthen the feeling of ownership over the virtual body. The following sections describe modules enabling such multisensory stimulation.

Full body motion capture module

This module captures the motion of the body in addition to that of the head. These body movements can be used to animate the virtual human character representing the self, providing visuo-motor feedback, which is a powerful tool for inducing BOIs (Banakou et al., 2013). A virtual mirror can be particularly useful when combined with full body tracking, as participants can see the reflection of their virtual body moving as they move. We concentrate our discussion on full body tracking systems, as these have the greatest general utility for virtual embodiment scenarios. However, tracking individual limbs, often in conjunction with inverse kinematics solutions, or even tracking the eyes (Borland et al., 2013), can also be useful. In general, the full body motion capture module is a set of hardware and software that maps the participant's body movements onto a set of positions and/or rotations of the avatar's bones.

As with the head-tracking module (see Head-Tracking Module), multiple solutions exist for motion capture of an entire body, based on a similar set of technologies: optical, magnetic, mechanical, and inertial. Here, we briefly present the characteristics of each motion capture technology as well as its benefits and drawbacks. A thorough review of motion capture systems can be found in Welch and Foxlin (2002).

Marker-based optical systems. Marker-based optical systems include the OptiTrack ARENA system from NaturalPoint, ART, PhaseSpace, and Vicon. These systems require the participant to wear a suit with reflective or active (using LEDs at specific frequencies) markers viewed by multiple infrared cameras surrounding the participant. A calibration phase is typically necessary for each participant to map their body morphology to the underlying skeleton in the tracking system. While NaturalPoint offers fairly affordable low-end and reasonably priced high-end systems, systems from Vicon, PhaseSpace, and ART are generally more costly.

Markerless optical systems. Markerless optical tracking systems use computer vision techniques to identify human bodies in motion. Organic Motion³³ offers the OpenStage markerless optical tracking system, which relies on computer vision algorithms. Although this system does not require a per-participant calibration step (the system as a whole still requires periodic calibration), it requires fine control of lighting conditions and background color. A very inexpensive solution for markerless optical full body tracking is Microsoft's Kinect³⁴. This device uses a structured-light depth camera (a time-of-flight camera in version 2) together with a standard color camera to track the movements of a participant without any markers. The capture volume is rather small (good precision can only be achieved at distances between 80 cm and 2.5 m from the camera), but the system is internally calibrated to an accuracy of about 1.5 cm (Boehm, 2012), which is usually sufficient given measurement errors on the order of 1 cm at sensor-to-user distances of more than 1 m. Although the Kinect can be used for very specific scenarios, the types of motion it can track are limited. For example, it cannot track the user from a side view or in a seated position without severe tracking issues, and more complex postures cannot be tracked because the Kinect observes the scene from a single viewpoint. We have recently started to use the Kinect in our development and experimental studies.

The advantage of markerless tracking systems is that the participant does not have to wear a suit, markers, or any other tracking device (although tight-fitting clothing is recommended); this benefit is lessened, however, if the participant is already wearing stereo glasses or an HMD. On the downside, optical systems often work well only under controlled lighting (some active marker-based systems are claimed to work outdoors as well, whereas passive marker-based systems require very controlled environments). Markerless systems can also introduce higher latency because of heavy computer vision processing, and they are typically less precise than marker-based systems. In general, optical technologies are sensitive to occlusions, which makes it difficult to track participants in cluttered environments or during close interactions between multiple participants.

Inertial systems. Inertial systems use a suit with gyroscopes and accelerometers that measure the participant's joint rotations. No cameras or markers are required. Unlike with the optical solutions presented above, tracking can be performed both indoors and outdoors, since no cameras are required and communication is usually wireless. A calibration step is typically required after the participant puts on the suit. Benefits of inertial systems include low computational requirements, portability, and large capture areas. Disadvantages include lower positional accuracy and positional and rotational drift, which can compound over time. Drift is often corrected by using magnetic north as a reference, although this can be affected by metallic structures in the environment.

The MVN system is an inertia-based full body tracking system commercialized by Xsens³⁵. Animazoo³⁶ offers the IGS system, based on gyroscopes attached to a suit. The two solutions are quite similar in terms of specifications, capabilities, and price. Given the ubiquity of inertial sensors in mobile devices, a recently announced system aims to deliver similar quality at a price two orders of magnitude lower³⁷.

Mechanical systems. Mechanical systems use an exoskeleton worn by the participant that measures the participant's joint angles. Mechanical motion capture systems are occlusion-free and can be wireless (untethered), with a virtually unlimited capture volume. However, they require wearing a cumbersome and heavy device, which limits the range and speed of the participant's movements. An example of a full body mechanical tracking system is the Gypsy 7³⁸ from MetaMotion.

Magnetic systems. Magnetic systems also require the participant to wear a body suit to which the electromagnetic trackers are attached. The capture volumes of magnetic systems are typically much smaller than those of optical systems, and significant warping can occur toward the edge of the tracked space. Polhemus³⁹ offers a set of electromagnetic tracking devices.

Regardless of the tracking technology used, the tracking data must be transferred to the VR module to animate the virtual character. The skeletal structure used to represent the participant's joint angles in the tracking system often differs from the one used to represent the participant in the VE, so a mapping from one to the other must be performed. Apart from our own system, the only solution we know of for handling different tracking systems in a VR system is Vizard in combination with Autodesk MotionBuilder, and it comes at a considerable cost just for the plug-in that reads MotionBuilder data into Vizard. We have therefore developed our own interface, described below.

Important characteristics when choosing a full body motion capture system include:

• Latency: as for head-tracking, displaying delayed movements in the VE can induce breaks-in-presence for the participants.

• Precision: this criterion is less important than for the head-tracking module, in particular for body parts farther away from the eyes, but precise tracking can improve the correspondence between the participant's real and virtual movements.

• Occlusions: in order not to restrict the participant's movements, the motion tracking solution should be able to deal with self-occlusions.

• Ergonomics: some full body motion capture solutions require participants to wear a suit that can restrict their movement and lengthen the setup of the experiment.

• Integration with the VR module: the full body motion capture solution should provide an easy way to integrate tracked movements with the VR module.

• Indoor/Outdoor use: experimenters have to decide whether they need to be able to carry out experiments outdoors.

Our solution. We have experience mainly with optical and inertial full body motion tracking systems. As mentioned before, each technology has its own benefits and drawbacks. Optical systems need controlled lighting and are sensitive to occlusions (of either the markers or body parts). On the other hand, they are relatively cheap, easy to use, and relatively precise in a controlled environment. Inertial systems are prone to drift (error accumulating over time) and usually provide only rotational information (although additional modules can provide position information as well); their suits are more cumbersome to put on; they are usually more expensive than optical systems; and their drift correction usually relies on finding magnetic north, which can be disturbed in buildings with metal structures. On the other hand, they enable tracking in large environments, do not suffer from occlusions, typically have lower latency than optical systems, and do not require controlled lighting.

As stated by Welch and Foxlin (2002), there is no “silver bullet” for motion tracking, but many options are available. Hence, the choice of a full body motion tracking system will depend greatly on the environment in which the experiments or applications will take place (in terms of space, lighting, presence of magnetic perturbations, etc.). The choice also depends on the experimental setup. For example, haptic devices placed close to the participant might occlude the cameras' view and thus prevent the use of optical tracking systems.

For our embodiment experiments, in which the participant wears an HMD (see Display module), we recommend using either a marker-based optical tracking system (e.g., OptiTrack, PhaseSpace, ART, or Vicon) or an inertial suit (such as the Xsens MVN). Such systems provide stable, easy-to-use tracking and impose fewer constraints than markerless optical and mechanical systems.

Experimenters should note that using a full body tracking system requires some time for the participant to put on and to remove the suit (or the markers depending on the system used) as well as for calibration of the participant’s body to the underlying tracking skeleton. This time is not negligible and in our experience it can add up to 20 min to the normal duration of the experiment.

Because the skeletal structure used internally by the tracking system may differ from that of the avatar representing the participant in the VE, one skeleton must be mapped onto the other. We have developed an avatar mapping library that supports such mappings from a number of tracking systems (e.g., OptiTrack, Xsens, and Kinect) to humanoid skeletons, by specifying which bones correspond between the two skeletons and which coordinate-system transform and rotational offset apply to each bone mapping. Once specified, the mapping can be applied in real time. This library has been successfully incorporated as a plug-in to both XVR and Unity (Spanlang et al., 2013).
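The per-bone mapping described above can be sketched with quaternions. The source rotation is re-expressed in the target skeleton's axes by conjugating it with a fixed frame rotation, and a rest-pose offset is then applied. This is a hypothetical illustration, not the API of the library cited above: class and field names are ours, and the order in which the offset is applied is a convention that must match the avatar.

```python
import math
from dataclasses import dataclass


def qmul(a, b):
    """Hamilton product of quaternions given as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (aw * bw - ax * bx - ay * by - az * bz,
            aw * bx + ax * bw + ay * bz - az * by,
            aw * by - ax * bz + ay * bw + az * bx,
            aw * bz + ax * by - ay * bx + az * bw)


def qconj(q):
    """Conjugate, equal to the inverse for unit quaternions."""
    w, x, y, z = q
    return (w, -x, -y, -z)


@dataclass
class BoneMapping:
    source_bone: str
    target_bone: str
    frame: tuple    # unit quaternion rotating source axes into target axes
    offset: tuple   # unit quaternion for this bone's rest-pose difference


def retarget(source_pose, mappings):
    """Map {source bone: local rotation} to {target bone: local rotation}."""
    target_pose = {}
    for m in mappings:
        q = source_pose[m.source_bone]
        q = qmul(qmul(m.frame, q), qconj(m.frame))   # same rotation, expressed in target axes
        target_pose[m.target_bone] = qmul(q, m.offset)
    return target_pose


# Hypothetical example: the tracker's x axis corresponds to the avatar's y axis
c = math.cos(math.radians(45))
s = math.sin(math.radians(45))
mapping = BoneMapping("LeftForeArm", "l_forearm", frame=(c, 0.0, 0.0, s),
                      offset=(1.0, 0.0, 0.0, 0.0))
print(retarget({"LeftForeArm": (c, s, 0.0, 0.0)}, [mapping]))
```

In the example, a 90° rotation about the tracker's x axis becomes a 90° rotation about the avatar's y axis.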

Additionally, while performing virtual embodiment experiments with HMDs, we found that head-tracking was far more robust and stable with a separate head-tracker (see Head-tracking module) than when relying on the full body tracking software to provide head movements. Head movements from the full body tracking software showed more jitter and jerkiness; as a consequence, participants were more prone to simulator sickness, and we sometimes had to stop the experiment. There are several possible reasons why head-tracking data from a full body tracking system are less stable. Full body tracking software performs an optimization across all skeletal joints, which may introduce errors in the head position and orientation. Also, for optical systems, in addition to the usual occlusion problems, HMDs may cause reflections that temporarily lead to erroneous marker identification and therefore to jitter.

To overcome these issues, we have developed techniques for integrating the data from a full body tracking system and a separate head-tracking system that are agnostic to the particular tracking systems used (Borland, 2013).
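The cited technique is not detailed here. As a minimal illustration of the general idea only, one can express the dedicated head-tracker's pose in the body tracker's coordinate frame through a fixed calibration transform and let it override the head joint. The sketch assumes 4 × 4 homogeneous matrices and a calibration transform `head_to_body` estimated beforehand (both hypothetical). A complete solution would also adjust the neck and upper spine so that the skeleton stays connected.

```python
def matmul4(a, b):
    """Product of two 4x4 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def translation(x, y, z):
    """Homogeneous 4x4 translation matrix."""
    return [[1.0, 0.0, 0.0, x], [0.0, 1.0, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


def fuse_head(body_skeleton, head_pose, head_to_body):
    """Copy of the body-tracker skeleton whose 'head' joint comes from the head tracker.

    body_skeleton: {joint name: 4x4 global pose in the body tracker's frame}
    head_pose: 4x4 pose reported by the dedicated head tracker, in its own frame
    head_to_body: 4x4 calibration transform from head-tracker to body-tracker frame
    """
    fused = dict(body_skeleton)                      # leave the original skeleton untouched
    fused["head"] = matmul4(head_to_body, head_pose)
    return fused


# Illustrative numbers: the two tracking frames differ by 10 cm along x
skeleton = {"hips": translation(0.0, 1.0, 0.0), "head": translation(0.02, 1.68, 0.01)}
fused = fuse_head(skeleton, translation(0.0, 1.7, 0.0), translation(0.1, 0.0, 0.0))
print([row[3] for row in fused["head"][:3]])        # head position now from the head tracker
```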

Haptics module

This module consists of the hardware that provides the tactile stimuli and the software that triggers the haptic actuators in correlation with the virtual objects visible in the scenario. A number of studies have shown that haptic feedback enhances the sense of presence in VEs (Reiner, 2004) as well as the sense of social presence in collaborative VEs (Sallnäs et al., 2000; Giannopoulos et al., 2008). Furthermore, adding vibro-tactile haptics to a VR training scenario has been shown to improve task performance (Lieberman and Breazeal, 2007; Bloomfield and Badler, 2008). Vibro-tactile displays have been used in a variety of scenarios and applications, such as navigation aids in VEs (Bloomfield and Badler, 2007), improving motor learning by enhancing auditory and visual feedback (Lieberman and Breazeal, 2007), and sensory substitution (Visell, 2009). A survey of haptics systems and applications is given by Lin et al. (2008).

Haptic modalities differ considerably from audio-visual modalities in how information is transmitted. Whereas visual and auditory information propagates through space from the source to the sensory organ, the haptic modality requires direct contact between the source and the receptor (an exception is the use of low-frequency audio). Furthermore, delivering haptic stimuli is complicated by the variety and specialization of the touch receptor cells in our skin for different types of haptic stimuli, such as pressure, vibration, force, and temperature.

Haptic technologies are therefore usually application-specific, typically accommodating one or two types of stimulation. As a consequence, different devices exist for delivering different types of haptic sensation.

Active force-feedback devices. Active force-feedback devices can simulate the forces that would be encountered when touching the real counterpart of a virtual object. Example devices include point-probe devices, e.g., the Phantom®⁴⁰ (Massie and Salisbury, 1994), and exoskeletal devices, e.g., the CyberGlove®⁴¹.

Low-frequency audio. Low-frequency audio, delivered for example through subwoofers, can be an effective way to deliver tactile sensations. For example, it can provide a means of collision response if higher-quality haptics are not available (Blom et al., 2012).

Encounter-type devices. Encounter-type devices (Klare et al., 2013) provide force-feedback for various objects by tracking the participant's body and moving (sometimes even shaping) the device to present the correct surface at the correct position with respect to the virtual scenario.

Temperature transducers. Temperature transducers (e.g., Peltier elements) can produce a range of temperatures on the participant's skin. The most common temperature transducers are ordinary electric heaters, which, together with fans, can give the sensation of warm or cool air.

Pressure. Pressure can be simulated via air-pocket jackets (e.g., TN Games® Tactile gaming vest⁴²) and mechanical devices (similar to force-feedback devices). Pressure can also be delivered from a remote device (Sodhi et al., 2013).

Passive haptics. Passive haptics are static surfaces that mimic the haptic and geometric properties of collocated virtual surfaces in the scenario. Such haptic feedback has been shown to increase presence in VEs (Insko, 2001; Meehan et al., 2002; Kohli et al., 2013).

Vibro-tactile. Vibro-tactile displays and transducers are devices that can deliver vibratory sensations.

Many devices are commercially available, such as those mentioned above, but, as noted earlier, a relatively large number of haptic devices are application-specific and are therefore often custom-made.

Important characteristics that should be taken into account before deciding upon a haptics solution include:

• Application: as mentioned above, haptic systems are very application-specific, thus the type of haptic feedback (force-feedback, vibration, temperature, etc.) has to be decided based upon the target application.

• Safety: usually the haptic system will come into contact with the participant’s body, thus safety and conformity to safety regulations have to be considered (electric/mechanical safety, etc.).

• Ergonomics: some haptic devices prevent participants from moving freely during the experiment, thus limiting their range of motion.

Our solution. We have concentrated on displaying vibratory sensations by introducing a vibro-tactile system that can deliver vibrations to different parts of the body depending on the needs of the specific VR application. In general, vibro-tactile actuators cannot reproduce the sensation of touching real objects; for example, a vibration felt when sliding a hand along the surface of a virtual tabletop will not feel natural. However, they have proven sufficient for the visuo-tactile stimulation that we require to elicit embodiment illusions in our experiments.

The number and positions of the vibrating actuators can be configured for each scenario, including layouts that simulate the stroking used in the RHI and other BOIs. Such a system also integrates well with the suits used in our body tracking systems. Our haptics controller can run in a fully automated mode or be controlled by the system operator. It uses off-the-shelf hardware and software technologies and has been thoroughly tested in several experimental setups. Tapping – repeatedly and lightly touching the participant to stimulate the tactile system – combined with spatiotemporally congruent visuals of a virtual object touching the virtual avatar, has become standard practice for providing congruent visuo-tactile feedback for virtual embodiment. We have also developed a hardware-accelerated approach for detecting collisions between moving avatars and simple objects (Spanlang et al., 2010); in combination with the vibro-tactile actuators, it lets us efficiently deliver a sensation of touch to the participant whenever the avatar is touched in the VE.
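Stroking with discrete vibrators can be approximated by activating a row of actuators in sequence with slightly overlapping pulses, while the virtual object is moved across the same body region in sync. A hypothetical scheduling sketch; the overlap and intensity values are assumptions, not the parameters of our controller.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Pulse:
    actuator: int      # index of the vibrator in the configured layout
    start_s: float     # onset relative to the start of the stroke
    duration_s: float
    intensity: float   # 0.0 (off) to 1.0 (full)


def stroke_schedule(actuators: List[int], stroke_duration_s: float,
                    overlap: float = 0.5, intensity: float = 0.8) -> List[Pulse]:
    """Fire a row of vibrators in order so that the vibration travels along the skin.

    Each pulse starts one step after the previous one and lasts (1 + overlap) steps,
    so neighbouring pulses overlap; the last pulse therefore ends slightly after
    stroke_duration_s.
    """
    step = stroke_duration_s / len(actuators)
    length = step * (1.0 + overlap)
    return [Pulse(a, i * step, length, intensity) for i, a in enumerate(actuators)]


# Hypothetical forearm row of four vibrators, one stroke per second
for pulse in stroke_schedule([3, 4, 5, 6], stroke_duration_s=1.0):
    print(pulse)
```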

To deliver the desired haptic stimulation to the participant, we use the Velcro® jacket of the OptiTrack motion capture system, which supports any configuration of vibrators that the scenario may require. The hardware layer of our system is based on Arduino⁴³ microcontroller boards (Arduino UNO, Arduino Mega, and other configurations depending on input/output requirements). Similar configurations can be achieved with other microcontrollers. We have also created a VibroGlove (Giannopoulos et al., 2012) with a fixed configuration of 14 vibrators (2 per finger and 4 for the palm) that can be individually triggered and intensity-controlled (Figure 5). The haptic elements that we use are primarily coin-type vibrating actuators.

Figure 5. A VibroGlove to deliver a sensation of touch to the hands. Here we show a glove with 14 vibrotactile actuators controlled by an Arduino Mega.
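On the host side, driving such hardware only requires a small command protocol for setting each vibrator's intensity. The 3-byte format below (a start byte of 255, the actuator index, and an intensity level from 0 to 254) is a hypothetical example, not the protocol of our system. Firmware on the board would parse it and drive the corresponding PWM output, e.g., with Arduino's `analogWrite`.

```python
START_BYTE = 0xFF   # hypothetical frame marker; intensity levels never use this value


def encode_command(actuator: int, intensity: float, n_actuators: int = 14) -> bytes:
    """Encode 'set actuator to intensity' for a hypothetical Arduino firmware.

    actuator: index of the vibrator (0-13 for a 14-vibrator glove)
    intensity: 0.0 (off) to 1.0 (full); values outside this range are clamped
    """
    if not 0 <= actuator < n_actuators:
        raise ValueError(f"actuator index {actuator} out of range")
    level = round(max(0.0, min(1.0, intensity)) * 254)   # 0..254, leaving 255 free
    return bytes([START_BYTE, actuator, level])


def encode_frame(levels):
    """Concatenate commands for several actuators, e.g. {3: 1.0, 4: 0.5}."""
    return b"".join(encode_command(a, v) for a, v in sorted(levels.items()))


print(encode_frame({3: 1.0, 4: 0.5}).hex())
```

The resulting bytes can be written with any serial library, for example pyserial's `serial.Serial(port, baudrate).write(data)`.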

The haptics module has been designed to be simple and flexible, so that hardware and software components can be unplugged or replaced with new functionality when necessary. In the future, as wireless sensor networks become more accessible, we plan to experiment with less intrusive hardware components that have better wireless capabilities.

Quantitative Measurement Modules

The ability to induce the illusion of ownership over virtual bodies raises the question of how to measure such illusions both qualitatively and quantitatively. Nearly all BOI studies explicitly address illusory ownership feelings through questionnaires originally adapted from Botvinick and Cohen (1998). Many studies of the RHI use proprioceptive drift – the difference between the participant’s estimation of the location of their hidden hand before and after visuo-tactile stimulation – as a more objective measure indicating a spatial shift toward the visible fake hand as an owned body part (Botvinick and Cohen, 1998; Tsakiris et al., 2005, 2010; Costantini and Haggard, 2007; Slater et al., 2008). In our virtual embodiment system, we additionally employ various quantitative physiological measurements that can be correlated with qualitative questionnaire data to determine the degree of the induced BOI. In this section, we differentiate between “bodily” physiological signals (e.g., heart rate, skin conductance, etc.) and brain signals (EEG).
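Proprioceptive drift itself is a simple difference of means. A minimal sketch, assuming several pointing estimates before and after stimulation, measured along the axis joining the real and the fake hand. The sign convention (positive means toward the fake hand) is a common choice, and the numbers in the example are made up.

```python
def proprioceptive_drift(pre_cm, post_cm, real_hand_cm, fake_hand_cm):
    """Shift of the felt hand position toward the fake hand (cm).

    pre_cm, post_cm: pointing estimates before and after stimulation, as 1D
    coordinates along the axis joining the real and the fake hand.
    Positive results mean the estimates moved toward the fake hand.
    """
    pre = sum(pre_cm) / len(pre_cm)
    post = sum(post_cm) / len(post_cm)
    toward_fake = 1.0 if fake_hand_cm > real_hand_cm else -1.0
    return toward_fake * (post - pre)


# Illustrative, made-up numbers: real hand at 0 cm, fake hand 15 cm away
print(proprioceptive_drift([0.0, 1.0, -1.0], [2.0, 3.0, 1.0],
                           real_hand_cm=0.0, fake_hand_cm=15.0))   # 2.0
```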

Physiological measurement module

Bodily responses to a threat to the fake hand or body have been investigated, with studies showing that participants tried to avoid a threat toward the fake body or limb when experiencing BOIs (Gonzalez-Franco et al., 2010; Kilteni et al., 2012b). Autonomic nervous system responses, such as the skin conductance response (SCR) (Armel and Ramachandran, 2003; Petkova and Ehrsson, 2008; Petkova et al., 2011; van der Hoort et al., 2011) and heart rate deceleration (HRD) (Slater et al., 2010a; Maselli and Slater, 2013), have also been measured under threat of harm to the virtual body. BOIs have been shown to cause a decrease in the temperature of the real counterpart (Moseley et al., 2008; Hohwy and Paton, 2010) and even to alter participants’ temperature sensitivity (Llobera et al., 2013). Similarly, changes in the electrical activity produced by muscle contraction, measured with electromyography (EMG) (Slater et al., 2008), have also been correlated with BOIs.
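Heart rate deceleration can be quantified in several ways. The following sketch assumes one simple operationalization: the mean instantaneous heart rate in a baseline window before the threat event minus the mean in a response window after it. R-peak times (in seconds) are assumed to be already detected, and the window lengths (3 s and 6 s) are illustrative defaults, not values taken from the cited studies.

```python
from statistics import mean


def instantaneous_hr(r_peaks_s):
    """Return (time_s, bpm) pairs, one per R-R interval, stamped at the later R peak."""
    return [(t1, 60.0 / (t1 - t0)) for t0, t1 in zip(r_peaks_s, r_peaks_s[1:])]


def heart_rate_deceleration(r_peaks_s, threat_s, baseline_s=3.0, response_s=6.0):
    """Baseline mean HR minus post-threat mean HR, in bpm (positive = deceleration)."""
    hr = instantaneous_hr(r_peaks_s)
    before = [bpm for t, bpm in hr if threat_s - baseline_s <= t < threat_s]
    after = [bpm for t, bpm in hr if threat_s <= t < threat_s + response_s]
    if not before or not after:
        raise ValueError("not enough beats in the baseline or response window")
    return mean(before) - mean(after)
```

Using windows anchored to the event time, rather than a fixed number of beats, keeps the measure comparable across participants with different resting heart rates.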

Conversely, physiological signals such as the electrocardiogram (ECG) or respiration can be used to drive the visual appearance of a virtual body and thereby induce BOIs. Adler et al. (2014) used visuo-respiratory conflicts and Aspell et al. (2013) used cardio-visual conflicts to investigate the use of physiological measures to induce BOIs.

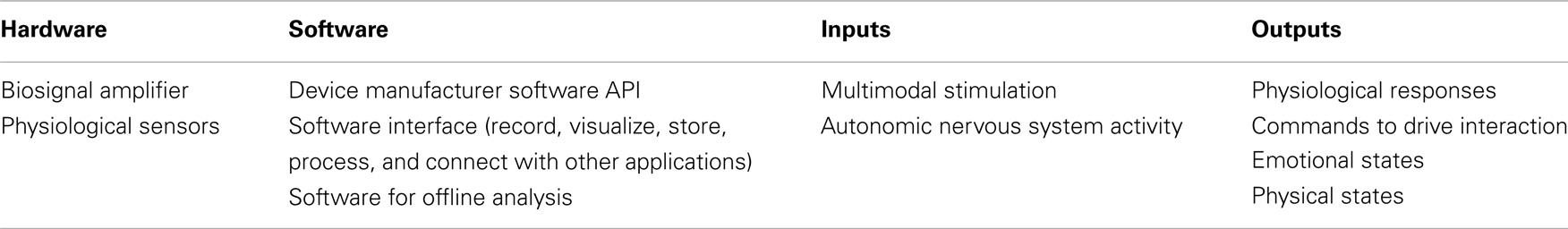

The physiological measurement module is primarily used for obtaining objective measures that may indicate BOIs. It consists of five main submodules that carry out the tasks of acquisition, visualization, real-time processing, storage, and communication with the VR application. Its architecture facilitates the use of different biosignal amplifiers, physiological measures, and the inclusion of existing and/or new algorithms for real-time processing of signals and the extraction of physiological features. Table 4 presents the main characteristics of the physiological measurement module in terms of hardware and software dependencies as well as in terms of required inputs and produced outputs.

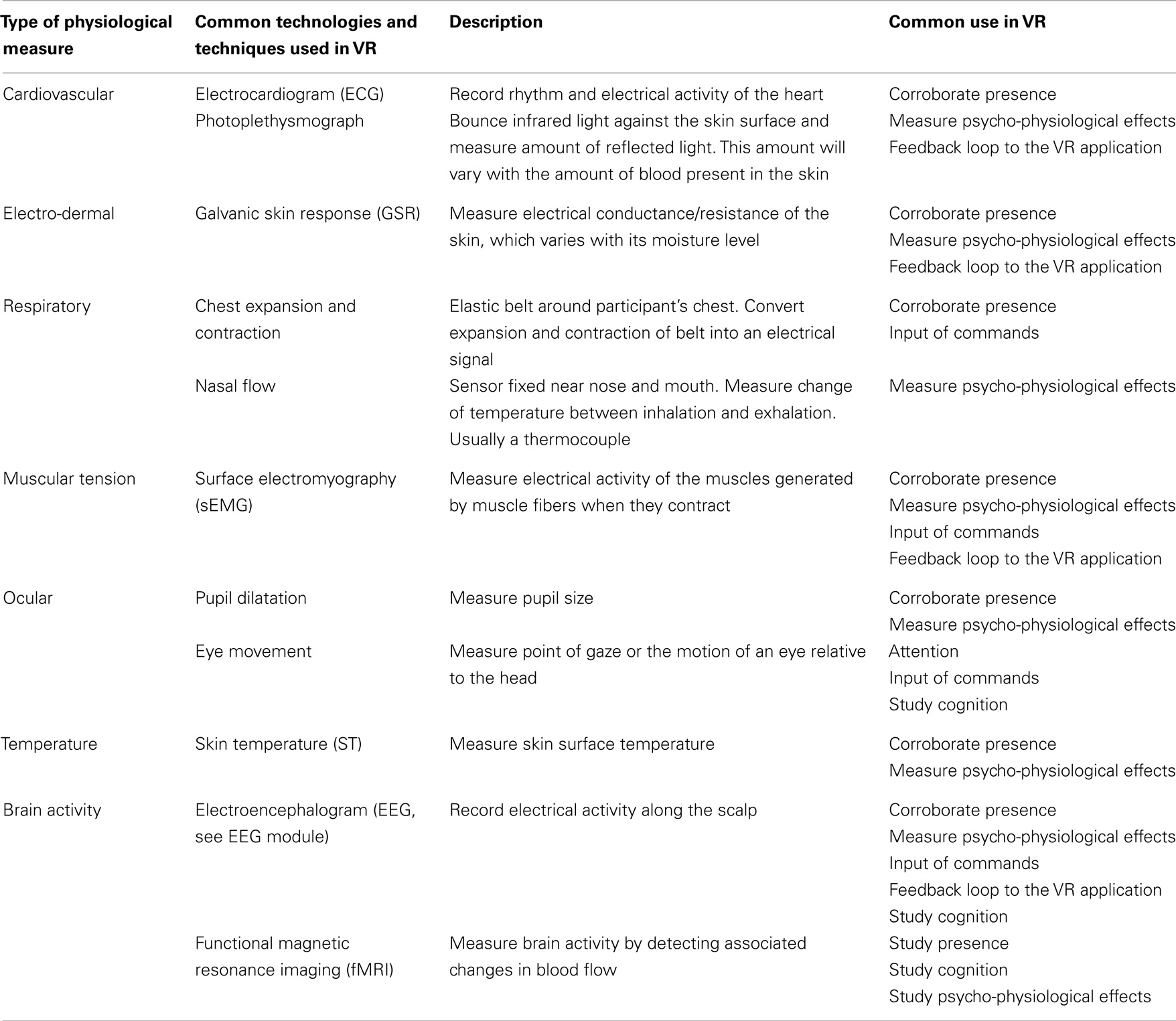

Physiological activity has been widely used in VR as an objective measure to corroborate the sense of presence (Meehan et al., 2002), investigate factors that enhance presence (Slater et al., 2009), detect emotions (Nasoz et al., 2004), and to interact with and move around the VE (Davies and Harrison, 1996; Pfurtscheller et al., 2006). Common physiological measures used include: cardiovascular, electro-dermal, respiratory, muscular tension, ocular, skin temperature, and electrical brain activity (see EEG module). Table 5 presents a summary of physiological measures, technologies, and common uses in VR.

The primary goal of this module is to record the participant’s physiological responses to the VE. We therefore introduce the concept of event markers: coded time stamps sent from the VR module to the physiological measurement module that enable us to synchronize physiological and virtual events. In addition, information from the physiological module can be used within the VR module (e.g., making the virtual avatar breathe in synchrony with the participant).
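The text states that event markers are coded time stamps exchanged between the VR and physiological modules, but not how they are encoded. A minimal sketch, assuming a hypothetical ASCII format `MARKER,<code>,<milliseconds>` carried in UDP datagrams, could look like this (the function names are also illustrative):

```python
import socket
import time


def make_marker(code, t=None):
    """Encode an event marker as ASCII 'MARKER,<code>,<unix time in ms>'."""
    t_ms = int((time.time() if t is None else t) * 1000)
    return f"MARKER,{code},{t_ms}".encode("ascii")


def parse_marker(payload):
    """Decode a marker payload into (code, t_ms); reject anything else."""
    tag, code, t_ms = payload.decode("ascii").split(",")
    if tag != "MARKER":
        raise ValueError(f"not a marker packet: {payload!r}")
    return int(code), int(t_ms)


def send_marker(sock, addr, code):
    """Send one marker datagram from the VR side to the physiological module."""
    sock.sendto(make_marker(code), addr)


# Example (VR side): a UDP socket sending marker 42 to a recorder on this machine.
# sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
# send_marker(sock, ("127.0.0.1", 5005), 42)   # port 5005 is an arbitrary assumption
```

Because the VR and recording computers may run on different clocks, it is safer for the physiological module to also log its own receive time for each marker and to use that time when aligning events with the signals.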

Biosignal amplifiers and sensors. Biosignal amplifiers and sensors record physiological signals with a wide variety of characteristics and serve various purposes, including personal use at home, medical monitoring, biofeedback, education, and research. Considerations when selecting a biosignal amplifier include the types of signal required, the number of recording channels needed, the participant population, the ease of use, portability, and mobility of the device, signal quality, sample rate per channel, digital resolution (e.g., 14, 16, or 24 bits), and, of course, price. For physiological sensors, considerations include sensitivity, range, precision, resolution, accuracy, offset, and response time, as well as the fact that some recording devices accept only sensors provided by the manufacturer.
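Digital resolution determines the smallest voltage step the amplifier can represent: for an ideal converter, the step is the input span divided by 2 raised to the number of bits. The sketch below assumes a 0.5 V input span purely for illustration; real amplifiers differ, and noise makes the effective resolution coarser than this ideal value.

```python
def lsb_volts(span_volts: float, bits: int) -> float:
    """Voltage step of one least significant bit for an ideal ADC."""
    return span_volts / 2 ** bits


# Illustrative comparison for an assumed 0.5 V input span.
for bits in (14, 16, 24):
    print(f"{bits:2d} bits -> {lsb_volts(0.5, bits) * 1e6:.4f} microvolts per step")
```

With this assumed span, 16 bits gives a step of about 7.63 µV, comparable to the amplitude of many EEG components, whereas 24 bits gives about 0.03 µV, which is why high-resolution converters are attractive for small signals.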

Examples of companies that provide multichannel physiological recording equipment commonly used in VR research include: g.tec44, Thought Technology45, Mind Media46, Biopac Systems47, and TMSI48. The Association for Applied Psychophysiology and Biofeedback (AAPB)49 provides a comparison guide that contains useful information for users when choosing adequate physiological devices.

Software. Software for interfacing with the physiological devices should provide acquisition, real-time processing, presentation, communication, and analysis of physiological signals. A wide variety of software packages offer some of these functions. Equipment manufacturers typically provide two types of solution: software packages aimed at clinical or biofeedback practitioners, and APIs/SDKs aimed at developers and programmers.

Software packages are usually quite user-friendly, with an easy-to-use graphical user interface incorporating graphs for visualizing the different signals. Advanced physiological software platforms also enable the inclusion of movies, pictures, and Flash games or animations for biofeedback purposes. They support many physiological applications and protocols for the clinician or therapist and provide different types of recording sessions and screens. However, their main drawbacks include a lack of flexibility for the inclusion of new algorithms or filters for online signal processing and limited capabilities for communication with third-party applications. Even when such communication is available, typical limitations include lack of support for TCP/UDP, data transmission at a low sample rate, a limited number of channels, one-way communication, and binary-only data transmission.

On the other hand, APIs/SDKs enable programmers to develop their own physiological software components, customize them, and extend their tools. Typically, manufacturers provide APIs/SDKs for C++, Java, and/or MATLAB. The major drawback is that this solution requires programming skills.

Open-Source software toolkits can also be used for viewing, analyzing, and simulating physiological signals. The PhysioNet research resource (Goldberger et al., 2000) provides a comprehensive list of free toolkits.

Important characteristics that should be taken into account before deciding upon a physiological measurement module include:

• Flexibility: capability to support a wide variety of biosignal amplifier devices as well as communication protocols.

• Capabilities: the module should be able to measure and extract a wide spectrum of physiological signals in real-time.

• Extensibility: experimenters should be able to add custom functionalities to the physiological module.

• Ease of integration with the VR module: network communication, programming interfaces (API, SDK), etc.

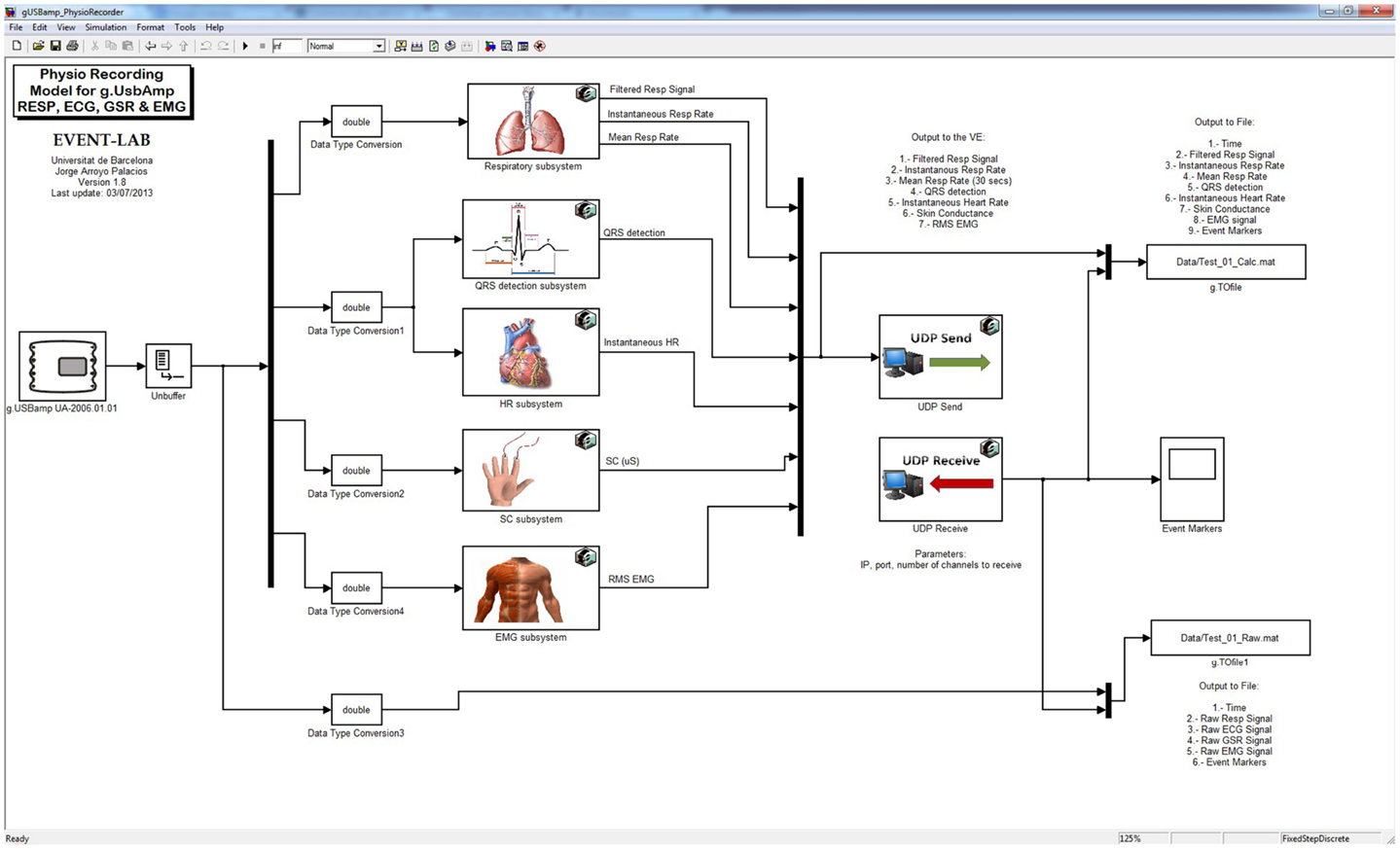

Our solution. We have adopted a MATLAB Simulink50-based software architecture that is highly flexible and supports: (i) wired and wireless biosignal amplifiers from different companies, (ii) different physiological measures and extracted features, (iii) complementary devices to record additional measures (e.g., Nintendo Wii Balance Board to record body balance measures), and (iv) communication with other applications. In addition, we can easily include existing functions from MATLAB toolboxes and proprietary Simulink blocks (e.g., g.HIsys51 from g.tec), along with our own functions.

We use different wired and wireless biosignal amplifiers depending on the needs of the experiment, such as gUSBamp52, gMOBIlab53, Nexus-454, and Enobio55. Common physiological sensors used in our experiments include ECG, surface electromyography, galvanic skin response, and respiration sensors. Although many different devices may be used, we have found that a common software interface makes integration more straightforward.

We selected the Simulink platform because it provides several customizable blocks and libraries. Also, the user can create new functions for data processing and add them as new blocks. Another important advantage of Simulink is that it is fully integrated with MATLAB, which provides several useful toolboxes for signal processing, machine learning, communication with other computers and devices with built-in support for TCP/IP, UDP, and Bluetooth serial protocols, etc. Figure 6 illustrates the implementation of a Simulink model used to record respiratory, cardiovascular, electro-dermal, and muscular measures with the gUSBamp device from g.tec.

Figure 6. A Simulink model in MATLAB. This module enables real-time recording and processing of physiological data within VEs. It can handle respiratory, cardiovascular, electro-dermal, and electromyography measurements using the gUSBamp or the gMOBIlab devices from g.tec. The gUSBamp is connected to the PC via USB, whereas the gMOBIlab uses Bluetooth. To deliver physiological data to the VE and to synchronize these data with events from the VE, we use the UDP network protocol both in MATLAB and in XVR/Unity.
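Physiological data are delivered to the VE over UDP, but the packet layout used with XVR/Unity is not described here. As an assumption for illustration, the sketch below packs one multichannel sample as a little-endian header (sequence number, timestamp, channel count) followed by 32-bit floats, a layout that a Unity script could decode with standard byte-conversion routines. The layout and function names are hypothetical.

```python
import struct

# Assumed header: uint32 sequence number, float64 timestamp (s), uint16 channel count.
HEADER = struct.Struct("<IdH")


def pack_samples(seq, timestamp_s, values):
    """Serialize one multichannel sample into a UDP payload."""
    return HEADER.pack(seq, timestamp_s, len(values)) + struct.pack(f"<{len(values)}f", *values)


def unpack_samples(payload):
    """Inverse of pack_samples; raises ValueError on a malformed payload."""
    if len(payload) < HEADER.size:
        raise ValueError("payload shorter than header")
    seq, timestamp_s, n = HEADER.unpack_from(payload, 0)
    if len(payload) != HEADER.size + 4 * n:
        raise ValueError("payload length does not match channel count")
    values = struct.unpack_from(f"<{n}f", payload, HEADER.size)
    return seq, timestamp_s, list(values)
```

Because UDP does not guarantee delivery or ordering, the sequence number lets the VE detect dropped or out-of-order packets; sending values as 32-bit floats halves the payload compared with doubles at a precision that is ample for physiological signals.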

For offline analyses of the physiological signals we typically use either gBSanalyze56 from g.tec or custom MATLAB scripts. The advantages of gBSanalyze are the wide range of functions available to analyze physiological data and the possibility of including your own functions.
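Once event markers are stored alongside the signals, offline analysis typically extracts a response around each marker. As an illustration (not the gBSanalyze procedure), here is a minimal SCR scoring sketch: the largest rise in skin conductance within 1 to 4 s after an event, relative to the level at event onset. The window and function name are assumptions; published studies use a variety of scoring criteria.

```python
def scr_amplitude(signal_us, fs_hz, onset_s, window_s=(1.0, 4.0)):
    """Largest rise in skin conductance (microsiemens) above its level at event onset,
    searched between window_s[0] and window_s[1] seconds after the event."""
    i0 = round(onset_s * fs_hz)
    lo = i0 + round(window_s[0] * fs_hz)
    hi = i0 + round(window_s[1] * fs_hz)
    if i0 < 0 or hi > len(signal_us):
        raise ValueError("response window falls outside the recording")
    return max(0.0, max(signal_us[lo:hi]) - signal_us[i0])


# Example with a hypothetical 10 Hz recording and one threat marker at 2.0 s.
recording = [2.0] * 100
recording[35] = 2.6          # a response peak 1.5 s after the marker
amplitudes = [scr_amplitude(recording, 10, t) for t in (2.0,)]
```

Clamping at zero treats a falling signal as "no response" rather than a negative response, which is one common convention when comparing conditions.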

EEG module

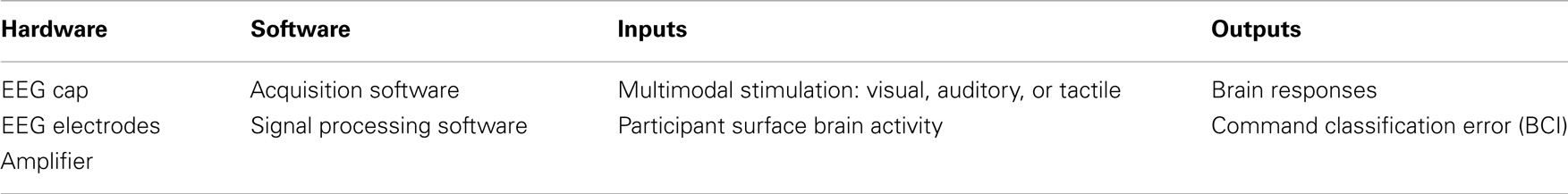

The role of the electroencephalography (EEG) module is twofold: to measure brain responses to the VE and to enable interaction with the VE through brain activity. The EEG module provides an objective way to measure real responses to virtual stimuli with high temporal resolution, making it possible to study brain dynamics using event-related techniques. At the same time, EEG can be used as a brain-computer interface (BCI) for enhanced real-time interaction with and control over the VE, including virtual avatars. A survey of BCI use is given in Wolpaw et al. (2002). Table 6 introduces the main characteristics of the EEG module in terms of hardware and software dependencies as well as in terms of required inputs and produced outputs.

Methods for measuring reactions in VR, including BOIs, have traditionally relied on questionnaires and behavioral responses. However, those techniques tell little about the neural processes involved in the response. Therefore, conducting experiments using EEG recording can help us investigate the unconscious natural reactions that take place in the brain during such experiences (González-Franco et al., 2014).

EEG can also be used as a BCI, providing a means of interacting with VEs that does not require physical motion from the user. EEG can therefore be used to control avatars in situations where body tracking is not possible, or to add behaviors not possible with body tracking (Perez-Marcos et al., 2010). The most common BCI paradigms are P300, motor-imagery, and steady-state visually evoked potentials (SSVEP). The P300 is an event-related potential (ERP) elicited roughly 300 ms after stimulus presentation, typically measured using the oddball paradigm (Squires et al., 1975). Motor-imagery relies on imagining the movement of a limb, which produces brain activation similar to that of performing the real action (Pfurtscheller and Neuper, 2001). SSVEPs are produced in response to visual stimulation at specific frequencies and can be used to detect when the participant looks at specific areas or objects (Cheng et al., 2002).
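The P300 is usually revealed by averaging many epochs, since single-trial EEG is noisy. A minimal sketch, assuming single-electrode epochs (one list of microvolt values per trial) that start at stimulus onset, compares the averaged responses to rare targets and frequent standards in an oddball task. The 250 to 500 ms window and the function names are illustrative choices.

```python
from statistics import mean


def average_erp(epochs):
    """Sample-by-sample mean of equally long epochs (one list per trial)."""
    if not epochs or len({len(e) for e in epochs}) != 1:
        raise ValueError("need at least one epoch, all of equal length")
    return [mean(samples) for samples in zip(*epochs)]


def p300_effect(target_epochs, standard_epochs, fs_hz, window_s=(0.25, 0.5)):
    """Mean of the target-minus-standard difference wave within window_s
    (seconds after stimulus onset, which is assumed to be sample 0)."""
    target_avg = average_erp(target_epochs)
    standard_avg = average_erp(standard_epochs)
    if len(target_avg) != len(standard_avg):
        raise ValueError("target and standard epochs must have equal length")
    diff = [t - s for t, s in zip(target_avg, standard_avg)]
    lo, hi = round(window_s[0] * fs_hz), round(window_s[1] * fs_hz)
    return mean(diff[lo:hi])
```

Averaging suppresses activity that is not time-locked to the stimulus, so the remaining target-minus-standard difference reflects the oddball response.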

These BCI paradigms can be classified according to the mental activity that leads to specific brain patterns. Active strategies (based on voluntary mental actions, e.g., motor-imagery) facilitate asynchronous BCI, in which the user determines the point in time at which to trigger an action. In contrast, reactive strategies (e.g., P300 and SSVEP) are based on brain responses to external stimuli rather than on activity generated by the user. Reactive strategies usually offer a wider range of commands. Table 7 draws a comparison between the different EEG technologies.
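Reactive paradigms such as SSVEP can be decoded with simple spectral analysis. As a simplified sketch (practical SSVEP BCIs often add harmonics, multiple channels, or canonical correlation analysis), the code below uses the Goertzel algorithm to estimate signal power at each candidate flicker frequency and selects the strongest. The flicker frequencies, the synthetic test signal, and the function names are hypothetical.

```python
import math


def goertzel_power(samples, fs_hz, freq_hz):
    """Squared magnitude of the signal's Fourier component at freq_hz (Goertzel algorithm)."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq_hz / fs_hz)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def detect_ssvep(samples, fs_hz, candidate_freqs_hz):
    """Return the candidate stimulation frequency with the highest power."""
    return max(candidate_freqs_hz, key=lambda f: goertzel_power(samples, fs_hz, f))


# Synthetic 2 s of "EEG" at 256 Hz: a 12 Hz response plus a weaker 10 Hz component.
fs = 256
eeg = [math.sin(2 * math.pi * 12 * n / fs) + 0.3 * math.sin(2 * math.pi * 10 * n / fs)
       for n in range(2 * fs)]
chosen = detect_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0])
```

Goertzel evaluates only the few frequencies of interest, which is cheaper than a full FFT when each on-screen target flickers at one known rate.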

Before detailing our solution, we list some important characteristics of an EEG module:

• Number of channels supported: the typical range is between 16 and 64;

• Real-time capabilities: sampling rate should be between 128 and 512 Hz;

• Communication support: the EEG module should be able to support different types of communication protocols;

• Ease of integration with the VR module: simple I/O triggering through parallel port or ad hoc Ethernet connection with less than 5 ms delay.
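To relate the rate and delay requirements listed above: with sampling rate $f_s$, the sampling period is $T_s = 1/f_s$, and a trigger delay $d$ corresponds to $d\,f_s$ samples. A worked example for the two ends of the range:

$$
T_s(128\ \text{Hz}) = 7.8125\ \text{ms}, \qquad T_s(512\ \text{Hz}) \approx 1.95\ \text{ms}
$$

$$
5\ \text{ms} \times 128\ \text{Hz} = 0.64\ \text{samples}, \qquad 5\ \text{ms} \times 512\ \text{Hz} = 2.56\ \text{samples}
$$

At the highest listed rate, a 5 ms delay therefore shifts event markers by between two and three samples. A constant delay of this size can be compensated offline, whereas variable delays (jitter) blur averaged ERPs.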

Our solution. We have typically used the g.tec g.USBAmp for recording up to 32 EEG channels. When a wireless solution was required, we used the g.tec g.Mobilab, a Bluetooth-based amplifier with up to eight channels. Other hardware solutions include those from BrainProducts57 and Neuroscan58. In particular, BrainProducts amplifiers offer efficient synchronization between the generated event triggers and the data acquisition during recording, and they can easily be combined with the Open-Source software BCILab (Delorme et al., 2011).

We use various software packages, mainly from g.tec and EEGLab (Delorme et al., 2011). For real-time (in particular BCI) applications, the g.tec software packages are modular and flexible, and thus appropriate for research purposes. The High-Speed Online Processing toolbox running under Simulink/MATLAB is used for real-time parameter extraction and saving. The g.UDPInterface is used for data exchange between MATLAB and the VR module via UDP. For offline analysis of the acquired data, we use either g.BSAnalyze from g.tec or the Open-Source EEGLab (Delorme et al., 2011). In particular, we recommend EEGLab for ERP signal analysis because of its visualization features.

Results

This section discusses some results that were obtained based on different combinations of the virtual embodiment modules presented previously. While all of them rely on the core virtual embodiment system, most of them also use combinations of the other modules. Each result is briefly presented and preceded by a short description of the experiment that led to it.

Core Virtual Embodiment System