Abstract

We present a new dataset of English word recognition times for a total of 62 thousand words, called the English Crowdsourcing Project. The data were collected via an internet vocabulary test in which more than one million people participated. The present dataset is limited to native English speakers. Participants were asked to indicate which words they knew. Their response times were registered, although at no point were the participants asked to respond as quickly as possible. Still, the response times correlate around .75 with the response times of the English Lexicon Project for the shared words. Also, the results of virtual experiments indicate that the new response times are a valid addition to the English Lexicon Project. This not only means that we have useful response times for some 35 thousand extra words, but we now also have data on differences in response latencies as a function of education and age.

Similar content being viewed by others

Research on word recognition has seen an interesting development in the last two decades. Whereas previously, word recognition was investigated in small-scale studies involving some 100 words divided over a factorial design with a few conditions and evaluated with analysis of variance, the new development consisted of collecting word processing times for thousands of words and analyzing them with regression analysis whenever a variable of interest is better represented continuously rather than categorically. Such studies are often called megastudies. Table 1 gives an overview of the megastudies available.

Balota, Yap, Hutchison, and Cortese (2013) and Keuleers and Balota (2015) summarized the advantages of the megastudy approach. First, they listed the disadvantages of the factorial approach. These are:

The difficulty to equate the stimuli in the conditions.

The fact that many words with a shared feature are presented in a short experiment, which may give rise to context effects.

The fact that continuous variables are categorized (e.g., divided into high vs. low).

The fact that the study is limited to stimuli at the extremes of a word characteristic.

The danger of experimenter bias when selecting words for the various conditions.

The disadvantages of the factorial design are less of an issue in the megastudy approach, because the various control variables can be entered in the regression analysis, participants see a random selection of words, continuous variables are not categorized, and there is no prior stimulus selection by the experimenter (for the last aspect, see also Liben-Nowell, Strand, Sharp, Wexler, & Woods, 2019). Additional advantages of the megastudy approach are:

More power due to the large number of stimuli.

The data can be used multiple times to address new questions.

The relative importance of existing word characteristics can be assessed.

The impact of a variable can be studied across the entire range.

The strength of a new, theoretically important variable can be evaluated; the data can also be used to search for new variables.

The quality of newly presented computational models can be evaluated.

The quality of competing metrics (e.g., word frequency norms) can be compared.

If the megastudy includes many participants in addition to many stimuli, individual differences can be studied.

The new possibilities can be illustrated with the English Lexicon Project (ELP; Balota et al., 2007), consisting of lexical decision and naming times for over 40 thousand English words. In several studies, the dataset has been used to examine the relative importance of word features, such as frequency, length, similarity to other words, part of speech, age of acquisition, valence, arousal, concreteness, and letter bigrams (e.g., Brysbaert & Cortese, 2011; Kuperman, Estes, Brysbaert, & Warriner, 2014; Muncer, Knight, & Adams, 2014; New, Ferrand, Pallier, & Brysbaert, 2006; Schmalz & Mulatti, 2017; Yap & Balota, 2009). It has also been used to test new variables, such as OLD20 (Yarkoni, Balota, & Yap, 2008), the consonant–vowel structure of words (Chetail, Balota, Treiman, & Content, 2015), and word prevalence (Brysbaert, Mandera, McCormick, & Keuleers, 2019). It has been valuable to test mathematical models of word recognition and individual differences (Yap, Balota, Sibley, & Ratcliff, 2012), to understand how compound words are processed (Schmidtke, Kuperman, Gagné, & Spalding, 2016), to study the influence of semantic variables on word recognition (Connell & Lynott, 2014), to find the best frequency measure for English words (Brysbaert & New, 2009; Gimenes & New, 2016; Herdağdelen & Marelli, 2017), to test new computational models (Norris & Kinoshita, 2012), and to predict word learning in speakers of English as a second language (Berger, Crossley, & Kyle, 2019).

To ensure the usefulness of the ELP, it is important to check for converging evidence from other, independent sources. This motivated Keuleers, Lacey, Rastle, and Brysbaert (2012) to compile the British Lexicon Project (BLP), consisting of lexical decisions to 28,000 monosyllabic and disyllabic words. Other interesting additions were the collection of auditory lexical decision times (Goh, Yap, Lau, Ng, & Tan, 2016; Tucker et al., 2019) and semantic decision times (Pexman, Heard, Lloyd, & Yap, 2017).

In the present article, we discuss the development of a new large English database of word-processing times (there are large databases for other languages as well, as can be seen in Table 1). The present database is the result of a crowdsourcing project (Keuleers, Stevens, Mandera, & Brysbaert, 2015) that was not primarily set up to analyze response times. Because previous research showed that the collection of reaction times in a web browser can be accurate enough to be a useful method for behavioral research (Crump, McDonnell, & Gureckis, 2013; Reimers & Stewart, 2015), we will examine to what extent the response times from such a paradigm inform us about the ease of word recognition.

Method

Keuleers and Balota (2015) defined a crowdsourcing study as a study in which data are collected outside of the traditional, controlled laboratory settings. The English Crowdsourcing Project (ECP), which is presented here, is part of a series of internet-based vocabulary tests developed at Ghent University, in which participants have to indicate which of the presented stimuli they know as words. The vocabulary tests were started in 2013 in Dutch (Keuleers et al., 2015). The English test started in 2014 (Brysbaert, Stevens, Mandera, & Keuleers, 2016a) and is still running (available at http://vocabulary.ugent.be/). Its main goal was to get an idea of how well words are known in the population, a variable we call word prevalence (Brysbaert et al., 2019; Brysbaert, Stevens, Mandera, & Keuleers, 2016b; Keuleers et al., 2015).

The exact instructions of the ECP vocabulary test are:

In this test you get 100 letter sequences, some of which are existing English words (American spelling) and some of which are made-up nonwords. Indicate for each letter sequence whether it is a word you know or not. The test takes about 4 min and you can repeat it as often as you want (you will get new letter sequences each time). If you take part, you consent to your data being used for scientific analysis of word knowledge. Do not say yes to words you do not know, because yes-responses to nonwords are penalized heavily!

Per test, participants received 70 words and 30 nonwords. We expected average participants to know about 70% of the presented words, so we corrected for response bias by presenting around one third of the stimuli as nonwords. To discourage guessing, participants were warned that they would be penalized if they responded “word” to nonword stimuli. At the end of the test, participants received an estimate of their vocabulary size, which was a big motivation for them to take part and to recommend the test to others. The presented estimate was computed by subtracting the percentage of word responses to nonwords (false alarms) from the percentage of word responses to words (hits).

The yes/no format with guessing correction is an established form of vocabulary testing in the language proficiency literature (Ferré & Brysbaert, 2017; Harrington & Carey, 2009; Lemhöfer & Broersma, 2012; Meara & Buxton, 1987). However, in the ECP the presented words and nonwords were not fixed like in a regular vocabulary test.

The words were selected from a set of 61,851 English wordsFootnote 1 compiled over the years. These words included the lemmas and high-frequency irregular word forms from the SUBTLEX databases, supplemented with stimuli from dictionaries and spelling checkers. Figure 1 shows the distributions of word length, word frequency, and word prevalence in the stimulus list. Word length varied from 1 to 22 letters. Word frequency is expressed as Zipf scores (Brysbaert, Mandera, & Keuleers, 2018), going from 1.29 (not present in the corpus) to 7.62 (the word you). Particularly interesting is the large number of words not observed in the SUBTLEX-US frequency list (or in most other frequency lists) but present in dictionaries and spelling checkers. Many of these are well known, even though they are rarely used in spoken or written language (such as mindfully, rollerblade, submissiveness, toolbar, jumpstart, freefall, touchable, . . . ; see Brysbaert et al., 2019, for more information). Word prevalence ranges from less than – 2 (a word unknown to virtually everybody) to over + 2.33 (a word known by more than 99% of the population).

The nonwords were selected from a list of 329,851 pseudowords generated with Wuggy (Keuleers & Brysbaert, 2010). They were constructed to be as similar as possible to the words in terms of length and letter transition probabilities within and across syllables. Because the stimuli presented in the test were not fixed, participants could take the test more than once. Indeed, a few participants took several hundreds of tests over the years.

Specific to the ECP stimulus set is that the vast majority of words consist of uninflected lemma forms. This is different from the BLP, in which about half of the stimuli were inflected forms (the only inclusion criterion was monosyllabic or disyllabic words), and the ELP, which consisted of all words observed in a corpus, including inflected forms and proper nouns (names of people and places).

Although the ECP task involves a yes/no decision, it is important to consider the differences from a traditional lexical decision task. First, at no point were participants told time was an issue. Second, participants were explicitly instructed to only indicate which words they knew, and not to guess if they were unfamiliar with a sequence of letters. Participants did the test outside of a university setting and did it because they wanted to know their English proficiency level. Still, Harrington and Carey (2009) noticed that under these conditions the response times (RTs) can be informative. Because averaging over large numbers reduces the noise in the individual observations, the worth of RTs is expected to increase with the number of participants taking part.

Before the start of the test, participants were asked a few basic questions. These were (1) what their native language was, (2) where they grew up, (3) what the highest degree was they obtained or were working towards, (4) their gender and age, (5) how many languages they spoke in addition to English and their mother tongue, and (6) how good their knowledge of English was. Participants were not required to provide this information before they could take part, but the vast majority did.

Results and discussion

The data used in the present article are based on all the tests taken between January 2014 and September 2018. During that period we collected more than 142 million answers from 1.42 million experimental sessions.

For the analyses of the present article, we used the following data-pruning pipeline (run entirely before looking at the data; nothing was changed as a result of the analyses).Footnote 2

- 1)

We only took into account the word data. This reduced the dataset from 142 million to 99.5 million.

- 2)

We only used the first three sessions from each IP address, to make sure that no individual had an undue influence (some participants did hundreds of sessions). This reduced the dataset to 93.6 million observations.

- 3)

We deleted the first nine trials of each session, which were considered training trials, leaving us with 84.3 million observations.

- 4)

RTs longer than 8,000 ms were deleted, so that no dictionary consultation could take place. This reduced the dataset to 83.5 million observations.

- 5)

Outliers were filtered out on the basis of an adjusted boxplot method for positively skewed distributions (Hubert & Vandervieren, 2008) calculated separately for the words in each individual session, leaving 79.0 million observations.

- 6)

Sessions with more yes-responses to nonwords than to words were omitted (often people pressing the wrong buttons), further reducing the dataset to 78.7 million data points.

- 7)

Finally, only data from users with English as native language who answered the person-related questions were retained. This reduced the final dataset to 41.2 million observations coming from almost 700 thousand sessions.

For 47% of the sessions, responses were collected from a device with a touchscreen; in the other sessions, responses were given on a keyboard. In the touch interface, responses were made using virtual YES and NO buttons; in the keyboard interface, the F key was used for the “no” response, and the J key for the “yes” response.Footnote 3

About 60% of the participants grew up in the United States, 22% in the United Kingdom, and the remaining 18% in other countries. All words had American spellings (e.g., labor, center, analyze).Footnote 4

Per word, there were on average 666 observations in the resulting subset of the data, going from a minimum of 190 to a maximum of 7,895. The reasons for these deviations are twofold. First, we received feedback from the users that our initial list contained too many nonexisting adverbs (lucklessly, felinely) and non-existing nouns ending on -ness (gingerliness, gelatinousness). These were pruned, together with some other letter sequences that created confusion (such as compound words written as a single word—clairsentience, taylormade—and the letters of the alphabet). At that time we also entered new words we had come across since the start of the project, which explains why the minimum number of responses is only 190. The high maximum number of responses was due to two occasions on which the randomization algorithm blocked. As a result, the same sequence was presented repeatedly, until we were alerted to the problem. Because of these infelicities, cautious users may want to exclude entries with less than 316 observations (N = 2,544) or more than 1,000 observations (N = 140), although we do not think these RTs are problematic and we did not exclude them from the analyses presented here.

RTs were calculated on correct trials only. RTs were defined as the time interval between the presentation of the stimulus and the response of the participant. Overall accuracy was .78. Mean RT was 1,297 ms (SD over stimuli is 357). The mean standard deviation in RTs per stimulus across participants was 784 ms (SD over stimuli is 264). Both values are considerably higher than in laboratory based megastudies. For comparison: in the lexical decision part of ELP the mean RT for the words was 784 ms (SD = 135), and the mean standard deviation of the LDT latencies was 278 ms (SD = 92; Balota et al., 2007).

Correlations with data from other megastudies

A first way to measure the merit of the RTs in ECP is to correlate them with the RTs from other megastudies. The prime candidate, of course, is ELP, with its lexical decision RTs and naming latencies. Next is the BLP, also providing lexical decision times. For both databases, we used standardized RTs (zRTs), because they correlate more with word characteristics. There was no need to work with standardized RTs for the ECP, since the correlation between raw RTs and zRTs was r = .992. The reasons for the high correlation are the large number of observations per word (several hundred, as compared to the 30–40 observations per word in ELP) and the fact that each participant added only a tiny fraction of the data. Raw RTs are easier to understand, because they are closer to human intuitions and they retain individual differences in RTs (but see below for some analyses with zRTs).

We also excluded words that had an accuracy of less than .85 in ECP, since the RTs of these words are less trustworthy.Footnote 5 This left us with a total of 12,001 words for which we had RTs in all databases. Because of the design of the BLP, the observations are limited to monosyllabic and disyllabic words (the words most often used in experimental research). Table 2 gives the correlations between the databases. As can be seen, for this particular dataset, ECP correlates almost as much with ELP lexical decision times (ELPLDT) as the BLP correlates with the same database. This is good news for the value of ECP.

A second way to examine the usefulness of the ECP RTs is to see how well they correlate with the RTs from the other studies mentioned in Table 1 and, more importantly, how the correlations compare to those with ELPLDT and BLP. Table 3 lists the findings for some classic datasets.

As can be seen in Table 3, the ECP RTs correlated .79 with the standardized ELP lexical decision times, and .73 with the BLP zRTs. These correlations can be considered as the bottom level of reliability for the dataset (based on convergent validity), indicating that some 75%–80% of the variance in ECP times is systematic variance that can be explained by stimulus characteristics. As for the correlations with the other datasets, ECP seems to be slightly worse than ELP (in particular for short words) and on par with BLP.

Variance accounted for by word characteristics

A third way to gauge the quality of the ECP dataset is to see how strongly the RTs are influenced by word characteristics. In a recent article, Brysbaert et al. (2019) evaluated the contributions of seven variables on ELP zRTs.Footnote 6 These were:

Word frequency (SUBTLEX-US; Brysbaert & New, 2009)

Word length (in letters)

Word length (in syllables)

Number of morphemes (from Balota et al., 2007)

Orthographic distance to other words (OLD from Balota et al., 2007)

Phonological distance to other words (PLD from Balota et al., 2007)

Age of acquisition (Kuperman, Stadthagen-Gonzalez, & Brysbaert, 2012)

Concreteness (Brysbaert, Warriner, & Kuperman, 2014)

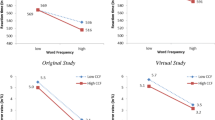

Table 4 compares the regression analysis for the words in common between ELP and ECP (N = 18,305; the words dropped from the analyses in Table 3 were words for which we did not have information on all variables and words not recognized by 75% of the ELP participants, the criterion used by Brysbaert et al., 2019). For ease of comparison, the regression weights are expressed as beta coefficients, meaning that the dependent and independent variables were standardized. Figures 2 and 3 give a graphical display of the effects.

Effects of the variables on the standardized ELP lexical decision times. The first row shows the effects of word frequency and length in letters; the second shows those of number of syllables and number of morphemes; the third shows those of orthographic and phonological similarity to other words; and the last row shows the effects of age of acquisition and concreteness

Effects of the variables on the ECP word recognition times. The first row shows the effects of word frequency and length in letters; the second shows those of number of syllables and number of morphemes; the third shows those of orthographic and phonological similarity to other words; and the last row shows the effects of age of acquisition and concreteness

As can be seen in Table 4 and Figs. 2 and 3, the effects of the word variables were quite comparable in the lexical decision parts of ELP and ECP. High-frequency words were responded to faster than low-frequency words, except for the very-high-frequency words, which are mostly function words (auxiliaries, conjunctions, determiners, particles, prepositions, or pronouns). Function words do not seem to be expected in lexical decision experiments or vocabulary tests, possibly because they are rarely seen in isolation, or because of list context effects, since the vast majority of stimuli presented in lexical decision tasks are content words. Indeed, the processing cost for these words is not seen in eye movement studies (Dirix, Brysbaert, & Duyck, 2018).

Words of six to eight letters were responded to faster than longer and shorter words; the effect was very much the same in ECP and ELP. Words with extra syllables were responded to more slowly and morphologically complex words were responded to more rapidly than expected on the basis of the other variables. These effects were stronger in ELP than in ECP. Also the similarity to other words tended to have a stronger effect in ELP than in ECP. Here we see the only contradiction between ELP and ECP: Whereas orthographic distance to other words hindered processing in ELP, it facilitated processing in ECP. Finally, the effects of age of acquisition (AoA) and concreteness were larger in ECP than in ELP.

All in all, the variables related to the activation of representations in the mental lexicon (frequency, AoA, concreteness) were stronger in ECP than in ELP. In contrast, the variables related to the similarity with other words (morphology, orthographic, and phonological similarity) tended to weigh more heavily in the speeded responses of ELP than in the unspeeded responses of ECP. Interestingly, words were responded to more slowly in ECP when they were orthographically similar to other words, whereas the reverse effect was observed in ELP. The ECP finding is in line with the hypothesis that it is more difficult to recognize a word when it resembles many other words. The ELP finding is in line with the proposal that speeded responses in a lexical decision task are not always based on individual word recognition, but can be based on the total degree of orthographic activation caused by the letter string (Grainger & Jacobs, 1996; Pollatsek, Perea, & Binder, 1999).

The regression accounted for 68% of the variance in ELP zRTs and 60% of the variance in ECP RTs. The correlation between ELP and ECP was .79 for the dataset. This is the same as for all words in common (Table 3), and it means that we are still missing some 11%–19% of the systematic variance in the datasets.

Virtual experiments

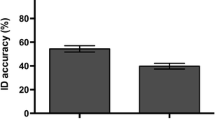

A final way to probe the value of ECP is to see whether we can replicate some classic studies with the dataset. This is done by extracting the RTs from ECP for the stimuli used in the original experiments and running analyses over items. Keuleers et al. (2012) ran a number of such virtual experiments with BLP. The first question they addressed was whether the word frequency effect could be replicated. Given that ECP has a stronger frequency effect than ELP, we would expect this to be the case. Table 5 shows the outcome. To ease the comparison, the ELP and BLP data are given as average RTs and not as zRTs.

The next variable Keuleers et al. (2012) investigated was AoA. Given that the AoA effect was stronger in ECP than in ELP, we again expected to replicate the findings. Table 6 shows the results. We indeed were able to replicate the published patterns. In particular for Gerhand and Barry (1999) the virtual experiment was closer to the original experiment than ELP and BLP, partly because there were several missing observations for the hardest condition in ELP and BLP.

Another topic Keuleers et al. (2012) addressed was orthographic neighborhood size. The first computational models suggested that words with many neighbors should take longer to process, because there is more competition between activated word forms. A series of lexical decision experiments pointed to facilitation, however, which Grainger and Jacobs (1996) explained by assuming that lexical decision responses can be based on the total activation in the mental lexicon. Words with many neighbors initially create more activation in the lexicon than words with few neighbors and this would lead to a “word” response before the target word is fully recognized.

Given that the OLD effect in ECP was opposite to the one observed in ELP, it is interesting to see what virtual experiments give for this variable. Table 7 shows the results for some classic studies. Remember that these all involved monosyllabic words, a very small subset of the words in ECP. Although the results of the virtual experiments are largely in line with those of the original studies (including those of ECP), Table 7 is primarily a testimony to the weaknesses of the factorial design, as listed in the introduction. Most studies had too few stimuli to find anything significant in an analysis over stimuli, meaning that the differences could have been due to one or two stimuli in one or the other condition. Overall, however, it looks like the effects of neighborhood size are facilitatory in lexical decision (in particular, the number of body neighbors), and that inhibitory effects are largely due to the presence of a neighbor with a higher frequency (see also Chen & Mirman, 2012). In addition, neighbors are not limited to words of the same length, but include words with one letter omitted or added (Davis & Taft, 2005), as captured by the OLD and PLD measures. More importantly for the present discussion, the ECP findings are well in line with those of the other data for the monosyllabic words.

In a series of articles, Yates and colleagues argued that, in particular, phonological neighbors speed up lexical decisions (Yates, 2005, 2009; Yates, Locker, & Simpson, 2004). Table 8 looks at how well these findings replicate in ECP, ELP, and BLP. The basic finding of Yates et al. was replicated successfully with the stimuli selected by the authors, but the difference between two and three phonological neighbors (Yates, 2009) was less consistent. This agrees with Davis’s (2010) argument that the main neighborhood size effect is between no neighbors and one neighbor (with higher frequency).

Another effect worth looking at is the influence of word ambiguity. Rodd, Gaskell, and Marslen-Wilson (2002) argued that ambiguity has two opposite effects. Words with unrelated meanings (e.g., can, second) have longer lexical decision times than unambiguous control controls, whereas words with related senses (uniform, burn) are responded to more rapidly than unambiguous control words. Table 9 shows that the facilitatory effect of multiple senses tends to be stronger than the inhibitory effect of multiple unrelated meanings, and that the effects seem to be clearer in ELP than in ECP, at least for the stimuli selected by Rodd et al.

A final finding in lexical decision research we will look at is the size effect. Sereno, O’Donnell, and Sereno (2009) reported that participants respond faster to words representing big things (bed, truck, buffalo) than to matched words representing small things (cup, thumb, apricot). The authors related this finding to the importance of embodied cognition, a view according to which cognitive processing involves internal simulations of perceptual and motor processes (Barsalou, 2008; Fischer & Zwaan, 2008). Kang, Yap, Tse, and Kurby (2011), however, were unable to replicate the finding and, in addition, reported that the effect was absent in ELP. Table 10 gives the outcome of a virtual experiment in ECP, in addition to ELP. BLP could not be used, because nearly half of the stimulus materials were longer than two syllables. As can be seen in Table 10, the size effect was not replicated in ECP, either.

Education differences

Up to now we have discussed findings that ECP has in common with ELP and BLP, and seen that for these words ECP is a valid addition to the existing megastudies. However, the merit of ECP goes further. For a start, ECP offers data for 35 thousand words not covered by ELP, and for 50 thousand words not present in BLP. This substantially increases the resources available to researchers.

In addition, ECP includes more participants than the typical undergraduate students. Some participants had only finished high school, others had achieved a bachelor degree (often outside university), a master degree (at university), or a PhD degree. On average, we had 170 observations per word for participants who finished high school, 296 for participants with a bachelor degree, 125 for participants with a master degree, and 46 for participants with a PhD degree. Because of the small numbers in the last group, we limit the analysis to the first three groups.

Keuleers et al. (2015) and Brysbaert et al. (2016a) already discussed the number of words known as a function of education level. Participants with more education know more words than participants with less education. Interestingly, the differences were modest when the participants’ age was taken into account and mainly originated during the study years, arguably because the participants then were acquiring the academic vocabulary related to their studies and word use in higher education (Coxhead, 2000).

To compare the three education groups, we report the outcomes of the regression analysis with the data discussed in Table 4 (N = 18,305). Two outcomes are given: first the analysis with the unchanged regression weights, and then the analysis with the beta coefficients. The former tells us how the RTs differ between groups, the latter how the relative importance of the variables varies. Variables were centered, so that the intercept gives us the RT of the “middle” word. Interestingly, the ELP zRTs correlate highest with the participants who finished high school (r = .79), followed by those who had bachelors degrees (r = .77), and lowest with the participants who had a masters degree (r = .71). This is in line with the fact that most ELP participants were undergraduate students. On the other hand, the lower correlation with the masters degree group is probably also to some extent due to the lower number of observations for this group (resulting in a lower reliability of the ECP RTs).

Table 11 shows the outcomes of the analyses. Participants with less education responded more slowly, as can be seen in the intercepts, and tended to show stronger effects of frequency, AoA, and number of syllables. Participants with masters degrees seem to be more willing to respond on the basis of total orthographic activation, given that the effect of OLD is stronger for them. Overall, however, the differences are small and do not seem to offset the smaller number of observations per word. In particular, R2 for the participants with a masters degree has dropped considerably (R2 = .48).

Figure 4 shows how the predicted RTs differ for the three education groups as a function of word frequency. This illustrates that the effect of education is particularly strong for low-frequency words.

Predicted response times for the three education groups as a function of word frequency. The regressions included all variables mentioned in Table 11

Age differences

Another variable we can look at is the age group of the participants (Wulff et al., 2019). Davies, Arnell, Birchenough, Grimmond, and Houlson (2017) reported that the effects of word frequency and AoA on lexical decision times become smaller with increasing age over adult life. At the same time, there was ageing-related response slowing, which could be attributed to decreasing efficiency of stimulus encoding and/or response execution in older age, but is also consistent with increased processing costs related to the accumulation of information learned over time (Ramscar, Hendrix, Shaoul, Milin, & Baayen, 2014).

The decrease of the word frequency effect in older participants is expected on the basis of their longer exposure to the language. A number of publications indicate that the word frequency effect becomes smaller as participants are exposed to more language (Brysbaert, Lagrou, & Stevens, 2017; Brysbaert et al., 2018; Cop, Keuleers, Drieghe, & Duyck, 2015; Diependaele, Lemhöfer, & Brysbaert, 2013; Mainz, Shao, Brysbaert, & Meyer, 2017; Mandera, 2016, chap. 4; Monaghan, Chang, Welbourne, & Brysbaert, 2017). This is expected on the basis of two models. First, connectionist models at a certain point show a decrease in the frequency effect, when overlearning takes place (Monaghan et al., 2017). Second, Mandera (chap. 4) showed that a decrease in the frequency effect as a function of practice is predicted if word learning follows a power law rather than an exponential law (Logan, 1988).

At the same time, exposure to language increases the vocabulary of a person. Healthy old participants indeed have a larger vocabulary than young adults (Verhaeghen, 2003) and vocabulary has been shown to have logarithmic growth over age (Keuleers et al., 2015). Particularly related to the ECP stimulus set, Brysbaert et al. (2016a) reported that a 60-year-old person on average knows 6,000 lemmas more than a 20-year-old person, or an increase of some three words per week.

In contrast to Davies et al. (2017) and our own work, Cohen-Shikora and Balota (2016) failed to find a decrease in the word frequency effect as a function of age. They administered three tasks (lexical decision, word naming, and animacy judgment) to 148 participants, ranging in age from 18 to 86 years. Each task consisted of responses to 400 words (in counterbalanced order). Only in word-naming latencies was there a hint of a smaller word frequency effect in older participants than in younger participants. At the same time, the data of Cohen-Shikora and Balota (2016) replicated the core effects of the other studies: (1) Older participants were slower and more accurate than younger participants, (2) older participants had a larger vocabulary than younger participants, and (3) there was a negative correlation between vocabulary size and the word frequency effect. The analyses of Cohen-Shikora and Balota were done on the z scores of RTs. Could this have made a difference, as z scores not only eliminate differences in means but also equalize the standard deviations?

To compare age groups, we made a distinction between participants of 18–23 (on average, 104 observations per word), 24–29 (117 observations), 30–39 (150 observations), 40–49 (106 observations), and 50+ years (124 observations). To see whether our age differences were in line with those of Spieler and Balota (1997; young participants) and Balota and Spieler (1998; old participants), we looked at the correlations with these datasets. For the young participants of Spieler and Balota, the correlations with increasing age group were: .60, .59, .58, .52, and .50. For the old participants of Balota and Spieler the correlations were, respectively, .51, .50, .53, .48, and .49. The pattern of result was as expected for the young participants, but not for the old participants. One reason may be that the old participants of Balota and Spieler had a mean age of 74 years, substantially older than the ECP participants. Another contributor probably is differences in the reliability of the word processing estimates in the various age groups.

Table 12 and the left panel of Fig. 5 show the results of the regression analyses. They are in line with the observation of Davies et al. (2017) that the frequency and AoA effects decrease over age. The OLD and PLD effects also seem to become smaller, in line with the observation that the older participants took some more time to respond. Finally, it looks like the effects of number of syllables and concreteness increase as adults grow older.

Predicted response times for the five age groups as a function of word frequency: (Left) Raw RTs. (Right) zRTs. The regressions included all variables mentioned in Table 12.

The left panel of Fig. 5 shows the predicted RTs for the five age groups as a function of word frequency. These point to longer response times for older participants. At the same time, because the cost for low-frequency words is smaller for older participants, the age differences in RTs are smallest for the low-frequency words.

To make sure that our results did not rely on the use of raw RTs as the dependent variable, we also analyzed the standardized RTs. As can be seen in the right panel of Fig. 5, the findings remained the same. Because zRTs eliminate differences in average RTs, they more clearly illustrate the smaller frequency effect in older than in younger participants.

One reason for the difference in findings between Fig. 5 and Cohen-Shikora and Balota (2016) could be that Cohen-Shikora and Balota were very careful to equate their groups on education level. It is possible that in our study we had relatively more educated older people than younger people (e.g., because they have more access to the internet).Footnote 7 To test this possibility, we compared the age group of 24- to 30-year-olds to the age group of 50- to 59-year-olds for the participants with high school, bachelors, and masters education, once with the data analyzed as in Fig. 5 and once with equal weights given to the three education levels. The age group 24–29 was chosen because it is the youngest group for which masters-level education is possible; the group 50–59 was chosen because it is the oldest group with comparable homogeneity. To optimize the comparison with Cohen-Shikora and Balota, we used zRTs as the dependent variable.

Figure 6 shows the outcome. There is no evidence that the smaller frequency effect in the 50–59 group is due to differences in education level (something we did not see in the distribution of education levels in the two age groups, either). So, our data agree more with those of Davies et al. (2017) than with those of Cohen-Shikora and Balota (2016). A further challenge for the interpretation of Cohen-Shikora and Balota is how to square the absence of a correlation between age and frequency with the presence of significant correlations between vocabulary size and frequency effect, on the one hand, and between vocabulary size and age, on the other hand. To the defense of Cohen-Shikora and Balota, their study is the only one to have included several word-processing tasks, a large group of participants with ages above 60 years, and extensive attempts to match the groups of participants. On the negative side, they included words in a more restricted frequency range than we did (Zipf scores going from roughly 2.0 to 5.2, with a mean of 3.6). This might have made it more difficult to see the interaction.

Comparison of frequency effects in the age groups of 24–30 and 50–59 years, when not controlled for possible differences in education level (left) and when controlled for such differences (right). Effects are calculated on zRTs. The regressions included all variables mentioned in Table 12.

Conclusions

We present a new word dataset, the English Crowdsourcing Project, which is larger than all currently available datasets (Table 1). It is larger both in the number of words included and the number and variety of participants taking part.

The dataset was collected by means of an internet vocabulary test, in which participants indicated which words they knew and which not. To discourage “yes” responses to unknown words, about one third of the stimuli were nonwords, and participants were penalized if they said “yes” to these nonwords.

Although speed of responding was not mentioned as an evaluation criterion to the participants, the present analyses show that the RTs correlate well with the lexical decision times collected in laboratory settings; they are just some 250 ms longer. Surprisingly, the longer RTs did not lead to larger effects in the virtual experiments. For all the experiments, the effects in ECP were comparable to the original effects and to those in the English Lexicon Project and the British Lexicon Project. This was unexpected, because often longer RTs are accompanied by larger differences between conditions (see, e.g., Table 8 of Keuleers et al., 2012). It suggests that the extra time in ECP was largely unrelated to word recognition and the decision processes (for a model including such a time delay, see Ratcliff, Gomez, & McKoon, 2004). Apparently, participants took extra time to perceive the stimulus and give a response. In this respect, it is important to mention that the RTs in a lexical decision task drop by some 100 ms in the first few hundred trials (Keuleers, Diependaele, & Brysbaert, 2010; Keuleers et al., 2012). Given that most participants completed only 100 trials in ECP, this could explain some of the extra time taken to respond. Another contributor might have been the software used to present the stimuli and collect the responses via the internet.

To some extent, it is surprising that untimed answers to a vocabulary test would resemble lexical decision times so well, when based on large numbers of observations. This testifies to the ecological validity of the lexical decision task, in that very much the same results were obtained in an untimed vocabulary test outside of academia as on a speeded response task in the laboratory.

ECP is further interesting because a large range of people took part. Surprisingly, we found no large differences between education levels (Fig. 4). Presumably this is due to the fact that only people interested in language and with easy access to the internet took part in the test. There is evidence that the size of the frequency effect depends more on the amount of reading and language exposure than on the intelligence or the education level of the participants (Brysbaert, Lagrou, & Stevens, 2017). ECP does point to some interesting effects of age (or language exposure), however. The effects of frequency and age of acquisition seem to become smaller as adults grow older (see also Davies et al., 2017), whereas older people seem to be more affected by the meanings of words (as indicated by the concreteness effect) and by the complexity of a word (the number of syllables). Further, targeted experiments will have to confirm these initial impressions. Such experiments could also try to include an even wider variety of participants.

Availability

The raw data and Excel files containing the most important information can be found at the Open Science Framework webpage https://osf.io/rpx87/ or on our website http://crr.ugent.be/. To facilitate analyses of the full dataset, we have released a Python module for working with the raw data (available at https://github.com/pmandera/vocab-crowd).

The Excel files are included for a broader audience as their usage does not require programming skills. First, we have the master file containing the information calculated across all participants, called English Crowdsourcing Project All Native Speakers. Its outline is shown in Fig. 7.

Column A gives the word. Column B says how many observations there were for that word. Column C gives the response accuracy, indicating the number of observations on which the RTs are based. We would prefer that users not use the information in column C for anything other than the analysis of RTs. In Brysbaert et al. (2019), we present the word prevalence measure, which is better than accuracy (even though it correlates .96 with the accuracies reported here). Word prevalence is given in column D. Columns E–H contain the new information: the ECP RTs and their standard deviations across participants, plus the zRTs and their standard deviations. Finally, for the user’s convenience, column I includes the SUBTLEX-US frequencies, expressed as Zipf values (Brysbaert et al., 2018).

In addition to the master file, we also have files with the data split per education level (ECP education groups), per age (ECP age groups), and per Age × Education Level. Users who want other summary files are invited to make them themselves on the basis of the raw data.

Notes

One stimulus (null) got lost in the various handlings of the database, because Microsoft Excel automatically converts a number of words to other variable types. The same was true for the words false and true in the initial list; this mistake was corrected about halfway through the period covered in this article.

Readers who have doubts about the choices made (introduced in Mandera, 2016, Chap. 4) are invited to analyze the raw data.

The average RTs were 1,161 ms for keyboard devices and 1,258 for touch devices.

We do not present separate data for US and UK participants, because all stimuli were presented in American spelling, and the strength of the database lies in the high number of observations per stimulus.

Given that RTs are based on correct responses, the number of responses decreases as accuracy decreases. In addition, it can be assumed that, in particular, long RTs are missing, as a result of “no” responses (of participants doubting whether they know the word, but in the end deciding they do not). An accuracy of .85 corresponds to a prevalence of 1 in Fig. 1. The data do not differ much from when a criterion of .75 was used (see below), but the criterion of .85 ensures that the correlation is valid for well-known words.

The word prevalence variable cannot be tested here, because it is based on the same dataset.

The authors thank one of the reviewers for the suggestion.

References

Adelman, J. S., Marquis, S. J., Sabatos-DeVito, M. G., & Estes, Z. (2013). The unexplained nature of reading. Journal of Experimental Psychology: Learning, Memory, and Cognition, 39, 1037–1053. https://doi.org/10.1037/a0031829

Aguasvivas, J., Carreiras, M., Brysbaert, M., Mandera, P., Keuleers, E., & Duñabeitia, J. A. (2018). SPALEX: A Spanish lexical decision database from a massive online data collection. Frontiers in Psychology, 9, 2156. https://doi.org/10.3389/fpsyg.2018.02156

Andrews, S. (1992). Frequency and neighborhood effects on lexical access: Lexical similarity or orthographic redundancy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 18, 234–254. https://doi.org/10.1037/0278-7393.18.2.234

Balota, D. A., Cortese, M. J., Sergent-Marshall, S. D., Spieler, D. H., & Yap, M. J. (2004). Visual word recognition of single-syllable words. Journal of Experimental Psychology: General, 133, 283–316.

Balota, D. A., & Spieler, D. H. (1998). The utility of item level analyses in model evaluation: A reply to Seidenberg & Plaut (1998). Psychological Science, 9, 238–240.

Balota, D. A., Yap, M. J., Hutchison, K. A., & Cortese, M. J. (2013). Megastudies: What do millions (or so) of trials tell us about lexical processing? In J. S. Adelman (Ed.), Visual word recognition Volume 1: Models and methods, orthography and phonology (pp. 90–115). New York, NY: Psychology Press.

Balota, D. A., Yap, M. J., Hutchison, K. A., Cortese, M. J., Kessler, B., Loftis, B., . . .,Treiman, R. (2007). The English lexicon project. Behavior Research Methods, 39, 445–459.

Barsalou, L. W. (2008). Grounded cognition. Annual Review of Psychology, 59, 617–645.

Berger, C. M., Crossley, S. A., & Kyle, K. (2019). Using native-speaker psycholinguistic norms to predict lexical proficiency and development in second-language production. Applied Linguistics, 40, 22–42. https://doi.org/10.1093/applin/amx005

Brysbaert, M., & Cortese, M. J. (2011). Do the effects of subjective frequency and age of acquisition survive better word frequency norms? Quarterly Journal of Experimental Psychology, 64, 545–559.

Brysbaert, M., Lagrou, E., & Stevens, M. (2017). Visual word recognition in a second language: A test of the lexical entrenchment hypothesis with lexical decision times. Bilingualism: Language and Cognition, 20, 530–548.

Brysbaert, M., Mandera, P., & Keuleers, E. (2018). The word frequency effect in word processing: An updated review. Current Directions in Psychological Science, 27, 45–50.

Brysbaert, M., Mandera, P., McCormick, S. F., & Keuleers, E. (2019). Word prevalence norms for 62,000 English lemmas. Behavior Research Methods, 51, 467–479.

Brysbaert, M., & New, B. (2009). Moving beyond Kučera and Francis: A critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods, 41, 977–990.

Brysbaert, M., Stevens, M., Mandera, P., & Keuleers, E. (2016a). How many words do we know? Practical estimates of vocabulary size dependent on word definition, the degree of language input and the participant’s age. Frontiers in Psychology 7, 1116. https://doi.org/10.3389/fpsyg.2016.01116

Brysbaert, M., Stevens, M., Mandera, P., & Keuleers, E. (2016b). The impact of word prevalence on lexical decision times: Evidence from the Dutch Lexicon Project 2. Journal of Experimental Psychology: Human Perception and Performance, 42, 441–458.

Brysbaert, M., Warriner, A. B., & Kuperman, V. (2014). Concreteness ratings for 40 thousand generally known English word lemmas. Behavior Research Methods, 46, 904–911.

Chang, Y. N., Hsu, C. H., Tsai, J. L., Chen, C. L., & Lee, C. Y. (2016). A psycholinguistic database for traditional Chinese character naming. Behavior Research Methods, 48, 112–122.

Chateau, D., & Jared, D. (2003). Spelling–sound consistency effects in disyllabic word naming. Journal of Memory and Language, 48, 255–280.

Chen, Q., & Mirman, D. (2012). Competition and cooperation among similar representations: toward a unified account of facilitative and inhibitory effects of lexical neighbors. Psychological Review, 119, 417–430.

Chetail, F., Balota, D., Treiman, R., & Content, A. (2015). What can megastudies tell us about the orthographic structure of English words? Quarterly Journal of Experimental Psychology, 68, 1519–1540.

Cohen-Shikora, E. R., & Balota, D. A. (2016). Visual word recognition across the adult lifespan. Psychology and Aging, 31, 488–502.

Cohen-Shikora, E. R., Balota, D. A., Kapuria, A., & Yap, M. J. (2013). The past tense inflection project (PTIP): Speeded past tense inflections, imageability ratings, and past tense consistency measures for 2,200 verbs. Behavior Research Methods, 45, 151–159.

Connell, L., & Lynott, D. (2014). I see/hear what you mean: Semantic activation in visual word recognition depends on perceptual attention. Journal of Experimental Psychology: General, 143, 527–533. https://doi.org/10.1037/a0034626

Cop, U., Dirix, N., Drieghe, D., & Duyck, W. (2017). Presenting GECO: An eyetracking corpus of monolingual and bilingual sentence reading. Behavior Research Methods, 49, 602–615.

Cop, U., Keuleers, E., Drieghe, D., & Duyck, W. (2015). Frequency effects in monolingual and bilingual natural reading. Psychonomic Bulletin & Review, 22, 1216–1234.

Cortese, M. J., Hacker, S., Schock, J., & Santo, J. B. (2015a). Is reading aloud performance in megastudies systematically influenced by the list context? Quarterly Journal of Experimental Psychology, 68, 1711–1722. https://doi.org/10.1080/17470218.2014.974624

Cortese, M. J., Khanna, M. M., & Hacker, S. (2010). Recognition memory for 2,578 monosyllabic words. Memory, 18, 595–609. DOI: https://doi.org/10.1080/09658211.2010.493892.

Cortese, M. J., Khanna, M. M., Kopp, R., Santo, J. B, Preston, K. S., & Van Zuiden, T. (2017). Participants shift response deadlines based on list difficulty during reading aloud megastudies, Memory & Cognition, 45, 589–599.

Cortese, M. J., McCarty, D. P., & Schock, J. (2015b). A mega recognition memory study of 2897 disyllabic words. Quarterly Journal of Experimental Psychology, 68, 1489–1501. https://doi.org/10.1080/17470218.2014.945096

Cortese, M. J., Yates, M., Schock, J., & Vilks, L. (2018). Examining word processing via a megastudy of conditional reading aloud. Quarterly Journal of Experimental Psychology, 71, 2295–2313.

Coxhead, A. (2000). A new academic word list. TESOL Quarterly, 34, 213–238.

Crump, M. J. C., McDonnell, J. V., & Gureckis, T. M. (2013). Evaluating Amazon’s Mechanical Turk as a tool for experimental behavioral research. PLoS ONE, 8, e57410. https://doi.org/10.1371/journal.pone.0057410

Davies, R., Barbón, A., & Cuetos, F. (2013). Lexical and semantic age-of-acquisition effects on word naming in Spanish. Memory & Cognition, 41, 297–311.

Davies, R. A., Arnell, R., Birchenough, J. M., Grimmond, D., & Houlson, S. (2017). Reading through the life span: Individual differences in psycholinguistic effects. Journal of Experimental Psychology: Learning, Memory, and Cognition, 43, 1298–1338.

Davis, C. J. (2010). The spatial coding model of visual word identification. Psychological Review, 117, 713–758.

Davis, C. J., & Taft, M. (2005). More words in the neighborhood: Interference in lexical decision due to deletion neighbors. Psychonomic Bulletin & Review, 12, 904–910.

Diependaele, K., Lemhöfer, K., & Brysbaert, M. (2013). The word frequency effect in first and second language word recognition: A lexical entrenchment account. Quarterly Journal of Experimental Psychology, 66, 843–863.

Dirix, N., Brysbaert, M., & Duyck, W. (2018). How well do word recognition measures correlate? Effects of language context and repeated presentations. Behavior Research Methods. https://doi.org/10.3758/s13428-018-1158-9

Dufau, S., Grainger, J., Midgley, K. J., & Holcomb, P. J. (2015). A thousand words are worth a picture: Snapshots of printed-word processing in an event-related potential megastudy. Psychological Science, 26, 1887–1897.

Ernestus, M., & Cutler, A. (2015). BALDEY: A database of auditory lexical decisions. Quarterly Journal of Experimental Psychology, 68, 1469–1488.

Ferrand, L., Brysbaert, M., Keuleers, E., New, B., Bonin, P., Méot, A., . . . Pallier, C. (2011). Comparing word processing times in naming, lexical decision, and progressive demasking: Evidence from Chronolex. Frontiers in Psychology, 2, 306. https://doi.org/10.3389/fpsyg.2011.00306

Ferrand, L., Méot, A., Spinelli, E., New, B., Pallier, C., Bonin, P., . . . Grainger, J. (2018). MEGALEX: A megastudy of visual and auditory word recognition. Behavior Research Methods, 50, 1285–1307.

Ferrand, L., New, B., Brysbaert, M., Keuleers, E., Bonin, P., Méot, A., . . . Pallier, C. (2010). The French Lexicon Project: Lexical decision data for 38,840 French words and 38,840 pseudowords. Behavior Research Methods, 42, 488–496.

Ferré, P., & Brysbaert, M. (2017). Can Lextale-Esp discriminate between groups of highly proficient Catalan-Spanish bilinguals with different language dominances? Behavior Research Methods, 49, 717–723.

Fischer, M. H., & Zwaan, R. A. (2008). Embodied language: A review of the role of the motor system in language comprehension. Quarterly Journal of Experimental Psychology, 61, 825–850.

Frank, S. L., Monsalve, I. F., Thompson, R. L., & Vigliocco, G. (2013). Reading time data for evaluating broad-coverage models of English sentence processing. Behavior Research Methods, 45, 1182–1190.

Frank, S. L., Otten, L. J., Galli, G., & Vigliocco, G. (2015). The ERP response to the amount of information conveyed by words in sentences. Brain and Language, 140, 1–11.

Futrell, R., Gibson, E., Tily, H. J., Blank, I., Vishnevetsky, A., Piantadosi, S. T., & Fedorenko, E. (2018). The Natural Stories Corpus. In N. Calzolari, K. Choukri, C. Cieri, T. Declerck, S. Goggi, K. Hasida, . . . T. Tokunaga (Eds.), Proceedings of LREC 2018, Eleventh International Conference on Language Resources and Evaluation (pp. 76–82). Paris, France: European Language Resources Association. Available at www.lrec-conf.org/proceedings/lrec2018/pdf/337.pdf

Gerhand, S., & Barry, C. (1998). Word frequency effects in oral reading are not merely age-of-acquisition effects in disguise. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 267–283.

Gimenes, M., & New, B. (2016). Worldlex: Twitter and blog word frequencies for 66 languages. Behavior Research Methods, 48, 963–972.

Goh, W. D., Yap, M. J., Lau, M. C., Ng, M. M., & Tan, L. C. (2016). Semantic richness effects in spoken word recognition: A lexical decision and semantic categorization megastudy. Frontiers in psychology, 7, 976.

González-Nosti, M., Barbón, A., Rodríguez-Ferreiro, J., & Cuetos, F. (2014). Effects of the psycholinguistic variables on the lexical decision task in Spanish: A study with 2,765 words. Behavior Research Methods, 46, 517–525.

Grainger, J., & Jacobs, A. M. (1996). Orthographic processing in visual word recognition: A multiple read-out model. Psychological Review, 103, 518–565.

Harrington, M., & Carey, M. (2009). The on-line Yes/No test as a placement tool. System, 37, 614–626.

Herdağdelen, A., & Marelli, M. (2017). Social media and language processing: How Facebook and Twitter provide the best frequency estimates for studying word recognition. Cognitive Science, 41, 976–995.

Heyman, T., Van Akeren, L., Hutchison, K. A., & Storms, G. (2016). Filling the gaps: A speeded word fragment completion megastudy. Behavior Research Methods, 48, 1508–1527.

Hubert, M., & Vandervieren, E. (2008). An adjusted boxplot for skewed distributions. Computational Statistics & Data Analysis, 52, 5186–5201.

Husain, S., Vasishth, S., & Srinivasan, N. (2015). Integration and prediction difficulty in Hindi sentence comprehension: Evidence from an eye-tracking corpus. Journal of Eye Movement Research, 8(2), 3:1–12.

Hutchison, K. A., Balota, D. A., Neely, J. H., Cortese, M. J., Cohen-Shikora, E. R., Tse, C. S., . . . Buchanan, E. (2013). The semantic priming project. Behavior Research Methods, 45, 1099–1114.

Kang, S. H., Yap, M. J., Tse, C. S., & Kurby, C. A. (2011). Semantic size does not matter: “Bigger” words are not recognized faster. Quarterly Journal of Experimental Psychology, 64, 1041–1047.

Kessler, B., Treiman, R., & Mullennix, J. (2002). Phonetic biases in voice key response time measurements. Journal of Memory and Language, 47, 145–171.

Keuleers, E., & Balota, D. A. (2015). Megastudies, crowd-sourcing, and large datasets in psycholinguistics: An overview of recent developments. Quarterly Journal of Experimental Psychology, 68, 1457–1468.

Keuleers, E., & Brysbaert, M. (2010). Wuggy: A multilingual pseudoword generator. Behavior Research Methods, 42, 627–633.

Keuleers, E., Diependaele, K., & Brysbaert, M. (2010). Practice effects in large-scale visual word recognition studies: A lexical decision study on 14,000 Dutch mono- and disyllabic words and nonwords. Frontiers in Psychology, 1, 174. https://doi.org/10.3389/fpsyg.2010.00174

Keuleers, E., Lacey, P., Rastle, K., & Brysbaert, M. (2012). The British Lexicon Project: Lexical decision data for 28,730 monosyllabic and disyllabic English words. Behavior Research Methods, 44, 287–304.

Keuleers, M., Stevens, M., Mandera, P., & Brysbaert, M. (2015). Word knowledge in the crowd: Measuring vocabulary size and word prevalence in a massive online experiment. Quarterly Journal of Experimental Psychology, 68, 1665–1692.

Kliegl, R., Nuthmann, A., & Engbert, R. (2006). Tracking the mind during reading: The influence of past, present, and future words on fixation durations. Journal of Experimental Psychology: General, 135, 12–35. https://doi.org/10.1037/0096-3445.135.1.12

Kuperman, V., Estes, Z., Brysbaert, M., & Warriner, A. B. (2014). Emotion and language: Valence and arousal affect word recognition. Journal of Experimental Psychology: General, 143, 1065–1081.

Kuperman, V., Stadthagen-Gonzalez, H., & Brysbaert, M. (2012). Age-of-acquisition ratings for 30 thousand English words. Behavior Research Methods, 44, 978–990.

Laurinavichyute, A. K., Sekerina, I. A., Alexeeva, S., Bagdasaryan, K., & Kliegl, R. (2019). Russian Sentence Corpus: Benchmark measures of eye movements in reading in Russian. Behavior Research Methods, 51, 1161–1178. https://doi.org/10.3758/s13428-018-1051-6

Lee, C. Y., Hsu, C. H., Chang, Y. N., Chen, W. F., & Chao, P. C. (2015). The feedback consistency effect in Chinese character recognition: Evidence from a psycholinguistic norm. Language and Linguistics, 16, 535–554.

Lemhöfer, K., & Broersma, M. (2012). Introducing LexTALE: A quick and valid lexical test for advanced learners of English. Behavior Research Methods, 44, 325–343.

Lemhöfer, K., Dijkstra, T., Schriefers, H., Baayen, R. H., Grainger, J., & Zwitserlood, P. (2008). Native language influences on word recognition in a second language: A megastudy. Journal of Experimental Psychology: Learning, Memory, and Cognition, 34, 12–31.

Liben-Nowell, D., Strand, J., Sharp, A., Wexler, T., & Woods, K. (2019). The danger of testing by selecting controlled subsets, with applications to spoken-word recognition. Journal of Cognition, 2, 2. https://doi.org/10.5334/joc.51

Liu, Y., Shu, H., & Li, P. (2007). Word naming and psycholinguistic norms: Chinese. Behavior Research Methods, 39, 192–198.

Logan, G. D. (1988). Toward an instance theory of automatization. Psychological Review, 95, 492–527.

Luke, S. G., & Christianson, K. (2018). The Provo Corpus: A large eye-tracking corpus with predictability norms. Behavior Research Methods, 50, 826–833.

Mainz, N., Shao, Z., Brysbaert, M., & Meyer, A. (2017). Vocabulary knowledge predicts lexical processing: Evidence from a group of participants with diverse educational backgrounds. Frontiers in Psychology, 8, 1164. https://doi.org/10.3389/fpsyg.2017.01164

Mandera, P. (2016). Psycholinguistics on a large scale: Combining text corpora, megastudies, and distributional semantics to investigate human language processing (Unpublished PhD thesis). Ghent University, Ghent, Belgium. Available at http://crr.ugent.be/papers/pmandera-disseration-2016.pdf

Meara, P. M., & Buxton, B. (1987). An alternative to multiple choice vocabulary tests. Language Testing, 4, 142–154.

Monaghan, P., Chang, Y. N., Welbourne, S., & Brysbaert, M. (2017). Exploring the relations between word frequency, language exposure, and bilingualism in a computational model of reading. Journal of Memory and Language, 93, 1–21.

Monsell, S., Doyle, M. C., & Haggard, P. N. (1989). Effects of frequency on visual word recognition tasks: Where are they? Journal of Experimental Psychology: General, 118, 43–71.

Morrison, C. M., & Ellis, A. W. (1995). Roles of word-frequency and age of acquisition in word naming and lexical decision. Journal of Experimental Psychology: Learning, Memory, and Cognition, 21, 116–133.

Mousikou, P., Sadat, J., Lucas, R., & Rastle, K. (2017). Moving beyond the monosyllable in models of skilled reading: Mega-study of disyllabic nonword reading. Journal of Memory and Language, 93, 169–192.

Muncer, S. J., Knight, D., & Adams, J. W. (2014). Bigram frequency, number of syllables and morphemes and their effects on lexical decision and word naming. Journal of Psycholinguistic Research, 43, 241–254.

New, B., Ferrand, L., Pallier, C., & Brysbaert, M. (2006). Re-examining word length effects in visual word recognition: New evidence from the English Lexicon Project. Psychonomic Bulletin & Review, 13, 45–52.

Norris, D., & Kinoshita, S. (2012). Reading through a noisy channel: Why there’s nothing special about the perception of orthography. Psychological Review, 119, 517–545.

Perea, M., & Pollatsek, A. (1998). The effects of neighborhood frequency in reading and lexical decision. Journal of Experimental Psychology: Human Perception and Performance, 24, 767–779.

Pexman, P. M., Heard, A., Lloyd, E., & Yap, M. J. (2017). The Calgary Semantic Decision Project: concrete/abstract decision data for 10,000 English words. Behavior Research Methods, 49, 407–417. https://doi.org/10.3758/s13428-016-0720-6

Pollatsek, A., Perea, M., & Binder, K. S. (1999). The effects of “neighborhood size” in reading and lexical decision. Journal of Experimental Psychology: Human Perception and Performance, 25, 1142–1158.

Pritchard, S. C., Coltheart, M., Palethorpe, S., & Castles, A. (2012). Nonword reading: Comparing dual-route cascaded and connectionist dual-process models with human data. Journal of Experimental Psychology: Human Perception and Performance, 38, 1268–1288.

Pynte, J., & Kennedy, A. (2006). An influence over eye movements in reading exerted from beyond the level of the word: Evidence from reading English and French. Vision Research, 46, 3786–3801.

Ramscar, M., Hendrix, P., Shaoul, C., Milin, P., & Baayen, H. (2014). The myth of cognitive decline: Non-linear dynamics of lifelong learning. Topics in Cognitive Science, 6, 5–42.

Ratcliff, R., Gomez, P., & McKoon, G. (2004). A diffusion model account of the lexical decision task. Psychological Review, 111, 159–182.

Reimers, S., & Stewart, N. (2015). Presentation and response timing accuracy in Adobe Flash and HTML5/JavaScript Web experiments. Behavior Research Methods, 47, 309–327. https://doi.org/10.3758/s13428-014-0471-1

Rodd, J., Gaskell, G., & Marslen-Wilson, W. (2002). Making sense of semantic ambiguity: Semantic competition in lexical access. Journal of Memory and Language, 46, 245–266.

Schmalz, X., & Mulatti, C. (2017). Busting a myth with the Bayes factor. The Mental Lexicon, 12, 263–282.

Schmidtke, D., Kuperman, V., Gagné, C. L., & Spalding, T. L. (2016). Competition between conceptual relations affects compound recognition: the role of entropy. Psychonomic Bulletin & Review, 23, 556–570.

Schröter, P., & Schroeder, S. (2017). The Developmental Lexicon Project: A behavioral database to investigate visual word recognition across the lifespan. Behavior Research Methods, 49, 2183–2203.

Sears, C. R., Hino, Y., & Lupker, S. J. (1995). Neighborhood size and neighborhood frequency effects in word recognition. Journal of Experimental Psychology: Human Perception and Performance, 21, 876–900.

Seidenberg, M. S., & Waters, G. S. (1989). Word recognition and naming: A mega study. Bulletin of the Psychonomic Society, 27, 489.

Sereno, S. C., O’Donnell, P. J., & Sereno, M. E. (2009). Short article: Size matters: Bigger is faster. Quarterly Journal of Experimental Psychology, 62, 1115–1122.

Soares, A. P., Lages, A., Silva, A., Comesaña, M., Sousa, I., Pinheiro, A. P., & Perea, M. (2019). Psycholinguistic variables in visual word recognition and pronunciation of European Portuguese words: A mega-study approach. Language, Cognition and Neuroscience, 34, 689–719. https://doi.org/10.1080/23273798.2019.1578395

Spieler, D. H., & Balota, D. A. (1997). Bringing computational models of word naming down to the item level. Psychological Science, 8, 411–416.

Sze, W. P., Liow, S. J. R., & Yap, M. J. (2014). The Chinese Lexicon Project: A repository of lexical decision behavioral responses for 2,500 Chinese characters. Behavior Research Methods, 46, 263–273.

Treiman, R., Mullennix, J., Bijeljac-Babic, R., & Richmond-Welty, E. D. (1995). The special role of rimes in the description, use, and acquisition of English orthography. Journal of Experimental Psychology: General, 124, 107–136.

Tsang, Y. K., Huang, J., Lui, M., Xue, M., Chan, Y. W. F., Wang, S., & Chen, H. C. (2018). MELD-SCH: A megastudy of lexical decision in simplified Chinese. Behavior Research Methods, 50, 1763–1777.

Tse, C. S., Yap, M. J., Chan, Y. L., Sze, W. P., Shaoul, C., & Lin, D. (2017). The Chinese Lexicon Project: A megastudy of lexical decision performance for 25,000+ traditional Chinese two-character compound words. Behavior Research Methods, 49, 1503–1519.

Tucker, B. V., Brenner, D., Danielson, D. K., Kelley, M. C., Nenadić, F., & Sims, M. (2019). The Massive Auditory Lexical Decision (MALD) database. Behavior Research Methods, 51, 1187–1204. https://doi.org/10.3758/s13428-018-1056-1

Verhaeghen, P. (2003). Aging and vocabulary score: A meta-analysis. Psychology and Aging, 18, 332–339.

Winsler, K., Midgley, K. J., Grainger, J., & Holcomb, P. J. (2018). An electrophysiological megastudy of spoken word recognition. Language, Cognition and Neuroscience, 33, 1063–1082.

Wulff, D. U., De Deyne, S., Jones, M. N., Mata, R., Austerweil, J. L., Baayen, R. H., . . . Veríssimo, J. (2019). New perspectives on the aging lexicon. Trends in Cognitive Sciences. https://doi.org/10.1016/j.tics.2019.05.003

Yap, M. J., & Balota, D. A. (2009). Visual word recognition of multisyllabic words. Journal of Memory and Language, 60, 502–529.

Yap, M. J., Balota, D. A., Sibley, D. E., & Ratcliff, R. (2012). Individual differences in visual word recognition: Insights from the English Lexicon Project. Journal of Experimental Psychology: Human Perception and Performance, 38, 53–79.

Yap, M. J., Balota, D. A., Tse, C. S., & Besner, D. (2008). On the additive effects of stimulus quality and word frequency in lexical decision: Evidence for opposing interactive influences revealed by RT distributional analyses. Journal of Experimental Psychology: Learning, Memory, and Cognition, 34, 495–513.

Yap, M. J., Liow, S. J. R., Jalil, S. B., & Faizal, S. S. B. (2010). The Malay Lexicon Project: A database of lexical statistics for 9,592 words. Behavior Research Methods, 42, 992–1003.

Yarkoni, T., Balota, D. A., & Yap, M. J. (2008). Moving beyond Coltheart’s N: A new measure of orthographic similarity. Psychonomic Bulletin & Review, 15, 971–979.

Yates, M. (2005). Phonological neighbors speed visual word processing: Evidence from multiple tasks. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31, 1385–1397.

Yates, M. (2009). Phonological neighborhood spread facilitates lexical decisions. Quarterly Journal of Experimental Psychology, 62, 1304–1314.

Yates, M., Locker, L., & Simpson, G. B. (2004). The influence of phonological neighborhood on visual word perception. Psychonomic Bulletin & Review, 11, 452–457.

Ziegler, J. C., & Perry, C. (1998). No more problems in Coltheart’s neighborhood: Resolving neighborhood conflicts in the lexical decision task. Cognition, 68, B53–B62.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Mandera, P., Keuleers, E. & Brysbaert, M. Recognition times for 62 thousand English words: Data from the English Crowdsourcing Project. Behav Res 52, 741–760 (2020). https://doi.org/10.3758/s13428-019-01272-8

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13428-019-01272-8